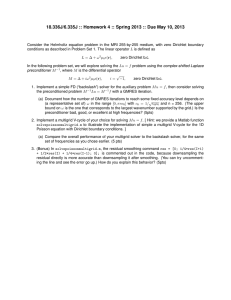

ETNA
