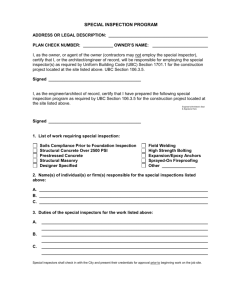

PER PA ION

advertisement