
Robust overlays for privacy-preserving data dissemination over a social graph

Abhishek Singh¹, Guido Urdaneta¹, Maarten van Steen² & Roman Vitenberg¹

¹ University of Oslo, Norway

² VU University Amsterdam, The Netherlands

20/06/2012

Motivation

Data exchange on sensitive topics, e.g., politics or chronic illness, through a data exchange mechanism

- A user's interests or data can be leaked
- An attacker can exploit this knowledge to make a profit or to harm users
- Privacy leakages have been observed in centralized architectures
- Decentralized architectures have to address both privacy and robustness

Problem: Ensure privacy-preserving and robust interest-based data exchange within a social community

Our contribution

Robust communication overlay based on social relationships

- Robust
  - Short path lengths
  - Low probability of partitions
- Preserves privacy
- Decentralized
- Fast repair under churn
- Low overhead

Basic idea: exploit trust relations between users

Friend-to-friend network in which edges represent mutual trust

Trust graph: a connected friend-to-friend network formed by social interactions. Example: Facebook graph

- Trust: mutual agreement between nodes not to disclose each other's identity and interests to unauthorized entities
- Trust between nodes is symmetric and non-transitive: if A and B trust each other and B and C trust each other, A and C still do not trust each other
- Nodes disclose their interests to their neighbors out of band
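The symmetric, non-transitive trust relation can be illustrated with a small undirected graph. This is only a sketch: the class name `TrustGraph` and its methods are hypothetical and do not come from the talk.

```python
from collections import defaultdict


class TrustGraph:
    """Undirected friend-to-friend graph: an edge means mutual trust."""

    def __init__(self):
        self._neighbors = defaultdict(set)

    def add_trust(self, u, v):
        # Trust is symmetric, so the edge is stored in both directions.
        self._neighbors[u].add(v)
        self._neighbors[v].add(u)

    def trusts(self, u, v):
        # Only direct edges count: trust is not transitive.
        return v in self._neighbors.get(u, set())


graph = TrustGraph()
graph.add_trust("A", "B")
graph.add_trust("B", "C")
print(graph.trusts("A", "B"), graph.trusts("B", "A"), graph.trusts("A", "C"))
```

Here A and C share the trusted neighbor B, yet `trusts("A", "C")` is false because only direct edges are consulted.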

Naive overlay solutions that mimic trust graph are non robust

Example: Freenet in darknet mode [Clarke et al. 2010]

Fraction of disconnected nodes

Each node exchanges messages with only those nodes that it trusts

1

FB graph (1000 nodes)

0.8

0.6

0.4

0.2

0

0

0.2

0.4

0.6

0.8

1

Node Availability (fraction of time alive)

Insight: Social networks exhibit ‘small-world’ properties

Social networks tend to get partitioned when a small number of

high-degree nodes are removed [Mislove et al. IMC 2007]

5 / 33

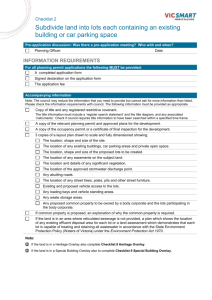
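A rough way to reproduce this kind of measurement on a toy graph is sketched below. It rests on two assumptions that the slides do not state: each node is online independently with probability equal to its availability, and a node counts as disconnected when it is online but outside the largest connected component of online nodes. The function name is hypothetical.

```python
import random
from collections import deque


def fraction_disconnected(adjacency, availability, rng):
    """Fraction of online nodes outside the largest connected component.

    Assumption: each node is online independently with probability
    `availability`; the talk may define the metric differently.
    """
    online = {n for n in adjacency if rng.random() < availability}
    if not online:
        return 0.0
    seen, largest = set(), 0
    for start in online:
        if start in seen:
            continue
        # Breadth-first search restricted to online nodes.
        seen.add(start)
        queue, size = deque([start]), 0
        while queue:
            node = queue.popleft()
            size += 1
            for nb in adjacency[node]:
                if nb in online and nb not in seen:
                    seen.add(nb)
                    queue.append(nb)
        largest = max(largest, size)
    return 1.0 - largest / len(online)


# Toy star graph: one hub (node 0) connected to nine leaves.
star = {0: set(range(1, 10)), **{i: {0} for i in range(1, 10)}}
print(fraction_disconnected(star, 0.5, random.Random(0)))
```

A star is the extreme case of a skewed degree distribution: whenever the hub is offline, every online leaf is isolated.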

Problem statement

For a group of users, provide a scalable approach to create and maintain a robust overlay for data dissemination while preserving the following privacy requirements:

Entities: comm. providers, external users, participating users

Information:

- Content of messages
- Participation of a user: only trusted neighbors of the user are aware
- Trust relations of a participating user: trusted neighbors of the user are aware only of their own relation with that user

Legend: entities should not be able to access the required information; entities must be able to access the required information

Assumption: Participating users do not actively disrupt communication

Underlying idea for the solution

Extend the overlay based on trust graph towards a random-like graph

by adding edges in a privacy-preserving manner

Edges in

trust graph

Example

I

Fan-out of each node in the overlay is increased to 4

I

Extra links are randomly selected

7 / 33

Underlying idea for the solution

Extend the overlay based on trust graph towards a random-like graph

by adding edges in a privacy-preserving manner

Participating online nodes get

partitioned

Offline

nodes

Example

I

Fan-out of each node in the overlay is increased to 4

I

Extra links are randomly selected

7 / 33

Underlying idea for the solution

Extend the overlay based on trust graph towards a random-like graph

by adding edges in a privacy-preserving manner

Privacy

preserving

links

Example

I

Fan-out of each node in the overlay is increased to 4

I

Extra links are randomly selected

7 / 33

Underlying idea for the solution

Extend the overlay based on trust graph towards a random-like graph

by adding edges in a privacy-preserving manner

Privacy

preserving

links

Offline

nodes

Example

I

Fan-out of each node in the overlay is increased to 4

I

Extra links are randomly selected

7 / 33

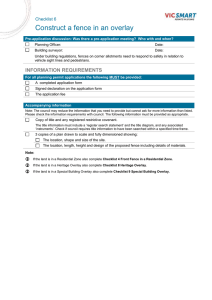

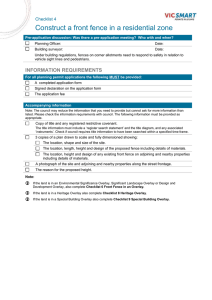
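The example can be sketched as follows. This is a simplified illustration and not the protocol from the talk: extra links are drawn directly from the full node set, whereas the actual overlay learns candidates through pseudonyms. The function name `extend_fanout` is hypothetical.

```python
import random


def extend_fanout(trust_edges, target, rng):
    """Return overlay adjacency: trust edges plus random extra out-links.

    Extra links are chosen uniformly at random among nodes that are not
    yet neighbors. In the real system these links would go through
    pseudonyms; here plain node names stand in for them.
    """
    nodes = sorted(trust_edges)
    overlay = {n: set(trust_edges[n]) for n in nodes}
    for n in nodes:
        candidates = [m for m in nodes if m != n and m not in overlay[n]]
        missing = max(0, target - len(overlay[n]))
        overlay[n].update(rng.sample(candidates, min(missing, len(candidates))))
    return overlay


# Toy trust graph: a path of 8 nodes, so every node has degree 1 or 2.
trust = {i: set() for i in range(8)}
for i in range(7):
    trust[i].add(i + 1)
    trust[i + 1].add(i)

overlay = extend_fanout(trust, 4, random.Random(1))
print(min(len(links) for links in overlay.values()))
```

Every trust edge is kept, and each node ends up with at least four outgoing links.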

Solution architecture

- Application layer: application-specific data-dissemination protocols
- Overlay layer (our main contribution): pseudonym creation and removal, pseudonym sampling, pseudonym distribution
- Privacy-preserving link layer: anonymity service, pseudonym service

Privacy-preserving link layer


Anonymity service

Allows two nodes to hide communication between them from adversaries who are monitoring traffic

[Figure: node A sends msg1 into the anonymity service and node B receives msg2. The attacker monitors communication links but is unable to infer that A sent a message to B]

Examples of anonymity services: TOR [Dingledine et al. SSYM 2004], I2P, email remailers [Danezis et al. 2003]

- Requirement: node A must know the identity of node B
- Implementations have a latency versus security tradeoff
- Possible to decentralize [Schiavoni et al. ICDCS 2011]

Pseudonyms and pseudonym service

Allows a node A to establish a short-lived identity P_A (pseudonym) such that no one else is aware of the identity of the node that created P_A

- Pseudonym P_A is created at node P
- Node A is the owner of pseudonym P_A
- Node P is not aware of the identity of node A
- Node B can send a message to A through P_A

Examples of pseudonym implementations: TOR rendezvous point, I2P eepsite, email address, DHT key
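A toy model of these roles is sketched below. It is a mailbox-style stand-in under strong assumptions: the class `PseudonymService`, the owner token, and the polling `fetch` call are all hypothetical and do not describe how TOR, I2P, or the system in this talk implement pseudonyms. It also assumes A reaches P through the anonymity service, which the sketch does not model.

```python
import secrets


class PseudonymService:
    """Toy proxy P: stores messages per pseudonym, never the owner's identity."""

    def __init__(self):
        self._mailboxes = {}   # pseudonym id -> list of pending messages
        self._tokens = {}      # pseudonym id -> owner's secret token

    def create(self):
        # The caller is assumed to reach P through the anonymity service,
        # so P records no information about who is asking.
        pseudonym, token = secrets.token_hex(8), secrets.token_hex(16)
        self._mailboxes[pseudonym] = []
        self._tokens[pseudonym] = token
        return pseudonym, token

    def send(self, pseudonym, message):
        # Any node that knows the pseudonym can send to it.
        self._mailboxes[pseudonym].append(message)

    def fetch(self, pseudonym, token):
        # Only the holder of the token (the owner) can collect messages.
        if not secrets.compare_digest(self._tokens[pseudonym], token):
            raise PermissionError("not the owner of this pseudonym")
        messages, self._mailboxes[pseudonym] = self._mailboxes[pseudonym], []
        return messages


service = PseudonymService()
p_a, token_a = service.create()      # node A creates P_A at P
service.send(p_a, "hello from B")    # node B only needs to know P_A
print(service.fetch(p_a, token_a))
```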

Need for limited pseudonym lifetime

- Node P is aware that it acts as a proxy for some unknown user
- If P is malicious, the probability of successful traffic analysis is higher when node A uses P as a proxy for a long duration
- Thus, pseudonyms should last a limited time

Privacy versus robustness tradeoff:

- For privacy, pseudonyms should have a short duration
- For robustness of the overlay, pseudonyms should last longer to minimize churn handling

Overlay layer


Pseudonym creation and removal

Each node A maintains its own pseudonym, a pseudonym cache (PC), and its overlay links

The pseudonym-creation module generates a new pseudonym, composed of {P_A, T_life, PK(P_A)}

- P_A: pseudonym identity
- T_life: lifetime of the pseudonym
- PK(P_A): public key used to encrypt messages sent to P_A

The pseudonym-removal module purges expired pseudonyms

- When a pseudonym P_R expires, it is purged from the data structures of all nodes
- When the pseudonym P_A expires, node A generates a new pseudonym
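The pseudonym record and the two modules might look roughly like this. All names are hypothetical, time is counted in shuffle periods, and random bytes stand in for a real public key.

```python
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class Pseudonym:
    identity: str      # P_A
    expires_at: int    # creation time + T_life, in shuffle periods
    public_key: bytes  # PK(P_A); random bytes stand in for a real key


def create_pseudonym(now, lifetime):
    """Pseudonym-creation module: generate a fresh pseudonym."""
    return Pseudonym(secrets.token_hex(8), now + lifetime, secrets.token_bytes(32))


def purge_expired(cache, now):
    """Pseudonym-removal module: drop pseudonyms whose lifetime has elapsed."""
    return {p for p in cache if p.expires_at > now}


def refresh_own(own, now, lifetime):
    """Replace the node's own pseudonym once it has expired."""
    return own if own.expires_at > now else create_pseudonym(now, lifetime)


own = create_pseudonym(now=0, lifetime=90)
cache = {create_pseudonym(now=0, lifetime=10), create_pseudonym(now=0, lifetime=200)}
print(len(purge_expired(cache, now=50)), refresh_own(own, 100, 90) is own)
```

Because every pseudonym carries its own expiry, each node can purge it locally without any coordination.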

Pseudonym distribution

Nodes A and B share an edge in the overlay. Each maintains its own pseudonym, a pseudonym cache (PC), and its overlay links

A modified version of the shuffle protocol in [Stavrou et al. ICNP 2002] is used

At each shuffle period, nodes exchange data with a random neighbor in the overlay

1. Shuffle request msg: node A sends node B a message containing Sample_A, a random sample of {P_A} ∪ PC_A
2. Shuffle response msg: node B sends node A a message containing Sample_B, a random sample of {P_B} ∪ PC_B
3. Each node updates its pseudonym cache after each shuffle exchange:
   - PC_A ← random sample of PC_A ∪ Sample_B
   - PC_B ← random sample of PC_B ∪ Sample_A
4. At both nodes, the pseudonym sampling module is invoked, which updates their overlay links
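Steps 1 to 3 of one shuffle exchange can be sketched as below. The sample and cache sizes are hypothetical parameters, pseudonyms are plain strings, and dropping a node's own pseudonym from what it receives is an assumption made here, not something the slides state.

```python
import random


def sample(items, k, rng):
    """Uniform random sample of at most k distinct items."""
    pool = sorted(items)
    return set(rng.sample(pool, min(k, len(pool))))


def shuffle_exchange(own_a, cache_a, own_b, cache_b, sample_size, cache_size, rng):
    """One shuffle between overlay neighbors A and B; returns the new caches."""
    sample_a = sample({own_a} | cache_a, sample_size, rng)   # 1. request
    sample_b = sample({own_b} | cache_b, sample_size, rng)   # 2. response
    # 3. Each cache becomes a random sample of itself plus the peer's sample.
    # Assumption: a node does not store its own pseudonym in its cache.
    new_a = sample((cache_a | sample_b) - {own_a}, cache_size, rng)
    new_b = sample((cache_b | sample_a) - {own_b}, cache_size, rng)
    return new_a, new_b


rng = random.Random(7)
pc_a, pc_b = shuffle_exchange("P_A", {"P_1", "P_2"}, "P_B", {"P_3", "P_4"},
                              sample_size=2, cache_size=3, rng=rng)
print(sorted(pc_a), sorted(pc_b))
```

Repeated exchanges of this kind spread each pseudonym beyond the owner's direct neighbors, which gives the sampling module candidates for extra links.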

Pseudonym sampling

At node A, the pseudonym sampling module uses the pseudonym cache (PC) and updates the overlay links

- Edges in the trust graph are retained in the overlay
- Fan-out of nodes is extended to decrease the impact of the skewed degree distribution in the trust graph
- Pseudonym sampling ensures that pseudonym links for a node are selected uniformly at random

Pseudonyms are sampled using a protocol similar to Brahms [Bortnikov et al. CN 2009]
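One way to pick links uniformly at random from a stream of pseudonyms, in the spirit of the min-wise samplers used by Brahms, is sketched below. The slides do not give the sampling details, so the salted SHA-256 hash and the names `MinWiseSampler` and `sample_links` are assumptions for illustration.

```python
import hashlib
import secrets


class MinWiseSampler:
    """Keeps one element of a stream: the one with the smallest salted hash."""

    def __init__(self):
        self._salt = secrets.token_bytes(16)   # private random hash function
        self._best = None
        self.value = None

    def offer(self, pseudonym):
        digest = hashlib.sha256(self._salt + pseudonym.encode()).digest()
        if self._best is None or digest < self._best:
            self._best, self.value = digest, pseudonym


def sample_links(stream, slots):
    """Feed every pseudonym in the stream to `slots` independent samplers."""
    samplers = [MinWiseSampler() for _ in range(slots)]
    for pseudonym in stream:
        for s in samplers:
            s.offer(pseudonym)
    return [s.value for s in samplers]


# "P_1" is heavily over-represented in the stream.
stream = ["P_1"] * 100 + ["P_2", "P_3"]
print(sample_links(stream, slots=4))
```

The choice depends only on which distinct pseudonyms were seen, not on how often each appeared, so a pseudonym that is pushed repeatedly gains no advantage.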

Privacy preservation analysis

Privacy requirements

Entities: comm. providers, external users, participating users

Information:

- Content of messages
- Participation of a user: only trusted neighbors of the user are aware
- Trust relations of a participating user: trusted neighbors of the user are aware only of their own relation with that user

Legend: entities should not be able to access the required information; entities must be able to access the required information

Non-disclosure of the content of messages

Attack by external observers

- Learn the content of messages being exchanged

Defense

- Messages from a node A to its trusted neighbor B are encrypted with B's public key PK(B)
- Messages from node A to a pseudonym P_B are encrypted with P_B's public key PK(P_B)

Non-disclosure of participating nodes

Attack by internal or external observers

- Traffic analysis attack to identify participating nodes

Defense

- We rely on anonymity and pseudonym services
- Distributing pseudonyms does not require low-latency guarantees
- More secure anonymity services, such as email remailers, can be used

More privacy preservation analysis

Non-disclosure of the edges in the trust graph

- Timing analysis attack by a set of colluding internal observers
- Low probability of a successful attack
- Successful timing analysis attack is easier
  - If internal observers form a vertex cut in the trust graph, and
  - If internal observers deviate from the protocol

Non-disclosure of participating nodes

- A participating node sends anomalous messages or anomalously timed messages
- To counter the attack, we can use high-latency anonymity services


Evaluation methodology

Goal

Evaluate if we can efficiently produce a robust overlay in a privacy-preserving manner

Methodology

- Friend-to-friend network sampled from the Facebook social graph
- Evaluate robustness of the overlay under different node availability
- Baselines for comparison
  - Trust graph (sampled Facebook social graph)
  - Erdős-Rényi random graph with an average out-degree similar to that of our solution


System parameters

Churn settings

- We use the churn model proposed in [Yao et al. ICNP 2006]
- We model both online and offline times by an exponential distribution
- Average node availability, α = T_on / (T_on + T_off)

Parameters

| Parameter | Value |
| --- | --- |
| Number of nodes in trust graph | 1000 |
| T_off, mean offline time (in shuffle periods) | 30 |
| α, average node availability | Varies |
| T_on, mean online time (in shuffle periods) | Derived from α |
| r, ratio of pseudonym lifetime and T_off | 3 |
| Target number of overlay links per node | 50 |

Assumption: anonymity and pseudonym services are always available
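The derived quantities follow from the definitions above: solving α = T_on / (T_on + T_off) for T_on gives T_on = α · T_off / (1 − α), and the pseudonym lifetime is r · T_off. The sketch below computes both and checks the availability empirically with alternating exponential on/off periods. The function names are hypothetical, and the simulation is a simplification rather than the full churn model of [Yao et al. ICNP 2006].

```python
import random


def mean_online_time(alpha, t_off):
    """Solve alpha = T_on / (T_on + T_off) for T_on."""
    if not 0 <= alpha < 1:
        raise ValueError("alpha must be in [0, 1)")
    return alpha * t_off / (1 - alpha)


def simulate_availability(t_on, t_off, sessions, rng):
    """Empirical availability over alternating exponential on/off periods."""
    online = sum(rng.expovariate(1 / t_on) for _ in range(sessions))
    offline = sum(rng.expovariate(1 / t_off) for _ in range(sessions))
    return online / (online + offline)


t_off, r = 30, 3
t_on = mean_online_time(0.25, t_off)
print(t_on, r * t_off)   # mean online time and pseudonym lifetime, in shuffle periods
print(round(simulate_availability(t_on, t_off, 100_000, random.Random(0)), 2))
```

With α = 0.25 and T_off = 30, nodes stay online for 10 shuffle periods on average, and a pseudonym with r = 3 lasts 90 shuffle periods.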


Robust overlay

[Plot: fraction of disconnected nodes versus node availability (fraction of time alive); series: trust graph, overlay, random graph]

Fraction of disconnected nodes in the overlay is closer to the reference random graph than to the trust graph

Short path lengths

[Plot: normalized avg. path length (log scale, 1 to 1000) versus node availability (fraction of time alive); series: trust graph, overlay, random graph]

The overlay has path lengths similar to the reference random graph

Fast convergence

The overlay bootstraps from the trust graph and is extended towards a more robust overlay

[Plot: fraction of disconnected nodes versus time (shuffle periods, 0 to 1000); series: trust graph, overlay r = 3, overlay r = 9; node availability: 25%]

The overlay converges in approx. 200 shuffle periods

More experimental results

Degree distribution of nodes in the overlay

- Different from the reference random graph
- Biased by the degree of a node in the trust graph

Low message overhead

- Average number of messages sent per shuffle period is 2
- Nodes with higher degree in the trust graph send more messages
- However, this skewness is not large

Decreasing the lifetime of pseudonyms

- Decreases the robustness of the overlay
- Increases the number of links to be replaced in the overlay


Conclusion

Problem

- Provide a scalable approach to construct and maintain robust overlays for a group of users while preserving their privacy

Our Approach

- Extends the overlay from the underlying friend-to-friend network towards a regular random graph network
- Increases robustness
- Preserves privacy
- Decentralized solution
- Low maintenance and fast convergence

Questions?


State of the art

Centralized approach

- Example: Twitter
- Single point of failure w.r.t. availability as well as privacy

Use social relationships as bootstrap mechanism

- Example: MCON [Vasserman et al. CCS 2009]
- Overlay need not correspond to social relationships
- Relies on a trusted third party

Use social relationships as communication overlay

- Example: Freenet in darknet mode [Clarke et al. 2010]
- Resulting overlay may not be robust under node churn

Non-disclosure of the edges of the trust graph

Attack by an internal observer P

- Attack: predict whether A and B have a trust relationship between them
- Methodology: the attacker uses timing analysis to detect the presence of an overlay link between A and B
- If A and B have more neighbors in the overlay, then detecting the presence of an overlay link between them is more difficult
- Also, the overlay link between A and B can be the result of pseudonym distribution over a path that does not involve the attacker
- Thus, an attacker cannot be sure whether the overlay link between A and B is also a trust relationship

Degree distribution at 50% availability

[Plot: number of nodes (log scale, 1 to 100) versus degree (0 to 140); series: trust graph, overlay, random graph]

Nodes in the overlay have more neighbors than in the trust graph

Degree distribution of nodes in the overlay is not similar to that of a regular random graph, as it is biased by the degree of nodes in the trust graph

Message overhead

[Plot: (out-)degree (log scale, 1 to 1000) and avg. messages sent per shuffle period (1 to 8) versus node rank (1 to 1000); series: max. out-degree in overlay, degree in trust graph, messages]

Average number of messages sent per shuffle period by each node at 50% availability

Nodes with higher degree in the trust graph send more messages

Maximum number of messages being sent is approximately twice the total average (= 2)

Effect of lifetime of pseudonym

[Plot: fraction of disconnected nodes versus availability (fraction of time alive); series: trust graph, r = 1, r = 3, r = 9, r = infinite, random graph]

Lower pseudonym lifetime decreases the robustness of the overlay

Number of links replaced over time

[Plot: number of links replaced per node per shuffle period (0 to 16) versus time (shuffle periods, 0 to 10000); series: r = 3, r = 9, r = infinite]

Number of links replaced per node per shuffle period at 25% availability

Lower pseudonym lifetime increases the number of links replaced in the overlay