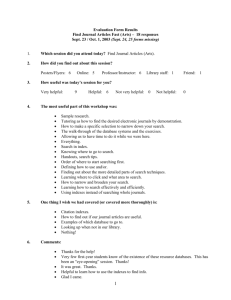


C/67-2

COMPUTER APPROACHES FOR THE HANDLING

OF LARGE SOCIAL SCIENCE DATA FILES

A Progress Report to the National Science Foundation

on Grant GS-727

Submitted by

Ithiel de Sola Pool, James M. Beshers

Stuart McIntosh, and David Griffel

Center for International Studies

M.I.T.

January 1967

ACKNOWLEDGEMENTS

In addition to the National Science Foundation, which supported this project, we wish to extend our thanks to Project MAC, an MIT research program sponsored by the Advanced Research Projects Agency, Department of Defense, under Office of Naval Research Contract Number Nonr-4102(01), which made exceptional computer facilities available to us, and to the Comcom project of the MIT Center for International Studies [supported by ARPA under Air Force Office of Scientific Research Contract Number AF 49(638)-1237], which supported much of the initial program development.

Summary

This is a report on an experiment in computer methods for handling large social science data files. Data processing methods in the social sciences have been heavily conditioned by the limitations of the data processing equipment available, such as punch cards and tapes. Third generation computers permit on-line time-shared interactive data analysis in entirely new ways. We sought to use these new capabilities in a system that would enable social scientists to do things that were previously impractical.

Among the most important objectives of the system is to permit social scientists to work on data from a whole library of data simultaneously, rather than on single studies. (This we call working on multi-source data.) Another important objective was to permit social scientists to work simultaneously on data at different levels of aggregation. For example, a file of voting statistics by precinct and a file of individual responses to a public opinion poll might be combined to examine how non-voters in a one-party neighborhood differ in attitudes from non-voters in a contested neighborhood. (This we call working on multi-level data.)
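The precinct and opinion-poll example can be sketched in modern terms as follows. This is only an illustration of the idea of multi-level data, not the Admins implementation; the field names (`dem_share`, `voted`, `attitude`), the sample records, and the 0.75 threshold for a "one-party" precinct are all assumptions made for the sketch.

```python
from collections import Counter

# Hypothetical precinct-level file: one record per precinct (aggregate level).
precincts = {
    "P1": {"dem_share": 0.91},   # lopsided vote: treated here as one-party
    "P2": {"dem_share": 0.52},   # close vote: treated here as contested
}

# Hypothetical poll file: one record per respondent (individual level).
respondents = [
    {"id": 1, "precinct": "P1", "voted": False, "attitude": "satisfied"},
    {"id": 2, "precinct": "P1", "voted": True,  "attitude": "satisfied"},
    {"id": 3, "precinct": "P2", "voted": False, "attitude": "dissatisfied"},
    {"id": 4, "precinct": "P2", "voted": False, "attitude": "satisfied"},
]

def neighborhood_type(precinct_id, threshold=0.75):
    """Classify a precinct from its aggregate vote (threshold is an assumption)."""
    share = precincts[precinct_id]["dem_share"]
    return "one-party" if max(share, 1 - share) >= threshold else "contested"

def nonvoter_attitudes():
    """Count non-voters' attitudes by the type of neighborhood they live in."""
    table = Counter()
    for r in respondents:
        if not r["voted"]:
            table[(neighborhood_type(r["precinct"]), r["attitude"])] += 1
    return table
```

The point of the sketch is that the individual record carries a pointer (`precinct`) into the aggregate file, so a characteristic defined at one level can be used to classify records at another.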

Another objective was to permit researchers who do not know computer programming to work on-line on the computer.

To meet these objectives a data analysis system called the Admins system was developed. The system consists of four sub-systems as follows:

(1) The Organizer: a sub-system for creation of machine-executable codebooks.

(2) The Processor: a sub-system to bring the data into correspondence with that codebook.

(3) The Structurer: a sub-system for inverting data files.

(4) The Cross-analyzer: a sub-system for analyzing data.

The development of the Admins system has not been a theoretical exercise. It has been developed in the environment of an actual data archive used by students and faculty members at MIT for the conduct of their research. Approximately 15 users are now using the system.

The Organizer Sub-System

The codebooks and data as they come to our data archive are full of errors. Experience has shown that error correction takes a good half of the analyst's time. The job of the organizer sub-system is to enable the researcher to produce an error-free machine-executable codebook covering that part of the data which he is going to use right away. (This machine-executable codebook we call an "adform", short for administrative form.)

The researcher types at the console the name by which he wants to designate a question. He then types the text that corresponds to that name, along with question and answer descriptions. (That will be labels on output tables.) He then types the number of possible answers, and where this data is to be found on the data storage medium (e.g. card and column). He can also type any rules to which the data must conform, e.g. single-punch, multiple-punch, if non-voter is punched vote cannot be punched, etc. For each question the researcher provides that minimum information which will permit unambiguous interpretation of the data format.

The computer then checks for errors in the codebook such as failure to provide all the needed information or contradictory information provided, e.g. two questions listed in the same punches. The errors are printed out and corrected by the user, until after 2-3 cycles he is likely to have a complete and clean adform.
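A codebook check of this kind might look like the sketch below. The report does not give the adform layout, so the `AdformEntry` fields, the rule names, and the error messages are hypothetical; the sketch only shows the two kinds of error mentioned above, missing information and two questions claiming the same card and column.

```python
from dataclasses import dataclass, field

@dataclass
class AdformEntry:
    """One question in a hypothetical machine-executable codebook."""
    name: str                     # short name typed by the researcher
    text: str                     # question text, used as a table label
    card: int                     # where the data is found: card number
    column: int                   # ... and column on that card
    answers: dict = field(default_factory=dict)   # punch code -> answer label
    rule: str = "single-punch"    # assumed rule names: single-punch, multiple-punch

def check_adform(entries):
    """Return a list of format errors found in the codebook itself."""
    errors = []
    seen = {}                     # (card, column) -> name of first question there
    for e in entries:
        if not e.text:
            errors.append(f"{e.name}: missing question text")
        if not e.answers:
            errors.append(f"{e.name}: no answers described")
        if e.rule not in ("single-punch", "multiple-punch"):
            errors.append(f"{e.name}: unknown rule {e.rule!r}")
        loc = (e.card, e.column)
        if loc in seen:
            errors.append(f"{e.name}: same card and column as {seen[loc]}")
        else:
            seen[loc] = e.name
    return errors
```

The researcher would run such a check, correct the listed entries, and repeat until the list comes back empty.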

He needs to do this only with questions he is about to

use because he can always add adforms later.

Errors, of course, still remain but not errors in the

format of the adform.

What may remain are substantive errors

or failure of the adform to conform to the data.

To check the

latter matter we turn to the next sub-system.

The Processor Sub-System

Now the user puts data onto the disc. Up to now he has looked only at the codebook, not at data. The processor checks the data to see if there are errors that consist of discrepancy between the data and the adform. For example, are there multiple punches in a single column variable? Are there punches somewhere that should be blank? To save time, the processor checks errors in various ways that the user may specify. He may, for example, test every 10th record, test the first hundred records, print out the first hundred errors then stop, disregard less than three errors, etc.

As the processor scans records it also computes the marginals, so by the time a clean data file, consonant with the adform, is produced, a complete listing of marginals is also available to guide the user in his future analysis.
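The processor pass described above can be sketched as a single scan that both audits records against the codebook and accumulates marginals. The record layout (a dict from variable name to the set of punches found), the `legal`/`single` keys, and the `every` and `max_errors` options are assumptions standing in for the user-specified checking modes; note that in this sketch the marginals cover only the records actually scanned.

```python
from collections import Counter

def process(records, adform, every=1, max_errors=100):
    """Audit records against a codebook and tally marginals in the same pass.

    records: list of dicts, variable name -> set of punches found (assumed layout)
    adform:  dict, variable name -> {"legal": set of punches, "single": bool}
    every:   test every Nth record only
    max_errors: stop once this many discrepancies have been collected
    """
    errors = []
    marginals = {var: Counter() for var in adform}
    for i, rec in enumerate(records):
        if i % every != 0:
            continue                      # skipped by the sampling option
        for var, spec in adform.items():
            punches = rec.get(var, set())
            if spec["single"] and len(punches) > 1:
                errors.append((i, var, "multiple punches"))
            illegal = punches - spec["legal"]
            if illegal:
                errors.append((i, var, f"illegal punches {sorted(illegal)}"))
            for p in punches:
                marginals[var][p] += 1    # frequency count per answer code
        if len(errors) >= max_errors:
            break
    return errors, marginals
```

Each error is reported with the record number and variable, so the user can decide whether to correct the data or the codebook.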

The Analyzer Sub-System*

The analyzer sub-system permits the user to give a name to any set of records. For example we may name as "college-educated" all persons who said they had attended college. "Union", "intersection", and other Boolean commands permit new indexes to be constructed; for example the name "old-boys" may be used for those "college-educated" who are also "old". Each of these names designates an index, i.e., a list of pointers to those persons who have that characteristic. By building indexes out of indexes out of indexes bafflingly complex indexes can be constructed. The confused user can type in an index name and ask the computer to tell him the constructions that compose that index, or for that matter all the constructions in which that index is used. The computer keeps track of all that the analyst has done.

*The inversion of the files need not be explained in this summary.

The analyzer produces labelled cross-tabulation tables of one variable against another, or other statistics about an index. If desired, statistical tests can be applied. There are over 50 different instructions the user can give the analyzer sub-system. If he forgets what an instruction is he can ask, and the system will print out its definition and the proper format for it.

Multi-source and multi-level data are easily handled by the analyzer thanks to the pointers and naming conventions which can be equated across sources.
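Named indexes of this kind can be sketched as sets of record pointers together with a record of how each one was built. The class and method names below (`IndexBook`, `define`, `explain`, `used_in`) are invented for the illustration and are not Admins instructions; the age cut-off used in the usage note is likewise an assumption.

```python
class IndexBook:
    """Named indexes: each name maps to a set of record pointers (row numbers)."""

    def __init__(self):
        self.indexes = {}       # name -> frozenset of record pointers
        self.definitions = {}   # name -> description of how it was constructed

    def define(self, name, records, predicate, description):
        """Build a basic index from the records satisfying a predicate."""
        self.indexes[name] = frozenset(
            i for i, r in enumerate(records) if predicate(r))
        self.definitions[name] = description

    def intersection(self, name, a, b):
        """Build a new index from the records present in both a and b."""
        self.indexes[name] = self.indexes[a] & self.indexes[b]
        self.definitions[name] = ("intersection", a, b)

    def union(self, name, a, b):
        """Build a new index from the records present in either a or b."""
        self.indexes[name] = self.indexes[a] | self.indexes[b]
        self.definitions[name] = ("union", a, b)

    def explain(self, name):
        """Tell the user how an index was constructed."""
        return self.definitions[name]

    def used_in(self, name):
        """List every index whose construction uses the given index."""
        return sorted(n for n, d in self.definitions.items()
                      if isinstance(d, tuple) and name in d[1:])
```

With three sample respondents, defining "college-educated" and "old" (say, age 65 or over) and then calling `intersection("old-boys", "college-educated", "old")` yields the pointers of those who satisfy both, and `explain("old-boys")` recovers the construction for a user who has lost track of it.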

File Management

Let us note that the same Admins system that permits improved analysis of data about respondents and their characteristics can be turned onto the data archive itself to become the library management system. To the computer, a study and its characteristics, a question and its characteristics, or a respondent and his characteristics are all the same sort of thing. Thus the Admins system can be used for the construction of a catalogue of the data archive, the construction of indexes to it, the creation of complex indexes to the collection, and the searching of the indexes for any particular kind of data.

The Future

Further steps in the development of Admins include:

(1) Research on how Admins is used by its users to see how it can be simplified and made more natural to use.

(2) Improvement of file management capabilities. (This we call macro-organization.)

(3) Introducing a small scope to give the user the advantages of a facility like a pencil and paper rather than just a facility like a typewriter.

Part 1

PURPOSES OF THE PROJECT

On June 1, 1965 we commenced work on a pilot study of "Computer

Approaches for the Handling of Large Social Science Data Files".

In the

proposal for the project that had been submitted to the National Science

Foundation we made the following statements about our purposes and plans:

"The needs of the social sciences are not being fully met

by present trends in computer methods. This project is designed

to see that these needs are met, and thereby, we believe, to

drastically change the approach of social scientists to the

analysis of data.

"The social sciences are among those sciences which are

data-rich and theory-poor. Typical social research studies use

large bodies of statistical records such as social surveys, the

census, records of economic transactions, etc. Computation

centers and computer systems have been designed largely to serve

the needs of natural scientists and engineers who emphasize

computation rather than manipulation of large data files.

"The new system on which we are working and which we will

continue to develop has a number of important characteristics.

1.

It is part of the on-line time-shared system being pioneered at MIT by Project MAC.

The fact that we will be working on an on-line time-shared system also means the introduction into social

science analysis of naturalistic question and answer

modes of man-machine interaction, with quick response

rather than the present long time lags and overmassive

data production.

2. The research methods that result from our work are

relatively computer-independent and program-independent.

That is to say, they can be used by social scientists

who are not themselves programmers.

3.

Our systems provide for the handling of large archives

of multi-level data.

4.

The systems being developed will use list structures in

storage to facilitate use of the multi-source multi-level

data.

5.

The purpose is to create social data bases that facilitate

the construction and testing of computer models of social

systems."


Part 2

PROJECT ACTIVITIES

Our approach to finding better methods for handling social science

data was largely empirical--learning by doing.

We established a functioning prototype data archive with over 1000 surveys and with a score or two of users. At the same time we explored the problems of current data handling in a series of seminars and conferences, particularly during the first year of the project. Three different types of seminar and meetings were held.

Firstly, two meetings of the Technical Committee of the Council of

Social Science Data Archives were held here at MIT.

The first of these

was the inaugural meeting of the Technical Committee at which general

problems of data handling were outlined.

The second meeting, held this

fall, was on the subject of a telecommunications experiment which is being

supported by NSF and in which Berkeley, The University of Michigan, the

Roper Center, and MIT are participating.

A second series of meetings were seminars of persons in the Cambridge

community involved in computer data handling.

Among the activities from

which persons came were Project INTREX (concerned with automated library

systems),

the TIP system (concerned with retrieval of physics literature),

some economists concerned with economic series, the General Inquirer group

from Harvard (concerned with content

analysis data), and city planners

concerned with urban and demographic data as well as survey research specialists.

The seminars were largely devoted to the presentation of specific

data handling programs and problems.

The third series of seminars were user seminars.

We listened to

presentations by data users from different substantive fields of study.

Each user described his substantive analysis problems.

The discussions

allowed interaction between these substantive users and the data base

oriented people who had participated in the previous seminar series.

Common problems that were revealed in these discussions deeply affected

our understanding of user requirements.

Let us list some of the sessions. Professors Lerner, Beshers, Tilly, and others discussed examples of large, complex data files. Professor Beshers went into the problems of multi-level data, including particularly census data, and also aggregate data such as that found in the city and county data books. Professors Charles Tilly and Stephan Thernstrom of Harvard University and Gilbert Shapiro of Boston College presented examples of computer methods for historical archives such as records of political disturbances and of social mobility in the nineteenth century. Among other presentations three dealt with problems arising in the analysis of more or less free formatted text (which in this report we call lightly structured data). Professor Frank Bonilla and Peter Bos described their list processing approach to qualitative data analysis, which has been partially developed under the auspices of this project. Professor Philip Stone of Harvard presented his programs used for content analysis; Professor Joseph Weizenbaum described the ELIZA program, which represents natural language conversation. Professor Carl Overhage described the INTREX project and its approach to data problems.

When we first started working under the NSF grant in 1965, we had already begun development of a data handling system in connection with work that we were doing at MIT on several research projects that required data processing. We continued with that effort to develop a modern data handling system. We named it the MITSAS system.

The basic objective of the MITSAS system was to produce tables (and

apply statistical tests to the data in them) rapidly, on demand of the

user, with the user able to draw the data from any one of a large number

of files (for example, surveys) in a large archive.

Other longer range

objectives were to facilitate analysis of multi-level data (e.g. combining

analysis of survey data with analysis of related census data) and analysing

loosely structured textual flows.

However, the initial problem to which we

addressed ourselves was to break two bottlenecks:

one, the need to pro-

duce tables quickly and easily on demand by persons not competent to write

computer programs, and, two, to take into an archive a large flow of raw

data.

The first of these bottlenecks was successfully broken by the development by Noel Morris of a CROSSTAB program which with great speed produces a considerable number of tables in bulk processing mode and which will print out such tables on-line at remote consoles one at a time as selected. The program is designed to accept survey data in virtually any format. Included in the system are facilities for doing simple recoding on input data and producing output in standard format on magnetic tape. The system is divided into several sections: the cross tabulation routines read and process survey input data and write magnetic tape containing tabular information; the labelling routine accepts information about the variables used in the input data; the statistical package computes requested statistical measures; the output routine produces designated tabular output in readable format.
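The division of labor among the cross tabulation, recoding, labelling, and output routines can be illustrated with a small sketch. It is not the CROSSTAB program itself; the function names, the record layout, and the recode table are assumptions chosen to show how the pieces fit together.

```python
from collections import Counter

def crosstab(records, row_var, col_var, recode=None):
    """Count co-occurrences of two variables, applying simple recodes on input.

    recode: optional dict, variable name -> {old code: new code}
    """
    recode = recode or {}
    table = Counter()
    for rec in records:
        r = recode.get(row_var, {}).get(rec[row_var], rec[row_var])
        c = recode.get(col_var, {}).get(rec[col_var], rec[col_var])
        table[(r, c)] += 1
    return table

def render(table, row_labels, col_labels):
    """Produce a labelled, tab-separated table; labels map code -> text."""
    lines = ["\t".join([""] + [col_labels[c] for c in col_labels])]
    for r in row_labels:
        cells = [str(table[(r, c)]) for c in col_labels]
        lines.append("\t".join([row_labels[r]] + cells))
    return "\n".join(lines)
```

Keeping the labels separate from the counting step means the same table can be relabelled or regrouped without rereading the data.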

The main merits of the CROSSTAB program are its very high speed and the relative flexibility and simplicity of the control cards used to designate the variables to be run, to group codes and rearrange them if desired, etc.

Although for reasons about to be discussed, the basic approach of

the MITSAS system has been abandoned in favor of a new and improved approach,

the CROSSTAB program continues to be extensively used and has been the

basis for further programming developments for particular applications in

data processing.

It has proved especially useful to persons desiring to

process large amounts of uniformly formatted data.

It has been used much

more in batch processing mode than on-line although the on-line production

of tables is provided for.

The other bottleneck to which the MITSAS system addressed itself was that of accepting large amounts of data into an archive. The basic problem was to get that data into shape where it could be used by the social scientist. The philosophy of our approach all along has been to get a new survey into the computer files with an absolute minimum of human manipulation.

When an old survey comes to an archive from some original source, what arrives are two pieces of equipment: (1) a codebook, and (2) a deck of cards or a tape. Sometimes other things come along too, such as the original questionnaire separated from the codebook, or reports written about the study, or memorandum information about the data such as the number of cards in the deck, the sampling methods used, etc.

If

a couple

of thousand surveys come to a small archive, as is entirely possible these

days, the odds are that all but a few hundred of these will not be used

by anyone for years; it is also certain that there will be large numbers

of errors in the material received.

There is no guarantee that the codebook

and the card deck actually match 100%.

There are likely to be errors and

omissions in the codebook as well as keypunching errors in the cards.

To find errors in, clean, edit, and later on otherwise manipulate the codebooks to provide machine readable labels, etc., can be by ordinary methods a matter of weeks of work for even a single survey. The objective of the MITSAS system was to reduce this labor to manageable proportions in a number of ways. We desired that it should take no more than an hour to get the codebook and forms for an average survey ready for the keypunch operator.

This obviously meant that the survey went into the computer uncleaned and full of errors, inconsistencies and ambiguities.

It soon became apparent that the scale of our new venture imposed radical constraints upon our system. The conventional methods for handling single social surveys, which we were attempting to extend, simply could not meet the problems posed by a large heterogeneous set of surveys. The "bad" data problem was insurmountable if one were to attempt to re-format and "clean" each set of data as it came in. The place to straighten out most of this trouble was on-line, on the console, after a real user had appeared. To avoid an intolerable volume of wasted effort, cleaning and editing operations should be done in response to client demand rather than automatically. Even more important, the procedures for dealing with errors are not invariant between users; each will want to make his own decisions.

Nonetheless,

a certain amount of initial checking was felt to be necessary in order to

place the data in the archive and some rapidly usable tools were programed

for this purpose.

The MITSAS system was a collection of separate programs, each designed to meet a current problem in survey processing. As far as developed the MITSAS system included: (1) An X-ray program which gives a hole count of the punches in the card images. This is used initially to ascertain which columns are multi-punched, which areas of the cards are used, etc. It is also used to establish that the different decks in a single study are complete, and that punches occur in the areas in which the codebook says they should occur.

(2)

A tape map program which tells us in what order

the cards in a study are arranged on the tape.

(3)

A sort program to

rearrange the cards on a tape into a desired order, or to correct an error

in card order.

(4)

An edit program which is used to recode and re-organize

a data deck into packed binary format, by which new variables can be created

or placed in a desirable form, e.g. eliminating multiple punching.
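The hole count performed by the X-ray program, the first of the programs listed above, can be sketched as follows. The representation of a card image as a mapping from column number to the set of punched rows is an assumption made for the illustration; the report does not describe the program's internal format.

```python
from collections import Counter

def xray(cards):
    """Count punches per (column, row) over a deck of card images.

    cards: list of card images; each image is a dict mapping a column
    number to the set of rows punched in that column (assumed layout).
    Returns the hole count, the multi-punched columns, and the columns used.
    """
    hole_count = Counter()      # (column, row) -> number of cards with that punch
    multi_punched = set()       # columns holding more than one punch on some card
    for card in cards:
        for col, rows in card.items():
            for row in rows:
                hole_count[(col, row)] += 1
            if len(rows) > 1:
                multi_punched.add(col)
    used = sorted({col for col, _ in hole_count})
    return hole_count, sorted(multi_punched), used
```

Comparing the list of used columns with the columns named in the codebook gives a first, cheap indication of whether deck and codebook agree.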

The recoding capability provided by the edit program was designed to

achieve the following objectives:

(1) Recode multiple punches (this was intended to take much of the burden off cross tabulation and statistical routines). (2) Redo poorly coded multiple level filtration questions (a filtration question is one where the code for a certain response is dependent upon a response made to a previous question, e.g. "if nonvoter, ask reason why"). (3) Collapse data poor questions into more compact ones.

(4) Clean errors in dirty surveys.

(5) Sort disorganized surveys and

inform the user of incomplete respondent decks.

(6) Rearrange structural

format of the entire survey, i.e. group together questions pertaining to

similar topics on the same card.

A generalized editing system has to meet two of the more prevalent problems in social science data: (1) that of adaption to our methods of obsolete conventions concerning the storage medium (e.g., punch card format), and (2) discrepancy errors in the data itself. The editing system needs to contain a problem oriented language with sufficient power to edit and/or recode both convention discrepancies and error discrepancies. An editing system needs also to contain an inspection capability for comparing actual data with a protocol and providing feedback for proper editing decisions.

These were the objectives of our first approach to an edit routine.

Despite considerable progress in developing the MITSAS system, our experience with that effort and the ideas generated in our seminar discussions led us to abandon that first approach. The interfaces between separate component programs proved extremely difficult to design when not integrated into a unified system. Furthermore, and even more important, we came to recognize that it is at least premature and possibly destructive of creative analysis to standardize structures for data content.

The MITSAS system from

the beginning had sought to maintain the original data in something very

nearly like its original format.

But, in response to constraints that

arose from the requirements of second generation computers, we did anticipate

at least a modest amount of editing to force the data into economical forms

for storage, for retrieval from tape, and for simplification in analysis.

But as we noted above even this editing involved intolerable amounts of

human effort; furthermore, the availability of third generation computer

resources in Project MAC,

with random access to large disk files, suggested

a quite different approach free from some of the restrictions of MITSAS.

It

made

it

possible for the user to define data structures to meet his

own needs rather than conforming to system requirements.

The goal of a standardized and generally acceptable data structure may

never be achieved and in fact, in a dynamic science, probably should not be

aspired to.

Furthermore, the cost of trying to implement such standards

is considerable.

For example, it took a competent technical assistant over a year to rearrange about 100 surveys according to the wishes of an experienced survey analyst.

In the light of these considerations we scrapped our first attempt

(as must generally be done in developing any complex computer system)

and started over again.

The new approach, which we call the ADMINS system, has been developed by Stuart McIntosh and David Griffel. Their system harnesses computer-usable interactivity to its fullest extent, in order to allow the user to re-structure data in accordance with his own desires and to re-organize the naming of his data. The system, in order not to lose information and to permit replication of transformations earlier made in the data, keeps a record of all changes made and keeps the codebook and the data in correspondence with each other. The system was designed to meet a number of other criteria too. Analysis of what social scientists do when working with a data base yielded the following design criteria for the system:

(1)

The system must have the capability, under flexible error checking procedures, of keeping (a) the data prototype contained in the codebook, and (b) the data files in correspondence with each other. The user must have the ability to change either data or prototype as he judges necessary.

(2)

The system must allow a social scientist to build indexes from

the data both within a single data file and across data files.

The indexes may be information indexes, a listing of the location of responses bearing on some subject, for example, social

mobility; or they may be social science indices, e.g. a composite measure of social mobility.

These indexes as retrieved

from the computer are a major analysis tool.

The manipulation

of these indexes may take the form of co-occurrence (for example,

cross tabulation) tables or tree construction.

(3)

The system design should be embodied in a computer system such

as Project MAC where there is highly responsive interaction

between man and machine.

(4)

The system must be user oriented.

The goal is to place a

social scientist on-line, where he may interact with his data

without the aid of programmer, clerical, or technical interpreters.

(5)

The system must allow the social science user to provide considerable feedback into its design and embodiment.

The ADMINS system was designed to meet the above criteria.

In rough

outline the substance of the system consists of four sub-systems as

follows:

(1)

The Organizer is a sub-system which permits creation of machine-executable codebooks, both for data processing and data

auditing.

(2)

The Processor is a sub-system which uses the executable

codebook, to transform the data to bring it

into correspondence

with that codebook.

(3)

The Structurer is a sub-system for inverting data files in a

way that will permit highly efficient analysis with third

generation computer systems.

(4)

The Cross-analyzer is a sub-system which, operating in an interactive mode, builds indexes and also produces co-occurrence tables

both within and across files.
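The idea behind the Structurer and Cross-analyzer can be illustrated by a small inverted-file sketch. The report does not specify the inverted layout at this point, so the structure below (variable, then value, then list of record pointers) is an assumed, commonly used form rather than the ADMINS format, and the function names are hypothetical.

```python
from collections import defaultdict

def invert(records):
    """Turn record-by-record data into variable -> value -> record pointers."""
    inverted = defaultdict(lambda: defaultdict(list))
    for pointer, rec in enumerate(records):
        for var, value in rec.items():
            inverted[var][value].append(pointer)
    return inverted

def cooccurrence(inverted, var_a, var_b):
    """Build a co-occurrence table by intersecting pointer lists."""
    table = {}
    for a, ptrs_a in inverted[var_a].items():
        for b, ptrs_b in inverted[var_b].items():
            table[(a, b)] = len(set(ptrs_a) & set(ptrs_b))
    return table
```

Once the file is inverted, a table of one variable against another needs only the pointer lists for those two variables, not a pass over every full record.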

At present, the ADMINS system is being used as a working prototype

and is constantly being improved as a result of procedural research and user

feedback.

The development of the ADMINS system has not been a theoretical

exercise.

It

has been developed in the environment of an actual data

archive used by students and faculty members at MIT for the conduct of their

research.

The MIT data archive now contains over a thousand foreign surveys

with several hundred American surveys on order.

The overwhelming majority

of these materials come from the Roper Public Opinion Research Center in

Williamstown.

The MIT archive is a member of the International Survey

Library Association established by the Roper Center.

Our foreign survey

collection contains the equivalent of approximately 1,250,000 cards.

We are developing a computer usable catalog of this collection of

codebooks.

We can use the ADMINS system to analyze our collection so as to find the codebook description which fits the analyst's particular interest. We can also use ADMINS to analyze the data file described by a particular codebook. Also, where the codebooks have been designed by CENIS researchers, we are making the codebooks machine readable and then developing methods for producing adforms, i.e. machine-executable codebooks, from these machine readable codebooks. Further, we are developing computer usable classified indexes to these machine readable codebooks, which we will integrate into the Roper item index. We will be able to transfer this experience to our total collection when all of the codebooks become computer usable. Attached in Appendix 2-A is a list of the MIT CENIS surveys whose codebooks we are making computer usable in support of the required analysis.

Finally, there have been several classroom sessions and innumerable tutorial sessions with the fifteen or so most active users who are learning to use the ADMINS system for their particular substantive analysis. A list of ADMINS users is attached, Appendix 2-B.

Appendix 2-A

Professor Frank Bonilla in his study of the Venezuelan Elite has

biographic and career data for 200 respondents on which he is doing a

trend analysis using Admins.

Professor Daniel Lerner administered attitudinal surveys to elite

panels in three European countries (England, France, Germany) over five

time periods (1955,

1956, 1959, 1961, 1965).

The analysis of this con-

temporary multi-source data has already begun on Admins.

This research

involves analyzing attitude change towards the concept of the European

community vis-à-vis the Atlantic community within these three countries

over the designated time period.

Professor Ithiel Pool is analyzing (in conjunction with a mass

media simulation of the communist bloc) eighteen surveys administered by

Radio Free Europe over five eastern European countries during the late

fifties and early sixties.

Here too we have contemporary social data of a multi-source nature. This research involves analyzing the effect of mass media and communications--specifically Radio Free Europe--on political attitude change in five eastern European countries over the designated time period.

Professor Jose Silva (in conjunction with the Cendes project at

MIT CIS) has a survey administered to forty social groups (e.g., students, priests) in Venezuela in the early sixties. He wishes to build social indices which are applicable across these many groups. Professor Silva has already used an earlier version of Admins to cross two source files (e.g., social groups) building four social science indexes. These social indices (e.g., propensity to violence, want-get vs. satisfaction) when built across the differing social groups will provide initialization parameters for a dynamic social simulation of Venezuelan society.

Professors Ithiel Pool and George Angell have biographic and

attitudinal surveys administered to 200 MIT sophomores taking an experimental

introductory course in social science, and 100 MIT sophomores in a control

group.

The attitudinal surveys were administered both before and after the

course was given.

As well, results from psychological tests, histories at

MIT and admissions data for these 300 were available.

Here we have five

different data sources prepared at different times, however describing the

same population; an integrated analysis of this data is planned on Admins.

This research is studying the political and social attitudes of MIT undergraduates--who are viewed as a prototype group for science undergraduates

at other universities--and the effect of a sophisticated introductory social

science course on these attitudes.

In the elite European panel data already described there exists

a cross reference file of people who repeated over the time periods, i.e.,

we have a record of the attitude changes over time of individuals.

Professor Morton Gorden wishes to analyze the "repeaters", which are a sub-group of the entire panel, on Admins using the multi-level capabilities

of the analyzer to relate the identical individuals over time.

Professor James Beshers has urban data for the Boston area organized

by individual, by family unit, and by residence district.

In his study of

migration he needs to trace individual movement by relating individual,

family and residence subfiles. Summarized migration data will be input to

a dynamic Markov model of migration flow. Results produced by these models

will feed back into the data analysis.

Appendix 2-B

ADMINS USERS

Name               Research Topic

J. Beshers         Urban Planning
P. Alaman          Urban Planning
I. Pool            Eastern European Surveys
G. Angell          Educational Data
F. Bonilla         Venezuelan Elite Study
J. Silva           Venezuelan Survey
D. Lerner          European Elite Interviews
M. Gorden          European Elite Interviews
F. Frey            Turkish Peasant Survey
A. Kessler         Turkish Peasant Survey
Course 17.91       Student Research
Course 17.92       Student Research
David Griffel      Data Archive
Stuart McIntosh    Data Archive


Part 3

THE CURRENT ADMINS SYSTEM

Our purpose in the design of the current ADMINS system is to analyze

data at a console, starting with the rawest of materials and ending up with

analyzed measures and arrays.

To do this ADMINS provides highly interactive

sub-systems that will (1) allow the necessary clerical operations so that

the social scientist can re-structure the content of his data by recoding

his variables and by re-grouping them; (2) provide a re-organizing capability by allowing the social scientist to name the grouped data according

to his purpose; (3) allow statistical manipulations on the named data files.

The data structures that we deal with are basically matrices. There are N
records, each record describing an individual or social aggregate of some
sort. The structure of the data in each of these records is specified by a
codebook. Our objective is to bring this codebook "scaffolding" into
correspondence with the data by a series of clerical operations that are
interactive. That is, we can perform a clerical operation, refer to the
result of this operation, and with this new evidence proceed to another
clerical operation. We must also arrange our clerical operations so that we
can work in more than one file of data at the same time, because our analysis
will require that we can refer to and use data from different files, create
new files, name new data sub-sets and indeed completely reorganize the
original data according to our current purpose. We have to be able to save
the results of our operations in a public and explicit way so that they can
be resumed and/or replicated, and at all times the codebook and data must be
in correspondence. These activities must be computer based (not people
based); what is required of people is a knowledge of the clerical operations,
an ability to name the results of their operations and a purpose that they
can make public vis-a-vis specific data. The following descriptive narrative
indicates how ADMINS is used for the analysis of social data.

The Organizer Sub-System

The codebook that describes the data does not usually come to us in computer
usable form. In order to make it computer usable we type in at the console
the questions and answers in the codebook for that portion of the data which
we wish to analyze, and add to this a variety of control statements. The
information typed at the console we call an adform, short for administrative
form. This is normally typed selectively by the questions of interest to the
user, rather than typing one codebook out from beginning to end, though it
can be done either way. The adform is input to the Organizer sub-system. If
the researcher starts by typing only that which he wants to use right away he
does not limit himself later on, because data resulting from different
adforms can be combined for analysis at will.

The codebook describes the state of the data as it ought to be. Assuming
there were no errors in the actual recording of the data, the codebook will
be an exact description of the data. Of course, there might have been errors
in the codebook descriptions as well. Because errors are inevitable and in
fact turn out empirically to consume a major portion of the analyst's time,
auditing capabilities are provided. The function of the audit is to take the
codebook description of the data as it ought to be, and find if the data
compares exactly with the codebook description or not. When it does not,
there will be an error message of an audit nature telling us of the
discrepancy (the lack of correspondence) between what the codebook says there
ought to be and what the data is saying there is.


We cannot emphasize too much the importance of providing error correcting operations.

If we learned anything from the series of seminars

and data analysis experiments that we conducted it

was that errors of one

sort or another take at least half of the time of data analysts.

The particular computer system configuration that we use, the MAC system here
at MIT, has several different programs for editing textual input data of
moderate size. One of these programs is called EDL. It is this program we use
to edit adforms. In other words, if we make typographic errors of one kind or
another typing in our adform, we use the various change, delete, retype, and
printing features of EDL to allow us to pick up our typographic errors as we
go along and change them. This is called editing in the generic sense. There
are many other uses of the word editing also. For example, the word editing
is used sometimes to apply to the change that one makes in transforming an
input code (i.e. the list of responses under a question as it comes in) into
an output code (i.e. the revised grouping of these for analysis). There are
programs called editing routines that do this, and this is a very different
kind of editing, to which we refer below.

In the ADMINS system the social scientist never alters the actual source
data, even if he considers it to have errors in it. He alters it for his use
if he so desires. He does such altering of data after he has processed the
codebook and the data file under the organizer-processor loop to find out
discrepancies, i.e. lack of correspondence between the adform and the data.
The analyst can then either change the codebook or change the data as he
concludes he ought. The function of altering the data can also be called
editing and we have programs which will allow us to so alter the data. The
generic term that we have applied to the act of altering the input record to
the output record for all of the respondents in a given condition is
"transformation", and the term we have used for changing a particular record
condition after one discovers an error in the data we have called altering.

The output from the organizer program, which has taken the adform as input,
is, in effect, a machine-executable codebook that knows everything there is
to know about the state of a particular data file. This organizer program is
a kind of application compiler and one is, in effect, processing this
normative "data" (which is another use of the word processing); i.e. the
adform is input to this application compiler program called the organizer,
where one first of all organizes in what we call a diagnostic mode. In this
mode one gets back two kinds of error messages. If there are some syntactical
errors in the adform, that is, one has made some kind of transformation
statement or audit statement incorrectly according to the syntactical
conventions of adforming, one will get an error message. One also gets error
messages of another type, for the organizer checks on adform incoherency as
well. For example, one has nine subject descriptions, nine entries, but ten
codes mentioned for transformation. This will be described as an error in the
diagnostic mode of organizing the adform.

There are a large variety of messages that the computer gives about
syntactical and coherence errors when it is running in the diagnostic mode.
It describes these errors in effect by running a historical commentary which
starts at the beginning and continues until, in effect, the complexity that
ensues by one error sitting on top of another so disorients the beast that it
stops "talking". However, these error messages are isolated for the user by
the question category in which they occurred and for that part of the
statement within the question, i.e. the adform statement within the question,
with which they are concerned. It is quite easy for the person to go to this
error message at the console and relate it to the particular part of the
adform where the trouble occurred. As the first set of errors are eliminated,
deeper ones will be flagged on the next round.

After two or three rounds of this organizer diagnostic loop one eventually
clears out all of one's syntactical and coherence errors. The computer
informs one that the adform is within itself error-free. One then runs the
adform in a non-diagnostic mode. This results in outputting a
machine-executable codebook, which contains programs for auditing and
transforming data, tables of subject description (e.g. question and answer
text), tables of format locations and so on.

The Processor Sub-System

After organizing the adform one then usually puts some data on the disk. Note
that up to now we have worked only on the codebook without having to have any
data. We now wish to process data under this machine-executable codebook,
first to look for errors in correspondence between the two. We have a program
called the processor that is, in effect, a program that runs the data under
the controls in the executable codebook. This process program has many
different types of error statements and different types of modes of use. One
can sample items of the data as one is processing them, one can set for
errors by category, one can set for the total numbers of errors, one can run
in silence mode but, say, with error verifications every 100 errors, one can
operate in dummy mode and so on. That is, there are many different types of
control available during the running of the processor.


The provision of this variety of controls can save enormous amounts

of computer time.

Instead of running from beginning of the file to end

and tabulating every error, one can tell

the program to scan the data

until N errors have been found, stop there and report.

Thus, corrections

can be made as soon as enough data exists to spot them.
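
The "scan until N errors have been found" control can be illustrated with a
short sketch. This is only an illustration in Python, not the ADMINS processor
itself: the record layout, the CODEBOOK table of permitted codes, and the
function name audit_until are all assumptions made for the example.

```python
# Hypothetical codebook: for each question of interest, the codes the data
# ought to contain. Fields not listed here are simply ignored.
CODEBOOK = {"religion": {1, 2, 3, 4, 5}, "voted": {0, 1}}


def audit_until(records, codebook, max_errors):
    """Scan records in order; stop once max_errors discrepancies are found."""
    errors = []
    scanned = 0
    for rec in records:
        scanned += 1
        for question, allowed in codebook.items():
            value = rec.get(question)
            if value not in allowed:
                errors.append((rec["id"], question, value))
        if len(errors) >= max_errors:
            break  # report now instead of reading the rest of the file
    return scanned, errors


records = [
    {"id": 1, "religion": 1, "voted": 1},
    {"id": 2, "religion": 9, "voted": 0},  # 9 is not a permitted code
    {"id": 3, "religion": 2, "voted": 7},  # 7 is not a permitted code
    {"id": 4, "religion": 3, "voted": 1},
]
scanned, errors = audit_until(records, CODEBOOK, max_errors=2)
print(scanned, errors)
```

In this sketch the fourth record is never read, which is the saving in
computer time that the error limit is meant to provide.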

The result of the running of the processor is what we call an error report.
An error report is in effect an analysis of errors by data category. For
every category of question which is being processed, we have the errors which
occurred in this category by type of error. This report is very useful. If we
have, say, 150 errors and we found that 149 were all due to the same
discrepancy, then one has a very useful overview as to what one wishes to do:
whether one wishes to change the codebook for the 149 errors, which seems the
likely thing to do when it is probably an error of interpretation, or whether
one wishes to go in and alter the data. There are tools for either. There is
the edit of data--the alter instruction which we discussed previously--when
one wishes to change the data. There is EDL if one wishes to change the
adform with the error report in hand. There are usually many errors, and one
has to think whether one is going back to change the adform in one of many
different kinds of ways or whether one is going to alter the data somehow.
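
A tally of errors by category and by type of error, as in the 149-of-150
case, might look like the following sketch. The triples in errors, the
category labels "Q7" and "Q12", and the error-type strings are invented for
illustration; the actual ADMINS report format is not shown in this report.

```python
from collections import Counter

# Hypothetical audit output: (record id, category, error type) triples.
errors = [(i, "Q7", "code out of range") for i in range(149)]
errors.append((412, "Q12", "blank field"))


def error_report(errors):
    """Tally errors by (category, error type), most frequent first."""
    tally = Counter((category, kind) for _, category, kind in errors)
    return tally.most_common()


report = error_report(errors)
for (category, kind), count in report:
    print(f"{category:5s} {kind:20s} {count:4d}")
```

A report of this shape makes it plain at a glance that one discrepancy
accounts for nearly all of the errors, which points toward correcting the
adform rather than altering 149 records.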

When one has made the necessary changes to codebook and/or data, one runs the
data again under control of the now corrected adform. Another type of summary
file that one gets from this processing of the data under codebook control is
what is called the marginals, that is, the aggregate frequency of each entry
under each question or category that we have chosen to process. The marginals
are in effect the initial information necessary for one to implement one's
analysis plan. The marginals are obtained in a report form where the
frequency for each entry appears alongside the ordinary language subject
descriptions by which it is designated in the adform.
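
As a rough sketch of what a marginals report contains, the following Python
fragment counts the frequency of each entry under one question and prints it
beside its subject description. The SUBJECT table, the field name "religion",
and the function name marginals are hypothetical.

```python
from collections import Counter

# Hypothetical subject descriptions for the entries of one question.
SUBJECT = {"religion": {1: "Protestant", 2: "Catholic", 3: "Jewish"}}


def marginals(records, question):
    """Count how many records carry each entry code under one question."""
    return Counter(rec[question] for rec in records)


records = [{"religion": 1}, {"religion": 2}, {"religion": 1}, {"religion": 3}]
counts = marginals(records, "religion")
for code, label in SUBJECT["religion"].items():
    print(f"{label:12s} {counts[code]}")
```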

Such processing of data could just as well be done without the user sitting
at the console if the data were in good shape, if there were no errors of one
kind or another. However, our experience to date is--or to say it more
particularly--we have never yet processed a data file where this was the
case. Errors in data go from a variety of unique, scattered, individual type
errors that are not particularly errors of quantity per se (they are errors
of consistency) to the other end of the spectrum where the data are not at
all what the codebook says they are supposed to be, that is, the data are not
there or are not there in the code format that one expects them to be in. In
either case, the interactive capability helps one to learn very quickly what
way the data process is going, whether one wants to quit and go back to do
something else again in the adform, or get some other data, or to learn as
one goes, finding what exactly is wrong with any given particular data and
deciding what to do with it. In the ADMINS system, at the same moment that
one has finally established that one's data are clean and correct as far as
one cares (for minor substantive errors can be labelled as such and left),
one has one's marginals automatically.

In order to accomplish the processing the user has to specify for the data he
is interested in, and only the data he is interested in, what state it ought
to be in. He need not talk about the data he is not interested in, and if the
data he is interested in is not as it ought to be the program will tell him
so. He does not have to state in what way it ought not to be, only in the way
it ought to be. The user specifies only the data he is interested in and the
condition that it ought to be in. The computer, or more correctly the ADMINS
program designed for this purpose, is programmed to ignore the data that the
user is not interested in and to tell him what of the data he is interested
in is not in the condition that it ought to be. This means that the user does
not have to state all of the possible "ought not" conditions for data, but
only the "ought" conditions.

It should also be noted that there is an append feature that allows one to
process data at different times and combine the results. The append feature
would be used in processing under the following circumstances. Let us presume
that we had previously done a survey in two interviewing waves and that we
only wished to look at one wave of this survey, and then append the other
wave later. Or, let us assume that we wished to select from a particular
survey in some specified--random or otherwise--way some of the survey
respondents to process and later go and look at some of the other survey
respondents and append them also to the previously processed data. This
permits one to complete one portion of the analysis without waiting to
establish that there are no errors in other portions of the data first. In
addition it permits the saving of computer time by sampling as one is
processing, by random choice over ID numbers, for example.

The result of the process operation is that we have a file of marginal

frequencies and a report file in which there are zero errors if we carried

the correspondencing of codebook and data to the point of clearing out all

of the errors.

We also have a file of data, and, of course, from the result

of the Organizer sub-system we have a subject description file for the data

(e.g. the codebook) and a file of, in effect, basic control information about

the data state.


The subject description file, the control information file, the marginals (which we called the aggregates file), and the errors file are all

in a sense intermediate files generated by ADMINS for use by the ADMINS
system during the operations that we call organizing and processing. We
needed an error report file to help us to decide what to do with errors.

We needed an aggregates file in order to help us decide what to do about

analysis, we needed a subject description file because it

can be used in

analysis, in effect to label the data that we are analyzing, and we needed

a control information file to assist the system to know everything about

the state of the data.

The user in carrying out the activities that generated these files has

not had to think about computer technique problems.

He has focussed solely

on substantive questions about his data and what he wants to do with it.

We have designed into the ADMINS system the responsibility for the system to
attend to problems of form and packing and not tie the user, as he is tied in
conventional cross tabulation systems, to the very tedious, tiresome,
detailed, finicky specifications of form and packing.

We now come to a rather technical point, but one which lies at the heart of
the efficiency of the ADMINS system. The usual form in which data has been
stored and handled in virtually all social science data processing
systems--since the days of the punch card counter-sorter--is what we call an
item record file. For each respondent, or other unit of analysis, we keep a
file of each item about him in some set sequence, e.g., the IBM card is about
an individual and records his answer to each question in turn. It is
logically obviously possible, and for certain purposes it is much more
efficient, to invert the method of record keeping, to make the unit record a
particular answer (or other category) and under that to list, in sequence,
all the individuals who gave such an answer. The inverted file is what we
call a category file.

The advantages of working with a category file will become clearer as we talk
about the problems of social science data analysis in the discussion of the
Analyzer sub-system. Here let us simply take an extreme case. Suppose in a
cross section sample of the population one wished to find the Ph.D.s who were
non-voters. An analysis using item records would require the processing of
every record to ascertain if the respondent was a Ph.D. and if he was a
non-voter. An analysis using category records would go to the relatively
short list of Ph.D.s and to the relatively short list of non-voters and make
up a new very short list of the intersection of these two short lists.
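
The Ph.D. non-voter case can be sketched in a few lines of Python. The field
names, the four sample respondents, and the dictionary layout of the category
file are assumptions made for illustration; they are not the ADMINS storage
format.

```python
# Item record file: one record per respondent (hypothetical fields).
item_records = [
    {"id": 1, "degree": "PhD", "voted": False},
    {"id": 2, "degree": "BA", "voted": True},
    {"id": 3, "degree": "PhD", "voted": True},
    {"id": 4, "degree": "none", "voted": False},
]

# Item-record approach: every record must be examined.
by_scan = [
    rec["id"]
    for rec in item_records
    if rec["degree"] == "PhD" and not rec["voted"]
]

# Category file: each answer lists the respondents who gave it.
category_file = {
    ("degree", "PhD"): [1, 3],
    ("voted", False): [1, 4],
}

# Category approach: only the two short lists are read and intersected.
phds = set(category_file[("degree", "PhD")])
non_voters = set(category_file[("voted", False)])
by_category = sorted(phds & non_voters)

print(by_scan, by_category)
```

Both approaches give the same answer; the difference is that the second
touches only the two lists involved, however large the full file may be.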

In an interactive operation at a console where the analyst asks one specific
question at a time and then goes on to another specific question depending on
the answer to the first, it is almost certain that much efficiency will be
gained by keeping the data in category form. The analysis is proceeding in
terms of categories, no more than a half dozen or so at a time. The
researcher ought to be able to pick up a category without having the computer
scan every one.

For that reason, once the Organizer and Processor operations have put the
data into shape for analysis, but before the analysis begins, we invert the
data file--that is, the process file. A process file, which is an item record
file in the ADMINS system, along with the control information files from the
organizer output and the subject description file and the aggregation file
that was the basis for the marginals, is input to a program which is called
the structure program, which could equally be called the invert program. This
program outputs what we call category records. We in effect take the items
from the item record file, and invert them to categories; within each
category is the control information for this category, the subject
description for this category, the number of items that exist and the
responses for each item within this category; this with the aggregation of
responses for all the items under this category compose a category record. It
is these category records which are input to the analyser.
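
The inversion step can be sketched as follows. This is a simplified
illustration, not the structure program: it carries only the number of items,
the responses by entry, and the aggregate counts, and it omits the control
information and subject descriptions that a real category record would also
hold. The function name invert and the field names are hypothetical.

```python
from collections import defaultdict


def invert(item_records, questions):
    """Turn item records into category records keyed by question."""
    category_records = {}
    for question in questions:
        entries = defaultdict(list)  # entry code -> ids of respondents
        for rec in item_records:
            entries[rec[question]].append(rec["id"])
        category_records[question] = {
            "n_items": len(item_records),
            "entries": dict(entries),
            "marginals": {code: len(ids) for code, ids in entries.items()},
        }
    return category_records


item_records = [
    {"id": 1, "religion": 1, "voted": 1},
    {"id": 2, "religion": 2, "voted": 0},
    {"id": 3, "religion": 1, "voted": 0},
]
cats = invert(item_records, ["religion", "voted"])
print(cats["religion"])
```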

The Analyser Sub-System

Once a file has been inverted, the ADMINS system's Analyser is able to go to
the disk and read into core just the categories necessary for a specific
operation. It is secure in knowing that as soon as these categories have been
used for this specific purpose it can delete them in core because it always
has readily accessible copies of them on the disk. This swinging of
categories in and out of core allows a lot of the flexibility which
characterizes the analyser sub-system. Such a mode of operation, which makes
full use of the available "fast" memory that the computer has, is almost
impossible in a tape based system. This is due to the fact that a magnetic
tape is a serial access device, that is, in order to read a section of tape,
a tape head has to be mechanically moved along the tape till the appropriate
section is reached and then the reading can begin. With a disk, however, it
is as if one had many tape heads moving along different parts of the disk,
all under control of a program residing in core. (This is not how a disk
really works but it is a useful way to think about it.) An efficient system
is one that has to bring into core only that material which one wishes to
analyse, i.e. the wanted categories.

The basic thing to understand about what one is doing when one analyzes a
file is this: one is seeking out particular characteristics of one or more
categories, combining the characteristics thus sought, and then identifying
the individuals who have the intersection of characteristics. This process
can become exceedingly complex as one becomes more and more elegant in the
combinations of characteristics that one pursues. What one is doing when one
analyzes a file is that one is re-structuring the content, and re-organizing
by giving new names.

The other way that one proceeds in analysis is that as one goes along one
brings statistical tests to the measurements that one has isolated by the
first kind of analysis. One need not do this but one can. For example, one
can go to a personnel file looking for the characteristics of various
different types of people according to some purpose, looking at their
education, their language skills, their geographical area experience, and so
on. One can come up with the individuals who have certain distinctive
characteristics, and leave it at that. One brings no statistical test to this
measurement at all. However, if one is looking at a file from the point of
view of social science, one normally brings some variety of statistical tests
to these measurements according to the scientific purpose one has.

The activity of isolating characteristics we call indexing. What is indexing?
An index answers the question: Where are the people located who have a
particular characteristic we are pursuing? This location is, of course, the
location in the record, not the location out there where real live people
live. In other words, when we know where in the record these people are who
have a particular characteristic and how many of them there are who have this
particular characteristic, we have what we call an index to that particular
characteristic.

In any particular category record, there are two types of characteristics
that one uses. There is the characteristic that describes the form of the
record (e.g. the ID number of the record); that is, a characteristic that is
relevant only because there exists a record. However, most of the
characteristics described are characteristics of the content of the record;
they tell you what occupation, what age, what name, what social security
number, and so on characterizes the object (here a person) described by the
record.

In ADMINS we can re-structure the file according to what is in the content of
the record. This is something which is most difficult on conventional
analysis systems. Magnetic tape is not an addressable medium of embodiment,
but disk is. Re-structuring implies re-structuring of data pointers. If you
cannot address a tape flexibly, you cannot re-structure the data on it
without actually physically moving data around which, if only for economic
reasons, is impractical. However, a disk is addressable, so we can in effect
simulate the re-structuring of the file by really moving data pointers
around--pointers which addressably reference data existent on the disk. The
generic term to be used for this activity is that of indexing. An index
states that such and such characteristics of a record exist at a particular
place. When you have many indexes you are in effect looking at the records in
the file from the point of view of those which you have indexed, as opposed
to the point of view by which they are in fact physically in the file.

Let us give an example of an index before we go any further. If some
respondents were asked, "What is your religion?" and the responses that they
were allowed to choose from were names of different religions, for example,
Protestant, Catholic, Jewish, Mormon, and no religious affiliation, then an
index to the Protestant religion would be an index to the code representing
the Protestant religion in the particular question asked; it would be an
index listing the persons who took this option in response to this religion
question.
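
A simple index of this kind might be sketched as below. The entry codes
(1 for Protestant, and so on), the sample respondent ids, and the function
name build_index are assumptions for illustration only. The index is held as
a list of pointers (here, respondent ids) together with a count and a
percentage of the population.

```python
def build_index(category_record, code, population):
    """Return pointers (respondent ids) for one entry, with count and percent."""
    pointers = sorted(category_record.get(code, []))
    count = len(pointers)
    percent = 100.0 * count / population
    return pointers, count, percent


# Hypothetical category record for the religion question: entry code -> ids.
# Assumed codes: 1 Protestant, 2 Catholic, 3 Jewish, 4 Mormon, 5 no affiliation.
religion = {1: [3, 8, 10, 12], 2: [1, 5], 3: [2], 4: [], 5: [4, 6, 7, 9, 11]}

protestants, n, pct = build_index(religion, 1, population=12)
print(protestants, n, round(pct, 1))
```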

The ADMINS naming mechanisms are used to re-organize such indexes according
to the user's purposes. If one has indexed Protestants and has also indexed
persons with university level of education, then the combination of the
characteristics "Protestant and university educated" would be the result of
the intersection of these two indexes. This new index could be named
"Establishment" and could be called upon whenever it is needed by referring
to that name. That is, the name in this case may be the name for a concept in
a social theory under which the data are to be re-organized.

The term intersection is borrowed from the language of set theory in
mathematics. For example, we could have a set, say set A, containing the
names of men wearing green shoes. A second set, B, could be the names of men
wearing white shirts. The intersection of these two sets, A and B, would be
the names of men wearing green shoes and white shirts. Notice that an index
of the contents of the set is the names of the individuals which belong to
it. That is, given an indexed set, we have a way of tracing back to the
individuals who make it up. In an ADMINS index, the analogy to these names of
individual elements are pointers to the individual items. The basic indexes
are defined in terms of characteristics recorded in the categories and
entries describing each item. The instruction "intersection" can be used to
combine indexes constructed in this way to obtain an index which contains
pointers to people who were in all of the original indexes. The intersection
instruction can, of course, be used on indexes which were constructed by
previous intersection instructions. In our earlier example we had two simple
indexes. That is, we had two indexes which were built up from the category
and entries of the individual responses. The first was an index to
Protestants and the second was an index to university educated people. The
intersection of these two indexes gave us an index which pointed to people
who were both Protestants and who had university training. This index once
constructed was named and could be referenced in further indexing
instructions.

Another instruction in the analyser, whose name we have again borrowed from
the language of set theory, is union. Whereas intersect gave us an ANDing of
the original indexes, the union instruction gives an ORing of the original
indexes. To return to our example, if we unioned an index of Protestants with
the index of university-trained people, we would obtain an index that had the
following types of people in it: Protestants who did not have university
education, Protestants who did have university education, and university
educated people who are not Protestant. That is, each member of the new index
must have been in at least one of the original indexes, and perhaps may have
been in both of them. The complement instruction is used to construct an
index whose members are not members of the original index. The relative
complement is quite similar to complement except that it only deals with a
subset of our total population.
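
The four set-theoretic instructions map directly onto ordinary set
operations, as the following sketch suggests. The directory of named indexes,
the ten-person population, and the function names are hypothetical; in
particular, the reading of relative complement as "members of a given
subset that are not in the index" is an interpretation of the description
above, not a documented ADMINS definition.

```python
population = set(range(1, 11))  # ids of all respondents (hypothetical)

# Directory of named indexes: name -> set of respondent ids (pointers).
directory = {
    "protestant": {1, 2, 3, 4},
    "university": {3, 4, 5, 6},
    "voters": {1, 3, 5, 7, 9},
}


def intersect(a, b):
    """Members of both named indexes (AND)."""
    return directory[a] & directory[b]


def union(a, b):
    """Members of at least one of the named indexes (OR)."""
    return directory[a] | directory[b]


def complement(a):
    """Members of the whole population not in the named index."""
    return population - directory[a]


def relative_complement(a, within):
    """Members of the subset `within` that are not in index `a`."""
    return directory[within] - directory[a]


# Each result is stored under a name so later instructions can refer to it.
directory["establishment"] = intersect("protestant", "university")
directory["prot_or_univ"] = union("protestant", "university")
directory["non_protestant"] = complement("protestant")
directory["non_voting_est"] = relative_complement("voters", "establishment")

for name in ("establishment", "prot_or_univ", "non_protestant", "non_voting_est"):
    print(name, sorted(directory[name]))
```

Because every result goes back into the directory under a name, a
construction can be used as input to further instructions in the same way as
a simple index.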


The effect we have then is the following. One can build simple indexes by
referencing categories and their entries. One can then build constructions
based on these indexes using the indexing instructions: intersect, union,
complement and relative complement. The results of these constructions may be
input to further instructions. This process can continue indefinitely until
the purpose of the user is satisfied.

The index to a particular characteristic of a category is not the only type
of simple index that may be constructed. One may construct an index that is
the category itself, or one may construct an index to a numerical value of a
particular category. Nevertheless, one knows what has been indexed and one
knows what further operation one wishes to implement on the construction.
After the user gives a particular indexing instruction he sees the number of
people in the index (raw figure and percentage of population) and the name he
has assigned to the index. This information is immediately printed on the
console in an interactive mode for him to make decisions of a subsequent
indexing nature. When the index is the result of an intersection the computer
also returns the statistical significance, for example, the probabilistic
measure of non-randomness for this particular intersection.

Suppose a user does not have his adform by his side and cannot remember what all the categories and entries represent.

The "subject description" instruction will allow the user to get the subject
description and marginals for a particular category, either in toto, or
selectively for the question, or for some of the entries for this question as
required.

The analyser also has a directory capability, i.e. a capability of keeping a
record of the names of the ongoing constructions as the user pursues a
particular analysis. We will talk about this in detail later. By
constructions we mean, of course, that which results from using the set
theoretic instructions. (We call the instruction "cross", which is typed at
the console to invoke the analyzer, a command. We call all of the
instructions which are used in the analysis, and we have mentioned so
far--index, intersect, union, complement, relative complement, and subject
description--instructions.) Constructions can be very complex and each
construction is known by the name which is assigned to it by the user. In the
classified directory, previously mentioned, one has in effect a contents list
of the constructions and their names. There are other instructions for
gaining access to the information in the classified directory, which we will
discuss later.

The construction of complex indexes may, if it is convenient to the user, be
perceived as passing one's way down a tree. However, when one has arrived at
a particular cluster of characteristics (that is, a complex index) one may
wish to take the complex indexes and make them into the columns of a table.
One may choose to put in the rows of this table, for example, two categories,
that is, two questions with their entries. By invoking the table instruction
one gets the result of the intersection of the indexes in the columns with
the category entries in the rows. This information may be supported by
statistical tests of these intersections such as simple percentages of one
kind or another or a probabilistic measure of non-randomness or any other
statistical test the user may think appropriate.

One mday also put indexes in the rows of the table.

The way

the user uses the tabling facility is to put up in tthe column as many

indexes as he can keep in analysis attention &pace against the various

categories and indexes in the rows., bringing tests with which he feels

comfortable to these mesurements.

He keeps taking the results of the intersections (those in the cells of the
table) which are of interest to him, making new complex indexes out of them,
putting them back up as columns of the new table, introducing other indexes
or categories to the rows, and working through what is in effect a
data-sieving cycle in order to get out of the data the significances that he
is seeking.
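The tabling cycle described above can be sketched in a few lines of Python. This is only an illustration, assuming that an index or a category entry can be represented as a set of individual identifiers. The function names `build_table` and `column_percentages`, the labels, and the sample data are hypothetical and are not the analyzer's own instructions.

```python
def build_table(columns, rows):
    """Intersect every row set with every column index.

    columns and rows map a label to a set of individual identifiers
    (an assumed representation of an index or a category entry).
    Returns {row_label: {column_label: set_of_individuals}}.
    """
    return {
        r_label: {c_label: r_set & c_set for c_label, c_set in columns.items()}
        for r_label, r_set in rows.items()
    }


def column_percentages(table, columns):
    """Express each cell as a percentage of the size of its column index."""
    result = {}
    for r_label, cells in table.items():
        result[r_label] = {}
        for c_label, cell in cells.items():
            total = len(columns[c_label])
            result[r_label][c_label] = 100.0 * len(cell) / total if total else 0.0
    return result


# Hypothetical data: eight individuals, two column indexes, one question
# with two entries placed in the rows.
columns = {"urban_young": {1, 2, 3, 4}, "rural_old": {5, 6, 7, 8}}
rows = {"voted:yes": {1, 2, 5}, "voted:no": {3, 4, 6, 7, 8}}

table = build_table(columns, rows)
percent = column_percentages(table, columns)

# A cell of interest becomes a new complex index for the next table.
new_index = table["voted:no"]["rural_old"]
```

Feeding `new_index` back in as a column of a further table corresponds to one turn of the sieving cycle.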

Just what does the user have to do to get from the computer, say, a table
with five columns with indexes in them and two or three questions plus some
indexes in the rows?

The user builds each of the five indexes according to the discussion that
has gone previously. Similarly for any indexes in the rows. He has, of
course, thereby named his indexes. He has nothing to do to bring in the
categories except to type their names in the relevant row instruction.

He then names a particular statistical test that he wishes to invoke with
the "state" instruction, invokes the "table" instruction, and consequent
upon this the table is constructed. He may have the table come out at the
console for interactive decision making. It may at the same time be filed on
the disk file of the given name that he has allocated for this table.

The naming restrictions for files are the restrictions of the MAC

system.

We have two names of six characters each for a file.

This, however,

should not be confused with the naming conventions for indexes in the analyzer.

In effect, the naming conventions for indexes can be considered from two
points of view. We call a name for an index that is six characters or less a
symbol, and a name that is greater than six characters a name. Thus, an
index can have associated with it a symbol and/or a name. Furthermore, the
way one chooses to use the symbols and names may be considered from the
point of view of faceted use. That is to say, symbols may be used rather in
the way the Dewey decimal classification uses symbols.

That is, one can break down a symbol into sections. The different sections
of the symbol string are facets of the concept one is naming.

Likewise, one may do the same using

names, with periods delineating variable length sections.

One could have three

word forms separated by two periods that constitute a name for an index.

Each

one of these word forms is a facet within the particular use of the name for

this index.

This is rather similar to the Ranganathan Colon classification use of names.
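The naming conventions can be illustrated with a short Python sketch. It assumes that periods count toward the length of a label and that the sections of a symbol have fixed widths chosen by the user; both points are assumptions, and the function names and sample labels are hypothetical.

```python
def classify_label(label):
    """Return "symbol" for six characters or fewer, otherwise "name"."""
    return "symbol" if len(label) <= 6 else "name"


def name_facets(name):
    """Split a name into its period-delimited, variable-length facets."""
    return name.split(".")


def symbol_facets(symbol, widths):
    """Split a symbol into consecutive sections of the given widths.

    The widths are an assumed user convention, in the spirit of a
    decimal classification where each position carries a meaning.
    """
    facets, start = [], 0
    for width in widths:
        facets.append(symbol[start:start + width])
        start += width
    return facets


kind_short = classify_label("UYV012")
kind_long = classify_label("urban.young.voter")
long_facets = name_facets("urban.young.voter")
short_facets = symbol_facets("UYV012", (1, 1, 1, 3))
```

Here the three word forms separated by two periods give three facets, and the six-character symbol is read as three one-character facets followed by a three-character section.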

The analyzer has thirty or forty error messages describing incorrect
instruction usage, which may occur under the pressure of analysis. We
mentioned the existence of coherence errors when we were discussing
adforming. Likewise, it is possible to make coherence errors during
analysis. For example, one instructs the analyzer to build an index to a
non-existent entry in a category, say to the ninth entry in a category which
only has five.

Or one

instructs the analyzer to build an index to a category but this category does

not exist.

Or by the type of syntactic expressions one uses in instructing the analyzer
to build an index one is in effect saying that one believes the category one
is referencing is nominal whereas the analyzer knows it contains numerical
values. Or one references the name of an index but this index does not
exist.

Or one builds a complex index, but this complex index has already

been built, in which case the analyzer just tells you it has been built and

gives you the name you previously assigned it.

If the analyzer detects an error, an informative but polite comment is
firmly printed and the user is allowed to continue.
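Three of the coherence checks listed above can be sketched as follows. This is a minimal illustration under assumed data structures: the layout of `categories`, the class `CoherenceError`, the function names, and the wording of the messages are all hypothetical and do not reproduce the analyzer's actual error messages.

```python
class CoherenceError(Exception):
    """Raised when an instruction is inconsistent with the file description."""


def check_index_request(categories, name, entry=None, treat_as="nominal"):
    """Validate a request to build an index on a category.

    categories maps a category name to a dict with a "kind" ("nominal" or
    "numerical") and, for nominal categories, a count of "entries".
    """
    if name not in categories:
        raise CoherenceError(f"category {name!r} does not exist")
    category = categories[name]
    if category["kind"] != treat_as:
        raise CoherenceError(
            f"category {name!r} is {category['kind']}, not {treat_as}"
        )
    entries = category.get("entries", 0)
    if entry is not None and not 1 <= entry <= entries:
        raise CoherenceError(
            f"category {name!r} has only {entries} entries; "
            f"entry {entry} does not exist"
        )
    return True


def run(categories, *args, **kwargs):
    """Report an error as a message and let the session continue."""
    try:
        return check_index_request(categories, *args, **kwargs)
    except CoherenceError as err:
        return f"Sorry: {err}."


categories = {
    "party": {"kind": "nominal", "entries": 5},
    "income": {"kind": "numerical"},
}
messages = [
    run(categories, "party", entry=9),   # ninth entry of a five-entry category
    run(categories, "region"),           # category that does not exist
    run(categories, "income"),           # numerical category treated as nominal
    run(categories, "party", entry=3),   # coherent request
]
```

Catching the error inside `run` mirrors the behavior described in the text: the user is told what is wrong and the session is not interrupted.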

Although we have just begun to talk about the analyzer,

already we have

referred to quite a few different instructions with different options in their

syntax.

How can a forgetful user keep track in his mind of all the possible

options the syntax offers, especially during actual analysis?

There is an instruction that the user can give. The console will then list
the various instructions available in the analyzer.

There is also a "syntax" instruction which, when invoked along with the name
of an operational instruction, will give the appropriate syntax for the use
of this particular instruction.

We have mentioned on several previous occasions the classified

directory.

This is a very important feature of the analyzer.

We have to

remind ourselves that when we are building indexes to the original file of

individuals we are in effect simulating the construction of a new file of

the individuals who exhibit the relevant characteristics which define these

complex indexes.

As we build many of these indexes we have to invent some means of keeping a
usable record of them. The classified directory is the means by which we do
this. In a classified directory there is a record of all of one's
constructions and the names for each of these constructions.

The classified directory also contains information as to how to rebuild
these constructions on request; under control of a complex memory purging
algorithm, the analyzer throws away all of the pointers to the locations of
the individuals who exhibit particular characteristics, but using the
classified directory it knows how to rebuild these constructions.

Furthermore, as the only way a user has of accessing the indexes he has constructed

is through the names he has given to these indexes, the classified directory

must keep these names in an accessible fashion, and there can be many, many

names.

Therefore, we need several instructions for accessing the names in the
classified directory in selective ways, and we also need procedures which
consult the classified directory in order to rebuild, as required, the
constructions that were originally named.
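The idea of keeping names and rebuild information while discarding the pointers themselves can be sketched in Python. This is an illustration only: the class `ClassifiedDirectory`, its methods, the operation names, and the wholesale `purge` are assumptions, and the purge shown here is far simpler than the complex memory purging algorithm the text refers to.

```python
class ClassifiedDirectory:
    """Keeps names and rebuild recipes; the pointer sets are only a cache."""

    def __init__(self, base_indexes):
        self.base = base_indexes  # name -> set of individuals (simple indexes)
        self.recipes = {}         # name -> (operation, operand names)
        self.cache = {}           # name -> built set, may be purged at any time

    def define(self, name, op, *operands):
        """Record how a construction is built, then build it once."""
        self.recipes[name] = (op, operands)
        return self.get(name)

    def get(self, name):
        """Return the set for a name, rebuilding it from its recipe if needed."""
        if name in self.base:
            return self.base[name]
        if name not in self.cache:
            op, operands = self.recipes[name]
            sets = [self.get(operand) for operand in operands]
            if op == "intersect":
                result = set.intersection(*sets)
            elif op == "union":
                result = set.union(*sets)
            elif op == "relative_complement":
                result = sets[0] - sets[1]
            else:
                raise ValueError(f"unknown operation {op!r}")
            self.cache[name] = result
        return self.cache[name]

    def purge(self):
        """Discard every built pointer set; names and recipes survive."""
        self.cache.clear()

    def names(self, prefix=""):
        """List construction names selectively, here by an assumed prefix filter."""
        return sorted(n for n in self.recipes if n.startswith(prefix))


directory = ClassifiedDirectory(
    {"young": {1, 2, 3}, "urban": {2, 3, 4}, "voted": {3, 4, 5}}
)
directory.define("young.urban", "intersect", "young", "urban")
directory.define("young.urban.nonvoter", "relative_complement", "young.urban", "voted")

directory.purge()                                   # pointers are thrown away
rebuilt = directory.get("young.urban.nonvoter")     # rebuilt from the recipes
listed = directory.names("young")
```

Because each recipe refers to its operands by name, rebuilding one construction rebuilds whatever purged constructions it depends on.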

If the classified directory were only concerned with the analysis of one file

this might appear to be a relatively simple housekeeping matter.

However, as the analyzer has been designed to work many files at the same
time or many files discontinuously, that is to say, work one file, stop
working this file, go work another file, come back and work the file you
were working previously and so on, it is evident that the classified
directory assumes a very important role as it is responsible for recording
all these activities.

Furthermore, when a user has completed a certain amount of

analysis and ceases to work, one has to have economic and intelligent ways

of saving the results of his operations for further use when he comes back

to the analyzer and wishes to start off analysis exactly where he left off