
Multiple Regression Model

• The model:

$$y = \beta_0 + \beta_1 x_1 + \cdots + \beta_k x_k + \epsilon$$

where

$y$: the response or dependent variable,

$x_1, x_2, \ldots, x_k$: the explanatory variables (or independent variables or predictors),

$\beta_0, \beta_1, \ldots, \beta_k$: unknown constants (“the coefficients”),

$\epsilon$: an unobservable random variable, the error in observing $y$.

• Under this model, E(y) = µ(x) = β0 + β1x1 + · · · + βk xk

• Multiple regression data: n observations (cases), each consisting of k + 1 values

$$(y_i, x_{1i}, x_{2i}, \ldots, x_{ki}), \quad i = 1, 2, \ldots, n$$

• For making statistical inference, it is usually assumed that $\epsilon_1, \epsilon_2, \ldots, \epsilon_n$ is a random sample from the $N(0, \sigma^2)$ distribution.

• Estimation of Parameters:

Minimize the sum of squares of residuals:

$$Q = \sum_{i=1}^{n} \{y_i - (\beta_0 + \beta_1 x_{1i} + \cdots + \beta_k x_{ki})\}^2$$

with respect to $\beta_0, \beta_1, \ldots, \beta_k$.

• Set the partial derivatives of Q with respect to each of the β coefficients equal to zero. The resulting equations are linear in the β's and are called the normal equations.

• Least squares estimates: the solution of these equations for the β's, denoted by $\hat\beta_0, \hat\beta_1, \ldots, \hat\beta_k$.

• The prediction equation:

ŷ = β̂0 + β̂1 x1 + · · · + β̂k xk

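• Matrix Notation:

$$y = X\beta + \epsilon$$

where

$$y = \begin{pmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{pmatrix}, \quad
X = \begin{pmatrix} 1 & x_{11} & \cdots & x_{k1} \\ 1 & x_{12} & \cdots & x_{k2} \\ \vdots & \vdots & & \vdots \\ 1 & x_{1n} & \cdots & x_{kn} \end{pmatrix}, \quad
\beta = \begin{pmatrix} \beta_0 \\ \beta_1 \\ \vdots \\ \beta_k \end{pmatrix}, \quad
\epsilon = \begin{pmatrix} \epsilon_1 \\ \epsilon_2 \\ \vdots \\ \epsilon_n \end{pmatrix}$$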

• Minimize the sum of squares: $Q = (y - X\beta)'(y - X\beta)$

• The normal equations: $X'X\beta = X'y$

• The solution: $\hat\beta = (X'X)^{-1}X'y$

where $(X'X)^{-1}$ is the inverse of the $X'X$ matrix (assuming it is nonsingular).

• $X'X$, a $(k + 1) \times (k + 1)$ matrix, and its inverse are important in multiple regression computations.
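As a rough illustration of the matrix solution, the sketch below builds a design matrix and solves the normal equations with NumPy. The data are synthetic and the variable names (`X`, `beta_hat`, and so on) are assumptions made for this example only; they are not part of the notes.

```python
import numpy as np

# Synthetic data (assumed for illustration): n = 20 cases, k = 2 predictors.
rng = np.random.default_rng(0)
n, k = 20, 2
x = rng.normal(size=(n, k))
y = 1.0 + 2.0 * x[:, 0] - 0.5 * x[:, 1] + rng.normal(scale=0.3, size=n)

# Design matrix X: a column of ones followed by the k predictor columns.
X = np.column_stack([np.ones(n), x])

# Normal equations X'X beta = X'y, solved without forming the inverse.
XtX = X.T @ X
Xty = X.T @ y
beta_hat = np.linalg.solve(XtX, Xty)

# Cross-check against NumPy's general least squares routine.
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)
print(beta_hat)
```

Solving the linear system directly is usually preferred numerically over computing the inverse and multiplying, although the inverse itself is still needed later for standard errors.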

• Testing the hypothesis:

$H_0: \beta_1 = \beta_2 = \cdots = \beta_k = 0$ vs. $H_a$: at least one $\beta_m \ne 0$.

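• An analysis of variance for regression:

| Source | df | SS | MS | F |
|---|---|---|---|---|
| Regression | $k$ | $\hat\beta' X'y - n\bar{y}^2$ | MSReg = SSReg/$k$ | MSReg/MSE |
| Error | $n-k-1$ | $y'y - \hat\beta' X'y$ | MSE = SSE/$(n-k-1)$ | |
| Total | $n-1$ | $y'y - n\bar{y}^2$ | | |

Reject $H_0$ at the α-level if the F-statistic exceeds $F_{\alpha,\,k,\,n-k-1}$.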

• Estimate of $\sigma^2$: Let MSE = SSE/$(n - k - 1)$ = $s^2$. Then the estimate of $\sigma^2$, the variance of the random errors, is $\hat\sigma^2 = s^2$.

• The coefficient of determination $R^2$ (the square of the multiple correlation coefficient):

$R^2$ = Regression SS / Total (Corrected) SS = SSReg/SSTot
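The sums of squares, the F-statistic, $s^2$ and $R^2$ can be computed directly from the matrix formulas. The following is a minimal sketch on synthetic data (all names and numbers are assumptions for illustration); the critical value $F_{\alpha,k,n-k-1}$ is not computed here and would still come from a table or a statistics library.

```python
import numpy as np

# Synthetic data (assumed for illustration): n = 25 cases, k = 3 predictors.
rng = np.random.default_rng(1)
n, k = 25, 3
x = rng.normal(size=(n, k))
y = 2.0 + x @ np.array([1.5, 0.0, -1.0]) + rng.normal(scale=0.5, size=n)
X = np.column_stack([np.ones(n), x])
beta_hat = np.linalg.solve(X.T @ X, X.T @ y)

ybar = y.mean()
ss_reg = beta_hat @ X.T @ y - n * ybar**2   # beta_hat' X'y - n * ybar^2
ss_err = y @ y - beta_hat @ X.T @ y         # y'y - beta_hat' X'y
ss_tot = y @ y - n * ybar**2                # y'y - n * ybar^2

ms_reg = ss_reg / k
mse = ss_err / (n - k - 1)                  # s^2, the estimate of sigma^2
f_stat = ms_reg / mse
r2 = ss_reg / ss_tot
print(f_stat, r2)
```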

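• Elements of $(X'X)^{-1}$:

$$(X'X)^{-1} = \begin{pmatrix} c_{00} & c_{01} & c_{02} & \cdots & c_{0k} \\ c_{10} & c_{11} & c_{12} & \cdots & c_{1k} \\ \vdots & \vdots & \vdots & & \vdots \\ c_{k0} & c_{k1} & c_{k2} & \cdots & c_{kk} \end{pmatrix}$$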

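• Standard Error of $\hat\beta_m$: $s_{\hat\beta_m} = c_{mm}^{1/2}\, s$ for $m = 1, \ldots, k$.

• A $(1 - \alpha)100\%$ confidence interval for $\beta_m$:

$$\hat\beta_m \pm t_{\alpha/2,\,(n-k-1)} \times s_{\hat\beta_m}$$

• A t-statistic for testing $H_0: \beta_m = 0$ versus $H_a: \beta_m \ne 0$:

$$t = \hat\beta_m / s_{\hat\beta_m}$$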
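A short sketch of how the standard errors, t-statistics and a confidence interval follow from the diagonal of $(X'X)^{-1}$. The data are synthetic, and the helper `conf_int` is a hypothetical name introduced for this example; the t critical value is passed in by the caller (here 2.052, the approximate tabled value of $t_{.025,27}$).

```python
import numpy as np

# Synthetic data (assumed for illustration): n = 30 cases, k = 2 predictors.
rng = np.random.default_rng(2)
n, k = 30, 2
x = rng.normal(size=(n, k))
y = 0.5 + x @ np.array([1.0, -2.0]) + rng.normal(scale=0.4, size=n)
X = np.column_stack([np.ones(n), x])

C = np.linalg.inv(X.T @ X)            # diagonal elements are c_mm
beta_hat = C @ X.T @ y
resid = y - X @ beta_hat
s = np.sqrt(resid @ resid / (n - k - 1))

se_beta = s * np.sqrt(np.diag(C))     # s * sqrt(c_mm)
t_stats = beta_hat / se_beta          # t-statistics for H0: beta_m = 0

def conf_int(m, t_crit):
    """Interval beta_hat[m] +/- t_crit * se_beta[m]; t_crit is supplied by the caller."""
    half = t_crit * se_beta[m]
    return beta_hat[m] - half, beta_hat[m] + half

lo, hi = conf_int(1, 2.052)           # approx. 95% interval for beta_1 with 27 df
print(se_beta, t_stats, (lo, hi))
```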

• Predicted or Fitted Values: ŷ = X β̂

where

ŷi = β̂0 + β̂1 x1i + · · · + β̂k xki,

i = 1, . . . , n

• Residuals: $e = y - \hat{y}$

where $e = (e_1, e_2, \ldots, e_n)'$ and $e_i = y_i - \hat{y}_i$, $i = 1, \ldots, n$.

• Hat Matrix, H:

$$\hat{y} = X\hat\beta = X(X'X)^{-1}X'y = Hy$$

where $H = X(X'X)^{-1}X'$ is an $n \times n$ symmetric matrix. The ith diagonal element of H satisfies

$$\frac{1}{n} \le h_{ii} \le \frac{1}{d}$$

• Standard Error of $\hat{y}_i$:

Since $\hat{y} = Hy$, it can be shown that $\mathrm{Var}(\hat{y}_i) = \sigma^2 h_{ii}$.

Thus the standard error of $\hat{y}_i$ is $s\sqrt{h_{ii}}$, for $i = 1, 2, \ldots, n$.

• Standard Error of $e_i$:

Since $e = y - \hat{y} = y - Hy = (I - H)y$, it can be shown that $\mathrm{Var}(e_i) = \sigma^2 (1 - h_{ii})$.

Thus the standard error of $e_i$ is $s\sqrt{1 - h_{ii}}$, for $i = 1, 2, \ldots, n$.

• A $(1 - \alpha)100\%$ Confidence Interval for the Mean $E(y_i)$:

$$\hat{y}_i \pm t_{\alpha/2,\,(n-k-1)} \times s\sqrt{h_{ii}}$$

• A $(1 - \alpha)100\%$ Prediction Interval for $y_i$:

$$\hat{y}_i \pm t_{\alpha/2,\,(n-k-1)} \times s\sqrt{1 + h_{ii}}$$
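The hat matrix and the standard errors built from its diagonal can be checked numerically. This is a sketch on synthetic data (names are assumptions for illustration); it also verifies two standard facts about H, namely that it is idempotent and that its trace equals $k + 1$.

```python
import numpy as np

# Synthetic data (assumed for illustration): n = 15 cases, k = 2 predictors.
rng = np.random.default_rng(3)
n, k = 15, 2
x = rng.normal(size=(n, k))
y = 1.0 + x @ np.array([0.8, -0.3]) + rng.normal(scale=0.5, size=n)
X = np.column_stack([np.ones(n), x])

H = X @ np.linalg.inv(X.T @ X) @ X.T   # hat matrix
h = np.diag(H)                         # leverages h_ii
y_hat = H @ y                          # fitted values
e = y - y_hat                          # residuals, (I - H) y
s = np.sqrt(e @ e / (n - k - 1))

se_fit = s * np.sqrt(h)                # standard error of y_hat_i
se_resid = s * np.sqrt(1 - h)          # standard error of e_i
se_pred = s * np.sqrt(1 + h)           # used in the prediction interval
print(h.sum(), se_fit[:3], se_pred[:3])
```

Multiplying `se_fit` or `se_pred` by the tabled value $t_{\alpha/2,(n-k-1)}$ gives the half-widths of the two intervals above.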

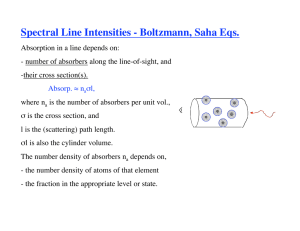

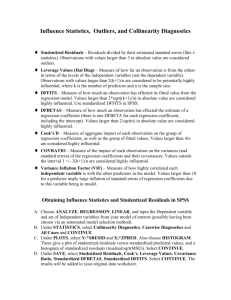

• Studentized Residuals: a standardized version of the ordinary residuals

• Internally studentized residuals, $r_i$: divide the residuals by their standard errors,

$$r_i = \frac{e_i}{s\sqrt{1 - h_{ii}}}$$

for $i = 1, \ldots, n$.

• The statistic $\max_i |r_i|$: used to test for the presence of a single y-outlier using Tables B.8 and B.9

$H_0$: No Outliers vs. $H_a$: A Single Outlier Present

Reject $H_0$ if $\max_i |r_i|$ exceeds the appropriate percentage point.

• Externally studentized residuals, $t_i$: replace $s$ by $s_{(i)}$, where $s_{(i)}^2$ is the MSE from a regression model fitted with the ith case deleted,

$$t_i = \frac{e_i}{s_{(i)}\sqrt{1 - h_{ii}}}$$

for $i = 1, \ldots, n$.

• Advantage: ti’s have t-distributions with n − k − 2 degrees

of freedom.
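Both kinds of studentized residuals can be computed as sketched below, on synthetic data with names assumed for illustration. Here $s_{(i)}$ is obtained the slow way, by refitting with case i deleted, and the result is compared with the well-known shortcut $t_i = r_i\sqrt{(n-k-2)/(n-k-1-r_i^2)}$, which is not stated in these notes.

```python
import numpy as np

# Synthetic data (assumed for illustration): n = 20 cases, k = 2 predictors.
rng = np.random.default_rng(4)
n, k = 20, 2
x = rng.normal(size=(n, k))
y = 1.0 + x @ np.array([1.0, 1.0]) + rng.normal(scale=0.5, size=n)
X = np.column_stack([np.ones(n), x])

H = X @ np.linalg.inv(X.T @ X) @ X.T
h = np.diag(H)
e = y - H @ y
s = np.sqrt(e @ e / (n - k - 1))
r = e / (s * np.sqrt(1 - h))                 # internally studentized

# s_(i): root MSE from the fit with case i deleted (n - 1 cases, k + 1 parameters).
s_del = np.empty(n)
for i in range(n):
    keep = np.arange(n) != i
    b_i = np.linalg.lstsq(X[keep], y[keep], rcond=None)[0]
    res_i = y[keep] - X[keep] @ b_i
    s_del[i] = np.sqrt(res_i @ res_i / (n - k - 2))
t = e / (s_del * np.sqrt(1 - h))             # externally studentized

# Shortcut that avoids the n refits.
t_shortcut = r * np.sqrt((n - k - 2) / (n - k - 1 - r**2))
print(np.max(np.abs(r)), np.max(np.abs(t)))
```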

• The Bonferroni method adjusts for multiple testing: because all n residuals are tested, each two-tailed test must use the α/(2n) percentage point to test at a specified overall α-level.

• Test each externally studentized residual for a y-outlier using Tables B.10 or B.11. These tables are already adjusted for the Bonferroni method.

• Note that this will be a two-tailed test using |ti|; hence, .025

must be used instead of .05 to look up the table.

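• Leverage: Recall that $\hat{y} = Hy$, where H is the $n \times n$ hat matrix. Also

$$\mathrm{Var}(\hat{y}_i) = h_{ii}\,\sigma^2, \qquad \mathrm{Var}(e_i) = (1 - h_{ii})\,\sigma^2$$

• A larger $h_{ii}$ gives a larger $\mathrm{Var}(\hat{y}_i)$ and a smaller $\mathrm{Var}(e_i)$.

• Look at $h_{ii}$ to check whether $y_i$ will be predicted well or not.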

• Rewriting $\hat{y} = Hy$ as

$$\hat{y}_i = h_{ii}\, y_i + \sum_{j \ne i} h_{ij}\, y_j,$$

$h_{ii}$ is called the leverage of the ith observation or case.

• It measures the effect that $y_i$ will have on determining $\hat{y}_i$.

• Note that $\hat{y}_i$ and $e_i$ depend on both $h_{ii}$ and $y_i$.

• A rule of thumb: leverages larger than 2(k + 1)/n are considered large
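The leverage rule of thumb is easy to apply once the diagonal of H is available. In the sketch below the data are synthetic and case 0 is deliberately planted far from the other x-values (an assumption made so that something is flagged); leverages depend only on X, so no response is needed.

```python
import numpy as np

# Synthetic predictors (assumed for illustration): n = 20 cases, k = 2 predictors.
rng = np.random.default_rng(5)
n, k = 20, 2
x = rng.normal(size=(n, k))
x[0] = [8.0, -8.0]                      # planted x-outlier, for illustration only
X = np.column_stack([np.ones(n), x])

# Leverages are the diagonal elements of the hat matrix.
h = np.diag(X @ np.linalg.inv(X.T @ X) @ X.T)

cutoff = 2 * (k + 1) / n                # rule of thumb: 2(k + 1)/n
flagged = np.flatnonzero(h > cutoff)    # indices of high-leverage cases
print(cutoff, flagged)
```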

• Influential Cases: those that, when deleted, cause major changes in the fitted model

• Cook’s D statistic: A diagnostic tool that measures the influence of the ith case

$$D_i = \frac{1}{k'} \left( \frac{e_i}{s\sqrt{1 - h_{ii}}} \right)^2 \frac{h_{ii}}{1 - h_{ii}} = \frac{1}{k'}\, r_i^2\, \frac{h_{ii}}{1 - h_{ii}}$$

where $k' = k + 1$.

• Factoring $D_i$:

$D_i$ = constant × (studentized residual)² × a monotone increasing function of $h_{ii}$

• A large $D_i$ may be caused by a large $r_i$, or a large $h_{ii}$, or both.

• Cases with large leverages may not be influential because $r_i$ is small, which indicates that these cases actually fit the model well.

• Cases with relatively large values for both $h_{ii}$ and $r_i$ should be of more concern.

• A rule of thumb: cases with Cook’s D larger than 4/n are

flagged for further investigation.
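Cook's D can be computed from $r_i$ and $h_{ii}$ alone. The sketch below, on synthetic data with assumed names, also recomputes it from its deletion form, $(\hat\beta - \hat\beta_{(i)})' X'X (\hat\beta - \hat\beta_{(i)}) / (k' s^2)$, a standard equivalent expression that is not written out in these notes, to show that the two agree.

```python
import numpy as np

# Synthetic data (assumed for illustration): n = 20 cases, k = 2 predictors.
rng = np.random.default_rng(6)
n, k = 20, 2
kp = k + 1                                   # k' = k + 1
x = rng.normal(size=(n, k))
y = 1.0 + x @ np.array([1.0, -1.0]) + rng.normal(scale=0.5, size=n)
X = np.column_stack([np.ones(n), x])

XtX = X.T @ X
beta_hat = np.linalg.solve(XtX, X.T @ y)
h = np.diag(X @ np.linalg.inv(XtX) @ X.T)
e = y - X @ beta_hat
s2 = e @ e / (n - k - 1)
r = e / np.sqrt(s2 * (1 - h))                # internally studentized residuals

cooks_d = (r**2 / kp) * h / (1 - h)          # formula in terms of r_i and h_ii

# Deletion form: change in the coefficient vector when case i is dropped.
d_del = np.empty(n)
for i in range(n):
    keep = np.arange(n) != i
    b_i = np.linalg.lstsq(X[keep], y[keep], rcond=None)[0]
    diff = beta_hat - b_i
    d_del[i] = diff @ XtX @ diff / (kp * s2)

flagged = np.flatnonzero(cooks_d > 4 / n)    # rule of thumb: D_i > 4/n
print(cooks_d.round(3), flagged)
```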

• DFBETAS: The dfbetas are scaled measures of the change in each parameter estimate when a case is deleted, and thus measure the influence of the deleted case on the estimation.

• The magnitude of dfbetas indicates the impact or influence of the case on estimating the regression parameter.

• A suggested measure of high influence is a value for dfbetas larger than 1 for smaller data sets and larger than $2/\sqrt{n}$ for large data sets.

• The sign of the dfbetas indicates whether the inclusion of the case leads to an increase or a decrease of the estimated parameter.

• The plot of dfbetas against case indices enables the user to identify cases that are influential on the regression parameters.

• Scatter plots of dfbetas for pairs of regressor variables are also useful.
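The notes do not give a formula for dfbetas. The sketch below assumes the usual definition, $(\hat\beta_j - \hat\beta_{j(i)}) / (s_{(i)}\sqrt{c_{jj}})$, and computes it by refitting with each case deleted. Data and names are synthetic assumptions for illustration.

```python
import numpy as np

# Synthetic data (assumed for illustration): n = 20 cases, k = 2 predictors.
rng = np.random.default_rng(7)
n, k = 20, 2
x = rng.normal(size=(n, k))
y = 1.0 + x @ np.array([1.0, -1.0]) + rng.normal(scale=0.5, size=n)
X = np.column_stack([np.ones(n), x])

C = np.linalg.inv(X.T @ X)
beta_hat = C @ X.T @ y
c_diag = np.diag(C)                          # c_jj, j = 0, ..., k

dfbetas = np.empty((n, k + 1))               # row i: effect of deleting case i
s_del = np.empty(n)
for i in range(n):
    keep = np.arange(n) != i
    b_i = np.linalg.lstsq(X[keep], y[keep], rcond=None)[0]
    res_i = y[keep] - X[keep] @ b_i
    s_del[i] = np.sqrt(res_i @ res_i / (n - k - 2))
    # Positive value: including case i increases the estimate.
    dfbetas[i] = (beta_hat - b_i) / (s_del[i] * np.sqrt(c_diag))

large_cut = 2 / np.sqrt(n)                   # suggested cutoff for large data sets
flagged = np.argwhere(np.abs(dfbetas) > large_cut)   # (case, coefficient) pairs
print(flagged)
```

Plotting each column of `dfbetas` against the case index gives the index plots described above.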

• DFFITS: The dffits are scaled measures of the change in each predicted value when a case is deleted, and thus measure the influence of the deleted case on the prediction.

• The magnitude of dffits indicates the impact or influence of the case on predicting a response.

• A suggested measure of high influence is a value for dffits larger than 1 for smaller data sets and larger than $2\sqrt{p/n}$ for large data sets, where p is the number of regression parameters ($p = k + 1$ here).

• It can be shown that the magnitude of dffits tends to be large when the case is a y-outlier, an x-outlier, or both (similar to the behaviour of Cook’s D).

• Cook’s D measures the influence of a case on all fitted values jointly, while dffits measures it on an individual fitted value.

• The plot of dffits against case indices enables the user to identify cases that are influential on the predicted values.
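As with dfbetas, the notes give no explicit formula for dffits. The sketch below assumes the usual definition, $(\hat{y}_i - \hat{y}_{i(i)}) / (s_{(i)}\sqrt{h_{ii}})$, computes it by deleting each case in turn, and compares it with the equivalent shortcut $t_i\sqrt{h_{ii}/(1-h_{ii})}$. Data and names are synthetic assumptions, and `p = k + 1` is taken as the number of parameters.

```python
import numpy as np

# Synthetic data (assumed for illustration): n = 20 cases, k = 2 predictors.
rng = np.random.default_rng(8)
n, k = 20, 2
p = k + 1                                    # number of regression parameters
x = rng.normal(size=(n, k))
y = 1.0 + x @ np.array([1.0, -1.0]) + rng.normal(scale=0.5, size=n)
X = np.column_stack([np.ones(n), x])

H = X @ np.linalg.inv(X.T @ X) @ X.T
h = np.diag(H)
y_hat = H @ y
e = y - y_hat

dffits = np.empty(n)
t = np.empty(n)                              # externally studentized residuals
for i in range(n):
    keep = np.arange(n) != i
    b_i = np.linalg.lstsq(X[keep], y[keep], rcond=None)[0]
    res_i = y[keep] - X[keep] @ b_i
    s_i = np.sqrt(res_i @ res_i / (n - k - 2))
    # Scaled change in the ith fitted value when case i is deleted.
    dffits[i] = (y_hat[i] - X[i] @ b_i) / (s_i * np.sqrt(h[i]))
    t[i] = e[i] / (s_i * np.sqrt(1 - h[i]))

dffits_shortcut = t * np.sqrt(h / (1 - h))
cutoff = 2 * np.sqrt(p / n)                  # suggested cutoff for large data sets
flagged = np.flatnonzero(np.abs(dffits) > cutoff)
print(cutoff, flagged)
```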