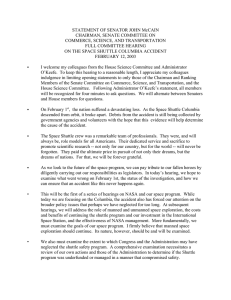

COLUMBIA

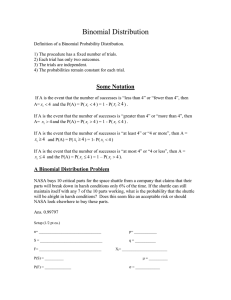

advertisement