Calibration of a Microscopic Traffic Simulator

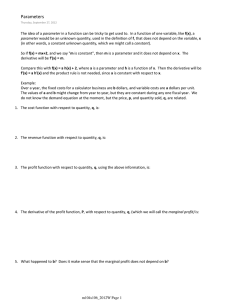
