FLIGHT TRANSPORTATION LABORATORY

REPORT R 87-4

PARALLEL PARAMETRIC COMBINATORIAL SEARCH - ITS APPLICATION TO RUNWAY SCHEDULING

Dionyssios A. Trivizas

February 1987


ACKNOWLEDGMENTS

First, I want to express my profound respect and gratitude to the members

of my doctoral committee.

Despite his many responsibilities as director of the Flight Transportation

Laboratory I enjoyed a complete "teacher - student" relationship with my

advisor, Professor Robert W. Simpson. Full of "conflicts and resolutions", this

relationship was a creative, almost operatic experience. He did not simply

guide me in research. He made me communicate it properly.

Professor Amedeo R. Odoni is responsible for luring me into the field of

Operations Research (OR) and for giving me the opportunity to work and

learn at FTL. He went beyond sharpening my powers of analysis in probability

and queueing theory. He gave me the model of a sincere and concerned teacher.

Professor Stephen C. Graves is the third member of my committee. I thank

him for his comments and advice.

I should not omit from the list of my teachers Professor Tom L. Magnanti

of the Sloan School of Management. His network optimization course was a

rich nutrient for my early intuition and general understanding of OR.

It would be impossible to mention all those people who kindly helped me

one way or another. I need, however, to express my sincere thanks to my

friend and colleague Dr. John D. Pararas. With a certain degree of guilt I

admit to having exploited his limitless patience. Our discussions revealed a

good number of hidden facets and caveats in my research, and his intimate

knowledge of computers and LISP enriched my programming skills, besides

getting me out of trouble.

My research has been mainly supported by grants from the FAA, and I hope

that the results of my work will be useful in its honorable pursuits of safety

and efficiency in Air Traffic Control.

I also thank the computer lab for providing the wonderful TEX word processor.

Last but not least, I want to thank my parents for insisting that I should

become useful to myself and society. They gave me a model of self respect,

discipline and sense of purpose that has kept me going.

As a last word, I hope to have lived up to the expectations of all those who

expected something of me.

PARALLEL PARAMETRIC COMBINATORIAL SEARCH - ITS APPLICATION TO RUNWAY SCHEDULING

Doctoral Dissertation in Flight Transportation - Operations Research

By Dionyssios A. Trivizas

Submitted in partial fulfillment of the requirements for the degree of

"Doctor of Philosophy" at the Massachusetts Institute of Technology, in the

Department of Aeronautics and Astronautics, February 1987.

ABSTRACT

The Runway Scheduling Problem (RSP) addresses the fundamental issues

of airport congestion and energy conservation. It is a variation of the Traveling Salesman Problem (TSP), from which it differs in three basic points: the

maximum position shift (MPS) constraints, the requirement to enforce the triangular inequality in its cost structure and the multiplicity of runways (corresponding to

multiple salesmen in TSP).

The RSP is dynamic, requiring fast and frequent schedule updates. The

MPS constraints, designed to prevent inequitable treatment of aircraft, define

a combinatorial neighborhood of tours around a base tour, determined by the

arrival sequence of aircraft in RSP. The neighborhood contains all tours in

which the position of an object (aircraft, city etc.) in the new tour is within

MPS positions of its position in the base tour. The parameter MPS controls

the radius of the neighborhood, which covers the full solution space when MPS

equals half the number of aircraft.

We first describe the RSP and then develop a parallel processor (PPMPS)

that finds the optimal solution in the MPS-neighborhood in time linear in

the number of objects, using up to $4^{MPS}$ processors in parallel. Subsequently,

PPMPS is applied to the general RSP and a case study is presented to justify

simplifying assumptions in the scheduling of mixed traffic on multiple runways.

The case study shows substantial improvements in the capacity of a system of

three runways.

Suggestions are made on how to use the PPMPS to create fast heuristic procedures for the TSP, based on divide and conquer and node insertion strategies.

Thesis Committee:

Dr. Robert W. Simpson, Professor of Aeronautics and Astronautics,

Director, Flight Transportation Laboratory, Thesis Supervisor

Dr. Amedeo R. Odoni, Professor of Aeronautics and Astronautics,

Co-Director, Operations Research Center

Dr. Stephen C. Graves, Associate Professor,

Sloan School of Management

Contents

1 Introduction

2 Description of The Runway Scheduling Problem (RSP) and Previous Work
    2.1 Introduction
    2.2 The RSP
        2.2.1 General Definitions
        2.2.2 Definition Of The RSP
        2.2.3 The Dynamic Nature of RSP
        2.2.4 The Least Time Separations
        2.2.5 The Constraints
        2.2.6 The FCFS Runway Assignment
        2.2.7 The Cost Function
    2.3 Computing An Expected Lower Bound, On Runway Capacity, Through Scheduling
        2.3.1 Assumptions
        2.3.2 Definitions
        2.3.3 Formulas Used
        2.3.4 Rational And Derivations
        2.3.5 Results and Conclusions
    2.4 Literature Review
    2.5 Appendix
        2.5.1 Horizontal Separations

3 Combinatorial Concepts
    3.1 The Permutation Tree
    3.2 The Combination Graph
    3.3 The Cost Of A Permutation
    3.4 Combinatorial Search
    3.5 The State-Stage (SS) Graph reduction of the Permutation Tree
    3.6 MPS-Tree, MPS-Graph And MPS-Combination-Graph
        3.6.1 The Bipartite Graph Representation Of The Subtrees In The MPS-Tree
        3.6.2 Computing The Size Of The MPS-Tree. The Number Of Matchings In A B-Graph
        3.6.3 The Number, T(MPS), Of Distinct Bipartite Graphs
    3.7 The Chessboard Representation And The Label Vector

4 The Parallel Processor
    4.1 Introduction
    4.2 The MPS-Graph As A Solution Space
    4.3 The MPS-Combination-Graph (MPS-CG)
        4.3.1 Construction
        4.3.2 Global And Tentative MPS-Graphs Reflecting The Dynamics Of The RSP
        4.3.3 Symmetry - The Complement Label Vector
    4.4 The Parallel Processor Network, PPMPS
    4.5 The Parallel Processor Function And Implementation
        4.5.1 The Definition Of A Letter
        4.5.2 The Optimization Step
        4.5.3 Initialization and Termination - Adapting To The Dynamic RSP Environment
        4.5.4 The Parallel Processor Algorithm
    4.6 The Implementation Of The PPMPS
        4.6.1 The Parallel Processor Data Structure
        4.6.2 The Path Storage
    4.7 Refinement In The Label Vector Storage
        4.7.1 Label Vector Tree Representation
        4.7.2 Proof That Half Of The Processors Have Only One Descendant
    4.8 Performance Aspects Of The Parallel Processor
        4.8.1 The Effect Of Additional Constraints
        4.8.2 The Effect Of Limited Number of Classes

5 Applications Of The Parallel Processor In Runway Scheduling With Takeoffs And Multiple Runways
    5.1 Mixed Landings And Takeoffs On A Single Runway
        5.1.1 The Parallel Processor Operation
        5.1.2 The Contents Of The Current Mail - The Generalized State
        5.1.3 Restrictions On The Feasible States
        5.1.4 Counting The Number Of Letters In The Current Mail
        5.1.5 General Remarks
    5.2 Multiple Runways
        5.2.1 The Parallel Processor Operation
        5.2.2 Possible TIV For Crossing And Open Parallel Runways
        5.2.3 Mixed Operations On Multiple Runways
    5.3 Case Study
        5.3.1 Case Description
        5.3.2 Results

6 Conclusions - Directions for further research
    6.1 Summarized Review
    6.2 Application Of The Parallel Processor In Solving The TSP
    6.3 Research Directions

Chapter 1

Introduction

Adapting to the increased demand for air transport, the flight transportation network (FTN) has grown in dimension and complexity. Its accelerated

growth has raised a number of interesting mathematical problems, concerning

the efficiency and safety of operations. The FTN can be modeled as a queueing network in which the service times depend on the sequence of the users.

Thus the rate at which operations take place is variable and can be maximized

through appropriate runway scheduling. Finding the optimal runway schedule

is a combinatorial problem related to the traveling salesman problem (TSP).

The relation to TSP and other combinatorial problems is discussed further

in chapter 2. In this chapter we take the opportunity to present the airport

terminal area and practices there and to motivate the solution of the runway

scheduling problem. We also provide an outline of the remaining chapters.

At the outset, we would like to mention that this thesis is part of the

broader research effort, undertaken at the Flight Transportation Laboratory

(FTL) at MIT, to study aspects of the FTN, using analytical and experimental

means. The aspects in question include the automation and optimization of Air

Traffic Control (ATC) in the airport terminal area, with the assistance of the

digital computer. The experimental tools in FTL include a detailed terminal

area (TA) simulation, which is implemented in a flexible manner in LISP. The

simulation can generate randomly arriving and departing aircraft, which are

capable of navigating, obeying ATC instructions and displaying themselves on

the screen of a simulated radar scope. Furthermore, the simulation allows for

good controller interaction, using speech recognition. This simulation will be

the ultimate test facility of the runway scheduling process designed in this

thesis.

The TA is the essential component of the FTN, mathematically, since organizing the converging and diverging traffic in a safe and efficient manner is a

formidable combinatorial problem. Physically, we may view the TA as a set of

arrival and departure paths between a set of entry/exit points (situated on the

boundary) and the runways, together with holding stacks provided at the entry points

to accommodate queues under congestion. The TA fits naturally the model of

a queueing system, in which the runways are the servers and the aircraft are

the users. The important characteristics of the users are: arrival process, time

window and service time.

The arrival process will be assumed to be a Poisson process. As user arrival

time we will consider the time at which the prospective user becomes known to

ATC, which is typically before the time the user is available to operate (earliest

operating time).

By a user's time window we mean the time interval defined by the earliest

possible time and the latest possible time a user can operate, i.e. the earliest

time an aircraft can possibly reach the runway and the time it runs out of fuel.

The service times are determined by the user characteristics e.g. takeoff

acceleration, takeoff speed and approach speed. As we shall see in the next

section, the current separation standards make the interoperation time for

any user pair asymmetric and variable. This property gives runway scheduling

its combinatorial character by making the schedule times

dependent on the order of operation. The schedule is defined as the assignment

of operating time and runway to each user and is equivalent to a sequence of

aircraft, given the aircraft separations. We can now search for the optimal

schedule, i.e. the aircraft sequence which minimizes the long term mean service

time, thus maximizing the runway capacity, i.e. the rate at which operations

take place. On the other hand delays, which are in general uncomfortable,

wasteful and hazardous, are very responsive, especially during times of congestion, to changes in runway capacity. So runway scheduling is very attractive

because it can help to drastically reduce delays, improving economy and safety

in the terminal area.
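As a minimal sketch of why a schedule is equivalent to a sequence once the separations are given, the following Python fragment assigns each aircraft the earliest time compatible with its own earliest operating time and with the separation from its immediate predecessor. The weight classes, the separation values and the names `CLASS_OF`, `SEPARATION` and `schedule_times` are illustrative assumptions, not data or code from this thesis. The rule looks only at the immediate predecessor, which is adequate only when the separations satisfy the triangular inequality.

```python
# Hypothetical weight classes (H = heavy, L = light) for four aircraft.
CLASS_OF = {"A": "H", "B": "L", "C": "H", "D": "L"}

# Illustrative least time separations in seconds, indexed (leader, follower).
# The matrix is asymmetric: a light aircraft behind a heavy one waits longest.
SEPARATION = {("H", "H"): 90, ("H", "L"): 180, ("L", "H"): 60, ("L", "L"): 60}


def schedule_times(sequence, earliest):
    """Return operating times for `sequence`, each as early as allowed."""
    times = []
    for k, aircraft in enumerate(sequence):
        t = earliest[aircraft]
        if k > 0:
            leader = sequence[k - 1]
            gap = SEPARATION[(CLASS_OF[leader], CLASS_OF[aircraft])]
            # Respect both the aircraft's own earliest time and the separation.
            t = max(t, times[-1] + gap)
        times.append(t)
    return times


EARLIEST = {name: 0 for name in CLASS_OF}  # assume all aircraft are already waiting
fcfs = schedule_times(["A", "B", "C", "D"], EARLIEST)
reordered = schedule_times(["B", "D", "A", "C"], EARLIEST)
print(fcfs[-1], reordered[-1])
```

With these made-up numbers the same four aircraft complete their operations at 420 seconds in the first order and at 210 seconds in the second, which is the order dependence the text describes.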

Traditionally runway scheduling in the TA is based on rules that evolved

mainly through experience and common sense and have limited scientific character, which may not even be necessary since the schedule is bound to the First

Come First Serve (FCFS) ordering, with very few exceptions. The reasons for

having FCFS are simple:

1. FCFS reflects an indisputable sense of justice to the users.

2. Optimal reordering is a tough combinatorial problem for computers to

solve, let alone the human operators.

The latter reason refers to the Runway Scheduling Problem (RSP), which

has been dealt with effectively in this thesis. In plain words, optimal scheduling

is a rewarding alternative to FCFS, which can be expressed in the form of the

following proposition to the terminal area user:

"if you are willing to be displaced, occasionally, up to a given maximum number, MPS, of positions away from your position in the

FCFS sequence, you will on the average operate m minutes ahead of

your FCFS service time, i.e. at $t_{FCFS} - m$."

In the case study presented in chapter 5, we see that the average number of

shifts in the optimal schedule does not exceed 2 and half the time these shifts

are forward anyway. The runway capacity is almost double that of FCFS and

the delays are reduced by up to 90 percent.

Now, this proposition contains the "contractual" maximum position shift

(MPS) constraints, which limit the number of position shifts of a

given aircraft in the optimal schedule to MPS. The MPS-constraints define a

combinatorial neighborhood of schedules around the FCFS schedule, which is

explored and exploited computationally. They are thus a central theme in this

thesis.
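The MPS-neighborhood can be made concrete with a brute-force sketch. It is feasible only for very small numbers of aircraft and is not the search procedure developed in this thesis; the function names `within_mps` and `mps_neighborhood` are hypothetical, and aircraft are identified by their 0-based FCFS position.

```python
from itertools import permutations


def within_mps(sequence, mps):
    """True if no aircraft sits more than `mps` positions from its FCFS position.

    `sequence[k]` is the FCFS index (0-based) of the aircraft placed in position k.
    """
    return all(abs(k - fcfs_index) <= mps for k, fcfs_index in enumerate(sequence))


def mps_neighborhood(n, mps):
    """Enumerate every sequence of n aircraft inside the MPS-neighborhood."""
    return [p for p in permutations(range(n)) if within_mps(p, mps)]


# Size of the neighborhood of the FCFS sequence of 4 aircraft as MPS grows.
for mps in range(4):
    print(mps, len(mps_neighborhood(4, mps)))
```

The counts grow quickly with MPS, which illustrates why MPS acts as a parameter controlling how much of the solution space is searched.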

Chapter 2 presents formally the RSP, discusses its complexities and brings

out the dynamics of the arrival process which require frequent schedule updates. This requirement translates into the need for fast solution procedures.

Sources of complexity are also cited in that the least time separations, which

define the cost structure of RSP, generally violate the triangular inequality

(TI). The potential TI violation (TIV) is a consequence of the fact that one

or more takeoffs will often fit between successive landings without stretching

the interlanding separation. This condition, if unattended, will not only lead

to suboptimal scheduling but also to incorrect spacing of the landings in the

schedule.
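A triangular inequality violation can be detected mechanically from the separation matrix. The sketch below uses two operation types and made-up separation values chosen so that a takeoff fits between two landings; the values and the names `SEP` and `ti_violations` are assumptions for illustration only.

```python
from itertools import product

# Illustrative least time separations in seconds between operation types,
# indexed (leader, follower): "L" = landing, "T" = takeoff. Values are made up.
SEP = {("L", "L"): 90, ("L", "T"): 30, ("T", "L"): 40, ("T", "T"): 60}


def ti_violations(sep):
    """List triples (i, k, j) for which sep(i,k) + sep(k,j) < sep(i,j)."""
    kinds = sorted({kind for pair in sep for kind in pair})
    return [
        (i, k, j)
        for i, k, j in product(kinds, repeat=3)
        if sep[(i, k)] + sep[(k, j)] < sep[(i, j)]
    ]


print(ti_violations(SEP))
```

With these numbers the only violating triple is landing, takeoff, landing: chaining consecutive separations would place the second landing 70 seconds after the first although 90 seconds are required, which is the incorrect landing spacing mentioned above.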

Chapter 2 also contains a literature review and a calculation of the expected

runway capacity gains from scheduling.

In Chapters 3 and 4 we take a more abstract view of the problem looking at

a sequence of aircraft as a permutation of discrete objects. Chapter 3 prepares

the ground for the parallel processor, which is developed in chapter 4. In

particular, it presents and analyzes the combinatorial concepts, which represent

the solution space, such as the permutation tree (PT), the combination graph

and the state-stage graph, as well as the effects of the MPS-constraints on their

size and form. Searching for the optimal permutation can be effected in the

PT or can be done more efficiently in the form of a shortest path problem on

the state stage graph.
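To illustrate the difference between searching the permutation tree and solving a shortest path problem over states and stages, the sketch below compares exhaustive enumeration with a generic subset dynamic program in the style of Held and Karp, restricted by MPS. It is a stand-in for the idea, not the state-stage graph construction or the parallel processor of this thesis; the cost is assumed to be the plain sum of succession costs (earliest times and triangular inequality violations are ignored), and all names and the cost matrix are hypothetical.

```python
from itertools import permutations


def best_sequence_brute(cost, mps):
    """Cheapest MPS-feasible sequence by enumerating all permutations."""
    n = len(cost)
    best = None
    for p in permutations(range(n)):
        if any(abs(k - i) > mps for k, i in enumerate(p)):
            continue
        value = sum(cost[p[k]][p[k + 1]] for k in range(n - 1))
        if best is None or value < best[0]:
            best = (value, p)
    return best


def best_sequence_dp(cost, mps):
    """Cheapest MPS-feasible sequence by dynamic programming.

    A state is (set of aircraft already scheduled, last aircraft); the stage is
    the number of aircraft scheduled. `cost[i][j]` is the succession cost of j
    directly after i, and aircraft are numbered 0..n-1 in FCFS order.
    """
    n = len(cost)
    # Stage 1: aircraft i may open the sequence only if it is within mps of position 0.
    layer = {(1 << i, i): (0, (i,)) for i in range(min(n, mps + 1))}
    for position in range(1, n):
        next_layer = {}
        for (mask, last), (value, path) in layer.items():
            for j in range(n):
                if mask & (1 << j) or abs(position - j) > mps:
                    continue  # already scheduled, or shifted too far
                key = (mask | (1 << j), j)
                candidate = value + cost[last][j]
                if key not in next_layer or candidate < next_layer[key][0]:
                    next_layer[key] = (candidate, path + (j,))
        layer = next_layer
    return min(layer.values())


# Arbitrary asymmetric cost matrix for five aircraft (illustrative only).
COST = [[(7 * i + 3 * j) % 5 + 1 for j in range(5)] for i in range(5)]
for mps in (0, 1, 2):
    print(mps, best_sequence_dp(COST, mps)[0], best_sequence_brute(COST, mps)[0])
```

Both functions return the same optimal cost; the dynamic program merges all partial sequences that share the same scheduled set and last aircraft, which is the saving obtained by moving from a tree to a graph of states.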

Now, the MPS constraints reduce the representations of the solution space

to the MPS-tree, the MPS-combination graph (MPS-CG) and the MPS-graph

respectively. They further result in the appearance of stage invariant features

that allow the MPS-CG to collapse into the parallel processor network. In

chapter 4 we examine the structure of these graphs. We show how to transform

the MPS-graph into an MPS-CG with a special node structure that allows each

stage of the optimization to be carried out in parallel. The parallel processor

is, in effect, a generalized cross-section of the MPS-CG. Thus, by assigning a

processing unit to each node of the parallel processor we construct a parallel

processing machine to carry out the optimization. Chapter 4 also discusses a

refined implementation of the parallel processor and its performance.

Chapter 5 discusses the application of the parallel processor to the RSP.

Specifically we show that it can cope optimally with the TIV on a single runway and use the specifics of runway scheduling to limit the amount of additional

computation. Next we discuss the application of the parallel processor in the

case of multiple runways and suggest a heuristic way of dealing with the problem. This heuristic is demonstrated with the study of a randomly generated

case.

Finally in chapter 6 we present a summary of the thesis with conclusions

and directions for further research, discussing in greater detail heuristics for

the TSP based on the parallel processor.

Chapter 2

Description of The Runway Scheduling Problem (RSP) and Previous Work

2.1 Introduction

This chapter gives a detailed presentation of the Runway Scheduling Problem (RSP)

and examines work related to it. Our discussion begins by placing the

RSP in its proper context of combinatorial problems, underlining its distinctive

features. In this sense, the RSP is a relative of the traveling salesman problem

(TSP) and its derivatives which range from variations of the multiple vehicle

routing problem (VRP) to job scheduling with sequence dependent setup costs.

In all these problems we seek to:

Find an optimal sequence - permutation - of a given set of objects, that

can be aircraft, cities or jobs to be processed on machines, given:

a) a matrix of succession costs $c_{ij}$, that can be the least time separations $t_{ij}$ between consecutive runway operations (landings and takeoffs), distances $d_{ij}$ between cities or sequence dependent setup costs.

b) an objective function that induces a preference order on these permutations.

c) a set of constraints.

All these problems are notorious for their computational complexity. They belong to the class of NP-complete problems and require immense amounts of time to solve to optimality. The name NP, standing for "Non-deterministic Polynomial", derives from the fact that these problems can be cast as language recognition problems of complexity theory that a nondeterministic machine can decide in polynomial time; for the NP-complete problems no polynomial bounded algorithm is known. The attribute complete distinguishes a subclass of NP problems, defined as follows: "a problem X is in class NP-complete if it can be transformed into an instance of the satisfiability problem and if satisfiability can be transformed into an instance of X, with polynomial bounded transformations".

Though these problems transform into each other, the transformations have

little practical interest, because, usually, the special structure of each problem

dictates different solution procedures.

However, research results on related

areas are still a source of mutual inspiration and occasionally they can be used

unmodified. For instance, Parker [30] used ideas from vehicle routing to solve

a job scheduling problem.

The most common feature, from the practical point of view, is that most

of these problems need a backtrack search procedure or dynamic programming

to be solved optimally.

Next, focusing on the RSP, we can identify several features, which distinguish it rather sharply from its affiliates, the most notable being:

1. The dynamic nature of the RSP optimization environment where new

events - i.e. information about new arriving aircraft or sudden changes of

the weather etc. - incessantly alter the picture. Consequently, a stream

of optimal schedule (sequence) updates is in order, rendering the speed

of the optimization critical, because, if the rate of coming events exceeds

the rate of optimization, we have missed the point in optimizing.

2. The arrival sequence of the aircraft - referred to as First Come First

Serve (FCFS) sequence - is of central importance, mainly because we

want to prevent the eventuality of an aircraft being indefinitely displaced

backwards, behind later arriving aircraft, given the repeated schedule

updates.

To avert such a possibility we instituted the Maximum Position Shift

(MPS) Constraints which prevent an aircraft from being displaced by

more than a prespecified number (MPS) of positions (shifts) away from

its position (order) in the FCFS sequence.

Fortunately the MPS constraints endow RSP with a special structure that

can be exploited in order to expedite the search for an optimal solution.

The MPS constraint solution space is central to the work in this thesis

and has no direct analog in the literature. The MPS constraints define a

neighborhood of sequences, corresponding to tours in the TSP, just like

the k-exchange heuristic defines a neighborhood of tours$^2$ for the TSP,

and we have designed an algorithm (AMPS) that will efficiently find the

optimal sequence in the neighborhood.

In fact we could now try to solve the TSP by applying AMPS on some

initial guess tour. Such a tour could be anything from a random tour to

the result of a heuristic algorithm.

3. The distance matrix of the Euclidean TSP, where the triangular inequality holds, assures our ability to make incremental evaluations and rejections, based on these evaluations, of (partial) permutations. However, the

least time separation matrix in the presence of both takeoffs and landings

violates this inequality, thus creating special problems (to be dealt with

later on in this work).

$^2$ More precisely the sequences correspond to Hamiltonian paths, but the problem of finding a path and that of finding a tour are easily transformable to each other.

4. The multiplicity of runways makes the least time separation matrix a

four dimensional quantity because typically the time separation between

the same two successive operations, of aircraft i and j, on two different

runways, will differ from their separation if they operate on any other

runway pair or if they both operate on the same runway.

5. In the runway scheduling problem, we are mainly concerned with the

user's perspective, which is, of course, the aircraft delays, as opposed to

the system's perspective. For example in the classical TSP or vehicle

routing we wish to minimize the total distance traveled, by the salesmen

or the vehicles. This is equivalent to minimizing the time the runways are

busy (occupied). But this objective reflects an aspect of the system and

is only pertinent in RSP implicitly, because it induces an improvement

on the system capacity - rate of operations - which in turn has a drastic

effect on delays of aircraft especially in times of congestion.

It has thus been suggested to employ an alternative objective function,

the Total Weighted Delay (TWD), which directly expresses the user's

interest, and offers the possibility of fair discrimination among the users

according to their special needs. In this work we investigate both objectives of minimum busy time and TWD. In TWD we minimize the

weighted sum of the waiting to land times for all the aircraft. This objective divorces RSP from the classical TSP because now the succession

cost of two operations is not known ahead of time but depends on the

previous sequence.

An analog to the TWD, called average tardiness, is found in the job

scheduling literature. It differs, however, from the TWD in two ways.

First, tardiness starts counting from some specified delivery deadline and

not from the time the job is available to be processed. Second, and most

important, is that in most of the job scheduling problems there are no

sequence dependent setup costs.

6. Another feature of the RSP which is also found in the vehicle routing

literature is the time window constraints. In the RSP, the time window of

an aircraft is the interval between the aircraft's earliest possible operating

time and its latest possible operating time, perhaps before it runs out

of fuel. Usually the last possible operating time is not binding, since

aircraft always carry extra fuel. There are a few interesting comments

however about the earliest possible times $E_i^r$. First of all, each aircraft

$i$ will have a different such time for each of the possible runways. So we

need a superscript, as well, to denote the runway. Often, we will omit

the superscript if we talk about only one runway or with the implicit

assumption that all the $E_i^r$'s are approximately equal for our purposes.

Next, we observe that the interval between successive earliest arrival times

is a random variable, with an exponential probability distribution. This

follows from the assumption that the aircraft arrivals follow a Poisson

process. Now the expected value of this interval is the inverse of the

arrival rate. These observations enable us to use results from queueing

theory in order to predict the macroscopic behavior of the system, and

motivate the optimal scheduling. The basic insight from queueing theory

is that the delays of users - aircraft in the RSP - in a system are very

sensitive to the value of $\rho$, where $\rho$ is the ratio of the arrival rate to the

mean service rate, which is the runway capacity in the RSP. (The expected delay for a system of one server is proportional to $(1 - \rho)^{-1}$.)

Given this result we may conclude that optimization is needed most in

congested situations when the arrival rate approaches the mean service

rate. The build up of queues, in such case, of aircraft that hold enroute or

within the airport terminal area, creates also the most interesting computational situation, because now it is more difficult to find an optimal

solution. With a small arrival rate the earliest time constraints will be

strongly binding, thus reducing the number of feasible schedules to a

minimum. However when large queues build up then the earliest time

constraints become secondary because increasingly all the aircraft in the

queue are available for immediate operation.

Such congested situations can be triggered or accentuated by less frequent events like bad weather, runway changes, accidents etc. There,

good "traffic management" will also contribute to safety.

7. Another appropriate remark is that the aircraft become known to the

ATC before their earliest possible time of operation. This "lead time"

is a parameter of the optimization and contributes to better scheduling.

Ideally we should know about all the future arrivals, within a time horizon, say a day, right at the outset.

8. On the other hand, in a practical situation, we will also have to fix the

schedule of aircraft awaiting operation typically 10 to 20 minutes before

they actually operate. This "advance notice time" is essential for aircraft

to carry out their prelanding checks and maneuvers which will allow them

to meet the runway safely and on schedule or to taxi to their assigned runway for takeoffs. Ideally, from the optimization's point of view,

this advance notice time should be as small as possible, whereas from

the pilot's and controller's perspective, a small notice time is undesirable

because it increases their workload and level of stress. So, it is interesting to find a good value of the advance notice time that will resolve this

conflict. Such a value could be determined experimentally.

Alternatively we could have requested to fix the schedule for the first, say,

5 or 6 aircraft in the tentative schedule. In general, we expect that a

new aircraft arrival will affect the optimality of the tentative schedule $\pi$

and its effect may in principle ripple back to the beginning of $\pi$. However,

given a large enough number of aircraft in the queue, we anticipate that

the front part of the reoptimized schedule $\pi'$ will not differ greatly from

the corresponding front part of $\pi$. We are thus not losing very much

by fixing the schedule for the first few aircraft if we have 5 to 10 times

as many in the queue. These issues, of lead time and advance notice

time, are secondary to the optimization problem and will be addressed

later on in an experimental fashion since they are intractable to deal with

analytically.
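The sensitivity of delay to the ratio of arrival rate to capacity, noted in item 6 above, can be illustrated with the textbook M/M/1 queue, for which the mean wait in queue is $\rho / (\mu (1 - \rho))$ with $\rho = \lambda / \mu$. This is only a rough stand-in: it assumes exponential service times, which runway separations do not follow, and the traffic figures and the function name `mm1_mean_wait` are illustrative assumptions.

```python
def mm1_mean_wait(arrival_rate, service_rate):
    """Mean time spent waiting in queue for an M/M/1 system.

    Rates must share a unit (e.g. aircraft per hour); the result is in the
    reciprocal unit (hours).
    """
    if arrival_rate >= service_rate:
        raise ValueError("queue is unstable when arrival rate >= service rate")
    rho = arrival_rate / service_rate
    return rho / (service_rate * (1.0 - rho))


# Hypothetical demand of 36 aircraft/hour against two runway capacities.
for capacity in (40.0, 44.0):
    wait_minutes = 60.0 * mm1_mean_wait(36.0, capacity)
    print(f"capacity {capacity:.0f}/h -> mean wait {wait_minutes:.1f} min")
```

Under these assumptions a 10 percent gain in capacity cuts the mean wait by more than half, which is why small capacity improvements from scheduling matter most in congestion.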

These special features of the RSP, in conjunction with the peculiarities of

the other problems, isolate it computationally. So we have concentrated our

efforts on tailoring an algorithm to the needs of the RSP and, as we said above,

we hope to use this algorithm, in future research, to address the TSP and the

vehicle routing problem.

In the following presentation we start with definitions of relevant quantities,

culminating with a formal definition of the RSP. We discuss its important

aspects including its dynamic nature, the origin of the least time separations,

the origin and effect of the constraints, the sequence evaluation rule and the

choice of cost function.
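The two objectives contrasted in item 5 above, minimum busy time and Total Weighted Delay, can rank the same sequences differently. The sketch below evaluates both on a single runway, taking the delay of an aircraft to be its operating time minus its earliest possible time; that definition, the separation values, the weights and the name `evaluate` are assumptions made for illustration.

```python
# Hypothetical data: one heavy (H) and two light (L) aircraft, all available at t = 0.
CLASS_OF = {"A": "H", "B": "L", "C": "L"}
SEPARATION = {("H", "H"): 90, ("H", "L"): 180, ("L", "H"): 60, ("L", "L"): 60}
EARLIEST = {"A": 0, "B": 0, "C": 0}
WEIGHT = {"A": 5, "B": 1, "C": 1}  # aircraft A is assumed to be five times as urgent


def evaluate(sequence):
    """Return (busy_time, total_weighted_delay) for a single-runway sequence."""
    times = []
    for k, aircraft in enumerate(sequence):
        t = EARLIEST[aircraft]
        if k > 0:
            leader = sequence[k - 1]
            t = max(t, times[-1] + SEPARATION[(CLASS_OF[leader], CLASS_OF[aircraft])])
        times.append(t)
    busy_time = times[-1] - times[0]
    twd = sum(WEIGHT[a] * (t - EARLIEST[a]) for a, t in zip(sequence, times))
    return busy_time, twd


for seq in (["A", "B", "C"], ["B", "C", "A"]):
    print(seq, evaluate(seq))
```

With these numbers the order B, C, A halves the busy time (120 versus 240 seconds) but has the larger weighted delay (660 versus 420), so the choice of cost function changes which sequence is preferred.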

Further on we present some properties of the optimal solution which allow us to calculate an expected lower bound on the improvement in runway

capacity under optimization, assuming only landings of the same weight class

on a single runway. The bound is presented as a function of MPS and is derived by considering the reduction in the average time separations E(sep) after

optimization. This reduction in E(sep) is found to be more than half of the

expected total reduction margin for MPS values of 5 and 6.

The chapter concludes with a literature review section where we give an

account of preceding work on the RSP and recent developments in related

problems.

2.2 The RSP

2.2.1 General Definitions

Before looking at the definitions the reader is warned that some symbols,

especially i and j serve as dummy variables and are redefined within each new

environment (context). So i could be a particular aircraft or the position of an

aircraft in the schedule.

$F$ = {all prospective aircraft over a time horizon $h$ at a given current time $t_0$}.

Later on we will give more precise meaning to the term horizon.

$Q$ = {all prospective aircraft known to ATC at a given current time $t_0$}

$n$ = the total number of users we consider in our optimization.

$S = \{i : 1 \le i \le n\}$ = a subset of $F$ that may be a subset of $Q$, if for instance we are limited by computational resources, or a superset of $Q$ if we include predicted aircraft. $S$ is the input to the algorithm that solves the RSP.

$R$ = the set of runway indices $r$.

$I_i$ = the time when aircraft $i$ becomes known to the ATC; we will call it the aircraft entry time.

$E_i^r$ = earliest possible time aircraft $i$ may operate on runway $r$ = arrival time at $r$ if not delayed.

$E_i = \min_r \{E_i^r\}$

FCFS = First Come First Serve order of $S$ based on ascending $E_i$'s. Note that we have a choice in defining the FCFS order and runway assignment rule in the presence of multiple runways. Also for convenience we will use $i$ to identify the aircraft in the $i$ th FCFS position. Hence:

$$i < j \Rightarrow E_i \le E_j, \quad \forall i, j \le n$$

$t_{ij}$ = least allowable time separation between aircraft $i$ (leading) and $j$ (following), $\forall i, j \in S$ on the same runway.

Here we note that we expect coupling in the operations on different runways. This coupling or interference will be reflected in an increased number of subscripts for the minimum time separation. Thus

$t_{(i,p),(j,r)}$ = least time separation between aircraft $i$ and $j$ operating in succession on runways $p$ and $r$, and obviously

$$t_{(i,p),(j,p)} = t_{i,j}$$

Now we observe that a schedule assigns to each aircraft a time of operation and a runway. The time assignment is equivalent to a permutation of the aircraft and a rule of assigning times given the minimum time separations and earliest possible arrival times. We will thus denote:

$\pi^S$ = {permutation of the available aircraft, i.e. the set $S$}, such that:

$\pi_k^S$ = aircraft in position $k$ of $\pi$, and:

$(\pi_i^S)^{-1}$ = position of the aircraft $i$ in $\pi$; the superscript $-1$ denotes the inverse.

Note that we will frequently drop the superscript $S$ when there is no ambiguity.

$r_i^S$ = the runway assigned to aircraft $i$.

$t_{\pi_j} = h(\pi^S, r^S; \{E_i^r\})$ = the time evaluation rule assigning operating time to the aircraft in position $j$ of the sequence $\pi^S$. The function $h$, to be discussed later on in detail, is parametrically dependent on the set of the earliest possible times because the time aircraft $\pi_j$ is scheduled to operate, under a given sequence $\pi$, is affected by the $E_{\pi_k}, \forall k < j$, and not solely by $E_{\pi_j}$.

$d_i = t_i - E_i$ = delay incurred by aircraft $i$.

$w_i$ = weight of aircraft $i$ reflecting fuel consumption, number of passengers etc.

2.2.2 Definition Of The RSP

Now we will define problem $P$ over the aircraft set $S$ as follows:

Given $S$ and the set $\{E_i\}$, containing the earliest time arrivals of the aircraft in $S$, find the permutation $\pi^S$ and runway assignment $r^S$ that:

$$\text{minimize } z = f(\pi^S, r^S) \qquad (2.1)$$

subject to the schedule time evaluation rule:

$$t_i = h(\pi^S, r^S; \{E_i\}) \quad \forall i \in S \qquad (2.2)$$

and the position shift constraints:

$$g(i, \pi^S) \le 0 \quad \forall i \in S \qquad (2.3)$$

where g and h are given functions to be considered shortly.

For reasons that we will talk about next, RSP is $P$ defined over $S = F$, and we will call it dynamic (RSPD) to underline the fact that the set $F$ - of all prospective aircraft - is not completely known ahead of time, but reveals itself as time goes on. We will also call static (RSPS) any instance of $P$ in which $S = Q$ and all the prospective aircraft are known ahead of time.

2.2.3 The Dynamic Nature of RSP

In the above definitions of static and dynamic RSP we implicitly attribute the dynamic nature of the problem to the lack of timely information about the aircraft to come. In this sense, if we knew all the future arrivals a priori, then we could schedule them absolutely optimally by solving a huge static problem. Evidently, not knowing all the future arrivals condemns our schedule to suboptimality because, though we are prepared to update the schedule upon receiving new information, we have to make irrevocable decisions, i.e. operate aircraft according to the tentative best schedule. We may not have the opportunity later to revoke these decisions while reoptimizing.

The quality of our scheduling process - of continuous tentative schedule

updates - can only be judged a posteriori at the end of the operating horizon,

by looking at the actual operations. We can then compare it to the FCFS

discipline or the absolute optimal schedule which can be obtained by solving

the complete static problem.

Technically, the formulation of problem $P$ aims to bring out the identical nature of the static and dynamic problems. Their only difference lies in their input aircraft sets. However, we only know how to solve exactly the static problem, when $S$ equals the available aircraft set $Q$, using procedure $A(S)$, which takes as input a set $S$ and returns its optimal sequence, subject to the existing constraints. We then propose to construct procedure $B(S)$ that solves the RSPD based on the set of optimal sequences,

$$\{A(Q_i) : Q_i \in \mathcal{Q}\}$$


where $\mathcal{Q}$ is a set of collectively exhaustive subsets of $F$. The composition of each $Q_i$ is determined by the aircraft entry times and by the structure of $B(S)$ itself. In turn, the value of $B(F)$ depends on $\mathcal{Q}$ and obviously

$$v(B(F)) \ge v(A(F))$$

where $v(A(F))$ is the a posteriori value of the problem, constituting an absolute best (lowest cost) bound on the problem.

We want to briefly extend this discussion in order to point out that whereas

in the dynamic RSPD we are forced (by the lack of future information) to break

up the problem by partitioning the set F, we might indeed find decomposition

desirable. We could for instance make a good "divide and conquer", or an iterative heuristic procedure - where we apply A(S) on partitions of the available

set - in order to solve large static problems, sacrificing optimality in favor of

speed of computation and savings in computational resources. In fact some

of the initial plans of attacking the RSP were focusing on such fragmentation

ideas. However the parallel processor, developed later, proved by far superior

because it very efficiently solves the landings only problem optimally, and it

can be easily extended to accommodate takeoffs and multiple runways.

Busy Periods

So far the reader may still wonder what a typical time horizon is. To answer this question we first observe that the arrival rates of aircraft are not constant during the day. There are peak hours of extreme congestion, and time periods - most notably at night - where the system is completely empty. So, like any other schedule, the FCFS schedule contains periods of contiguous activity, which we call busy periods, alternating with idle periods where the system is empty. A Busy Period (BP), which in technical terms is defined as the time interval in which all operations on the runways are separated by their least time separations, resulting from the FCFS schedule, is a natural time horizon. This is so because the composition⁴ of and the optimization within a given busy period are completely independent from any other busy period.

A practical point here is that if we want to evaluate experimentally our

optimizing process, we can generate random aircraft arrival times and run our

procedure based on the BPs that emerge from that random FCFS sequence.
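The experimental setup described above can be sketched in a few lines. The following is only an illustration under simplifying assumptions that are ours, not the report's: landings only, a single runway, and one constant least time separation `sep`. The function names `poisson_arrivals` and `fcfs_busy_periods` are hypothetical.

```python
import random


def poisson_arrivals(rate, count, seed=0):
    """Generate `count` arrival times of a Poisson process with the given rate."""
    rng = random.Random(seed)
    t, arrivals = 0.0, []
    for _ in range(count):
        t += rng.expovariate(rate)  # exponential inter-arrival times
        arrivals.append(t)
    return arrivals


def fcfs_busy_periods(earliest, sep):
    """Split a FCFS sequence into busy periods.

    earliest: earliest possible times, sorted ascending (the FCFS order).
    sep:      assumed constant least time separation, same units as `earliest`.
    Returns a list of busy periods, each a list of (aircraft index, time).
    """
    periods = []
    prev_time = None
    for i, e in enumerate(earliest):
        if prev_time is None or e > prev_time + sep:
            # The runway is idle when aircraft i arrives: a new busy period starts.
            t = e
            periods.append([(i, t)])
        else:
            # Aircraft i is held at the least separation behind its predecessor.
            t = prev_time + sep
            periods[-1].append((i, t))
        prev_time = t
    return periods


if __name__ == "__main__":
    arrivals = poisson_arrivals(rate=1 / 100.0, count=20)
    for bp in fcfs_busy_periods(arrivals, sep=90.0):
        print(len(bp), "aircraft, from", round(bp[0][1]), "to", round(bp[-1][1]))
```

Each busy period returned this way could then be handed to the optimizing procedure independently of the others.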


2.2.4 The Least Time Separations

The least time separations, whose variability obviously gives rise to the RSP,

originate from the existing horizontal ATC radar separation standards, pertaining to safety in the terminal area especially during Instrument Meteorological

Conditions (IMC). However, we will not be concerned with the derivation of

the least time separations. We will only single out their characteristics that

relate to the solution of the RSP. For samples of horizontal separations the

reader is referred to the appendix, though for an expert discussion on the topic

the reader is referred to R. Simpson [37].

The least time separations can be seen as entries of a 4-dimensional matrix $t_{i,r_i,j,r_j}$, where $i$ and $j$ are successive aircraft in a sequence, with $i$ preceding $j$, and $r_i$, $r_j$ are their runway assignments. (We may, occasionally, drop the runway indices if we talk about successive operations on the same runway.)

⁴ The composition of busy periods is independent if we assume a memoryless arrival process for aircraft, for example a Poisson arrival process.

Crossing Runways

Let us now look at an actual scenario in which the least time separations take on meaning. Suppose that we have decided on which aircraft will land on each of the runways, assuming for the moment that we have only two crossing runways. In landing the aircraft on each of the runways we now have an increased responsibility. While we still have to maintain the least time separations between successive aircraft on the same runway, we also have to ensure that there is no conflict with aircraft operating on the second runway. Thus if $A_1$ and $A_2$ are successive operations on runway A and $B_1$ is an operation on runway B, where A and B are crossing, then if $B_1$ is inserted between $A_1$ and $A_2$ it has to wait until $A_1$ has cleared the runway crossing point. Then $A_2$ will have to wait until $B_1$ has cleared the runway crossing point etc. Schematically we have:

[Schematic: operations $A_1$, $B_1$, $A_2$, ... in succession, with $A_1$ and $B_1$ separated by at least $t_{A_1,B_1}$, and $A_1$ and $A_2$ separated by at least $t_{A_1,A_2}$.]

Obviously the notation is not consistent but convenient, with $t_{A_1,A_2}$ being the same runway separation and $t_{A_1,B_1}$ being the cross runway separation.

The case of crossing runways is easy and we can, for simplicity of illustration, assume, that the inter runway time separations are all constant, irrespective of the type of aircraft and type of operation (landing or takeoff), and

approximately equal to 30 seconds. These 30 seconds account for an average

time, required by a landing or a takeoff to clear the runway crossing point,

augmented by some safety factor. Of course, in a real situation, we would use

precise numbers.

Parallel Runways

Next consider a scenario of parallel runways. Depending on the distance between the runways the FAA regulations distinguish between close and widely spaced

parallel runways.

The wide parallel case is simple. Given that runways A and B are widely spaced parallel, a landing on A followed by a landing or takeoff on B, or a takeoff on A followed by a landing on B, are completely independent, in the sense that the two consecutive operations can take place simultaneously on the two runways. A takeoff can be initiated as a landing aircraft is touching down, or two aircraft, landing on A and B respectively, can fly their final approaches in parallel. The only restriction is for successive takeoffs, where a minimum separation is imposed according to the departure routing of the aircraft involved.

If the runways are close parallel, the FAA regulations further restrict consecutive landings on the two runways to maintain a minimum horizontal separation whose projection on the runway axis has to be greater than 2 nautical miles (NM). Based on this horizontal separation and denoting with $v_k$ the approach speed of the aircraft $k$ we can calculate the least time separation between aircraft $i$ on runway A and $j$ on runway B using the following formula:

$$t_{i,A,j,B} = \frac{2}{v_j} + F \max\left(0, \frac{1}{v_j} - \frac{1}{v_i}\right)$$

where 2 NM is the minimum horizontal separation to be maintained during the final approach and $F$ is the length of the final approach. In case the leading aircraft $i$ is faster than $j$ then the minimum separation will occur at the outer marker, which, on the runway extended line, marks the beginning of the final descent of aircraft onto the runway threshold, and the term

$$F \max\left(0, \frac{1}{v_j} - \frac{1}{v_i}\right)$$

represents the additional time that the following aircraft $j$ needs to cover the final approach, in excess of the time $2/v_j$ it needs to cover the least horizontal separation. Otherwise, if aircraft $j$ is faster than $i$, the minimum horizontal separation will be realized when $i$ is at the runway threshold. Thus $j$ has to only cover the minimum horizontal separation and so the second term on the RHS is zero.

Single Runway

The same calculation carries over in the case of successive landings on the same runway. The same formula holds but now we have to consider a minimum horizontal separation $s_{ij}$, which is variable between 3 and 6 NM depending on the weight category of the successive aircraft. The reason for increased separations is that heavy aircraft generate severe wake vortices that can greatly disturb the stability and general welfare of following aircraft if the latter come too close. So the least time separations on the same runway can be expressed as:

$$t_{ij} = \frac{s_{ij}}{v_j} + F \max\left(0, \frac{1}{v_j} - \frac{1}{v_i}\right)$$
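As a numerical illustration of the landing to landing formula, the following minimal sketch evaluates it for two aircraft. The function name and the choice of units (knots and nautical miles in, seconds out) are our assumptions; the values 3 NM and 5 NM are the ones used later in section 2.3, and the two speeds are purely illustrative.

```python
def least_time_separation(v_lead, v_follow, s_nm=3.0, final_nm=5.0):
    """Least time separation in seconds between two successive landings.

    v_lead, v_follow: approach speeds of the leading and following aircraft (knots).
    s_nm:             minimum horizontal separation (nautical miles).
    final_nm:         length F of the final approach (nautical miles).
    """
    # Time for the follower to cover the minimum horizontal separation.
    hours = s_nm / v_follow
    # Extra time on the final approach when the leader is the faster aircraft.
    hours += final_nm * max(0.0, 1.0 / v_follow - 1.0 / v_lead)
    return hours * 3600.0


if __name__ == "__main__":
    # Fast leader, slow follower: the gap opens up along the final approach.
    print(least_time_separation(150.0, 120.0))
    # Slow leader, fast follower: only the horizontal separation matters.
    print(least_time_separation(120.0, 150.0))
```

The asymmetry between the two calls is exactly the variability in the least time separations that makes the order of the aircraft matter.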

Finally we are left with takeoff to takeoff, takeoff to landing and landing

to takeoff separations on the same runway. The takeoff to takeoff separation

depends again on the departure procedures. The landing to takeoff and takeoff

to landing time separations depend on the runway occupancy times of the

preceding aircraft. As in the case of crossing runways above we can again

assume a constant 30 seconds for all these times.

The important point is that the least time separations do not satisfy the triangular inequality⁵. So, the sum of the least time separations between a landing $L_1$, followed by a takeoff $T$ and then by a landing $L_2$, might be 60 seconds, whereas the minimum landing separation between the landings only would be perhaps 130 seconds. Thus if we were to evaluate the schedule by adding successive time separations we would space the landings dangerously close.

In order to correctly evaluate the schedule we have to keep track of a last landing record, for each of the runways, during our sequential schedule evaluation, which will be updated upon every new landing and will help us maintain the correct spacing of landings and takeoffs.

⁵ By triangular inequality we mean that each of the sides of a triangle has to be less than the sum of the other two.

2.2.5 The Constraints

The constraints of this problem can be broadly grouped in two categories. As can be seen from the definition of problem $P$ we are required to use a Time Evaluation rule, equation 2.2, and to satisfy the inequality constraints 2.3, called indefinite delay constraints. We will examine first the latter constraints, which require considerably less explanation than the former.

The Maximum Position Shift (MPS) Constraints

The MPS constraints form a set of artificial constraints imposed on the RSP. Their form is given by the inequalities 2.3 and they are intended to:

ensure that no aircraft is indefinitely delayed, given the repeated schedule updates upon the arrival of new aircraft.

This is achieved by limiting the position of an aircraft, in any MPS feasible sequence, to be within a prespecified maximum number of position shifts away from its position in the FCFS sequence. Thus we can write:

$$\left| \{\text{position in FCFS}\} - \{\text{position resequenced}\} \right| \le \text{MPS}$$

Using the foregoing general definitions, where $\pi_k$ is the aircraft in the $k$ th position of the sequence $\pi$ and $\pi_i^{-1}$ is the position of aircraft $i$ in $\pi$, we can rewrite the MPS constraints as follows:

$$(\pi_i^{FCFS})^{-1} - MPS^{forward} \le \pi_i^{-1} \le (\pi_i^{FCFS})^{-1} + MPS^{backward} \quad \forall i \in S$$

and recalling that aircraft are named after their FCFS position, i.e. $\pi_i^{FCFS} = i$,

$$i - MPS^{forward} \le \pi_i^{-1} \le i + MPS^{backward} \quad \forall i \in S \qquad (2.4)$$

where $S$ is the set of available aircraft.

Note also in the above equation that the superscripts backward and forward suggest an asymmetric use for the MPS constraints which enables the controller to fix the position of any aircraft in any feasible schedule to a desired one, within the MPS window⁶, by setting

$$MPS^{forward} = -MPS^{backward}$$

In the next chapter we will see that symmetric MPS constraints endow the RSP with a regular structure that can be exploited algorithmically and can be analyzed mathematically.
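To make the MPS window concrete, the sketch below checks a sequence against constraint 2.4 and, for very small $n$ only, enumerates all MPS feasible sequences by brute force. This is an illustration of the constraint, not the solution procedure of this report; the function names are hypothetical and the same forward and backward limits are assumed for every aircraft.

```python
from itertools import permutations


def mps_feasible(sequence, forward, backward):
    """Check the position shift window for every aircraft.

    sequence[k-1] is the FCFS index (1-based) of the aircraft placed in position k.
    An aircraft i may sit in any position between i - forward and i + backward.
    """
    return all(i - forward <= pos <= i + backward
               for pos, i in enumerate(sequence, start=1))


def mps_feasible_sequences(n, mps):
    """Brute-force list of sequences of n aircraft with symmetric MPS limits."""
    return [p for p in permutations(range(1, n + 1))
            if mps_feasible(p, mps, mps)]


if __name__ == "__main__":
    for seq in mps_feasible_sequences(4, 1):
        print(seq)
```

With four aircraft and MPS equal to 1, only adjacent interchanges survive, so the feasible set is a small fraction of the 24 permutations. This pruning is what keeps the combinatorial search tractable.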

Latest Possible Time (LPT) Constraints

It is possible to also consider Latest Possible Time (LPT) constraints, which

can either ensure that no aircraft is assigned an operating time after a critical

time, when for instance the aircraft would run out of fuel, or, in an emergency,

they can offer an alternate way to give absolute priority to an aircraft with an

engine fire.

The LPT constraints are provided for in our solution procedures but we

will ignore them because usually they are not binding, as aircraft always carry

extra fuel, and thus, they do not contribute to the computational aspects of

the problem.

⁶ In case the desired position is not within the MPS window one could change the original FCFS sequence, so that the aircraft in question has the appropriate place.

The Time Evaluation Rule (TER)

The TER is a recursive way of assigning operating times to a sequence of aircraft, given the aircraft's earliest arrival times and the subtleties due to mixed takeoffs and landings as well as multiple runways. The TER is first of all necessary in order to find the operating times under the FCFS discipline, and we will shortly discuss its alternative use as an implicit way of implementing the natural constraints of the problem, which are the following:

Least Time Separation constraints: So far we have treated the least time separation between successive aircraft as a cost. However, it reflects indeed

a constraint, which forces the schedule times of consecutive operations

to be spaced apart by prespecified amounts, as described in the previous

subsection.

Earliest Possible Time (EPT) constraints: they ensure that no aircraft is assigned an operating time before its $E_i$.

The EPT constraints have a favorable effect on the speed of finding an

optimal solution because they reduce the number of feasible sequences.

TER With Landings Only On Single Runway

Now, in the absence of earliest time constraints, with landings only on a single runway and given a sequence $\pi$ of aircraft, the Time Evaluation Rule (TER) would find recursively the operating time for the aircraft $\pi_j$ in the $j$ th position of $\pi$, based on the total time of the preceding successive operations. This simplest TER relates the operation times of successive aircraft, $\pi_i$ preceding $\pi_j$, in the following way:

$$t_{\pi_j} = t_{\pi_i} + t_{\pi_i,\pi_j}$$

Given the operating time of the aircraft that operated last on the runway, we can recursively compute all the $t_{\pi_j}$'s $\forall j \in S$. Strictly speaking the equality should be replaced by a greater or equal sign to correctly represent the least time separation constraint. However we are, in principle, always able to satisfy the equality provided that aircraft $j$ can be on time. Otherwise we have to modify the TER as follows:

$$t_{\pi_j} = \max\left(E_{\pi_j},\; t_{\pi_i} + t_{\pi_i,\pi_j}\right)$$

and now the earliest time constraints are also satisfied.
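The recursion for landings only on a single runway can be sketched as follows. The data layout (dictionaries keyed by aircraft name, and a separation function `sep(lead, follow)`) is our own choice for illustration, and the numbers in the example are arbitrary.

```python
def ter_single_runway(sequence, earliest, sep):
    """Assign operating times to a landing sequence on one runway.

    sequence: aircraft identifiers in the order they will operate.
    earliest: dict mapping aircraft -> earliest possible time.
    sep:      function (lead, follow) -> least time separation.
    Returns a dict mapping aircraft -> operating time.
    """
    times = {}
    prev = None
    for a in sequence:
        t = earliest[a]
        if prev is not None:
            # Either the aircraft is on time, or it is held behind its predecessor.
            t = max(t, times[prev] + sep(prev, a))
        times[a] = t
        prev = a
    return times


if __name__ == "__main__":
    earliest = {"a": 0, "b": 10, "c": 300}
    times = ter_single_runway(["a", "b", "c"], earliest, lambda i, j: 90)
    print(times)
```

In the example aircraft "b" is delayed by its predecessor, while "c" arrives after the runway has gone idle and therefore operates at its earliest possible time, starting a new busy period.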

TER With Landings Only And Multiple Runways

Extending to the case of multiple runways we have to ensure that inter runway separations are satisfied, as described in the previous section. In deciding to land aircraft $\pi_j$ on a runway $r_j$, where $\pi$ is the given sequence, for which we want to determine the operating times, and $r$ is its associated runway assignment, we must check on the landing times of all the preceding aircraft $\pi_i$, with $i < j$, that were last to operate on each of the runways and ensure that all the time separations - same runway and inter-runway - are satisfied. So the TER becomes:

$$t_{\pi_j} = \max\left(\max_{i \in I}\left(t_{\pi_i} + t_{\pi_i,r_i,\pi_j,r_j}\right),\; E_{\pi_j}\right) \qquad (2.5)$$

where:

$I = \{i : \pi_i$ = the last aircraft to operate on its assigned runway $r_i\}$

TER With Mixed Landings And Takeoffs On A Single Runway

If we were to consider a single runway and mixed takeoffs and landings then we would have to keep track of the preceding landing if the current aircraft in position $i$ is a takeoff, following the discussion in the previous section. Thus the TER now becomes:

$$t_{\pi_i} = \max\left(t_{\pi_{i-1}} + t_{\pi_{i-1},\pi_i},\; t_{\pi_l} + t_{\pi_l,\pi_i},\; E_{\pi_i}\right) \qquad (2.6)$$

where $l$ is the position, before position $i$, containing a landing, if $\pi_i$ is a takeoff, and $l \leftarrow i$ if $\pi_i$ is a landing.
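The role of the last landing record can be illustrated with the landing, takeoff, landing example discussed earlier (60 seconds against 130 seconds). The sketch below is one possible reading of the rule, in which the separation from the last landing is checked for every operation; that generalization, the function name, and the constant separation table are our assumptions.

```python
def ter_mixed_single_runway(sequence, kind, earliest, sep):
    """Operating times for mixed landings ("L") and takeoffs ("T") on one runway.

    kind:     dict mapping aircraft -> "L" or "T".
    earliest: dict mapping aircraft -> earliest possible time.
    sep:      dict mapping (kind of leader, kind of follower) -> separation.
    """
    times = {}
    prev = None          # the immediately preceding operation
    last_landing = None  # the last landing record
    for a in sequence:
        t = earliest[a]
        if prev is not None:
            t = max(t, times[prev] + sep[(kind[prev], kind[a])])
        if last_landing is not None:
            # Guards against the failure of the triangular inequality.
            t = max(t, times[last_landing] + sep[(kind[last_landing], kind[a])])
        times[a] = t
        prev = a
        if kind[a] == "L":
            last_landing = a  # update the record upon every new landing
    return times


if __name__ == "__main__":
    # Illustrative separations in seconds.
    sep = {("L", "T"): 30, ("T", "L"): 30, ("T", "T"): 30, ("L", "L"): 130}
    kind = {"L1": "L", "T": "T", "L2": "L"}
    earliest = {"L1": 0, "T": 0, "L2": 0}
    print(ter_mixed_single_runway(["L1", "T", "L2"], kind, earliest, sep))
```

Without the record, adding successive separations would place the second landing 60 seconds after the first; with it, the second landing is held to the full landing to landing separation.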

Mixed Takeoff And Landings On Multiple Runways

Finally when we have both multiple runways and mixed traffic, we obviously have to keep track of the last landing on all the runways. So we can write the TER as:

$$t_{\pi_j} = \max\left(\max_{i \in I}\left(t_{\pi_i} + t_{\pi_i,r_i,\pi_j,r_j}\right),\; \max_{i \in \mathcal{L}}\left(t_{\pi_i} + t_{\pi_i,r_i,\pi_j,r_j}\right),\; E_{\pi_j}\right) \qquad (2.7)$$

where:

$I = \{i : i$ = the last aircraft to operate on runway $r_i$, $i < j$, $t_{i,r_i,j,r_j} > 0\}$

$\mathcal{L} = \{i : i$ = the last aircraft to land on runway $r_i$, $i < j$, $t_{i,r_i,j,r_j} > 0\}$

2.2.6 The FCFS Runway Assignment

In the case of multiple runways we clearly have a choice in assigning an operating runway to each aircraft. In fact with $m$ runways and $n$ aircraft we have $m^n$ such possible partitions. For the FCFS sequence we have chosen the following rule for the runway assignments, which states that:

each aircraft will be assigned to the runway on which it can safely operate as early as possible, given the runway assignments of its preceding arrivals.

This rule will generate a unique schedule that reflects a small degree of optimization. In order to calculate the FCFS operating times we can use the TER with a small modification to account for the case of multiple runways when operations on parallel runways are uncoupled. As we will see later, a strict requirement for the optimization is that the schedule times should be monotonically increasing with increasing position in the sequence. This monotonicity assumption restricts the inter runway least time separations to be at least equal to zero. However this requirement is lifted for the FCFS sequence and an aircraft $\pi_i^{FCFS}$ in position $i$ can be scheduled earlier than its predecessor $\pi_{i-1}^{FCFS}$ if $r_i \ne r_{i-1}$ and the operations on these runways are independent.

Recalling that $\pi_i^{FCFS} = i$, we can formally restate the above rule recursively as follows:

Assign to aircraft $j$ the runway $r_j$ and time $t_j$ such that:

$$t_j = \min_{r_j \in R} \max\left(E_j^{r_j},\; \max_{i \in I}\left(t_i + t_{i,r_i,j,r_j}\right),\; \max_{i \in \mathcal{L}}\left(t_i + t_{i,r_i,j,r_j}\right)\right) \qquad (2.8)$$

where:

$R$ is the set of available runways and

$I = \{i : i$ = the last aircraft to operate on runway $r_i$, $i < j$, $t_{i,r_i,j,r_j} > 0\}$

$\mathcal{L} = \{i : i$ = the last aircraft to land on runway $r_i$, $i < j$, $t_{i,r_i,j,r_j} > 0\}$

Note that the clause $t_{i,r_i,j,r_j} > 0$ in the definitions of $I$ and $\mathcal{L}$ allows for a later aircraft to operate earlier than its preceding aircraft in the absence of runway interference.

For example, this condition of zero inter runway separation is met when we have a takeoff $T$ succeeding a landing $L$ in the FCFS sequence, and two close parallel runways A and B. Suppose now that the landing can land at its earliest on runway A at time $t_L$. Then the takeoff $T$ can be initiated on the other runway at a time earlier than $t_L$, if not limited otherwise.
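The FCFS assignment rule can be sketched as a greedy loop over the aircraft in FCFS order. The sketch below is only illustrative: it assumes the same earliest time on every runway, breaks ties in favor of the first runway listed, and uses a hypothetical separation function `sep(i, q, j, r)` with arbitrary values. A separation of zero is read as "no coupling", as in the definitions of the sets of last operations and last landings.

```python
def fcfs_assign(aircraft, kind, earliest, runways, sep):
    """Greedy FCFS runway and time assignment.

    aircraft: identifiers in FCFS order.
    kind:     dict aircraft -> "L" (landing) or "T" (takeoff).
    sep:      function (i, runway of i, j, runway of j) -> least separation.
    Returns a dict aircraft -> (runway, operating time).
    """
    last_op = {}       # runway -> (aircraft, time) of the last operation
    last_landing = {}  # runway -> (aircraft, time) of the last landing
    schedule = {}
    for j in aircraft:
        best = None
        for r in runways:
            t = earliest[j]
            for record in (last_op, last_landing):
                for q, (i, t_i) in record.items():
                    s = sep(i, q, j, r)
                    if s > 0:  # zero separation means the operations are uncoupled
                        t = max(t, t_i + s)
            if best is None or t < best[0]:
                best = (t, r)
        t, r = best
        schedule[j] = (r, t)
        last_op[r] = (j, t)
        if kind[j] == "L":
            last_landing[r] = (j, t)
    return schedule


if __name__ == "__main__":
    kind = {"X": "L", "L": "L", "T": "T"}
    earliest = {"X": 0, "L": 10, "T": 20}

    def sep(i, q, j, r):
        both_land = kind[i] == "L" and kind[j] == "L"
        if q == r:                      # same runway
            return 90 if both_land else 30
        return 60 if both_land else 0   # close parallel: only landings are coupled

    print(fcfs_assign(["X", "L", "T"], kind, earliest, ["A", "B"], sep))
```

In this example the landing "L" is held behind the earlier landing "X", while the takeoff "T", although later in the FCFS order, is assigned to the other runway and operates before "L": the forward slip described in the text.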

This "forward slip" of the takeoff would not be permitted by the optimization in general because it revokes the monotonicity of the objective function.

The forward slip could be achieved through an alternative sequence. This argument thus implies that increasing the number of runways we should increase

the MPS to allow more sequences to be examined. Note, however, that we

can always apply the FCFS TER on the resulting optimal sequence to take

advantage of any additional improvements, from forward slips, that the MPS

restrictions did not allow for. We will return to the topic later on when we will

talk about the application of the parallel processor in the RSP with multiple

runways.

On The Use Of The TER As A Constraint

In this subsection we want to make just a few remarks on the TER. The TER has been constructed so as to incorporate the earliest possible time (EPT) constraints, thus always producing an EPT feasible schedule, and now we want to compare this approach with the alternative where a simple TER would simply add up all the previous time separations. In the latter case we would have to check for EPT violations explicitly, casting inferior and rejecting any sequence $\pi$ in which any aircraft $\pi_j$ substantially⁷ violates its $E_{\pi_j}$, unless all its preceding aircraft $\pi_i$ had

$$E_{\pi_i} \le E_{\pi_j} \quad \forall i < j$$

If this condition holds it means we have discovered a new Busy Period in $\pi$ with fewer than $n$ aircraft and so we have a partition of the aircraft in two independent sets. We can then optimize these two sets individually.

Now such an approach clearly suffers from technical complications if more

busy periods appear in the optimal schedule, because then we have two smaller

problems to solve and we must further decide which of the aircraft in the second

BP is going to be first. Additionally we would still have to modify the TER to

properly handle mixed takeoffs and landings and the multiple runways.

Taking this modification a step further, by also including the $E_i$ in the TER, we do away with explicit fathoming due to EPT infeasibility. We have not, however, affected the size of the computation, because this fathoming is indirectly carried out, most of the time, by virtue of the fact that any sequence that is EPT infeasible according to the simple TER and furthermore violates the above condition will have an exorbitant cost that will cast it inferior. This inferiority will be established very quickly by the TER in the incremental evaluation of the operating times and, for instance, a combinatorial search procedure will backtrack. Meanwhile we have done away with tedious details concerning the formation of new BPs and the best aircraft to initiate the schedule in the subsequent BPs if more BPs emerge in the optimal schedule. More will be said on this issue after we discuss the solution procedure.

⁷ By substantially we mean some fraction of the average least time separation.

2.2.7 The Cost Function

The cost function $f(\pi^S, r^S)$ maps the set of the cartesian products of permutations $\pi^S$ and runway assignments $r^S$ onto the real numbers. The purpose of having a cost function is to minimize the aircraft delays in the airport terminal area.

There are two basic requirements that have to be satisfied by the cost function, with respect to our solution procedures, and will be looked at in detail later on in the next chapter, namely:

* The monotonicity with increasing position in the sequence.

* The separability of the cost function, which entails the ability to evaluate the cost function incrementally. This property ensures that we can make incremental inferences about the quality of incomplete sequences containing the same aircraft.

It is also important to note that the quality of solution will have a dependence on the aggregate level of constraints. In this respect we first note that

the gains in optimality will be a function of maximum number (MPS) of allowed position shifts. A lower bound on the expected improvement is given in

the following subsection as a function of MPS, for landings only on a single

runway. The interpretation of this lower bound is that by optimizing we will

get an expected improvement at least as good as the bound.

Two alternative cost functions have been proposed, one of which minimizes delays explicitly and the other implicitly, by expediting the rate at which operations take place, i.e. the operational capacity. We will begin with the latter cost function, called Total Busy Time.

Total Busy Time (TBT)

As we discussed in the introduction to this chapter (see page 14), optimizing

the operational capacity is important, especially in situations of congestion and

we quoted the queue theoretical result which illustrates the sensitivity of the

system delays to minute changes of operational capacity.

Now the operational capacity is the inverse of the average service time which

is in turn the total busy time - defined as the sum of the busy periods of the

system - divided by the total number of aircraft in them. The queue theoretical

mean service time translates, of course, to the average least time separation,

and is a function of the aircraft sequencing. Thus, in order to maximize the

operational capacity we have to find the sequence of aircraft that minimizes

the total busy time (TBT).

In the absence of earliest possible time (EPT) constraints we will certainly

have only one busy period and therefore minimizing the TBT is equivalent to

minimizing the last operating time, given by the time evaluation rule (TER)

discussed in the previous section. We can also formally write the cost function as:

$$z^{TBT}(\pi^S, r^S) = t_{\pi_n}, \quad \text{to be minimized over the feasible sequences } \pi$$

(where $n$ is the total number of aircraft)

However if, due to the EPT constraints, the optimal schedule contains more than one BP, then it suffices to minimize the last operating time within each one of the new BPs. The average least time separation will be computed from the sum of the lengths of the BPs in the optimal schedule.

Intuitively we can see that the existence of several BPs in the optimal

schedule is a positive sign. The more BPs we have in the optimal schedule the

better the optimal schedule compares to the FCFS.

Total Weighted Delay (TWD)

The total weighted delay is a cost function that explicitly minimizes the aircraft delays. In addition it provides for discriminating the aircraft by means of weights, which reflect their individual characteristics, as for instance fuel consumption, number of passengers etc. In TWD each aircraft $i$ contributes to the objective function an amount of delay equal to the length of the time interval between its earliest possible arrival time $E_i$ and its time of operation $t_i$, weighted by a certain number $w_i$. So we can write the following expression:

$$z^{TWD}(\pi^S, r^S) = \sum_{i=1}^{n} w_i (t_i - E_i) \qquad (2.9)$$

where the operating time $t_i$ will be given by the TER.

We observe that this expression is adequate if we have to compute the TWD of an entire sequence. In a backtrack search, as well as in dynamic programming solution procedures, we are interested in incremental evaluation of the TWD in the sequence. Thus we are interested in the value of the objective (cost) function up to position $i$ in the sequence. In the case of TBT, that was no problem, since the incremental value of $z^{TBT}$ was given by $t_{\pi_i}$. With TWD, if all aircraft are present at the beginning of the optimization, we can also easily write down an expression for the incremental values of the TWD objective as:

$$z^{TWD}_{\pi_i} = z^{TWD}_{\pi_{i-1}} + \left(t_{\pi_i} - t_{\pi_{i-1}}\right)\left(W - W_{\pi_{i-1}}\right)$$

where

$$W = \sum_{j \in S} w_j = \text{total weight}$$

and $W_{\pi_{i-1}}$ is the total weight of the aircraft that have operated up to position $i - 1$.

In this recursive formula we note the following:

* the second term of the RHS is the increment in delay accrued, between the $(i - 1)$ st and $i$ th operations, by the remaining aircraft that have not yet operated. It is equal to the time interval between these two successive operations multiplied by the total weight of the aircraft which are being delayed.

* the factor $(t_{\pi_i} - t_{\pi_{i-1}})$ is used for the time interval because it accounts for the fact that the earliest time constraints are already incorporated in the TER, as well as for the insertion of takeoffs. In case of no earliest time violation with landings only this expression reduces to $t_{\pi_{i-1},\pi_i}$.
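The incremental evaluation can be checked against the direct sum of equation 2.9 with a short sketch. It covers only the case where all aircraft are present at the start (all earliest times equal to a common `start`), and it assumes the reading in which the aircraft operating at the end of an interval is still counted as waiting during that interval. The function names and the numbers are illustrative.

```python
def twd_total(sequence, times, earliest, weight):
    """Total weighted delay of a complete sequence, as a direct sum."""
    return sum(weight[a] * (times[a] - earliest[a]) for a in sequence)


def twd_incremental(sequence, times, weight, start=0):
    """Partial TWD values after each operation.

    Assumes every aircraft is available at time `start`, so that each one
    accrues delay from `start` until the moment it operates.
    """
    waiting = sum(weight[a] for a in sequence)  # weight still being delayed
    z, prev_t = 0, start
    partial = []
    for a in sequence:
        z += (times[a] - prev_t) * waiting  # delay accrued over this interval
        partial.append(z)
        waiting -= weight[a]                # aircraft a has now operated
        prev_t = times[a]
    return partial


if __name__ == "__main__":
    seq = ["a", "b", "c"]
    times = {"a": 90, "b": 180, "c": 300}
    weight = {"a": 1, "b": 2, "c": 1}
    earliest = {"a": 0, "b": 0, "c": 0}
    print(twd_incremental(seq, times, weight))
    print(twd_total(seq, times, earliest, weight))
```

The last partial value coincides with the direct sum, while the intermediate values are what a backtrack search would compare when deciding whether an incomplete sequence is already inferior.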

When not all the aircraft are initially available then the delay increment will have to be modified. It will be the sum of the following two parts:

1. The sum of the delays of the aircraft that arrived in the last interoperation interval, which equals

$$\sum_{k:\ t_{\pi_{i-1}} < E_k < t_{\pi_i}} w_k \left(t_{\pi_i} - E_k\right)$$

2. The weight sum, multiplied by the interoperation interval, of only those aircraft, in the set $U$, that arrived prior to $t_{\pi_{i-1}}$ and have not yet operated. We can thus write this contribution as

$$\left(t_{\pi_i} - t_{\pi_{i-1}}\right) \sum_{k \in U} w_k$$

2.3 Computing An Expected Lower Bound, On Runway Capacity, Through Scheduling

In this section we develop and present a lower bound (LB) on the expected

runway capacity under scheduling. The LB is affected by the MPS constraints,

which were described in the previous section. In particular, the LB is a function

of the maximum number of permitted position shifts (MPS). Furthermore, the

LB depends on the approach speed range of the aircraft using the runway. In

the following discussion, after a summary of the assumptions, definitions and

relevant formulas, we analyze the LB as a function of MPS and of the speed

range and present the results for a set of different speed ranges. The section

concludes with quantitative remarks on the results.

2.3.1 Assumptions

1. Landings Only

2. Single Runway

3. One Weight Class

4. Time Window Constraints Are Not Critical

5. Poisson Arrivals Of Aircraft

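2.3.2 Definitions

$t_{ij} = \frac{S}{v_j} + \delta t_{ij}$

$\delta t_{ij} = F \max\left(0, \frac{1}{v_j} - \frac{1}{v_i}\right)$

S = 3 N. Miles

F = 5 N. Miles

a = min. speed

b = max. speed

k = the length of the interval within the FCFS sequence in which we find the $E(\delta t)$.

$t_{min} = E\left(\min_{j \in K} \frac{1}{v_j}\right)$, where K = a set of k aircraft.

$t_{max} = E\left(\max_{j \in K} \frac{1}{v_j}\right)$

$dif = t_{max} - t_{min}$

$E(\delta t) = F \, \frac{dif}{k}$

2.3.3 Formulas Used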

1. E

2. $E\left(\max\left(0, \frac{1}{v_j} - \frac{1}{v_i}\right)\right) = \frac{(a+b)\ln(b/a)}{(b-a)^2} - \frac{2}{b-a}$

2.3.4 Rationale And Derivations:

The expected runway capacity is defined as the inverse of the mean service time, where by mean service time we mean the average least time separation between successive operations on the runway. Decreasing the mean service time therefore increases the runway capacity, which in turn reduces delays. In the following discussion we will try to show that there is an absolute minimum decrease in the mean service time, $E(\delta t)$, that can be achieved through optimization, corresponding (to first order of accuracy) to an equal percentage increase in capacity. We will then show how this relates to delay reductions.
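The first-order correspondence between a decrease in mean service time and an increase in capacity can be seen from a short worked equation. Here C denotes the capacity, $E(t_{ij})$ the mean service time, and $\varepsilon$ is a symbol introduced only for this illustration: the fraction by which scheduling reduces the mean service time.

```latex
C = \frac{1}{E(t_{ij})}, \qquad
C' = \frac{1}{(1-\varepsilon)\,E(t_{ij})} = \frac{C}{1-\varepsilon}
\quad\Longrightarrow\quad
\frac{C' - C}{C} = \frac{\varepsilon}{1-\varepsilon}
                 = \varepsilon + \varepsilon^2 + \cdots \approx \varepsilon .
```

For instance, a 5% reduction in mean service time ($\varepsilon = 0.05$) gives a capacity increase of 0.05/0.95, or about 5.3%, so the two percentages agree to first order.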

This discussion is valid for traffic composed of aircraft of the same weight class, waiting to land on a single runway, with non-binding earliest time constraints. We start by showing how to compute the mean time separation. For this purpose we further assume that the aircraft arrive according to a Poisson arrival process, which is equivalent to assuming that new arrivals are independent of previous ones. The approach speed of the arriving aircraft is often discretized, so $t_{ij}$ will stand for the least time separation between aircraft belonging to speed classes i and j. If we let $P_i$ be the probability of an aircraft belonging to class i, we can compute the mean service time $E(t_{ij})$ for a FCFS sequence - the random arrival sequence - with the following formula:

$$E(t_{ij}) = \sum_{i} \sum_{j} P_i P_j \, t_{ij} \qquad (2.10)$$
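As a rough numerical illustration of equation 2.10, the sketch below evaluates the FCFS mean service time for a discrete speed mix, using the separation formula and the values S = 3 and F = 5 nautical miles from the Definitions. The function names, the three-class speed mix and the assumption that speeds are in knots (so that times come out in hours) are illustrative choices, not taken from the report.

```python
S = 3.0  # N. Miles, from the Definitions
F = 5.0  # N. Miles, from the Definitions


def separation(v_i, v_j):
    """Least time separation t_ij in hours; aircraft i leads, j follows.

    Speeds are assumed to be in knots (an assumption of this sketch).
    """
    return S / v_j + F * max(0.0, 1.0 / v_j - 1.0 / v_i)


def mean_fcfs_service_time(speeds, probs):
    """Equation 2.10: sum over all ordered class pairs of P_i * P_j * t_ij."""
    return sum(
        p_i * p_j * separation(v_i, v_j)
        for v_i, p_i in zip(speeds, probs)
        for v_j, p_j in zip(speeds, probs)
    )


# Hypothetical three-class mix, for illustration only.
speeds = [100.0, 120.0, 140.0]
probs = [0.3, 0.4, 0.3]
mean_hours = mean_fcfs_service_time(speeds, probs)
print(f"mean service time: {mean_hours * 3600:.1f} s")
print(f"FCFS capacity:     {1.0 / mean_hours:.1f} landings/hour")
```

With a single speed class the double sum collapses to S/v, which is a convenient sanity check on the implementation.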

Though most of the work so far was based on discrete distributions, here we use a continuous probability density function (PDF) for the speed in the range (a, b), where a and b represent the minimum and maximum speeds, because it provides analytical formulas and is more realistic. So, assuming a uniform PDF:

$$f(v) = \frac{1}{b-a}, \qquad v \in (a, b),$$

and replacing the summation by integration we have:

$$E(t_{ij}) = \int_a^b dv_i \, f(v_i) \int_a^b dv_j \, f(v_j) \, t_{ij}(v_i, v_j)$$
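The double integral can be checked numerically. The sketch below evaluates only the sequence dependent part, $E(\max(0, 1/v_j - 1/v_i))$, with a midpoint rule and compares it with the closed form $\frac{(a+b)\ln(b/a)}{(b-a)^2} - \frac{2}{b-a}$ obtained by carrying out the integration under the uniform PDF. The function names, the grid size and the speed range 100 to 140 (assumed to be knots) are illustrative assumptions.

```python
import math


def expected_delta_closed_form(a, b):
    """E[max(0, 1/v_j - 1/v_i)] for independent speeds uniform on (a, b)."""
    return ((a + b) * math.log(b / a) - 2.0 * (b - a)) / (b - a) ** 2


def expected_delta_numeric(a, b, n=400):
    """Midpoint-rule evaluation of the double integral with f(v) = 1/(b - a)."""
    h = (b - a) / n
    mids = [a + (k + 0.5) * h for k in range(n)]
    total = 0.0
    for v_i in mids:          # leading aircraft
        for v_j in mids:      # following aircraft
            total += max(0.0, 1.0 / v_j - 1.0 / v_i)
    return total * h * h / (b - a) ** 2


a, b = 100.0, 140.0   # hypothetical speed range
F = 5.0               # N. Miles, from the Definitions
print("closed form E(delta t) [s]:", F * expected_delta_closed_form(a, b) * 3600)
print("numeric     E(delta t) [s]:", F * expected_delta_numeric(a, b) * 3600)
```

The two printed values should agree closely; the small remaining difference comes from the kink of the integrand along the line $v_i = v_j$, which the midpoint rule resolves only to second order in the grid spacing.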

Our subsequent results are based on the following observations:

1. From:

ti

=

S

-+Fmax

11

0,--64i3

(2.11)

,

we see that t;, decomposes into the sum of two terms. The first term,

is sequence independent. So it contributes a constant amount to the total

busy time (TBT) and is thus of no interest to the optimization of TBT'.

The second term 6tis, is sequence dependent and, noting also that we can

express:

E(tii)

E -

=

+ E(6tii)

(S)

we have to center our efforts in minimizing the E(6ti), which is the

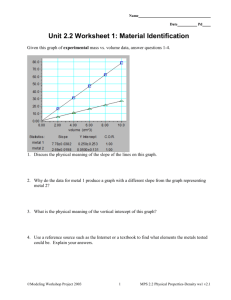

expected limit in improvement. Figure 2.1 shows the sample space for

the evaluation of E(6tg).

2. The sequence dependent term, $\delta t_{ij}$, is zero when the following aircraft, j, is faster than its preceding aircraft i. Because of this, only descending speed sequences contribute to the sequence dependent cost and should, therefore, be avoided.

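3. The total sequence dependent cost - the sum of successive $\delta t_{ij}$'s - of any descending speed subsequence is proportional to $\frac{1}{v_n} - \frac{1}{v_1}$, where 1 and n are the first and last aircraft of the subsequence. The intermediate terms cancel, as can be verified in the following example with $v_1 > v_2 > v_3 > \ldots > v_n$:

$$\left(\frac{1}{v_2} - \frac{1}{v_1}\right) + \left(\frac{1}{v_3} - \frac{1}{v_2}\right) + \left(\frac{1}{v_4} - \frac{1}{v_3}\right) + \ldots + \left(\frac{1}{v_n} - \frac{1}{v_{n-1}}\right) = \frac{1}{v_n} - \frac{1}{v_1} \qquad (2.12)$$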
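The cancellation of the intermediate terms is easy to confirm numerically. In the sketch below, the helper names and the five speeds are hypothetical; the factor F = 5 nautical miles is the one given in the Definitions.

```python
F = 5.0  # N. Miles, from the Definitions


def delta_t(v_lead, v_follow):
    """Sequence dependent term: F * max(0, 1/v_follow - 1/v_lead)."""
    return F * max(0.0, 1.0 / v_follow - 1.0 / v_lead)


def sequence_dependent_cost(speeds):
    """Sum of delta_t over successive pairs of a landing sequence."""
    return sum(delta_t(u, v) for u, v in zip(speeds, speeds[1:]))


descending = [140.0, 130.0, 115.0, 100.0, 90.0]  # hypothetical speeds
total = sequence_dependent_cost(descending)
end_points_only = F * (1.0 / descending[-1] - 1.0 / descending[0])
print(total, end_points_only)  # equal up to floating-point rounding
```

An ascending sequence gives a cost of zero under the same function, in line with the second observation.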

From these observations we may deduce that the optimal sequence, in the absence of MPS constraints, would contain at most one descending subsequence, descending from the first fixed aircraft (the previous landing) to the slowest aircraft of the set. In other words, we have the freedom to rearrange all the aircraft in ascending speed order but we are stuck with the initial fixed aircraft - the previous landing - which will, with large probability, be faster than the slowest aircraft in the set, thus imposing a descending subsequence from the fixed aircraft to the slowest. One way to arrange such an optimal sequence is by placing the slowest aircraft right next to the fixed one, as shown in figure 2.2.

8 However, $S/v_j$ is important in the minimization of total weighted delay.

Figure 2.1: Sample space for calculating $E\left(\max\left(0, \frac{1}{v_j} - \frac{1}{v_i}\right)\right)$. [Axes $v_i$ and $v_j$, each from a to b; region $v_i > v_j$.]

Figure 2.2: The optimal sequence without MPS constraints. [Axes: SPEED versus LANDING ORDER; the previous landing is marked.]

The expected additional cost, $E(\delta t_{ij})$, in this unconstrained case is the expected cost of the descending subsequence divided by the total number of aircraft, and thus we expect it to be negligible for a large enough aircraft set.
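A small experiment illustrates the unconstrained case. The sketch assumes a hypothetical sample of 25 speeds drawn uniformly from (80, 140), a fixed previous landing at 120, and F = 5 nautical miles from the Definitions; the function names are also illustrative.

```python
import random

F = 5.0  # N. Miles, from the Definitions


def sequence_dependent_cost(speeds):
    """Sum of F * max(0, 1/v_follow - 1/v_lead) over successive pairs."""
    return sum(
        F * max(0.0, 1.0 / v - 1.0 / u) for u, v in zip(speeds, speeds[1:])
    )


def unconstrained_optimum(previous_landing, speeds):
    """Keep the fixed previous landing first, then land the rest slowest first."""
    return [previous_landing] + sorted(speeds)


random.seed(0)  # fixed seed so the hypothetical sample is repeatable
speeds = [random.uniform(80.0, 140.0) for _ in range(25)]
previous = 120.0

fcfs = [previous] + speeds
best = unconstrained_optimum(previous, speeds)

print("FCFS      cost per aircraft:", sequence_dependent_cost(fcfs) / len(speeds))
print("ascending cost per aircraft:", sequence_dependent_cost(best) / len(speeds))
```

The only cost left in the ascending arrangement is the single drop from the previous landing to the slowest aircraft, and dividing it by the number of aircraft shows why it becomes negligible for a large set.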

Now, in the presence of MPS constraints, we have to accept considerably more descending speed subsequences, and we can resequence the aircraft in order to minimize the total extra length (the sum of $\frac{1}{v_n} - \frac{1}{v_1}$) of all the descending subsequences.

Figure 2.3: Example of an FCFS arrival sequence with many descending speed subsequences, as well as resequencings that contain one descending subsequence for every 2MPS interval. [Three panels: FCFS, MPS=4, MPS=5; axes: SPEED versus Landing Order.]

In a real situation we may use elaborate techniques to arrive at the optimal

sequence under the MPS constraints. We have, however, no way of computing

the expected improvement - i.e. the expected reduction in $E(\delta t_{ij})$, under such

optimization. So, in order to arrive at some lower bound to this reduction, we

propose a suboptimal procedure, whose performance we can estimate based on

the following claim:

Given an FCFS sequence we can always rearrange the aircraft, in a

MPS feasible way, so that the new sequence will have at most one

descending subsequence for every 2MPS wide interval of the FCFS

sequence.

PROOF: At first note that figure 2.3 shows a typical FCFS sequence and

resequencings, for MPS values of 4 and 5. The resequencings are MPS

feasible rearrangements of the FCFS sequence, containing only one descending speed subsequence in every 2MPS long interval.

Then, to justify the claim, we recall the fact that within any MPS long interval we have the liberty to rearrange the aircraft in any desirable way. Thus, we split the original sequence into MPS long intervals $M_1, M_2, M_3, \ldots$ and then create descending sequences in the odd MPS intervals and ascending sequences in the even intervals.

The maximum speed of the descending sequence in $M_3$, for example, is the maximum speed over $M_2$ and $M_3$, whereas its minimum is the minimum speed over intervals $M_3$ and $M_4$.

In general the descending subsequence in the odd interval 2i + 1 starts with the maximum speed that has occurred in the intervals 2i and 2i + 1 and terminates with the minimum speed that has occurred in the intervals 2i + 1 and 2i + 2.

The above argument concludes the justification of the claim.

QED
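The construction used in the proof can be sketched as follows. This is an illustration only: the function names and the twelve-aircraft FCFS sample are hypothetical, and the fixed previous landing is left out for simplicity. Each aircraft stays inside its own MPS long interval, so no aircraft moves more than MPS - 1 positions.

```python
def rearrange(speeds, mps):
    """Sort MPS-long blocks of the FCFS order: descending in the odd
    intervals (M_1, M_3, ...), ascending in the even ones (M_2, M_4, ...).
    Returns the FCFS indices in their new landing order."""
    order = []
    for block, start in enumerate(range(0, len(speeds), mps)):
        idx = list(range(start, min(start + mps, len(speeds))))
        descending = (block % 2 == 0)  # block 0 is M_1, an odd interval
        idx.sort(key=lambda i: speeds[i], reverse=descending)
        order.extend(idx)
    return order


def max_position_shift(order):
    """Largest distance between an aircraft's FCFS and new positions."""
    return max(abs(new - old) for new, old in enumerate(order))


def count_descending_runs(seq):
    """Number of maximal descending runs (ties neither start nor end a run)."""
    runs, in_run = 0, False
    for prev, cur in zip(seq, seq[1:]):
        if cur < prev:
            if not in_run:
                runs += 1
            in_run = True
        elif cur > prev:
            in_run = False
    return runs


fcfs = [130, 95, 120, 110, 85, 140, 100, 125, 90, 115, 135, 105]  # hypothetical
mps = 4
order = rearrange(fcfs, mps)
new_speeds = [fcfs[i] for i in order]
print(new_speeds)
print("max shift:", max_position_shift(order))
print("descending runs:", count_descending_runs(new_speeds))
```

In this sample the rearranged sequence has one descending run per odd interval, each running from the maximum of the preceding even interval and its own interval down to the minimum of its own interval and the following even interval, as the proof describes.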

Accepting the above claim, the sequence dependent $E(\delta t^*)$, resulting from this rearrangement, equals the expected cost of a descending subsequence recurring every 2MPS - and using the expression 2.12 we have:

E(6t*)

=F