
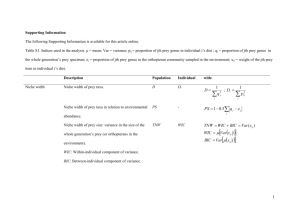


Mixed Model Analysis

Basic model:

$$Y = X\beta + Zu + e$$

where

$X$ is an $n \times p$ model matrix of known constants

$\beta$ is a $p \times 1$ vector of "fixed" unknown parameter values

$Z$ is an $n \times q$ model matrix of known constants

$u$ is a $q \times 1$ random vector

$e$ is an $n \times 1$ vector of random errors

with

$$E(e) = 0, \quad E(u) = 0, \quad Cov(e, u) = 0, \quad Var(e) = R, \quad Var(u) = G$$

Then

$$E(Y) = E(X\beta + Zu + e) = X\beta + Z\,E(u) + E(e) = X\beta$$

$$Var(Y) = Var(X\beta + Zu + e) = Var(Zu) + Var(e) = ZGZ^T + R$$

Normal-theory mixed model:

$$\begin{bmatrix} u \\ e \end{bmatrix} \sim N\left( \begin{bmatrix} 0 \\ 0 \end{bmatrix}, \begin{bmatrix} G & 0 \\ 0 & R \end{bmatrix} \right)$$

Then,

$$Y \sim N(X\beta, \; ZGZ^T + R)$$

and we call $ZGZ^T + R$ the matrix $\Sigma$.

Example 10.1: Random Block Effects

Comparison of four variants of a penicillin production process: Process A, Process B, Process C, and Process D. These are the levels of a "fixed" treatment factor.

Blocks correspond to different batches of an important raw material, corn steep liquor

Random sample of five batches

Split each batch into four parts:

- run each process on one part
- randomize the order in which the processes are run within each batch

Here, batch effects are considered as random block effects:

Batches are sampled from a population of many possible batches

To repeat this experiment you would need to use a different set of batches of raw material

Data Source: Box, Hunter & Hunter (1978), Statistics for Experimenters, Wiley & Sons, New York.

Data file: penclln.dat

SAS code: penclln.sas

S-PLUS code: penclln.spl

Model:

$$Y_{ij} = \mu + \alpha_i + \beta_j + e_{ij}$$

where

$Y_{ij}$ is the yield for the $i$-th process applied to the $j$-th batch

$\mu + \alpha_i$ is the mean yield for the $i$-th process, averaging across the entire population of possible batches

$\beta_j$ is a random batch effect, $\beta_j \sim NID(0, \sigma_\beta^2)$

$e_{ij}$ is a random error, $e_{ij} \sim NID(0, \sigma_e^2)$

and any $e_{ij}$ is independent of any $\beta_j$.

Then,

$$\mu_i = E(Y_{ij}) = E(\mu + \alpha_i + \beta_j + e_{ij}) = \mu + \alpha_i + E(\beta_j) + E(e_{ij}) = \mu + \alpha_i, \qquad i = 1, 2, 3, 4$$

represents the mean yield for the $i$-th process, averaging across all possible batches.

PROC GLM and PROC MIXED in SAS would fit a restricted model with $\alpha_4 = 0$. Then

$\mu = \mu_4$ is the mean yield for process D

$\alpha_i = \mu_i - \mu_4$, $i = 1, 2, 3, 4$.

In S-PLUS you could use the "treatment" constraints where $\alpha_1 = 0$. Then

$\mu = \mu_1$ is the mean yield for process A

$\alpha_i = \mu_i - \mu_1$, $i = 1, 2, 3, 4$.

Alternatively, you could choose the solution to the normal equations corresponding to the "sum" constraints where

$$\alpha_1 + \alpha_2 + \alpha_3 + \alpha_4 = 0$$

$\mu = (\mu_1 + \mu_2 + \mu_3 + \mu_4)/4$ is an overall mean yield

$\alpha_i = \mu_i - \mu$ is the difference between the mean yield for the $i$-th process and the overall mean yield.

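Variance-covariance structure:

$$\begin{aligned} Var(Y_{ij}) &= Var(\mu + \alpha_i + \beta_j + e_{ij}) \\ &= Var(\beta_j + e_{ij}) \\ &= Var(\beta_j) + Var(e_{ij}) \\ &= \sigma_\beta^2 + \sigma_e^2 \qquad \text{for all } (i, j) \end{aligned}$$

Runs on the same batch:

$$\begin{aligned} Cov(Y_{ij}, Y_{kj}) &= Cov(\mu + \alpha_i + \beta_j + e_{ij}, \; \mu + \alpha_k + \beta_j + e_{kj}) \\ &= Cov(\beta_j + e_{ij}, \; \beta_j + e_{kj}) \\ &= Cov(\beta_j, \beta_j) + Cov(\beta_j, e_{kj}) + Cov(e_{ij}, \beta_j) + Cov(e_{ij}, e_{kj}) \\ &= Var(\beta_j) \\ &= \sigma_\beta^2 \qquad \text{for all } i \neq k \end{aligned}$$

Correlation among yields for runs on the same batch:

$$\rho = \frac{Cov(Y_{ij}, Y_{kj})}{\sqrt{Var(Y_{ij})\,Var(Y_{kj})}} = \frac{\sigma_\beta^2}{\sigma_\beta^2 + \sigma_e^2} \qquad \text{for } i \neq k$$

Results for runs on different batches are uncorrelated (independent).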

Results from the four runs on a single batch:

$$Var\begin{bmatrix} Y_{1j} \\ Y_{2j} \\ Y_{3j} \\ Y_{4j} \end{bmatrix} = \begin{bmatrix} \sigma_\beta^2 + \sigma_e^2 & \sigma_\beta^2 & \sigma_\beta^2 & \sigma_\beta^2 \\ \sigma_\beta^2 & \sigma_\beta^2 + \sigma_e^2 & \sigma_\beta^2 & \sigma_\beta^2 \\ \sigma_\beta^2 & \sigma_\beta^2 & \sigma_\beta^2 + \sigma_e^2 & \sigma_\beta^2 \\ \sigma_\beta^2 & \sigma_\beta^2 & \sigma_\beta^2 & \sigma_\beta^2 + \sigma_e^2 \end{bmatrix} = \sigma_\beta^2 J + \sigma_e^2 I$$

where $J$ is a matrix of ones and $I$ is the identity matrix.

This special type of covariance structure is called compound symmetry

Write this model in the form $Y = X\beta + Zu + e$

Order the 20 observations by batch, with the four processes in order within each batch: $Y = (Y_{11}, Y_{21}, Y_{31}, Y_{41}, Y_{12}, \ldots, Y_{45})^T$. The four rows for batch $j$ are

$$\begin{bmatrix} Y_{1j} \\ Y_{2j} \\ Y_{3j} \\ Y_{4j} \end{bmatrix} = \begin{bmatrix} 1 & 1 & 0 & 0 & 0 \\ 1 & 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 1 & 0 \\ 1 & 0 & 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} \mu \\ \alpha_1 \\ \alpha_2 \\ \alpha_3 \\ \alpha_4 \end{bmatrix} + \begin{bmatrix} 1 \\ 1 \\ 1 \\ 1 \end{bmatrix} \beta_j + \begin{bmatrix} e_{1j} \\ e_{2j} \\ e_{3j} \\ e_{4j} \end{bmatrix}, \qquad j = 1, \ldots, 5$$

Stacking the five batches gives

$$X = 1_5 \otimes \begin{bmatrix} 1_4 & I_4 \end{bmatrix}, \quad \beta = (\mu, \alpha_1, \alpha_2, \alpha_3, \alpha_4)^T, \quad Z = I_5 \otimes 1_4, \quad u = (\beta_1, \beta_2, \beta_3, \beta_4, \beta_5)^T$$

so $X$ is $20 \times 5$, and column $j$ of the $20 \times 5$ matrix $Z$ has ones in the four rows for batch $j$ and zeros elsewhere.
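As a rough numerical check of the block structure of $ZGZ^T + R$ for this layout (5 batches, 4 processes per batch), the following sketch builds $Z = I_5 \otimes 1_4$ and assembles the covariance matrix with plain Python lists. It assumes $G = \sigma_\beta^2 I$ and $R = \sigma_e^2 I$; the variance values and the helper names (`matmul`, `transpose`, `identity`) are illustrative choices, not part of the original notes.

```python
# Sketch: Var(Y) = Z G Z^T + R for 5 batches with 4 runs each.
# The variance values below are hypothetical placeholders.

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(a):
    """Transpose a matrix stored as a list of rows."""
    return [list(col) for col in zip(*a)]

def identity(n, scale=1.0):
    """Return scale * I_n."""
    return [[scale if i == j else 0.0 for j in range(n)] for i in range(n)]

n_batches, n_processes = 5, 4
n = n_batches * n_processes
var_batch, var_error = 2.0, 3.0  # hypothetical sigma_beta^2 and sigma_e^2

# Z = I_5 (kron) 1_4: the row for (batch j, process i) has a one in column j.
Z = [[1.0 if row // n_processes == j else 0.0 for j in range(n_batches)]
     for row in range(n)]
G = identity(n_batches, var_batch)
R = identity(n, var_error)

ZGZt = matmul(matmul(Z, G), transpose(Z))
V = [[ZGZt[i][j] + R[i][j] for j in range(n)] for i in range(n)]

# The leading 4 x 4 block should equal var_batch * J + var_error * I.
for row in V[:n_processes]:
    print(row[:n_processes])
```

Entries of `V` linking runs from different batches are zero, which matches the statement that results from different batches are uncorrelated.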

Here

$$G = Var(u) = \sigma_\beta^2 I_{5 \times 5}, \qquad R = Var(e) = \sigma_e^2 I_{n \times n}$$

and

$$\begin{aligned} Var(Y) &= Var(X\beta + Zu + e) \\ &= Var(Zu) + Var(e) \\ &= ZGZ^T + R \\ &= \sigma_\beta^2 ZZ^T + \sigma_e^2 I \\ &= \begin{bmatrix} \sigma_\beta^2 J + \sigma_e^2 I & & \\ & \ddots & \\ & & \sigma_\beta^2 J + \sigma_e^2 I \end{bmatrix} \end{aligned}$$

Example 10.2: Hierarchical Random Effects Model

Analysis of sources of variation in a process used to monitor the production of a pigment paste.

Current Procedure:

Sample barrels of pigment paste

Take one sample from each barrel

Send the sample to a lab for determination of moisture content

Measured Response: (Y) moisture content of the pigment paste (units of one tenth of 1%).

Current status: Moisture content varied about a mean of approximately 25 (or 2.5%) with standard deviation of about 6

Problem: Variation in moisture content was regarded as excessive.

Sources of Variation

Data Collection: Hierarchical (or nested) Study Design

Sampled b barrels of pigment paste

s samples are taken from the content of each barrel

Each sample is mixed and divided into r parts. Each part is sent to the lab.

There are n = (b)(s)(r) observations.

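Model:

$$Y_{ijk} = \mu + \beta_i + \delta_{ij} + e_{ijk}$$

where

$Y_{ijk}$ is the moisture content determination for the $k$-th part of the $j$-th sample from the $i$-th barrel

$\mu$ is the overall mean moisture content

$\beta_i$ is a random barrel effect, $\beta_i \sim NID(0, \sigma_\beta^2)$

$\delta_{ij}$ is a random sample effect, $\delta_{ij} \sim NID(0, \sigma_\delta^2)$

$e_{ijk}$ corresponds to random measurement (lab analysis) error, $e_{ijk} \sim NID(0, \sigma_e^2)$

Covariance structure:

$$\begin{aligned} Var(Y_{ijk}) &= Var(\mu + \beta_i + \delta_{ij} + e_{ijk}) \\ &= Var(\beta_i) + Var(\delta_{ij}) + Var(e_{ijk}) \\ &= \sigma_\beta^2 + \sigma_\delta^2 + \sigma_e^2 \end{aligned}$$

(the objective is to estimate these variance components)

Two parts of one sample:

$$\begin{aligned} Cov(Y_{ijk}, Y_{ij\ell}) &= Cov(\mu + \beta_i + \delta_{ij} + e_{ijk}, \; \mu + \beta_i + \delta_{ij} + e_{ij\ell}) \\ &= Cov(\beta_i, \beta_i) + Cov(\delta_{ij}, \delta_{ij}) \\ &= \sigma_\beta^2 + \sigma_\delta^2 \qquad \text{for } k \neq \ell \end{aligned}$$

Observations from different samples taken from the same barrel:

$$Cov(Y_{ijk}, Y_{im\ell}) = Cov(\beta_i, \beta_i) = \sigma_\beta^2, \qquad j \neq m$$

Observations from different barrels:

$$Cov(Y_{ijk}, Y_{cm\ell}) = 0, \qquad i \neq c$$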

Write this model in the form:

$$Y = X\beta + Zu + e$$

In this study

b = 15 barrels were sampled

s = 2 samples were taken from each barrel

r = 2 sub-samples were analyzed from each sample taken from each barrel

Data file: pigment.dat

SAS code: pigment.sas

S-PLUS code: pigment.spl

With the observations ordered as $Y = (Y_{111}, Y_{112}, Y_{121}, Y_{122}, Y_{211}, \ldots, Y_{15,2,1}, Y_{15,2,2})^T$, the four rows for barrel $i$ are

$$\begin{bmatrix} Y_{i11} \\ Y_{i12} \\ Y_{i21} \\ Y_{i22} \end{bmatrix} = \begin{bmatrix} 1 \\ 1 \\ 1 \\ 1 \end{bmatrix} \mu + \begin{bmatrix} 1 \\ 1 \\ 1 \\ 1 \end{bmatrix} \beta_i + \begin{bmatrix} 1 & 0 \\ 1 & 0 \\ 0 & 1 \\ 0 & 1 \end{bmatrix} \begin{bmatrix} \delta_{i1} \\ \delta_{i2} \end{bmatrix} + \begin{bmatrix} e_{i11} \\ e_{i12} \\ e_{i21} \\ e_{i22} \end{bmatrix}, \qquad i = 1, \ldots, 15$$

Stacking the 15 barrels gives

$$Y = 1_{60}\,\mu + Zu + e, \qquad Z = \begin{bmatrix} I_{15} \otimes 1_4 & I_{30} \otimes 1_2 \end{bmatrix}, \qquad u = (\beta_1, \beta_2, \ldots, \beta_{15}, \delta_{1,1}, \delta_{1,2}, \delta_{2,1}, \delta_{2,2}, \ldots, \delta_{15,1}, \delta_{15,2})^T$$

so $X = 1_{60}$, $\beta = \mu$, and $Z$ is a $60 \times 45$ matrix of zeros and ones.

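where

$$R = Var(e) = \sigma_e^2 I, \qquad G = Var(u) = \begin{bmatrix} \sigma_\beta^2 I_b & 0 \\ 0 & \sigma_\delta^2 I_{bs} \end{bmatrix}$$

Then

$$E(Y) = X\beta = 1\mu$$

$$\begin{aligned} Var(Y) = \Sigma &= ZGZ^T + R \\ &= Z \begin{bmatrix} \sigma_\beta^2 I_b & 0 \\ 0 & \sigma_\delta^2 I_{bs} \end{bmatrix} Z^T + \sigma_e^2 I_{bsr} \\ &= \sigma_\beta^2 (I_b \otimes J_{sr}) + \sigma_\delta^2 (I_{bs} \otimes J_r) + \sigma_e^2 I_{bsr} \end{aligned}$$

because

$$Z = \begin{bmatrix} I_b \otimes 1_{sr} & I_{bs} \otimes 1_r \end{bmatrix}$$

where $1_{sr}$ is an $(sr) \times 1$ vector of ones and $1_r$ is an $r \times 1$ vector of ones.

Analysis of Mixed Linear Models

$$Y = X\beta + Zu + e$$

where $X_{n \times p}$ and $Z_{n \times q}$ are known model matrices and

$$\begin{bmatrix} u \\ e \end{bmatrix} \sim N\left( \begin{bmatrix} 0 \\ 0 \end{bmatrix}, \begin{bmatrix} G & 0 \\ 0 & R \end{bmatrix} \right)$$

Then

$$Y \sim N(X\beta, \Sigma)$$

where

$$\Sigma = ZGZ^T + R$$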

Some objectives:

(i) Inferences about estimable functions of fixed effects

- Point estimates
- Confidence intervals
- Tests of hypotheses

(ii) Estimation of variance components (elements of G and R)

(iii) Predictions of random effects

(iv) Predictions of future observations

Methods of Estimation

I. Ordinary Least Squares Estimation:

For the linear model

$$Y_j = \beta_0 + \beta_1 X_{1j} + \cdots + \beta_r X_{rj} + \epsilon_j, \qquad j = 1, \ldots, n$$

an OLS estimator for $\beta = (\beta_0, \ldots, \beta_r)^T$ is any $b = (b_0, \ldots, b_r)^T$ that minimizes

$$Q(b) = \sum_{j=1}^{n} \left[ Y_j - b_0 - b_1 X_{1j} - \cdots - b_r X_{rj} \right]^2$$

Normal equations (estimating equations):

$$\frac{\partial Q(b)}{\partial b_0} = -2 \sum_{j=1}^{n} (Y_j - b_0 - b_1 X_{1j} - \cdots - b_r X_{rj}) = 0$$

$$\frac{\partial Q(b)}{\partial b_i} = -2 \sum_{j=1}^{n} X_{ij} (Y_j - b_0 - b_1 X_{1j} - \cdots - b_r X_{rj}) = 0 \qquad \text{for } i = 1, 2, \ldots, r$$

The matrix form of these equations is

$$(X^T X) b = X^T Y$$

and solutions have the form

$$b = (X^T X)^{-} X^T Y$$

The Gauss-Markov Theorem (Result 3.11) cannot be applied because it requires uncorrelated responses, i.e.,

$$Var(Y) = \sigma^2 I$$

Hence, the OLS estimator of an estimable function $C^T\beta$ is not necessarily a best linear unbiased estimator (b.l.u.e.).

The OLS estimator for $C^T\beta$ is

$$C^T b = C^T (X^T X)^{-} X^T Y$$

where $b = (X^T X)^{-} X^T Y$ is a solution to the normal equations.

The OLS estimator $C^T b$ is a linear function of $Y$.

$$E(C^T b) = C^T \beta$$

$$Var(C^T b) = C^T (X^T X)^{-} X^T (ZGZ^T + R) X (X^T X)^{-} C$$

If $Y \sim N(X\beta, ZGZ^T + R)$, then $C^T b$ has a

$$N\left( C^T\beta, \; C^T (X^T X)^{-} X^T (ZGZ^T + R) X (X^T X)^{-} C \right)$$

distribution.

II. Generalized least squares (GLS) estimation:

Suppose

$$E(Y) = X\beta$$

and

$$\Sigma = Var(Y) = ZGZ^T + R$$

is known. Then a GLS estimator for $\beta$ is any $b$ that minimizes

$$Q(b) = (Y - Xb)^T \Sigma^{-1} (Y - Xb)$$

(from Defn. 3.8). The estimating equations are:

$$(X^T \Sigma^{-1} X) b = X^T \Sigma^{-1} Y$$

and

$$b_{GLS} = (X^T \Sigma^{-1} X)^{-} (X^T \Sigma^{-1} Y)$$

is a solution.

For any estimable function $C^T\beta$, the unique b.l.u.e. is

$$C^T b_{GLS} = C^T (X^T \Sigma^{-1} X)^{-} X^T \Sigma^{-1} Y$$

with

$$Var(C^T b_{GLS}) = C^T (X^T \Sigma^{-1} X)^{-} X^T \Sigma^{-1} X \left[ (X^T \Sigma^{-1} X)^{-} \right]^T C$$

(from Result 3.12).

If $Y \sim N(X\beta, \Sigma)$, then

$$C^T b_{GLS} \sim N\left( C^T\beta, \; C^T (X^T \Sigma^{-1} X)^{-} C \right)$$

When G and/or R contain unknown parameters, you could obtain an "approximate BLUE" by replacing the unknown parameters with consistent estimators to obtain

$$\hat\Sigma = Z\hat{G}Z^T + \hat{R}$$

and

$$C^T \hat{b}_{GLS} = C^T (X^T \hat\Sigma^{-1} X)^{-} X^T \hat\Sigma^{-1} Y$$

$C^T \hat{b}_{GLS}$ is not a linear function of $Y$

$C^T \hat{b}_{GLS}$ is not a best linear unbiased estimator (BLUE)

See Kackar and Harville (1981, 1984) for conditions under which $C^T \hat{b}_{GLS}$ is an unbiased estimator for $C^T\beta$

$C^T (X^T \hat\Sigma^{-1} X)^{-} C$ tends to "underestimate" $Var(C^T \hat{b}_{GLS})$ (see Eaton (1984))

For "large" samples, approximately,

$$C^T \hat{b}_{GLS} \sim N\left( C^T\beta, \; C^T (X^T \Sigma^{-1} X)^{-} C \right)$$

Variance component estimation:

Estimation of parameters in G and R

- Crucial to the estimation of estimable functions of fixed effects (e.g. $E(Y) = X\beta$)
- Of interest in its own right (sources of variation in the pigment paste production example)

Basic approaches:

(i) ANOVA methods (method of moments): Set observed values of mean squares equal to their expectations and solve the resulting equations.

(ii) Maximum likelihood estimation (ML)

(iii) Restricted maximum likelihood estimation (REML)

ANOVA method (method of moments):

- Compute an ANOVA table
- Equate mean squares to their expected values
- Solve the resulting equations

Example 10.1 Penicillin production

$$Y_{ij} = \mu + \alpha_i + \beta_j + e_{ij}$$

where $\beta_j \sim NID(0, \sigma_\beta^2)$ and $e_{ij} \sim NID(0, \sigma_e^2)$

| Source of Variation | d.f. | Sums of Squares |
|---|---|---|
| Blocks | 4 | $a \sum_{j=1}^{b} (\bar{Y}_{.j} - \bar{Y}_{..})^2 = SS_{blocks}$ |
| Processes | 3 | $b \sum_{i=1}^{a} (\bar{Y}_{i.} - \bar{Y}_{..})^2 = SS_{processes}$ |
| Error | 12 | $\sum_{i=1}^{a} \sum_{j=1}^{b} (Y_{ij} - \bar{Y}_{i.} - \bar{Y}_{.j} + \bar{Y}_{..})^2 = SSE$ |
| C. total | 19 | $\sum_{i=1}^{a} \sum_{j=1}^{b} (Y_{ij} - \bar{Y}_{..})^2$ |

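Start at the bottom:

$$MS_{error} = \frac{SSE}{(a-1)(b-1)}, \qquad E(MS_{error}) = \sigma_e^2$$

Then an unbiased estimator for $\sigma_e^2$ is

$$\hat\sigma_e^2 = MS_{error}$$

Next, consider the mean square for the random block effects:

$$MS_{blocks} = \frac{SS_{blocks}}{b-1}, \qquad E(MS_{blocks}) = \sigma_e^2 + a\sigma_\beta^2$$

where $a$ is the number of observations for each block.

Then,

$$\sigma_\beta^2 = \frac{E(MS_{blocks}) - \sigma_e^2}{a} = \frac{E(MS_{blocks}) - E(MS_{error})}{a}$$

and an unbiased estimator for $\sigma_\beta^2$ is

$$\hat\sigma_\beta^2 = \frac{MS_{blocks} - MS_{error}}{a}$$

For the penicillin data

$$\hat\sigma_e^2 = MS_{error} = 18.83$$

$$\hat\sigma_\beta^2 = \frac{MS_{blocks} - MS_{error}}{4} = \frac{66.0 - 18.83}{4} = 11.79$$

$$\widehat{Var}(Y_{ij}) = \hat\sigma_\beta^2 + \hat\sigma_e^2 = 11.79 + 18.83 = 30.62$$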
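The arithmetic of the ANOVA (method of moments) estimates can be sketched in a few lines. The sums of squares used here (264.0 for batches and 226.0 for error) are the values reported in the PROC GLM output for these data; the variable names are illustrative, and the intraclass correlation is simply the estimated version of $\sigma_\beta^2 / (\sigma_\beta^2 + \sigma_e^2)$ from the covariance structure of this model.

```python
# Sketch: method-of-moments variance component estimates, penicillin example.
a, b = 4, 5                           # processes per batch, number of batches
ss_blocks, ss_error = 264.0, 226.0    # sums of squares from the ANOVA table

ms_blocks = ss_blocks / (b - 1)
ms_error = ss_error / ((a - 1) * (b - 1))

# E(MS_error) = sigma_e^2 and E(MS_blocks) = sigma_e^2 + a * sigma_beta^2,
# so equate observed mean squares to expectations and solve.
var_error_hat = ms_error
var_batch_hat = (ms_blocks - ms_error) / a
var_total_hat = var_batch_hat + var_error_hat

# Estimated correlation between two runs on the same batch.
icc_hat = var_batch_hat / var_total_hat

print(f"MS_blocks = {ms_blocks:.2f}, MS_error = {ms_error:.2f}")
print(f"sigma_e^2 estimate    = {var_error_hat:.2f}")
print(f"sigma_beta^2 estimate = {var_batch_hat:.2f}")
print(f"Var(Y_ij) estimate    = {var_total_hat:.2f}")
print(f"within-batch correlation estimate = {icc_hat:.3f}")
```

Note that `var_batch_hat` would be negative whenever `ms_blocks < ms_error`, which is one known drawback of the ANOVA method.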

/* This is a program for analyzing the penicillin
   data from Box, Hunter, and Hunter. It is
   stored in the file
   penclln.sas

   First enter the data */

data set1;
  infile '/home/kkoehler/st511/penclln.dat';
  input batch process $ yield;
run;

/* Compute the ANOVA table, formulas for
   expectations of mean squares, process
   means and their standard errors */

proc glm data=set1;
  class batch process;
  model yield = batch process / e e3;
  random batch / q test;
  lsmeans process / stderr pdiff tdiff;
  output out=set2 r=resid p=yhat;
run;

/* Compute a normal probability plot for
   the residuals and the Shapiro-Wilk test
   for normality */

proc rank data=set2 normal=blom out=set2;
  var resid; ranks q;
run;

proc univariate data=set2 normal plot;
  var resid;
run;

filename graffile pipe 'lpr -Dpostscript';
goptions gsfmode=replace gsfname=graffile
         cback=white colors=(black)
         targetdevice=ps300 rotate=landscape;

axis1 label=(h=2.5 r=0 a=90 f=swiss 'Residuals')
      value=(f=swiss h=2.0) w=3.0 length=5.0 in;

axis2 label=(h=2.5 f=swiss 'Standard Normal Quantiles')
      value=(f=swiss h=2.0) w=3.0 length=5.0 in;

axis3 label=(h=2.5 f=swiss 'Production Process')
      value=(f=swiss h=2.0) w=3.0 length=5.0 in;

symbol1 v=circle i=none h=2 w=3 c=black;

proc gplot data=set2;
  plot resid*q / vaxis=axis1 haxis=axis2;
  title h=3.5 f=swiss c=black
        'Normal Probability Plot';
run;

proc gplot data=set2;
  plot resid*process / vaxis=axis1 haxis=axis3;
  title h=3.5 f=swiss c=black 'Residual Plot';
run;

/* Fit the same model using PROC MIXED. Compute
   REML estimates of variance components. Note
   that PROC MIXED provides appropriate standard
   errors for process means. When block effects
   are random, PROC GLM does not provide correct
   standard errors for process means. */

proc mixed data=set1;
  class process batch;
  model yield = process / ddfm=satterth solution;
  random batch / solution cl alpha=.05;
  lsmeans process / pdiff tdiff;
run;

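General Linear Models Procedure

Class Level Information

| Class | Levels | Values |
|---|---|---|
| BATCH | 5 | 1 2 3 4 5 |
| PROCESS | 4 | A B C D |

Number of observations in data set = 20

General Form of Estimable Functions

| Effect | Level | Coefficients |
|---|---|---|
| INTERCEPT | | L1 |
| BATCH | 1 | L2 |
| BATCH | 2 | L3 |
| BATCH | 3 | L4 |
| BATCH | 4 | L5 |
| BATCH | 5 | L1-L2-L3-L4-L5 |
| PROCESS | A | L7 |
| PROCESS | B | L8 |
| PROCESS | C | L9 |
| PROCESS | D | L1-L7-L8-L9 |

Type III Estimable Functions for: BATCH

| Effect | Level | Coefficients |
|---|---|---|
| INTERCEPT | | 0 |
| BATCH | 1 | L2 |
| BATCH | 2 | L3 |
| BATCH | 3 | L4 |
| BATCH | 4 | L5 |
| BATCH | 5 | -L2-L3-L4-L5 |
| PROCESS | A | 0 |
| PROCESS | B | 0 |
| PROCESS | C | 0 |
| PROCESS | D | 0 |

Type III Estimable Functions for: PROCESS

| Effect | Level | Coefficients |
|---|---|---|
| INTERCEPT | | 0 |
| BATCH | 1 | 0 |
| BATCH | 2 | 0 |
| BATCH | 3 | 0 |
| BATCH | 4 | 0 |
| BATCH | 5 | 0 |
| PROCESS | A | L7 |
| PROCESS | B | L8 |
| PROCESS | C | L9 |
| PROCESS | D | -L7-L8-L9 |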

Dependent Variable: YIELD

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 7 | 334.00 | 47.71 | 2.53 | 0.0754 |
| Error | 12 | 226.00 | 18.83 | | |
| Cor. Total | 19 | 560.00 | | | |

| R-Square | C.V. | Root MSE | YIELD Mean |
|---|---|---|---|
| 0.596 | 5.046 | 4.3397 | 86.0 |

| Source | DF | Type III SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| BATCH | 4 | 264.0 | 66.0 | 3.50 | 0.0407 |
| PROCESS | 3 | 70.0 | 23.3 | 1.24 | 0.3387 |

Quadratic Forms of Fixed Effects in the Expected Mean Squares

Source: Type III Mean Square for PROCESS

| | PROCESS A | PROCESS B | PROCESS C | PROCESS D |
|---|---|---|---|---|
| PROCESS A | 3.750 | -1.250 | -1.250 | -1.250 |
| PROCESS B | -1.250 | 3.750 | -1.250 | -1.250 |
| PROCESS C | -1.250 | -1.250 | 3.750 | -1.250 |
| PROCESS D | -1.250 | -1.250 | -1.250 | 3.750 |

| Source | Type III Expected Mean Square |
|---|---|
| BATCH | Var(Error) + 4 Var(BATCH) |
| PROCESS | Var(Error) + Q(PROCESS) |

Tests of Hypotheses for Mixed Model Analysis of Variance

Dependent Variable: YIELD

| Source | Error term | DF | Type III MS | Denominator DF | Denominator MS | F Value | Pr > F |
|---|---|---|---|---|---|---|---|
| BATCH | MS(Error) | 4 | 66 | 12 | 18.83 | 3.5044 | 0.0407 |
| PROCESS | MS(Error) | 3 | 23.33 | 12 | 18.83 | 1.2389 | 0.3387 |

Least Squares Means

| PROCESS | YIELD LSMEAN | Std Error | Pr > \|T\| | LSMEAN Number |
|---|---|---|---|---|
| A | 84 | 1.941 | 0.0001 | 1 |
| B | 85 | 1.941 | 0.0001 | 2 |
| C | 89 | 1.941 | 0.0001 | 3 |
| D | 86 | 1.941 | 0.0001 | 4 |

t-tests / p-values (row i versus column j; each cell shows the t statistic with its p-value in parentheses)

| i/j | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1 | . | -0.364 (0.722) | -1.822 (0.093) | -0.729 (0.480) |
| 2 | 0.364 (0.722) | . | -1.457 (0.171) | -0.364 (0.722) |
| 3 | 1.822 (0.094) | 1.457 (0.171) | . | 1.093 (0.296) |
| 4 | 0.729 (0.480) | 0.364 (0.723) | -1.093 (0.296) | . |

The MIXED Procedure

Class Level Information

| Class | Levels | Values |
|---|---|---|
| PROCESS | 4 | A B C D |
| BATCH | 5 | 1 2 3 4 5 |

REML Estimation Iteration History

| Iteration | Evaluations | Objective | Criterion |
|---|---|---|---|
| 0 | 1 | 77.18681835 | |
| 1 | 1 | 74.42391081 | 0.00000000 |

Convergence criteria met.

Covariance Parameter Estimates (REML)

| Cov Parm | Ratio | Estimate | Std Error | Z | Pr > \|Z\| |
|---|---|---|---|---|---|
| BATCH | 0.6261 | 11.79167 | 11.8245 | 1.00 | 0.3187 |
| Residual | 1.0000 | 18.83333 | 7.6887 | 2.45 | 0.0143 |

Model Fitting Information for YIELD

| Description | Value |
|---|---|
| Observations | 20.0000 |
| Variance Estimate | 18.8333 |
| Standard Deviation Estimate | 4.3397 |
| REML Log Likelihood | -51.9150 |
| Akaike's Information Criterion | -53.9150 |
| Schwarz's Bayesian Criterion | -54.6876 |
| -2 REML Log Likelihood | 103.8299 |

Tests of Fixed Effects

| Source | NDF | DDF | Type III F | Pr > F |
|---|---|---|---|---|
| PROCESS | 3 | 12 | 1.24 | 0.3387 |

Least Squares Means

| Level | LSMEAN | Std Error | DDF | T | Pr > \|T\| |
|---|---|---|---|---|---|
| PROCESS A | 84.00000000 | 2.47487373 | 11.1 | 33.94 | 0.0001 |
| PROCESS B | 85.00000000 | 2.47487373 | 11.1 | 34.35 | 0.0001 |
| PROCESS C | 89.00000000 | 2.47487373 | 11.1 | 35.96 | 0.0001 |
| PROCESS D | 86.00000000 | 2.47487373 | 11.1 | 34.75 | 0.0001 |

Differences of Least Squares Means

| Level 1 | Level 2 | Diff. | Std Error | DDF | T | Pr > \|T\| |
|---|---|---|---|---|---|---|
| PROCESS A | PROCESS B | -1.000 | 2.74469 | 12 | -0.36 | 0.7219 |
| PROCESS A | PROCESS C | -5.000 | 2.74469 | 12 | -1.82 | 0.0935 |
| PROCESS A | PROCESS D | -2.000 | 2.74469 | 12 | -0.73 | 0.4802 |
| PROCESS B | PROCESS C | -4.000 | 2.74469 | 12 | -1.46 | 0.1707 |
| PROCESS B | PROCESS D | -1.000 | 2.74469 | 12 | -0.36 | 0.7219 |
| PROCESS C | PROCESS D | 3.000 | 2.74469 | 12 | 1.09 | 0.2958 |

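Inferences about treatment means:

$$Y_{ij} = \mu + \alpha_i + \beta_j + e_{ij}$$

Consider the sample mean $\bar{Y}_{i.} = \frac{1}{b} \sum_{j=1}^{b} Y_{ij}$

$$E(\bar{Y}_{i.}) = \begin{cases} \mu + \alpha_i & \text{for random block effects, } \beta_j \sim NID(0, \sigma_\beta^2) \\ \mu + \alpha_i + \frac{1}{b} \sum_{j=1}^{b} \beta_j & \text{for fixed block effects} \end{cases}$$

and

$$Var(\bar{Y}_{i.}) = Var\left( \frac{1}{b} \sum_{j=1}^{b} Y_{ij} \right) = \frac{1}{b^2} \sum_{j=1}^{b} Var(Y_{ij}) = \begin{cases} \frac{1}{b} (\sigma_e^2 + \sigma_\beta^2) & \text{random block effects} \\ \frac{1}{b} \sigma_e^2 & \text{fixed block effects} \end{cases}$$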

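Variance estimates & confidence intervals:

Fixed additive block effects:

$$S^2_{\bar{Y}_{i.}} = \frac{1}{b} \hat\sigma_e^2 = \frac{1}{b} MS_{error}$$

The standard error for $\bar{Y}_{i.}$ is

$$S_{\bar{Y}_{i.}} = \sqrt{\frac{1}{b} MS_{error}} = 1.941$$

A $(1 - \alpha) \times 100\%$ confidence interval for

$$\mu + \alpha_i + \frac{1}{b} \sum_{j=1}^{b} \beta_j$$

is

$$\bar{Y}_{i.} \pm t_{(a-1)(b-1), \alpha/2} \sqrt{\frac{1}{b} MS_{error}}$$

where $(a-1)(b-1)$ is the degrees of freedom for $SS_{error}$.

t-tests:

Reject $H_0: \mu + \alpha_i + \frac{1}{b} \sum_{j=1}^{b} \beta_j = d$ if

$$|t| = \frac{|\bar{Y}_{i.} - d|}{\sqrt{\frac{1}{b} MS_{error}}} > t_{(a-1)(b-1), \alpha/2}$$

where $(a-1)(b-1)$ is the degrees of freedom for $SS_{error}$.

This is what is done by the LSMEANS option in the GLM procedure in SAS, even when you specify

RANDOM BATCH;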

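Models with random additive block effects:

$$\begin{aligned} S^2_{\bar{Y}_{i.}} &= \frac{1}{b} (\hat\sigma_e^2 + \hat\sigma_\beta^2) \\ &= \frac{1}{b} \left( MS_{error} + \frac{MS_{blocks} - MS_{error}}{a} \right) \\ &= \frac{a-1}{ab} MS_{error} + \frac{1}{ab} MS_{blocks} \\ &= \frac{1}{ab(b-1)} \left[ SS_{error} + SS_{blocks} \right] \end{aligned}$$

where $SS_{error} \sim \sigma_e^2 \chi^2_{(a-1)(b-1)}$ and $SS_{blocks} \sim (\sigma_e^2 + a\sigma_\beta^2) \chi^2_{(b-1)}$.

Hence, the distribution of $S^2_{\bar{Y}_{i.}}$ is not a multiple of a central chi-square random variable.

Standard error for $\bar{Y}_{i.}$ is

$$S_{\bar{Y}_{i.}} = \sqrt{\frac{a-1}{ab} MS_{error} + \frac{1}{ab} MS_{blocks}} = 2.4749$$

An approximate $(1 - \alpha) \times 100\%$ confidence interval for $\mu + \alpha_i$ is

$$\bar{Y}_{i.} \pm t_{\nu, \alpha/2} \sqrt{\frac{a-1}{ab} MS_{error} + \frac{1}{ab} MS_{blocks}}$$

where

$$\nu = \frac{\left[ \frac{a-1}{ab} MS_{error} + \frac{1}{ab} MS_{blocks} \right]^2}{\dfrac{\left[ \frac{a-1}{ab} MS_{error} \right]^2}{(a-1)(b-1)} + \dfrac{\left[ \frac{1}{ab} MS_{blocks} \right]^2}{b-1}}$$

For the penicillin data, with $a = 4$ and $b = 5$,

$$\nu = \frac{\left[ \frac{4-1}{(4)(5)} MS_{error} + \frac{1}{(4)(5)} MS_{blocks} \right]^2}{\dfrac{\left[ \frac{3}{20} MS_{error} \right]^2}{12} + \dfrac{\left[ \frac{1}{20} MS_{blocks} \right]^2}{4}} = 11.075$$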
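The two standard errors for a process mean and the approximate degrees of freedom above can be checked with a short sketch. The mean squares are taken from the ANOVA table for the penicillin data; the function name `satterthwaite_df` and the variable names are illustrative choices rather than anything defined in these notes.

```python
import math

def satterthwaite_df(coefs, mean_squares, dfs):
    """Approximate d.f. for sum(a_i * MS_i), each MS_i having dfs[i] d.f."""
    terms = [c * ms for c, ms in zip(coefs, mean_squares)]
    return sum(terms) ** 2 / sum(t ** 2 / d for t, d in zip(terms, dfs))

a, b = 4, 5                              # processes, batches
ms_error, ms_blocks = 226.0 / 12, 66.0   # from the ANOVA table

# Fixed block effects: Var(Ybar_i.) is estimated by MS_error / b.
se_fixed = math.sqrt(ms_error / b)

# Random block effects: (a-1)/(ab) * MS_error + 1/(ab) * MS_blocks.
coefs = [(a - 1) / (a * b), 1 / (a * b)]
mean_squares = [ms_error, ms_blocks]
dfs = [(a - 1) * (b - 1), b - 1]
var_random = sum(c * ms for c, ms in zip(coefs, mean_squares))
se_random = math.sqrt(var_random)
nu = satterthwaite_df(coefs, mean_squares, dfs)

print(f"SE, fixed blocks:  {se_fixed:.4f}")
print(f"SE, random blocks: {se_random:.4f}")
print(f"approximate d.f.:  {nu:.3f}")
```

These values correspond to the standard errors reported by LSMEANS in PROC GLM (fixed-block version) and in PROC MIXED with `ddfm=satterth` (random-block version).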

Result 10.1: Cochran-Satterthwaite approximation

Suppose $MS_1, MS_2, \ldots, MS_k$ are mean squares with

- independent distributions
- degrees of freedom $= df_i$
- $\dfrac{(df_i) MS_i}{E(MS_i)} \sim \chi^2_{df_i}$

Then, for positive constants

$$a_i > 0, \qquad i = 1, 2, \ldots, k$$

the distribution of

$$S^2 = a_1 MS_1 + a_2 MS_2 + \cdots + a_k MS_k$$

is approximated by

$$\frac{V S^2}{E(S^2)} \;\dot\sim\; \chi^2_V$$

where

$$V = \frac{[E(S^2)]^2}{\dfrac{[a_1 E(MS_1)]^2}{df_1} + \cdots + \dfrac{[a_k E(MS_k)]^2}{df_k}}$$

is the value for the degrees of freedom.

In practice, the degrees of freedom are evaluated as

$$V = \frac{[S^2]^2}{\dfrac{(a_1 MS_1)^2}{df_1} + \cdots + \dfrac{(a_k MS_k)^2}{df_k}}$$

These are called the Cochran-Satterthwaite degrees of freedom.

Cochran, W.G. (1951) Testing a Linear Relation among Variances, Biometrics 7, 17-32.

Difference between two treatment means:

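$$\begin{aligned} E(\bar{Y}_{i.} - \bar{Y}_{k.}) &= E\left( \frac{1}{b} \sum_{j=1}^{b} Y_{ij} - \frac{1}{b} \sum_{j=1}^{b} Y_{kj} \right) \\ &= E\left( \frac{1}{b} \sum_{j=1}^{b} (Y_{ij} - Y_{kj}) \right) \\ &= E\left( \frac{1}{b} \sum_{j=1}^{b} (\mu + \alpha_i + \beta_j + e_{ij} - \mu - \alpha_k - \beta_j - e_{kj}) \right) \\ &= \alpha_i - \alpha_k + \frac{1}{b} \sum_{j=1}^{b} E(e_{ij} - e_{kj}) \qquad \text{(the last term is zero)} \\ &= \alpha_i - \alpha_k \\ &= (\mu + \alpha_i) - (\mu + \alpha_k) \end{aligned}$$

whether block effects are fixed or random.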

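$$\begin{aligned} Var(\bar{Y}_{i.} - \bar{Y}_{k.}) &= Var\left( \alpha_i - \alpha_k + \frac{1}{b} \sum_{j=1}^{b} (e_{ij} - e_{kj}) \right) \\ &= \frac{1}{b^2} \sum_{j=1}^{b} Var(e_{ij} - e_{kj}) \\ &= \frac{2\sigma_e^2}{b} \end{aligned}$$

The standard error for $\bar{Y}_{i.} - \bar{Y}_{k.}$ is

$$S_{\bar{Y}_{i.} - \bar{Y}_{k.}} = \sqrt{\frac{2 MS_{error}}{b}}$$

A $(1 - \alpha) \times 100\%$ confidence interval for $\alpha_i - \alpha_k$ is

$$(\bar{Y}_{i.} - \bar{Y}_{k.}) \pm t_{(a-1)(b-1), \alpha/2} \sqrt{\frac{2 MS_{error}}{b}}$$

where $(a-1)(b-1)$ is the d.f. for $MS_{error}$.

t-test:

Reject $H_0: \alpha_i - \alpha_k = 0$ if

$$|t| = \frac{|\bar{Y}_{i.} - \bar{Y}_{k.}|}{\sqrt{\frac{2 MS_{error}}{b}}} > t_{(a-1)(b-1), \alpha/2}$$

where $(a-1)(b-1)$ is the d.f. for $MS_{error}$.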
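As an illustration of the formulas for a difference between two process means, the sketch below recomputes the standard error and t statistics for all pairwise comparisons in the penicillin example. The process means and $MS_{error}$ are taken from the SAS output; the dictionary layout and variable names are illustrative.

```python
import math
from itertools import combinations

# Least squares means for the four processes (from the SAS output).
means = {"A": 84.0, "B": 85.0, "C": 89.0, "D": 86.0}
ms_error = 226.0 / 12    # error mean square with (a-1)(b-1) = 12 d.f.
b = 5                    # number of batches

# Block effects cancel in Ybar_i. - Ybar_k., so only MS_error is needed.
se_diff = math.sqrt(2 * ms_error / b)

t_stats = {
    (p, q): (means[p] - means[q]) / se_diff
    for p, q in combinations(sorted(means), 2)
}

print(f"standard error of a difference: {se_diff:.5f}")
for (p, q), t in t_stats.items():
    print(f"{p} - {q}: diff = {means[p] - means[q]:6.3f}, t = {t:6.2f}")
```

Because the batch effects cancel, this standard error is the same whether blocks are treated as fixed or random, which is why PROC GLM and PROC MIXED agree on these comparisons.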

Example 10.2 Pigment production

In this example the main objective is the estimation of the variance components

| Source of Variation | d.f. | MS | E(MS) |
|---|---|---|---|
| Batches | 15-1=14 | 86.495 | $\sigma_e^2 + 2\sigma_\delta^2 + 4\sigma_\beta^2$ |
| Samples (Batches) | 15(2-1)=15 | 57.983 | $\sigma_e^2 + 2\sigma_\delta^2$ |
| Tests (Samples) | (30)(2-1)=30 | 0.917 | $\sigma_e^2$ |

Estimates of variance components:

$$\hat\sigma_e^2 = MS_{tests} = 0.917$$

$$\hat\sigma_\delta^2 = \frac{MS_{samples} - MS_{tests}}{2} = 28.533$$

$$\hat\sigma_\beta^2 = \frac{MS_{batches} - MS_{samples}}{4} = 7.128$$

/* This is a SAS program for computing an ANOVA table
   for data from a nested or hierarchical experiment and
   estimating components of variance. This program is
   stored in the file

   pigment.sas

   The data are measurements of moisture content of a
   pigment taken from Box, Hunter and Hunter (page 574). */

data set1;
  infile 'c:\courses\st511\pigment.dat';
  input batch sample test y;
run;

proc print data=set1;
run;

/* The "random" statement in the following GLM procedure
   requests the printing of formulas for expectations of
   mean squares. These results are used in the estimation
   of the variance components. */

proc glm data=set1;
  class batch sample;
  model y = batch sample(batch) / e1;
  random batch sample(batch) / q test;
run;

/* Alternatively, REML estimates of variance
   components are produced by the MIXED
   procedure in SAS. Note that there are
   no terms on the right of the equal sign in
   the model statement because the only
   non-random effect is the intercept. */

proc mixed data=set1;
  class batch sample test;
  model y = ;
  random batch sample(batch);
run;

/* Use the MIXED procedure in SAS to compute
   maximum likelihood estimates of variance
   components */

proc mixed data=set1 method=ml;
  class batch sample test;
  model y = ;
  random batch sample(batch);
run;

| OBS | BATCH | SAMPLE | TEST | Y |
|---|---|---|---|---|
| 1 | 1 | 1 | 1 | 40 |
| 2 | 1 | 1 | 2 | 39 |
| 3 | 1 | 2 | 1 | 30 |
| 4 | 1 | 2 | 2 | 30 |
| 5 | 2 | 1 | 1 | 26 |
| 6 | 2 | 1 | 2 | 28 |
| 7 | 2 | 2 | 1 | 25 |
| 8 | 2 | 2 | 2 | 26 |
| 9 | 3 | 1 | 1 | 29 |
| 10 | 3 | 1 | 2 | 28 |
| 11 | 3 | 2 | 1 | 14 |
| 12 | 3 | 2 | 2 | 15 |
| 13 | 4 | 1 | 1 | 30 |
| 14 | 4 | 1 | 2 | 31 |
| 15 | 4 | 2 | 1 | 24 |
| 16 | 4 | 2 | 2 | 24 |
| 17 | 5 | 1 | 1 | 19 |
| 18 | 5 | 1 | 2 | 20 |
| 19 | 5 | 2 | 1 | 17 |
| 20 | 5 | 2 | 2 | 17 |
| 21 | 6 | 1 | 1 | 33 |
| 22 | 6 | 1 | 2 | 32 |
| 23 | 6 | 2 | 1 | 26 |
| 24 | 6 | 2 | 2 | 24 |
| 25 | 7 | 1 | 1 | 23 |
| 26 | 7 | 1 | 2 | 24 |
| 27 | 7 | 2 | 1 | 32 |
| 28 | 7 | 2 | 2 | 33 |
| 29 | 8 | 1 | 1 | 34 |
| 30 | 8 | 1 | 2 | 34 |

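| OBS | BATCH | SAMPLE | TEST | Y |
|---|---|---|---|---|
| 31 | 8 | 2 | 1 | 29 |
| 32 | 8 | 2 | 2 | 29 |
| 33 | 9 | 1 | 1 | 27 |
| 34 | 9 | 1 | 2 | 27 |
| 35 | 9 | 2 | 1 | 31 |
| 36 | 9 | 2 | 2 | 31 |
| 37 | 10 | 1 | 1 | 13 |
| 38 | 10 | 1 | 2 | 16 |
| 39 | 10 | 2 | 1 | 27 |
| 40 | 10 | 2 | 2 | 24 |
| 41 | 11 | 1 | 1 | 25 |
| 42 | 11 | 1 | 2 | 23 |
| 43 | 11 | 2 | 1 | 25 |
| 44 | 11 | 2 | 2 | 27 |
| 45 | 12 | 1 | 1 | 29 |
| 46 | 12 | 1 | 2 | 29 |
| 47 | 12 | 2 | 1 | 31 |
| 48 | 12 | 2 | 2 | 32 |
| 49 | 13 | 1 | 1 | 19 |
| 50 | 13 | 1 | 2 | 20 |
| 51 | 13 | 2 | 1 | 29 |
| 52 | 13 | 2 | 2 | 30 |
| 53 | 14 | 1 | 1 | 23 |
| 54 | 14 | 1 | 2 | 24 |
| 55 | 14 | 2 | 1 | 25 |
| 56 | 14 | 2 | 2 | 25 |
| 57 | 15 | 1 | 1 | 39 |
| 58 | 15 | 1 | 2 | 37 |
| 59 | 15 | 2 | 1 | 26 |
| 60 | 15 | 2 | 2 | 28 |

General Linear Models Procedure

Class Level Information

| Class | Levels | Values |
|---|---|---|
| BATCH | 15 | 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |
| SAMPLE | 2 | 1 2 |

Number of observations in the data set = 60

Dependent Variable: Y

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 29 | 2080.68 | 71.7477 | 78.27 | 0.0001 |
| Error | 30 | 27.5000 | 0.9167 | | |
| C.Total | 59 | 2108.18333333 | | | |

| Source | DF | Type I SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| BATCH | 14 | 1210.93 | 86.4952 | 94.36 | 0.0001 |
| SAMPLE(BATCH) | 15 | 869.75 | 57.9833 | 63.25 | 0.0001 |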
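The mean squares in the GLM table, and the method-of-moments estimates of the three variance components in Example 10.2, can be reproduced directly from the 60 observations. The sketch below uses the responses transcribed from the PROC PRINT listing (in OBS order) and the coefficients 2 and 4 from the expected mean squares; the variable names are illustrative.

```python
# Sketch: nested ANOVA for the pigment data (b batches, s samples, r tests).
y = [40, 39, 30, 30, 26, 28, 25, 26, 29, 28, 14, 15, 30, 31, 24, 24,
     19, 20, 17, 17, 33, 32, 26, 24, 23, 24, 32, 33, 34, 34, 29, 29,
     27, 27, 31, 31, 13, 16, 27, 24, 25, 23, 25, 27, 29, 29, 31, 32,
     19, 20, 29, 30, 23, 24, 25, 25, 39, 37, 26, 28]
b, s, r = 15, 2, 2

def cell(i, j):
    """The r test results for sample j of batch i (zero-based indices)."""
    start = (i * s + j) * r
    return y[start:start + r]

grand_mean = sum(y) / len(y)
sample_means = [[sum(cell(i, j)) / r for j in range(s)] for i in range(b)]
batch_means = [sum(row) / s for row in sample_means]

ss_batches = s * r * sum((m - grand_mean) ** 2 for m in batch_means)
ss_samples = r * sum((sample_means[i][j] - batch_means[i]) ** 2
                     for i in range(b) for j in range(s))
ss_tests = sum((obs - sample_means[i][j]) ** 2
               for i in range(b) for j in range(s) for obs in cell(i, j))

ms_batches = ss_batches / (b - 1)
ms_samples = ss_samples / (b * (s - 1))
ms_tests = ss_tests / (b * s * (r - 1))

# Equate mean squares to their expectations and solve from the bottom up.
var_test = ms_tests
var_sample = (ms_samples - ms_tests) / r
var_batch = (ms_batches - ms_samples) / (s * r)

print(f"MS: batches {ms_batches:.4f}, samples {ms_samples:.4f}, tests {ms_tests:.4f}")
print(f"variance components: batch {var_batch:.3f}, "
      f"sample {var_sample:.3f}, test {var_test:.3f}")
```

In this balanced design the resulting estimates agree with the REML estimates reported by PROC MIXED, since all three are positive.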

| Source | Type I Expected Mean Square |
|---|---|
| BATCH | Var(Error) + 2 Var(SAMPLE(BATCH)) + 4 Var(BATCH) |
| SAMPLE(BATCH) | Var(Error) + 2 Var(SAMPLE(BATCH)) |

Tests of Hypotheses for Random Model Analysis of Variance

| Source | Error term | DF | Type I MS | Denominator DF | Denominator MS | F Value | Pr > F |
|---|---|---|---|---|---|---|---|
| BATCH | MS(SAMPLE(BATCH)) | 14 | 86.4952 | 15 | 57.9833 | 1.4917 | 0.2256 |
| SAMPLE(BATCH) | MS(Error) | 15 | 57.9833 | 30 | 0.916667 | 63.2545 | 0.0001 |

The MIXED Procedure

Class Level Information

| Class | Levels | Values |
|---|---|---|
| BATCH | 15 | 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |
| SAMPLE | 2 | 1 2 |
| TEST | 2 | 1 2 |

REML Estimation Iteration History

| Iteration | Evaluations | Objective | Criterion |
|---|---|---|---|
| 0 | 1 | 274.08096606 | |
| 1 | 1 | 183.82758851 | 0.0000000 |

Convergence criteria met.

Covariance Parameter Estimates (REML)

| Cov Parm | Ratio | Estimate | Std Error | Z | Pr > \|Z\| |
|---|---|---|---|---|---|
| BATCH | 7.7760 | 7.1280 | 9.7373 | 0.73 | 0.4642 |
| SAMPLE(BATCH) | 31.1273 | 28.5333 | 10.5869 | 2.70 | 0.0070 |
| Residual | 1.0000 | 0.9167 | 0.2367 | 3.87 | 0.0001 |

Model Fitting Information for Y

| Description | Value |
|---|---|
| Observations | 60.0000 |
| Variance Estimate | 0.9167 |
| Standard Deviation Estimate | 0.9574 |
| REML Log Likelihood | -146.131 |
| Akaike's Information Criterion | -149.131 |
| Schwarz's Bayesian Criterion | -152.247 |
| -2 REML Log Likelihood | 292.2623 |

The MIXED Procedure

Class Level Information

| Class | Levels | Values |
|---|---|---|
| BATCH | 15 | 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |
| SAMPLE | 2 | 1 2 |
| TEST | 2 | 1 2 |

ML Estimation Iteration History

| Iteration | Evaluations | Objective | Criterion |
|---|---|---|---|
| 0 | 1 | 273.55423884 | |
| 1 | 1 | 184.15844023 | 0.00000000 |

Convergence criteria met.

Covariance Parameter Estimates (MLE)

| Cov Parm | Ratio | Estimate | Std Error | Z | Pr > \|Z\| |
|---|---|---|---|---|---|
| BATCH | 6.2033 | 5.68639 | 9.07341 | 0.63 | 0.5309 |
| SAMPLE(BATCH) | 31.1273 | 28.53333 | 10.58692 | 2.70 | 0.0070 |
| Residual | 1.0000 | 0.91667 | 0.23668 | 3.87 | 0.0001 |

Model Fitting Information for Y

| Description | Value |
|---|---|
| Observations | 60.0000 |
| Variance Estimate | 0.9167 |
| Standard Deviation Estimate | 0.9574 |
| Log Likelihood | -147.216 |
| Akaike's Information Criterion | -150.216 |
| Schwarz's Bayesian Criterion | -153.357 |
| -2 Log Likelihood | 294.4311 |

Estimation of $\mu = E(Y_{ijk})$:

$$\hat\mu = \bar{Y}_{...} = \frac{1}{bsr} \sum_{i=1}^{b} \sum_{j=1}^{s} \sum_{k=1}^{r} Y_{ijk}$$

$$E(\bar{Y}_{...}) = \mu$$

$$Var(\bar{Y}_{...}) = \frac{1}{bsr} \left( \sigma_e^2 + r\sigma_\delta^2 + sr\sigma_\beta^2 \right)$$

Standard error:

$$S_{\bar{Y}_{...}} = \sqrt{\frac{1}{bsr} \left( \hat\sigma_e^2 + r\hat\sigma_\delta^2 + sr\hat\sigma_\beta^2 \right)} = \sqrt{\frac{1}{bsr} MS_{Batches}} = \sqrt{\frac{86.495}{60}} = \sqrt{1.4416} = 1.2007$$

A 95% confidence interval for $\mu$

$$\bar{Y}_{...} \pm t_{14, .025} \, S_{\bar{Y}_{...}}$$

where 14 is the df for $MS_{Batches}$.

Here, $t_{14, .025} = 2.145$ and the confidence interval is

$$26.783 \pm (2.145)(1.2007) \;\Rightarrow\; (24.21, 29.36)$$

Properties of ANOVA methods for variance component estimation:

(i) Broad applicability

- easy to compute (balanced cases)
- ANOVA is widely known
- not required to completely specify distributions for random effects

(ii) Unbiased estimators

(iii) Sampling distribution is not exactly known, even under the usual normality assumptions (except for $\hat\sigma_e^2 = MS_{error}$)

(iv) May produce negative estimates of variances

(v) REML estimates have the same values in simple balanced cases where ANOVA estimates of variance components are inside the parameter space

(vi) For unbalanced studies, there may be no "natural" way to choose

$$\hat\sigma^2 = \sum_{i=1}^{k} a_i MS_i$$

Result 10.2:

If $MS_1, MS_2, \ldots, MS_k$ are distributed independently with

$$\frac{(df_i) MS_i}{E(MS_i)} \sim \chi^2_{df_i}$$

and constants $a_i > 0$, $i = 1, 2, \ldots, k$ are selected so that

$$\hat\sigma^2 = \sum_{i=1}^{k} a_i MS_i$$

has expectation $\sigma^2$, then

$$Var(\hat\sigma^2) = 2 \sum_{i=1}^{k} \frac{a_i^2 [E(MS_i)]^2}{df_i}$$

and an unbiased estimator of this variance is

$$\widehat{Var}(\hat\sigma^2) = 2 \sum_{i=1}^{k} \frac{a_i^2 MS_i^2}{df_i + 2}$$

Proof: Since $\dfrac{(df_i) MS_i}{E(MS_i)} \sim \chi^2_{df_i}$ and $E(\chi^2_{df_i}) = df_i$ and $Var(\chi^2_{df_i}) = 2\,df_i$, it follows that

$$Var(MS_i) = Var\left( \frac{E(MS_i)\, \chi^2_{df_i}}{df_i} \right) = \frac{2 [E(MS_i)]^2}{df_i}.$$

From the independence of the $MS_i$'s, we have

$$Var(\hat\sigma^2) = \sum_{i=1}^{k} a_i^2 \, Var(MS_i) = 2 \sum_{i=1}^{k} \frac{a_i^2 [E(MS_i)]^2}{df_i}$$

Furthermore,

$$\begin{aligned} E(MS_i^2) &= Var(MS_i) + [E(MS_i)]^2 \\ &= \frac{2 [E(MS_i)]^2}{df_i} + [E(MS_i)]^2 \\ &= \left( \frac{df_i + 2}{df_i} \right) [E(MS_i)]^2 \end{aligned}$$

Consequently,

$$E\left[ 2 \sum_{i=1}^{k} \frac{a_i^2 MS_i^2}{df_i + 2} \right] = Var(\hat\sigma^2)$$

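A "standard error" for

$$\hat\sigma^2 = \sum_{i=1}^{k} a_i MS_i$$

could be reported as

$$S_{\hat\sigma^2} = \sqrt{2 \sum_{i=1}^{k} \frac{a_i^2 MS_i^2}{df_i + 2}}$$

Using the Cochran-Satterthwaite approximation (Result 10.1), an approximate $(1 - \alpha) \times 100\%$ confidence interval for $\sigma^2$ could be constructed as follows:

$$\begin{aligned} 1 - \alpha &\doteq Pr\left\{ \chi^2_{\nu, 1-\alpha/2} \le \frac{\nu \hat\sigma^2}{\sigma^2} \le \chi^2_{\nu, \alpha/2} \right\} \\ &= Pr\left\{ \frac{\nu \hat\sigma^2}{\chi^2_{\nu, \alpha/2}} \le \sigma^2 \le \frac{\nu \hat\sigma^2}{\chi^2_{\nu, 1-\alpha/2}} \right\} \end{aligned}$$

Here $\nu \hat\sigma^2 / \chi^2_{\nu, \alpha/2}$ is the lower limit and $\nu \hat\sigma^2 / \chi^2_{\nu, 1-\alpha/2}$ is the upper limit,

where $\hat\sigma^2 = \sum_{i=1}^{k} a_i MS_i$ and

$$\nu = \frac{\left[ \sum_{i=1}^{k} a_i MS_i \right]^2}{\sum_{i=1}^{k} \dfrac{[a_i MS_i]^2}{df_i}}$$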
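To make the "standard error" formula concrete, the sketch below applies it to the estimated total variance $\hat\sigma_\beta^2 + \hat\sigma_e^2 = \frac{1}{a} MS_{blocks} + \frac{a-1}{a} MS_{error}$ in the penicillin example, a case where both constants are positive as Result 10.2 requires. The choice of this particular linear combination, and the function name `variance_estimate_se`, are illustrative assumptions; only the mean squares and degrees of freedom come from the ANOVA table.

```python
import math

def variance_estimate_se(coefs, mean_squares, dfs):
    """sqrt(2 * sum(a_i^2 * MS_i^2 / (df_i + 2))): the unbiased variance
    estimator for sum(a_i * MS_i) from Result 10.2, on the SE scale."""
    total = sum(c ** 2 * ms ** 2 / (d + 2)
                for c, ms, d in zip(coefs, mean_squares, dfs))
    return math.sqrt(2 * total)

a = 4                                   # observations per batch
ms_blocks, ms_error = 66.0, 226.0 / 12  # mean squares from the ANOVA table
coefs = [1 / a, (a - 1) / a]            # both constants are positive
mean_squares = [ms_blocks, ms_error]
dfs = [4, 12]

var_total_hat = sum(c * ms for c, ms in zip(coefs, mean_squares))
se_var_total = variance_estimate_se(coefs, mean_squares, dfs)

print(f"estimated Var(Y_ij): {var_total_hat:.3f}")
print(f"standard error:      {se_var_total:.3f}")
```

The standard error is large relative to the estimate, which reflects how little information five batches carry about the batch variance component.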

10.3 Likelihood-based methods:

- Maximum Likelihood Estimation (ML)
- Restricted Maximum Likelihood Estimation (REML)

Maximum Likelihood Estimation

Consider the mixed model

$$Y_{n \times 1} = X\beta_{p \times 1} + Zu_{q \times 1} + e_{n \times 1}$$

where

$$\begin{bmatrix} u \\ e \end{bmatrix} \sim N\left( \begin{bmatrix} 0 \\ 0 \end{bmatrix}, \begin{bmatrix} G & 0 \\ 0 & R \end{bmatrix} \right)$$

Then,

$$Y_{n \times 1} \sim N(X\beta, \Sigma)$$

where $\Sigma = ZGZ^T + R$

Multivariate normal likelihood:

$$L(\beta, \Sigma; Y) = (2\pi)^{-n/2} |\Sigma|^{-1/2} \exp\left\{ -\frac{1}{2} (Y - X\beta)^T \Sigma^{-1} (Y - X\beta) \right\}$$

The log-likelihood function is

$$\ell(\beta, \Sigma; Y) = -\frac{n}{2} \log(2\pi) - \frac{1}{2} \log(|\Sigma|) - \frac{1}{2} (Y - X\beta)^T \Sigma^{-1} (Y - X\beta)$$

Given the values of the observed responses, $Y$, find values $\beta$ and $\Sigma$ that maximize the log-likelihood function.

This is a difficult computational problem:

no analytic solution (except in some balanced cases)

use iterative numerical methods

- starting values (initial guesses at the values of $\hat\beta$ and $\hat\Sigma = Z\hat{G}Z^T + \hat{R}$)
- local or global maxima?
- what if $\hat\Sigma$ becomes singular or is not positive definite?

Constrained optimization problem

- estimates of variances cannot be negative
- estimated correlations are between -1 and 1
- $\hat\Sigma$, $\hat{G}$, and $\hat{R}$ are positive definite (or non-negative definite)

Large sample distributional properties of estimators follow from the general theory of maximum likelihood estimation

- consistency
- normality
- efficiency

These properties are not guaranteed for ANOVA methods.

Estimates of variance components tend to be too small

Consider a sample $Y_1, \ldots, Y_n$ from a $N(\mu, \sigma^2)$ distribution. An unbiased estimator for $\sigma^2$ is

$$S^2 = \frac{1}{n-1} \sum_{j=1}^{n} (Y_j - \bar{Y})^2$$

The MLE for $\sigma^2$ is

$$\hat\sigma^2 = \frac{1}{n} \sum_{j=1}^{n} (Y_j - \bar{Y})^2$$

with

$$E(\hat\sigma^2) = \frac{n-1}{n} \sigma^2 < \sigma^2$$

The MLE fails to make the appropriate adjustment in "degrees of freedom" needed to obtain an unbiased estimator for $\sigma^2$.

Note that $S^2$ and $\hat\sigma^2$ are based on "error contrasts"

$$e_1 = Y_1 - \bar{Y} = \left( \tfrac{n-1}{n}, -\tfrac{1}{n}, \ldots, -\tfrac{1}{n} \right) Y$$

$$\vdots$$

$$e_n = Y_n - \bar{Y} = \left( -\tfrac{1}{n}, -\tfrac{1}{n}, \ldots, -\tfrac{1}{n}, \tfrac{n-1}{n} \right) Y$$

whose distribution does not depend on $\mu = E(Y_j)$.

When $Y \sim N(1\mu, \sigma^2 I)$,

$$e = \begin{bmatrix} e_1 \\ \vdots \\ e_n \end{bmatrix} = (I - P_1) Y \sim N(0, \sigma^2 (I - P_1))$$

The MLE $\hat\sigma^2 = \frac{1}{n} \sum_{j=1}^{n} e_j^2$ fails to acknowledge that $e$ is restricted to an $(n-1)$-dimensional space, i.e., $\sum_{j=1}^{n} e_j = 0$.

Example: Suppose $n = 4$ and $Y \sim N(1\mu, \sigma^2 I)$. Then

$$e = \begin{bmatrix} Y_1 - \bar{Y} \\ Y_2 - \bar{Y} \\ Y_3 - \bar{Y} \\ Y_4 - \bar{Y} \end{bmatrix} = (I - P_1) Y \sim N(0, \sigma^2 (I - P_1))$$

This covariance matrix is singular. The density function does not exist.

Here, $m = rank(I - P_1) = n - 1 = 3$.

Define

$$r = Me = M(I - P_X) Y$$

where

$$M = \begin{bmatrix} 1 & 1 & -1 & -1 \\ 1 & -1 & 1 & -1 \\ 1 & -1 & -1 & 1 \end{bmatrix}$$

has row rank equal to $m = rank(I - P_X)$.

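Then

$$r = \begin{bmatrix} r_1 \\ r_2 \\ r_3 \end{bmatrix} = \begin{bmatrix} Y_1 + Y_2 - Y_3 - Y_4 \\ Y_1 - Y_2 + Y_3 - Y_4 \\ Y_1 - Y_2 - Y_3 + Y_4 \end{bmatrix} = M(I - P_X) Y \sim N(0, \sigma^2 M (I - P_1) M^T)$$

and we call this covariance matrix $\sigma^2 W$, with $W = M(I - P_1)M^T$.

Restricted Likelihood function:

$$L(\sigma^2; r) = \frac{1}{(2\pi)^{m/2} |\sigma^2 W|^{1/2}} \, e^{-\frac{1}{2\sigma^2} r^T W^{-1} r}$$

Restricted Log-likelihood:

$$\ell(\sigma^2; r) = -\frac{m}{2} \log(2\pi) - \frac{m}{2} \log(\sigma^2) - \frac{1}{2} \log|W| - \frac{1}{2\sigma^2} r^T W^{-1} r$$

(Note that $|\sigma^2 W| = (\sigma^2)^m |W|$)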

(Restricted) likelihood equation:

2; r) = ;m + 1 rT W ;1r

0 = @`(@

2

22 2(2)2

Solution (REML estimator for 2):

2

^REML

= m1 rT W ;1r

= m1 YT (I ; P1)T M T (M (I ; P1)M T );1M (I ; P1)Y

%

This is a projection of Y onto the

column space of M (I ; P1) which is

the column space of M (I ; P1)

1 YT (I ; P )Y

1

= m

n

X

= n ;1 1 (Yj ; Y )2 = S 2

REML (Restricted Maximum Likelihood)

estimation:

Estimate parameters in

= ZGZ T + R

by maximizing the part of the likelihood

that does not depend on E (Y) = X .

Maximize a likelihood function for

\error contrasts"

{ linear combinations of observations that

do not depend on X { will need a set of

n ; rank(X )

linearly independent \error contrasts"

j =1

694

695
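The $n = 4$ example can be worked through numerically. The sketch below uses the contrast matrix $M$ from the text and a hypothetical data vector; it forms $\mathbf{r}$ and $W$ with plain-Python matrix products and compares the REML estimate with $S^2$. The helper `matmul` is written here for illustration and is not part of any library.

```python
# REML estimate of sigma^2 for the n = 4 example, using the contrast matrix M
# from the text. The data vector y is hypothetical.
y = [4.0, 7.0, 1.0, 8.0]
n = len(y)
M = [[1,  1, -1, -1],
     [1, -1,  1, -1],
     [1, -1, -1,  1]]
m = len(M)  # m = n - 1 = 3 error contrasts


def matmul(A, B):
    """Plain-Python matrix product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


# I - P_1, where P_1 = (1/n) * J projects onto the vector of ones.
I_minus_P = [[(1.0 if i == j else 0.0) - 1.0 / n for j in range(n)] for i in range(n)]

A = matmul(M, I_minus_P)                                   # M (I - P_1)
r = [sum(a * yj for a, yj in zip(row, y)) for row in A]    # error contrasts
Mt = [list(col) for col in zip(*M)]
W = matmul(A, Mt)                                          # W = M (I - P_1) M^T

# For this particular M the rows are orthogonal and sum to zero, so W is
# diagonal; its inverse is then taken entry by entry on the diagonal.
assert all(abs(W[i][j]) < 1e-12 for i in range(m) for j in range(m) if i != j)
sigma2_reml = sum(r[i] ** 2 / W[i][i] for i in range(m)) / m

ybar = sum(y) / n
s2 = sum((yj - ybar) ** 2 for yj in y) / (n - 1)
print("REML estimate:", sigma2_reml, " S^2:", s2)
```

The diagonal shortcut for $W^{-1}$ is specific to this choice of $M$; a general $M$ would need a full matrix inverse.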

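Mixed (normal-theory) model:

$$\mathbf{Y} = X\boldsymbol{\beta} + Z\mathbf{u} + \mathbf{e}$$

where

$$\begin{bmatrix} \mathbf{u} \\ \mathbf{e} \end{bmatrix} \sim N\left( \begin{bmatrix} \mathbf{0} \\ \mathbf{0} \end{bmatrix}, \begin{bmatrix} G & 0 \\ 0 & R \end{bmatrix} \right).$$

Then

$$L\mathbf{Y} = L(X\boldsymbol{\beta} + Z\mathbf{u} + \mathbf{e}) = LX\boldsymbol{\beta} + LZ\mathbf{u} + L\mathbf{e}$$

is invariant to $X\boldsymbol{\beta}$ if and only if $LX = 0$. But $LX = 0$ if and only if

$$L = M(I - P_X)$$

for some $M$. (Here $P_X = X(X^T X)^{-} X^T$.)

To avoid losing information we must have

$$\text{row rank}(M) = n - \mathrm{rank}(X) = n - p .$$

Then a set of $n - p$ error contrasts is

$$\mathbf{r} = M(I - P_X)\mathbf{Y} \sim N_{n-p}\left(\mathbf{0},\, M(I - P_X)\,\Sigma\,(I - P_X)M^T\right).$$

Call this covariance matrix $W$; then $\mathrm{rank}(W) = n - p$ and $W^{-1}$ exists.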

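The "restricted" likelihood is

$$L(\Sigma; \mathbf{r}) = \frac{1}{(2\pi)^{(n-p)/2}\, |W|^{1/2}} \exp\left(-\frac{1}{2}\, \mathbf{r}^T W^{-1} \mathbf{r}\right)$$

The resulting log-likelihood is

$$\ell(\Sigma; \mathbf{r}) = -\frac{n-p}{2}\log(2\pi) - \frac{1}{2}\log|W| - \frac{1}{2}\, \mathbf{r}^T W^{-1} \mathbf{r}$$

For any $M_{(n-p)\times n}$ with row rank equal to

$$n - p = n - \mathrm{rank}(X)$$

the log-likelihood can be expressed in terms of

$$\mathbf{e} = \left(I - X(X^T \Sigma^{-1} X)^{-} X^T \Sigma^{-1}\right)\mathbf{Y}$$

as

$$\ell(\Sigma; \mathbf{e}) = \text{constant} - \frac{1}{2}\log|\Sigma| - \frac{1}{2}\log\left|X_*^T \Sigma^{-1} X_*\right| - \frac{1}{2}\, \mathbf{e}^T \Sigma^{-1} \mathbf{e}$$

where $X_*$ is any set of $p = \mathrm{rank}(X)$ linearly independent columns of $X$.

Denote the resulting REML estimators as

$$\hat{G}, \quad \hat{R} \quad \text{and} \quad \hat{\Sigma} = Z\hat{G}Z^T + \hat{R} .$$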

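Estimation of fixed effects:

For any estimable function $C\boldsymbol{\beta}$, the BLUE is the generalized least squares estimator

$$C\mathbf{b}_{GLS} = C(X^T \Sigma^{-1} X)^{-} X^T \Sigma^{-1} \mathbf{Y}$$

Using the REML estimator for

$$\Sigma = ZGZ^T + R$$

an approximation is

$$C\hat{\boldsymbol{\beta}} = C(X^T \hat{\Sigma}^{-1} X)^{-} X^T \hat{\Sigma}^{-1} \mathbf{Y}$$

and for "large" samples:

$$C\hat{\boldsymbol{\beta}} \;\dot\sim\; N\left(C\boldsymbol{\beta},\; C(X^T \Sigma^{-1} X)^{-} C^T\right)$$

Prediction of random effects:

Given the observed responses $\mathbf{Y}$, predict the value of $\mathbf{u}$.

For our model,

$$\begin{bmatrix} \mathbf{u} \\ \mathbf{e} \end{bmatrix} \sim N\left( \begin{bmatrix} \mathbf{0} \\ \mathbf{0} \end{bmatrix}, \begin{bmatrix} G & 0 \\ 0 & R \end{bmatrix} \right).$$

Then (from result 4.1)

$$\begin{bmatrix} \mathbf{u} \\ \mathbf{Y} \end{bmatrix} = \begin{bmatrix} \mathbf{u} \\ X\boldsymbol{\beta} + Z\mathbf{u} + \mathbf{e} \end{bmatrix} = \begin{bmatrix} \mathbf{0} \\ X\boldsymbol{\beta} \end{bmatrix} + \begin{bmatrix} I & 0 \\ Z & I \end{bmatrix} \begin{bmatrix} \mathbf{u} \\ \mathbf{e} \end{bmatrix} \sim N\left( \begin{bmatrix} \mathbf{0} \\ X\boldsymbol{\beta} \end{bmatrix}, \begin{bmatrix} G & GZ^T \\ ZG & ZGZ^T + R \end{bmatrix} \right)$$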

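The Best Linear Unbiased Predictor (BLUP) is the b.l.u.e. for

$$E(\mathbf{u} \mid \mathbf{Y}) = E(\mathbf{u}) + (GZ^T)(ZGZ^T + R)^{-1}(\mathbf{Y} - E(\mathbf{Y})) \quad \text{(from result 4.5)}$$

$$= \mathbf{0} + GZ^T (ZGZ^T + R)^{-1} (\mathbf{Y} - X\boldsymbol{\beta})$$

Substitute the b.l.u.e. for $X\boldsymbol{\beta}$:

$$X\mathbf{b}_{GLS} = X(X^T \Sigma^{-1} X)^{-} X^T \Sigma^{-1} \mathbf{Y}$$

Then, the BLUP for $\mathbf{u}$ is

$$\mathrm{BLUP}(\mathbf{u}) = GZ^T \Sigma^{-1} (\mathbf{Y} - X\mathbf{b}_{GLS}) = GZ^T \Sigma^{-1} \left(I - X(X^T \Sigma^{-1} X)^{-} X^T \Sigma^{-1}\right)\mathbf{Y}$$

when $G$ and $\Sigma = ZGZ^T + R$ are known.

Substituting REML estimators $\hat{G}$ and $\hat{R}$ for $G$ and $R$, an approximate BLUP for $\mathbf{u}$ is

$$\hat{\mathbf{u}} = \hat{G}Z^T \hat{\Sigma}^{-1} \left(I - X(X^T \hat{\Sigma}^{-1} X)^{-} X^T \hat{\Sigma}^{-1}\right)\mathbf{Y} = \hat{G}Z^T \hat{\Sigma}^{-1} (\mathbf{Y} - X\hat{\boldsymbol{\beta}})$$

where $X\hat{\boldsymbol{\beta}}$ is the approximate b.l.u.e. for $X\boldsymbol{\beta}$.

For "large" samples, the distribution of $\hat{\mathbf{u}}$ is approximately multivariate normal with mean vector $\mathbf{0}$ and covariance matrix

$$GZ^T \Sigma^{-1} (I - P)\,\Sigma\,(I - P)^T \Sigma^{-1} ZG$$

where

$$P = X(X^T \Sigma^{-1} X)^{-} X^T \Sigma^{-1}$$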
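To make the BLUP formula concrete, the sketch below evaluates $GZ^T\Sigma^{-1}(\mathbf{Y} - X\mathbf{b}_{GLS})$ for a balanced one-way random effects model $Y_{ij} = \mu + u_j + e_{ij}$ and compares it with the familiar shrinkage form for balanced data. The data and the "known" variance components are hypothetical, and `solve` is a small Gauss-Jordan helper written for this illustration.

```python
# BLUP of random group effects in a balanced one-way random effects model,
# Y_ij = mu + u_j + e_ij, computed from BLUP(u) = G Z^T Sigma^{-1} (Y - X b_GLS).
# The data and the "known" variance components are hypothetical.
y = [1.0, 3.0, 5.0, 7.0, 9.0, 11.0]      # 3 groups, 2 observations each
group = [0, 0, 1, 1, 2, 2]
q, n = 3, len(y)
sigma2_u, sigma2_e = 4.0, 2.0


def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    k = len(b)
    aug = [list(A[i]) + [b[i]] for i in range(k)]
    for c in range(k):
        p = max(range(c, k), key=lambda i: abs(aug[i][c]))
        aug[c], aug[p] = aug[p], aug[c]
        piv = aug[c][c]
        aug[c] = [v / piv for v in aug[c]]
        for i in range(k):
            if i != c:
                f = aug[i][c]
                aug[i] = [vi - f * vc for vi, vc in zip(aug[i], aug[c])]
    return [aug[i][k] for i in range(k)]


# Sigma = Z G Z^T + R: sigma2_u for pairs in the same group, plus sigma2_e on the diagonal.
Sigma = [[(sigma2_u if group[i] == group[j] else 0.0) + (sigma2_e if i == j else 0.0)
          for j in range(n)] for i in range(n)]

# GLS estimate of mu with X = vector of ones: (1' Sigma^-1 Y) / (1' Sigma^-1 1).
b_gls = sum(solve(Sigma, y)) / sum(solve(Sigma, [1.0] * n))

# BLUP(u) = G Z^T Sigma^{-1} (Y - X b_GLS), with G = sigma2_u * I.
t = solve(Sigma, [yi - b_gls for yi in y])
blup = [sigma2_u * sum(t[i] for i in range(n) if group[i] == j) for j in range(q)]

# Closed form for balanced data: shrink each group-mean deviation toward zero.
n_per = n // q
shrink = n_per * sigma2_u / (n_per * sigma2_u + sigma2_e)
means = [sum(y[i] for i in range(n) if group[i] == j) / n_per for j in range(q)]
closed = [shrink * (mj - sum(y) / n) for mj in means]
print(blup, closed)
```

The two vectors agree, which illustrates that the BLUP pulls each group deviation toward zero by a factor that depends on the ratio of the variance components.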

Given estimates $\hat{G}$, $\hat{R}$ and $\hat{\Sigma} = Z\hat{G}Z^T + \hat{R}$, $\hat{\boldsymbol{\beta}}$ and $\hat{\mathbf{u}}$ provide a solution to the mixed model equations:

$$\begin{bmatrix} X^T \hat{R}^{-1} X & X^T \hat{R}^{-1} Z \\ Z^T \hat{R}^{-1} X & Z^T \hat{R}^{-1} Z + \hat{G}^{-1} \end{bmatrix} \begin{bmatrix} \hat{\boldsymbol{\beta}} \\ \hat{\mathbf{u}} \end{bmatrix} = \begin{bmatrix} X^T \hat{R}^{-1} \mathbf{Y} \\ Z^T \hat{R}^{-1} \mathbf{Y} \end{bmatrix}$$

A generalized inverse of

$$\begin{bmatrix} X^T \hat{R}^{-1} X & X^T \hat{R}^{-1} Z \\ Z^T \hat{R}^{-1} X & Z^T \hat{R}^{-1} Z + \hat{G}^{-1} \end{bmatrix}$$

is used to approximate the covariance matrix for $\begin{bmatrix} \hat{\boldsymbol{\beta}} \\ \hat{\mathbf{u}} \end{bmatrix}$.

References:

Hartley, H.O. and Rao, J.N.K. (1967) Maximum-likelihood estimation for the mixed analysis of variance model, Biometrika, 54, 93-108.

Harville, D.A. (1977) Maximum likelihood approaches to variance component estimation and to related problems, Journal of the American Statistical Association, 72, 320-338.

Jennrich, R.I. and Schluchter, M.D. (1986) Unbalanced repeated-measures models with structured covariance matrices, Biometrics, 42, 805-820.

Kackar, R.N. and Harville, D.A. (1984) Approximations for standard errors of estimators of fixed and random effects in mixed linear models, Journal of the American Statistical Association, 79, 853-862.

Laird, N.M. and Ware, J.H. (1982) Random-effects models for longitudinal data, Biometrics, 38, 963-974.

Lindstrom, M.J. and Bates, D.M. (1988) Newton-Raphson and EM algorithms for linear mixed-effects models for repeated-measures data, Journal of the American Statistical Association, 83, 1014-1022.

Robinson, G.K. (1991) That BLUP is a good thing: the estimation of random effects, Statistical Science, 6, 15-51.

Searle, S.R., Casella, G. and McCulloch, C.E. (1992) Variance Components, New York, John Wiley & Sons.

Wolfinger, R.D., Tobias, R.D. and Sall, J. (1994) Computing Gaussian likelihoods and their derivatives for general linear mixed models, SIAM Journal on Scientific Computing, 15(6), 1294-1310.

Example 10.3: A split-plot experiment with whole plots arranged in blocks.

Blocks: r = 4 fields (or locations).

Whole plots: Each field is divided into a = 2 whole plots.

Whole plot factor: two cultivars of grasses (A and B)

- within each block, cultivar A is grown in one whole plot and cultivar B is grown in the other whole plot
- a separate random assignment of cultivars to whole plots is done in each block

Sub-plot factor: b = 3 bacterial inoculation treatments:

- CON for control (no inoculation)
- DEA for dead
- LIV for live

Each whole plot is split into three sub-plots, and independent random assignments of inoculation treatments to sub-plots are done within whole plots.

Measured response: Dry weight yield

Source: Littell, R.C., Freund, R.J. and Spector, P.C. (1991) SAS System for Linear Models, 3rd edition, SAS Institute, Cary, NC.

Data: grass.dat
SAS code: grass.sas
S-PLUS code: grass.spl

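Field layout:

Block 1
  Cultivar B:  CON  DEA  LIV
  Cultivar A:  LIV  CON  DEA

Block 2
  Cultivar A:  DEA  LIV  CON
  Cultivar B:  LIV  CON  DEA

Block 3
  Cultivar B:  DEA  CON  LIV
  Cultivar A:  CON  DEA  LIV

Block 4
  Cultivar B:  LIV  DEA  CON
  Cultivar A:  CON  DEA  LIV

Model with random block effects:

$$Y_{ijk} = \mu + \alpha_i + \beta_j + \gamma_{ij} + \tau_k + \delta_{ik} + e_{ijk}$$

$$i = 1, \ldots, a \qquad j = 1, \ldots, r \qquad k = 1, \ldots, b$$

where

- $Y_{ijk}$ is the observed yield for the k-th inoculant applied to the i-th cultivar in the j-th field
- $\alpha_i$ is a fixed cultivar effect
- $\tau_k$ is a fixed inoculant effect
- $\delta_{ik}$ is the cultivar*inoculant interaction

The following random effects are independent of each other:

- $\beta_j \sim NID(0, \sigma^2_\beta)$, random block effects
- $\gamma_{ij} \sim NID(0, \sigma^2_w)$, random whole plot effects
- $e_{ijk} \sim NID(0, \sigma^2_e)$, random errors

ANOVA table:

Source of Variation                   df                    SS
Blocks                                r - 1 = 3             25.32
Cultivars                             a - 1 = 1             2.41
Whole plot error                      (r - 1)(a - 1) = 3    9.48
  (Block × cultivar interaction)
Inoculants                            b - 1 = 2             118.18
Cult. × Inoc.                         (a - 1)(b - 1) = 2    1.83
Sub-plot error                        12                    8.465
Corrected total                       23                    165.673

ANOVA table:

Source of Variation                   df    MS       E(MS)
Blocks                                3     8.44     $\sigma^2_e + b\sigma^2_w + ab\sigma^2_\beta$
Cultivars                             1     2.41     $\sigma^2_e + b\sigma^2_w + \phi_{(i)}$
Whole plot error                      3     3.16     $\sigma^2_e + b\sigma^2_w$
  (Block × cult. interaction)
Inoculants                            2     59.09    $\sigma^2_e + \phi_{(ii)}$
Cult. × Inoc.                         2     0.91     $\sigma^2_e + \phi_{(iii)}$
Sub-plot error                        12    0.705    $\sigma^2_e$
Corrected total                       23

where

$$\phi_{(i)} = \frac{br \sum_{i=1}^{a} (\alpha_i + \bar{\delta}_{i\cdot} - \bar{\alpha}_{\cdot} - \bar{\delta}_{\cdot\cdot})^2}{a - 1}$$

$$\phi_{(ii)} = \frac{ar \sum_{k=1}^{b} (\tau_k + \bar{\delta}_{\cdot k} - \bar{\tau}_{\cdot} - \bar{\delta}_{\cdot\cdot})^2}{b - 1}$$

$$\phi_{(iii)} = \frac{r \sum_{i} \sum_{k} (\delta_{ik} - \bar{\delta}_{i\cdot} - \bar{\delta}_{\cdot k} + \bar{\delta}_{\cdot\cdot})^2}{(a - 1)(b - 1)}$$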
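The expected mean squares indicate which error term each F test should use: whole-plot effects (cultivars) are compared with the whole-plot error, while sub-plot effects (inoculants and the interaction) are compared with the sub-plot error. A minimal sketch of that arithmetic, using the rounded sums of squares from the ANOVA table (the dictionary keys are illustrative names, not SAS output labels):

```python
# F ratios for the split-plot ANOVA, from the sums of squares and degrees of
# freedom in the ANOVA table. Whole-plot effects are tested against the
# whole-plot error (block x cultivar) mean square; sub-plot effects are tested
# against the sub-plot error mean square.
ss = {"blocks": 25.32, "cultivars": 2.41, "whole_plot_error": 9.48,
      "inoculants": 118.18, "cult_x_inoc": 1.83, "sub_plot_error": 8.465}
df = {"blocks": 3, "cultivars": 1, "whole_plot_error": 3,
      "inoculants": 2, "cult_x_inoc": 2, "sub_plot_error": 12}
ms = {k: ss[k] / df[k] for k in ss}

f_cultivar = ms["cultivars"] / ms["whole_plot_error"]
f_inoc = ms["inoculants"] / ms["sub_plot_error"]
f_interaction = ms["cult_x_inoc"] / ms["sub_plot_error"]

# Using the sub-plot error for cultivars would overstate the evidence.
f_cultivar_wrong = ms["cultivars"] / ms["sub_plot_error"]

print(round(f_cultivar, 2), round(f_inoc, 2), round(f_interaction, 2))
print("cultivar F with the wrong error term:", round(f_cultivar_wrong, 2))
```

Because the table entries are rounded, the ratios can differ from software output in the second decimal place.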

/* SAS code for analyzing the data from the
   split plot experiment corresponding to
   example 10.3 in class */

data set1;
  infile 'c:\courses\st511\grass.dat';
  input block cultivar $ innoc $ yield;
run;

proc print data=set1;
run;

proc mixed data=set1;
  class block cultivar innoc;
  model yield = cultivar innoc cultivar*innoc
        / ddfm=satterth;
  random block block*cultivar;
  lsmeans cultivar / e pdiff tdiff;
  lsmeans innoc / e pdiff tdiff;
  lsmeans cultivar*innoc / e pdiff tdiff;
  estimate 'a:live vs b:live'
        cultivar 1 -1 innoc 0 0 0
        cultivar*innoc 0 0 1 0 0 -1;
  /* Same label with a nonzero INNOC LIV coefficient: this function
     is not estimable, and the output reports missing values (.) */
  estimate 'a:live vs b:live'
        cultivar 1 -1 innoc 0 0 1
        cultivar*innoc 0 0 1 0 0 -1 / e;
  estimate 'a:live vs a:dead'
        cultivar 0 0 innoc 0 -1 1
        cultivar*innoc 0 -1 1 0 0 0 / e;
run;

/* Use Proc GLM to compute an ANOVA table */

proc glm data=set1;
  class block cultivar innoc;
  model yield = cultivar block cultivar*block
        innoc cultivar*innoc;
  random block block*cultivar;
  test h=cultivar block e=cultivar*block;
run;

OBS   BLOCK   CULTIVAR   INNOC   YIELD
  1     1        A        CON     27.4
  2     1        A        DEA     29.7
  3     1        A        LIV     34.5
  4     1        B        CON     29.4
  5     1        B        DEA     32.5
  6     1        B        LIV     34.4
  7     2        A        CON     28.9
  8     2        A        DEA     28.7
  9     2        A        LIV     33.4
 10     2        B        CON     28.7
 11     2        B        DEA     32.4
 12     2        B        LIV     36.4
 13     3        A        CON     28.6
 14     3        A        DEA     29.7
 15     3        A        LIV     32.9
 16     3        B        CON     27.2
 17     3        B        DEA     29.1
 18     3        B        LIV     32.6
 19     4        A        CON     26.7
 20     4        A        DEA     28.9
 21     4        A        LIV     31.8
 22     4        B        CON     26.8
 23     4        B        DEA     28.6
 24     4        B        LIV     30.7

The MIXED Procedure

Class Level Information

Class       Levels   Values
BLOCK          4     1 2 3 4
CULTIVAR       2     A B
INNOC          3     CON DEA LIV

REML Estimation Iteration History

Iteration   Evaluations     Objective      Criterion
    0            1         42.10326642
    1            1         31.98082956    0.00000000

Convergence criteria met.

Covariance Parameter Estimates (REML)

Cov Parm           Ratio     Estimate   Std Error     Z     Pr > |Z|
BLOCK             1.24749    0.88000     1.22640     0.72    0.4730
BLOCK*CULTIVAR    1.15987    0.81819     0.86538     0.95    0.3444
Residual          1.00000    0.70542     0.28799     2.45    0.0143

Model Fitting Information for YIELD

Description                         Value
Observations                      24.0000
Variance Estimate                  0.7054
Standard Deviation Estimate        0.8399
REML Log Likelihood              -32.5313
Akaike's Information Criterion   -35.5313
Schwarz's Bayesian Criterion     -36.8669
-2 REML Log Likelihood            65.0626

Tests of Fixed Effects

Source            NDF   DDF   Type III F   Pr > F
CULTIVAR            1     3       0.76     0.4471
INNOC               2    12      83.76     0.0001
CULTIVAR*INNOC      2    12       1.29     0.3098

Coefficients for a:live vs b:live

Parameter                 Row 1
INTERCEPT                    0
CULTIVAR A                   1
CULTIVAR B                  -1
INNOC CON                    0
INNOC DEA                    0
INNOC LIV                    1
CULTIVAR*INNOC A CON         0
CULTIVAR*INNOC A DEA         0
CULTIVAR*INNOC A LIV         1
CULTIVAR*INNOC B CON         0
CULTIVAR*INNOC B DEA         0
CULTIVAR*INNOC B LIV        -1

Coefficients for a:live vs a:dead

Parameter                 Row 1
INTERCEPT                    0
CULTIVAR A                   0
CULTIVAR B                   0
INNOC CON                    0
INNOC DEA                   -1
INNOC LIV                    1
CULTIVAR*INNOC A CON         0
CULTIVAR*INNOC A DEA        -1
CULTIVAR*INNOC A LIV         1
CULTIVAR*INNOC B CON         0
CULTIVAR*INNOC B DEA         0
CULTIVAR*INNOC B LIV         0

ESTIMATE Statement Results

Parameter           Estimate   Std Error    DDF      T     Pr > |T|
a:live vs b:live     -0.375     0.87281    5.98   -0.43     0.6825
a:live vs b:live       .          .          .       .        .
a:live vs a:dead      3.900     0.59389     12     6.57     0.0001

Coefficients for CULTIVAR Least Squares Means

Parameter                  Row 1     Row 2
INTERCEPT                    1         1
CULTIVAR A                   1         0
CULTIVAR B                   0         1
INNOC CON                 0.33333   0.33333
INNOC DEA                 0.33333   0.33333
INNOC LIV                 0.33333   0.33333
CULTIVAR*INNOC A CON      0.33333      0
CULTIVAR*INNOC A DEA      0.33333      0
CULTIVAR*INNOC A LIV      0.33333      0
CULTIVAR*INNOC B CON         0      0.33333
CULTIVAR*INNOC B DEA         0      0.33333
CULTIVAR*INNOC B LIV         0      0.33333

Coefficients for INNOC Least Squares Means

Parameter                 Row 1   Row 2   Row 3
INTERCEPT                   1       1       1
CULTIVAR A                 0.5     0.5     0.5
CULTIVAR B                 0.5     0.5     0.5
INNOC CON                   1       0       0
INNOC DEA                   0       1       0
INNOC LIV                   0       0       1
CULTIVAR*INNOC A CON       0.5      0       0
CULTIVAR*INNOC A DEA        0      0.5      0
CULTIVAR*INNOC A LIV        0       0      0.5
CULTIVAR*INNOC B CON       0.5      0       0
CULTIVAR*INNOC B DEA        0      0.5      0
CULTIVAR*INNOC B LIV        0       0      0.5

Coefficients for CULTIVAR*INNOC Least Squares Means

Parameter                 Row 1   Row 2   Row 3   Row 4   Row 5   Row 6
INTERCEPT                   1       1       1       1       1       1
CULTIVAR A                  1       1       1       0       0       0
CULTIVAR B                  0       0       0       1       1       1
INNOC CON                   1       0       0       1       0       0
INNOC DEA                   0       1       0       0       1       0
INNOC LIV                   0       0       1       0       0       1
CULTIVAR*INNOC A CON        1       0       0       0       0       0
CULTIVAR*INNOC A DEA        0       1       0       0       0       0
CULTIVAR*INNOC A LIV        0       0       1       0       0       0
CULTIVAR*INNOC B CON        0       0       0       1       0       0
CULTIVAR*INNOC B DEA        0       0       0       0       1       0
CULTIVAR*INNOC B LIV        0       0       0       0       0       1

Least Squares Means

Level                     LSMEAN    Std. Error    DDF      T     Pr > |T|
CULTIVAR A               30.1000      0.6952     4.97   43.30     0.0001
CULTIVAR B               30.7333      0.6952     4.97   44.21     0.0001
INNOC CON                27.9625      0.6407     4.06   43.65     0.0001
INNOC DEA                29.9500      0.6407     4.06   46.75     0.0001
INNOC LIV                33.3375      0.6407     4.06   52.04     0.0001
CULTIVAR*INNOC A CON     27.9000      0.7752     7.5    35.99     0.0001
CULTIVAR*INNOC A DEA     29.2500      0.7752     7.5    37.73     0.0001
CULTIVAR*INNOC A LIV     33.1500      0.7752     7.5    42.76     0.0001
CULTIVAR*INNOC B CON     28.0250      0.7752     7.5    36.15     0.0001
CULTIVAR*INNOC B DEA     30.6500      0.7752     7.5    39.54     0.0001
CULTIVAR*INNOC B LIV     33.5250      0.7752     7.5    43.25     0.0001
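Because the design is balanced, the least squares means in the table are simple averages of cell means. The sketch below recomputes them from the 24 yields in the data listing; the assumption that the observations are ordered by block, then cultivar, then inoculant is taken from that listing.

```python
# Cell means and least squares means computed directly from the 24 observations.
# Because the design is balanced, each LS mean is an average of cell means.
yields = [27.4, 29.7, 34.5, 29.4, 32.5, 34.4,
          28.9, 28.7, 33.4, 28.7, 32.4, 36.4,
          28.6, 29.7, 32.9, 27.2, 29.1, 32.6,
          26.7, 28.9, 31.8, 26.8, 28.6, 30.7]
cultivars = ["A", "B"]
innocs = ["CON", "DEA", "LIV"]

# The data are ordered by block, then cultivar, then inoculant.
rows = []
i = 0
for block in range(1, 5):
    for c in cultivars:
        for k in innocs:
            rows.append((block, c, k, yields[i]))
            i += 1


def mean(values):
    values = list(values)
    return sum(values) / len(values)


cell = {(c, k): mean(y for _, cc, kk, y in rows if (cc, kk) == (c, k))
        for c in cultivars for k in innocs}
lsm_cultivar = {c: mean(cell[c, k] for k in innocs) for c in cultivars}
lsm_innoc = {k: mean(cell[c, k] for c in cultivars) for k in innocs}

for key, value in cell.items():
    print(key, round(value, 4))
print({c: round(v, 4) for c, v in lsm_cultivar.items()})
print({k: round(v, 4) for k, v in lsm_innoc.items()})
```

The standard errors and degrees of freedom in the table depend on the variance component estimates and are not reproduced by this sketch.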

Differences of Least Squares Means

Level 1      Level 2      Difference   Std. Error    DDF       T     Pr > |T|
CULT A       CULT B         -0.6333     0.72571       3     -0.87     0.4471
IN CON       IN DEA         -1.9875     0.41994      12     -4.73     0.0005
IN CON       IN LIV         -5.3750     0.41994      12    -12.80     0.0001
IN DEA       IN LIV         -3.3875     0.41994      12     -8.07     0.0001
A CON        A DEA          -1.3500     0.59389      12     -2.27     0.0422
A CON        A LIV          -5.2500     0.59389      12     -8.84     0.0001
A CON        B CON          -0.1250     0.87281     5.98    -0.14     0.8908
A CON        B DEA          -2.7500     0.87281     5.98    -3.15     0.0199
A CON        B LIV          -5.6250     0.87281     5.98    -6.44     0.0007
A DEA        A LIV          -3.9000     0.59389      12     -6.57     0.0001
A DEA        B CON           1.2250     0.87281     5.98     1.40     0.2102
A DEA        B DEA          -1.4000     0.87281     5.98    -1.60     0.1600
A DEA        B LIV          -4.2750     0.87281     5.98    -4.90     0.0027
A LIV        B CON           5.1250     0.87281     5.98     5.87     0.0011
A LIV        B DEA           2.5000     0.87281     5.98     2.86     0.0288
A LIV        B LIV          -0.3750     0.87281     5.98    -0.43     0.6825
B CON        B DEA          -2.6250     0.59389      12     -4.42     0.0008
B CON        B LIV          -5.5000     0.59389      12     -9.26     0.0001
B DEA        B LIV          -2.8750     0.59389      12     -4.84     0.0004

General Linear Models Procedure

Dependent Variable: YIELD

Source             DF   Sum of Squares   Mean Square   F Value   Pr > F
Model              11       157.208         14.292       20.26   0.0001
Error              12         8.465          0.705
Corrected Total    23       165.673

Source             DF    Type I SS    Mean Square   F Value   Pr > F
CULTIVAR            1      2.40667       2.40667       3.41   0.0895
BLOCK               3     25.32000       8.44000      11.96   0.0006
BLOCK*CULTIVAR      3      9.48000       3.16000       4.48   0.0249
INNOC               2    118.17583      59.08792      83.76   0.0001
CULTIVAR*INNOC      2      1.82583       0.91292       1.29   0.3098

Source             DF   Type III SS   Mean Square   F Value   Pr > F
CULTIVAR            1      2.40667       2.40667       3.41   0.0895
BLOCK               3     25.32000       8.44000      11.96   0.0006
BLOCK*CULTIVAR      3      9.48000       3.16000       4.48   0.0249
INNOC               2    118.17583      59.08792      83.76   0.0001
CULTIVAR*INNOC      2      1.82583       0.91292       1.29   0.3098
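The four distinct standard errors in the table of differences follow from which random effects cancel in each comparison under the split-plot model. The sketch below evaluates the corresponding variance formulas with the REML estimates for the residual and BLOCK*CULTIVAR components; the variable names are illustrative, and the formulas assume the balanced design of this example.

```python
import math

# REML variance component estimates from the PROC MIXED output.
s2_e, s2_w = 0.70542, 0.81819
r, a, b = 4, 2, 3   # blocks, cultivars, inoculants

# Two inoculants averaged over cultivars: block and whole-plot effects cancel.
se_innoc = math.sqrt(2 * s2_e / (r * a))
# Two inoculants within the same cultivar: same whole plots, only sub-plot error remains.
se_within_cultivar = math.sqrt(2 * s2_e / r)
# Two cultivars averaged over inoculants: whole-plot error enters.
se_cultivar = math.sqrt(2 * (s2_e + b * s2_w) / (r * b))
# Two cultivars at given inoculant levels: different whole plots are compared.
se_between_cultivar = math.sqrt(2 * (s2_e + s2_w) / r)

print(round(se_innoc, 5), round(se_within_cultivar, 5),
      round(se_cultivar, 5), round(se_between_cultivar, 5))
```

Comparisons that involve different whole plots combine two variance components, which is why their degrees of freedom (3 and 5.98) differ from the 12 sub-plot error degrees of freedom.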

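Source            Type III Expected Mean Square
CULTIVAR          Var(Error) + 3 Var(BLOCK*CULTIVAR) + Q(CULTIVAR,CULTIVAR*INNOC)
BLOCK             Var(Error) + 3 Var(BLOCK*CULTIVAR) + 6 Var(BLOCK)
BLOCK*CULTIVAR    Var(Error) + 3 Var(BLOCK*CULTIVAR)
INNOC             Var(Error) + Q(INNOC,CULTIVAR*INNOC)
CULTIVAR*INNOC    Var(Error) + Q(CULTIVAR*INNOC)

Tests of Hypotheses using the Type III MS for BLOCK*CULTIVAR as an error term

Source      DF   Type III SS   Mean Square   F Value   Pr > F
CULTIVAR     1      2.40667       2.40667       0.76   0.4471
BLOCK        3     25.32000       8.44000       2.67   0.2206

Standard errors for sample means:

Cultivars:

$$Var(\bar{Y}_{i\cdot\cdot}) = Var\left( \frac{1}{rb} \sum_{j=1}^{r} \sum_{k=1}^{b} (\mu + \alpha_i + \beta_j + \gamma_{ij} + \tau_k + \delta_{ik} + e_{ijk}) \right)$$

$$= Var\left( \frac{1}{r} \sum_{j=1}^{r} \beta_j \right) + Var\left( \frac{1}{r} \sum_{j=1}^{r} \gamma_{ij} \right) + Var\left( \frac{1}{rb} \sum_{j=1}^{r} \sum_{k=1}^{b} e_{ijk} \right)$$

$$= \frac{\sigma^2_\beta}{r} + \frac{\sigma^2_w}{r} + \frac{\sigma^2_e}{rb}$$

$$= \frac{\sigma^2_e + b\sigma^2_w + b\sigma^2_\beta}{rb}$$

Estimation:

$$\hat{\sigma}^2_e = MS_{error} = 0.7054$$

$$\hat{\sigma}^2_w = \frac{MS_{block \times cult} - MS_{error}}{3} = 0.8182$$

$$\hat{\sigma}^2_\beta = \frac{MS_{blocks} - MS_{block \times cult}}{6} = 0.8800$$

$$\widehat{Var}(\bar{Y}_{i\cdot\cdot}) = \frac{\hat{\sigma}^2_e + b\hat{\sigma}^2_w + b\hat{\sigma}^2_\beta}{rb} = \frac{1}{rb}\left[ \frac{a-1}{a}\, MS_{block \times cult} + \frac{1}{a}\, MS_{blocks} \right] = 0.48333 \quad (\text{and } S_{\bar{Y}_{i\cdot\cdot}} = 0.6952)$$

with

$$d.f. = \frac{\left[ \frac{a-1}{a}\, MS_{block \times cult} + \frac{1}{a}\, MS_{blocks} \right]^2}{\dfrac{\left[ \frac{a-1}{a}\, MS_{block \times cult} \right]^2}{(a-1)(r-1)} + \dfrac{\left[ \frac{1}{a}\, MS_{blocks} \right]^2}{r-1}} = 4.97$$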
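The estimates and the Satterthwaite degrees of freedom above can be reproduced with a few lines of arithmetic. This is a minimal sketch using the mean squares from the ANOVA table; the variable names are chosen here for illustration.

```python
import math

# Mean squares from the ANOVA table for Example 10.3.
ms_blocks, ms_block_cult, ms_error = 8.44, 3.16, 0.7054
r, a, b = 4, 2, 3   # blocks, cultivars, inoculants

# Method-of-moments (ANOVA) estimates obtained by equating mean squares
# to their expectations.
s2_e = ms_error
s2_w = (ms_block_cult - ms_error) / b
s2_beta = (ms_blocks - ms_block_cult) / (a * b)

# Estimated variance of a cultivar mean, written as a linear combination
# of mean squares.
c1, c2 = (a - 1) / a, 1 / a
combo = c1 * ms_block_cult + c2 * ms_blocks
var_mean = combo / (r * b)
se_mean = math.sqrt(var_mean)

# Satterthwaite approximation to the degrees of freedom of that combination.
df = combo ** 2 / ((c1 * ms_block_cult) ** 2 / ((a - 1) * (r - 1))
                   + (c2 * ms_blocks) ** 2 / (r - 1))

print(round(s2_e, 4), round(s2_w, 4), round(s2_beta, 4))
print(round(var_mean, 5), round(se_mean, 4), round(df, 2))
```

The resulting standard error and degrees of freedom match the values reported for the cultivar least squares means.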

Example 10.4: Repeated Measures

In an exercise therapy study, subjects were assigned to one of three weightlifting programs:

- (i=1) The number of repetitions of weightlifting was increased as subjects became stronger (RI)
- (i=2) The amount of weight was increased as subjects became stronger (WI)
- (i=3) Subjects did not participate in weightlifting (XCont)

Measurements of strength (Y) were taken on days 2, 4, 6, 8, 10, 12 and 14 for each subject.

Source: Littell, Freund, and Spector (1991) SAS System for Linear Models

Data: weight2.dat
SAS code: weight2.sas
S-PLUS code: weight2.spl

Mixed model:

$$Y_{ijk} = \mu + \alpha_i + S_{ij} + \tau_k + \gamma_{ik} + e_{ijk}$$

where

- $Y_{ijk}$ is the strength measurement at the k-th time point for the j-th subject in the i-th program
- $\alpha_i$ is a "fixed" program effect
- $S_{ij}$ is a random subject effect
- $\tau_k$ is a "fixed" time effect
- $\gamma_{ik}$ is a "fixed" program × time interaction effect
- $e_{ijk}$ is a random error

and the random effects are all independent, with

$$S_{ij} \sim NID(0, \sigma^2_S) \qquad e_{ijk} \sim NID(0, \sigma^2_e)$$

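Average strength after 2k days on the i-th program is

$$\mu_{ik} = E(Y_{ijk}) = E(\mu + \alpha_i + S_{ij} + \tau_k + \gamma_{ik} + e_{ijk})$$

$$= \mu + \alpha_i + E(S_{ij}) + \tau_k + \gamma_{ik} + E(e_{ijk})$$

$$= \mu + \alpha_i + \tau_k + \gamma_{ik}$$

for $i = 1, 2, 3$ and $k = 1, 2, \ldots, 7$.

The variance of any single observation is

$$Var(Y_{ijk}) = Var(\mu + \alpha_i + S_{ij} + \tau_k + \gamma_{ik} + e_{ijk}) = Var(S_{ij} + e_{ijk}) = Var(S_{ij}) + Var(e_{ijk}) = \sigma^2_S + \sigma^2_e$$

Correlation between observations taken on the same subject:

$$Cov(Y_{ijk}, Y_{ij\ell}) = Cov(\mu + \alpha_i + S_{ij} + \tau_k + \gamma_{ik} + e_{ijk},\; \mu + \alpha_i + S_{ij} + \tau_\ell + \gamma_{i\ell} + e_{ij\ell})$$

$$= Cov(S_{ij} + e_{ijk},\; S_{ij} + e_{ij\ell})$$

$$= Cov(S_{ij}, S_{ij}) + Cov(S_{ij}, e_{ij\ell}) + Cov(e_{ijk}, S_{ij}) + Cov(e_{ijk}, e_{ij\ell})$$

$$= Var(S_{ij}) = \sigma^2_S \quad \text{for } k \neq \ell .$$

The correlation between $Y_{ijk}$ and $Y_{ij\ell}$ is

$$\frac{\sigma^2_S}{\sigma^2_S + \sigma^2_e}$$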
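The within-subject correlation depends only on the relative sizes of the two variance components. The short sketch below evaluates it for a few hypothetical pairs of values (none of these numbers come from the weightlifting data); the function name is illustrative.

```python
def intraclass_correlation(s2_subject, s2_error):
    """Correlation between two measurements on the same subject
    under the random-subject-effect model."""
    return s2_subject / (s2_subject + s2_error)


# Hypothetical variance components: (subject variance, error variance).
for s2_S, s2_e in [(1.0, 9.0), (5.0, 5.0), (9.0, 1.0)]:
    rho = intraclass_correlation(s2_S, s2_e)
    print("sigma_S^2 =", s2_S, " sigma_e^2 =", s2_e, " correlation =", rho)
```

When subject-to-subject variation dominates, repeated measurements on a subject are highly correlated; the model always gives a non-negative correlation that is the same for every pair of time points.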

Observations taken on different subjects are uncorrelated.

For the set of observations taken on a single subject, we have

$$Var\left( \begin{bmatrix} Y_{ij1} \\ Y_{ij2} \\ \vdots \\ Y_{ij7} \end{bmatrix} \right) = \begin{bmatrix} \sigma^2_e + \sigma^2_S & \sigma^2_S & \cdots & \sigma^2_S \\ \sigma^2_S & \sigma^2_e + \sigma^2_S & \cdots & \sigma^2_S \\ \vdots & \vdots & \ddots & \vdots \\ \sigma^2_S & \sigma^2_S & \cdots & \sigma^2_e + \sigma^2_S \end{bmatrix} = \sigma^2_e I + \sigma^2_S J$$

where $J$ is a matrix of ones.

This covariance structure is called compound symmetry.

Write this model in the form

$$\mathbf{Y} = X\boldsymbol{\beta} + Z\mathbf{u} + \mathbf{e}$$

where

- $\mathbf{Y} = (Y_{111}, Y_{112}, \ldots, Y_{117}, Y_{121}, \ldots, Y_{127}, \ldots, Y_{211}, \ldots, Y_{3,n_3,1}, \ldots, Y_{3,n_3,7})^T$ stacks the seven measurements for each subject
- the row of $X$ for $Y_{ijk}$ contains a 1 for the intercept, three indicator columns for the program (a 1 in column $i$), seven indicator columns for the time point (a 1 in column $k$), and 21 columns for the interaction (a 1 in the column for the pair $(i, k)$)
- $\boldsymbol{\beta} = (\mu, \alpha_1, \alpha_2, \alpha_3, \tau_1, \ldots, \tau_7, \gamma_{11}, \gamma_{12}, \ldots, \gamma_{37})^T$
- $Z$ has one column for each subject, with ones in the seven rows for that subject and zeros elsewhere
- $\mathbf{u} = (S_{11}, S_{12}, \ldots, S_{3,n_3})^T$
- $\mathbf{e} = (e_{111}, e_{112}, \ldots, e_{117}, e_{121}, \ldots, e_{3,n_3,7})^T$

In this case:

$$R = Var(\mathbf{e}) = \sigma^2_e I_{(7r)\times(7r)}$$

$$G = Var(\mathbf{u}) = \sigma^2_S I_{r \times r}$$

where r = number of subjects, and

$$\Sigma = Var(\mathbf{Y}) = ZGZ^T + R = \sigma^2_e I_{(7r)\times(7r)} + I_{r \times r} \otimes (\sigma^2_S J_{7 \times 7})$$
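The block-diagonal structure of $\Sigma = ZGZ^T + R$ is easy to see in a toy case. The sketch below builds $Z$, $G$ and $R$ for two subjects with three time points each (the example in the text has seven time points), using hypothetical variance components and a plain-Python matrix product written for illustration.

```python
# Build Sigma = Z G Z^T + R for a toy version of the model: r = 2 subjects
# with t = 3 time points each. The variance components are hypothetical.
r, t = 2, 3
s2_S, s2_e = 9.0, 1.0
n = r * t

# Z has one indicator column per subject (ones in that subject's t rows).
Z = [[1.0 if row // t == j else 0.0 for j in range(r)] for row in range(n)]
G = [[s2_S if i == j else 0.0 for j in range(r)] for i in range(r)]
R = [[s2_e if i == j else 0.0 for j in range(n)] for i in range(n)]


def matmul(A, B):
    """Plain-Python matrix product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


Zt = [list(col) for col in zip(*Z)]
ZGZt = matmul(matmul(Z, G), Zt)
Sigma = [[ZGZt[i][j] + R[i][j] for j in range(n)] for i in range(n)]

for row in Sigma:
    print(row)
```

Each subject contributes one compound-symmetric block on the diagonal, and the off-diagonal blocks are zero because observations on different subjects are uncorrelated.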

/* SAS code for analyzing repeated measures data
   across time (longitudinal studies). This code
   is applied to the weightlifting data from
   Littell et al. (1991). This code is stored in
   the file weight2.sas */

data set1;
  infile '/home/kkoehler/st511/weight2.dat';
  input subj program$ s1 s2 s3 s4 s5 s6 s7;
  if program='XCONT' then cprogram=3;
  if program='RI' then cprogram=1;
  if program='WI' then cprogram=2;
run;

/* Create a data file where responses at different
   time points are on different lines */

data set2;
  set set1;
  time=2; strength=s1; output;
  time=4; strength=s2; output;
  time=6; strength=s3; output;
  time=8; strength=s4; output;
  time=10; strength=s5; output;
  time=12; strength=s6; output;
  time=14; strength=s7; output;
  keep subj program cprogram time strength;
run;

/* Create a profile plot with time on the horizontal axis */

proc sort data=set2; by cprogram time;
run;

proc means data=set2 noprint;
  by cprogram time;
  var strength;
  output out=seta mean=strength;
run;

filename graffile pipe 'lpr -Dpostscript';
goptions gsfmode=replace gsfname=graffile cback=white
         colors=(black) targetdevice=ps300 rotate=landscape;

axis1 label=(f=swiss h=2.5)
      value=(f=swiss h=2.0) w=3.0
      length= 5.5 in;

axis2 label=(f=swiss h=2.2 a=270 r=90)
      value=(f=swiss h=2.0) w= 3.0 length = 4.2 in;

SYMBOL1 V=circle H=2.0 w=3 l=1 i=join ;
SYMBOL2 V=diamond H=2.0 w=3 l=3 i=join ;
SYMBOL3 V=square H=2.0 w=3 l=9 i=join ;

proc gplot data=seta;
  plot strength*time=cprogram / vaxis=axis2 haxis=axis1;
  title H=3.0 F=swiss "Observed Strength Means";
  label strength=' ';
  label time = 'Time (days) ';
run;
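The data step that creates set2 converts one "wide" record per subject into seven "long" records, one per measurement day. The same reshaping can be sketched in Python; the record below is hypothetical and the field names simply mirror the SAS variable names.

```python
# Reshape one wide record (s1..s7) into seven long records, mirroring the
# SAS data step that creates set2. The record below is hypothetical.
wide = {"subj": 1, "program": "RI", "s": [80, 82, 84, 85, 86, 87, 88]}

# Numeric program code, as in the SAS code: RI=1, WI=2, XCONT=3.
cprogram = {"RI": 1, "WI": 2, "XCONT": 3}[wide["program"]]

# Measurements were taken on days 2, 4, ..., 14.
long_rows = [{"subj": wide["subj"], "program": wide["program"],
              "cprogram": cprogram, "time": 2 * (k + 1), "strength": s}
             for k, s in enumerate(wide["s"])]

for row in long_rows:
    print(row)
```

The long layout is what PROC MIXED needs, since each row then carries a single response together with its subject, program and time identifiers.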

/* Fit the standard mixed model with a
   compound symmetric covariance structure
   by specifying a random subject effect */

proc mixed data=set2;
  class program subj time;
  model strength = program time program*time / ddfm=satterth;
  random subj(program) / cl;
  lsmeans program / pdiff tdiff;
  lsmeans time / pdiff tdiff;
  lsmeans program*time / slice=time pdiff tdiff;
run;

/* Use the GLM procedure in SAS to get formulas for
   expectations of mean squares. */

proc glm data=set2;
  class program subj time;
  model strength = program subj(program) time program*time;
  random subj(program);
  lsmeans program / pdiff tdiff;
  lsmeans time / pdiff tdiff;
  lsmeans program*time / slice=time pdiff tdiff;
run;

/* Fit the same model with the repeated statement.
   Here the compound symmetry covariance structure
   is selected. */

proc mixed data=set2;
  class program subj time;
  model strength = program time program*time;
  repeated / type=cs sub=subj(program) r rcorr;
run;

/* Fit a model with the same fixed effects,
   but change the covariance structure to
   an AR(1) model */

proc mixed data=set2;
  class program subj time;
  model strength = program time program*time;
  repeated / type=ar(1) sub=subj(program) r rcorr;
run;
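The covariance structures selected with the repeated statement differ in how the within-subject correlation depends on the pair of time points. The sketch below builds the implied correlation matrices for compound symmetry, AR(1), and a power-of-distance structure for the measurement days in this example; the value of rho is hypothetical, and the power structure is written here as rho raised to the separation in days, which is an assumption about the parameterization.

```python
# Correlation matrices implied by three covariance structures, for the
# measurement days 2, 4, ..., 14. The value of rho is hypothetical.
days = [2, 4, 6, 8, 10, 12, 14]
t = len(days)
rho = 0.8

# Compound symmetry: the same correlation for every pair of time points.
cs = [[1.0 if k == l else rho for l in range(t)] for k in range(t)]

# AR(1): correlation decays with the number of time steps between measurements.
ar1 = [[rho ** abs(k - l) for l in range(t)] for k in range(t)]

# Power of distance: correlation decays with the actual separation in days,
# so the structure remains usable when time points are unequally spaced.
sp_pow = [[rho ** abs(days[k] - days[l]) for l in range(t)] for k in range(t)]

print("first row, CS:    ", [round(v, 3) for v in cs[0]])
print("first row, AR(1): ", [round(v, 3) for v in ar1[0]])
print("first row, power: ", [round(v, 3) for v in sp_pow[0]])
```

With equally spaced days the AR(1) and power structures describe the same family of matrices under different parameterizations; an unstructured (type=un) matrix would instead estimate every variance and covariance separately.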

/* Fit a model with the same fixed effects,
   but use an arbitrary covariance matrix
   for repeated measures on the same subject. */

proc mixed data=set2;
  class program subj time;
  model strength = program time program*time;
  repeated / type=un sub=subj(program) r rcorr;
run;

/* Fit a model with linear and quadratic
   trends across time and different trends
   across time for different programs. */

proc mixed data=set2;
  class program subj;
  model strength = program time time*program
        time*time time*time*program / htype=1;
  repeated / type=ar(1) sub=subj(program);
run;

/* By removing the automatic intercept and adding
   the solution option to the model statement, the
   estimates of the parameters in the model are obtained.
   Here we use a power function model for the covariance
   structure. This is a generalization of the AR(1)
   covariance structure that can be used with unequally
   spaced time points. */

proc mixed data=set2;
  class program subj;
  model strength = program time*program time*time*program
        / noint solution htype=1;
  repeated / type=sp(pow)(time) sub=subj(program) r rcorr;
run;

/*

/*

Fit a model with linear, quadratic and

cubic trends across time and different

trends across time for different programs. */