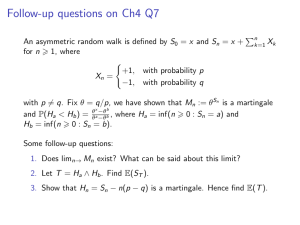

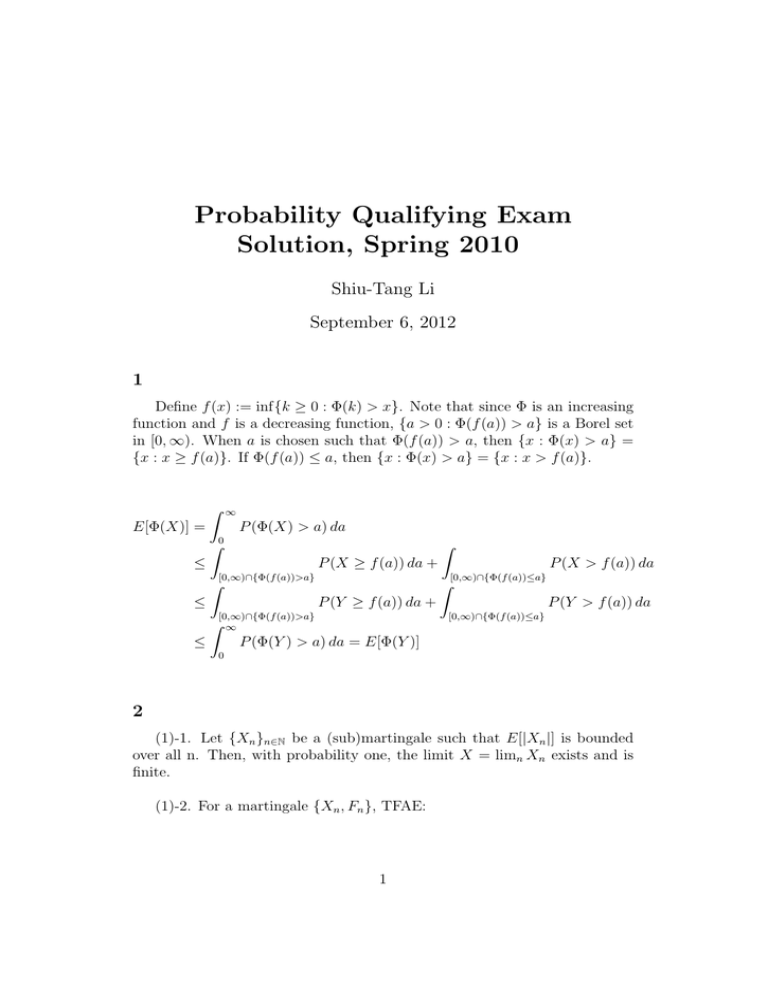

Probability Qualifying Exam Solution, Spring 2010 Shiu-Tang Li September 6, 2012


1

Define $f(x) := \inf\{k \ge 0 : \Phi(k) > x\}$. Since $\Phi$ is increasing and $f$ is nondecreasing, the composition $\Phi \circ f$ is monotone and hence Borel measurable, so $\{a > 0 : \Phi(f(a)) > a\}$ is a Borel set in $[0, \infty)$. When $a$ is such that $\Phi(f(a)) > a$, we have $\{x : \Phi(x) > a\} = \{x : x \ge f(a)\}$. If $\Phi(f(a)) \le a$, then $\{x : \Phi(x) > a\} = \{x : x > f(a)\}$.

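$$
\begin{aligned}
E[\Phi(X)] &= \int_0^\infty P(\Phi(X) > a)\,da \\
&\le \int_{[0,\infty)\cap\{\Phi(f(a))>a\}} P(X \ge f(a))\,da + \int_{[0,\infty)\cap\{\Phi(f(a))\le a\}} P(X > f(a))\,da \\
&\le \int_{[0,\infty)\cap\{\Phi(f(a))>a\}} P(Y \ge f(a))\,da + \int_{[0,\infty)\cap\{\Phi(f(a))\le a\}} P(Y > f(a))\,da \\
&\le \int_0^\infty P(\Phi(Y) > a)\,da = E[\Phi(Y)].
\end{aligned}
$$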

2

(1)-1. Let $\{X_n\}_{n\in\mathbb{N}}$ be a (sub)martingale such that $E[|X_n|]$ is bounded over all $n$. Then, with probability one, the limit $X = \lim_n X_n$ exists and is finite.

(1)-2. For a martingale $\{X_n, \mathcal{F}_n\}$, the following are equivalent:

(i) It is uniformly integrable.

(ii) It converges to $X'$ a.s. and in $L^1$, and $X_n = E[X'|\mathcal{F}_n]$.

(iii) It converges to $X'$ in $L^1$, and $X_n = E[X'|\mathcal{F}_n]$.

(iv) There is some $X \in L^1$ such that $X_n = E[X|\mathcal{F}_n]$.

(Note that the $X'$ in (ii) and (iii) is the same random variable, but it may differ from the $X$ in (iv).)

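(2) For each $n$, $E[E[Z|\mathcal{F}_n]|\mathcal{F}_{n-1}] = E[Z|\mathcal{F}_{n-1}]$ by the definition of conditional expectation, and hence $\{E[Z|\mathcal{F}_n], \mathcal{F}_n\}$ is a martingale. We observe that $X = E[Z|\mathcal{F}_\infty]$ satisfies $E[Z|\mathcal{F}_n] = E[E[Z|\mathcal{F}_\infty]|\mathcal{F}_n]$, where $\mathcal{F}_\infty := \bigvee_{n=1}^\infty \mathcal{F}_n$, so by Doob's martingale convergence theorem $E[Z|\mathcal{F}_n] \to Y$ a.s. and in $L^1$ for some $Y$, and $E[Y|\mathcal{F}_n] = E[Z|\mathcal{F}_n]$ by the statements of the martingale convergence theorem in (1)-2. We claim that $Y = E[Z|\mathcal{F}_\infty]$.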

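For any $A \in \bigcup_{n=1}^\infty \mathcal{F}_n$, we have $\int_A Y\,dP = \int_A Z\,dP$. The set $\{B : \int_B Y\,dP = \int_B Z\,dP\}$ is a λ-system (the monotone class property follows from the Lebesgue dominated convergence theorem), and it contains $\bigcup_{n=1}^\infty \mathcal{F}_n$, which is a π-system that generates $\mathcal{F}_\infty$. Thus, by the π-λ theorem, we have $\int_A Y\,dP = \int_A Z\,dP$ for all $A \in \mathcal{F}_\infty$. Since $Y$, as an a.s. limit of $\mathcal{F}_\infty$-measurable random variables, can be taken $\mathcal{F}_\infty$-measurable, it follows that $Y = E[Z|\mathcal{F}_\infty]$.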

3

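First note that $a + \frac{1}{\lambda}\ln n > 0$ for all $n$ large. Therefore, when $n$ is large enough, we have

$$
\begin{aligned}
P\Big(\max(X_1, \dots, X_n) \le a + \tfrac{1}{\lambda}\ln n\Big) &= \Big(1 - e^{-\lambda(a + \frac{1}{\lambda}\ln n)}\Big)^n \\
&= \Big(1 - \frac{e^{-\lambda a}}{n}\Big)^n \\
&\to e^{-e^{-\lambda a}} \quad \text{as } n \to \infty.
\end{aligned}
$$

Let $F(a) = e^{-e^{-\lambda a}}$. It is the distribution function of some r.v. $X$, and we conclude that $\max(X_1, \dots, X_n) - \frac{1}{\lambda}\ln n \Rightarrow X$.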
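As a numerical sanity check of this limit, the following Python sketch compares the exact distribution function of the shifted maximum with the limiting value $e^{-e^{-\lambda a}}$. It assumes the $X_i$ are i.i.d. Exponential($\lambda$), which is what the factor $1 - e^{-\lambda x}$ in the computation suggests; the rate `lam = 2.0`, the grid of values, and the function names are hypothetical choices made only for illustration.

```python
import math


def max_cdf(a, n, lam):
    """P(max(X_1..X_n) <= a + ln(n)/lam) for i.i.d. Exponential(lam) (assumed model)."""
    x = a + math.log(n) / lam
    if x <= 0:
        return 0.0  # the maximum of positive variables cannot be <= a non-positive level
    return (1.0 - math.exp(-lam * x)) ** n


def limit_cdf(a, lam):
    """Candidate limit distribution function exp(-exp(-lam * a))."""
    return math.exp(-math.exp(-lam * a))


lam = 2.0  # hypothetical rate, chosen only for the demonstration
for a in (-0.5, 0.0, 1.0):
    for n in (10, 1000, 100000):
        print(a, n, max_cdf(a, n, lam), limit_cdf(a, lam))
```

For each fixed `a`, the printed exact value should approach the limiting value as `n` grows.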


4

5

6

(a)

$$
\begin{aligned}
P(N = k;\ N_1 < N_2) &= P(N_1 = k;\ N_2 > k) \\
&= P(X_1 = 3, \dots, X_{k-1} = 3, X_k = 1) \\
&\quad - P(X_1 = 3, \dots, X_{k-1} = 3, X_k = 1;\ X_n \ne 2 \text{ for all } n > k) \\
&= P(X_1 = 3, \dots, X_{k-1} = 3, X_k = 1) \\
&= p_3^{k-1} p_1 \\
&= p_3^{k-1}(p_1 + p_2) \cdot (p_1 + p_1 p_3 + p_1 p_3^2 + \cdots) \\
&= P(X_1 = 3, \dots, X_{k-1} = 3, X_k \ne 3) \times \sum_{n=0}^{\infty} P(X_1 = 3, \dots, X_n = 3, X_{n+1} = 1) \\
&= P(N = k)\,P(N_1 < N_2).
\end{aligned}
$$

(b)

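$$
\begin{aligned}
E[N_1 \mid N_1 < N_2] &= \frac{E[N;\ N_1 < N_2]}{P(N_1 < N_2)} = \frac{E[N]\,P(N_1 < N_2)}{P(N_1 < N_2)} \\
&= \sum_{n=1}^{\infty} n P(N = n) = \sum_{n=1}^{\infty} n p_3^{n-1}(p_1 + p_2) = \frac{1}{1 - p_3}.
\end{aligned}
$$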

(c) Let $N_1$ be the number of trials needed for 6 to appear, $N_2$ the number of trials needed for 7 to appear, and $N_3$ the number of trials needed for any number other than 6 or 7 to appear. By (b), with $1 - p_3 = 11/36$, the conditional expected number of rolls is $\frac{1}{11/36} = 36/11$.
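The independence in (a) and the value in (c) can be checked with exact rational arithmetic. The sketch below assumes that (c) refers to the sum of two fair dice, so that a 6 has probability 5/36 and a 7 has probability 6/36 (consistent with the 11/36 above); the function names are hypothetical.

```python
from fractions import Fraction

# Assumed model: sum of two fair dice.
p1 = Fraction(5, 36)   # the sum is 6
p2 = Fraction(6, 36)   # the sum is 7
p3 = 1 - p1 - p2       # any other sum


def p_N_and_first(k):
    """P(N = k, N1 < N2): k-1 'other' outcomes followed by a 6."""
    return p3 ** (k - 1) * p1


def p_N(k):
    """P(N = k): k-1 'other' outcomes followed by a 6 or a 7."""
    return p3 ** (k - 1) * (p1 + p2)


# P(N1 < N2) = p1 * (1 + p3 + p3^2 + ...) = p1 / (1 - p3)
p_first = p1 / (1 - p3)

# Independence of {N = k} and {N1 < N2}, checked for the first few k.
independent = all(p_N_and_first(k) == p_N(k) * p_first for k in range(1, 30))

# E[N1 | N1 < N2] = E[N] = 1 / (1 - p3)
expected_rolls = 1 / (1 - p3)
print(independent, expected_rolls)
```

Using `Fraction` avoids floating-point rounding, so the equalities are tested exactly rather than approximately.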

7

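(a) The characteristic function $\varphi(t)$ of $X \sim \mathrm{Poisson}(\lambda)$ is $e^{\lambda(e^{it} - 1)}$. The ch.f. of $(X - EX)/\sqrt{\mathrm{Var}(X)}$ is $e^{\lambda(e^{it/\sqrt{\lambda}} - 1)}\, e^{-i\lambda(t/\sqrt{\lambda})}$. Replacing $\sqrt{\lambda}$ by $\lambda$ in the limit and applying L'Hôpital's rule twice, we observe that

$$
\begin{aligned}
\lim_{\lambda \to \infty} \lambda\big(e^{it/\sqrt{\lambda}} - 1 - it/\sqrt{\lambda}\big) &= \lim_{\lambda \to \infty} \frac{e^{it/\lambda} - 1 - it/\lambda}{1/\lambda^2} \\
&= \lim_{\lambda \to \infty} \frac{-it\,e^{it/\lambda}/\lambda^2 + it/\lambda^2}{-2/\lambda^3} \\
&= \lim_{\lambda \to \infty} \frac{-it\,e^{it/\lambda} + it}{-2/\lambda} \\
&= \lim_{\lambda \to \infty} \frac{(it)^2 e^{it/\lambda}/\lambda^2}{2/\lambda^2} \\
&= \frac{-t^2}{2}.
\end{aligned}
$$

As a result, $e^{\lambda(e^{it/\sqrt{\lambda}} - 1)}\, e^{-i\lambda(t/\sqrt{\lambda})} \to e^{-t^2/2}$ as $\lambda \to \infty$, and we have shown the desired weak convergence.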
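The convergence of the characteristic functions can also be observed numerically. The following Python sketch evaluates the ch.f. of the standardized Poisson variable and compares it with $e^{-t^2/2}$; the function names and the sample values of `t` and `lam` are hypothetical and serve only as an illustration.

```python
import cmath
import math


def standardized_poisson_cf(t, lam):
    """Ch.f. of (X - lam) / sqrt(lam) for X ~ Poisson(lam)."""
    s = t / math.sqrt(lam)
    return cmath.exp(lam * (cmath.exp(1j * s) - 1) - 1j * lam * s)


def normal_cf(t):
    """Ch.f. of the standard normal distribution."""
    return math.exp(-t * t / 2)


def max_gap(lam, ts=(-2.0, -0.5, 1.0, 3.0)):
    """Largest absolute difference between the two ch.f.s over a few sample points."""
    return max(abs(standardized_poisson_cf(t, lam) - normal_cf(t)) for t in ts)


for lam in (1e1, 1e3, 1e5):
    print(lam, max_gap(lam))
```

The printed gap should shrink as `lam` grows; the leading error term in the exponent is of order $t^3/\sqrt{\lambda}$.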

8

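Here $E[X_i] = \mu$ and $E[X_i^2] = \sigma^2 + \mu^2$.

$$
\begin{aligned}
&E\Bigg[\frac{1}{\binom{n}{2}^2}\Big(\sum_{1 \le i<j \le n} X_i X_j - \binom{n}{2}\mu^2\Big)^2\Bigg] \\
&= \frac{4}{n^2(n-1)^2}\Bigg(E\Big[\sum_{1 \le i<j \le n} X_i X_j \sum_{1 \le k<l \le n} X_k X_l\Big] - 2E\Big[\sum_{1 \le i<j \le n} X_i X_j\Big]\binom{n}{2}\mu^2 + \binom{n}{2}^2\mu^4\Bigg) \\
&= \frac{4}{n^2(n-1)^2}\Bigg(6E\Big[\sum_{1 \le i<j<k<l \le n} X_i X_j X_k X_l\Big] + 2E\Big[\sum_{\substack{1 \le i \le n,\ 1 \le j<k \le n \\ i \ne j,\ i \ne k}} X_i^2 X_j X_k\Big] \\
&\qquad\qquad + E\Big[\sum_{1 \le i<j \le n} X_i^2 X_j^2\Big] - 2E\Big[\sum_{1 \le i<j \le n} X_i X_j\Big]\binom{n}{2}\mu^2 + \binom{n}{2}^2\mu^4\Bigg) \\
&= \frac{4}{n^2(n-1)^2}\Bigg(6\binom{n}{4}\mu^4 + 2 \cdot 3 \cdot \binom{n}{3}\mu^2(\sigma^2 + \mu^2) + \binom{n}{2}(\sigma^2 + \mu^2)^2 - 2\binom{n}{2}^2\mu^4 + \binom{n}{2}^2\mu^4\Bigg) \\
&\to 0 \quad \text{as } n \to \infty.
\end{aligned}
$$

This proves that $\binom{n}{2}^{-1}\sum_{1 \le i<j \le n} X_i X_j \to \mu^2$ in $L^2$, and hence in probability.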
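To see the rate at which this second moment vanishes, the bracketed expression can be evaluated exactly. The Python sketch below implements the last displayed formula, reading the second-moment factor as $E[X_1^2] = \sigma^2 + \mu^2$ (an assumption about the notation), and compares it with the simplification $\binom{n}{2}^{-1}\big(2(n-2)\mu^2\sigma^2 + (\sigma^2+\mu^2)^2 - \mu^4\big)$. That simplification is not in the original solution; it follows from the identity $\binom{n-2}{2} + 2(n-2) + 1 = \binom{n}{2}$. The function names and the sample values of `mu` and `sigma2` are hypothetical.

```python
from fractions import Fraction
from math import comb


def second_moment(n, mu, sigma2):
    """E[(U_n - mu^2)^2] from the expanded formula, with U_n the average of X_i X_j over pairs."""
    m2 = sigma2 + mu ** 2          # E[X^2]
    pairs = comb(n, 2)
    total = (6 * comb(n, 4) * mu ** 4
             + 2 * 3 * comb(n, 3) * mu ** 2 * m2
             + pairs * m2 ** 2
             - 2 * pairs ** 2 * mu ** 4
             + pairs ** 2 * mu ** 4)
    return Fraction(total) / pairs ** 2


def closed_form(n, mu, sigma2):
    """Simplified version of the same quantity (derived here, not taken from the source)."""
    m2 = sigma2 + mu ** 2
    return (2 * (n - 2) * mu ** 2 * sigma2 + m2 ** 2 - mu ** 4) / Fraction(comb(n, 2))


mu, sigma2 = Fraction(3, 2), Fraction(2)   # hypothetical moments
for n in (2, 10, 100, 1000):
    print(n, float(second_moment(n, mu, sigma2)), float(closed_form(n, mu, sigma2)))
```

The simplified form makes the $O(1/n)$ decay visible directly.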


9

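Let $B, B' \in \mathcal{B}(\mathbb{R})$. Since the $X_i$ are i.i.d., the pairs $(X_1, S_n - X_1)$ and $(X_k, S_n - X_k)$ have the same joint distribution, so

$$
\begin{aligned}
\int_{\{S_n \in B\}} 1_{\{X_1 \in B'\}}\,dP &= P\big(X_1 \in B',\ X_1 + (X_2 + \cdots + X_n) \in B\big) \\
&= P\big(X_k \in B',\ X_k + (S_n - X_k) \in B\big) \\
&= \int_{\{S_n \in B\}} 1_{\{X_k \in B'\}}\,dP.
\end{aligned}
$$

We thus have

$$
\int_{\{S_n \in B\}} \sum_{j=1}^{m2^m} \frac{j}{2^m}\, 1_{\{X_1 \in [\frac{j-1}{2^m}, \frac{j}{2^m})\}}\,dP = \int_{\{S_n \in B\}} \sum_{j=1}^{m2^m} \frac{j}{2^m}\, 1_{\{X_k \in [\frac{j-1}{2^m}, \frac{j}{2^m})\}}\,dP,
$$

and letting $m \to \infty$, using the fact that $E[|X_1|] < \infty$, we have

$$
\int_{\{S_n \in B\}} X_1^+\,dP = \int_{\{S_n \in B\}} X_k^+\,dP.
$$

Similarly, we have

$$
\int_{\{S_n \in B\}} X_1^-\,dP = \int_{\{S_n \in B\}} X_k^-\,dP
$$

for any $1 \le k \le n$. Therefore,

$$
\int_{\{S_n \in B\}} X_k\,dP = \int_{\{S_n \in B\}} \frac{X_1 + \cdots + X_n}{n}\,dP,
$$

and thus $E[X_k|S_n] = \frac{S_n}{n}$.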
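The identity $E[X_k|S_n] = S_n/n$ can be verified by brute force for a small discrete example. The Python sketch below enumerates all outcomes of $n = 3$ i.i.d. variables with a deliberately asymmetric distribution; the particular `pmf`, the value of `n`, and the function name are hypothetical choices for illustration.

```python
from fractions import Fraction
from itertools import product

# Hypothetical distribution of a single X_i (values -> probabilities).
pmf = {0: Fraction(1, 2), 1: Fraction(1, 3), 4: Fraction(1, 6)}
n = 3


def conditional_mean(k, s):
    """E[X_k | S_n = s] by enumerating all outcomes (k is a 0-based index)."""
    num = Fraction(0)
    den = Fraction(0)
    for xs in product(pmf, repeat=n):
        if sum(xs) != s:
            continue
        weight = Fraction(1)
        for x in xs:
            weight *= pmf[x]      # independence: joint probability is the product
        num += xs[k] * weight
        den += weight
    return num / den


attainable_sums = sorted({sum(xs) for xs in product(pmf, repeat=n)})
ok = all(conditional_mean(k, s) == Fraction(s, n)
         for s in attainable_sums for k in range(n))
print(ok)
```

The check only conditions on values of $S_n$ that occur with positive probability, so the division is always well defined.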

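(b) Let $\mathcal{F}_k = \sigma(S_n, \dots, S_{n-k+1})$ and $M_k = \frac{S_{n-k+1}}{n-k+1}$. Then

$$
\begin{aligned}
E[M_k|\mathcal{F}_{k-1}] &= E\Big[\frac{S_{n-k+1}}{n-k+1}\,\Big|\,\sigma(S_n, \dots, S_{n-k+2})\Big] \\
&= \frac{1}{n-k+1}\,E\big[S_{n-k+1}\,\big|\,\sigma(S_{n-k+2}, X_{n-k+3}, \dots, X_n)\big] \\
&= \frac{1}{n-k+1}\,E\big[S_{n-k+1}\,\big|\,\sigma(S_{n-k+2})\big] \quad \text{since the } X_i\text{'s are independent} \\
&= \frac{1}{n-k+1}\,(n-k+1)\,\frac{S_{n-k+2}}{n-k+2} = M_{k-1},
\end{aligned}
$$

where the last line uses $E[X_i|S_{n-k+2}] = \frac{S_{n-k+2}}{n-k+2}$ for each $i \le n-k+1$, from the first part.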

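Remarks. If $\sigma(X, Y)$ is independent of $Z$, then $E[X|Y, Z] = E[X|Y]$. To see this,

$$
\begin{aligned}
\int_{\{Y \in B_1, Z \in B_2\}} E[X|Y, Z]\,dP &= \int_{\{Y \in B_1, Z \in B_2\}} X\,dP \\
&= P(Z \in B_2) \int_{\{Y \in B_1\}} X\,dP \\
&= P(Z \in B_2) \int_{\{Y \in B_1\}} E[X|Y]\,dP \\
&= \int_{\{Y \in B_1\}} E[X|Y]\,1_{\{Z \in B_2\}}\,dP \\
&= \int_{\{Y \in B_1, Z \in B_2\}} E[X|Y]\,dP.
\end{aligned}
$$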

10

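Let $a, b > 0$ and $0 < x < 1$. Let $p = \frac{1}{1-x}$ and $q = \frac{1}{x}$, so that $\frac{1}{p} + \frac{1}{q} = 1$. By Hölder's inequality,

$$
\begin{aligned}
(1 - x)\ln E|X|^a + x \ln E|X|^b &= \ln\Big(\big(E[|X|^a]\big)^{1-x}\big(E[|X|^b]\big)^{x}\Big) \\
&\ge \ln E\big[|X|^{a(1-x)}|X|^{bx}\big] = \ln E\big[|X|^{a(1-x)+bx}\big].
\end{aligned}
$$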
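The inequality says that $r \mapsto \ln E|X|^r$ is convex. The Python sketch below checks this numerically for a small discrete distribution; the support `values`, the weights `probs`, and the function names are hypothetical choices made for illustration, and a floating-point tolerance is used because the check is not exact.

```python
import math

# Hypothetical discrete distribution for X.
values = [0.5, 1.0, 3.0]
probs = [0.2, 0.5, 0.3]


def log_moment(r):
    """ln E|X|^r for the discrete distribution above."""
    return math.log(sum(p * abs(v) ** r for v, p in zip(values, probs)))


def convexity_gap(a, b, x):
    """(1-x) ln E|X|^a + x ln E|X|^b - ln E|X|^{(1-x)a + xb}; non-negative by Hölder."""
    return ((1 - x) * log_moment(a) + x * log_moment(b)
            - log_moment((1 - x) * a + x * b))


for a, b, x in [(1.0, 3.0, 0.5), (0.5, 4.0, 0.25), (2.0, 2.5, 0.9)]:
    print(a, b, x, convexity_gap(a, b, x))
```

Each printed gap should be non-negative up to rounding error.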
