Document 11243526

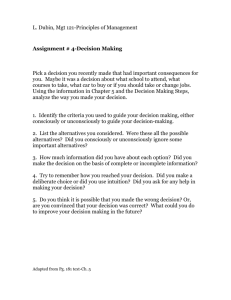
