AN ABSTRACT OF THE THESIS OF VISHNU BALCHAND JUMANI March 28, 1973


AN ABSTRACT OF THE THESIS OF

VISHNU BALCHAND JUMANI (Name) for the DOCTOR OF PHILOSOPHY (Degree) in APPLIED MATHEMATICS (Major) presented on March 28, 1973 (Date)

Title: A STUDY OF THE QUANTIZATION PROCEDURE: HIGHER ORDER INFORMATION AND THE CONDITIONS FOR MINIMUM ERROR

Abstract approved: Signature redacted for privacy. (William M. Stone)

Quantization is a non-linear operation of converting a continuous signal into a discrete one, assuming a finite number of levels N. A study is made of the quantization procedure, starting from the year 1898 to the present time. Conditions for minimum error are derived with consideration of quantization in magnitude and time. An extension of the Mehler and Carlitz formulas involving Hermite polynomials (quadrilinear case) has been created. Further, investigation is conducted toward obtaining an autocorrelation function of the output of the quantizer for Gaussian input. The method calls for the use of two different forms of the Euler-Maclaurin sum formulas and results are derived for a hard limiter, linear detector, clipper, and a smooth limiter. The method lends itself to the extension to the non-uniform case.

A Study of the Quantization Procedure:

Higher Order Information and the

Conditions for Minimum Error

by

Vishnu Balchand Jumani

A THESIS

submitted to

Oregon State University

in partial fulfillment of

the requirements for the

degree of

Doctor of Philosophy

June 1973

APPROVED:

Signature redacted for privacy.

Professor of Mathematics

In Charge of Major

Signature redacted for privacy.

Chairman of Department of Mathematics

Signature redacted for privacy.


Dean of Graduate School

Date thesis is presented

March 28, 1973

Typed by W. M. Stone for Vishnu Balchand Jumani

ACKNOWLEDGEMENT

I am thankful and will always be very grateful to my major professor, William M. Stone, for the kindness, patient help, encouragement

and guidance that I received from him during this research and my

studies in general.

I am thankful to my wife Debborah for her understanding and untiring encouragement.

I would also like to extend my appreciation to Professor Arvid T. Lonseth, Department of Mathematics, Oregon State University, for his kindness and his extension of a part of the research grant under the Atomic Energy Commission for the completion of this thesis.

I also wish to extend my appreciation to Ms. Jolan Bross, secretary in the Mathematics Department, for her assistance in this entire

project.

TABLE OF CONTENTS

Chapter                                                Page

I.    QUANTIZATION AND ITS HISTORY                        1

II.   MINIMIZING CONDITIONS                              22

III.  EXTENSIONS OF MEHLER AND CARLITZ FORMULAS          33

IV.   AUTOCORRELATION                                    39

      BIBLIOGRAPHY                                       54

      APPENDIX                                           59

A STUDY OF N-LEVEL QUANTIZATION:

HIGHER ORDER INFORMATION

AND CONDITIONS FOR MINIMAL ERROR

I. QUANTIZATION AND ITS HISTORY

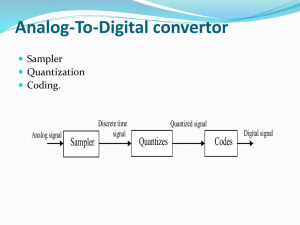

The quantization process is very widely used today in the field of communication systems. However, its origin dates back to Sheppard (1898), who derived what is known today as Sheppard's correction formula. He used the idea of breaking up the domain of the frequency function $p(x)$, which is assumed to be single-valued and continuous, into equal intervals of length $w$, that is,

$$x_{i+1} - x_i = w, \qquad i = 0, \pm 1, \pm 2, \ldots \tag{1.1}$$

He defined

$$A_i = \int_{x_i - w/2}^{x_i + w/2} p(x)\,dx = \int_{-w/2}^{w/2} p(x_i + y)\,dy \tag{1.2}$$

and by means of the Euler-Maclaurin identity arrived at his formula.

Statistical data, divided into uniform intervals of the domain, thus may show its effect on variance. In the communication field, quantization is described as a nonlinear operation, converting a continuous incoming signal into an outgoing signal that can take on a finite number of levels. This operation, which is essentially an analog to digital conversion, introduces an error. Our basic aim is to faithfully reproduce the quantizer input signal at the system output terminal. To achieve this one has to minimize the error between the quantizer input and output.

Figure (1.1) illustrates the input-output characteristic of a quantizer where $x_1, x_2, \ldots, x_N$ are the points which subdivide the input signal amplitude range into $N$ nonoverlapping intervals and $y_1, y_2, \ldots, y_N$ indicate the outputs corresponding to the respective input subintervals.

[Figure 1.1: input-output characteristic of a quantizer, output $y = Q[x]$ plotted against the input.]

This results in $N$ levels and thus the name N-level quantizer. If the interval length $x_{i+1} - x_i$ is a constant for all $i$, then we have a uniform quantizer, otherwise we have a nonuniform quantizer. Goodall and Reeves (1947) were the first to implement this idea. They guided the construction of an 8-channel transmission system. In a subsequent paper published by Black and Edson (1947) quantization, along with the number and size of levels or steps, was investigated.

Widrow (1956) showed in analog to digital conversion that if the probability density of the quantizer input signal is zero outside some bandwidth, then the amplitude density of the error signal, the difference between the input and output signal, is given by

$$p(e) = \begin{cases} \dfrac{1}{w}, & -\dfrac{w}{2} \le e \le \dfrac{w}{2}, \\[4pt] 0, & \text{elsewhere}, \end{cases} \tag{1.3}$$

where $w$ is the stepsize.

Studies were conducted concerning application of this pulse code modulation scheme involving sampling and quantization in transmission of telephone signals. Foremost in this field was Bennett (1948). His method called for quantization of the magnitude of speech signals. The selection was made, not from a continuous range of amplitudes but only from discrete ranges. The speech signal is replaced by a wave constructed of quantized values, the selection made on the basis of minimum distortion. The quantized signal is then transmitted and recovered at the receiver end, then restored to give the original message, provided the interference does not exceed half the difference between adjacent steps. He considered quantization of magnitude and time, whereby it was made possible to encode speech signals and to transmit a discrete set of magnitudes for each distinct time interval.

Consider voltage quantization in time, depicted by Figure (1.2).

[Figure 1.2: voltage quantization in time.]

The distortion resulting from the quantization process, that is, the difference between input and output, is shown in Figure (1.3), a sawtooth function.

[Figure 1.3: sawtooth error function.]

If $V$ is the voltage corresponding to any one step and $m$ the slope, then the error can be expressed as

$$e(t) = mt, \qquad -\frac{V}{2m} < t < \frac{V}{2m}, \tag{1.4}$$

and the mean square error is

$$E = \overline{e^2} = \frac{m}{V}\int_{-V/2m}^{V/2m} e^2(t)\,dt = \frac{V^2}{12}, \tag{1.5}$$

the well-known Sheppard's correction.
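As a quick numerical illustration of Equation (1.5), the sketch below averages the squared sawtooth error $e(t) = mt$ over one ramp with a midpoint rule and compares the result with $V^2/12$. The function name `sawtooth_mse`, the step count and the sample values of `V` and `m` are illustrative assumptions, not part of the thesis.

```python
def sawtooth_mse(V, m, steps=100_000):
    """Midpoint-rule estimate of (m/V) * integral of (m*t)**2 over -V/2m < t < V/2m."""
    half = V / (2.0 * m)          # half-width of one ramp on the time axis
    dt = 2.0 * half / steps
    total = 0.0
    for k in range(steps):
        t = -half + (k + 0.5) * dt
        total += (m * t) ** 2 * dt
    return (m / V) * total


if __name__ == "__main__":
    V, m = 0.25, 3.0              # assumed step voltage and slope
    print(sawtooth_mse(V, m), V ** 2 / 12.0)
```

The slope $m$ cancels in the average, so only the step size $V$ determines the mean square error.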

As mentioned by Bennett (1948), not all distortions of the original signal fall within the signal band. Higher order modulations may have frequencies quite different from those in the original signal, which can be eliminated by a sharply defined filter. It thus becomes important to calculate the spectrum of the error wave, which is possible by using the theory of correlation. This is based on the fact that the power spectrum of the wave is the Fourier cosine transform of the correlation function. At this stage we shall introduce a notation that will help us review the work of several authors since the earliest of times.

As described above, quantization is the nonlinear operation of converting a continuous signal into a discrete signal that assumes a finite number $N$ of levels. A typical input-output relationship is exhibited in Figure (1.1). The output is denoted by $y_k$ when the input signal $x$ lies in the range $x_{k-1} \le x < x_k$. In most communication systems the main problem is to reproduce the original input signal at the receiver output. The quantization process introduces a certain amount of error which is denoted by

$$e(t) = x(t) - Q[x(t)], \tag{1.6}$$

where $x(t)$ is the input signal and $Q[x(t)]$ is the characteristic of the quantizer. The continuous signal of Equation (1.6) is written as

$$e = x - Q[x] \tag{1.7}$$

for notational convenience. The mean value of a function $f$ of the error $e$ in Equation (1.6) measures the efficiency of the quantizer, defined as

$$E = \int_{-\infty}^{\infty} f(x - Q[x])\,p(x)\,dx, \tag{1.8}$$

where $p(x)$ is the amplitude probability density of the input signal $x(t)$, and $f(e) = f(x - Q[x])$ is the error function. The $f(e)$ is assumed to be a nonnegative function of its argument since it is not desired to have positive and negative instantaneous values to cancel each other.

Considering an N-level quantizer, the domain of definition is broken up into $N$ nonoverlapping subintervals. Equation (1.8) can be written in the form

$$E = \sum_{k=0}^{N-1} \int_{x_k}^{x_{k+1}} f(x - Q[x])\,p(x)\,dx, \tag{1.9}$$

where $x_0 = -\infty$ and $x_N = \infty$. If an explicit characteristic of the quantizer is defined such that

$$Q[x] = y_k, \qquad x_{k-1} \le x < x_k, \quad k = 1, 2, \ldots, N, \tag{1.10}$$

then Equation (1.9) can be written as

$$E = \sum_{k=0}^{N-1} \int_{x_k}^{x_{k+1}} f(x - y_{k+1})\,p(x)\,dx. \tag{1.11}$$

With the measure of error as indicated by Equation (1.11) Bennett (1948), Panter and Dite (1951), and Smith (1957) investigated the mean square error criterion with their error relationship given by

$$E_2 = \sum_{k=0}^{N-1} \int_{x_k}^{x_{k+1}} (x - y_{k+1})^2\,p(x)\,dx. \tag{1.12}$$

It is noted that Equation (1.12) is a special case of Equation (1.11), where $E$ is replaced by $E_2$ for notational convenience and the error function $f(x) = x^2$. For best results it is necessary to minimize $E_2$, which depends on the $2N-1$ quantizer parameters,

$$E_2 = E_2(x_1, x_2, \ldots, x_{N-1};\; y_1, y_2, \ldots, y_N). \tag{1.13}$$

The work of the three authors mentioned above dealt with the minimization of $E_2$ for large values of $N$. Bennett (1948) further discusses the fact that in the case of speech it is advantageous to taper the steps of the quantizer in such a way that finer steps would be available for weak signals. Tapered quantization is equivalent to inserting complementary nonlinear, zero-memory transducers in the signal path before and after the analog-to-digital converter, as shown in Figure (1.4).

[Figure 1.4: block diagram of the signal path; recoverable labels: Input, Transducer, Transducer, Output.]

Smith (1957) calls the same process companding. Others who have studied this area of optimum and nonoptimum transducers include Lozovoy (1961), Davis (1962), Wiggins and Branham (1963), Mann, Straube and Villars (1962).

Max (1960) works with the expression for distortion as given by Equation (1.11) and has derived conditions for the minimum of $E$ in Equation (1.11) for fixed $N$. He shows that for minimum error,

$$f(x_j - y_j) = f(x_j - y_{j+1}), \qquad j = 1, 2, \ldots, N-1, \tag{1.14a}$$

and

$$\int_{x_{j-1}}^{x_j} f'(x - y_j)\,p(x)\,dx = 0, \qquad j = 1, 2, \ldots, N. \tag{1.14b}$$

His special application of Equation (1.14) to the mean-square error, as expressed by Equation (1.13), yields

$$x_j = \frac{y_j + y_{j+1}}{2}, \qquad j = 1, 2, \ldots, N-1, \tag{1.15a}$$

and

$$y_j = \frac{\displaystyle\int_{x_{j-1}}^{x_j} x\,p(x)\,dx}{\displaystyle\int_{x_{j-1}}^{x_j} p(x)\,dx}, \qquad j = 1, 2, \ldots, N. \tag{1.15b}$$

He further considers the case of input signal of normal amplitude distribution and derives expressions for the mean-square error. He also looks into equally spaced input-output relationships, which are referred to as uniform quantization. Other authors who have studied the mean-square error in the quantization process include Panter and Dite (1951), Algazi (1966), Bluestein (1964) and Wood (1969). It should be noted here that the results derived by Max (1960) were first derived by Panter and Dite (1951), using a slightly different approach.
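The two mean-square conditions, endpoints halfway between adjacent output levels (1.15a) and output levels at the centroid of each interval (1.15b), suggest a simple fixed-point iteration. The sketch below alternates the two conditions for a unit-variance normal input. It is an illustrative procedure under assumed choices (the starting levels, the iteration count, and the names `lloyd_max_gaussian` and `mse_at_centroids`), not the computation carried out by Max (1960) or in the thesis.

```python
import math


def phi(x):
    """Standard normal density; returns 0.0 at +/- infinity."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def Phi(x):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def endpoints_from_levels(y):
    """Condition (1.15a): interior endpoints are midpoints of adjacent levels."""
    mids = [(y[j] + y[j + 1]) / 2.0 for j in range(len(y) - 1)]
    return [-math.inf] + mids + [math.inf]


def lloyd_max_gaussian(N, iterations=500):
    """Alternate (1.15a) and (1.15b) starting from assumed equally spaced levels."""
    y = [-2.0 + 4.0 * (j + 0.5) / N for j in range(N)]
    for _ in range(iterations):
        x = endpoints_from_levels(y)
        # Condition (1.15b): for the normal density the integral of x*phi(x)
        # over (a, b) equals phi(a) - phi(b).
        y = [(phi(x[j]) - phi(x[j + 1])) / (Phi(x[j + 1]) - Phi(x[j]))
             for j in range(N)]
    return endpoints_from_levels(y)[1:-1], y


def mse_at_centroids(x_inner, y):
    """Mean-square error 1 - sum(y_j^2 * P_j); valid when every y_j is a centroid."""
    x = [-math.inf] + list(x_inner) + [math.inf]
    return 1.0 - sum(y[j] ** 2 * (Phi(x[j + 1]) - Phi(x[j])) for j in range(len(y)))


if __name__ == "__main__":
    ends, levels = lloyd_max_gaussian(4)
    print(ends, levels, mse_at_centroids(ends, levels))
```

For $N = 4$ the iteration settles near the endpoints $0, \pm 0.98$ and the levels $\pm 0.45, \pm 1.51$, which agree with the values usually quoted from Max's tables.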

It is also to be noted that the procedure used to derive conditions for the minimum, as done by Max (1960), was first suggested by Garmash (1957). He concentrated his effort in minimizing the mean-square error as expressed by

$$E_2 = \sum_{k=0}^{N-1} \int_{x_k}^{x_{k+1}} (x - x_k)^2\,p(x)\,dx. \tag{1.15c}$$

In Equation (1.15c) the quantizer output $Q[x]$ is given by

$$Q[x] = x_k, \qquad x_k \le x < x_{k+1}, \quad k = 1, 2, \ldots, N-2. \tag{1.15d}$$

Differentiating Equation (1.15c) with respect to $x_k$ he was able to generate an expression

$$\Delta_{k-1}^2 = \frac{2}{p(x_k)} \int_{x_k}^{x_{k+1}} (x - x_k)\,p(x)\,dx, \qquad \text{where } \Delta_{k-1} = x_k - x_{k-1}. \tag{1.15e}$$

Roe (1964) deals with a special case of Equation (1.11), the $m$th power distortion. In Equation (1.11) he sets $f(x - y_k) = |x - y_k|^m$, giving

$$E_m = \sum_{k=0}^{N-1} \int_{x_k}^{x_{k+1}} |x - y_{k+1}|^m\,p(x)\,dx, \qquad m = 1, 2, \ldots \tag{1.16}$$

Using Max's (1960) conditions in Equation (1.14) he was able to show that for minimum $E_m$ the breakup of the input points $x_k$ for a continuously differentiable density function $p(x)$ must satisfy approximately the relationship

$$\int_0^{x_n} [p(x)]^{1/(m+1)}\,dx = 2C_1 n + C_2, \qquad n = 1, \ldots, N, \tag{1.17}$$

where $C_1$ and $C_2$ are constants which can be adjusted to give the required fit at the extreme points $x_0$ and $x_N$. Max's (1960) tabulated results for $x_k$ can be derived from Equation (1.17). Furthermore, Roe (1964) shows that for $m = 2$ and a normally distributed input, the $x_n$ must satisfy the relationship

$$x_n = \sqrt{6}\,\operatorname{erf}^{-1}\!\left(\frac{2n - N}{N + \alpha}\right), \tag{1.18}$$

where $\alpha = 0.8532\ldots$ and the error function is well-tabulated in the Annals of the Harvard Computation Laboratory, No. 23 (1952). Using Roe's (1964) result, Wood (1969) showed that $E_2$, the mean-square error as expressed by Max (1960), can be approximated as a function of $N$ by a relationship

$$E_2 = \frac{2.73\,N}{(N + 0.8532)^3}. \tag{1.19}$$
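Roe's approximate endpoints for a normal input, $x_n = \sqrt{6}\,\operatorname{erf}^{-1}[(2n - N)/(N + 0.8532)]$, and Wood's approximation $E_2 \approx 2.73\,N/(N + 0.8532)^3$ are easy to tabulate. The sketch below is a minimal illustration; the function names are assumptions, and the inverse error function is obtained from the standard library's normal quantile through the identity $\operatorname{erf}^{-1}(z) = \Phi^{-1}((1+z)/2)/\sqrt{2}$.

```python
import math
from statistics import NormalDist

ALPHA = 0.8532  # constant appearing in Equations (1.18) and (1.19)


def erfinv(z):
    """Inverse error function via the standard normal quantile function."""
    return NormalDist().inv_cdf((1.0 + z) / 2.0) / math.sqrt(2.0)


def roe_endpoints(N):
    """Approximate interior endpoints x_1 .. x_{N-1} from Equation (1.18)."""
    return [math.sqrt(6.0) * erfinv((2 * n - N) / (N + ALPHA))
            for n in range(1, N)]


def wood_mse(N):
    """Approximate minimum mean-square error from Equation (1.19)."""
    return 2.73 * N / (N + ALPHA) ** 3


if __name__ == "__main__":
    for N in (4, 8, 16, 36):
        print(N, [round(v, 4) for v in roe_endpoints(N)][:3], wood_mse(N))
```

For large $N$ the product $N^2 E_2$ from Equation (1.19) tends to 2.73, which is the $1/N^2$ behavior of the Panter and Dite (1951) asymptotic result.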

Zador (1964) generalized the Panter and Dite (1951) and the Roe (1964) results by considering multivariate distributions. Let $X = [x(1), x(2), \ldots, x(k)]$ be a random, vector-valued variable with probability measure $F(\cdot)$ defined on Lebesgue-measurable subsets of $k$-dimensional Euclidean space $E_k$ with absolutely continuous distribution function. Let $p(\cdot)$ denote the density of $X$. Let $\{R_i\}$, $1 \le i \le N$, be a set of $N$ Lebesgue measurable disjoint subsets of $E_k$, with

$$\sum_{i=1}^{N} F(R_i) = 1. \tag{1.20}$$

In this case the N-region quantizer with quantization regions $R_i$ may be regarded as a function mapping the portion of $E_k$ covered by the union of the $R_i$ onto the integers 1 to $N$ given by

$$Q(x) = i, \qquad x \in R_i. \tag{1.21}$$

This maps each $x$ into integer index $i$, $1 \le i \le N$, which labels the region $R_i$ into which $x$ falls. Quantization introduces an error in representation of $x$, since $x$ must be estimated by some function of $Q(x) = i$. Zador (1964) first showed that for $k = 1$, absolutely continuous and bounded $p$, and $0 < r < \infty$, the minimum mean $r$th error is defined by

$$E_{\min} = \frac{C_r}{N^r} \left[ \int p^{\frac{1}{1+r}}\,d\lambda \right]^{1+r}, \tag{1.22}$$

where $\lambda$ is the Lebesgue measure, $C_r$ is a known constant. The Panter and Dite (1951) result is a special case of Equation (1.22) with $r = 2$. As seen there, $C_r = C_2 = 2/3$. Zador (1964) also considered $k > 1$ and derives the general result

$$E_{\min} = C_{kr}\,N^{-r/k} \left[ \int_{E_k} p^{\frac{k}{k+r}}\,d\lambda \right]^{\frac{k+r}{k}}. \tag{1.23}$$

Here $p$ is absolutely continuous and bounded and $\lambda$ is the Lebesgue measure on $E_k$. The constants $C_{kr}$ are not known for $k > 1$.

Elias (1970) introduces a different approach in measuring the performance of a given N-level quantizer. He defines a quantizer that divides the interval $[0,1]$ of a random variable $x$ into a set of $N$ quantizing intervals, of which the $i$th has length $\Delta x_i$. He measures his quantization for particular values of $x$ as the length of the quantizing interval in which $x$ finds itself and measures the quantizer performance by the $r$th mean value of the quantizer interval length, averaged with respect to the distribution function of the random variable $x$. That is,

$$M_r(q) = \left[\,\overline{(\Delta x)^r}\,\right]^{1/r}. \tag{1.24}$$

Work done by other authors describes the quantization error, shown by Equations (1.12) and (1.16), in terms of the absolute value of the difference between $x$, the random variable being quantized, and some representative point $y(x)$ lying in the quantizing interval and not as the size of the quantizing interval. The performance of the quantizer, as described by these authors, is measured by $E_r$, the mean $r$th power of the difference as given by Equation (1.16).

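Over the interval $\Delta x = x_{k+1} - x_k$ the error is defined by

$$E_{kr} = \frac{\displaystyle\int_{x_k}^{x_{k+1}} |x - y_k|^r\,p(x)\,dx}{\displaystyle\int_{x_k}^{x_{k+1}} p(x)\,dx}. \tag{1.25}$$

Let $p(x)$ be approximated by a straight line between $x_k$ and $x_{k+1}$, so

$$p(x) \approx p(y_k) + p'(y_k)(x - y_k). \tag{1.26}$$

Then

$$E_{kr} = \frac{p(y_k)\displaystyle\int_{x_k}^{x_{k+1}} |x - y_k|^r\,dx + p'(y_k)\displaystyle\int_{x_k}^{x_{k+1}} (x - y_k)\,|x - y_k|^r\,dx}{p(y_k)\displaystyle\int_{x_k}^{x_{k+1}} dx + p'(y_k)\displaystyle\int_{x_k}^{x_{k+1}} (x - y_k)\,dx}. \tag{1.27}$$

Let $\Delta x = x_{k+1} - x_k = w$ be constant so that $y_k = x_k + w/2$; also observe

$$\int_{x_k}^{x_{k+1}} (x - y_k)\,|x - y_k|^r\,dx = 0 \tag{1.28}$$

for even distortion measure, and, of course, the second term in the denominator of Equation (1.27) has value zero. So, the straight line approximation to the density function leads finally to

$$E_{kr} = \frac{(\Delta x)^r}{2^r\,(r+1)}. \tag{1.29}$$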

The two measures related by Equations (1.24) and (1.29), for fixed $F(x)$, the distribution function associated with $x$, and increasing values of $N$, have approximately the same optimum quantizing intervals. They become better for smooth $p(x)$. Elias (1970) works with $M_r(q)$ and it is possible, by imposing smoothness conditions on $F$ and $p$, to extract results about $E_{kr}$ from Equation (1.29). But for arbitrary $F$ the generality and exactness of the results available for $M_r(q)$ seem unlikely to hold for $E_{kr}$. Comments about $M_r(q)$ and $E_{kr}$ will be made later.

To discuss details of the work conducted by Elias (1970) it is necessary to review some concepts, especially those relating to weighted means as discussed by Hardy, Littlewood and Polya (1934), and those defining what we call asymptotic optimum quantizers. The underlying definition of asymptotic optimum quantizer will be given first. Two of the first authors to discuss them were Panter and Dite (1951), who showed that the minimum mean square error attainable is asymptotic in $N$ to

$$E_2 \sim \frac{C_2}{N^2} \left[ \int p^{1/3}\,dx \right]^3. \tag{1.30}$$

Bluestein (1964) investigated asymptotically optimum quantizers with noisy, continuously varying input signal. His aim was to find such a quantizer that the performance would approach that of the zero memory as the number of levels $N$ is increased. This implies that for any $\epsilon > 0$ the error due to quantization can be made less than $\epsilon$ by making the number of quantizing levels appropriately large. Any quantizer designed to fit with the above is an asymptotically optimum quantizer.

Some definitions from page 12, Hardy, Littlewood and Polya (1934) are in order for subsequent use. Define a set of nonnegative numbers, $a = \{a_s\}$, $s = 1, 2, \ldots, n$, and, for convenience, let the summations and the products with respect to $s$ be understood to range from $s = 1$ to $s = n$. Primary definitions are then

$$M_r(a) = \begin{cases} \left[ \dfrac{1}{n} \sum a_s^r \right]^{1/r}, & r > 0 \text{ (and likewise for } r < 0 \text{ when every } a_s > 0), \\[6pt] 0, & \text{if } r < 0 \text{ and at least one of the } a_s \text{ is } 0, \end{cases} \tag{1.31}$$

and

$$G(a) = \left( \prod a_s \right)^{1/n}. \tag{1.32}$$

Clearly, $A(a) = M_1(a)$, $H(a) = M_{-1}(a)$, and $G(a)$ are the ordinary arithmetic, harmonic and geometric means of the set $a$. The case $r = 0$ is excluded but it can be shown that $M_0(a)$ is interpreted as the geometric mean.

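A more general system of mean values of the set $a$ is in terms of a set of weights $w_s$, $s = 1, 2, \ldots, n$, $w_s > 0$,

$$M_r(a,w) = \begin{cases} \left( \dfrac{\sum w_s a_s^r}{\sum w_s} \right)^{1/r}, & r > 0, \\[6pt] 0, & \text{if both } r < 0 \text{ and at least one } a_s \text{ is zero}, \end{cases} \tag{1.33}$$

and

$$G(a,w) = \left( \prod a_s^{w_s} \right)^{1/\sum w_s}. \tag{1.34}$$

Replace $w_s$ by $p_s$ with the requirement

$$\sum p_s = 1 \tag{1.35}$$

so that, finally,

$$M_r(a,p) = \begin{cases} \left( \sum p_s a_s^r \right)^{1/r}, & r > 0, \\[4pt] 0, & \text{if both } r < 0 \text{ and at least one } a_s \text{ is } 0, \end{cases} \tag{1.35a}$$

$$A(a,p) = M_1(a,p) = \sum p_s a_s, \tag{1.35b}$$

$$H(a,p) = M_{-1}(a,p) = \left( \sum p_s a_s^{-1} \right)^{-1}, \tag{1.35c}$$

$$G(a,p) = \prod a_s^{p_s}. \tag{1.35d}$$

Additional identities, such as

$$M_r(a,p) = \left[ A(a^r, p) \right]^{1/r} \qquad \text{and} \qquad G(a,p) = \exp\left[ A(\log a, p) \right],$$

etc., but the principal interest lies in Equation (1.35a).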
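The weighted means above translate directly into a few lines of code. The sketch below is an illustration with assumed function names; it takes normalized weights `p` (summing to 1), applies the zero convention for negative `r`, and treats `r = 0` as the geometric mean.

```python
import math


def geometric_mean(a, p):
    """G(a, p): product of a_s ** p_s for weights p summing to 1."""
    if any(v == 0 for v in a):
        return 0.0
    return math.exp(sum(w * math.log(v) for w, v in zip(p, a)))


def power_mean(a, p, r):
    """M_r(a, p) for nonnegative a and normalized weights p."""
    if r == 0:
        return geometric_mean(a, p)          # limiting case r -> 0
    if r < 0 and any(v == 0 for v in a):
        return 0.0                            # convention for a zero element
    return sum(w * v ** r for w, v in zip(p, a)) ** (1.0 / r)


if __name__ == "__main__":
    a = [1.0, 2.0, 4.0]
    p = [0.5, 0.25, 0.25]
    print(power_mean(a, p, -1), power_mean(a, p, 0), power_mean(a, p, 1))
```

For these sample values the harmonic, geometric and arithmetic means come out in increasing order, as the general theory of means requires.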

Returning to Elias's (1970) work, the discrete set of inputs $x_i$ are mapped into corresponding output symbols $y_i$, $1 \le i \le N$. So $x$ finds itself in the unit interval $[0,1]$ as a real number with distribution $p = F(x)$. He defines his quantizer as $q = \{(x_i, p_i)\}$, where $q$ is a set of $(N+1)$ points in the unit square with

$$x_{i-1} \le x_i, \quad p_{i-1} \le p_i, \quad 1 \le i \le N, \qquad x_0 = p_0 = 0, \quad x_N = p_N = 1. \tag{1.38}$$

We call the distribution $F$ compatible with $q$ if the graph of $p = F(x)$ passes through the $(N+1)$ points of $q$. The $\Delta x_i = x_i - x_{i-1}$ are the quantization levels and $\Delta p_i = p_i - p_{i-1}$ correspond to the probability that $x$ falls into the quantizing interval $\Delta x_i$ and is thus encoded into $y_i$. Also,

$$\sum_{i=1}^{N} \Delta x_i = \sum_{i=1}^{N} \Delta p_i = 1 \tag{1.39}$$

and $\Delta x_i + \Delta p_i > 0$ for $i = 1, \ldots, N$.

As mentioned before, the performance of the quantizer is measured by the $r$-th mean of the $\Delta x_i$. The $\Delta p_i$ may be treated as the normalized set of weights so the $M_r(a,p)$ of Equation (1.35a) comes into play,

$$M_r(q) = \left[ \sum \Delta p_i\,(\Delta x_i)^r \right]^{1/r}. \tag{1.40}$$

This may be computed from the quantizer itself with no knowledge of $F(x)$ beyond the one limitation that it has compact support, i.e., $x$ lies within the unit interval, a normalization of the range of $x$. If $x$ should have a nonfinite range, as in the case of a Gaussian variable, one or two of the quantizing intervals are not finite. For the case of uniform, nonoptimum quantization, $\Delta x_i = 1/N$, Equation (1.40) has the form

$$M_r(q) = \left[ \sum \Delta p_i\,N^{-r} \right]^{1/r} = \frac{1}{N}. \tag{1.41}$$

If $q_1$ represents an optimum quantizer then we see that it can be no worse than in Equation (1.41), or

$$N\,M_r(q_1) \le 1, \tag{1.42}$$

with equality for all $r$ and $N$ when $F(x) = x$, the uniform quantizer case. To double the number of quantizing intervals reduces the $r$th mean by half. However, it is usually possible to do better by making the $\Delta x_i$ small when the $\Delta p_i$ are large and vice versa.
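The $r$th mean quantizing interval can be computed from the endpoints and the distribution function alone. The sketch below is a minimal illustration with assumed names and an assumed example distribution $F(x) = x^2$ on $[0,1]$: the uniform quantizer gives $N M_r(q) = 1$, while a hand-picked nonuniform quantizer with shorter intervals where the probability is concentrated gives a smaller value. The nonuniform endpoints are chosen only for illustration and are not claimed to be optimum.

```python
def rth_mean_interval(xs, F, r):
    """M_r(q) for endpoints 0 = xs[0] <= ... <= xs[N] = 1 and distribution F."""
    total = 0.0
    for lo, hi in zip(xs, xs[1:]):
        dp = F(hi) - F(lo)                 # probability of the interval
        total += dp * (hi - lo) ** r       # weighted r-th power of its length
    return total ** (1.0 / r)


def F_example(x):
    """Assumed example distribution on [0, 1] with density 2x."""
    return x * x


if __name__ == "__main__":
    N, r = 4, 2
    uniform = [i / N for i in range(N + 1)]
    tapered = [0.0, 0.4, 0.65, 0.85, 1.0]  # shorter intervals near x = 1
    print(N * rth_mean_interval(uniform, F_example, r))
    print(N * rth_mean_interval(tapered, F_example, r))
```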

Equation (1.42) indicates the upper bound on $M_r(q)$. It is possible to derive a lower bound, which is exhibited by Elias (1970) as

$$I_r = \left[ \int_0^1 [f(x)]^p\,dx \right]^{1/q}, \tag{1.43}$$

where $p = \dfrac{1}{1+r}$, $q = \dfrac{r}{1+r}$, $f(x) = F'(x)$, the density of the absolutely continuous part of $F(x)$.

Before we go further we note here the definition of $M_r(q_1)$. For given $F$, $N$ and $r$, one asks how small the $r$th mean quantizing interval may be made by adjusting $\Delta x_i$ and $\Delta p_i$. A quantizer $q_1$, whose $r$th mean quantizing interval is given by

$$M_r(q_1) = \min_{\{\Delta x_i,\,\Delta p_i\}} M_r(q) \tag{1.44}$$

subject to conditions stated in Equation (1.39), is defined to be the optimum quantizer for $F$, $N$ and $r$. With Equation (1.44) one can for nonnegative $r$ and optimum quantizer $q_1$, consistent with $F$, write

$$I_r \le N\,M_r(q_1) \le 1. \tag{1.45}$$

It should be noted that in the limit $r = 0$ ($q = 0$) Equation (1.45) bounds the geometric mean of the $\Delta x_i$ as given by Equation (1.35d), while in the limit $r = \infty$ ($q = 1$) Equation (1.45) bounds the maximum value $M_\infty(q_1)$ of the $\Delta x_i$. Elias (1970a) does not stop here but goes on to establish the existence of a class $Q$ of quantizers that are asymptotically optimum as $N \to \infty$ and shows that for $q \in Q$

$$I_r \le N\,M_r(q_1) \le N\,M_r(q) \le 1. \tag{1.46}$$

He also proves that for $q \in Q$

$$\lim_{N \to \infty} N\,M_r(q) = I_r. \tag{1.47}$$

After the proof of the existence of this limit he discusses the rate of convergence of the above and shows that if certain conditions of convexity and concavity on $F$ are met then bounds can be found on the rate of convergence. This discussion on the rate of approach of $N M_r(q)$ to $I_r$ for $q \in Q$ is generalized by the consideration of a class $C_J$ of distributions $F$, where the subscript $J$ indicates the composition of $F$ of no fewer than the number $J$ of alternately convex down and convex up pieces. He goes on to show how the convergence is governed by the ratio $n = N/J$, the average number of quantizing intervals per convex domain of $F$. There is a resulting inequality for $q^* \in Q^*$

$$I_r \le N\,M_r(q^*) \le I_r \exp\left( \frac{1 + \ln n}{q\,(n-1)} \right). \tag{1.48}$$

He lists more results which are tighter than Equation (1.48) if $f$ satisfies certain conditions.

Elias (1970a) also derives results concerning the bounds on the optimum quantizer and the convergence of this quantizer, considering a multi-dimensional case. Some of Elias's (1970a) results can be mentioned in terms of Zador's (1964) multi-dimensional results. The measure of performance is the quantization error in the $t$th coordinate of $x \in R_i$, which is defined as the width $\Delta_i(x_t)$ of $R_i$ in the $t$-th coordinate. That is

$$\Delta_i(x_t) = \sup_{x \in R_i} x_t - \inf_{x \in R_i} x_t, \qquad 1 \le t \le k. \tag{1.49}$$

As before, the measure of performance of a quantizer $q$ with respect to the measure $F$ is given by $M_r(q)$, the $r$th mean of errors averaging over $k$ coordinates of all $R_i$. For equal weights and different weights over $R_i$, $M_r(q)$ in the multi-dimensional cases is given by

Mr( q)

>1

p(Ri)1/r

,

Li (xt)]

,

0 < r < OD.

(1.50)

i=1

A second measure of performance is given by

Mr(q)

=[

,

f(R1) gai)

r/k l/r

, 0< r < oo.

(1.51)

i=1

Here

X(R)

is the Lebesgue measure of

R.

in the kth dimension and

he goes on to show that

Mr(q) < M (q)

(1.52)

He has additional results analogous to those of the one-dimensional

case which are not mentioned here.

II. MINIMIZING CONDITIONS

A quantization system, or as we say, an N-level quantizer, is described by specifying the endpoints $x_k$ of the $N$ input ranges and the output level $y_k$, which correspond to each input range. Given the amplitude probability density of the input signal the probability density of the output can be determined as a function of the $x_k$ and $y_k$. If a symbol $D$ indicates the total distortion in quantization it can be expressed as

$$D = E[f(s_{in} - s_{out})] = \sum_{i=1}^{N} \int_{x_i}^{x_{i+1}} f(x - y_i)\,p(x)\,dx, \tag{2.1}$$

where $x_1$ and $x_{N+1}$ are arbitrarily large left and right and the error function $f(x)$ is defined in Chapter I, following Equation (1.8).

Indeed in the study of realistic communication problems one is interested in the minimization of $D$. Max (1960) derives the necessary conditions for the minimum value of $D$ by differentiating Equation (2.1) with respect to the $x_j$'s and the $y_j$'s and setting such derivatives equal to zero:

$$\frac{\partial D}{\partial x_j} = \left[ f(x_j - y_{j-1}) - f(x_j - y_j) \right] p(x_j) = 0, \qquad j = 2, 3, \ldots, N, \tag{2.2}$$

and

$$\frac{\partial D}{\partial y_j} = -\int_{x_j}^{x_{j+1}} f'(x - y_j)\,p(x)\,dx = 0, \qquad j = 1, 2, \ldots, N. \tag{2.3}$$

These two equations reduce to

$$f(x_j - y_{j-1}) = f(x_j - y_j), \qquad j = 2, 3, \ldots, N, \tag{2.4}$$

provided $p(x_j) \ne 0$, and

$$\int_{x_j}^{x_{j+1}} f'(x - y_j)\,p(x)\,dx = 0, \qquad j = 1, 2, \ldots, N. \tag{2.5}$$

As Max (1960) indicates these conditions are also sufficient, a statement proved by Bruce (1965) and Fleischer (1967). Max (1960) goes on to show that for $f(x) = x^2$ Equations (2.4) and (2.5) reduce to the form

$$x_j = \frac{y_j + y_{j-1}}{2}, \qquad j = 2, 3, \ldots, N, \tag{2.6}$$

and

$$\int_{x_j}^{x_{j+1}} (x - y_j)\,p(x)\,dx = 0, \qquad j = 1, 2, \ldots, N. \tag{2.7}$$

Note that Max (1960) is involved with the problem of quantizing magnitude alone. Consider Figure (1.1), which depicts the operations of interest from the initial input to the final output of the quantizer. Thus, from Equation (2.7), with the $j$th interval relabeled as $(x_{j-1}, x_j)$,

$$y_j = \frac{\displaystyle\int_{x_{j-1}}^{x_j} x\,p(x)\,dx}{\displaystyle\int_{x_{j-1}}^{x_j} p(x)\,dx}, \qquad j = 1, 2, \ldots, N. \tag{2.8}$$

If the input has the normal distribution,

$$p(x) = \varphi^{(0)}(x) = \frac{1}{\sqrt{2\pi}}\,e^{-x^2/2}, \qquad -\infty < x < \infty, \tag{2.9}$$

and the associated functions are defined as

$$\varphi^{(-1)}(x) = \int_0^x \varphi^{(0)}(y)\,dy, \qquad -\infty < x < \infty, \tag{2.10}$$

and for values of the index $n \ge 1$,

$$\varphi^{(n)}(x) = \frac{d^n}{dx^n}\,\varphi^{(0)}(x), \qquad -\infty < x < \infty, \tag{2.11}$$

then there is formed a complete set in the usual sense. That is,

$$y_j = \frac{\displaystyle\int_{x_{j-1}}^{x_j} x\,\varphi^{(0)}(x)\,dx}{\displaystyle\int_{x_{j-1}}^{x_j} \varphi^{(0)}(x)\,dx} = \frac{\varphi^{(0)}(x_{j-1}) - \varphi^{(0)}(x_j)}{\varphi^{(-1)}(x_j) - \varphi^{(-1)}(x_{j-1})}, \qquad j = 1, 2, \ldots, N. \tag{2.12}$$

Keep in mind that Equation (2.12) yields the quantization levels in terms of amplitude quantization only. Later this result is to be compared with one obtained in terms of quantization in amplitude and time.

[Figure 2.1: block diagram of the transmission chain; recoverable labels: Input, Initial, Samp., Quant., Encod., Tran., Rec., Decod., Const.]

This figure shows the stages involved in transmission of data through the channel. It is clear that this involves the operation of encoding, transmission, decoding, etc. These side operations before the final output $y_i$ is obtained involve a certain delay before $y_i$ is reproduced at the output. Denote this amount of delay by the symbol "d" (see Figure 2.2).

[Figure 2.2: timing diagram; recoverable labels: $x_j$, $y_i$, $t - t_j$, $T$ = constant sampling period, $d$ = delay.]

In the figure, $x(t)$ indicates the input signal on which N-level quantization is performed for transmission and recovery at the output terminal as $y(t)$. The N-level action calls for breaking up the initial range of $x(t)$ into $N$ intervals $(x_{i-1}, x_i)$, $i = 1, 2, \ldots, N$, with $x_0$ and $x_N$ large left and right respectively. For $x_{i-1} < x(t_j) < x_i$, $i = 1, 2, \ldots, N$, the $x(t_j)$ is obtained by comparison with the endpoints of the $i$th interval. The quantizer transmits a signal $x_i(t_j)$, where $i$ indicates the level and $t_j$ indicates the instant of time at which $x(t)$ is sampled. This means quantization with respect to both amplitude level and time. The transmitter signal $x_i(t_j)$ is reconstructed at the terminal output to yield $y_i$, a mapping defined by

$$x_i(t_j) \to y_i, \qquad x_{i-1} < x(t_j) < x_i, \quad i = 1, 2, \ldots, N-1. \tag{2.13}$$

The amount of distortion, or the expected value of the difference between input and output during the $i$th range on the time scale, is

$$D = E[x(t) - y_i(t)], \tag{2.14}$$

and the mean square distortion in the transmission of $x(t)$ by $y(t)$ is given by

$$d_i = \int_{-\infty}^{\infty} \int_{x_{i-1}}^{x_i} (x - y_i)^2\,p[x, x(t_j), \tau]\,dx(t_j)\,dx, \qquad i = 1, 2, \ldots, N, \tag{2.15}$$

where $\tau = t - t_j$ in the argument of the probability density associated with the input signal. The $t$ is the instant at which the error is analyzed.

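Averaging Equation (2.15) with respect to all the levels and the time $T$, the total distortion, using the mean square criteria, can be expressed as

$$D = \sum_{i=1}^{N} \frac{1}{T} \int_d^{d+T} \int_{-\infty}^{\infty} \int_{x_{i-1}}^{x_i} (x - y_i)^2\,p[x, x(t_j), \tau]\,dx(t_j)\,dx\,d\tau, \tag{2.16}$$

or as

$$D = \sum_{i=1}^{N} \int_{-\infty}^{\infty} \int_{x_{i-1}}^{x_i} (x - y_i)^2\,p^T[x, x(t_j)]\,dx(t_j)\,dx, \tag{2.17}$$

where

$$p^T[x, x(t_j)] = \frac{1}{T} \int_d^{d+T} p[x, x(t_j), \tau]\,d\tau. \tag{2.18}$$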

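For minimum value of $D$ require that the partial derivatives take zero value,

$$\frac{\partial D}{\partial y_i} = -2 \int_{-\infty}^{\infty} \int_{x_{i-1}}^{x_i} (x - y_i)\,p^T[x, x(t_j)]\,dx(t_j)\,dx = 0, \tag{2.19}$$

and a principal result of this paper is that

$$y_i = \frac{\displaystyle\int_{-\infty}^{\infty} \int_{x_{i-1}}^{x_i} x\,p^T[x, x(t_j)]\,dx(t_j)\,dx}{\displaystyle\int_{-\infty}^{\infty} \int_{x_{i-1}}^{x_i} p^T[x, x(t_j)]\,dx(t_j)\,dx}, \qquad 1 \le i \le N-1. \tag{2.20}$$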

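Note that Equation (2.17) may be written in the form

$$D = \sum_{i=1}^{N} \left[ \int_{-\infty}^{\infty} \int_{x_{i-1}}^{x_i} x^2\,p^T[x, x(t_j)]\,dx(t_j)\,dx - y_i^2 \int_{-\infty}^{\infty} \int_{x_{i-1}}^{x_i} p^T[x, x(t_j)]\,dx(t_j)\,dx \right]. \tag{2.21}$$

Note that there are two terms in each summation that involve $x_i$, $i$ fixed. Thus $D_i$ might be written as

$$D_i = \int_{-\infty}^{\infty} \int_{x_{i-1}}^{x_i} x^2 p^T\,dx(t_j)\,dx + \int_{-\infty}^{\infty} \int_{x_i}^{x_{i+1}} x^2 p^T\,dx(t_j)\,dx - y_i^2 \int_{-\infty}^{\infty} \int_{x_{i-1}}^{x_i} p^T\,dx(t_j)\,dx - y_{i+1}^2 \int_{-\infty}^{\infty} \int_{x_i}^{x_{i+1}} p^T\,dx(t_j)\,dx, \tag{2.22}$$

with $p^T = p^T[x, x(t_j)]$. Referring to Equation (2.20), the requirement that the partial derivative of $D_i$ with respect to $x_i$ vanish yields

$$2(y_i - y_{i+1}) \int_{-\infty}^{\infty} x\,p^T[x, x_i]\,dx = (y_i^2 - y_{i+1}^2) \int_{-\infty}^{\infty} p^T[x, x_i]\,dx \tag{2.23}$$

or

$$\frac{y_i + y_{i+1}}{2} = \frac{\displaystyle\int_{-\infty}^{\infty} x\,p^T[x, x_i]\,dx}{\displaystyle\int_{-\infty}^{\infty} p^T[x, x_i]\,dx}, \tag{2.24}$$

the second important result of this paper.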

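Another important result is the delay "d" (see Figure 2.2), defined by an extension of Equation (2.17) as

$$D = \sum_{i=1}^{N} \left[ \frac{1}{T} \int_d^{d+T} \int_{-\infty}^{\infty} \int_{x_{i-1}}^{x_i} x^2\,p[x, x(t_j), \tau]\,dx(t_j)\,dx\,d\tau - y_i^2\,\frac{1}{T} \int_d^{d+T} \int_{-\infty}^{\infty} \int_{x_{i-1}}^{x_i} p[x, x(t_j), \tau]\,dx(t_j)\,dx\,d\tau \right]. \tag{2.25}$$

So, a final form goes as

$$\frac{\partial D}{\partial d} = \frac{1}{T} \sum_{i=1}^{N} \int_{-\infty}^{\infty} \int_{x_{i-1}}^{x_i} (x^2 - y_i^2) \left\{ p[x, x(t_j), d+T] - p[x, x(t_j), d] \right\} dx(t_j)\,dx. \tag{2.26}$$

To obtain the minimum value of $D$ require that this partial derivative take value zero and this yields

$$p[x, x(t_j), d] = p[x, x(t_j), d+T] \tag{2.27}$$

or

$$|d + T| = |d| \tag{2.28}$$

and this implies

$$d = -\frac{T}{2}. \tag{2.29}$$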

For application let us consider the input signal having a normally distributed amplitude.

In terns of the set of functions defined

by Equations (2.9), (2.10) and (2.11) above Slepian (1972) has written

down the bilinear expansion

x,y..

a2+ 02-

1

P

(4.0, ) -

20T)G0 ,

exp[

J

2

2(1 - u

2u/17-11-7

)

(2.30)

(17(n)(

°DI

cp(n)(s) un(,7_).

)

n1

n=o

-op<

where

is the correlation function.

OD

X.

u(T)

x.

1

J

x

PT[x,x(t.),

1-1

J

] dx(t.)

.

J

Xf

'

1

n=o

1111

< 1,

Therefore

(n)

1

(n)cp(n)[x(ti)]

n=o

undx(t.)

J

n

xi-1

oo

= /

< oo,

1+T(n)

..xi

p

1

T.

2

(n)

(x)Y

a

d

n1

n

[x(t.)]

dx(t)j

u

.

dTe


or, finally,

op

p

fxiT[x,x(t.)3 dx(t

)(n)(x)[cp(n)

n=o

(xi)-p

ni

(n)

(xi..1)]

d+T

(2.32)

xi-1

un(T)d

.

T

From Equation (2.20)

d+T

1

(0)(0)

Ecp(x.) y(x.1-1 )]T I

001

fPTLx,x(ti)3

since

u(T) d

(2.33)

dx

co

x

n = 1,

-1,

(n)

(x) dx =

( 2

.

310

0, otherwise.

-CO

By a similar argument

xi

OD

pTLX,X(ti)3C1X(tj) =

J

xi-1

>7

riy(n)(X)p(n)EX(tin

!"1

dx(ti)

x

n=o

1-1

d+T

I

1

(2.35)

n

u (T) d

7

d

d+T

a)

= \/

n=o

OD

1

-7 cp

(n)

(x)[cp

(n-1)

(xi)

- cp

fu

(xi..1)]

n:

n

(T)d

d

Xi

CD

11

_I xi-1

(n-1)

r

tp(n)(x)[9(n -1)(x.

)

P bc,x(tj)idx(ti)dx =

n= 0

(2.36)

r1.1.-Oo

d+T

(n-1)

-

(2Ci_i)3

un(r)dxd

d'

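Since

$$\int_{-\infty}^{\infty}\varphi^{(n)}(x)\,dx = \begin{cases} 1, & n = 0, \\ 0, & \text{otherwise}, \end{cases} \tag{2.37}$$

Equation (2.36) reduces to

$$\int_{-\infty}^{\infty}\int_{x_{i-1}}^{x_i} p_T[x, x(t_j)]\,dx(t_j)\,dx = \varphi^{(-1)}(x_i) - \varphi^{(-1)}(x_{i-1}) \tag{2.38}$$

and, finally,

$$y_i = \frac{\varphi^{(0)}(x_{i-1}) - \varphi^{(0)}(x_i)}{\varphi^{(-1)}(x_i) - \varphi^{(-1)}(x_{i-1})}\;\frac{1}{T}\int_{d}^{d+T} u(\tau)\,d\tau. \tag{2.39}$$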

Compare Equation (2.39) with Equation (2.8).

Note that Equation (2.39) involves quantization in amplitude and time while Equation (2.8) implies quantization in amplitude only with no delay present.

Equation (2.24) implies that for the usual expression of the

density function,

0000

olo

Lx

gx,x(tI),7-] dx

x(n)(x)(n)[x(t.)] un (T) dx

=061

1

n=o

ni

(2.40)

d+T

(1)

Ex(t.)]1TI

=- cp

Also

u(r) dq-.

OD

(0)

J.

P[xex(t.)]

dX =

T

Ex(t )]

(2.41)

-co

and

d+T

(1)

Yi+ Yi+1

cp.

(xi)

0),

1

1

T

11141

u(T) d

d

=

xJ

1

u(T) dT

,

(2.42)


or, finally,

yi

Yi+1

Yi+1

dAT

u(fT)d

Td'

2u (T)

(2.43)

III. EXTENSION OF MEHLER AND CARLITZ FORMULAS

Much recent progress has been made with the Mehler formula in terms of the bilinear generating functions for the Hermite polynomial set, usually written as

$$\exp[2xt - t^2] = \sum_{n=0}^{\infty}\frac{H_n(x)\,t^n}{n!}, \quad |t| < 1. \tag{3.1}$$

Slepian (1972), in a very recent paper, rewrites this in a form of considerable interest to probabilists and statisticians,

$$p_{x,y}(\alpha,\beta) = \frac{1}{2\pi\sqrt{1-u^2}}\exp\left[-\frac{\alpha^2+\beta^2-2u\alpha\beta}{2(1-u^2)}\right] = \sum_{n=0}^{\infty}\varphi^{(n)}(\alpha)\,\varphi^{(n)}(\beta)\,\frac{u^n}{n!}, \quad |u| < 1, \tag{3.2}$$

where the set of derivatives of the well-known normal density function have been defined in Equations (2.9), (2.10) and (2.11). They have been well-tabulated by the Harvard Computation Laboratory (1952).
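The expansion in Equation (3.2) lends itself to a direct numerical check. The following is a minimal sketch, assuming that $\varphi^{(n)}$ is the $n$th derivative of the standard normal density, so that $\varphi^{(n)}(x) = (-1)^n He_n(x)\varphi^{(0)}(x)$ with $He_n$ the probabilists' Hermite polynomial. The function names `phi_n`, `bivariate_density` and `mehler_series` are illustrative only and do not appear in the thesis.

```python
import math
from numpy.polynomial.hermite_e import hermeval


def phi_n(n, x):
    """n-th derivative of the standard normal density: (-1)^n He_n(x) phi(x)."""
    coeffs = [0.0] * n + [1.0]  # coefficient vector that selects He_n
    density = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    return (-1) ** n * float(hermeval(x, coeffs)) * density


def bivariate_density(alpha, beta, u):
    """Closed form on the left of Equation (3.2)."""
    d = 1.0 - u * u
    quad = alpha * alpha + beta * beta - 2.0 * u * alpha * beta
    return math.exp(-quad / (2.0 * d)) / (2.0 * math.pi * math.sqrt(d))


def mehler_series(alpha, beta, u, terms=60):
    """Truncated series on the right of Equation (3.2)."""
    return sum(phi_n(n, alpha) * phi_n(n, beta) * u ** n / math.factorial(n)
               for n in range(terms))
```

For moderate $|u|$ the truncated series agrees with the closed form to many digits; the number of terms needed grows as $|u|$ approaches unity.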

Carlitz (1970) and Srivastava and Singhal (1972) extended the theory to the trivariate case. The formula due to the latter authors goes as

Hyi+p(X)Hp+(Y)H(z)

m,

p

w

pl

=0

= D-aexp[

n

(3.3)

2

1

2

EI(Ex -

v., 2 2

42_,11 x - 4EwXY + 8Euvx3r)]

where

$$D = 1 - 4u^2 - 4v^2 - 4w^2 + 16uvw \tag{3.4}$$

and $\sum x^2$, $\sum u^2x^2$, $\sum wxy$ and $\sum uvxy$ are symmetric functions in the indicated variables.

The principal purpose of this chapter is to point out that these recent and interesting extensions of the Mehler formula for the Hermite polynomials lack applicability to interesting and real world problems, particularly in the sense of the well-known state of the art paper by Tukey (196 ). So, consider the transform mate approach as emphasized by Cramer (1946). The moment generating or characteristic function $M_x(s)$ and the probability density function $p_x(\alpha)$ are Fourier transform mates in vector terms. That is, if $n$ Gaussian variates (0,1) are under discussion

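$$p_x(\alpha) = \frac{1}{(2\pi)^n}\int_{-\infty}^{\infty}\cdots\int_{-\infty}^{\infty} e^{\,i s^t\alpha - \frac{1}{2}s^t\Lambda s}\,ds_1\cdots ds_n, \tag{3.5}$$

where

$$M_x(s) = e^{-\frac{1}{2}s^t\Lambda s} \tag{3.6}$$

and the variance-covariance or moment matrix is

$$\Lambda = [E(x_r x_s)] = [u_{rs}], \quad r,s = 1, 2, \ldots, n, \tag{3.7}$$

and the determinant of the moment matrix is $D$.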

These transform mates

start out simply but there is an explosive build-up.

Thus

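$$n = 1, \quad M_x(s) = e^{-\frac{1}{2}s^2}, \quad p_x(\alpha) = \frac{1}{2\pi}\int_{-\infty}^{\infty} e^{\,is\alpha - \frac{1}{2}s^2}\,ds = \frac{e^{-\alpha^2/2}}{\sqrt{2\pi}}, \quad -\infty < \alpha < \infty, \tag{3.8}$$

provided a simple shift of the line of integration is carried out.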

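$$n = 2, \quad \Lambda = \begin{pmatrix} 1 & u \\ u & 1 \end{pmatrix}, \quad D = 1 - u^2, \quad p_x(\alpha,\beta) = \frac{1}{2\pi\sqrt{1-u^2}}\exp\left[-\frac{\alpha^2+\beta^2-2u\alpha\beta}{2(1-u^2)}\right], \tag{3.9}$$

the same form as in Equation (3.2). The case for $n = 3$ is already quite complex; because of the symmetry the moment matrix and determinant are

$$\Lambda = \begin{pmatrix} 1 & u_{12} & u_{13} \\ u_{12} & 1 & u_{23} \\ u_{13} & u_{23} & 1 \end{pmatrix}$$

and

$$D = 1 - u_{12}^2 - u_{13}^2 - u_{23}^2 + 2u_{12}u_{13}u_{23} \tag{3.10}$$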

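and let the third order density be written in the form

$$p_x(\alpha,\beta,\gamma) = \frac{1}{(2\pi)^{3/2}D^{1/2}}\exp\left\{-\frac{1}{2D}\begin{pmatrix}\alpha & \beta & \gamma\end{pmatrix}\begin{pmatrix} 1-u_{23}^2 & u_{23}u_{13}-u_{12} & u_{12}u_{23}-u_{13} \\ u_{23}u_{13}-u_{12} & 1-u_{13}^2 & u_{12}u_{13}-u_{23} \\ u_{12}u_{23}-u_{13} & u_{12}u_{13}-u_{23} & 1-u_{12}^2 \end{pmatrix}\begin{pmatrix}\alpha \\ \beta \\ \gamma\end{pmatrix}\right\}. \tag{3.11}$$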

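The case $n = 4$ is too complicated to write completely in this form but at least

$$\Lambda = \begin{pmatrix} 1 & u_{12} & u_{13} & u_{14} \\ u_{12} & 1 & u_{23} & u_{24} \\ u_{13} & u_{23} & 1 & u_{34} \\ u_{14} & u_{24} & u_{34} & 1 \end{pmatrix} \tag{3.12}$$

and

$$\begin{aligned} D = {} & 1 - u_{12}^2 - u_{13}^2 - u_{14}^2 - u_{23}^2 - u_{24}^2 - u_{34}^2 + u_{12}^2u_{34}^2 + u_{13}^2u_{24}^2 + u_{14}^2u_{23}^2 \\ & + 2[u_{12}u_{13}u_{23} + u_{12}u_{14}u_{24} + u_{13}u_{14}u_{34} + u_{23}u_{24}u_{34}] \\ & - 2[u_{12}u_{13}u_{24}u_{34} + u_{12}u_{14}u_{23}u_{34} + u_{13}u_{14}u_{23}u_{24}]. \end{aligned} \tag{3.13}$$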

Note that if all $u$'s with 4 in the subscript were set equal to zero this would reduce to the $D$ for the case $n = 3$. A piecemeal construction of the density function has been made but is relegated to the appendix.
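The expanded determinant of Equation (3.13) is easily checked against a numerical determinant of the moment matrix of Equation (3.12). The sketch below is illustrative only; the function names are hypothetical and the sample correlation values in the usage are arbitrary.

```python
import numpy as np


def det4_expanded(u12, u13, u14, u23, u24, u34):
    """Determinant of the 4 x 4 moment matrix, written out as in Equation (3.13)."""
    squares = u12**2 + u13**2 + u14**2 + u23**2 + u24**2 + u34**2
    pair_products = u12**2 * u34**2 + u13**2 * u24**2 + u14**2 * u23**2
    triangles = (u12 * u13 * u23 + u12 * u14 * u24
                 + u13 * u14 * u34 + u23 * u24 * u34)
    four_cycles = (u12 * u13 * u24 * u34 + u12 * u14 * u23 * u34
                   + u13 * u14 * u23 * u24)
    return 1.0 - squares + pair_products + 2.0 * triangles - 2.0 * four_cycles


def det4_numeric(u12, u13, u14, u23, u24, u34):
    """Same determinant computed directly from the matrix of Equation (3.12)."""
    matrix = np.array([[1.0, u12, u13, u14],
                       [u12, 1.0, u23, u24],
                       [u13, u23, 1.0, u34],
                       [u14, u24, u34, 1.0]])
    return float(np.linalg.det(matrix))
```

Setting `u14 = u24 = u34 = 0` in `det4_expanded` leaves only the terms of Equation (3.10), in line with the remark above.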

The alternative approach is to arrange that each probability density of increasing order be representable by a sum of products of nth order derivatives of the Gaussian density, each factor being a function of one variate only. Since the moment generating function is constructed directly from the variance-covariance matrix, Equation (3.5) is pertinent but the decomposition feature just mentioned requires that if

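$$\varphi^{(0)}(x) = \frac{e^{-x^2/2}}{\sqrt{2\pi}} = \frac{1}{2\pi}\int_{-\infty}^{\infty} e^{\,isx - \frac{1}{2}s^2}\,ds \tag{3.14}$$

then

$$\varphi^{(n)}(x) = \frac{d^n}{dx^n}\varphi^{(0)}(x) = \frac{1}{2\pi}\int_{-\infty}^{\infty}(is)^n e^{\,isx - \frac{1}{2}s^2}\,ds. \tag{3.15}$$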

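The Mehler type expansion of the bivariate density function in Equation (3.2) is determined from

$$\begin{aligned} p_{x,y}(\alpha,\beta) &= \frac{1}{(2\pi)^2}\int_{-\infty}^{\infty}\int_{-\infty}^{\infty} e^{\,i(s\alpha + t\beta) - \frac{1}{2}(s^2 + t^2 + 2ust)}\,ds\,dt \\ &= \frac{1}{(2\pi)^2}\int_{-\infty}^{\infty}\int_{-\infty}^{\infty} e^{\,i(s\alpha + t\beta) - \frac{1}{2}(s^2 + t^2)}\sum_{n=0}^{\infty}\frac{(-ust)^n}{n!}\,ds\,dt \\ &= \sum_{n=0}^{\infty}\varphi^{(n)}(\alpha)\,\varphi^{(n)}(\beta)\,\frac{u^n}{n!}, \quad |u| < 1. \end{aligned} \tag{3.16}$$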

As established in the next chapter this form is useful in resolving

some interesting questions, particularly in the matter of quantization

over a finite range.


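The Mehler expansion for order 3 goes as

$$\begin{aligned} p_{x,y,z}(\alpha,\beta,\gamma) &= \frac{1}{(2\pi)^3}\int\!\!\int\!\!\int_{-\infty}^{\infty} e^{\,i(r\alpha + s\beta + t\gamma) - \frac{1}{2}(r^2 + s^2 + t^2) - (u_{12}rs + u_{23}st + u_{13}rt)}\,dr\,ds\,dt \\ &= \frac{1}{(2\pi)^3}\int\!\!\int\!\!\int_{-\infty}^{\infty} e^{\,i(r\alpha + s\beta + t\gamma) - \frac{1}{2}(r^2 + s^2 + t^2)}\sum_{n=0}^{\infty}\frac{(-1)^n}{n!}(u_{12}rs + u_{23}st + u_{13}rt)^n\,dr\,ds\,dt. \end{aligned} \tag{3.17}$$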

One may further simplify the notation if the order of factors in each term is preserved such that

$$\varphi^{i}\,\varphi^{j}\,\varphi^{k} = \varphi^{(i)}(\alpha)\,\varphi^{(j)}(\beta)\,\varphi^{(k)}(\gamma). \tag{3.18}$$

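A few terms written out go as

$$\begin{aligned} p_{x,y,z}(\alpha,\beta,\gamma) = {} & \varphi^{0}\varphi^{0}\varphi^{0} + u_{12}\varphi^{1}\varphi^{1}\varphi^{0} + u_{23}\varphi^{0}\varphi^{1}\varphi^{1} + u_{13}\varphi^{1}\varphi^{0}\varphi^{1} \\ & + \frac{1}{2!}\left[u_{12}^2\varphi^{2}\varphi^{2}\varphi^{0} + u_{23}^2\varphi^{0}\varphi^{2}\varphi^{2} + u_{13}^2\varphi^{2}\varphi^{0}\varphi^{2} + 2u_{12}u_{13}\varphi^{2}\varphi^{1}\varphi^{1} + 2u_{12}u_{23}\varphi^{1}\varphi^{2}\varphi^{1} + 2u_{23}u_{13}\varphi^{1}\varphi^{1}\varphi^{2}\right] \\ & + \frac{1}{3!}\left[u_{12}^3\varphi^{3}\varphi^{3}\varphi^{0} + u_{23}^3\varphi^{0}\varphi^{3}\varphi^{3} + u_{13}^3\varphi^{3}\varphi^{0}\varphi^{3} + 3u_{12}^2(u_{23}\varphi^{2}\varphi^{3}\varphi^{1} + u_{13}\varphi^{3}\varphi^{2}\varphi^{1})\right. \\ & \qquad\left. + 3u_{23}^2(u_{13}\varphi^{1}\varphi^{2}\varphi^{3} + u_{12}\varphi^{1}\varphi^{3}\varphi^{2}) + 3u_{13}^2(u_{12}\varphi^{3}\varphi^{1}\varphi^{2} + u_{23}\varphi^{2}\varphi^{1}\varphi^{3}) + 6u_{12}u_{23}u_{13}\varphi^{2}\varphi^{2}\varphi^{2}\right] + \cdots \end{aligned} \tag{3.19}$$

Note that if $u_{23} = 0$ and $u_{13} = 0$ and $u_{12} = u$ (equivalent to removing $z$ from consideration) then Equation (3.19) reduces to Equation (3.16).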

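A more compact form for the third order density is

$$p_{x,y,z}(\alpha,\beta,\gamma) = \sum_{n=0}^{\infty}\frac{1}{n!}\sum_{i,j,k=0}\binom{n}{i,j,k}u_{12}^{i}u_{23}^{j}u_{13}^{k}\,\varphi^{(k+i)}(\alpha)\,\varphi^{(i+j)}(\beta)\,\varphi^{(j+k)}(\gamma), \tag{3.20}$$

where the multinomial is defined as

$$\binom{n}{i,j,k} = \frac{n!}{i!\,j!\,k!}, \quad i + j + k = n. \tag{3.21}$$

A remark similar to the one above is true for the case of removing $z$ from consideration.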
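Equation (3.20) can be compared numerically with the closed form of the third order Gaussian density. The sketch below is illustrative only: it assumes $\varphi^{(n)}(x) = (-1)^n He_n(x)\varphi^{(0)}(x)$, uses small correlation values for which the truncated series settles quickly, and computes the closed form from the moment matrix directly. All function names are hypothetical.

```python
import math
import numpy as np
from numpy.polynomial.hermite_e import hermeval


def phi_table(x, max_order):
    """Values phi^(0)(x), ..., phi^(max_order)(x) of the normal density derivatives."""
    density = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    return [(-1) ** n * float(hermeval(x, [0.0] * n + [1.0])) * density
            for n in range(max_order + 1)]


def trivariate_series(alpha, beta, gamma, u12, u23, u13, terms=40):
    """Truncated form of Equation (3.20); (1/n!) * multinomial = 1/(i! j! k!)."""
    pa, pb, pg = (phi_table(v, terms) for v in (alpha, beta, gamma))
    total = 0.0
    for n in range(terms):
        for i in range(n + 1):
            for j in range(n - i + 1):
                k = n - i - j
                weight = (u12 ** i * u23 ** j * u13 ** k
                          / (math.factorial(i) * math.factorial(j) * math.factorial(k)))
                total += weight * pa[k + i] * pb[i + j] * pg[j + k]
    return total


def trivariate_density(alpha, beta, gamma, u12, u23, u13):
    """Closed form of the third order density from the moment matrix."""
    lam = np.array([[1.0, u12, u13], [u12, 1.0, u23], [u13, u23, 1.0]])
    v = np.array([alpha, beta, gamma])
    quad = float(v @ np.linalg.solve(lam, v))
    d = float(np.linalg.det(lam))
    return math.exp(-0.5 * quad) / ((2.0 * math.pi) ** 1.5 * math.sqrt(d))
```

With $u_{23} = u_{13} = 0$ the triple sum collapses to the bivariate series of Equation (3.16) multiplied by $\varphi^{(0)}(\gamma)$.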

To paraphrase David Hilbert, the important step seems to be from order 2 to order 3, hence it is tempting to write down the 4th order case after making a remark or two. For third order case there are $\binom{3}{2}$ correlation functions, for the fourth order case there are $\binom{4}{2}$ correlation functions. Hence the conjecture goes as

$$p_{x,y,z,w}(\alpha,\beta,\gamma,\delta) = \sum_{p=0}^{\infty}\frac{1}{p!}\sum_{i,j,k,l,m,n=0}\binom{p}{i,j,k,l,m,n}u_{12}^{i}u_{13}^{j}u_{14}^{k}u_{23}^{l}u_{24}^{m}u_{34}^{n}\,\varphi^{(i+j+k)}(\alpha)\,\varphi^{(i+l+m)}(\beta)\,\varphi^{(j+l+n)}(\gamma)\,\varphi^{(k+m+n)}(\delta), \tag{3.22}$$

where

$$\binom{p}{i,j,k,l,m,n} = \frac{p!}{i!\,j!\,k!\,l!\,m!\,n!}, \quad i + j + k + l + m + n = p. \tag{3.23}$$

IV. AUTOCORRELATION

Consider the mean square error criterion as defined by Max (1960). Distortion is expressed by

$$\sigma^2 = \sum_{k=1}^{N}\int_{x_{k-1}}^{x_k}(x - y_{k-1})^2\,p(x)\,dx, \tag{4.1}$$

where $\epsilon^2$ has been replaced by $\sigma^2$ for notational convenience.

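Let

$$P_{k-1} = \int_{x_{k-1}}^{x_k} p(x)\,dx, \tag{4.2}$$

then

$$y_{k-1} = \frac{1}{P_{k-1}}\int_{x_{k-1}}^{x_k} x\,p(x)\,dx \tag{4.3}$$

and

$$x_k = \frac{y_k + y_{k-1}}{2} \tag{4.4}$$

(see Equation (2.24)). Then Equation (4.1) may be written as

$$\sigma^2 = \sigma_x^2 - 2\sum_{k=1}^{N} y_{k-1}\int_{x_{k-1}}^{x_k} x\,p(x)\,dx + \sum_{k=1}^{N} y_{k-1}^2 P_{k-1}, \tag{4.5}$$

where

$$\sigma_x^2 = \int_{-\infty}^{\infty} x^2\,p(x)\,dx \tag{4.6}$$

is the variance of the original signal.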

From the second of Max's (1960) conditions Equation (4.5) can be written

$$\sigma^2 = \sigma_x^2 - \sum_{k=1}^{N} y_{k-1}^2 P_{k-1}. \tag{4.7}$$
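Equations (4.3), (4.4) and (4.7) can be illustrated for a Gaussian (0,1) input by iterating the two conditions of Max (1960) to a fixed point. The sketch below is a minimal illustration and not the procedure used in the thesis; the function names, the starting levels and the iteration count are assumptions. The list `levels[k]` plays the role of $y_k$ on the cell `(edges[k], edges[k+1])`.

```python
import math


def pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def x_pdf(x):
    """x * pdf(x), with the limiting value 0 at +/- infinity."""
    return 0.0 if math.isinf(x) else x * pdf(x)


def lloyd_max(n_levels, iterations=500):
    """Alternate the midpoint rule (4.4) and the centroid rule (4.3)."""
    levels = [-2.0 + 4.0 * (k + 0.5) / n_levels for k in range(n_levels)]
    for _ in range(iterations):
        edges = ([-math.inf]
                 + [0.5 * (levels[k] + levels[k + 1]) for k in range(n_levels - 1)]
                 + [math.inf])
        levels = [(pdf(edges[k]) - pdf(edges[k + 1]))
                  / (cdf(edges[k + 1]) - cdf(edges[k]))
                  for k in range(n_levels)]
    return edges, levels


def distortion_direct(edges, levels):
    """Equation (4.1), integrated cell by cell in closed form."""
    total = 0.0
    for k, y in enumerate(levels):
        lo, hi = edges[k], edges[k + 1]
        prob = cdf(hi) - cdf(lo)
        first = pdf(lo) - pdf(hi)               # integral of x p(x)
        second = prob + x_pdf(lo) - x_pdf(hi)   # integral of x^2 p(x)
        total += second - 2.0 * y * first + y * y * prob
    return total


def distortion_identity(edges, levels):
    """Equation (4.7) with unit input variance."""
    return 1.0 - sum(y * y * (cdf(edges[k + 1]) - cdf(edges[k]))
                     for k, y in enumerate(levels))
```

For four levels the iteration settles near the values tabulated by Max (1960), and the two expressions for the distortion coincide because the last step of each pass enforces the centroid condition.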

Note that $P_{k-1}$ can be written in an expanded form by assuming a linear relation between the points $x_{k-1}$ and $x_k$ so Equation (4.7) becomes

2

2

2

Cr -CTx

)/6/

=

)1

(4.8)

(x-Yk-1-10 ''k-l'-

Yk-1[13(Yk-1)

k=1

If the derivative of the probability function at $y_{k-1}$ may be considered to be negligible

y2

2

2

Cr

=

_Cr

k=1

where, as observed above,

(4.9)

Yk 1P(Yk-1)'

cr

2

is the variance of the quantized signal.

Wood (1969) expressed this result as

2

2

a-

1

_

A2k

;5-1

k=i

12

P(xk-1)'

(4.10)-1

where

= xk

k-1

-

K-1

(4.11)

Note that Equations (4.9) and (4.10) express the difference in the variances in terms of nonuniform quantization, different from the uniform case in which we can assume

$$\Delta_{k-1} = w, \text{ a constant}, \quad k = 1, 2, \ldots, N, \tag{4.12}$$

and the result corresponding to Equation (4.10) is

$$\sigma_x^2 - \sigma^2 = \frac{w^2}{12}, \tag{4.13}$$

which is the "Sheppard's Correction", repeatedly proved by many authors using different approaches.

One such approach is that of Banta (1965) who considers a general theory of the autocorrelation of quantizer output of a certain signal. He shows how the autocorrelation error can be isolated and reduced to

$$R_E(0) = \frac{w^2}{12}, \tag{4.14}$$

where $R_E(0)$ in turn is derived from the definition of the autocorrelation function

$$R_f(\tau) = E[f(t)f(t+\tau)], \tag{4.15}$$

where $f(t)$ is random. Naturally

$$R_f(0) = E[f^2(t)], \tag{4.16}$$

the variance of $f(t)$ in the usual statistical terms. A flow diagram of Banta's (1965) analysis is depicted in Figure (4.1).

[Figure 4.1: flow diagram of the quantizer, with labels "Input", "Quantizer", "Output", $y$ and $x - f(x)$.]
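The value $w^2/12$ in Equations (4.13) and (4.14) can be checked for a Gaussian (0,1) input by summing the mean square error of a uniform quantizer cell by cell. The sketch below assumes quantizer levels at $jw$ with cells $((j-\frac12)w, (j+\frac12)w)$; the function name and the truncation `span` are illustrative choices only.

```python
import math


def pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def uniform_quantizer_mse(w, span=12.0):
    """E[(x - Q(x))^2] for Gaussian (0,1) input and levels j*w, |j*w| <= span."""
    j_max = int(math.ceil(span / w))
    total = 0.0
    for j in range(-j_max, j_max + 1):
        lo, hi, level = (j - 0.5) * w, (j + 0.5) * w, j * w
        prob = cdf(hi) - cdf(lo)
        first = pdf(lo) - pdf(hi)                     # integral of x p(x)
        second = prob + lo * pdf(lo) - hi * pdf(hi)   # integral of x^2 p(x)
        total += second - 2.0 * level * first + level * level * prob
    return total
```

For step sizes small compared with the unit standard deviation of the input the result agrees with $w^2/12$ to many digits; the agreement degrades as $w$ grows.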

Herewith the Fourier Series representation of the input quantized signal, uniform quantization,

oo

f(x) =

(-1)kw sin 2nkx

,

(4.17)

k=1

The input signal is contaminated by noise,

$$x(t) = m(t) + n(t), \tag{4.18}$$

and signal and noise are assumed to be statistically independent. The input-output quantizer and the noise characteristics are plotted in Figures (4.2) and (4.3) respectively.

[Figures 4.2 and 4.3: the input-output characteristic of the quantizer (output voltage against input voltage, step width $w$) and the noise characteristic.]

Considering a deterministic signal and Gaussian noise input Banta (1965) derived a generalized expression for the autocorrelation function of the quantized output

$$R_o(\tau) = R_{mm}(\tau) + R_{nn}(\tau) + R_E(\tau); \tag{4.19}$$

the terms on the right represent the separate autocorrelation functions of the signal, the noise and the quantizer effect respectively.

Certain assumptions on the magnitude of the signal voltage with respect to RMS noise voltage were made to derive Equation (4.19). He established Equation (4.14) again, and $R_E(0) = w^2/12$ can be described as the "quantizing power". Some people who have worked in this field are Rice (1945), Robin (1952), Bennett (1956), Widrow (1956), Baum (1957), Trofimov (1958), Kosygin (1961), Velechkin (1962) and Hurd (1967), to name a few.

In order to clarify what we intend to discuss in this chapter we make some remarks on what each author has done. Bennett (1956) was the first to derive the expression for the autocorrelation function for the quantizer power and this was also obtained by Kosygin (1961), using a method of characteristic functions. Widrow (1956) introduced the characteristic function theory in analyzing the quantization process, later developed by Kosygin (1961). Hurd (1967) worked with the autocorrelation of the output where the input to the quantizer is the sum of a sine wave and zero mean, stationary Gaussian noise. Price (1958) laid down his theory for the autocorrelation function of the output for strictly stationary Gaussian inputs and derived a relationship expressing the partial derivatives of the output autocorrelation in terms of the input correlation coefficients. Several authors later improved on his basic theorem and he himself checked out earlier results with his method, even though it involved differential equations with almost impossible boundary conditions.

Extension of Equation (4.15) to $n$ random variables,

$$R(\tau) = E\prod_{i=1}^{n} f_i(x_i), \tag{4.20}$$

$f_i(x)$ the $n$ zero-memory nonlinear devices specifying the input-output relationships, allowed Price (1958) to consider the clipper, the hard limiter and the smooth limiter. A unified approach to problems of this type is to resort to the bilinear expansion of the joint Gaussian density function in terms of the derivatives of the error function, as given by Equation (3.2). The immediate question is about the $u(\tau)$ function.

Consider a linear filter $K(f)$ to be opened over the frequency $w_s$ so that the normalized autocorrelation $u(\tau)$, associated with sampled values of signal and noise input, is sufficient to determine the joint density function. The process is described in Figures (4.4) and (4.5):

[Figures 4.4 and 4.5: the linear filter $K(f)$ with input (signal + noise) and output, and the input noise spectrum together with the magnitude of the signal spectrum.]

If $w_i$ is the half power bandwidth of the input noise power spectrum, $w_s$ is the half power bandwidth of the signal spectrum, and $A$ is defined by

A- 1 =

1Ps

(4.21)

,

1Po

the ratio of input noise power level to input signal power level, then

2 2

(t) -A )e

2

-Ws

+ b(A -1)e

u(T,45) -

'

2

(6- 1)(6+ A )

(4.22)

where $\delta = w_i/w_s > 1$. If there is no distributed signal power present then for $A = 1$ the $u(\tau,\delta)$ quickly reduces to

$$u(\tau) = e^{-w_i|\tau|}. \tag{4.23}$$

Let $x$ and $y$ be two normally distributed (0,1) variates; the joint density is that of Equation (3.2) with either of the two designated values of $u(\tau)$.

An important special case is to set $u(\tau) = 1$. That is,

$$\lim_{u\to 1} p_{x,y}(\alpha,\beta) = \delta(\alpha - \beta)\,\varphi^{(0)}(\alpha), \tag{4.24}$$

where $\varphi^{(0)}(\alpha)$ is defined by Equation (2.9). An interesting effect of the Dirac delta function is developed by Papoulis (1962).

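$$\lim_{c\to 0}\int_{-\infty}^{\infty}\frac{1}{c}\,\varphi^{(0)}\!\left(\frac{\alpha}{c}\right)\varphi^{(2n)}(\alpha)\,d\alpha = \int_{-\infty}^{\infty}\delta(\alpha)\,\varphi^{(2n)}(\alpha)\,d\alpha = \varphi^{(2n)}(0) = \frac{(-1)^n(2n)!}{2^{n+\frac{1}{2}}\,n!\,\sqrt{\pi}}. \tag{4.25}$$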

Consider a finite form of the input-output relationship of Banta (1965):

[Figure 4.6: input-output relationship of the finite quantizer.]

Quantization takes place into $2N-1$ different levels, equally spaced, if input voltage lies in the range $-a(1 - \frac{1}{2N}) < v_i(t) < a(1 - \frac{1}{2N})$; if input lies outside this range the output is taken to be either $ma$ or $-ma$, as the case may be. Let the interval be defined as

$$w = \frac{a}{N}; \tag{4.26}$$

then the input-output relationship may be defined in terms of the greatest integer notation as

$$f(\alpha) = \begin{cases} b, & (N - \tfrac{1}{2})w < \alpha, \\ mw\left[\dfrac{\alpha}{w} + \dfrac{1}{2}\right], & -(N - \tfrac{1}{2})w < \alpha < (N - \tfrac{1}{2})w, \\ -b, & \alpha < -(N - \tfrac{1}{2})w, \end{cases} \qquad b = ma = mwN. \tag{4.27}$$

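This staircase function of finite scope meets the conditions outlined by Price (1958), so autocorrelation of output has the form

$$R(u,a,w) = \sum_{n=0}^{\infty}\frac{u^n(\tau)}{n!}\int_{-\infty}^{\infty}\int_{-\infty}^{\infty} f_x(\alpha)\,f_y(\beta)\,\varphi^{(n)}(\alpha)\,\varphi^{(n)}(\beta)\,d\alpha\,d\beta. \tag{4.28}$$

Only the odd order terms of the resulting series are not zero, a point which is established by the identities

$$\varphi^{(n)}(\alpha) - \varphi^{(n)}(-\alpha) = \begin{cases} 2\varphi^{(n)}(\alpha), & n \text{ odd}, \\ 0, & n \text{ even}. \end{cases} \tag{4.29}$$

So

$$\begin{aligned} R(u,a,w) &= 4m^2w^2\sum_{n=0}^{\infty}\frac{u^{2n+1}}{(2n+1)!}\left\{\sum_{j=1}^{N-1} j\int_{(j-\frac{1}{2})w}^{(j+\frac{1}{2})w}\varphi^{(2n+1)}(\alpha)\,d\alpha + N\int_{(N-\frac{1}{2})w}^{\infty}\varphi^{(2n+1)}(\alpha)\,d\alpha\right\}^2 \\ &= 4m^2w^2\sum_{n=0}^{\infty}\frac{u^{2n+1}}{(2n+1)!}\left\{\sum_{j=1}^{N-1} j\left[\varphi^{(2n)}[(j+\tfrac{1}{2})w] - \varphi^{(2n)}[(j-\tfrac{1}{2})w]\right] - N\varphi^{(2n)}[(N-\tfrac{1}{2})w]\right\}^2 \\ &= 4m^2w^2\sum_{n=0}^{\infty}\frac{u^{2n+1}}{(2n+1)!}\left\{\sum_{j=1}^{N}\varphi^{(2n)}[(j-\tfrac{1}{2})w]\right\}^2, \quad |u| \le 1. \end{aligned} \tag{4.30}$$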
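The last form of Equation (4.30) can be compared with a direct evaluation of $E[f(x)f(y)]$ for the staircase of Equation (4.27). The sketch below is illustrative only. It assumes $\varphi^{(n)}(x) = (-1)^n He_n(x)\varphi^{(0)}(x)$, and the direct value is obtained by conditioning on $x$ (given $x = \alpha$, $y$ is normal with mean $u\alpha$ and variance $1 - u^2$) followed by Simpson's rule; this device, the function names and the step counts are assumptions, not the method of the thesis.

```python
import math
from numpy.polynomial.hermite_e import hermeval


def phi_n(n, x):
    """n-th derivative of the standard normal density."""
    density = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    return (-1) ** n * float(hermeval(x, [0.0] * n + [1.0])) * density


def cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def staircase_series(u, m, w, n_steps, terms=40):
    """Last line of Equation (4.30), truncated after `terms` terms."""
    total = 0.0
    for n in range(terms):
        inner = sum(phi_n(2 * n, (j - 0.5) * w) for j in range(1, n_steps + 1))
        total += u ** (2 * n + 1) / math.factorial(2 * n + 1) * inner * inner
    return 4.0 * m * m * w * w * total


def staircase_direct(u, m, w, n_steps, steps=2000, upper=10.0):
    """E[f(x) f(y)] by conditioning on x and integrating with Simpson's rule."""
    s = math.sqrt(1.0 - u * u)
    edges = [(j - 0.5) * w for j in range(1, n_steps + 1)]

    def conditional_mean(alpha):  # E[f(y) | x = alpha] / (m w)
        return sum((1.0 - cdf((e - u * alpha) / s)) - cdf((-e - u * alpha) / s)
                   for e in edges)

    def integrand(alpha):
        return phi_n(0, alpha) * conditional_mean(alpha)

    total = 0.0
    for e in edges:  # each jump of f at +e and -e contributes the same amount
        step = (upper - e) / steps
        acc = integrand(e) + integrand(upper)
        for i in range(1, steps):
            acc += (4.0 if i % 2 else 2.0) * integrand(e + i * step)
        total += acc * step / 3.0
    return 2.0 * (m * w) ** 2 * total
```

The two routes agree closely for moderate $|u|$; near $|u| = 1$ the series converges slowly and more terms are required.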

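The extreme value $u(\tau) = 1$ is of value as $R(1,a,w)$ is an elementary function. From Equation (4.24)

$$\begin{aligned} \lim_{u\to 1} R(u,a,w) &= \int_{-\infty}^{\infty} f_x^2(\alpha)\,\varphi^{(0)}(\alpha)\,d\alpha \\ &= 2m^2w^2\left\{\sum_{j=1}^{N-1} j^2\int_{(j-\frac{1}{2})w}^{(j+\frac{1}{2})w}\varphi^{(0)}(\alpha)\,d\alpha + N^2\int_{(N-\frac{1}{2})w}^{\infty}\varphi^{(0)}(\alpha)\,d\alpha\right\} \\ &= 2m^2w^2\left\{\sum_{j=1}^{N-1} j^2\left[\varphi^{(-1)}[(j+\tfrac{1}{2})w] - \varphi^{(-1)}[(j-\tfrac{1}{2})w]\right] + N^2\left[\tfrac{1}{2} - \varphi^{(-1)}[(N-\tfrac{1}{2})w]\right]\right\}. \end{aligned} \tag{4.31}$$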

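A smooth quantizer in the sense of Baum (1957) would be specified by an input-output relationship

$$f_x(\alpha) = 2b\,\varphi^{(-1)}\!\left(\frac{w}{c}\left[\frac{\alpha}{w} + \frac{1}{2}\right]\right), \tag{4.32}$$

where the argument implies an integer multiple of $w$, $w$ the length of all the subintervals on the entire axis, $c$ an arbitrary parameter controlling the curvature.

[Figure 4.7: input-output characteristic of the smooth quantizer, saturating at $\pm b$, $b = ma$.]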

In this case Equation (4.28) takes the form

R(U,W,O)

2

=(Lb)

OD

\\I

//

u2n+1

.111.2

c

cP

{_

°D

u2n+1

n=8

2

(2n)

[(j+i)iil-Y

[(j-f)w]]

j=1

n=o (2n+1)!

=(4bf

)E,

(2n)

(2n+1)I

(4.33)

op

/7

OD

,wN2k

k"q

(-1) iw

2

,

k=o

j=1

(21-7a)2!cP(2"1+2k)(jw)

oo

22 \

= (4b) w

//

n=c:

u2n+1

Lc:

2

,100)cp(2n+1+2k)

(f)2koo

Ow)

("2;;51.

j=].cp(-l)(

There is a simple application of the Taylor series formula here.

From

Equation (4.21)

co

7-,

(-1) 413

lim R(u,w,c) = 2(2b)2 2_, Cy

j=1

u-41

(

2 (-1)

)] C y

[(j+i)w]-

(-1)

Y

[04)103]

(4.34)

= 8b2w

j=1

(22)2k

(g=)]

CY

(jw)

k=o

2k

OD

2

= 8b w

(2k)

(2k+1)!

(-1)

(7)

CY

.

(i2)]

2 (2k)

ce

(jW)

(2k-fa)!

These formulations appear to be of questionable value but simplifications are possible by means of the two Euler-MacLaurin summation formulas. That is, the summation with respect to the index $j$ may be evaluated in terms of elementary functions. Hildebrand (1956) and Gould and Squire (1963) have discussed the two slightly different forms of the Euler-MacLaurin sum formula, both involving the Bernoulli numbers defined by the identity

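$$\frac{e^{-z}}{1 - e^{-z}} = \frac{1}{z} - \frac{1}{2} + \sum_{k=1}^{\infty}\frac{B_{2k}}{(2k)!}\,z^{2k-1}, \quad |z| < 2\pi, \tag{4.35}$$

$$B_0 = 1, \quad B_2 = \tfrac{1}{6}, \quad B_4 = -\tfrac{1}{30}, \quad B_6 = \tfrac{1}{42}, \quad B_8 = -\tfrac{1}{30}, \quad B_{10} = \tfrac{5}{66}.$$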

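The second form may be written out as (see Equation (5.8.18), Hildebrand (1956))

$$\begin{aligned} \sum_{k=1}^{N} f[(k-\tfrac{1}{2})w] &= \frac{1}{w}\int_0^a f(\alpha)\,d\alpha - \sum_{k=1}^{\infty}\frac{B_{2k}}{(2k)!}\left(1 - \frac{1}{2^{2k-1}}\right)w^{2k-1}\left[f^{(2k-1)}(a) - f^{(2k-1)}(0)\right] \\ &= \frac{1}{w}\int_0^a f(\alpha)\,d\alpha - \frac{w}{24}\left[f'(a) - f'(0)\right] + \frac{7w^3}{5760}\left[f'''(a) - f'''(0)\right] - \frac{31w^5}{967{,}680}\left[f^{V}(a) - f^{V}(0)\right] + \cdots \end{aligned} \tag{4.36}$$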
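The three correction terms written out in Equation (4.36) can be tried on a simple test function. The sketch below takes $a = Nw$ and uses $f(x) = e^{-x}$ purely as an illustrative choice, since its integral and odd derivatives are elementary; the function names are hypothetical.

```python
import math


def midpoint_sum(f, w, n_cells):
    """Left side of Equation (4.36): sum of f at the cell midpoints (k - 1/2) w."""
    return sum(f((k - 0.5) * w) for k in range(1, n_cells + 1))


def euler_maclaurin_midpoint(integral, d1, d3, d5, a, w):
    """Right side of Equation (4.36) truncated after the fifth-derivative term.

    integral   -- value of the integral of f over (0, a)
    d1, d3, d5 -- callables returning the first, third and fifth derivatives of f
    """
    return (integral / w
            - w / 24.0 * (d1(a) - d1(0.0))
            + 7.0 * w ** 3 / 5760.0 * (d3(a) - d3(0.0))
            - 31.0 * w ** 5 / 967680.0 * (d5(a) - d5(0.0)))


def demo(a=2.0, n_cells=8):
    """Both sides of Equation (4.36) for f(x) = exp(-x) on (0, a)."""
    w = a / n_cells
    f = lambda x: math.exp(-x)
    odd_derivative = lambda x: -math.exp(-x)  # every odd derivative of exp(-x)
    lhs = midpoint_sum(f, w, n_cells)
    rhs = euler_maclaurin_midpoint(1.0 - math.exp(-a), odd_derivative,
                                   odd_derivative, odd_derivative, a, w)
    return lhs, rhs
```

With eight cells on $(0, 2)$ the two sides differ only by the neglected seventh-derivative term, which is of order $w^7$.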

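This may be applied at once to the finite sums occurring in the study of the quantizer of finite scope. Equation (4.30) becomes

$$\begin{aligned} R(u,a,w) &= 4m^2\sum_{n=0}^{\infty}\frac{u^{2n+1}}{(2n+1)!}\left[\int_0^a\varphi^{(2n)}(\alpha)\,d\alpha - \frac{w^2}{24}\varphi^{(2n+1)}(a) + \frac{7w^4}{5760}\varphi^{(2n+3)}(a) - \cdots\right]^2 \\ &= (2m)^2\sum_{n=0}^{\infty}\frac{u^{2n+1}}{(2n+1)!}\left\{\left[\varphi^{(2n-1)}(a)\right]^2 - \frac{w^2}{12}\varphi^{(2n-1)}(a)\,\varphi^{(2n+1)}(a) + w^4\left[\frac{\left[\varphi^{(2n+1)}(a)\right]^2}{576} + \frac{7\,\varphi^{(2n-1)}(a)\,\varphi^{(2n+3)}(a)}{2880}\right] - \cdots\right\}. \end{aligned} \tag{4.37}$$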

The first term of this series would represent the autocorrelation of output of a finite clipper, discussed by Price (1958) and tabulated by Laning and Battin (1956). Tabulation by this means would seem to be easier and more precise than by the numerical solution of the second order differential equation with singularities. Note that if $a$ is taken to be zero then $w$ is also, and the hard limiter result is

$$\begin{aligned} \lim_{a,w\to 0} R(u,a,w) &= \lim_{a\to 0}(2b)^2\sum_{n=0}^{\infty}\frac{u^{2n+1}}{(2n+1)!}\left[\frac{\varphi^{(2n-1)}(a)}{a}\right]^2 = (2b)^2\sum_{n=0}^{\infty}\frac{u^{2n+1}}{(2n+1)!}\left[\varphi^{(2n)}(0)\right]^2 \\ &= \frac{(2b)^2}{2\pi}\sum_{n=0}^{\infty}\frac{u^{2n+1}}{(2n+1)!}\,\frac{[(2n)!]^2}{2^{2n}(n!)^2} = \frac{2b^2}{\pi}\sin^{-1}[u(\tau)], \end{aligned} \tag{4.38}$$

as given by Price (1958).
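The series in Equation (4.38) may be summed numerically and compared with the inverse sine. In the sketch below the coefficient $[\varphi^{(2n)}(0)]^2/(2n+1)!$ is written as $\binom{2n}{n}/[(2n+1)\,4^n\,2\pi]$, which follows from $\varphi^{(2n)}(0) = (-1)^n(2n)!/(2^n n!\sqrt{2\pi})$; the function name is illustrative only.

```python
import math


def hard_limiter_series(u, b, terms=100):
    """Truncated series of Equation (4.38) for a hard limiter with output +/- b."""
    total = 0.0
    for n in range(terms):
        # [phi^(2n)(0)]^2 / (2n+1)!  =  C(2n, n) / ((2n+1) 4^n) / (2 pi)
        coeff = math.comb(2 * n, n) / ((2 * n + 1) * 4 ** n)
        total += coeff * u ** (2 * n + 1)
    return (2.0 * b) ** 2 / (2.0 * math.pi) * total
```

For example, `hard_limiter_series(0.6, 1.0)` may be compared with `2.0 / math.pi * math.asin(0.6)`.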

If $N$, the number of steps on each side of zero, is allowed to become arbitrarily large, then the parameter $a$ is allowed to do likewise, the input-output relationship becomes trivial and the autocorrelation of output would be simply $m^2u$. In similar fashion Equation (4.31) becomes

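$$R(1,a,w) = m^2\left\{a^2\left[1 - 2\varphi^{(-1)}(a)\right] + 2\left[\varphi^{(-1)}(a) - a\,\varphi^{(0)}(a)\right] + \frac{w^2}{6}\left[\varphi^{(-1)}(a) + a\,\varphi^{(0)}(a)\right] - \frac{7w^4}{1440}\left[3\varphi^{(1)}(a) + a\,\varphi^{(2)}(a)\right] + \cdots\right\}. \tag{4.39}$$

$R(1,a,0)$ was discussed and tabulated by Laning and Battin (1956) with $m$ taken to be unity. It is clear that

$$\lim_{a\to\infty} R(1,a,0) = m^2. \tag{4.40}$$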

For further study of the smooth limiter the first Euler-MacLaurin sum formula (see Equation (5.8.12), Hildebrand (1956)) leads to a series expansion for $R(u,w,c)$ in our Equation (4.33) and

$$\lim_{w\to 0} R(u,w,c) = \frac{2b^2}{\pi}\sin^{-1}\left[\frac{u(\tau)}{1 + c^2}\right], \tag{4.41}$$

the inverse sine function obtained by Baum (1957).
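The limit in Equation (4.41) can be checked by quadrature. The sketch below assumes that in the limit $w \to 0$ the smooth limiter is $f(x) = 2b\,\varphi^{(-1)}(x/c)$ with $\varphi^{(-1)}(x) = \int_0^x \varphi^{(0)}(t)\,dt$, that is $f(x) = b\,\mathrm{erf}(x/(c\sqrt{2}))$; this reading, the function names and the use of a tensor Gauss-Hermite rule are assumptions made for illustration.

```python
import math
from numpy.polynomial.hermite_e import hermegauss


def smooth_limiter(x, b, c):
    """Assumed smooth limiter: b * erf(x / (c sqrt 2)), saturating at +/- b."""
    return b * math.erf(x / (c * math.sqrt(2.0)))


def smooth_limiter_correlation(u, b, c, degree=80):
    """E[f(x) f(y)] for Gaussian (0,1) variates with correlation u.

    Uses x = z1 and y = u z1 + sqrt(1 - u^2) z2 with z1, z2 independent, and
    Gauss-Hermite nodes for the weight exp(-z^2 / 2).
    """
    nodes, weights = hermegauss(degree)
    s = math.sqrt(1.0 - u * u)
    total = 0.0
    for z1, w1 in zip(nodes, weights):
        f1 = smooth_limiter(z1, b, c)
        for z2, w2 in zip(nodes, weights):
            total += w1 * w2 * f1 * smooth_limiter(u * z1 + s * z2, b, c)
    return total / (2.0 * math.pi)


def baum_formula(u, b, c):
    """Right side of Equation (4.41)."""
    return 2.0 * b * b / math.pi * math.asin(u / (1.0 + c * c))
```

As $c \to 0$ the limiter approaches the hard limiter and the formula reduces to Equation (4.38); the quadrature itself becomes less accurate for small $c$, where the limiter is nearly discontinuous.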

For the different forms of the autocorrelation functions of the output of the several quantizers discussed above we were concerned with uniform quantizers. Initially the domain of definition was broken up into intervals, each of size $w$. For the non-uniform case one might set up a method of analysis to encompass non-uniformity, which results from use of the least mean square criterion. This brings in Max's (1960) minimizing conditions. Consider the second order case and the corresponding autocorrelation function of the output, as expressed by Equations (4.31) and (4.32). The function $f_x(\alpha)$ does not follow the step-wise characteristic as shown in Figure (4.6). The step characteristic will be entirely dependent upon the minimizing conditions as given by Equations (4.3) and (4.4).

Consider a generalized step-wise behavior given by $y = g(x)$, where $x$ takes on discrete values dictated by the conditions of Equations (4.3) and (4.4). Taking input as Gaussian (0,1) the autocorrelation function of output can be written as

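$$R(u,a,g) = \sum_{n=0}^{\infty}\frac{u^n(\tau)}{n!}\int_{-\infty}^{\infty}\int_{-\infty}^{\infty} g(\alpha)\,g(\beta)\,\varphi^{(n)}(\alpha)\,\varphi^{(n)}(\beta)\,d\alpha\,d\beta, \quad |u(\tau)| < 1, \tag{4.42}$$

or

$$R(u,a,g) = \sum_{n=0}^{\infty}\frac{u^n}{n!}\int_{-\infty}^{\infty} g(\alpha)\,\varphi^{(n)}(\alpha)\,d\alpha\int_{-\infty}^{\infty} g(\beta)\,\varphi^{(n)}(\beta)\,d\beta. \tag{4.43}$$

Integration by parts leads to

$$R(u,a,g) = \sum_{n}\frac{u^n}{n!}\int_{-\infty}^{\infty} g'(\alpha)\,\varphi^{(n-1)}(\alpha)\,d\alpha\int_{-\infty}^{\infty} g'(\beta)\,\varphi^{(n-1)}(\beta)\,d\beta. \tag{4.44}$$

[Figure 4.8: non-uniform step characteristic $y = g(x)$.]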

In Figure (4.8) the quantizer levels may be described by a relationship

$$y = g(x) = y_0 + \sum_{k=1}^{N-1}\int_{-\infty}^{x}\Delta_k\,\delta(s - x_k)\,ds, \tag{4.45}$$

where $\Delta_k = y_k - y_{k-1}$ and the Dirac function serves to pinpoint the jump steps in the quantization procedure. The $y_0$ is the initial quantizer level fixed by choosing $x_0$. Differentiation of Equation (4.45) yields

$$g'(x) = \sum_{k}\Delta_k\,\delta(x - x_k). \tag{4.46}$$

Putting Equation (4.46) into Equation (4.44) leads to

$$R(u,a,g) = \sum_{n}\frac{u^n}{n!}\left[\sum_{k}\Delta_k\int_{-\infty}^{\infty}\delta(\alpha - x_k)\,\varphi^{(n-1)}(\alpha)\,d\alpha\right]\left[\sum_{j}\Delta_j\int_{-\infty}^{\infty}\delta(\beta - x_j)\,\varphi^{(n-1)}(\beta)\,d\beta\right]. \tag{4.47}$$
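Carrying out the delta function integrals in Equation (4.47) leaves the sum $\sum_k \Delta_k\,\varphi^{(n-1)}(x_k)$ squared in each term. The sketch below evaluates the resulting series for an arbitrary set of thresholds and jumps. It is a minimal illustration under stated assumptions: the series is taken from $n = 1$, the $n = 0$ contribution is added separately as the square of the output mean, and $\varphi^{(n)}(x) = (-1)^n He_n(x)\varphi^{(0)}(x)$. The function names are hypothetical.

```python
import math
from numpy.polynomial.hermite_e import hermeval


def phi_n(n, x):
    """n-th derivative of the standard normal density."""
    density = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    return (-1) ** n * float(hermeval(x, [0.0] * n + [1.0])) * density


def upper_tail(x):
    """Probability that a Gaussian (0,1) variate exceeds x."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def step_quantizer_correlation(u, thresholds, jumps, y0, terms=60):
    """E[g(x) g(y)] for g = y0 + sum_k jumps[k] * step(x - thresholds[k])."""
    mean = y0 + sum(d * upper_tail(x) for x, d in zip(thresholds, jumps))
    total = mean * mean                      # n = 0 term (assumed form)
    for n in range(1, terms):
        inner = sum(d * phi_n(n - 1, x) for x, d in zip(thresholds, jumps))
        total += u ** n / math.factorial(n) * inner * inner
    return total
```

A single threshold at zero with jump $2b$ and $y_0 = -b$ reproduces the hard limiter of Equation (4.38); thresholds at $\pm(j - \frac12)w$ with equal jumps $mw$ reproduce Equation (4.30).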

Compare Equation (4.47) with Equation (4.30) and note the effect of a non-uniform quantizer on the structure of the resulting equations. If in Equation (4.47) the $u(\tau)$ takes value unity for $\tau = 0$ then

$$R(1,a,g) = \sum_{n}\frac{1}{n!}\cdots$$

BIBLIOGRAPHY

1.

Algazi, V. R. Useful Approximations to Optimum Quantization.

IEEE Trans. Communication Technology, vol. COM-14, pp. 297-301,

1966.

2. Banta, E. D. On the autocorrelation function of the quantized signal plus noise. IEEE Trans. Information Theory, vol. IT-11, pp. 114-118. 1965.

Baum, R. F. The correlation function of smoothly limited Gaussian

noise.

IEEE Trans. Information Theory, vol. IT-3, pp. 193-197.

1957.

Bennett, W. R. Spectra of quantized signals. Bell Sys. Tech. J., vol. 27, pp. 446-472. 1948.

Black, H. S. and J. O. Edson. Pulse code modulation. AIEE Trans., vol. 66, pp. 495-499. 1947.

Bluestein, L. I. Asymptotically optimum quantizers and optimum analog to digital converters. IEEE Trans. Information Theory (Correspondence), vol. IT-10, pp. 242-246. 1964.

Bluestein, L. I. and R. J. Schwarz. Optimum zero-memory filters.

IRE Trans., vol. IT-8, pp. 337-342. 1962.

Bruce, J. D. Optimum quantization. Massachusetts Institute of

Technology, Research Laboratory of Electronics, Cambridge, Mass.

Tech. Rept. 429, March, 1965.

Carlitz, L. An extension of Mehler's formula. Boll. Un. Mat. Ital. (4) 3, pp. 43-46. 1970.

Carlitz, L. Some extensions of Mehler formula. Collect. Math. 21, pp. 117-130. 1970.

Cramer, H. Mathematical Methods of Statistics. Princeton University Press, 1946. 575 p.

Davis, C. G. An experimental pulse-code modulation system for short-haul systems. Bell Sys. Tech. J., vol. 41, pp. 1-24. 1962.

Elias, P. Bounds on performance of optimum quantizers. IEEE Trans. Information Theory, vol. IT-16, pp. 172-184. 1970.

Elias, P. Bounds and asymptotes for the performance of multivariate quantizers. Annals of Mathematical Statistics, vol. 41, part II, pp. 1249-1259. 1970.

Fleischer, P. E. Sufficient conditions for achieving minimum distortion in a quantizer. IEEE International Convention Record,

Part I, pp. 104-111. 1964.

Garmash, V. A. The quantization of signals with non-uniform steps.

Elektrosvyaz, vol. 10, pp. 10-12. 1957.

Gish, H. and J. N. Pierce. Asymptotically efficient quantizing.

IEEE Trans. Information Theory, vol. IT-14, pp. 676-683.

September, 1968.

Goodall, W. M. Telephony by pulse-code modulation. Bell Sys. Tech. J., vol. 26, pp. 395-409. 1947.

Gould, H. W. and W. Squire. Maclaurin's second formula and its generalizations. American Mathematical Monthly, vol. 70, pp. 44-52, January, 1963.

Hammerle, K. J. On the effect of noisy smeared signal, first order-first order receiver system. Analysis Group Report 3 . (Boeing Company).

Hardy, G. H. and J. E. Littlewood and G. Polya. Inequalities. London: Cambridge University Press, 1934. 314 p.

Harvard Computation Laboratory. Tables of the Error Function and

Its First Twenty Derivatives, vol. 23, Annals of Harvard Computation Laboratory, Harvard University Press, 1946.

Hildebrand, F. B. Introduction to Numerical Analysis. New York: McGraw-Hill, 1956. 511 p.

Hurd, W. J. Correlation function of quantized sine wave plus

Gaussian noise. IEEE Trans. Information Theory, vol. IT-13,

pp. 65-68, January 1967.

Jackson, D. Fourier Series and Orthogonal Polynomials. The Carus

Mathematical Monographs, No. 6, The Mathematical Association of

America, Oberlin, Ohio, 1941. 234 p.

Kendall, M. G. Proof of relations connected with the tetrachoric series and its generalization. Biometrika, vol. 40, pp. 196-198. 1953.

Kosyakin, A. A. The statistical theory of amplitude quantization. Automatika Telemekh, vol. 22, pp. 624-630. June, 1961.

Laning, J. H. and R. H. Battin. Random Processes in Automatic Control. New York, McGraw-Hill, 1956. 434 p.

Lozovoy, I. A. Regarding the computation of the characteristics

of compression in systems with pulse-code modulation.

Telecommunications, No. 10, pp. 18-25.

Mann, H., H. M. Straube, and C. P. Villars. A companded coder for an experimental PCM terminal. Bell Sys. Tech. J., vol. 41, pp. 173-226. 1962.

Max, J. Quantizing for minimum distortion. IEEE Trans. Information Theory, vol. IT-6, pp. 7-12. 1960.

Messerschmitt, D. G. Quantizing for maximum output entropy. IEEE Trans. Information Theory, vol. IT-17, pp. 612-615. September, 1971.

Munroe, M. E. An Introduction to Measure and Integration. Cambridge, Mass., Addison-Wesley, 1953. 310 p.

Panter, P. F. and W. Dite. Quantization distortion in pulse count

modulation with non-uniform spacing of levels. Proc. IRE, vol.

39, pp. 44-48. January, 1951.

Papoulis, A. The Fourier Integral and its applications.

McGraw-Hill, 1962.

318 p.