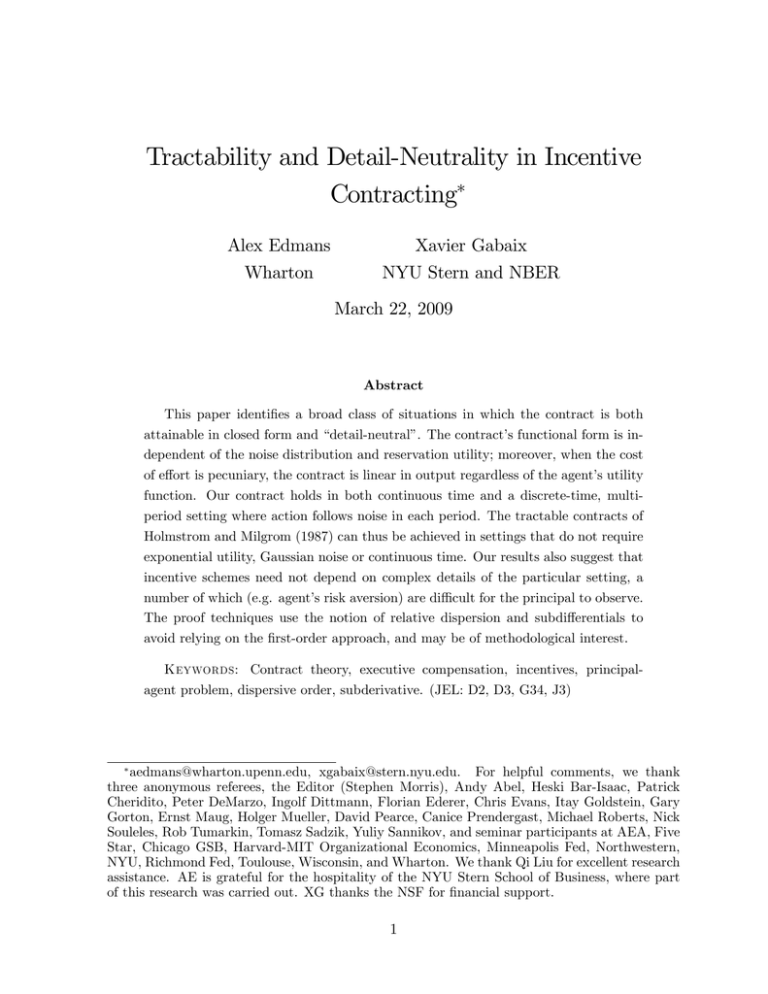

Tractability and Detail-Neutrality in Incentive Contracting

Alex Edmans, Xavier Gabaix