May 2011 Subhra B. Saha (Cleveland State University)

advertisement

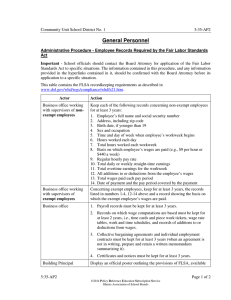

A Framework for Quantifying the Economic Spillovers from Government Activity Applied to Science May 2011 Subhra B. Saha (Cleveland State University) s.b.saha@csuohio.edu Bruce A. Weinberg (Ohio State University, IZA, and NBER) weinberg.27@osu.edu ABSTRACT Governments invest heavily in science and those investments are increasingly being justified in terms of the economic spillovers they generate, such as “jobs created.” Yet there are no accepted methods for quantifying these benefits and their magnitude is widely disputed. We analyze the ways in which science generates economic benefits; lay out how to (and not to) quantify those benefits; and provide a range of estimates. While our estimates vary considerably across specifications, our baseline estimates indicate that a $1B increase in science spending might raise wages by $1.68B and that these wage effects are likely to understate the effects on productivity. We also find that a $1B increase in science might generate 92,500 jobs, with 90% of these jobs being missed even using state-of-the-art “job creation” methods. Our methods can be applied to measure the local productivity spillovers from other government activity as well. JEL Codes: O33, O38, H54, J20 Keywords: Economic Impacts of Science, Knowledge Spillovers, Job Creation We wish to thank Paul Bauer, Karen Berhnardt-Walther, David Blau, Steve Cosslett, Timothy Dunne, Don Haurin, Francisca Richter, Steve Ross, Mark Schweitzer, and Stephan Whitaker for helpful discussions; seminar participants at the Federal Reserve Bank of Cleveland, Ohio State University, the London School of Economics, and the Science of Science Measurement workshop, and the National Academies for comments; and the Federal Reserve Bank of Cleveland and the National Science Foundation for financial support. Paul Bauer and Mark Schweitzer also provided patent data. Mason Pierce and Anna Winston provided excellent research assistance. 1 I. Introduction Governments undertake a wide range of activities and are increasingly measuring the benefits of those activities in terms of their economic impacts, such as the number of “jobs created.” This paper provides a formal analysis of the ways in which one government activity, namely investments in science, generates economic benefits; lays out the best ways to (and not to) quantify those benefits; and provides a range of estimates. Science investments provide a useful window into measuring the impact of government activity. Governments are major supporters of scientific research (OECD [2008]); and since 2003, the United States Federal Government spent roughly $60 billion annually (in 2009 dollars) on basic and applied research (Clemins [2009]). Like many government activities, these investments are increasingly being justified in terms of the economic spillovers they generate, but there is no widely-accepted method for quantifying these benefits and the size of these benefits is widely disputed (Macilwain [2010]). It is important to be clear about the ways in which science generates economic benefits. Perhaps the greatest benefits from science come from the new knowledge, products, and processes that derive from it (just as the primary benefit from building roads, for instance, is to improve transportation). We refer to these benefits as the “direct benefits” of science. At least since Vannevar Bush’s 1945 Science: The Endless Frontier report, government support for science has been motivated by these direct benefits. In recent years, however policy discussions have increasingly focused on what we will refer to as the “spillover benefits” from science, the benefits that arise over and above the direct benefits, such as the number of “jobs created” by science. There are many reasons 2 why science would generate spillover benefits, especially locally. Scientists and their students often work for (or start) companies, increasing the translation and absorption of science; graduates from research institutions may be better equipped to perform in the knowledge economy; and science may generate infrastructure, including equipment and facilities that support industrial innovation, or provide a hub for innovation (e.g. the research institutions in San Francisco may have attracted venture capital that then generated more innovation).1 Our focus is in this area, on the benefits that are generated by science over and above the knowledge and innovations that arise from it directly, an area of increasing policy importance. At a theoretical level, our work points to the advantages of measuring the economic spillovers from science and other government activities using the effects on wages rather than the number of jobs created. Despite the policy interest in job creation metrics, we show that creating jobs per se does not increase social welfare, although it may drive up wages, benefiting households, albeit at the expense of firms. By contrast, the effect of science on wages provides a useful measure of the economic spillovers from science. Specifically, it provides a direct measure of the increase in surplus received per worker and, if the job creation benefits of science are small relative to spillovers, the effects of science on wages provides a lower bound for the effects of science on productivity and total surplus. To estimate the spillover benefits from science, we relate wages and changes in employment in a metropolitan area to academic R&D as a measure of scientific activity. One challenge to this approach is that academic R&D may well be endogenous – cities 1 Salter and Martin [2001] and Scott, Steyn, Geuna, Brusoni, and Steinmueller [2001] discuss mechanisms and survey the literature. 3 with more academic R&D may have more (or less) desirable amenities. In this case, workers would tend to move to those cities depressing wages. This effect will be offset if people with higher (unmeasured) abilities disproportionately move to cities with more academic R&D. On the other hand, universities may be located in cities with lower productivity for economic or political reasons or because they were founded by industrialists in cities that have lagged economically during deindustrialization (Crispin, Saha, and Weinberg [2011]). To address these concerns, we include metropolitan area fixed effects. These account for all time-invariant differences across cities (e.g. in climate or geography). We also employ a share-shift index for academic R&D. This instrument exploits initial differences across metropolitan areas in the academic fields in which their R&D is concentrated interacted with changes in federal support for different fields (e.g. Baltimore is heavily focused in the life sciences, so fluctuations in life sciences funding affect Baltimore more than most cities). While our estimates vary considerably across specifications, our baseline estimates indicate that a $1 billion increase in science spending would likely raise wages by $1.68 billion and that the wage effects are likely to understate the effects on productivity. Our baseline estimates also indicate that a $1 billion increase in science would generate 92,500 jobs, and that roughly 90% of them would be missed even using state-of-the-art “job creation” methods. It is important to note that while our analysis focuses on science, our points about measuring impacts (and the associated empirical challenges) apply far beyond science and research. Wage impacts are likely to measure the increase in social welfare and household surplus better than the number of jobs created for many public works, 4 including investments in local infrastructure. Similarly, the challenge of unobserved differences across cities applies to cross-city regression analysis quite generally. Although the literature on the spillover benefits from science is small, there are a few related studies. An early study by Beeson and Montgomery [1993] finds that university activities are related to the share of scientists and workers with science degrees, but not significantly related to income, employment rates, net migration rates, or the share of employment in high tech industries in a cross-section of cities. Using panel data and instrumental variables to account for the endogeneity of science spending, Saha [2008] finds positive and significant effects of academic R&D and science and engineering degrees in a city on income. Kantor and Whalley [2009] find small but statistically significant agglomeration spillovers caused by university spending induced by shocks to university endowments, although their university activities include a substantial amount of non-scientific activity. There is some evidence that the benefits of science are increasing over time (Goldstein and Renault [2004]; Saha [2008]; and Crispin, Saha, and Weinberg [2010]). Hausman [2010] links these increasing spillovers to the Bayh-Dole Act of 1980 giving universities the rights to license their research. Given that research universities produce large numbers of students as well as science, it is important to control for both individual education and the education distribution of the population. Abel and Deitz [2009] find that controlling for the share of college graduates in the population reduces the estimated effect of academic R&D on wages. Lastly, there is evidence linking University research to innovation. Zucker, Darby, and Brewer [1998] find that the presence of star scientists is associated with more 5 biotechnology startups. Carlino and Hunt [2009] find academic R&D has positive and significant effects on innovative activity as measured by patents Bauer, Schweitzer, and Shane [2009] find that patenting and human capital levels are important determinants of per capita income. These results complement each other – science generates patents, which in turn raise income – providing a first step toward understanding the ways in which science affects wages. Thus the literature has explored how science is related to a wide range of economic outcomes, but there are no accepted methods nor is there agreement on the outcomes and the results are mixed. We contribute to this literature by using rigorous economic principles to compare the relative merits of different approaches. We also produce a set of estimates using a strong research design that allow us to compare the various estimates empirically. II. Theory We model how a government paying to employ people in science (or another activity) affects employment, wages, surplus, and the division of surplus. Our model comprises 2 sectors demanding homogenous labor, a science sector, which is government funded, and a competitive, private, non-science sector. Our model accounts for the fact that science employment may generate productivity spillovers that raise the value of the marginal product of labor in the non-science sector as well as direct benefits in the form of better knowledge and technological advances. We do not account for any multipliers, which might be applied to the job creation estimates.2 2 The Congressional Budget Office [2010] provides a convenient overview of the literature on multipliers. It argues for multipliers for government purchases of goods and services between 1 and 2.5. Even an estimate at the high end of this range would not qualitatively alter our results. Moreover, the benefits captured by the multiplier may be geographically dispersed, while we focus only on local benefits. 6 In the non-science sector, the inverse demand curve for labor is given by the value of the marginal product of labor, V q, s . The value of the marginal product is decreasing in the quantity of labor employed by the non-science sector, q, and non-decreasing in the number of people employed producing science, s because of productivity spillovers. In a competitive equilibrium, the wage paid by firms satisfies, W V q, s . Science generates direct benefits (in the form of knowledge and technological improvements) of Bs for the population as a whole. The reservation wage for the qth worker is W Rq , defining the inverse supply curve for labor. The equilibrium is a wage, W, an (exogenously set) level of employment in science, s, and a total employment level, Q, which includes employment in both the science and non-science sectors (employment in the non-science sector is Q-s) such that, W RQ and W V Q s, s . (II.1) (Here and below, capital Q and W denote equilibrium values.) In analyzing the effect of science on the market, we will refer to Figure 1. The equilibrium in the absence of any science is indicated by (i). We decompose the effect of science on the equilibrium into two components, the “productivity spillovers” from science, given by the movement from (i) to the counterfactual point (*), and a “job creation” effect due to the effect of hiring s people doing science, given by the movement from (*) to (ii). Effect of Science on Employment and Wages We begin by determining the effect of science on employment and wages. The effect of increasing science on the equilibrium can be obtained by totally differentiating the equilibrium conditions in (II.1). Formally, 7 dW dQ dW dQ and Rq Vq 1 Vs . ds ds ds ds (II.2.a and II.2.b) Equating these and solving for the change in quantity implies, Vq Vs dQ 0. ds Rq Vq Rq Vq Job Creation (II.3) Productivity Spillover Using this expression, the log change in employment from an increase in science employment (relative to total employment) is d ln Q dQ S 1 Q D Vs 0 .3 S ds 1 1 ds W D S Q Job Creation (II.4) Productivity Spillover As reflected by the first term, creating science jobs raises total employment less than 1for-1 with the increase in science employment because science employment crowds out non-science employment by raising wages. These effects can be seen in Figure 1, where total employment increases from (*) to (ii) by less than the increase in science jobs, indicated by the distance s, because wages are higher at (ii) than at (*). Put somewhat differently, the number of jobs created doing science, s, overstates the net increase in employment. The greater the labor demand elasticity (the smaller the labor supply elasticity), the more job creation overstates the number of jobs created net of those crowded out. Increasing science also raises the value of the marginal product in the non-science sector if Vs 0 . In Figure 1, the movement from (i) to the counterfactual point (*) gives 3 To derive these expressions, we use the conditions Vq denotes the demand elasticity (written as positive) and 8 S 1 W 1 W D and Rq S , where D Q Q denotes the supply elasticity. the effect of productivity spillovers on employment in the private sector. As is intuitive and can be seen from (II.4), the productivity spillover effect on employment is increasing in both the labor demand and supply elasticities. Given that the labor supply curve is stable, wages increase by the amount that it necessary to induce the increase in total employment. Multiplying the slope of the inverse supply curve (II.2.a) by the employment response (II.3) and rearranging yields, dW 1 W D D Vs 0 . S S ds Q D Job Creation (II.5) Productivity Spillover Wages increase both because demand increases in the science sector (given by the distance s in the figure labeled “job creation”) and because of the productivity spillover to the non-science sector. These effects can be seen separately in Figure 1 as the movement from (*) to (ii) and the movement from (i) to (*). The effect of science on wages is decreasing in the elasticity of labor supply – as the labor supply elasticity increases, the same increase in employment can be achieved with a smaller increase in wages. Below we argue that on the order of 90% of the impacts of science operate through productivity spillovers and that job creation accounts for on the order of 10% of the effects. If so, the wage response will be increasing in the demand elasticity. Moreover, any changes in wages will be dominated by the productivity spillover effect, and the effect of science on will provide a convenient lower bound for the productivity spillovers from science. Under this assumption, the change in wages approaches the change in productivity as D and/or S 0 . Effect of Science on Surplus Having characterized the effect of increasing science on employment and wages, 9 we turn to the effects of increasing science on total surplus and its division. Again, we distinguish the job creation effect from the productivity spillover effect. We also assume that science generates social benefits (in the form of new knowledge and technologies). These benefits are captured in a reduced form way by the curve Bs and generate social welfare, but not employment or wages. Total surplus in the model is TS Qs 0 s Q V q, s dq B~ s d~ s Rq dq . 0 0 (II.6) Intuitively, total surplus is the value of the marginal product received by firms in the nonscience sector plus the direct benefits of science less the opportunity cost of the workers who are employed in science. (Here and elsewhere we ignore the deadweight loss of taxation, although it may be considerable.) The effect of increasing science on total surplus is, Qs dTS Vs q, s dq Bs RQ . 0 ds (II.7) The first term gives the increase in surplus from productivity spillovers as the value of marginal product increases for each of the Q-s workers employed in the private sector. This is reflected in Figure 1 by the parallelogram (a). The second two terms combine the direct benefits of science and the job creation effects. The science itself generates direct benefits but imposes a cost in the form of the opportunity cost of the people employed to produce it. This difference is given by the region (b) in Figure 1. If the direct benefits of science are less than the amount it costs then (b) becomes a loss of surplus. It is noteworthy that job creation per se only generates deadweight loss. If, for instance, the science being produced generates no direct benefits (v(s)=0) then job creation generates a 10 deadweight loss equal to workers’ opportunity costs, given by the area (c). Thus, from a welfare perspective, the gains from science are the direct benefits and the productivity spillovers, with job creation itself generating a deadweight loss. Policy discussions frequently focus on the benefits received by households, so we look particularly at the producer surplus, which accrues to workers. The producer surplus is PS W Rq dq . The change in producer surplus from increasing science is Q 0 equal to the increase in the wage dPS dW . Thus, if one wants to estimate the effects Q ds ds of increasing government spending on science, one wants to focus on wage benefits. Our model has a number of implications. First, it highlights the limitations of job creation as a measure of the benefits from science (or other government activity). Job creation per se does not increase social welfare and, insofar as job creation drives up wages, the number of jobs created will overstate the net increase in employment. At the same time, the model points clearly to the advantages of measuring the benefits of science (and other government activities) using changes in wages. If productivity spillovers dominate the effect of science on wages, then the effect of science on wages provides a lower bound on productivity spillovers. Under any circumstances, the increase in wages measures the increase in consumer surplus received by each worker. Lastly, it generates specific predictions for how the demand and supply elasticities affect how wages (and employment) respond to science. III. Estimation To estimate the relationship between science and employment and wages, we run reduced-form cross-city regressions. There are large variations in the sizes of cities and 11 researchers have emphasized that flows of workers respond to demand shocks slowly (Blanchard and Katz [1992]), so we estimate the effects of science on employment using 10-year changes in (log) employment. As a price, wages respond to demand more quickly, so we a use contemporaneous wage measure. (Wage regressions with lags of science are generally similar, but less precise than those reported here. Consequently, our ability to comment on the timing of impacts is limited.) Our models are of the form, W ln wcti S ct W Z ct W xcti W tW cW ctW cti (III.1) E ln ct 10 S ct E Z ct E tE cE ctE . Ect (III.2) Here ln wcti denotes the log wage of person i in city c at time t; E ct denotes employment in city c at time t; S ct denotes academic R&D per capita in city c at time t; Z ct denotes other characteristics of city c at time t; x cti denotes characteristics of person i in city c at W time t; and the t , c , ct , and cti denote time, city, city-time, and individual level effects, which can be treated as fixed effects or error components. It is worth noting that we estimate our wage equation at the individual level, adjusting our standard errors for the presence of metropolitan area effects. Employment growth is estimated at the metropolitan area using 10-year changes between census years. As indicated, uncontrolled differences in productivity or amenities will bias ̂ W . Changes in productivity or amenities will also bias ˆ E . If, for instance, universities tend to be located in places where productivity would otherwise be low (e.g. because of a lower opportunity cost of real estate) or, in the case of employment, where productivity is decreasing, ̂ W and ˆ E will both be biased downward. (The opposite is true if the most 12 science is in places where productivity would otherwise be high or increasing.) Interestingly, if science is highest in places with (increasingly) desirable consumption amenities then ̂ W will be biased downward and ˆ E upward. (The opposite patterns will generate the opposite bias.) It is also possible that cities with higher S ct will attract the most skilled workers. If so, we would expect ̂ W to be biased upward (it is unclear what effect that would have on ˆ E ). To address these biases, we estimate (III.1) with fixed effects and with instrumental variables. The fixed effects estimates control for time-invariant differences in production and/or consumption amenities across cities, but not time-varying differences, including changes in innovation and average education that are driven by changes in wages. We estimate equation (III.2) in differences (which are equivalent to fixed effects given that we have 2 10-year changes) and with instrumental variables. The difference estimates will account for secular trend differences in growth rates across cities (e.g. the migration from the Northeast and Midwest to the South and West). Instrumental Variables Strategy To address these time-varying differences across cities, we turn to an instrumental variables strategy, relying on share-shift indexes for scientific activity. These instruments exploit historic variations in the research focus of different cities interacted with trends in federal support for different fields. To illustrate our approach, consider a simple, stylized example with two sectors – information and computing technology and bio-medical technology. West Lafayette, Indiana and Baltimore both have considerable academic R&D, but R&D in West Lafayette is more focused in engineering while Baltimore is more heavily focused on the life sciences technology. An increase in life sciences R&D 13 will likely raise R&D in the Baltimore more than in the West Lafayette. Formally, Let e fnt and e fct denote spending on field f in year t nationally and in city c. Total spending in year t in city c is ect f e fct . Field f’s share of all spending in city c in year t is s f |ct e fct ect e fct f e fct . The share shift index starts with the shares in some base year, t=0, which we take to be 1973. Then for each city c the imputed growth (where 1 equates to no change) in spending between 0 and year t is, e fnt ect f s f |c 0 ec 0 e fn 0 f e fc 0 f e fc 0 e fnt e fn 0 (III.3) For each city, the implied growth is a weighted average of the growth in academic R&D spending in each field where the weights for each city correspond to the share of spending in that city in field f. We then interact these growth rates with per capital spending in the base year ( ecPC 0 ) to get e fnt ect ecPC 0 f s f |c 0 e fn 0 PC ec 0 f e fc 0 f e fc 0 e fnt PC ec 0 . e fn 0 (III.4) These are our estimates of predicted academic R&D spending, which vary across cities and over time within cities. Instrumental Variables Estimation In our individual-level wage regressions, (III.1) we instrument for the metropolitan area-level science variable, S ct . Our first stage equation contains individual characteristics, x cti and, insofar as there is selection into cities, these characteristics may themselves be endogenous. To address this concern, we estimate the mean of the 14 individual characteristics in city c in year t, xct , and use the deviation of the characteristics from the city-time mean, xcti xcti xct as instruments for x cti . Formally, the first stage equations for our wage regression (III.1) are of the form, IEct H ct IE Z ct IE xcti IE tIE cIE ctIE ctiIE xcti H ct x Z ct x xcti x tx cx ctx ctix (III.5.a) (III.5.b) where H ct denotes the historic instruments. In both (III.5.a) and (III.5.b) the unit of observation is an individual i in city c at time t, with all people in the city in that year being assigned common values for the city-time variables, S ct , H ct , and Z ct . Instrumenting for x cti with xcti eliminates any bias from selective migration and eliminates noise in the predicted values of IE ct generated by the inclusion of individual level variables in the first stage (because in (II.5.a), ˆ IE 0 by construction). The first stage regressions for the employment growth equation (III.2) are straightforward because this model is estimated at the metropolitan area-level without individual controls. Comparison of Estimates from the Different Strategies If cities where incomes are higher produce more science (e.g. because they invest more in scientific institutions) including city fixed effects and using instrumental variables should reduce the magnitude of the estimates. Interestingly, most work (Saha [2008]; Bauer, Schweitzer, and Shane [2009]; and Kantor and Whalley [2009]) finds that fixed effects and/or instrumental variable estimators are larger than the OLS estimates. This finding suggests that scientific activity is highest in areas that are appealing places to live or where productivity would otherwise be lower (e.g. because universities are sited in out-of-the-way places). 15 The various strategies emphasize different sources of variation in innovation and average education. In particular, the fixed effects estimates place more weight on the high- to middle-frequency variation compared to models without fixed effects, including our instrumental variables estimates. For a given magnitude of change, higher-frequency shocks should have smaller effects on labor supply, consequently wages should respond more. There may be measurement error in scientific activity. Insofar as there is timevarying measurement error, fixed effects estimates are likely to suffer most from attenuation bias. On the other hand, the instrumental variables estimates will correct for attenuation bias. IV. Data We draw together data from a variety of sources. Our main independent variable is academic R&D in a metropolitan area for 1980, 1990, and 2000. We also use data on patenting and the share of the population with a college degree as controls. We use historic data as instruments. Our outcomes are wages and employment, which are drawn from and constructed from the Census Public Use Micro Samples. We also proxy for the supply and demand elasticities using the demographic composition of the population and the industrial composition of employment. Our data draw on and extend Saha [2008] and Crispin, Saha, and Weinberg [2010]. Science, Patenting, and Average Education Variables Data for academic R&D expenditures for individual colleges and universities are obtained from the National Science Foundation’s Survey of Research and Development Expenditures at Universities and Colleges. Spending is reported by field (physical sciences, life sciences, math, engineering, geology, psychology, and social science, which 16 includes the humanities) and source (e.g. federal, state, local, and industrial) for 1980, 1990 and 2000.4 Matching these schools to the Carnegie Classification [2002], about 93% of universities and colleges that have positive R&D are Ph.D. granting research schools, or mining engineering schools. The national observatories and national laboratories, which are large producers of scientific research, are excluded from this sample. R&D is measured in thousands of dollars. Total R&D from all universities and colleges is 64.3 billion in 1980 rising to 110.5 billion in 1990 and to 158.2 billion in 2000. The data is aggregated to a metropolitan area level by matching the schools to IPUMS metropolitan area codes. The New York, Boston, San Francisco, Chicago, and Los Angeles metros have the most R&D but, not surprisingly, university towns like College Station, TX; State College, PA; Iowa City, IA; Lafayette, IN; and Champaign, IL have the most R&D in per capita terms. Our instruments for academic R&D are constructed using these data and are described above. Data on patents for individual metropolitan areas were generously provided by Mark Schweitzer and Paul Bauer of the Federal Reserve Bank of Cleveland. Patent data was extracted from government patent files. See Bauer, Schweitzer, and Shane [2006] for additional details. Data on the education distribution in cities was estimated from the census. Outcomes: Census Micro-Data We measure wages and construct employment using Census data from the Integrated Public Use Microdata Series (IPUMS; see Ruggles; Alexander; Genadek; Goeken; Schroeder; and Sobek [2010]). We use the 1980 1% unweighted metro sample, 4 A limitation of the data is that it does not include information on subcontracts to other organizations or from other organizations. This is only a problem for subcontracts that are to or from organizations that are outside of the lead institution’s metropolitan area. 17 the 1990 1% weighted sample, and the 2000 1% unweighted sample from IPUMS. These samples were chosen to maximize identification of metropolitan areas. These data contain a range of individual characteristics including education, gender, race, ethnicity, marital status as well as city of residence, employment status, earnings, and weeks worked. The wage sample is limited to non-institutionalized civilians not currently enrolled in school living in metropolitan areas age 18 and above. Earnings are measured in real weekly wages (deflated to 1982-1984=100 dollars). Individuals whose real weekly wages were below 40 dollars or above 4000 are excluded from the sample as were people who did not report earnings. Lastly, to ensure that our estimates capture spillovers from academic R&D on the local economy, we discard people who are post-secondary teachers or who work in universities or colleges. Our wage sample includes 382,337 individuals in 125 metropolitan areas in 1980, 420,280 individuals in 127 metropolitan areas in 1990, and 459,574 individuals in 129 metropolitan areas in 2000. The employment sample is similar to the wage sample except that it includes all employed, non-institutionalized civilians over 18 who are not currently enrolled in school (i.e. people with high, low, and unreported earnings are included in this sample). We also include people who work at colleges and Universities and post-secondary school teachers. Thus, when we measure changes in employment for this sample, we will capture effects on researchers, but not student employees. Our theory implies an important role for the labor demand and supply elasticities, for which we generate proxies based on the industrial and demographic composition of cities. Industries that produce goods for local consumption or that rely on inelastically supplied local inputs, such as natural resources or climate have fewer options in terms of 18 where they locate their production and hence a less elastic demands for local labor. We divide industries into three groups, two of which have low demand elasticities. Industries that produce goods consumed locally cannot service the market if they move (e.g. personal services and construction). We refer to these as “local consumption industries.” We refer to industries that are capital intensive (e.g. manufacturing) or rely on natural resources (e.g. agriculture and mining) and cannot relocate quickly or inexpensively as “locationally-tied.” By contrast industries that produce broadly-consumed products and that are not capital intensive to measure those with a high demand elasticity (e.g. wholesale trade).5 Research shows that labor supply is less elastic for educated, prime age-men, than women, less-educated men, or workers who are early or late in their careers (Killingsworth and Heckman [1986]; Pencavel [1986]; Juhn [1992]; Blau [1994]; and Blundell and MaCurdy [1999]). We proxy for the labor supply elasticity using one minus the share of the workforce that is male with a high school degree or higher between ages 35 and 55. For convenience, we refer to this group as people with a “high” elasticity of supply (although it might be more accurate to describe them as the share that does not have a very low elasticity of supply). The education level in a city may generate spillovers. Our use of micro-data enables us to distinguish spillovers from an educated population from the direct effect of the education of the individuals in a city. 5 Our local consumption industries are construction, transportation, retail trade, personal services, and entertainment and our locationally-tied industries are agriculture, mining, manufacturing, and public administration. The other industries are wholesale trade, finance, business and related services, and professional services. 19 Other Metropolitan Area Characteristics We also obtained data on a range of control variables for metropolitan areas like population, crime rates and public school attendance from the State and Metropolitan Data Set 1980, 1990 and 2000 from ICPSR. To measure the cost of living in each metropolitan area, we obtained data for utilities, mortgages, and taxes, from the Places Rated Almanac of 1972, 1980, 1990 and 2000. We include population and its square and their interactions with year in the wage regressions we report here to control for differences in the cost of living, but exclude the other variables, which are likely to be endogenous. Our wage results are robust to including those variables as controls as well.6 Aggregation Metropolitan areas are aggregated to Consolidated Metropolitan Statistical Areas (CMSA), New England Consolidated Metropolitan Areas, (NECMA) and Metropolitan Statistical Areas (MSA). The constituent metropolitan areas in CMSAs and NECMAs change from year to year. For consistency, we use the CMSA, NECMA and MSA classification in the State and Metropolitan Area Data Book 1997-1998 (U.S. Bureau of Census [1998]). Descriptive Statistics Table 1 reports descriptive statistics. On average academic R&D spending is $60 per person in 1982-1984 dollars, with a standard deviation of $84. R&D close to doubles over the period, increasing from $40 in 1980 to $61 in 1990 to $76 in 2000. It is also worth noting that academic R&D is relatively weakly correlated with patenting (on the order of .1 depending on the year). It is more highly correlated with the college graduate 6 We do not control for population in the employment regressions because we are interested in total employment as an indication of the demand for labor, not variations in employment relative to population. 20 population share, frequently having correlations in the range of .3-.4. This correlations is reflected in the estimates below where the coefficient on scientific activity frequently declines once the college graduate population share is controlled. The table also shows the distribution of our proxies for the labor supply and demand elasticities. IV. Results Employment Columns (1a)-(1d) in the top panel of Table 2 report estimates of the (log) employment growth for 1980-90 and 1990-2000 on scientific activity in the initial year (i.e. the 1980-90 change is related to 1980 independent variables and the 1990-2000 change is related to 1990 independent variables), pooling data for both periods. The estimates show a positive relationship between academic R&D (and other variables) in the initial year and employment growth over the following 10 years. The estimate is robust to the inclusion of patenting per capita, but is reduced substantially and becomes insignificant once the college-graduate population share is controlled. Columns (1a)-(1d) in the bottom panel report instrumental variables estimates. (The corresponding first stage regressions are reported in Appendix Table 1 and are very strong, showing F-statistics on the excluded instrument between 100 and 150.) These estimates are very close to those reported in the top panel. Columns (2a)-(2d) in the top panel of Table 2 report estimates of the change in employment growth for 1990 to 2000 relative to 1980 and 1990 regressed on the change in academic R&D between 1980 and 1990. These estimates are somewhat larger than the pooled estimates in Columns (1a)-(1d), but imprecise. They also decline much less with the inclusion of the college-graduate population share. Columns (2a)-(2d) in the bottom 21 panel of Table 2 report instrumental variables estimates. (Here too the first stage equations are quite strong, with F-statistics on the excluded instrument between 22 and 27.) Although these estimates are noisy, they are, if anything, larger than the corresponding cross-sectional estimates and the pooled estimates in Columns (1a)-(1d), providing some reassurance that our estimates are not biased by endogeneity. Moreover, the estimated effects of scientific activity remain quite large even controlling for the college-graduate population share. To get a sense of the magnitudes, here and below, we consider the effect of a $1 billion increase in academic R&D, which would raise R&D per capita by roughly $3.2 per person. Our baseline estimate in the top panel of Column (1a), of .208 (SE=.101) is close to the midpoint of our estimates. Based on it, such an expansion would raise employment by .0675%. With civilian employment of 139 million in 2010, this increase in academic R&D would increase employment by 92.5 thousand people, implying a cost of $10,809 in academic R&D per job, combining local job creation and local productivity effects. This estimate should be interpreted with caution both because the coefficients are estimated with uncertainty and because they vary across the models. To estimate the relative sizes of the job creation and productivity effects, we turn to estimates from the STAR-METRICS Program, the most sophisticated effort to date to evaluate job creation by federal research funding. These estimates indicate that every $1 million spent generates just over 10 jobs and between 4.5 and 7.75 full time equivalent jobs per year (including jobs held by students), for a cost of roughly $100 thousand per job and $222-$129 thousand per full time job per year.7 Thus $1 billion in spending 7 Specifically the Aggregate Employment Estimates are that $1million of quarterly support generated 28 jobs and 18 full time equivalent jobs directly and an additional 13 jobs indirectly through vendors, 22 would generate roughly 10,000 jobs and between 4,500 and 7,750 full-time jobs per year. Our baseline estimates are an order of magnitude larger than those from STARMETRICS, indicating that the productivity effects of science on employment are likely considerably larger than those from job creation. Main Wage Effects Table 3 reports estimates for wages. The top panel of Columns (1a)-(1d) report OLS estimates from regressions that pool data for the 3 census years. The first column shows a positive relationship between science and wages. Including patenting (in the second column) does little to change the estimates, but including the share of the population with college degrees reduces it substantially. The bottom panel of Columns (1a)-(1d) presents comparable estimates using instrumental variables. (The first stage equation is in Appendix Table 2 and shows an F-statistic on the excluded instrument between 110 and 160.) The estimates are quite similar to the OLS estimates. The top panel of Columns (2a)-(2d) reports estimates that include city fixed effects. The estimates are noticeably larger than the ordinary least squares regression, although less precise. The bottom panel of Columns (2a)-(2d) reports two stage least squares estimates with fixed effects. (The first stage equation is in Appendix Table 2 and shows a T-statistic on the excluded instrument between 18 and 19.) These estimates are considerably larger than the previous estimates. As discussed, our results are consistent with the literature, which generally finds subcontracts, and institutional support. The total number of jobs is 41 per $1 million per quarter. Because the indirect jobs are not expressed in full time equivalents, the total number of full-time jobs ranges from an absolute minimum of just over 18 (if all indirect jobs are small, part time jobs) to 31 jobs (if all indirect jobs are full time jobs) per $1 million per quarter. To obtain an annual figure per $1 million, we divide the quarterly figures by 4. It is also noteworthy that STAR-METRICS indicates that roughly 25% of the full time equivalent jobs created by science spending are held by students. These jobs are not included in our estimates. 23 that including fixed effects increases the estimates and there are a few reasons why this might happen. First, science may be performed in places that would otherwise be less productive if, for instance, universities are sited in places where productivity is lower (e.g. because the opportunity cost of land was lower) or if the cities with more research institutions are experiencing negative shocks (see Crispin, Saha, and Weinberg [2010]). The fixed effects and fixed effects instrumental variables estimates also capture higherfrequency variation in scientific activity, which may be more correlated with wages if (for instance), supply is less elastic in the short run because population moves slowly (or labor demand is more elastic) than in the long run. In either case, neither the inclusion of fixed effects nor the use of instrumental variables suggest that endogeneity is biasing the estimated effects of science upward and it is reassuring that the fixed effects instrumental variables estimates, which are the most convincing a priori, are quite strong. As above, we are hesitant to identify a particular magnitude from these estimates given their range, but it may be helpful to quantify the estimated effects of science on wages. Again, consider an increase of $1 billion in science spending, which would raise academic R&D by $3.2 per capita. Taking a coefficient of .082 (from the first OLS estimates, which is at the low end of the coefficients), this would lead to a .026% increase in wages. Given that labor income was roughly $6 trillion in 2010, total wages would increase by $1.68 billion. If, as we have argued, job creation has a small effect on wages, then the effect of science on wages provides a convenient lower bound for the effect of science on productivity and surplus. It is also worth noting that this return ignores the direct benefits of science, which may well exceed the productivity spillovers. Wage Elasticity Effects The effects of science on wages depend on the labor demand and labor supply 24 elasticities. And, as indicated in equation (II.5), when productivity spillovers dominate the wage effects, the higher the labor demand elasticity or the lower the labor supply elasticity, the closer the greater the wage responses. Moreover, as the demand elasticity approaches infinity or the supply elasticity approaches zero, the wage effect affects approach the effects on productivity. This section tests whether wage effects are increasing in the demand elasticity and decreasing in the supply elasticity and uses these estimates to impute the effects of science on productivity. As discussed, we employ proxies for the demand and supply elasticities because we do not measure them directly. To proxy for an inelastic labor demand, we use the share of employment in local consumption industries and in industries that are locationally-tied in that they are capital intensive (e.g. manufacturing) or rely on natural resources (e.g. agriculture and mining). We proxy for the labor supply elasticity using the demographic composition of the population. Specifically, we estimate the share of the workforce that is male with a high school degree or higher between ages 35 and 55. Research shows that labor supply is less elastic for educated, prime age-men, so as the share of workers in this group increases, the elasticity of labor supply decreases. We incorporate these variables into the models by interacting them with academic R&D. Note that we include the main effect of the share of people employed in various industries or the share of the population with a high elasticity of labor supply as well as individual characteristics to control for the direct effect of those variables on wages. Columns (1a)-(1d) of Table 4A report OLS estimates that include interactions between academic R&D and employment in local consumption industries and in locationally-tied industries pooling data for 1980, 1990, and 2000 without fixed effects. 25 We include both of these variables separately because it is impossible to say a priori how the two sets of industries compare in terms of their demand elasticities although, in practice, they produce broadly similar coefficients. As expected, the less elastic the demand for labor, the less wages respond to academic R&D. The magnitudes are large. Using the estimates in column (1a), the effect of $1000 per capita science spending on wages at the mean employment shares is .116. A standard deviation across cities in the employment share of local consumption industries is .043 and locationally-tied industries is .065, so a 1 standard deviation increase in the employment share of both industries would lower the wage response by .162, eliminating the entire wage effect. As indicated, the effect of scientific activity on wages approaches the effect of scientific activity on productivity as the elasticity of demand is increased. We estimate the effect of academic R&D on productivity by looking at the effect of science on wages, where our proxies for the demand elasticity are both 1 standard deviation below their mean. In a city where the employment shares of local consumption and locationally-tied industries is 1 standard deviation above the average, a $1000 per capita increase in science spending would raise wages by .278 percent. A $1 billion increase in science spending would raise wages by .089% or $5.3 billion. Here it is important to bear in mind that in addition to the uncertainty over the coefficients, there is uncertainty as to whether a 1 standard deviation increase in the employment share of both industries corresponds to an infinitely elastic labor demand. Columns (2a)-(2d) of Table 4A report estimates that capture variations in the labor supply elasticity. In keeping with the model, they show that the more workers in groups with a high elasticity of labor supply, the less wages respond to academic R&D. 26 The magnitudes are quite large, with the interaction term being somewhat larger in magnitude than the main effect of academic R&D. It is hard to trace out large variations in the labor supply elasticity using this variable because the share of this high elasticity group does not vary much across cities, but it is clear that when the labor supply is more elastic, wages respond less to science. Table 4B reports fixed effects estimates for both sets of models. These estimates are similar to the estimates without fixed effects, but show an increase in magnitudes like the fixed effects estimates reported above and in the literature. V. Conclusions This paper studies how scientific activity is related to employment and wages. We contribute to this literature by using rigorous economic principles to compare the relative merits of different methods and measures. While much of the policy discussion has focused on the benefits of science in terms of the number of people who are employed doing it, what we refer to as “job creation,” we show that job creation measures do not reflect the benefits to workers or firms but rather reduce surplus. Insofar as one wants to measure the effects of science on employment, our model shows that it is important to account for how science raises local productivity through spillovers. Empirically, we show that the local productivity spillovers from science on employment are likely to be considerably larger than the “job creation” effects. Perhaps more fundamentally, we show that if one wants to measure the benefits of science either to workers or on productivity, it is important to focus on the effects of science on wages rather than employment. Specifically, under assumptions that seem to hold, the effects of science on local wages provide a convenient lower bound for the 27 effects of science on local productivity. We also produce a set of estimates using a strong research design that allow us to compare the various estimates empirically. While our estimates vary considerably across specifications, our baseline estimates indicate that a $1B increase in science spending might raise wages by $1.68 Billion and that these wage effects are likely to understate the effects on productivity. We also find that a $1B increase in science might generate 92,500 jobs, with the vast majority of these jobs being missed even using state-of-the-art “job creation” methods. Perhaps most significantly, our arguments and methods can be applied to measure the economic benefits from government activity more generally. 28 References Bauer, Paul W.; Mark E. Schweitzer, and Scott Shane. 2006. State Growth Empirics: The Long-Run Determinants of State Income Growth. Working Paper. Beeson, Patricia and Edward Montgomery. “The Effects of Colleges and Universities on Local Labor Markets.” The Review of Economics and Statistics 75 (No. 4, November): 753-61. Blanchard, Olivier Jean and Lawrence F. Katz. 1992. “Regional Evolutions.” Brookings Papers on Economic Activity 1: 1-75. Blau, David M. 1994. “Labor Force Dynamics of Older Men.” Econometrica 62 (January, No. 1): 117-56. Blundell, Richard and Thomas MaCurdy. 1999. “Labor Supply: A Review of Approaches.” In Handbook of Labor Economics, Volume 3. Orley Aschenfelter and David Card, Editors. Amsterdam. North-Holland Press. Pp. 1559-1695. Carlino, Gerald and Robert Hunt. 2009. “What Explains the Quantity and Quality of Local Inventive Activity?” Brookings-Wharton Papers on Urban Affairs 10: 65123. Crispin, Laura; Subhra B. Saha; and Bruce A. Weinberg. 2010. “Innovation Spillovers in Industrial Cities.” Working Paper. Clemins, Patrick J. 2009. “Historic Trends in Federal R&D Budgets.” in AAAS Report XXXIV: Research and Development, FY 2010. Washington, D.C.: American Association for the Advancement of Science. pp. 21-26. Congressional Budget Office. 2010. “Estimated Impact of the American Recover and Reinvestment Act on Employment and Economic Output from April 2010-June 2010.” Downloaded from http://www.cbo.gov/ftpdocs/117xx/doc11706/08-24ARRA.pdf on May 23, 2011. Hausman, Naomi. 2010. “Effects of University Innovation on Local Economic Growth and Entrepreneurship.” Working Paper. Juhn, Chinhui. 1992. “The Decline in Male Labor Market Participation: The Role of Declining Market Opportunities,” Quarterly Journal of Economics 107 (February, No. 1): 79-122. Killingsworth, Mark R. and James J. Heckman. 1986. “Female Labor Supply: A Survey.” In Handbook of Labor Economics, Volume 1. Orley Aschenfelter and Richard Layard, Editors. Amsterdam. North-Holland Press. National Science Foundation. 2011. Initial Results of Phase I of the STAR METRICS Program: Aggregate Employment Estimates. Washington, D.C.: Government Printing Office. 29 OECD. 2008. OECD Science Technology and Industry Outlook. Washington, DC: OECD. Pencavel, John. 1986. “Labor Supply of Men: A Survey.” In Handbook of Labor Economics, Volume 1. Orley Aschenfelter and Richard Layard, Editors. Amsterdam. North-Holland Press. Saha, Subhra B. 2008. “Economic Effects of Universities and Colleges.” Working Paper. Zucker, Lynne G.; Michael R. Darby; and Marilynn B. Brewer. 1998. “Intellectual Human Capital and the Birth of U.S. Biotechnology Enterprises.” American Economic Review. (Vol. 88, No. 1, March): 209-306. 30 Figure 1. The Effect of a Subsidy on Wages and Employment with a Productivity Spillover. ln(Wage) Supply B(s) (b) Wii (a) (ii) (*) Wi (i) (c) Productivity Spillover Demand Qi s Qii ln(Employment) Note. The original equilibrium is indicated by (i). We assume that demand is increased by a subsidy and by a productivity spillover generated by the subsidy leading to a new equilibrium at (ii). The parallelogram (a) gives the increase in (total) surplus from the productivity spillover; the triangle (b) gives the loss in surplus from the subsidy. The parallelogram (c) plus the triangle (b) are transferred to firms. 31 Table 1. Descriptive Statistics. Log Wage Academic R&D (Thousand $) Per Capita Patents Per Capita College Grad. Population Share Supply / Demand Elasticity Proxies Highly Supply Elasticity Share Locationally-Tied Industry Share Local-Consumption Industry Share Other Industry Share People Cities All Years Mean SD 5.854 (0.736) 0.060 (0.084) 0.000 (0.000) 0.281 (0.073) 1980 Mean SD 5.810 (0.703) 0.040 (0.055) 0.000 (0.000) 0.217 (0.040) 1990 Mean SD 5.835 (0.736) 0.061 (0.089) 0.000 (0.000) 0.274 (0.052) 2000 Mean SD 5.910 (0.758) 0.076 (0.094) 0.000 (0.001) 0.342 (0.063) 0.791 (0.034) 0.322 (0.043) 0.268 (0.065) 0.410 (0.052) 1,262,193 381 0.831 (0.013) 0.310 (0.044) 0.328 (0.057) 0.361 (0.037) 382,337 125 0.793 (0.014) 0.324 (0.040) 0.264 (0.047) 0.412 (0.039) 420,282 127 0.755 (0.015) 0.329 (0.042) 0.329 (0.042) 0.449 (0.039) 459,574 129 32 Table 2. Employment Regressions. Sample: Initial Academic R&D per Capita Initial Patenting per Capita Initial Col. Grad. Pop. Share Period=1990-2000 Constant Observations R-Squared Initial Academic R&D per Capita Initial Patenting per Capita Initial Col. Grad. Pop. Share Period=1990-2000 Constant Observations R-squared (1a) (1b) (1c) (1d) (2a) (2b) (2c) (2d) 1980-90 and 1990-2000 Changes Pooled (2000-1990)-(1990-80) Log Changes Changes in Log Changes 0.208* 0.225* 0.062 0.075 0.399 0.446 0.369 0.413 (0.101) (0.102) (0.107) (0.105) (0.302) (0.299) (0.255) (0.257) -53.642+ -58.329* -114.308 -116.877 (27.806) (27.799) (123.979) (121.360) 0.640* 0.661* 0.252 0.281 (0.315) (0.305) (0.941) (0.945) -0.095*** -0.093*** -0.125*** -0.124*** (0.022) (0.022) (0.023) (0.024) 0.140*** 0.148*** 0.030 0.036 -0.125*** -0.120*** -0.137* -0.134* (0.020) (0.021) (0.056) (0.056) (0.024) (0.027) (0.064) (0.066) 246 246 246 246 119 119 119 119 0.1034 0.1135 0.1314 0.1432 0.0061 0.0107 0.0069 0.0117 Instrumental Variables 0.227+ 0.247+ 0.045 0.062 0.227 0.342 0.156 0.273 (0.127) (0.128) (0.146) (0.144) (0.476) (0.449) (0.462) (0.450) -54.169* -58.144* -109.767 -111.399 (27.488) (27.378) (120.362) (117.937) 0.652* 0.671* 0.324 0.327 (0.322) (0.311) (0.962) (0.967) -0.095*** -0.093*** -0.126*** -0.124*** (0.022) (0.022) (0.023) (0.023) 0.139*** 0.147*** 0.029 0.034 -0.121*** -0.118*** -0.136* -0.133* (0.021) (0.021) (0.056) (0.056) (0.025) (0.026) (0.063) (0.065) 246 246 246 246 119 119 119 119 0.1033 0.1133 0.1313 0.1432 0.005 0.0103 0.0053 0.011 33 Note. Columns (1a)-(1d) report estimates of the (log) employment growth for 1980-90 and 1990-2000 on science in the initial year (i.e. the 1980-90 change is related to 1980 independent variables and the 1990-2000 change is related to 1990 independent variables), pooling data for both periods. Columns (2a)-(2d) report estimates of the change in employment growth for 1990 to 1990 relative to 1980 and 1990 regressed on the change in academic R&D between 1980 and 1990. For the instrumental variables estimates, the instrument is a share shift index for academic R&D. First stage regressions are reported in Appendix Table 1. Estimates weighted by population in 1990. Standard errors, which are robust to an arbitrary error structure within metropolitan areas in Columns (1a)-(1d), are reported in parentheses. Significance given by: *** p<0.001, ** p<0.01, * p<0.05, + p<0.10. 34 Table 3. Wage Estimates. (1a) Academic R&D per Capita Patenting per Capita Col. Grad. Pop. Share Observations Adjusted R-squared Academic R&D per Capita Patenting per Capita Col. Grad. Pop. Share Observations Adjusted R-squared (1b) (1c) (1d) (2a) (2b) (2c) (2d) Pooled Fixed Effects 0.082+ 0.076+ -0.036 -0.033 0.198 0.166 0.104 0.089 (0.044) (0.039) (0.037) (0.037) (0.131) (0.117) (0.110) (0.104) 19.112 11.165 29.847+ 21.720 (15.712) (12.032) (15.263) (13.810) 0.446** 0.421*** 0.833*** 0.758*** (0.138) (0.120) (0.231) (0.219) 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 0.308 0.308 0.309 0.309 0.299 0.299 0.299 0.299 Instrumental Variables Pooled Fixed Effects 0.118* 0.106** -0.018 -0.018 0.786* 0.666+ 0.483+ 0.416+ (0.052) (0.039) (0.039) (0.040) (0.397) (0.353) (0.264) (0.238) 18.981 11.377 26.946+ 20.524 (15.664) (12.295) (14.216) (13.269) 0.431** 0.408*** 0.757*** 0.697*** (0.133) (0.118) (0.210) (0.204) 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 0.308 0.308 0.309 0.309 0.298 0.299 0.299 0.299 Note. Sample includes data for 1980, 1990, and 2000. Individual-level controls include education, a quartic in potential experience, race (dummies for black and other race), Hispanic background, citizenship, and marital status interacted with gender. Regressions also include the log of population and its square, year dummy variables, and a full set of interactions between them. Estimates weighted by population weights. Fixed effects estimates include metropolitan area fixed effects. In the two-stage least squares estimates, the instruments is a share shift index for academic R&D. The individual characteristics are also treated as endogenous. We include the deviation of each individual variable from its mean in each metropolitan area in each year as instruments. First stage regressions for academic R&D are reported in Appendix Table 2. Standard errors, which are robust to an arbitrary error structure within metropolitan 35 areas, are reported in parentheses. Significance given by: *** p<0.001, ** p<0.01, * p<0.05, + p<0.10. 36 Table 4A. Wage Estimates with Elasticities, Pooled Regressions. Academic R&D per Capita Academic R&D per Capita * Locationally-Tied Industry Share Academic R&D per Capita * Local-Consumption Industry Share Academic R&D per Capita * 1-Share(35-55, Some College+ Men) Patenting per Capita (1a) 0.952* (0.416) -1.157* (0.541) -1.730+ (0.944) (1b) 0.993* (0.405) -1.221* (0.532) -1.813+ (0.932) 20.787 (14.862) Col. Grad. Pop. Share Locationally-Tied Industry Share Local-Consumption Industry Share 1-Share(35-55, Some College+ Men) Observations Adjusted R-squared (1c) 0.441+ (0.261) -0.934* (0.465) -0.826 (0.620) 0.370* (0.142) 0.188 (0.172) 0.408** (0.141) 0.251 (0.169) 0.765*** (0.126) 0.840*** (0.145) 0.743*** (0.169) (1d) 0.482+ (0.260) -0.980* (0.473) -0.905 (0.622) 12.480 (8.601) 0.741*** (0.107) 0.848*** (0.137) 0.763*** (0.163) (2a) 1.036+ (0.527) (2b) 1.099* (0.511) (2c) 1.117* (0.468) (2d) 1.151* (0.456) -1.221+ (0.639) -1.308* (0.624) 19.131 (14.897) -1.478* (0.610) -1.518* (0.591) 11.737 (11.709) 0.455*** (0.135) 0.480** (0.149) -0.066 0.002 0.399 0.416 (0.349) (0.309) (0.302) (0.303) 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 0.308 0.309 0.310 0.310 0.308 0.308 0.309 0.309 [See notes beneath Table 4B.] 37 Table 4B. Wage Estimates with Elasticities, Fixed Effects Estimates. (1a) (1b) (1c) Academic R&D per Capita 1.542** 1.456* 1.099* (0.589) (0.568) (0.439) Academic R&D per Capita -2.265* -2.062* -1.615* * Locationally-Tied Industry Share (0.998) (1.023) (0.748) Academic R&D per Capita -2.557** -2.514** -1.975* * Local-Consumption Industry Share (0.963) (0.945) (0.784) Academic R&D per Capita * 1-Share(35-55, Some College+ Men) Patenting per Capita 28.980* (13.915) Col. Grad. Pop. Share 0.882*** (0.211) Locationally-Tied Industry Share 0.252 0.267 0.502* (0.247) (0.231) (0.250) Local-Consumption Industry Share 0.280 0.378 0.630* (0.263) (0.256) (0.249) 1-Share(35-55, Some College+ Men) Observations Adjusted R-squared (1d) 1.067* (0.441) -1.509+ (0.789) -1.986* (0.803) 22.199+ (11.815) 0.815*** (0.198) 0.494* (0.229) 0.679** (0.247) (2a) 1.239* (0.552) (2b) 1.096* (0.526) (2c) 0.795+ (0.412) (2d) 0.736+ (0.406) -1.487* (0.738) -1.323+ (0.731) 28.050* (13.300) -0.987+ (0.593) -0.919 (0.598) 17.545 (10.757) 0.931*** (0.218) 1.000*** (0.225) 1.016** 0.951* 1.457*** 1.386*** (0.389) (0.368) (0.402) (0.395) 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 0.299 0.299 0.299 0.299 0.299 0.299 0.299 0.299 Note. Sample includes data for 1980, 1990, and 2000. Individual-level controls include education, a quartic in potential experience, race (dummies for black and other race), Hispanic background, citizenship, and marital status interacted with gender. Regressions also include the log of population and its square, year dummy variables, and a full set of interactions between them. Estimates weighted by population weights. Fixed effects estimates include metropolitan area fixed effects. Standard errors, which are robust to an arbitrary error structure within metropolitan areas, are reported in parentheses. Significance given by: *** p<0.001, ** p<0.01, * p<0.05, + p<0.10. 38 Appendix Table 1. Employment Regression, First Stage Equations. Share Shift Index Patenting per Capita Col. Grad. Pop. Share Period=1990-2000 Constant F-Statistic on Share Shift Observations Adjusted R-squared 1980-90 and 1990-2000 Changes Pooled Log Changes 0.619*** 0.619*** 0.616*** 0.616*** (0.051) (0.051) (0.060) (0.060) 0.187 0.093 (10.631) (10.839) 0.016 0.016 (0.120) (0.121) 0.001 0.001 0.000 0.000 (0.002) (0.002) (0.005) (0.005) -0.000 -0.000 -0.003 -0.003 (0.003) (0.004) (0.018) (0.018) 148.22 149.55 104.17 105.07 252 252 252 252 0.809 0.808 0.808 0.807 (2000-1990)-(1990-80) Changes in Log Changes 0.617*** 0.619*** 0.524*** (0.118) (0.119) (0.110) -3.402 (25.874) 0.064 (0.116) 0.001 (0.004) 26.51 127 0.498 0.001 (0.004) 25.16 127 0.494 0.000 (0.005) 22.67 121 0.450 0.528*** (0.113) -8.262 (26.629) 0.065 (0.116) 0.000 (0.005) 21.69 121 0.446 Note. Columns (1a)-(1d) report estimates of the (log) employment growth for 1980-90 and 1990-2000 on science in the initial year (i.e. the 1980-90 change is related to 1980 independent variables and the 1990-2000 change is related to 1990 independent variables), pooling data for both periods. Columns (2a)-(2d) report estimates of the change in employment growth for 1990 to 2000 relative to 1980 and 1990 regressed on the change in academic R&D between 1980 and 1990. The instrument is the share shift index for academic R&D. Estimates weighted by population in 1990. Standard errors, which are robust to an arbitrary error structure within metropolitan areas, are reported in parentheses. Significance given by: *** p<0.001, ** p<0.01, * p<0.05, + p<0.10. 39 Appendix Table 2. Wage Regression, First Stage Equations. Share Shift Index Patenting per Capita Col. Grad. Pop. Share F-Statistic on Share Shift Observations Adjusted R-squared Pooled Fixed Effects 0.487*** 0.469*** 0.469*** 0.226*** 0.226*** 0.222*** 0.222*** (0.039) (0.045) (0.045) (0.051) (0.052) (0.051) (0.052) -4.585 -6.662 0.167 -0.198 (6.948) (7.695) (3.371) (3.529) 0.106 0.120 0.039 0.039 (0.106) (0.108) (0.074) (0.077) 155.52 156.25 108.32 108.94 19.25 18.51 18.65 18.19 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 1,262,181 0.783 0.784 0.786 0.787 0.537 0.537 0.538 0.538 0.485*** (0.039) Note. Sample includes data for 1980, 1990, and 2000. Individual-level controls include education, a quartic in potential experience, race (dummies for black and other race), Hispanic background, citizenship, and marital status interacted with gender. Regressions also include the log of population and its square, year dummy variables, and a full set of interactions between them. Estimates weighted by population weights. Fixed effects estimates include metropolitan area fixed effects. The instrument is a share shift index for academic R&D. Individual characteristics are also treated as endogenous. We include the deviation of each individual variable from its mean in each metropolitan area in each year as instruments. Standard errors, which are robust to an arbitrary error structure within metropolitan areas, are reported in parentheses. Significance given by: *** p<0.001, ** p<0.01, * p<0.05, + p<0.10. 40