Logistic Regression stat 557 Heike Hofmann

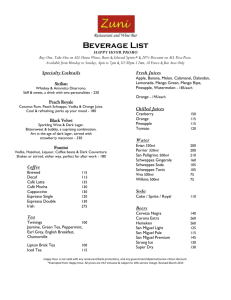

Homework

Outline
• Deviance Residuals
• Logistic Regression:
  • interpretation of estimates
  • inference & confidence intervals
  • model checking by grouping
  • scores

Deviance
• Deviance is used for model comparisons: -2 * difference in log likelihood of two models
• Model M and saturated model: deviance of M, lack of fit of model M
• Model M and null model (intercept only): null deviance, ‘improvement’ of model M
• Models M1 and M2, where M1 is nested within model M2: is M1 sufficient?

Residuals of GLMs
Writing the deviance with the dispersion parameter gives, for models M1 and M2:
$$D(M_1) - D(M_2) = -2\left(L(\hat\theta_1; y) - L(\hat\theta_2; y)\right) = -2\sum_i y_i\left(\hat\theta_{i1} - \hat\theta_{i2}\right)/a(\phi) + 2\sum_i \left(b(\hat\theta_{i1}) - b(\hat\theta_{i2})\right)/a(\phi)$$
For these models, $a(\phi)$ has the form $\phi/\omega_i$, yielding for the deviance of two models:
$$D(M_1) - D(M_2) = 2\sum_i \omega_i\left[y_i\left(\hat\theta_{i2} - \hat\theta_{i1}\right) + b(\hat\theta_{i1}) - b(\hat\theta_{i2})\right]/\phi$$
Using the full model for M2, we get residuals for $y_i$ by taking $\sqrt{d_i}$, where $D = \sum_i d_i$ and $d_i$ is the individual deviance contribution:
• $d_i = 2\omega_i\left[y_i\left(\hat\theta_{i2} - \hat\theta_{i1}\right) + b(\hat\theta_{i1}) - b(\hat\theta_{i2})\right]$
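The relations above can be checked numerically. The following is a minimal sketch on simulated data (the data, the variable names `x`, `z`, `y`, and the model names `m0`, `m1`, `m2` are assumptions made for illustration, not part of the lecture). It compares the deviance difference of two nested models with -2 times the difference in log-likelihood, and sums the individual contributions $d_i$, which R exposes as squared deviance residuals.

```r
# Simulated data, for illustration only (not from the lecture)
set.seed(1)
n <- 200
x <- rnorm(n)
z <- rnorm(n)
y <- rbinom(n, size = 1, prob = plogis(-0.5 + 1.2 * x))

m0 <- glm(y ~ 1,     family = binomial())  # null model (intercept only)
m1 <- glm(y ~ x,     family = binomial())  # M1, nested within M2
m2 <- glm(y ~ x + z, family = binomial())  # M2

# Deviance difference versus -2 * difference in log-likelihood
dev_diff <- deviance(m1) - deviance(m2)
ll_diff  <- -2 * (as.numeric(logLik(m1)) - as.numeric(logLik(m2)))

# Individual contributions d_i: squared deviance residuals
d_i <- residuals(m1, type = "deviance")^2

print(c(dev_diff = dev_diff, ll_diff = ll_diff))
print(c(sum_d_i = sum(d_i), deviance = deviance(m1)))
print(c(null_deviance = m1$null.deviance, deviance_m0 = deviance(m0)))
```

Each printed pair should agree up to numerical tolerance; `anova(m1, m2)` reports the same deviance difference.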

Residuals
• Deviance residual for observation i: $\sqrt{d_i}\,\operatorname{sign}(y_i - \hat\mu_i)$
• Pearson residual for observation i: $e_i = \dfrac{y_i - \hat\mu_i}{\sqrt{\operatorname{Var}(y_i)}}$
• Both sets of residuals underestimate the variance

Logistic Regression
• Y is binary (with levels 0, 1): $E[Y] = \pi$, $\operatorname{Var}[Y] = \pi(1-\pi)/n$ (for the proportion of successes in n trials; n = 1 for a single binary observation)
• For ease of discussion: p = 1, X is the covariate
• $g(E[Y_i]) = \alpha + \beta x_i$ with $g(\pi) = \log\left(\pi/(1-\pi)\right)$
• We already know: for binary x, $\beta$ is the log odds ratio

[Figure: logistic curves p(x) plotted against x, for x from -4 to 4 and p(x) from 0 to 1]

• $\pi(x) = \dfrac{\exp(\alpha + \beta x)}{1 + \exp(\alpha + \beta x)}$

Summary of previous findings:
• For $\beta > 0$, $\pi(x)$ increases in x; for $\beta < 0$, $\pi(x)$ decreases in x.
• Since $\pi(x)/(1 - \pi(x)) = \exp(\alpha + \beta x) = \exp(\alpha)\exp(\beta x)$, the odds change multiplicatively by $\exp(\beta)$ for every unit increase in X, i.e. for every unit there is a $(\exp(\beta) - 1)\cdot 100\%$ increase in the odds.
• $\exp(\beta)$ is an odds ratio: the ratio of the odds at x + 1 versus the odds at x.
• The inflection point of the curve is at $\pi(x) = 0.5$, i.e. at $x = -\alpha/\beta$. This x is called the median effective level $EL_{50}$ or median lethal dose $LD_{50}$.
• The curve has its steepest slope at the inflection point; that slope is $\beta/4$.

Inference for β
• Logistic regression model: $\operatorname{logit} \pi(x) = \alpha + \beta x$
• The parameter of interest is usually $\beta$, in a hypothesis test of $H_0: \beta = 0$.
• Mainly three methods, each with a test statistic:
  • Wald test: $\left(\hat\beta / SE_\beta\right)^2 \sim \chi^2_1$
  • Likelihood ratio test: $2\left(L(\hat\beta; y) - L(0; y)\right) \sim \chi^2_1$
  • Score test: $\dfrac{u(\beta_0)^2}{\iota(\beta_0)} = \dfrac{\left(\partial L/\partial\beta\right)^2}{-E\left[\partial^2 L/\partial\beta^2\right]} \sim \chi^2_1$, with the derivatives evaluated at the null value $\beta_0 = 0$
• Confidence intervals for $\beta$ are then found by backwards calculation; e.g. the $(1-\alpha)\cdot 100\%$ Wald CI is $\hat\beta \pm z_{\alpha/2}\, SE_\beta$

Inference for π(x)
• Confidence intervals for $\pi(x_0)$ are based on confidence intervals for $\operatorname{logit} \pi(x_0)$, i.e. use the link function g.
• At $x_0$:
$$\operatorname{var}\left(\log\frac{\hat\pi(x_0)}{1 - \hat\pi(x_0)}\right) = \operatorname{var}(\hat\alpha + x_0\hat\beta) = \operatorname{var}\hat\alpha + x_0^2 \operatorname{var}\hat\beta + 2 x_0 \operatorname{cov}(\hat\alpha, \hat\beta)$$
• Wald CI for $\operatorname{logit} \pi(x_0)$:
$$\hat\alpha + x_0\hat\beta \pm z_{\alpha/2}\sqrt{\operatorname{var}\left(\log\frac{\hat\pi(x_0)}{1 - \hat\pi(x_0)}\right)} = (l, u)$$
• The $(1-\alpha)\cdot 100\%$ Wald CI for $\pi(x_0)$ is then
$$\left(\frac{e^l}{1 + e^l},\ \frac{e^u}{1 + e^u}\right)$$

Example: Happiness Data
> summary(happy)
           happy               marital           year            age            sex
 not too happy: 5629   divorced     : 6131   Min.   :1972   Min.   : 18.00   female:28581
 pretty happy :25874   married      :27998   1st Qu.:1982   1st Qu.: 31.00   male  :22439
 very happy   :14800   never married:10064   Median :1990   Median : 43.00
 NA's         : 4717   separated    : 1781   Mean   :1990   Mean   : 45.43
                       widowed      : 5032   3rd Qu.:2000   3rd Qu.: 58.00
                       NA's         :   14   Max.   :2006   Max.   : 89.00
                                                            NA's   :184.00
            degree                  finrela             health
 bachelor      : 6918   above average    : 8536   excellent:11951
 graduate      : 3253   average          :23363   fair     : 7149
 high school   :26307   below average    :10909   good     :17227
 junior college: 2601   far above average:  898   poor     : 2164
 lt high school:11777   far below average: 2438   NA's     :12529
 NA's          :  164   NA's             : 4876

Only consider the extremes: "very happy" and "not too happy" individuals.

prodplot(data=happy, ~ happy+sex, c("vspine", "hspine"), na.rm=TRUE, subset=level==2)
# almost perfect independence

[Figure: product plot of happy by sex (female, male)]

# try a model
happy.sex <- glm(happy~sex, family=binomial(), data=happy)
summary(happy.sex)

Call:
glm(formula = happy ~ sex, family = binomial(), data = happy)

Deviance Residuals:
    Min       1Q   Median       3Q      Max
-1.6060  -1.6054   0.8027   0.8031   0.8031

Coefficients:
            Estimate Std. Error z value Pr(>|z|)
(Intercept)  0.96613    0.02075  46.551   <2e-16 ***
sexmale      0.00130    0.03162   0.041    0.967
---
Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for binomial family taken to be 1)

    Null deviance: 24053  on 20428  degrees of freedom
Residual deviance: 24053  on 20427  degrees of freedom
AIC: 24057

Number of Fisher Scoring iterations: 4

> confint(happy.sex)
Waiting for profiling to be done...
                  2.5 %     97.5 %
(Intercept)  0.92557962 1.00693875
sexmale     -0.06064378 0.06332427

> anova(happy.sex)
Analysis of Deviance Table

Model: binomial, link: logit

Response: happy

Terms added sequentially (first to last)

     Df  Deviance Resid. Df Resid. Dev
NULL                  20428      24053
sex   1 0.0016906     20427      24053

• Deviance difference is asymptotically χ² distributed
• Null hypothesis of independence cannot be rejected

Age and Happiness
qplot(age, geom="histogram", fill=happy, binwidth=1, data=happy)

[Figure: histogram of age (20 to 80) with count on the y-axis, filled by happy (not too happy, very happy)]

qplot(age, geom="histogram", fill=happy, binwidth=1, position="fill", data=happy)

[Figure: the same histogram with position="fill", showing proportions from 0.0 to 1.0 by age]

# research paper claims that happiness is u-shaped
happy.age <- glm(happy~poly(age,2), family=binomial(), data=na.omit(happy[,c("age","happy")]))

> summary(happy.age)

Call:
glm(formula = happy ~ poly(age, 2), family = binomial(), data = na.omit(happy[, c("age", "happy")]))

Deviance Residuals:
    Min       1Q   Median       3Q      Max
-1.6400  -1.5480   0.7841   0.8061   0.8707

Coefficients:
              Estimate Std. Error z value Pr(>|z|)
(Intercept)    0.96850    0.01571  61.660  < 2e-16 ***
poly(age, 2)1  6.41183    2.22171   2.886  0.00390 **
poly(age, 2)2 -7.81568    2.21981  -3.521  0.00043 ***
---
Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for binomial family taken to be 1)

    Null deviance: 23957  on 20351  degrees of freedom
Residual deviance: 23936  on 20349  degrees of freedom
AIC: 23942

Number of Fisher Scoring iterations: 4

# effect of age
X <- data.frame(age=20:85)
X$pred <- predict(happy.age, newdata=X, type="response")
qplot(age, pred, data=X) + ylim(c(0,1))

[Figure: predicted probability pred (0.0 to 1.0) plotted against age (20 to 80)]

> anova(happy.age)
Analysis of Deviance Table

Model: binomial, link: logit

Response: happy

Terms added sequentially (first to last)

             Df Deviance Resid. Df Resid. Dev
NULL                         20351      23957
poly(age, 2)  2   20.739     20349      23936

Problems with Deviance
• If X is continuous, the deviance no longer has a χ² distribution. The assumptions are violated two-fold:
  • Even if we regard X as categorical (with lots of categories), we might end up with a contingency table that has lots of small cells, which means that the χ² approximation does not hold.
  • An increase in sample size most likely increases the number of different values of X. The corresponding contingency table changes size (the asymptotic distribution for the smaller contingency table doesn’t exist).

> xtabs(~happy+age, data=happy)
               age
happy            18  19  20  21  22  23  24  25  26  27  28  29  30  31  32  33  34
  not too happy  19  93  95 105 120 119 127 123 120 126 116  91 127 102 115  91 112
  very happy     36 155 185 202 226 268 260 310 286 336 342 303 322 291 350 333 329
               age
happy            35  36  37  38  39  40  41  42  43  44  45  46  47  48  49  50  51
  not too happy 107 123 108 110 101 107 123  94 117 104 101  85  93  87 102  94  87
  very happy    307 322 320 309 273 287 271 279 276 251 243 257 230 248 242 251 229
               age
happy            52  53  54  55  56  57  58  59  60  61  62  63  64  65  66  67  68
  not too happy 100  86  99  95  85  70  86  83  72  70  60  71  58  66  65  72  63
  very happy    214 232 226 176 204 209 221 228 195 194 213 216 189 197 194 188 214
               age
happy            69  70  71  72  73  74  75  76  77  78  79  80  81  82  83  84  85
  not too happy  53  43  58  48  59  56  41  57  49  38  35  27  31  20  26  24  17
  very happy    188 203 153 162 162 147 132 135 109 106  85  71  69  75  66  45  40
               age
happy            86  87  88  89
  not too happy  16  15  16  31
  very happy     32  32  20  76

Grouping might be better:
happy$agec <- cut(happy$age, breaks=c(15,10*2:9))
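
To see what the grouping does, here is a small sketch on simulated data (the vectors `age` and `happy` below are made up for illustration and are not the survey data). It applies the same `cut()` breaks as above and compares the smallest cell count before and after grouping.

```r
# Simulated stand-in for the survey data, for illustration only
set.seed(557)
age   <- sample(18:89, size = 500, replace = TRUE)
happy <- factor(rbinom(500, size = 1, prob = 0.75),
                levels = 0:1, labels = c("not too happy", "very happy"))

# Same breaks as in the slide: 15, 20, 30, ..., 90
agec <- cut(age, breaks = c(15, 10 * 2:9))

tab_raw <- xtabs(~ happy + age)   # one column per distinct age
tab_grp <- xtabs(~ happy + agec)  # one column per age group

print(levels(agec))
print(tab_grp)
print(c(min_cell_raw = min(tab_raw), min_cell_grouped = min(tab_grp)))
```

The intervals are right-closed, so an age of 20 falls into `(15,20]` and an age of 21 into `(20,30]`. The grouped table has eight columns regardless of how many distinct ages occur.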

Model Checking by Grouping
• To get around the problems with the distribution, group the data along the estimates, e.g. by partitioning on the estimates such that the groups are approximately equal in size.
• Partition: the smallest $n_1$ estimates go into group 1, the second smallest batch of $n_2$ estimates into group 2, ...
• If we assume g groups, we get the Hosmer-Lemeshow test statistic
$$\sum_{i=1}^{g} \frac{\left(\sum_{j=1}^{n_i} y_{ij} - \sum_{j=1}^{n_i} \hat\pi_{ij}\right)^2}{\left(\sum_{j=1}^{n_i} \hat\pi_{ij}\right)\left(1 - \sum_{j=1}^{n_i} \hat\pi_{ij}/n_i\right)} \sim \chi^2_{g-2},$$
  where $y_{ij}$ is the j-th observation in group i and $\hat\pi_{ij}$ is its fitted probability.

Problems with Grouping
• Different groupings might (and will) lead to different decisions w.r.t. model fit
• Hosmer et al. (1997): “A Comparison of Goodness-of-Fit Tests for the Logistic Regression Model” (on Blackboard)

For a categorical X, the model is
$$\log\frac{\pi(x)}{1 - \pi(x)} = \alpha + \beta_i,$$
where $\beta_i$ is the effect of the i-th category of X on the log odds, i.e. there is one effect for each category. This means that the above model is overparameterized (the “last” category can be explained by the others). To make the solution unique again, we have to use an additional constraint. […] default. Whenever one of the effects is fixed to be zero, this is called a contrast coding, since it gives a comparison of all the other effects to the baseline effect. For effect coding the constraint is on the sum of all effects of a variable: $\sum_i \beta_i = 0$. In a binary variable the effects are then the negatives of each other. Predictions and inference are independent of the specific coding used and are not affected by changes in the coding.
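
The claim that predictions do not depend on the coding can be sketched in R. The counts below are hypothetical (a made-up three-level factor `x`), and the choice of `contr.treatment` for contrast coding and `contr.sum` for effect coding is an assumption about how one would set the two constraints in R, not something taken from the lecture.

```r
# Hypothetical grouped counts for a three-level factor, for illustration only
d <- data.frame(
  x       = factor(c("a", "b", "c")),
  success = c(10, 20, 30),
  failure = c(30, 20, 10)
)

# Contrast coding: the effect of the first level is fixed at zero
m_contrast <- glm(cbind(success, failure) ~ x, family = binomial(), data = d,
                  contrasts = list(x = "contr.treatment"))

# Effect coding: the effects are constrained to sum to zero
m_effect <- glm(cbind(success, failure) ~ x, family = binomial(), data = d,
                contrasts = list(x = "contr.sum"))

# Under effect coding only two effects are reported;
# the third is minus the sum of the other two
b <- coef(m_effect)[-1]
effects_all <- c(b, -sum(b))

print(coef(m_contrast))
print(effects_all)
print(cbind(contrast = fitted(m_contrast), effect = fitted(m_effect)))
```

The coefficients differ between the two fits, but the fitted probabilities agree, which is the sense in which the coding does not matter.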

Example: Alcohol during pregnancy
Alcohol during pregnancy is believed to be associated with congenital malformation. The following data are from an observational study:
• At 3 months of pregnancy, expectant mothers were asked for their average daily alcohol consumption (average number of alcoholic beverages).
• At birth, the infant was checked for malformations.

  Alcohol malformed absent P(malformed)
1 0              48  17066       0.0028
2 <1             38  14464       0.0026
3 1-2             5    788       0.0063
4 3-5             1    126       0.0079
5 ≥6              1     37       0.0263

Models m1 and m2 are the same in terms of statistical behavior: deviance, predictions and […] the same numbers. The variable Alcohol is recoded for the second model, giving different […]

Saturated Model
glm(formula = cbind(malformed, absent) ~ Alcohol, family = binomial())

Deviance Residuals:
[1]  0  0  0  0  0

Coefficients:
            Estimate Std. Error z value Pr(>|z|)
(Intercept) -5.87364    0.14454 -40.637   <2e-16 ***
Alcohol<1   -0.06819    0.21743  -0.314   0.7538
Alcohol1-2   0.81358    0.47134   1.726   0.0843 .
Alcohol3-5   1.03736    1.01431   1.023   0.3064
Alcohol>=6   2.26272    1.02368   2.210   0.0271 *
---
Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for binomial family taken to be 1)

    Null deviance:  6.2020e+00  on 4  degrees of freedom
Residual deviance: -3.0775e-13  on 0  degrees of freedom
AIC: 28.627

Number of Fisher Scoring iterations: 4

‘Linear’ Effect (levels: 1,2,3,4,5)
glm(formula = cbind(malformed, absent) ~ as.numeric(Alcohol), family = binomial())

Deviance Residuals:
      1        2        3        4        5
 0.7302  -1.1983   0.9636   0.4272   1.1692

Coefficients:
                    Estimate Std. Error z value Pr(>|z|)
(Intercept)          -6.2089     0.2873 -21.612   <2e-16 ***
as.numeric(Alcohol)   0.2278     0.1683   1.353    0.176
---
Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for binomial family taken to be 1)

    Null deviance: 6.2020  on 4  degrees of freedom
Residual deviance: 4.4473  on 3  degrees of freedom
AIC: 27.074

Number of Fisher Scoring iterations: 5

‘Linear’ Effect (levels: 0,0.5,1.5,4,7)
glm(formula = cbind(malformed, absent) ~ as.numeric(Alcohol), family = binomial())

Deviance Residuals:
      1        2        3        4        5
 0.5921  -0.8801   0.8865  -0.1449   0.1291

Coefficients:
                    Estimate Std. Error z value Pr(>|z|)
(Intercept)          -5.9605     0.1154 -51.637   <2e-16 ***
as.numeric(Alcohol)   0.3166     0.1254   2.523   0.0116 *
---
Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Dispersion parameter for binomial family taken to be 1)

    Null deviance: 6.2020  on 4  degrees of freedom
Residual deviance: 1.9487  on 3  degrees of freedom
AIC: 24.576

Number of Fisher Scoring iterations: 4

Scores
• Scores of categorical variables critically influence a model
• Usually, scores will be given by data experts
• Various choices: e.g. midpoints of interval variables
• Default scores are the values 1 to n

Next:
• Logit models for nominal and ordinal response
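
How much the choice of scores matters can be sketched with the alcohol and malformation counts from the example in this lecture. The vector and model names (`scores_default`, `scores_slide`, `fit_default`, `fit_slide`) are introduced here for illustration; the two score sets are the ones listed with the ‘Linear’ Effect models.

```r
# Counts from the alcohol / malformation table in this lecture
malformed <- c(48, 38, 5, 1, 1)
absent    <- c(17066, 14464, 788, 126, 37)

# Two sets of scores for the five alcohol categories
scores_default <- 1:5                   # default scores 1 to n
scores_slide   <- c(0, 0.5, 1.5, 4, 7)  # scores listed as "levels: 0,0.5,1.5,4,7"

fit_default <- glm(cbind(malformed, absent) ~ scores_default, family = binomial())
fit_slide   <- glm(cbind(malformed, absent) ~ scores_slide,   family = binomial())

# Slope, its Wald p-value and the residual deviance under each scoring
compare <- rbind(
  default = c(coef(summary(fit_default))[2, c("Estimate", "Pr(>|z|)")],
              deviance = deviance(fit_default)),
  slide   = c(coef(summary(fit_slide))[2, c("Estimate", "Pr(>|z|)")],
              deviance = deviance(fit_slide))
)
print(round(compare, 4))
```

If the counts are entered as tabulated, the slopes and residual deviances should agree with the two ‘Linear’ Effect summaries (0.2278 and 0.3166; 4.4473 and 1.9487). The same data then give a non-significant slope under one scoring and a significant one under the other.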