Predicting Extreme Events: Oliver Edward Newth

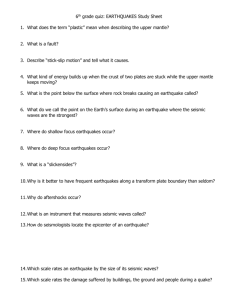
