Connecting the Dots Between Learning and Resources

Jane V. Wellman

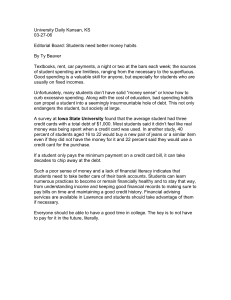
