Lecture 13: Review of vector spaces V over fields F .



Emphasis in Math 221: F = R

Emphasis in Math 342: finite fields, F = GF(q). Recall that GF(q) is the unique field of size q and that q must be a prime power: q = p^k, where p is prime and k is a positive integer. Recall that GF(p) = Z_p. Recall that GF(4) ≠ Z_4; GF(4) is instead given by the addition and multiplication tables on page 36.

Defn: A vector space over a field F is a nonempty set V with

vector addition and scalar multiplication operations that satisfy the

nine axioms on pp. 41-42 of the text.

The first five axioms can be summarized to say that (V, +) is an

abelian group. The other axioms are:

(vi) (Closure of scalar multiplication): for a ∈ F, u ∈ V , we have

au ∈ V

(vii) (Distributivity): for a, b ∈ F, u, v ∈ V , we have a(u + v) = au + av and (a + b)u = au + bu.

(viii) (Compatibility of multiplication in the field and scalar multiplication): for a, b ∈ F, u ∈ V , we have (ab)u = a(bu)

(ix) (Identity) for u ∈ V , we have 1u = u where 1 is the multiplicative identity of F

For any field F and positive integer n, F^n is a vector space over F; here, vector addition is coordinate-wise addition:

(u_1, . . . , u_n) + (v_1, . . . , v_n) = (u_1 + v_1, . . . , u_n + v_n)

and scalar multiplication is coordinate-wise scalar multiplication:

a(u_1, . . . , u_n) = (a u_1, . . . , a u_n)


For F = R, F^n = R^n, n-dimensional Euclidean space.

We focus on the vector space V(n, q) := GF(q)^n. Note that |V(n, q)| = q^n, so V(n, q) is finite (in contrast to R^n).

Example: V(2, 3) = Z_3^2 = {(x, y) : x, y ∈ Z_3}

(1, 2) + (2, 2) = (0, 1), 2(1, 2) = (2, 1).
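
The two computations above can be reproduced with a short Python sketch. The helper names vec_add and scalar_mul are illustrative (they do not come from the text), and the code assumes q = p is prime, so that GF(p) = Z_p and field arithmetic is just reduction mod p.

```python
# Coordinate-wise arithmetic in V(n, p) = (Z_p)^n, assuming p is prime.
# vec_add and scalar_mul are hypothetical helper names used for illustration.

def vec_add(u, v, p):
    """Add two vectors of equal length coordinate-wise, reducing mod p."""
    return tuple((x + y) % p for x, y in zip(u, v))

def scalar_mul(a, u, p):
    """Multiply every coordinate of u by the scalar a, reducing mod p."""
    return tuple((a * x) % p for x in u)

print(vec_add((1, 2), (2, 2), 3))   # (0, 1)
print(scalar_mul(2, (1, 2), 3))     # (2, 1)
```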

Defn: A subset W of a vector space V is a subspace if it is a

vector space, in its own right, using the same vector addition and

scalar multiplication as in V (and over the same field).

The following characterization of subspaces is similar to the characterization of subgroups that we gave earlier.

Theorem 4.1 A nonempty subset W of a vector space V is a subspace iff it is closed under vector addition and scalar multiplication.

Proof: “only if:” obvious.

“if:” Axioms (vii) - (ix) for W are inherited from V .

Axiom (vi) holds by assumption.

So, it remains to show that (W, +) is an abelian group. For this, we know by our earlier characterization of subgroups that it suffices to prove that W is closed under 1) vector addition and 2) additive inverses. We are assuming 1). And 2) follows from closure under scalar multiplication and the fact that for any u ∈ W , (−1)u = −u. We leave this fact as an exercise in HW3.

Example: {(x, y, 0) : x, y ∈ Z_3} and {(x, x, x) : x ∈ Z_3} are subspaces of V(3, 3). To see this, apply Theorem 4.1.
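
Because V(3, 3) is finite, the criterion of Theorem 4.1 can be checked by brute force. The following is a minimal sketch, assuming q = p is prime; is_subspace is a hypothetical helper name, and the third set is an extra non-example added only for contrast.

```python
from itertools import product

def is_subspace(W, p):
    """Theorem 4.1 test over Z_p: W is nonempty and closed under
    vector addition and scalar multiplication (all arithmetic mod p)."""
    W = set(W)
    if not W:
        return False
    closed_add = all(
        tuple((x + y) % p for x, y in zip(u, v)) in W for u in W for v in W
    )
    closed_scalar = all(
        tuple((a * x) % p for x in u) in W for a in range(p) for u in W
    )
    return closed_add and closed_scalar

W1 = {(x, y, 0) for x, y in product(range(3), repeat=2)}
W2 = {(x, x, x) for x in range(3)}
not_sub = {(1, 0, 0), (0, 1, 0)}   # illustrative non-example: (1, 1, 0) is missing

print(is_subspace(W1, 3), is_subspace(W2, 3), is_subspace(not_sub, 3))
# True True False
```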

Defn: Let V be a vector space, u_1, . . . , u_k ∈ V, a_1, . . . , a_k ∈ F . An expression of the form

$$\sum_{i=1}^{k} a_i u_i$$

is called a linear combination of {u_1, . . . , u_k}.

Defn: Let V be a vector space and S ⊆ V . The span of S, denoted ⟨S⟩, is the set of all linear combinations of elements of S. And S is called a spanning set for ⟨S⟩.

It follows from Theorem 4.1 that ⟨S⟩ is a subspace of V . In fact, every subspace of V arises in this way: if W is a subspace of V , then W = ⟨W⟩.

In the following examples, we consider subsets S ⊂ V (3, 3).

Example 1: Let S = {(1, 0, 0), (0, 1, 0), (0, 0, 1)}.

Claim: ⟨S⟩ = V(3, 3).

Proof: Clearly LHS ⊆ RHS. For the reverse inclusion: given

(x, y, z) ∈ V (3, 3), we can write

(x, y, z) = x(1, 0, 0) + y(0, 1, 0) + z(0, 0, 1)

Example 2: Let S = {(1, 0, 0), (0, 1, 0)}.

Claim: ⟨S⟩ = {(x, y, 0) : x, y ∈ Z_3}.

Proof: Clearly LHS ⊆ RHS. For the reverse inclusion: given

(x, y, 0), we can write

(x, y, 0) = x(1, 0, 0) + y(0, 1, 0)

Example 3: Let S = {(1, 0, 0), (2, 1, 0)}.

Claim: ⟨S⟩ = {(x, y, 0) : x, y ∈ Z_3}.

Proof: Clearly LHS ⊆ RHS. For the reverse inclusion: given

(x, y, 0), we can write

(x, y, 0) = (x − 2y)(1, 0, 0) + y(2, 1, 0)

Note that any subspace of V (n, q) has a finite spanning set, namely

itself.
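
Since every set involved is finite, the claims in Examples 1 to 3 can also be confirmed by listing all linear combinations. This is a brute-force sketch, assuming q = p is prime and S is nonempty; the function name span is our own choice.

```python
from itertools import product

def span(S, p):
    """Set of all linear combinations of the vectors in S, coefficients in Z_p.
    Assumes S is a nonempty list of equal-length tuples and p is prime."""
    S = list(S)
    n = len(S[0])
    result = set()
    for coeffs in product(range(p), repeat=len(S)):
        combo = tuple(
            sum(a * u[j] for a, u in zip(coeffs, S)) % p for j in range(n)
        )
        result.add(combo)
    return result

target = {(x, y, 0) for x, y in product(range(3), repeat=2)}

print(len(span([(1, 0, 0), (0, 1, 0), (0, 0, 1)], 3)))  # 27, all of V(3, 3)
print(span([(1, 0, 0), (0, 1, 0)], 3) == target)        # True (Example 2)
print(span([(1, 0, 0), (2, 1, 0)], 3) == target)        # True (Example 3)
```

The loop visits p^|S| coefficient tuples, so this is only practical for small examples.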


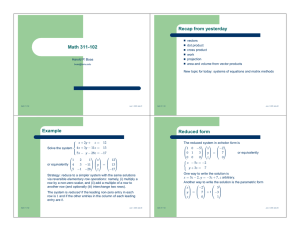

Lecture 14:

Recall the definitions of a vector space over a field F , a subspace, and the span ⟨S⟩ of a subset S, which is the set of all linear combinations of elements of S.

W := ⟨S⟩ is a subspace and we say that S is a spanning set for (or spans) W .

Our main interest is the vector space V(n, q) := GF(q)^n, which is a vector space over the field F = GF(q).

Defn: Let V be a vector space over a field F . A subset {u_1, . . . , u_k} ⊂ V is linearly dependent if there exist a_1, . . . , a_k ∈ F, not all 0, s.t.

$$\sum_{i=1}^{k} a_i u_i = 0 \qquad (1)$$

Equivalently, there exists i s.t. u_i is a linear combination of {u_j : j ≠ i, 1 ≤ j ≤ k}.

Defn: A subset {u_1, . . . , u_k} ⊂ V is linearly independent if it is not linearly dependent.

Equivalently, if a_1, . . . , a_k ∈ F satisfy

$$\sum_{i=1}^{k} a_i u_i = 0 \qquad (2)$$

then each a_i = 0.

Facts:

1. if u is a scalar multiple of v, then {u, v} is linearly dependent.

2. any set that contains the zero vector is linearly dependent.

3. if u ≠ 0, then {u} is linearly independent:

Proof: if au = 0 and a ≠ 0, then a has a multiplicative inverse, so a^{-1}(au) = a^{-1}0 = 0 and so u = 0, a contradiction. So, the only solution to au = 0 is a = 0.

Example: {(1, 0, . . . , 0), (0, 1, . . . , 0), . . . , (0, 0, . . . , 0, 1)} is linearly independent over any field.

Example: In V(3, 3), is {(1, 2, 1), (0, 1, 2), (1, 0, 1)} linearly independent? We must see if there is a non-trivial solution to

a_1(1, 2, 1) + a_2(0, 1, 2) + a_3(1, 0, 1) = (0, 0, 0)     (3)

This is equivalent to the scalar equations (over Z_3):

a_1 + a_3 = 0, 2a_1 + a_2 = 0, a_1 + 2a_2 + a_3 = 0

We can solve this in an ad hoc way:

From the first equation, we see that a_3 = −a_1.

From the second equation, we see that a_2 = −2a_1.

Plugging into the third equation, we see that a_1 + 2(−2a_1) − a_1 = 0, i.e., −(2 · 2)a_1 = 0.

Since 2 · 2 = 1 in Z_3, this implies that −a_1 = 0 and so a_1 = 0 and so a_2 = a_3 = 0.

So, this set of vectors is linearly independent. We will develop a concrete algorithm for checking linear independence.
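
Until that algorithm is available, independence in a small space can be tested directly from the definition by trying every non-trivial choice of coefficients. The sketch below assumes q = p is prime and a nonempty list of vectors; is_linearly_independent is a hypothetical helper name, and the second call is an extra dependent example added for contrast.

```python
from itertools import product

def is_linearly_independent(vectors, p):
    """True iff the only solution of sum a_i u_i = 0 over Z_p is a_1 = ... = a_k = 0.
    Brute force over all p^k coefficient tuples, so only for small cases."""
    vectors = list(vectors)
    n = len(vectors[0])
    for coeffs in product(range(p), repeat=len(vectors)):
        if not any(coeffs):
            continue  # skip the trivial combination (all coefficients 0)
        combo = [sum(a * u[j] for a, u in zip(coeffs, vectors)) % p for j in range(n)]
        if not any(combo):
            return False  # found a non-trivial combination equal to the zero vector
    return True

print(is_linearly_independent([(1, 2, 1), (0, 1, 2), (1, 0, 1)], 3))  # True
print(is_linearly_independent([(1, 0, 0), (2, 0, 0)], 3))             # False
```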

Defn: A basis for a vector space V is a subset S ⊂ V such that

S spans V and S is linearly independent.

Example 1: {(1, 0, . . . , 0), (0, 1, . . . , 0), . . . , (0, 0, . . . , 0, 1)} is a

basis for V (n, q).


Example 2: {(1, 0, 0), (0, 1, 0)} is a basis for {(x, y, 0) : x, y ∈ Z3}.

Example 3: {(1, 1, 1)} is a basis for {(x, x, x) : x ∈ Z3}.

Theorem 4.2: Every subspace of V (n, q) has a basis. In fact, any

spanning set of a subspace of V (n, q) has a subset which is a basis.

The proof of this theorem inductively extracts a basis from a finite

spanning set. Read and understand the proof in the text.

Given a finite spanning set for a subspace W of V (n, q), we will

develop a concrete algorithm for finding a basis of W .

Theorem 4.3 Let W be a subspace of V(n, q) and let {u_1, . . . , u_k} be a basis for W . Then

(i) every element of W can be expressed uniquely as a linear combination of {u_1, . . . , u_k}.

(ii) |W| = q^k.

Proof:

(i): Since {u_1, . . . , u_k} is a basis for W , it spans W and so every element of W can be expressed as a linear combination of {u_1, . . . , u_k}.

For the proof of uniqueness, suppose that for some x ∈ W , there exist a_1, . . . , a_k, b_1, . . . , b_k ∈ F s.t.

$$x = \sum_{i=1}^{k} a_i u_i \quad \text{and} \quad x = \sum_{i=1}^{k} b_i u_i$$

Then $\sum_{i=1}^{k} (a_i - b_i) u_i = 0$ and so by linear independence, each a_i − b_i = 0 and so each a_i = b_i.

(ii) This follows from (i): there are q^k choices of coefficients (a_1, . . . , a_k), and by uniqueness they give q^k distinct linear combinations $\sum_{i=1}^{k} a_i u_i$ of the basis elements.

It follows from part (ii) of the theorem above that any two bases of a subspace of V(n, q) have the same size. The common size is called the dimension of the subspace.

Example 1: {(1, 0, . . . , 0), (0, 1, . . . , 0), . . . , (0, 0, . . . , 0, 1)} is a basis for V(n, q). So V(n, q) has dimension n and |V(n, q)| = q^n.

Example 2: {(1, 0, 0), (0, 1, 0)} is a basis for W := {(x, y, 0) : x, y ∈ Z_3}. So W has dimension 2 and |W| = 3^2 = 9.

Example 3: {(1, 1, 1)} is a basis for W := {(x, x, x) : x ∈ Z_3}. So W has dimension 1 and |W| = 3.
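
Theorem 4.3 can be illustrated numerically: for a basis with k elements over Z_p, the p^k coefficient tuples should produce p^k different vectors. The sketch below assumes q = p is prime; the name combination_table is illustrative, and the basis used is {(1, 0, 0), (2, 1, 0)}, which spans {(x, y, 0) : x, y ∈ Z_3}.

```python
from itertools import product

def combination_table(basis, p):
    """Map each coefficient tuple (a_1, ..., a_k) in Z_p^k to the vector
    a_1 u_1 + ... + a_k u_k, computed coordinate-wise mod p."""
    n = len(basis[0])
    return {
        coeffs: tuple(
            sum(a * u[j] for a, u in zip(coeffs, basis)) % p for j in range(n)
        )
        for coeffs in product(range(p), repeat=len(basis))
    }

table = combination_table([(1, 0, 0), (2, 1, 0)], 3)
print(len(table))                 # 9 coefficient tuples (3^2)
print(len(set(table.values())))   # 9 distinct vectors, so representations are unique
```

If the input were linearly dependent, the second count would be smaller than the first.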

Remark: Theorem 4.2 and Theorem 4.3(i) can be generalized to any vector space that has a finite spanning set, such as F^n for any field F (finite or infinite). In fact, these are precisely the finite-dimensional vector spaces, and any subspace of a finite-dimensional vector space is finite-dimensional.

The algorithms for checking linear independence and for finding a basis depend on matrices. Let A be an m × n (rectangular) matrix with entries in a ring F . We can multiply such matrices (when their sizes are compatible), compute determinants of square matrices, etc.

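Example: F = Z_4

$$A = \begin{pmatrix} 2 & 1 & 3 \\ 1 & 1 & 2 \\ 0 & 2 & 1 \end{pmatrix}, \quad B = \begin{pmatrix} 1 & 2 \\ 0 & 2 \\ 1 & 3 \end{pmatrix}, \quad AB = \begin{pmatrix} 1 & 3 \\ 3 & 2 \\ 1 & 3 \end{pmatrix}$$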
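
Matrix multiplication over Z_4 uses the usual row-by-column rule, with every entry reduced mod 4. The following minimal sketch multiplies the matrices A and B of this example; mat_mul_mod is a hypothetical helper name, and matrices are represented as lists of rows.

```python
def mat_mul_mod(A, B, m):
    """Product of A (r x s) and B (s x t), with every entry reduced mod m."""
    if len(A[0]) != len(B):
        raise ValueError("inner dimensions must agree")
    return [
        [sum(A[i][k] * B[k][j] for k in range(len(B))) % m for j in range(len(B[0]))]
        for i in range(len(A))
    ]

A = [[2, 1, 3],
     [1, 1, 2],
     [0, 2, 1]]
B = [[1, 2],
     [0, 2],
     [1, 3]]

for row in mat_mul_mod(A, B, 4):
    print(row)
```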

In order to do the things that we need to do, we will now

require that F be a field. In fact, we assume that F = GF (q).


Write A in terms of its rows R_1, . . . , R_m:

$$A = \begin{pmatrix} R_1 \\ R_2 \\ \vdots \\ R_m \end{pmatrix}$$

Defn: Elementary row operations on a matrix:

1. Exchange a pair of rows: R_i ↔ R_j

2. Multiply a row by a non-zero scalar: aR_i replaces R_i

3. Add a scalar multiple of one row to a different row: aR_i + R_j replaces R_j

Note: any permutation of rows can be realized by a sequence of

row exchanges.
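
The three elementary row operations can be sketched in Python as follows. This assumes q = p is prime (so entries live in Z_p), represents a matrix as a list of rows with 0-based row indices, and uses illustrative function names that do not come from the text. Each function returns a new matrix and leaves its input unchanged.

```python
def swap_rows(A, i, j):
    """Operation 1: exchange rows i and j."""
    B = [row[:] for row in A]
    B[i], B[j] = B[j], B[i]
    return B

def scale_row(A, i, a, p):
    """Operation 2: a * R_i replaces R_i; a must be non-zero mod p."""
    if a % p == 0:
        raise ValueError("scalar must be non-zero in Z_p")
    B = [row[:] for row in A]
    B[i] = [(a * x) % p for x in B[i]]
    return B

def add_multiple(A, i, j, a, p):
    """Operation 3: a * R_i + R_j replaces R_j, for i != j."""
    if i == j:
        raise ValueError("rows must be different")
    B = [row[:] for row in A]
    B[j] = [(a * x + y) % p for x, y in zip(B[i], B[j])]
    return B

# A sample matrix over Z_3, chosen for illustration.
A = [[2, 1, 0],
     [1, 1, 2],
     [0, 2, 2]]
step = add_multiple(A, 0, 1, -2, 3)      # -2 * R_1 + R_2 replaces R_2
print(step)                              # [[2, 1, 0], [0, 2, 2], [0, 2, 2]]
print(add_multiple(step, 0, 1, 2, 3) == A)   # True: the operation is undone
```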

Defn: The row space of an m × n matrix A is the span of its rows.

Denoted R(A).

Defn: A matrix A is row equivalent to a matrix B if B can be

obtained from A by a sequence of elementary row operations.

Note: row equivalence is symmetric: each elementary row operation is invertible, and its inverse is again an elementary row operation; so, A is row equivalent to B iff B is row equivalent to A.

Theorem 1: If B is row equivalent to A, then R(B) = R(A).

Proof postponed.

Defn: A leading entry of a matrix is the left-most non-zero entry

in a row. Sometimes called a pivot entry or pivot position.

Note: a row has a leading entry iff it is a nonzero row (i.e., has at least one nonzero entry).

Defn: A matrix is in Row Echelon form (REF) if

1. The leading entry in each non-zero row is strictly to the right of the leading entry in the preceding row.

2. All the zero rows (if any) are at the bottom.

It follows that in an REF, all entries in the column below a leading

entry are zero.

L = a leading entry (the different appearances of L below may be

different nonzero elements)

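$$
\left(\begin{array}{ccccccccccccccc}
0 & \cdots & 0 & L & * & \cdots & * & a & * & \cdots & * & b & * & \cdots & * \\
0 & \cdots & 0 & 0 & 0 & \cdots & 0 & L & * & \cdots & * & c & * & \cdots & * \\
0 & \cdots & 0 & 0 & 0 & \cdots & 0 & 0 & 0 & \cdots & 0 & L & * & \cdots & * \\
0 & \cdots & 0 & 0 & 0 & \cdots & 0 & 0 & 0 & \cdots & 0 & 0 & 0 & \cdots & 0 \\
\vdots & & \vdots & \vdots & \vdots & & \vdots & \vdots & \vdots & & \vdots & \vdots & \vdots & & \vdots \\
0 & \cdots & 0 & 0 & 0 & \cdots & 0 & 0 & 0 & \cdots & 0 & 0 & 0 & \cdots & 0
\end{array}\right)
$$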

Theorem 2: Every matrix is row equivalent to an REF.

Proof postponed.

Note: we say that a matrix B is an REF of a matrix A if B is in

REF and A and B are row equivalent.

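Example over GF(3) = Z_3: Let

$$A = \begin{pmatrix} 2 & 1 & 0 \\ 1 & 1 & 2 \\ 0 & 2 & 2 \end{pmatrix}$$

Construct an REF B for A:

Step 1: multiply the first row by −2 and add to the second row:

$$\begin{pmatrix} 2 & 1 & 0 \\ 0 & 2 & 2 \\ 0 & 2 & 2 \end{pmatrix}$$

Step 2: subtract the second row from the third row:

$$\begin{pmatrix} 2 & 1 & 0 \\ 0 & 2 & 2 \\ 0 & 0 & 0 \end{pmatrix} = B$$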

Theorem 3: Let W = ⟨S⟩ where S is a finite subset of V(n, q). Let A be a matrix whose rows are the elements of S. Let B be an REF of A. Then the nonzero rows of B form a basis for W .

Corollary: Let S be a finite subset of V(n, q). Let A be a matrix whose rows are the elements of S. Let B be an REF of A. Then S is linearly independent iff B has no zero rows.
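
Theorem 3 and its Corollary suggest a procedure: row reduce, then read off the nonzero rows. The sketch below is one possible implementation, not necessarily the one in the text. It assumes q = p is prime, uses Python 3.8+ for the modular inverse pow(x, -1, p), and all function names are our own.

```python
def row_echelon_form(A, p):
    """Return an REF of A over Z_p (p prime), using only elementary row operations."""
    B = [[x % p for x in row] for row in A]
    m, n = len(B), len(B[0])
    r = 0  # index of the row that will receive the next leading entry
    for c in range(n):
        # Look for a row at or below r with a non-zero entry in column c.
        pivot = next((i for i in range(r, m) if B[i][c] != 0), None)
        if pivot is None:
            continue
        B[r], B[pivot] = B[pivot], B[r]      # row exchange
        inv = pow(B[r][c], -1, p)            # inverse of the leading entry mod p
        for i in range(r + 1, m):
            factor = (B[i][c] * inv) % p
            # Subtract factor * R_r from R_i to clear the entry below the leading entry.
            B[i] = [(x - factor * y) % p for x, y in zip(B[i], B[r])]
        r += 1
        if r == m:
            break
    return B

def basis_of_span(S, p):
    """Theorem 3: the nonzero rows of an REF of the matrix with rows S."""
    return [tuple(row) for row in row_echelon_form([list(v) for v in S], p) if any(row)]

def is_independent(S, p):
    """Corollary: S is linearly independent iff the REF has no zero rows."""
    S = list(S)
    return len(basis_of_span(S, p)) == len(S)

S = [(2, 1, 0), (1, 1, 2), (0, 2, 2)]        # rows of the matrix A in the example
print(basis_of_span(S, 3))                   # [(2, 1, 0), (0, 2, 2)]
print(is_independent(S, 3))                  # False
print(is_independent([(1, 2, 1), (0, 1, 2), (1, 0, 1)], 3))  # True
```

On the rows of A, this routine happens to perform the same two steps as the worked example and returns the same matrix B; in general an REF is not unique, so a different choice of row operations may give a different but row-equivalent result.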
