MIT LIBRARIES

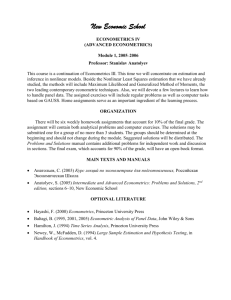

advertisement

MIT LIBRARIES

Digitized by the Internet Archive in 2011 with funding from Boston Library Consortium Member Libraries

http://www.archive.org/details/rateadaptivegmmeOOkuer

Massachusetts Institute of Technology

Department of Economics

Working Paper Series

RATE-ADAPTIVE GMM ESTIMATORS FOR LINEAR TIME SERIES MODELS

Guido Kuersteiner

Working Paper 03-17

October 2002

Room E52-251

50 Memorial Drive

Cambridge, MA 02142

This paper can be downloaded without charge from the Social Science Research Network Paper Collection at http://ssrn.com/abstract=410061

RATE-ADAPTIVE GMM ESTIMATORS FOR LINEAR TIME SERIES MODELS

By Guido M. Kuersteiner*

First Draft: November 1999

This Version: October 2002

Abstract

In this paper we analyze Generalized Method of Moments (GMM) estimators for time series models as advocated by Hansen and Singleton. It is well known that these estimators achieve efficiency bounds if the number of lagged observations in the instrument set goes to infinity. However, to this date no data dependent way of selecting the number of instruments in a finite sample is available. This paper derives an asymptotic mean squared error (MSE) approximation for the GMM estimator. The optimal number of instruments is selected by minimizing a criterion based on the MSE approximation. It is shown that the fully feasible version of the GMM estimator is higher order adaptive. In addition a new version of the GMM estimator based on kernel weighted moment conditions is proposed. The kernel weights are selected in a data-dependent way. Expressions for the asymptotic bias of kernel weighted and standard GMM estimators are obtained. It is shown that standard GMM procedures have a larger asymptotic bias and MSE than optimal kernel weighted GMM. A bias correction for both standard and kernel weighted GMM estimators is proposed. It is shown that the bias corrected version achieves a faster rate of convergence of the higher order terms than the uncorrected estimator.

Key Words: time series, feasible GMM, number of instruments, rate-adaptive kernels, higher order adaptive, bias correction

*Massachusetts Institute of Technology, Dept. of Economics, 50 Memorial Drive E52-371A, Cambridge, MA 02142, USA. Email: gkuerste@mit.edu. Web: http://web.mit.edu/gkuerste/www/. I wish to thank Xiaohong Chen, Ronald Gallant, Jinyong Hahn, Jerry Hausman, Whitney Newey and Ken West for helpful comments. Stavros Panageas provided excellent research assistance. Comments by a co-editor and three anonymous referees led to substantial improvements of the paper. Financial support from NSF grant SES-0095132 is gratefully acknowledged. All remaining errors are my own.

1. Introduction

In recent years GMM estimators have become one of the main tools in estimating economic models based on first order conditions for optimal behavior of economic agents. Hansen (1982) established the asymptotic properties of a large class of GMM estimators. Based on first order asymptotic theory it was subsequently shown by Chamberlain (1987), Hansen (1985) and Newey (1988) that GMM estimators based on conditional moment restrictions can be constructed to achieve semiparametric efficiency bounds.

The focus of this paper is the higher order asymptotic analysis of GMM estimators for the time series case. In the cross-sectional literature it is well known that using a large number of instruments can result in substantial second order bias of GMM estimators, thus putting limits to the implementation of efficient procedures. Similar results are obtained in this paper for the time series case. In addition, fully feasible, second order optimal implementations of efficient GMM estimators for time series models are developed.

In independent sampling situations feasible versions of efficient GMM estimators were implemented, amongst others, by Newey (1990). In a time series context examples of first order efficient estimators are Hayashi and Sims (1983), Stoica, Soderstrom and Friedlander (1985), Hansen and Singleton (1991, 1996) and Hansen, Heaton and Ogaki (1996). Under special circumstances and in a slightly different context, Kuersteiner (2002) constructs a feasible, efficient version of a GMM estimator for autoregressive models where the number of instruments is allowed to increase at the same rate as the sample size. More generally however, such expansion rates do not lead to consistent estimates. In fact, to this date no analysis of the optimal expansion rate for the number of instruments for efficient GMM procedures depending on an infinite dimensional instrument set has been provided in the context of time series models. In this paper a data dependent selection rule for the number of instruments is obtained and a fully feasible version of GMM estimators for linear time series models is proposed.

Several moment selection procedures, applicable to time series data, were proposed in the literature. Andrews (1999) considers selection of valid instruments out of a finite dimensional instrument set containing potentially invalid instruments. Hall and Inoue (2001) propose an information criterion based on the asymptotic variance-covariance matrix of the GMM estimator to select relevant moment conditions from a finite set of potential moments. Both approaches do not directly apply to the case of infinite dimensional instrument sets considered here.

Linton (1995) analyses the optimal choice of bandwidth parameters for kernel estimates of the partially linear regression model based on minimizing the asymptotic MSE of the estimator. Xiao and Phillips (1996) apply similar ideas to determine the optimal bandwidth in the estimation of the residual spectral density in a Whittle likelihood based regression set up. More recently Linton (1997) extended his procedure to the determination of the optimal bandwidth for an efficient semiparametric instrumental variables estimator. Donald and Newey (2001) use similar arguments to determine the optimal number of base functions in polynomial approximations to the optimal instrument. They analyze higher order asymptotic expansions of the estimators around their true parameter values. While the first order asymptotic terms typically do not depend on the estimation of infinite dimensional nuisance parameters, as shown in Andrews (1994) and Newey (1994), this is not the case for higher order terms of the expansions.

In this paper we will obtain expansions similar to the ones of Donald and Newey (2001) for the case of GMM estimators for models with lagged dependent right hand side variables. This set up is important for the analysis of intertemporal optimization models which are characterized by first order conditions of maximization. One particular area of application is asset pricing models.

Minimizing the asymptotic approximation to the MSE with respect to the number of lagged instruments leads to a feasible GMM estimator for time series models. The trade off is between more asymptotic efficiency, as measured by the asymptotic covariance matrix, and bias.

Full implementation of the procedure requires the specification of estimators for the criterion function used to determine the optimal number of instruments. It is established that a plug-in estimator for the optimal number of instruments leads to a GMM estimator that is fully feasible and achieves the same asymptotic distribution as the infeasible optimal estimator.

We also propose a new kernel weighted version of GMM. It is shown that the asymptotic bias can be reduced if suitable kernel weights are applied to the moment conditions. For this purpose a new rate-adaptive kernel that adjusts its smoothness to the smoothness of the underlying model is introduced. In addition, a data-dependent way to pick the optimal kernel is proposed. Finally, a semiparametric correction of the asymptotic bias term is proposed. The bias corrected version of the GMM estimator achieves a faster optimal rate of convergence of the higher order terms.

The paper is organized as follows. Section 2 presents the time series models and introduces notation. Section 3 introduces the kernel weighted GMM estimator, contains the analysis of higher order MSE terms and derives a selection criterion for the optimal number of instruments. Section 4 discusses implementation of the procedure, in particular consistent estimation of the criterion function for optimal bandwidth selection. Section 5 analyzes the asymptotic bias of the kernel weighted GMM estimator and introduces a data-dependent procedure to select the optimal kernel. Section 6 discusses non-parametric bias correction. Section 7 contains a small Monte Carlo experiment. The proofs are collected in Appendix A. Auxiliary Lemmas are collected in Appendix B which is available upon request.

2. Linear Time Series Models

We consider the linear time series framework of Hansen and Singleton (1996). Let $y_t \in \mathbb{R}^p$ be a strictly stationary stochastic process. It is assumed that economic theory imposes restrictions in the form of a structural econometric equation on the process $y_t$. In order to describe this structural equation we partition $y_t = [y_{t,1}, y_{t,2}', y_{t,3}']'$. Here, $y_{t,1}$ is the scalar left hand side variable, $y_{t,2}$ are the included and $y_{t,3}$ are the excluded contemporaneous endogenous variables. The vector $\tilde{x}_t$ is defined to contain, possibly a subset, of the lagged dependent variables $y_{t-1}, \ldots, y_{t-r}$ where $r$ is known and fixed. The structural equation then takes the form

$$y_{t,1} = \alpha_0 + \beta_0' y_{t,2} + \beta_1' \tilde{x}_t + \varepsilon_t. \tag{2.1}$$

Letting $\beta = [\beta_0', \beta_1']' \in \mathbb{R}^d$ and collecting all the regressors in $x_t$ where $x_t = [y_{t,2}', \tilde{x}_t']'$ we can write (2.1) as $y_{t,1} = \alpha_0 + \beta' x_t + \varepsilon_t$. An alternative representation of (2.1) is obtained by setting $a(L,\beta) = a_0 + a_1 L + \ldots + a_r L^r$ such that $a(L,\beta) y_t = \alpha_0 + \varepsilon_t$. Note that the $a_i$ are $1 \times p$ vectors, again subject to the exclusion and normalization restrictions implied by $\beta$.

The structural model also imposes restrictions on the innovations $\varepsilon_t$. More specifically, $\varepsilon_t$ is strictly stationary with $E\varepsilon_t = 0$ and follows a Moving-Average (MA) process of order $m-1$ for $m \geq 1$, where $m$ is assumed known and finite. Here, we denote the autocovariance function of $\varepsilon_t$ by $\gamma_j^{\varepsilon} = E\varepsilon_t\varepsilon_{t-j}$ with $\gamma_j^{\varepsilon} = 0$ for $|j| \geq m$.

In addition to the structural equation (2.1) we also assume that the reduced form of $y_t$ admits a representation as a vector autoregressive moving average (VARMA) process $A(L)y_t = A(1)\mu_y + B(L)u_t$ such that $y_t$ admits a moving average representation

$$y_t = \mu_y + A^{-1}(L)B(L)u_t. \tag{2.2}$$

Here, $\mu_y \in \mathbb{R}^p$ is a constant and $u_t$ is a strictly stationary and conditionally homoskedastic martingale difference sequence.
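As an illustration of the MA($m-1$) restriction on the structural innovations, the following sketch (not part of the paper) computes the autocovariances implied by writing $\varepsilon_t$ as a finite moving average of serially uncorrelated innovations $u_t$ with covariance matrix $\Sigma$. The function name `ma_autocovariances`, the array layout of `b` (row $i$ holds the $1 \times p$ coefficient vector on $u_{t-i}$) and the numbers in the usage note are assumptions made purely for illustration.

```python
import numpy as np

def ma_autocovariances(b, sigma):
    """Autocovariances gamma_0, ..., gamma_{m-1} of e_t = sum_i b[i] @ u_{t-i}.

    b     : (m, p) array, row i is the coefficient vector on u_{t-i}
    sigma : (p, p) covariance matrix of the serially uncorrelated u_t
    """
    b = np.asarray(b, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    m = b.shape[0]
    # gamma_j = sum_i b_{i+j} Sigma b_i' ; only overlapping lags contribute
    return np.array([
        sum(b[i + j] @ sigma @ b[i] for i in range(m - j))
        for j in range(m)
    ])

def gamma(b, sigma, j):
    """Autocovariance at an arbitrary lag j; zero whenever |j| >= m."""
    g = ma_autocovariances(b, sigma)
    j = abs(j)
    return g[j] if j < g.size else 0.0
```

For example, with `b = [[1.0, 0.0], [0.5, 0.0]]` (so $m = 2$) and `sigma` equal to the identity, the autocovariances at lags 0 and 1 are 1.25 and 0.5, and every lag with $|j| \geq 2$ is exactly zero. This cut-off is the property that makes sufficiently lagged observations uncorrelated with the structural innovation.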

In order to completely relate model (2.1) to the generating process (2.2) we define additional $(p-1) \times p$ matrices of lag polynomials $A_1(L)$ and $B_1(L)$ such that $A_1(L)y_t = B_1(L)u_t + a_1$. The matrices $A_1(L)$ and $B_1(L)$ satisfy $[a'(L,\beta), A_1'(L)]' = A(L)$, $[b'(L), B_1'(L)]' = B(L)$ and $[\alpha_0, a_1']' = A(1)\mu_y$. It follows from this representation that the structural innovations $\varepsilon_t$ are related to the reduced form innovations by $\varepsilon_t = b(L)u_t$ where $b(L) = b_0 + b_1 L + \ldots + b_{m-1}L^{m-1}$ and all $b_i$ are $1 \times p$ vectors of constants.

We assume that $A(L)$ and $B(L)$ have all their roots outside the unit circle and that all elements of $A(L)$ and $B(L)$ are finite order polynomials in $L$. Economic theory is assumed to provide restrictions on the polynomials $a(L,\beta)$ and $b(L)$ such that their degrees are known to the investigator. No restrictions are assumed to be known about the polynomials $A_1(L)$ and $B_1(L)$. In particular their degrees in $L$ are unknown, although assumed finite. The investigator is concerned with inference regarding the parameter vector $\beta = (\beta_0', \beta_1')'$ while $b(L)$, $A_1(L)$ and $B_1(L)$ are treated as nuisance parameters.

The economic model (2.1) implies moment restrictions of the form

$$E(\varepsilon_{t+m}\, y_{t-j}) = 0 \quad \text{for all } j \geq 0. \tag{2.3}$$

These moment restrictions are the basis for the formulation of GMM estimators. Alternatively, the moment restrictions (2.3) are often implied by economic theory and then lead to the formulation of a structural model of the form (2.1). A well known example is asset pricing models.

In addition to the structural restrictions of Equation (2.1) we impose the following formal Assumptions on $u_t$ and $A(L)$, $B(L)$ and $b(L)$.

Assumption A. Let $u_t \in \mathbb{R}^p$ be strictly stationary ergodic, with $E(u_t | \mathcal{F}_{t-1}) = 0$, $E(u_t u_t' | \mathcal{F}_{t-1}) = \Sigma$ where $\Sigma$ is a positive definite symmetric matrix of constants. Let $u_t^i$ be the $i$-th element of $u_t$ and $\mathrm{cum}_{i_1,\ldots,i_k}(t_1, \ldots, t_{k-1})$ the $k$-th order cross cumulant of $u_{t_1}^{i_1}, \ldots, u_{t_k}^{i_k}$. Assume that

$$\sum_{t_1=-\infty}^{\infty} \cdots \sum_{t_{k-1}=-\infty}^{\infty} \left|\mathrm{cum}_{i_1,\ldots,i_k}(t_1, \ldots, t_{k-1})\right| < \infty \quad \text{for } k \leq 8.$$

Assumption B. The lag polynomial $C(L)$ is defined as $C(L) = A^{-1}(L)B(L)$ with coefficient matrices $C_j$, where $A(L)$ and $B(L)$ are $p \times p$ matrices of finite order polynomials in $L$ such that $\det A(z) \neq 0$ for $|z| \leq 1$ and $\det B(z) \neq 0$ for $|z| \leq 1$. Let $p_a$ be the degree of the polynomial $A(L)$ and let $\lambda_1$ be the root of maximum modulus of $\det(z^{p_a}A(z^{-1})) = 0$. Let $p_b$ be the degree of the polynomial $B(L)$ and let $\tilde{\lambda}_1$ be the root of maximum modulus of $\det(z^{p_b}B(z^{-1})) = 0$. Define $\lambda = \max(|\lambda_1|, |\tilde{\lambda}_1|)$. Assume that $\lambda \in (0,1)$. Moreover, assume $b(z) \neq 0$ for $|z| \leq 1$. The spectral density of $\varepsilon_t$ is $f_\varepsilon(\lambda) = (2\pi)^{-1} b(e^{i\lambda}) \Sigma\, b(e^{-i\lambda})'$, which can equivalently be written as $f_\varepsilon(\lambda) = (2\pi)^{-1}\sigma_\varepsilon^2 |\theta(e^{i\lambda})|^2$ for some constant $\sigma_\varepsilon^2$ and lag polynomial $\theta(L) = 1 - \theta_1 L - \ldots - \theta_{m-1}L^{m-1}$. Define the infinite dimensional instrument vector $z_{t,\infty} = (y_t', y_{t-1}', \ldots)'$ and let $P' = \mathrm{Cov}(x_{t+m}, z_{t,\infty}')$. Assume that $P$ has full column rank.

Remark 1. The column rank assumption for $P$ is needed for identification (see Kuersteiner (2001) for an extensive discussion of this point). Assumption (B) guarantees that $f_\varepsilon(\lambda) \neq 0$ for $\lambda \in [-\pi, \pi]$. Then $1/f_\varepsilon(\lambda)$ exists and corresponds to the spectral density of an AR(m-1) model.

The fact that $u_t$ is a martingale difference sequence arises naturally in rational expectations models. In our context it is needed together with the conditional homoskedasticity assumption to guarantee that the optimal GMM weight matrix is of a sufficiently simple form. This allows us to construct estimates of the bias terms converging fast enough for bias correction and optimal number of instruments selection.

The conditional homoskedasticity condition $E(u_t u_t' | \mathcal{F}_{t-1}) = E u_t u_t'$ is restrictive as it rules out time changing variances. Relaxing this restriction results in more complicated GMM weight matrices of the type analyzed in Kuersteiner (1997, 2001). In principle the higher order moment restrictions implied by conditional homoskedasticity could be used in addition to the conditions (2.3). The resulting estimator is however nonlinear and will not be considered here.

The summability assumption for the cumulants limits the temporal dependence of the innovation process. Andrews (1991) shows for $k = 4$ that the summability condition on the cumulants is implied by a strong mixing assumption for $u_t$. The cumulant summability condition used here is similar but slightly stronger than the second part of Condition A in Andrews (1991). What is needed both in Andrews (1991) and here are restrictions on the eighth-moment dependence of the underlying process $u_t$.

Infeasible efficient GMM estimation is based on exploiting all the implications of the moment restriction (2.3). In our context this is equivalent to choosing all lagged observations as instruments. An infeasible estimator of $\beta$ based on $z_{t,\infty}$, where $z_{t,\infty}$ is defined in Assumption (B), is used as a reference point around which we expand feasible versions of the estimator. For this purpose let

$$\Omega = \sum_{l=-m+1}^{m-1} \gamma_l^{\varepsilon}\, \Omega(l), \qquad \Omega(l) = \mathrm{Cov}(z_{t,\infty}, z_{t-l,\infty}'),$$

and $D = P'\Omega^{-1}P$ where $P$ is defined in Assumption (B). A detailed analysis of these infinite dimensional matrices can be found in Kuersteiner (2001) and Appendix (B.2). The infeasible estimator of $\beta$ is given by

$$\hat{\beta}_{n,\infty} = D^{-1}P'\Omega^{-1}\, \frac{1}{n}\sum_{t=1}^{n-m} (z_{t,\infty} - 1_\infty \otimes \mu_y)(y_{t+m,1} - \mu_{y,1})$$

where $1_\infty$ is an infinite dimensional vector containing the element 1 and $\mu_{y,1}$ is the first element of $\mu_y$. Let $d_0 = P'\Omega^{-1} n^{-1/2}\sum_{t}(z_{t,\infty} - 1_\infty \otimes \mu_y)\varepsilon_{t+m}$, which exists almost surely, such that $\sqrt{n}(\hat{\beta}_{n,\infty} - \beta) = D^{-1}d_0$. It can be shown that $D^{-1}d_0 \to N(0, D^{-1})$ as $n \to \infty$ under the assumptions made about $y_t$ and $\varepsilon_t$.

For any fixed integer $M$, let $z_{t,M} = (y_t', y_{t-1}', \ldots, y_{t-M+1}')'$ be a finite dimensional vector of instruments. An approximate version $\hat{\beta}_{n,M}$ of $\hat{\beta}_{n,\infty}$ is then based on $D_M = P_M'\Omega_M^{-1}P_M$ and $z_{t,M}$, where $P_M$ and $\Omega_M$ are defined in the same way as $P$ and $\Omega$ but with $z_{t,\infty}$ replaced by $z_{t,M}$. It then follows that $\sqrt{n}(\hat{\beta}_{n,M} - \beta) - D^{-1}d_0 \to 0$ as $n, M \to \infty$. The last statement is no longer true, at least not without specifying the rate at which $M$ goes to infinity, if $P_M$ and $\Omega_M$ are replaced by estimates $\hat{P}_M$ and $\hat{\Omega}_M$. We call the estimator fully feasible once $M$ is replaced by a feasible estimator $\hat{M}$, where $\hat{M}$ is a function of the data alone. A more detailed definition of $\hat{\beta}_{n,M}$ is given in Equation (3.2) while data-dependent selection of $M$ is discussed in Section 4.
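To make the finite dimensional instrument vector $z_{t,M} = (y_t', y_{t-1}', \ldots, y_{t-M+1}')'$ concrete, the following minimal sketch stacks it for every date at which all $M$ lags are observed. The function name `stack_instruments` and the choice to drop the first $M-1$ dates (rather than zero out unavailable lags) are assumptions of this illustration, not the paper's treatment of the sample start.

```python
import numpy as np

def stack_instruments(y, M):
    """Return the matrix whose rows are z_{t,M}' = (y_t', y_{t-1}', ..., y_{t-M+1}').

    y : (n, p) array of observations, row t holds y_t'
    M : number of lags (including the contemporaneous value) in the instrument set
    Rows correspond to t = M-1, ..., n-1 (0-based), so the result is (n-M+1, M*p).
    """
    y = np.asarray(y, dtype=float)
    n, p = y.shape
    if not 1 <= M <= n:
        raise ValueError("M must satisfy 1 <= M <= n")
    # block j holds y_{t-j} for each retained date t
    return np.hstack([y[M - 1 - j : n - j] for j in range(M)])
```

With `y = np.arange(10).reshape(5, 2)` and `M = 2`, the first row is `[2, 3, 0, 1]`, that is $y_1'$ followed by $y_0'$ in 0-based dating.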

3. Kernel Weighted GMM

In this paper a generalized class of GMM estimators based on kernel weighted moment restrictions is introduced. Conventional GMM estimators are based on using the first $M$ of the moment restrictions (2.3). More generally one can consider non-random weights $k(j,M)$ such that $k(j,M)E\varepsilon_{t+m}y_{t-j} = 0$. The function $k(j,M)$ is a generalized kernel weight. For the special case where $k(j,M) = k(j/M)$, $k(j,M)$ is a standard kernel function. The truncated kernel is $k(j,M) = \{|j/M| \leq 1\}$ where we use $\{.\}$ to denote the indicator function. The general kernel weighted approach therefore covers the standard GMM procedure as a special case when the truncated kernel is used. In Section 5 it is shown that many kernel functions reduce the higher order bias of GMM and that there always exists a kernel function that dominates the traditional truncated kernel in terms of higher order MSE.

We now describe the kernel weighted GMM estimator. Let $k_M = \mathrm{diag}(k(0,M), \ldots, k(M-1,M))$, a matrix having kernel weight $k(j-1,M)$ in the $j$-th diagonal element and zeros otherwise, and define the matrix $K_M = (k_M \otimes I_p)$ where $I_p$ is the $p$-dimensional identity matrix. An instrument selection matrix $S_M(t) = \mathrm{diag}(\{t \geq 1\}, \ldots, \{t \geq M\})$ is introduced to exclude instruments for which there is no data in the sample. The vector of available instruments is denoted by $\tilde{z}_{t,M} = (S_M(t) \otimes I_p)[z_{t,M} - 1_M \otimes \bar{y}]$ where $\bar{y} = n^{-1}\sum_{t=1}^{n} y_t$. Let $Z_M = [\tilde{z}_{\max(1,r-m+1),M}, \ldots, \tilde{z}_{n-m,M}]'$ be the matrix of stacked instruments and $X = [x_{\max(m+1,r+1)}, \ldots, x_n]'$ the matrix of regressors. Also, $Y$ is the stacked vector of the first demeaned element in $y_t$. We define the $d \times Mp$ matrix $\hat{P}_M' = n^{-1}X'Z_M$ as well as the $Mp \times 1$ vector $\hat{P}_M^y = n^{-1}Z_M'Y$.

The estimate of the weight matrix $\hat{\Omega}_M$ is obtained as follows. Let $\hat{\varepsilon}_{t+m} = a(L, \tilde{\beta}_{n,M})(y_{t+m} - \bar{y})$ for some consistent first stage estimator $\tilde{\beta}_{n,M}$. Let $\hat{\gamma}^{\varepsilon}(l) = n^{-1}\sum_{t}\hat{\varepsilon}_t\hat{\varepsilon}_{t-l}$ and define $\hat{\Omega}_M(l) = n^{-1}\sum_{t}\tilde{z}_{t,M}\tilde{z}_{t-l,M}'$. Then

$$\hat{\Omega}_M = \sum_{l=-m+1}^{m-1}\hat{\gamma}^{\varepsilon}(l)\,\hat{\Omega}_M(l). \tag{3.1}$$

For $M$ fixed and possibly small, it is well known that such an estimator $\tilde{\beta}_{n,M}$ can be obtained from standard inefficient GMM procedures where $\hat{\Omega}_M = I_{Mp}$. The optimal weight matrix is $\hat{\Xi}_M = K_M\hat{\Omega}_M^{-1}K_M$. Assuming that $M \geq d/p$, where $d$ is the dimension of the parameter space, the estimator $\hat{\beta}_{n,M}$ can now be written as

$$\hat{\beta}_{n,M} = (\hat{P}_M'\hat{\Xi}_M\hat{P}_M)^{-1}\hat{P}_M'\hat{\Xi}_M\hat{P}_M^y. \tag{3.2}$$

For the truncated kernel with $K_M = I_{Mp}$, (3.2) is the standard GMM formula. The effects of using kernel weighted moments can be inferred from (3.2). The kernel matrix $K_M$ distorts efficiency by using $K_M\hat{\Omega}_M^{-1}K_M$ instead of the optimal $\hat{\Omega}_M^{-1}$ as weight matrix. As shown below, these effects are second order for suitable choices of the kernel function $k(j,M)$ and bandwidth $M$. It is also shown that the second order loss of efficiency is more than compensated by a reduction of the second order bias for suitably chosen kernel functions.
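The algebra of the kernel weighted estimator in (3.2) can be sketched in a few lines. The code below is only an illustration under stated assumptions: the function name `kernel_weighted_gmm` and its argument layout are hypothetical, `P_hat` is taken to be the $Mp \times d$ matrix whose transpose is the $d \times Mp$ cross-moment matrix in the text, and the weight matrix estimate `Omega_hat` is supplied by the caller rather than built from first-stage residuals.

```python
import numpy as np

def kernel_weighted_gmm(P_hat, Py_hat, Omega_hat, k_weights, p):
    """Kernel weighted GMM estimate given sample moments (illustrative sketch).

    P_hat     : (M*p, d) instrument/regressor cross-moment matrix
    Py_hat    : (M*p,)   instrument/dependent-variable cross-moment vector
    Omega_hat : (M*p, M*p) estimate of the moment covariance matrix
    k_weights : (M,) kernel weights k(0, M), ..., k(M-1, M)
    p         : dimension of y_t
    """
    K = np.kron(np.diag(k_weights), np.eye(p))   # K_M = k_M (x) I_p
    Xi = K @ np.linalg.solve(Omega_hat, K)       # K_M Omega^{-1} K_M
    lhs = P_hat.T @ Xi @ P_hat                   # d x d
    rhs = P_hat.T @ Xi @ Py_hat                  # d
    return np.linalg.solve(lhs, rhs)
```

Setting all weights to one reproduces the standard GMM formula. When the moment vector is exactly linear in the parameter, any nonzero set of weights returns the same value, which is a convenient sanity check on the matrix dimensions.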

We now define the constant $s$ which plays a role in determining the rate of convergence of $\hat{\beta}_{n,M}$ to $\hat{\beta}_{n,\infty}$.

Definition 3.1. Let $\lambda_1$ and $\tilde{\lambda}_1$ be as defined in Assumption (B). Let $s_1$ be the multiplicity of $\lambda_1$ and $\tilde{s}_1$ the multiplicity of $\tilde{\lambda}_1$. Define $s = 2s_1 - 1/2$ if $|\lambda_1| > |\tilde{\lambda}_1|$, $s = 3/2\,\tilde{s}_1 - 1/2$ if $|\lambda_1| < |\tilde{\lambda}_1|$ and $s = 2s_1 + 3/2\,\tilde{s}_1 - 1/2$ if $|\lambda_1| = |\tilde{\lambda}_1|$.

We turn to the formal requirements the kernel weight function $k(.,.)$ has to satisfy. We distinguish:

Assumption C. The kernel function $k(.,.)$ is regular if $k(j,M) = k(j/M)$ and $k(.)$ satisfies $k: \mathbb{R} \to [-1,1]$, $k(0) = 1$, $k(x) = k(-x)$ $\forall x \in \mathbb{R}$, $k(x) = 0$ for $|x| > 1$, and $k(.)$ is continuous at 0 and at all but a finite number of points. For $q \in (0,\infty)$ there exists a constant $k_q$ such that $k_q = \lim_{x \to 0}(1 - k(x))/|x|^q \in [0,\infty)$.

Assumption D. The kernel function $k(.,.)$ is rate-adaptive if it satisfies i) $k: [-1,1] \times \mathbb{R}_+ \to \mathbb{R}$, $k(-x,y) = k(x,y)$, $k(0,y) = 1$ for all $y \in \mathbb{R}_+$, $|k(x,y)| \leq c < \infty$ $\forall (x,y) \in [-1,1] \times \mathbb{R}_+$ and $k(x,y) = 0$ for $|x| > 1$. ii) Furthermore, for $\lambda \in (0,1)$, $c_0 = -\log(\lambda)$ and some $q \in (0,\infty)$ there exists a constant $k_q$ such that $\lim_{y \to \infty}\left(1 - k(x/y, y^s\lambda^y)\right)/(y^s\lambda^y) = k_q c_0^q |x|^q$ for all $x \in \mathbb{R}$.

Assumption (C) corresponds to the assumptions made in Andrews (1991) except that we require $k(x) = 0$ for $|x| > 1$. This assumption ensures that only a finite number of moment conditions, controlled by the bandwidth parameter, are used in estimation. The assumption could be relaxed at the cost of having to introduce additional bandwidth parameters to estimate the optimal weight matrix. This seems unattractive from a practical point of view and is not pursued here. Assumption (C) also rules out certain parametric kernel functions such as the Quadratic Spectral kernel but is satisfied by a number of well known kernels such as the Truncated, Bartlett, Parzen and Tukey-Hanning kernels.
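For concreteness, the four regular kernels named above can be written out as follows. The formulas are the standard textbook definitions restricted to $|x| \leq 1$; the function names and the helper `kernel_weights`, which evaluates $k(j/M)$ for $j = 0, \ldots, M-1$, are assumptions of this sketch rather than notation from the paper.

```python
import numpy as np

def truncated(x):
    """Truncated kernel: 1 on [-1, 1], 0 elsewhere."""
    a = np.abs(np.asarray(x, dtype=float))
    return (a <= 1.0).astype(float)

def bartlett(x):
    """Bartlett kernel: 1 - |x| on [-1, 1], 0 elsewhere."""
    a = np.abs(np.asarray(x, dtype=float))
    return np.where(a <= 1.0, 1.0 - a, 0.0)

def parzen(x):
    """Parzen kernel: piecewise cubic on [-1, 1], 0 elsewhere."""
    a = np.abs(np.asarray(x, dtype=float))
    inner = 1.0 - 6.0 * a**2 + 6.0 * a**3
    outer = 2.0 * (1.0 - a) ** 3
    return np.where(a <= 0.5, inner, np.where(a <= 1.0, outer, 0.0))

def tukey_hanning(x):
    """Tukey-Hanning kernel: (1 + cos(pi x)) / 2 on [-1, 1], 0 elsewhere."""
    a = np.abs(np.asarray(x, dtype=float))
    return np.where(a <= 1.0, 0.5 * (1.0 + np.cos(np.pi * a)), 0.0)

def kernel_weights(kernel, M):
    """Weights k(j / M) for j = 0, ..., M-1."""
    return kernel(np.arange(M) / M)
```

All four satisfy $k(0) = 1$, symmetry and $k(x) = 0$ for $|x| > 1$. They differ in how fast $1 - k(x)$ vanishes near zero: linearly for the Bartlett kernel, quadratically for the Parzen and Tukey-Hanning kernels, and identically for the truncated kernel.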

In the case of regular kernels the constant $k_q$ measures the higher order loss in efficiency induced by the kernel function which is proportional to $1 - k(i/M) = k_q M^{-q}|i|^q$ for large $M$ and some $q$ such that $k_q \neq 0$. For rate-adaptive kernels we obtain an efficiency loss of $1 - k(i/M, M^s\lambda^M) = M^s\lambda^M c_0^q |i|^q k_q$ which, as will be shown, is of the same order of magnitude as the efficiency loss due to truncating the number of instruments. The kernels are called rate-adaptive because their smoothness locally at zero adapts to the smoothness $\lambda$ of the model. In Section 4 it is shown how the argument $M^s\lambda^M$ in $k(.,.)$ can be replaced by an estimate.

Kernel functions that satisfy Assumption (D) can be generated by exploiting the chain rule of differentiation. Consider functions of the form

$$\phi(v,z) = \left(2 - z(-\log(z))^q\right)v + \left(z(-\log(z))^q - 1\right)v^2 \tag{3.3}$$

for $v \in [-1,1]$ and some non-negative integer $q$. Then $\phi(1,z) = 1$, $\phi(0,z) = 0$ and $\partial\phi(v,z)/\partial v|_{v=1} = z(-\log(z))^q \neq 0$ for all $z$. It thus follows that $z$ parametrizes the partial derivative of $\phi$ with respect to $v$. The rate adaptive kernel is then obtained as

$$k(j,M) = \phi\left(k(j/M), M^s\lambda^M\right) \tag{3.4}$$

for any kernel $k(j/M)$ satisfying Assumption (C). The constant $q$ used in $\phi$ is chosen in accordance with a kernel $k(x)$ to which $\phi(v,z)$ is applied and for which $k_q \neq 0$. It is shown in the proof of Proposition (3.4) that

$$\lim_{M \to \infty}\left(1 - \phi\left(k(i/M), M^s\lambda^M\right)\right)/\left(M^s\lambda^M\right) = |i|^q k_q c_0^q$$

uniformly in $i$, so that (3.4) defines a kernel satisfying Assumption (D) as long as $\lambda \in (0,1)$. We use the short hand notation $\int\phi(x)^2dx = \lim_{M \to \infty}\int\phi(k(x), M^s\lambda^M)^2dx$. Also note that $\int\phi(k(x), M^s\lambda^M)^2dx < \infty$ uniformly in $M$ if $\int k(x)^2dx$ exists. The latter is the case for all kernels satisfying Assumption (C).

The bandwidth parameter $M$ is chosen such that the approximate MSE of a weighted sum of the elements of $\hat{\beta}_{n,M}$ is minimized. We use the Nagar (1959) type approximation to the MSE used in Donald and Newey (2001). Let $n^{1/2}(\hat{\beta}_{n,M} - \beta)$ be stochastically approximated by $b_{n,M}$ such that $n^{1/2}(\hat{\beta}_{n,M} - \beta) = b_{n,M} + r_{n,M}$ where $r_{n,M}$ is an error term. Define the approximate mean squared error $\varphi_n(M,\ell,k(.))$ as in Donald and Newey (2001) such that, for $\ell \in \mathbb{R}^d$ with $\ell'\ell = 1$,

$$\ell'D^{1/2}E(b_{n,M}b_{n,M}')D^{1/2}\ell = 1 + \varphi_n(M,\ell,k(.)) + R_{n,M} \tag{3.5}$$

and require that the error terms $r_{n,M}$ and $R_{n,M}$ satisfy

$$\frac{r_{n,M} + R_{n,M}}{\varphi_n(M,\ell,k(.))} = o_p(1) \quad \text{as } M \to \infty,\ n \to \infty,\ \sqrt{M}/n \to 0.$$

The only difference to Donald and Newey (2001) is that in our case $\varphi_n(M,\ell,k(.))$ is an unconditional expectation. As noted by Donald and Newey, the approximation is only valid for $M \to \infty$. Given the efficiency of $\hat{\beta}_{n,M}$ as $M \to \infty$, this is the case of interest in the context of this paper.

Proposition 3.2.

and define f,,{X)

(3.6)

Suppose Assumptions (A) and (B) hold and

^ Ej" -oor^'e-^^i.

i

eM'^ with

Define

Ai=piAn)-'

r /,,(A)7-i(A)dA.

J—n

i'l

=

1.

Let T^^^

=

EetXs

—

A

Let

Also, 5(9)

=

limM^oo

for k{.) such that

^nd

Qm =

Assumption (Ch)

P'^i

{Imp

for

(Jim) I (M'^A^^^) with

= i'D-y'%;nMbMD''/'-l,

b\j

Qm{Imj, ~

=

Then,

foUows that

= 0{M^ In) +

0{M-^'').

defined in (3.4) such that Assumption (D) holds,

A:(., .)

< K<

-

- Km) n^j + P'.p-Jihip - Km)-

is satisfied it

^„{MJ,k{.))

ii)

(1

\ix^M^^02Ml{M'^'y?^^) where a2M

Pm [P'm^iIPmY' P'm^m)

i)

=

=eD-^/'^X^AiD-^'-i. and Bx

n,M

and Ap-^~~X"

-^ 00

n

—

>

1/k with

CO,

\imn/M^^„{M.l,k{.))

Hi) for k{x)

=

{|i|

<

1}, n, 7\/

=

-^

(p{x)'^dx\

( I

+cl'^k^^B^'^^ /k

< k <

-> 00 and M~-'-'^ X^^' n -» 1/k with

Yimn/M'^ip^iM,e,k{.))

n

= AA +

+

Bi/k.

00,

Bi/k.
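The rate-adaptive weights entering part ii) of the proposition are built from the quadratic map in (3.3) applied to a regular kernel as in (3.4). The following minimal sketch evaluates that map and the resulting weight; it assumes $z \in (0,1)$ so that $-\log(z) > 0$, and the names `phi` and `rate_adaptive_weight`, as well as passing $s$, $\lambda$ and $q$ as known numbers, are assumptions made for illustration only (in practice these quantities are unknown and would have to be estimated).

```python
import math

def phi(v, z, q):
    """Quadratic map phi(v, z) = (2 - a) v + (a - 1) v^2 with a = z (-log z)^q.

    Assumes 0 < z < 1. By construction phi(0, z) = 0, phi(1, z) = 1 and the
    derivative with respect to v at v = 1 equals a.
    """
    a = z * (-math.log(z)) ** q
    return (2.0 - a) * v + (a - 1.0) * v * v

def rate_adaptive_weight(j, M, s, lam, q, base_kernel):
    """Weight phi(k(j / M), M^s lam^M) for a scalar base kernel k (illustrative)."""
    z = (M ** s) * (lam ** M)
    return phi(base_kernel(j / M), z, q)
```

Because the derivative of `phi` at `v = 1` equals `z * (-log z)**q`, the weights approach one near lag zero at a speed governed by $M^s\lambda^M$ rather than by $M^{-q}$, which is the sense in which the kernel adapts to the decay rate of the model.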

This result shows that using standard kernels introduces variance terms of order $O(M^{-2q})$ that are larger than for the truncated kernel. Using the rate-adaptive kernels overcomes this problem, leading to variance terms of the same order as in the standard GMM case. Nevertheless, kernel weighting introduces additional variance terms of order $M^{2s}\lambda^{2M}$. Intuitively, the kernel function distorts the optimal weight matrix resulting in an increased variance of higher order terms in the expansion. As will be shown, this increased variance can be traded off against a reduction in the bias by an appropriate choice of the kernel function. Since any kernel other than the rate-adaptive kernels leads to slower rates of convergence, only rate-adaptive kernels will be considered from now on.

An immediate corollary resulting from Lemma (3.2) is that the feasible estimator has the same asymptotic distribution as the optimal infeasible estimator as long as $M/\sqrt{n} \to 0$.

Corollary 3.3. Assume that the assumptions of Lemma (3.2) hold. If $n, M \to \infty$ and $M/\sqrt{n} \to 0$ then $\sqrt{n}(\hat{\beta}_{n,M} - \beta) - D^{-1}d_0 = o_p(1)$.

Because $B^{(q)}$ and $B_1$ are difficult to estimate we do not minimize $\varphi_n(M,\ell,k(.))$ directly. Instead we propose the following criterion.

(3.7)

i\/*

M* mimmize

(p„{MJ.k{.)). Then, as n -» oc.

= argminMIC(A/) = argmin^

M€l

Proposition 3.4. Let

^

A\

"

Me I

10

/

V./-00

M*/M*

(i)(x)'^dx]

/

—^

1

-logaM

where

we

with I

0"1M

=

+

{[d/p]

— cr2M

l,[d/p]

2, ...}

and

denotes the largest integer smaller than

[a]

with aiM, cr2M defined in Proposition

ciM^^A^^ + o{Xl^) where

Remark

+

2.

By

<

A, satisfies

construction, fi^^M'

A,

<

(3.2). If in addition, for

A^/^ then

M*/M* -

some constant

=

1

Here

a.

ci,

gm =

logaiM

0(n-i/2 (logn)^/^).

higher order efEcient under quadratic risk in the class of

-"^

=

all

GMM estimators Pn,M> Mel.

Remark

3.

For the truncated kernel

f^

second order loss of efficiency caused by a

fact that (P'j^^jQ.^ Pm)

is

4>{x)'^dx

finite

=

2

and C2m

=

0.

Note that aiM measures the

number of instruments. This

from the

result follows

the asymptotic variance matrix of the estimator based on

M instruments.

Then aiM has the

interpretation of a generalized measure of relative efficiency of (3^^. It corresponds

to N-'^a'^H-'^f'{I

-

Newey

P'')fH-'^ of Donald and

the additional loss in efRciency due to the kernel.

Ai = ^a~^i' D~^''^airx. Noting

that

m=

when

Remark

shown

totically does not

sufficient

Mp is

then

the total

is

proof of Proposition

B^'^\

=

1

measures the simultaneity bias

such that

Et is serially

number of instruments,

(4.3) that

M* =

logn/

possible to replace

MIC(M) by

is

(2co)

the simpler criterion n~^

ARMA

uncorrelated,

can be seen easily

(2001).

+o(logn) and asymp-

M*/M* —

1

=

o(l) is

(Mp) — loguiM where

this simplification is allowed

the case for the

it

Newey

B\ or k up to order o(logn). If the result

at an exponential rate. This

A

rn

A

the same as in Donald and

number of instruments. Note that

logaiM decays

5.

in the

depend on A,

it is

the total

Remark

Mp is

the penalty term essentially

1

4. It is

that here

The constant

When

caused by estimates of the optimal instruments.

The term a2M measures

(2001, their notation).

only for models where

class.

further simpUfication is available if the deBnition of(pj^(M,i,k(.)) is based on

ixD^''^EhnMb'n,MD^''^

instead of £'D^^^E{bn^Mb'^ j^^)D^^^'£.

log \P'j^Q'^Pm\

n~^ (Mp)

Note that

- log

|D|

where a\M

+ log |P|v^fi^ Pm\

this formulation

Then the approximate

=

MSE

D~^/'^ P'j^^W^ Pm D"^/^

.

depends on tiaiM

The simpUfied

is

quite similar to Hall

log|CTiM|

=

criterion for this case is

^^d knowledge of the variance lowerbound D~^

of MIC(M)

=

is

no longer required.

and Inoue (2000) except

for the penalty

term {Mp)~ /n.

4. Fully Feasible GMM

In this section we derive the missing results that are needed to obtain a fully feasible procedure. In particular one needs to replace the unknown optimal bandwidth parameter $M^*$ by an estimate $\hat{M}^*$. In order to have a fully feasible procedure we need consistent estimates of the constants $A_1$, $D$ and $\sigma_M$, converging at sufficiently fast rates.

The following analysis shows that estimation of $A_1$ can be done nuisance parameter free in the sense that consistent estimates of $A_1$ do not depend on additional unknown parameters. Unfortunately the same is not true for $D$ and $\sigma_M$. We use an approximating parametric model for $C(L)$ to estimate $D$ and $\sigma_M$.

We

consider the simpler estimation problem for the constant

first

j

Note that

C

estimates of the parameter

P

MA(m-l)

representation of

to obtain estimates

can be done by using a nonlinear

it-

least squares or

of the exponential

pseudo

decay of Cj and the fact that

sample average based on estimated residuals F^^

€t

can be obtained by using consistent

An MA(m-l) model

maximum

chapter 8 of Brockwell and Davis (1991). This procedure

Because

= — oo

are the coefficients in the series expansion of f~^ (A)

Consistent estimates of the

in

^i where

m

is

is

then estimated for

it-

This

likelihood procedure as described

outlined in the proof of

is finite,

= n~^ St^minf '+'"1/

F"

Lemma

(4.1).

can be replaced by a simple

^t+m^t-j- Using these estimates

one forms ^1 by

(4.1)

-^1

To summarize, we

Proposition

=1

E

'^j'^T-m-

j=-n+l

state the following proposition.

Proposition 4.1. Let Assumptions (A) and (B) be satisfied. Let $\hat{A}_1$ be defined in (4.1). Then $\sqrt{n}(\hat{A}_1 - A_1) = O_p(1)$.

We use a finite order VAR(h) approximation to $C(L)^{-1}$, with $C(L) = \sum_{j=0}^{\infty} C_j L^j$, to estimate the parameters $D$ and $\sigma_M$. Let $\pi(L) = C_0 C(L)^{-1} = I - \sum_{j=1}^{\infty} \pi_j L^j$. It follows that

$$y_t = C_0 C(1)^{-1} \mu_y + \sum_{j=1}^{\infty} \pi_j y_{t-j} + v_t$$

where $v_t = C_0 u_t$ and $E v_t v_t' = \Sigma_v$. The approximate model with VAR coefficient matrices $\pi_{1,h}, \ldots, \pi_{h,h}$ is then given by

$$y_t = \mu_{y,h} + \pi_{1,h} y_{t-1} + \ldots + \pi_{h,h} y_{t-h} + v_{t,h} \qquad (4.2)$$

where $\Sigma_{v,h} = E v_{t,h} v_{t,h}'$ is the mean squared prediction error of the approximating model.

VAR approximations in a bandwidth selection context were proposed by Andrews (1991). There however, $h$ is kept fixed such that the resulting bandwidth choice is asymptotically suboptimal. We let $h \to \infty$ at a data-dependent rate, to be described below, leading to the approximating model being asymptotically equivalent to $\pi(L)$.

was shown by Berk (1974) and Lewis and Reinsel (1985) that the parameters

It

root-n consistent and asymptotically normal for

h

if

i.e.

Ng and Perron

-^ DO.

n

>

0.

satisfy these conditions

h does not increase too

^

(tt'i,

...,<)'

,

Ft,,,

r'j

^

=

=

{y[

-

y'

if

h

selected

is

Ng and Perron

F/,

where

=

vt^h

(n

[r^^j, ...,r^''^]

=

—

Ut

h)~

—

,

-

...,y[_^+,

p x p block of Mf[^. Let

Y^=h

7i"i,/i2/t-i

y')'

Ffc

and

A^ =

Et=/z+i

h„

first statistic in

=

^max/"- -*

if J{i, i) is

Ng and Perron

i'*-!,'.^'/-!,/.

(1995).

Let

and define Mf;\l)

is

T^_^

= Cov {yt-i,y[_j). The coefficients of the approximate model

{ni^h, ,'^h,h) = ri,/,r^^ Let fi,/, = (n - h)"-^ J2t=h ^t,h ivt+i - y)

^t,hYth- '^^^ estimated error covariance matrix

—

•••

—

^h,hyt-h with coefficients

Definition 4.2. The general-to-specific procedure chooses

ii)

Op{\/logn) and h

be the kp x kp matrix whose (m,n)th block

adopt the following lag order selection procedure from

the

as

(1995).

Tr{h)'

is

=

the sequence J{i,

z), {i

=

as

n

/^

=

n~^ Y17=h+i

A Wald

.

Ng and

hn

all

^t.h^'f ^

test for the null

Perron's notation,

=

Perron (1995).

h

if,

at significance level a, J{h, h)

$h_{\max}, \ldots, 1\}$, which is significantly different

not significantly different from zero for

-^

^"cf n^/2

Ei=h„,,< + i IKjII

S„

{vecjTh^h)

Ng and

i)

is

Fj ^^F^

then, in

J{h,h) =n{vecnh,h)' \ty^h^Mf^^{l)j

is

~h=

by AIC or BIC then h

hypothesis that the coefficients of the last lag h are jointly

We

~*

where F^^^

satisfy the Yule- Walker equations

and

n^^'^'}2i=h+i''^j

avoid the problems that arise from using information criteria to select the order of the ap-

to be the lower-right

and

quickly,

Moreover, the results of Hannan

h.

proximating model we use the sequential testing procedure analyzed in

nih)

are

At the same time h must not increase too slowly to avoid

and can therefore not be used to choose

to be adaptive in the sense of

To

(tti, ...,7r/i) if

/i, ...,7r/j_/i)

(1995) argue that information criteria such as the Akaike criterion do not

and Kavalieris (1984, 1986) imply that

fails

=

Berk (1974) shows that h needs to increase such that

asymptotic biases.

h,

—

chosen such that h /n

is

7r(/i)

(tti

i

=

/i-max;

•••:

1

where

from zero or

/imax is such that

^ do.
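The general-to-specific rule of Definition 4.2 can be sketched in a few lines once the Wald statistics $J(i, i)$ are available. The sketch below is illustrative only: the function name `select_lag`, the dictionary input and the use of one fixed chi-square critical value are assumptions, and returning 0 when no statistic is significant is one reading of case ii). Computing $J(i, i)$ itself is not shown.

```python
def select_lag(wald_stats, critical_value):
    """General-to-specific lag choice in the spirit of Definition 4.2.

    wald_stats     : dict mapping each lag i = 1, ..., h_max to a Wald
                     statistic J(i, i) (hypothetical input format)
    critical_value : chi-square critical value at the chosen level
                     (p * p degrees of freedom for a p-variate VAR)
    """
    h_max = max(wald_stats)
    # Walk down from h_max and stop at the first significant last lag.
    for i in range(h_max, 0, -1):
        if wald_stats[i] > critical_value:
            return i
    # No J(i, i) is significant: assumed here to mean lag order 0.
    return 0


# Usage with made-up statistics and the 5% critical value of chi-square(1).
example_stats = {4: 1.2, 3: 5.0, 2: 10.0, 1: 20.0}
chosen_lag = select_lag(example_stats, 3.841)
```

Starting from the largest lag and stopping at the first rejection is what distinguishes this rule from minimizing an information criterion over all candidate orders.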

In order to calculate the impulse response coefficients associated with (4.2) define the matrix

•

I

Ah


with dimensions hp x hp. The j-th impulse coefficient of the approximating model

E'l^Aj^Efi

with

=

E'l^

Estimates Cj^h

—

of Proposition (4.3)

1^.

by substituting n{h)

is

approximated by aM,h

form the possibly

obvious way.

We

a similar way.

We

D^

=

=

-

(^\M,h

leads to an estimate D^.

with

/i

as defined in Definition (4.2). In the proof

D

~

^ =

=

P'm^h

^\\^ ^^x;

i^Mp ~

=

^'D,^

CT2A/,ft

=

where P^)

parameter aM,h

i'D^

the same way as described above and i/n-consistency uniformly in

Ti{h) in

for Df^. In practice, infinite

dimensional matrices.

The

P'M,h^M,hPM,hDh

dimensional matrices such as P'^

resulting approximation can

h

defined in the

'^

obtained from

is

bM,h^M,kb'MjiD^

on

ctim./i

am

^m) ^a/,/i + ^MM^M'^h (-^^p ~ ^m) ^^^

= P^j,^^

and

Op{n~^^'^).

Next,

^P\

t,

where Dh

Poo,/i

A

for Cj_h in

'

(^-ZM.h

that A/,

/,

Kj jP-'hIh) The

P'mm {P'M.h^MjiPM.hj

A^ such

use the approximate autocovariance matrices F^^ to

dimensional matrices P^;;,

then form the matrix QM,h

(JM,h

(4-3)

in

infinite

= QMAihip -

^M,h

obtained by replacing h with

for 7r(/i) in

shown that -yn-consistency of n{h) implies D:h —

is

it

then approximated by F^^

=

=

= Y7P=-m+i^^ (J)^h{j) where the infinite dimensional matrix 0/i(j)

We denote the k, l-th block of fi^^ by -dkj^h- We define Dh by letting

defined in the obvious way. Substituting estimates Cj

is

is

given by Cj^h

Likewise we approximate the optimal weight matrix by the

E'/^Aj^Eh of Cj^h are obtained

fully feasible version Z)^

F^

autocovariance function

1)2,....

Sl^

block Tf^f._-

k, l-th

The

.

~

^

dimensional matrix

has typical

is

^^'

f^"^

Yl'iZo^i+jA'^y.h^'i h

infinite

[/p,0, ...,0]

is

be made

^'^ estimate of aiA/.h

^-

and

^

£

0.^

M

is

based

implied by the result

is

have to be replaced by

arbitrarily accurate

finite

by an appropriate

choice of the relevant dimensions without affecting the convergence results.
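The impulse coefficients $C_{j,h} = E_h' A_h^j E_h$ with $E_h' = [I_p, 0, \ldots, 0]$ can be illustrated for the scalar case $p = 1$. The sketch below assumes that $A_h$ is the usual companion matrix of the VAR(h) in (4.2), which is the standard construction but is not legible in the display above; the helper names are hypothetical.

```python
def companion(pi):
    """Companion matrix of a scalar AR(h) with coefficients pi[0], ..., pi[h-1]."""
    h = len(pi)
    rows = [list(pi)]                       # first row holds the AR coefficients
    for i in range(h - 1):                  # shifted identity below the first row
        rows.append([1.0 if j == i else 0.0 for j in range(h)])
    return rows


def matmul(a, b):
    """Plain-Python matrix product of two lists of lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def impulse_coefficients(pi, n_coef):
    """Return C_0, ..., C_{n_coef-1} with C_j = E' A^j E and E' = [1, 0, ..., 0]."""
    a = companion(pi)
    h = len(pi)
    power = [[1.0 if i == j else 0.0 for j in range(h)] for i in range(h)]  # A^0
    coefs = []
    for _ in range(n_coef):
        coefs.append(power[0][0])           # E' A^j E is the top-left entry when p = 1
        power = matmul(power, a)
    return coefs


# Usage: AR(2) with coefficients 0.5 and 0.3.
coefs = impulse_coefficients([0.5, 0.3], 4)
```

For this AR(2) the recursion $C_j = 0.5\,C_{j-1} + 0.3\,C_{j-2}$ gives 1, 0.5, 0.55 and 0.425, which is what the matrix powers return.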

We now

4>{k{i/M),

on

fr(/i).

..

define the estimate

/logCTjyj^^).

M

where

where we replace K\j

Moreover, we replace

In Proposition (4.3)

uniformly in

^j^^j^

s'

is

we

all

is

^'•^),

establish that (a ^^j-^-

defined in Proposition (4.3).

om\

(4.4)

The

M*

as

A/*

=

^/2

argmin

.

/

A[

Km

by

with typical element

TOO

fr{h)

following result can be established.


=

Op{n~^/'^ {\ognY^'^+'')

let

than was needed to show D; —

^=

.f'L'f

h

h

\2

(f){x)^dx\

/

/ [M'^'X^^^]

Establishing this result requires a stronger

thus explaining the slower rate of convergence. Also

defined in (4.1). Define

b./i//,

elements in (4.3) with corresponding estimates based

form of uniform convergence of the approximating parameters

Op(n

in

-loga^^^.

A.-,A\Dt

h

D=

^ where >1i

M*

Proposition 4.3. Let

addition

logaiM

=

si

Remark

6.

with

s'

|Ai

It is

=

ciM^'X^^''+o{X^/'') with

> Ai| +

Ultimately, one

is

is

.

<

as in

<

A^

=

cjM'^^A

A^/^ ^^jg^

used.

/log<7jy^;y. ^) is

is

(m*/M* -

ie.

+o(A^

=

l)

l)

=

Op{l). If in

Oj,{n-'^/^ (logn)'/2+'')

some

for

)

such that

Xj-

<

<

A^

A.

that the remainder term disappears sufficiently fast.

automated estimator

interested in the properties of a fully

M*

(m*/M* -

Then

(3.7).

.

not too close to A,

determined optimal bandwidth

<p{k{i/M*),

< Aij

|Ai

Si

always the case that \oga\M

Here we require that Xr

M*

be defined in (4.4) and

,>.

/?

where the data

plugged into the kernel function and the data-dependent kernel

In order to analyze this estimator we need an additional Lipschitz condition for the class of permitted kernels.

Assumption E. The kernel $k(j/M)$ satisfies $|k(x) - k(y)| \leq c_1 |x^q - y^q|$ $\forall x, y \in [0, 1]$ for some $c_1 < \infty$ and $q \geq 1$.

Assumption (E) corresponds to the assumptions made in Andrews (1991). Using the previous results we are now in a position to state one of the main results of this paper which establishes that an automated bandwidth selection procedure can be used to pick the number of instruments based on sample information alone.
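The Lipschitz-type condition of Assumption (E) can be checked numerically for a given kernel shape. The following is a rough grid check, not a proof; the function name, the grid size and the two example kernels are assumptions chosen for illustration.

```python
def satisfies_assumption_e(kernel, q, c1, grid_size=200):
    """Check |k(x) - k(y)| <= c1 * |x**q - y**q| on a grid over [0, 1]."""
    points = [i / grid_size for i in range(grid_size + 1)]
    for x in points:
        for y in points:
            lhs = abs(kernel(x) - kernel(y))
            rhs = c1 * abs(x ** q - y ** q)
            if lhs > rhs + 1e-12:           # small tolerance for rounding error
                return False
    return True


def quadratic_kernel(x):
    """Example kernel shape 1 - x^2 on [0, 1] (illustrative choice)."""
    return 1.0 - x * x


def bartlett_kernel(x):
    """Bartlett shape 1 - x on [0, 1]."""
    return 1.0 - x


ok_quadratic = satisfies_assumption_e(quadratic_kernel, q=2, c1=1.0)
ok_bartlett = satisfies_assumption_e(bartlett_kernel, q=1, c1=1.0)
bartlett_q2 = satisfies_assumption_e(bartlett_kernel, q=2, c1=1.0)
```

The Bartlett shape passes with $q = 1$ but fails with $q = 2$, since $|x - y|$ is not bounded by $|x^2 - y^2|$ near the origin.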

Theorem

4.4. Suppose Assumptions (A)

Assumptions (D) and

is

A^

k{.)

as defined in (3.3) or

<

and

A3/2.

Let

for case

Remark

7.

ii)

M*be

and (B) hold and either

used as an argument in

ii) k{., .)

=

{|xi

<

1}.

(p{., .)

Assume

defined in (4.4) then for case

n/VM*(/3^

^;^,

—

fin.M')

—

satisfies

that

Op(l)- Also, if

Under the conditions of the Theorem,

Assumptions

logaiM

n/^/W0„

i)

M*

i)

i) fc(., .) is

and

=

defined in 3.4 and satisfies

(Cii)

and (E) where (p{.,

+

ciM'^^X^^'

.)

<

o(A^") with

- K.M^) = Op((logn)™^''(^''"«'"^))

— q < or ii) holds then

a/s'

can be replaced by

M*

in the last display as

well as in Proposition (4.3).

Remark

8.

Although the constant

which requires that Aj

Theorem

(4.4)

Ai

typically

and there are no

shows that using the

that have asymptotic

estimators where a

7^

s' is

mean squared

it is

often reasonable to

assume that

s'

=

1

multiplicities in the largest roots.

feasible

errors

unknown

bandwidth estimator

which are

equivalent to

nonrandom optimal bandwidth sequence


M*

M*

results in estimates j3^

asymptotic

is

used.

mean squared

An immediate

^^j,

errors of

consequence

/

of the

Theorem

is

also that

P^

is first

j^,j,

D~^do. The theorem however shows

respect

5.

Theorem

(4.4)

is

in addition higher order equivalence of P^j(,j.

Newey

stronger than Proposition 4 of Donald and

and P„,M'- In

this

(2001).

Bias Reduction and Kernel Selection

In this section

we analyze the asymptotic

bandwidth parameter

Theorem

5.1.

n,j

sample

size

n and the

Assumption

(D).

IfM

as a function of the

A'l.

.)

satisfies

D'^A[ f

<p'^{x)dx.

k{.,

lim

^/^IME[bn

'

m - P) =

J

This result also holds for kernels satisfying Assumption (C) if f

9.

-^ oo

then

n^oo

Remark

bias of /3„

Suppose Assumptions (A) and (B) hold and

and M/n^/'^ -^

j'

order asymptotically equivalent to the infeasible estimator

{x)dx

(p

is

replaced by

k'^{x)dx.

A

simple consequence of this result

kernel weighted

GMM

estimator

is

is

that for

many standard

kernels the asymptotic bias of the

GMM

estimator based on the

satisfies

Assumption (D) with

lower than the bias for the standard

truncated kernel.

Corollary 5.2. Suppose Assumptions (A) and (B) hold and

^ (f{x)dx <

2 or

Assumption (C) with

lirn^

where b^ f^

It

is

[ k'^{x)dx

||v^/ME(5„,M -

,5)11

the stochastic approximation to the

2.

If n,

M -» oo, M/n^/"^ ->

< Jim^ \\V^/ME{bl,, -

then

/3)||

GMM estimator based on

the truncated kernel.

It can be shown easily that substituting well known kernels such as the Bartlett, Parzen or Tukey-Hanning in $\phi(v, z)$ leads to $\int \phi^2(x)dx$ being equal to 16/15, 67/64 and 1.34 respectively.

We now
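The constants $\int \phi^2(x)dx$ can be checked by numerical integration. The sketch below assumes $\phi(k) = 2k - k^2$ and integration over $[-1, 1]$, which is read off the partly legible definitions in this section rather than stated cleanly in the text; the function names and the Simpson rule are illustrative choices. Under this assumption the Bartlett shape reproduces 16/15, the Tukey-Hanning shape $(1 + \cos \pi x)/2$ reproduces 67/64, and the truncated kernel gives 2. The sketch does not attempt to match the remaining constant 1.34.

```python
import math


def phi(k):
    """Assumed effective weight phi(k) = 2k - k^2."""
    return 2.0 * k - k * k


def simpson(f, a, b, n=2000):
    """Composite Simpson rule with an even number n of subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * f(a + i * h)
    return total * h / 3.0


def bartlett(x):
    return 1.0 - abs(x)


def tukey_hanning(x):
    return 0.5 * (1.0 + math.cos(math.pi * x))


def truncated(x):
    return 1.0


def bias_constant(kernel):
    """Integral of phi(k(x))^2 over [-1, 1]."""
    return simpson(lambda x: phi(kernel(x)) ** 2, -1.0, 1.0)


constants = {
    "bartlett": bias_constant(bartlett),
    "tukey_hanning": bias_constant(tukey_hanning),
    "truncated": bias_constant(truncated),
}
```

Both weighted kernels give a value well below 2, which is the comparison with the truncated kernel that Corollary 5.2 relies on.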

(p

= Hml/

(

A^^^7'Af^2s-2

^here

J

by optimality of

A

(

[

[_^

il/^,

<

(f>{x)~dx)

k*

-

4

a simple variational argument

inequality

is

MSE

turn to the question of optimality in the higher order

Let k*

function.

which

<

fc(., .)

satisfied

<

|

is

oo.

M^

is

From Proposition

+ c^k'^B^''^

k*

<

sense of the choice of kernel

optimal for the truncated kernel.

(3.2)

it

follows at once that

Note that

any kernel

dominates the truncated kernel. In Theorem

used to show that we can always find a kernel

uniformly on a compact subset of the parameter space.

question of finding an optimal or at least dominating kernel.

When

g

solved for standard kernels by Priestley (see Priestley, 1981 p. 569).


=

2

is

k{.,

.)

for

(5.3)

such that this

This result raises the

fixed this

problem has been

In our context of rate-adaptive

kernels with fully flexible q

optimization problem

is

exist. In

known whether

not

it is

closed form solutions of the associated functional

any event, such solutions most

depend on the constant

likely

B^"^^

which

difficult to estimate.

To avoid

selection problem. Let <f>{k{x),

the kernel

we only choose

Let r be a

finite integer.

>

if |x|

ip^

=

Let

1.

and

it is

with

(5.1)

=

ip

U

= 1 + X][=i '^i^^

Then for j = 1,

r

,ip^)

fC^

2k{x)

—

...,

where

r

(f){k*{x)fdx]

J

Note that optimality

is

it

in this section

is

follows

>

for j

^'^^

^ ^

define

Also

k{x)

[0, 1),

U^ c

ili^

^

C/^.

1, /C^

=

h{x)\k{x)

enforces adaptiveness of

(p (•, •)

M'^,

=

let IC^

=

1

+

=

k{\x\) for

such that

{|x|

>

1}.

J^'^^^ ipiX',

<

x

compact and

C/1 is

The

permissible class

kernel k*{x)

<a( I

+k*?B^'^'^/K*

(l>{k{x))^dx]

\J-oo

(5.1) is

A

and

which means that

B'''

+

k{.,.)eICg

A;2b('?)/k*, all

J

for all

in general k*

A

sequence.

k*

The notation

elements

0(/c,

=

o^^.,

argmin

-,

is

/ctj ^^. )

establish the following

Theorem

its

own optimal

data-dependent optimal kernel

(5.2)

a(

is

T

M*

To

see

depending on k and

why

(5.1)

same variance term

is

selected,

sequence, can be no worse than under the

<p(k(x)fdx]

+^^(2-^)

a,^,-,.

used to emphasize that the A'M-matrix used to construct

M^

M^

is

ct2a/

r.

contains diagonal

the optimal bandwidth for the truncated kernel.

We

result.

sup (k*{x)

^'^^

and

defined as k* where

5.3. Let k*{x) be defined as in (5.2).

Let Pn,M'{k'),k' ^^

A

a reasonable optimality criterion because one of the main objectives

to construct kernel functions that dominate the truncated kernel.

evaluated under

q>q' ,q'>l

depends on

B\/k* as the truncated kernel when evaluated along the sequence M^. Once the kernel k*

MSE, when

for

k{x) e [-1, l],ipe C/^|

implies dominance note that the particular choice of k* guarantees that k* has the

its

=

and k{x)

The optimal

the restrictions outlined before.

satisfies

pointwise in

be shown that

will

Because

/c(x)^ satisfies

\J-oo

a(

before.

solution to the optimal kernel

which by the Weierstrass Theorem can be approximated by polynomials.

understood that k{x) also

=

be as described

Define k{x)

(i/'j,

ICj= K.^

is

(f){k{x))

B^'^'. It

k{x),

M^X^)

..,tpj_i,0,ipj^i,..,tpT.),

[ip-^^,

of kernels

we propose the following data-dependent

these complications

kernel weighted

estimator based on k*. Here,

M*{k)

-

fc*(x))

Then

=

for

any

q'

6

[1,t],

r

<

oo,

Op{n-^^^ (logn)^/2+.')

GMM estimator with kernel $k^*$ and let

= argmin^M^n"^ —


loga.

r

/3^

M'(k')

k'

^^

^''^

where for AJ{k*) we use

GMM

a'l^j

with

^^Mh ^^^^^

(t>{^*

Furthermore,

let

satisfy

\/1oS'^im/i)

=

Assumption

{Ai,

...,

Let

(B).

-"^

used as kernel weight. Then,

Ap^, Bi,

...,

Bqi^;pa,qb finite] be the set of all reduced

Go be a compact

set

Go C

O

such that sup^^j

fi''?'

Then, for some collection of sets U^, each sufficiently large, there exists a

0.

-

lim^r^o (l

^2,6(9')/^*

for

~k{x)^ I |x|''

<

any

6 [l,r],T < oo such that

q'

part of the theorem implies that the truncated kernel

the case because the truncated kernel

dominates

6.

^

(

A:

oo and infeo -4

G

IC^'

with

>

kg'

=

4

+

(f^^^{k{x)fdxj -

j

{|.r|

<

1}

6

/C

and there

always dominated by k*

is

is

an element

.

This

in /C that strictly

it.

Bias Correction

Another important

are analyzed

for

siipe^

<

form models that

0.

The second

is

:

first.

issue

is

whether the bias term can be corrected

for.

The

benefits of such a correction

turns out that correcting for the bias term increases the optimal rate of expansion

It

the bandwidth parameter and consequently accelerates the speed of convergence to the asymptotic

normal limit distribution.

Using the result

Theorem

in

Pl,i

(6.1)

Note that

The

for

the following bias corrected estimator

= hnM -

~ [p'm^mPm)'' a

Ai can be estimated by the methods described

is

proposed

j 4>Hx)dx.

GMM (truncated kernel) the bias correction term

standard

bias term

(5.1)

is

simply 2-^

(

P'j^jQ'^JPm

in the previous section.

The

)

A\

quality of

the estimator Ai determines the impact of the correction on the higher order convergence rate of the

estimator.

If

for fin,M- If

Ai — Ai

-Ai— A\

The mean squared

is

=

only Op(l) then the convergence rate of

Op{n~^)

for

r]

6

(0, 1/2]

and

^/ri.

(/3„jv;

~

error of the bias corrected estimator

/^j

r^jv^ as in (3.5).

=^n M +'n m

We

^^'i^h

j^^

is

essentially the

same

then the convergence rate of the estimator

nD'/^£'Eb';,^„bl,/(D'/^

where

/3„

=

1

+

is

is

as the

one

improved.

defined as before by

^^(M.^.fc(.))

+

JR^,M

the same restrictions imposed on the remainder terms /?^ ^^

obtain the following result.


.

.

Theorem

Then

any

for

Suppose Assumptions (A) and (B) hold and

6.1.

i

=

eR"^ with f ^

1,

(fiUMJ,

k{.,

Assumptions (Ci) or (D).

satisfies

.)

K, .)) = 0{M/n) - logCTM- The

optimal

M*

can be chosen

by

Mp

M_ = argmm

IfM* = argmin

^ - log^M,

n/y/W*

Remark

The

10.

result

then

(m*JM; -

(^pIjCi,

if $^

from Theorem

0((logn) /n)

7.

Monte

A

small

moment

for the

(6.1)

=

l)

is

j(^.

•"

It follows

logcTM-

Op(n-i/2 (logn)''^^/^) and

K^i + ^D-'A[ I hHx)dx^ = Op(l).

-

remains valid

,

n

replaced with P^ M^/k')

C

that for f^n^M* the higher order

MSE

y

J^

T^J) as long as

q'

>

s'

0(logn/n) compared to

is

GMM estimator without bias correction.

7. Monte Carlo Simulations

A small Monte Carlo experiment is conducted in order to assess the performance of the proposed moment selection and bias correction methods. For the simulations we consider the following data generating process

$$y_{t,1} = \beta y_{t,2} + u_t - \theta u_{t-1}$$
$$y_{t,2} = \phi y_{t-1,2} + v_t \qquad (7.1)$$

with $[u_t, v_t]' \sim N(0, \Sigma)$ where $\Sigma$ has elements $\sigma_1^2 = \sigma_2^2 = 1$ and $\sigma_{12}$. The parameter $\beta$ is the parameter to be estimated and is set to $\beta = 1$ in all simulations. All remaining parameters are nuisance parameters not explicitly estimated. The parameter $\phi$ controls the quality of lagged instruments and is chosen in $\{.1, .3, .5\}$. Low values of $\phi$ imply that the model is poorly identified. The parameter $\sigma_{12}$ is one of the determinants of the small sample bias of both Ordinary Least Squares (OLS) and GMM estimators and is set to .5. The parameter $\theta$ finally is set to $\{-.9, -.5, 0, .5, .9\}$.

We generate samples of size $n = \{128, 512\}$ from Model (7.1). Starting values are $y_0 = 0$ and $[u_0, v_0] = 0$. In each sample the first 1,000 observations are discarded to eliminate dependence on initial conditions.
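A minimal simulation of the data generating process (7.1) might look as follows. This is a sketch rather than the author's Matlab code: the function name, the default parameter values, the seeding and the way the correlated innovations are built from two independent standard normals are all assumptions made for illustration.

```python
import math
import random


def simulate(n, beta=1.0, theta=0.5, phi=0.3, sigma12=0.5, burn_in=1000, seed=0):
    """Draw one sample of size n from Model (7.1) after discarding burn_in draws."""
    rng = random.Random(seed)
    y1, y2 = [], []
    y2_prev, u_prev = 0.0, 0.0              # starting values set to zero
    for _ in range(burn_in + n):
        z1 = rng.gauss(0.0, 1.0)
        z2 = rng.gauss(0.0, 1.0)
        u = z1
        # Unit variances with Cov(u, v) = sigma12.
        v = sigma12 * z1 + math.sqrt(1.0 - sigma12 ** 2) * z2
        y2_t = phi * y2_prev + v
        y1_t = beta * y2_t + u - theta * u_prev
        y1.append(y1_t)
        y2.append(y2_t)
        y2_prev, u_prev = y2_t, u
    return y1[burn_in:], y2[burn_in:]


# Usage: one replication with n = 128 and phi = 0.1 (weak identification).
y1_sample, y2_sample = simulate(128, phi=0.1, theta=-0.5, seed=42)
```

Fixing the seed makes a replication reproducible; a full experiment would loop over seeds and over the grid of $\phi$, $\theta$ and $n$ listed above.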

Standard GMM estimators are obtained from applying Formula (3.2) with $K_M = I_M$. In order to estimate $\Omega_M$ we first construct an inefficient but consistent estimate $\tilde{\beta}_n$ based on (3.2) setting $K_M = I_M$ and $\hat{\Omega}_M = I_M$. We then construct residuals $\hat{\varepsilon}_t = y_{1t} - \tilde{\beta}_n y_{2t}$ and estimate $\Omega_M$ as described in (3.1).

Kernel weighted GMM estimators (KGMM) are based on the same inefficient initial estimate such that the estimate for $\Omega_M$ is identical to the weight matrix used for the standard GMM estimators. In the second stage we again apply (3.2) with $\hat{\Omega}_M$ and the matrix $K_M$ based on the optimal data-dependent kernel $k^*$ defined in Equation (5.2) with $\mathcal{K}_r = \mathcal{K}_1$. The estimated optimal bandwidth $\hat{M}^*$ is computed according to the procedure laid out in Theorem (5.3).

For each simulation replication we obtain a consistent first stage estimate $\tilde{\beta}_n$ to generate residuals $\hat{\varepsilon}_t$. We estimate $\theta$ by fitting an MA(1) model to $\hat{\varepsilon}_t$ using the Matlab procedure arimax. We then estimate the sample autocovariances $\hat{\Gamma}_j$ for $j = 0, ..., n/2$ where $n$ is the sample size and form an estimate of $A_1$ based on Formula (4.1). Next we use the procedure of Definition (4.2) to determine the optimal specification of the approximating VAR for $y_t = [y_{1t}, y_{2t}]'$ allowing for a maximum of $2 * [n^{1/3}]$ lags. Based on the optimal lag length specification we compute the impulse coefficients of the VAR which are then used to estimate the remaining parameters $D$ and $\sigma_M$ needed for the criterion MIC(M) as well as for optimal kernel selection.

In Tables 1-3 we compare the performance of feasible kernel weighted GMM with optimally chosen kernel, KGMM-Opt, to feasible standard GMM, GMM-Opt, with truncated kernel. In order to separate the effects of selecting $M$ from the properties of using weights for the moment conditions we consider, in addition to automatic selection of $M^*$, both estimators, GMM-X and KGMM-X, with a fixed number of $X = 1, 20$ lagged instruments. We also report the performance of the corresponding bias corrected versions BGMM-Opt and BKGMM-Opt (with optimally chosen kernel) where $M^*$ is selected according to the procedure described in Theorem (6.1).

We first consider GMM and KGMM with a fixed number of instruments. Tables 1-3 show that for $\phi$ small relative to the sample size there is little difference between the two estimators. They are also not very different from OLS. As the identification of the model improves, KGMM starts to dominate GMM both in terms of (median) bias as well as MSE and mean absolute error (MAE). This effect becomes more pronounced as more and more instruments are being used which can be explained by the predominance of bias terms in this case and the bias reducing property of the kernel weighted GMM estimator.

Turning now to the fully feasible versions we see that the same results remain to hold. For poorly identified models the choice of $M$ does not affect bias that much and all the estimators considered have roughly the same bias properties. Especially for poorly identified parametrizations optimal GMM is much more disperse than optimal KGMM. The reason for this lies in the fact that optimal GMM tends to be based on fewer instruments which results in somewhat lower bias but comes at the cost of increased variability.
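The performance measures used in the tables (median bias, MSE, MAE and, for the heteroskedastic design, the inter decile range) can be computed from a list of simulated estimates as sketched below. The function names and the linear-interpolation quantile rule are assumptions; the paper does not say which quantile convention was used.

```python
def quantile(sorted_vals, p):
    """Quantile by linear interpolation between order statistics."""
    pos = p * (len(sorted_vals) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    frac = pos - lo
    return sorted_vals[lo] * (1.0 - frac) + sorted_vals[hi] * frac


def summarize(estimates, true_value):
    """Median bias, MSE, MAE and inter decile range of the estimation errors."""
    errors = sorted(e - true_value for e in estimates)
    n = len(errors)
    return {
        "median_bias": quantile(errors, 0.5),
        "mse": sum(e * e for e in errors) / n,
        "mae": sum(abs(e) for e in errors) / n,
        "idr": quantile(errors, 0.9) - quantile(errors, 0.1),
    }


# Usage with made-up estimates 0, 1, ..., 10 around a true value of 5.
summary = summarize(list(range(11)), 5)
```

The inter decile range is insensitive to a few extreme draws, which is why it is a natural dispersion measure when an estimator occasionally produces severe outliers.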

The Matlab code

'Results for 9

is

As

identification, parameterized

available on request.

= { — .9, .9}

are available on request.


by

(p,

and/or sample

size improve, optimally

chosen kernel weighted

The

GMM starts to dominate standard GMM for most parameter combinations.

both estimators attain further improvements both as

bias corrected versions of

as well as

MSE

and A4AE are concerned when the model

the kernel weighted and bias corrected

On

weighted version.

A

clear ranking

compared

to

is

The

thus not possible.

is

and

more

KGMM-Opt when

identification

Theorems

without the homoskedasticity assumption

In this sense

it

ht

=

ao

+

as before.

ai£^_i

it

is

We

when

\6\

still

(4.4)

and

(6.1) are

is

expected that the optimal

+ £t - ^^t-i where St

+ ^i^t-i- We set 6i = .2, uq =

/3y2i

now implemented with

MIC(M)

M* —

IGARCH(1,1) process

follows the

.1

is

and

results are reported in

are available on request.

=

ai

.8.

The

while

KGMM-Opt now

identification

in

is

for

innovations

easy to detect in the data

the case of ^

For a fixed number of

cases for the larger sample size

n

=

512.

moment

The

=

.5.

estimator

does worse when identification

is

KGMM

GMM-Opt

(2 log A)

first

St

=

[u(,i;t]

equation in

1

/2

with

inht

are defined

we assume that

GMM

^m.

lags.

dominates

still

strong and/or sample sizes are large. As expected, bias correction

it

GMM

in

continues to perform well

weak but performs at

least as well

is

no longer

when

effective

produces severe outliers resulting

in inflated dispersion measures. For this reason only inter decile ranges

We

n/

Results for other parametrizations

conditions

reducing bias. Moreover, when combined with kernel weighting,

8.

log

heteroskedasticity consistent covariance matrix estimators

Table 4

many

of conditional

performs reasonably well

For simplicity we use the procedure of Newey and West (1987) with a fixed number of

The

Overall,

large.

not expected to go through

investigate this question by changing the

Since heteroskedasticity of this form

estimators are

or

results concerning higher order adaptive-

plausible that the criterion

is

even with heteroskedastic errors.

=

weak

we have maintained the assumption

While the strongest

ness and optimality of bias correction in

(7.1) to Hit

is

sensitive to the underlying data-generating process.

homoskedasticity of the innovations.

Model

than the non-

bias corrected estimators tend to perform relatively poorly

In the theoretical development of the paper

asymptotically.

MSE

tends to have a somewhat larger

the other hand the non- weighted version tends to overcorrect bias in some cases.

GMM-Opt

their performance

GMM

In these circumstances

well identified.

is

far as bias

(IDR) are reported.

8. Conclusions

We have analyzed the higher order asymptotic properties of GMM estimators for time series models. Using expressions for the asymptotic Mean Squared Error a selection rule for the optimal number of lagged instruments is derived. It is shown that plugging an estimated version of the optimal rule into the estimator leads to a fully feasible GMM procedure.

A new version of the GMM estimator for linear time series models is proposed where the moment conditions are weighted by a kernel function. It is shown that optimally chosen kernel weights of the moment restrictions reduce the asymptotic bias and MSE. Correcting the estimator for the highest order bias term leads to an overall increase in the optimal rate at which higher order terms vanish asymptotically. A fully automatic procedure to choose both the number of instruments as well as the optimal kernel is proposed.


A. Proofs

Lemmas

Auxiliary

are collected in

^t^

=

Ext- Define

Wf^i

-

T^^.

et+miyt-i+i

—

i^y)

Definition A.l. Let

Ew[i and

let wt,i

Define

=

=

Proof of Proposition

=

wt,i

=

^nd Est+mys

—

{xt+m

=

Next define wl^^^

For a matrix A,

^j,k,'&j,k 3.nd 'djf. respectively.

such that

upon

available

is

request.

They

are referred to