
Massachusetts Institute of Technology

Department of Economics

Working Paper Series

IMPROVING POINT AND INTERVAL ESTIMATES OF

MONOTONE FUNCTIONS BY REARRANGEMENT

Victor Chernozhukov

Ivan Fernandez-Val

Alfred Galichon

Working Paper 08-1

July 2, 2008

Room E52-251
50 Memorial Drive
Cambridge, MA 02142

This paper can be downloaded without charge from the Social Science Research Network Paper Collection at http://ssrn.com/abstract=1159965


IMPROVING POINT AND INTERVAL ESTIMATES OF MONOTONE FUNCTIONS BY REARRANGEMENT

VICTOR CHERNOZHUKOV†, IVAN FERNANDEZ-VAL§, ALFRED GALICHON‡

Abstract. Suppose that a target function $f_0 : \mathbb{R}^d \to \mathbb{R}$ is monotonic, namely weakly increasing, and an original estimate $\hat f$ of this target function is available, which is not weakly increasing. Many common estimation methods used in statistics produce such estimates $\hat f$. We show that these estimates can always be improved with no harm by using rearrangement techniques: The rearrangement methods, univariate and multivariate, transform the original estimate to a monotonic estimate $\hat f^*$, and the resulting estimate is closer to the true curve $f_0$ in common metrics than the original estimate $\hat f$. The improvement property of the rearrangement also extends to the construction of confidence bands for monotone functions. Let $\ell$ and $u$ be the lower and upper endpoint functions of a simultaneous confidence interval $[\ell, u]$ that covers $f_0$ with probability $1 - \alpha$, then the rearranged confidence interval $[\ell^*, u^*]$, defined by the rearranged lower and upper end-point functions $\ell^*$ and $u^*$, is shorter in length in common norms than the original interval and covers $f_0$ with probability greater or equal to $1 - \alpha$. We illustrate the results with a computational example and an empirical example dealing with age-height growth charts.

Key words. Monotone function, improved estimation, improved inference, multivariate rearrangement, univariate rearrangement, Lorentz inequalities, growth chart, quantile regression, mean regression, series, locally linear, kernel methods

AMS Subject Classification. Primary 62G08; Secondary 46F10, 62F35, 62P10

Date: First version is of December, 2006. Two ArXiv versions of the paper are available, ArXiv 0704.3686 (April, 2007) and ArXiv 0806.4730 (June, 2008). This version is of July 2, 2008. The previous title of this paper was "Improving Estimates of Monotone Functions by Rearrangement". We would like to thank the editor, the associate editor, the anonymous referees of the journal, Richard Blundell, Eric Cator, Andrew Chesher, Moshe Cohen, Holger Dette, Emily Gallagher, Piet Groeneboom, Raymond Guiteras, Xuming He, Roger Koenker, Charles Manski, Costas Meghir, Ilya Molchanov, Whitney Newey, Steve Portnoy, Alp Simsek, Jon Wellner and seminar participants at BU, CEMFI, CEMMAP Measurement Matters Conference, Columbia, Columbia Conference on Optimal Transportation, Conference Frontiers of Microeconometrics in Tokyo, Cornell, Cowles Foundation 75th Anniversary Conference, Duke-Triangle, Georgetown, MIT, MIT-Harvard, Northwestern, UBC, UCL, UIUC, University of Alicante, University of Gothenburg Conference "Nonsmooth Inference, Analysis, and Dependence," and Yale University for very useful comments that helped to considerably improve the paper.

† Massachusetts Institute of Technology, Department of Economics & Operations Research Center, and University College London, CEMMAP. E-mail: vchern@mit.edu. Research support from the Castle Krob Chair, National Science Foundation, the Sloan Foundation, and CEMMAP is gratefully acknowledged.

§ Boston University, Department of Economics. E-mail: ivanf@bu.edu. Research support from the National Science Foundation is gratefully acknowledged.

‡ Ecole Polytechnique, Departement d'Economie. E-mail: alfred.galichon@polytechnique.edu.


1. Introduction

A common problem in statistics is the approximation of an unknown monotonic function using an available sample. There are many examples of monotonically increasing functions, including biometric age-height charts, which should be monotonic in age; econometric demand functions, which should be monotonic in price; and quantile functions, which should be monotonic in the probability index. Suppose an original, potentially non-monotonic, estimate is available. Then, the rearrangement operation from variational analysis (Hardy, Littlewood, and Polya 1952, Lorentz 1953, Villani 2003) can be used to monotonize the original estimate. The rearrangement has been shown to be useful in producing monotonized estimates of density functions (Fougeres 1997), conditional mean functions (Davydov and Zitikis 2005, Dette, Neumeyer, and Pilz 2006, Dette and Pilz 2006, Dette and Scheder 2006), and various conditional quantile and distribution functions (Chernozhukov, Fernandez-Val, and Galichon (2006b, 2006c)).

In this paper, we show, using Lorentz inequalities and their appropriate generalizations, that the rearrangement of the original estimate is not only useful for producing monotonicity, but also has the following important property: The rearrangement always improves upon the original estimate, whenever the latter is not monotonic. Namely, the rearranged curves are always closer (often considerably closer) to the target curve being estimated. Furthermore, this improvement property is generic, i.e., it does not depend on the underlying specifics of the original estimate and applies to both univariate and multivariate cases. The improvement property of the rearrangement also extends to the construction of confidence bands for monotone functions. We show that we can increase the coverage probabilities and reduce the lengths of the confidence bands for monotone functions by rearranging the upper and lower bounds of the confidence bands.

Monotonization procedures have a long history in the statistical literature, mostly in relation to isotone regression. While we will not provide an extensive literature review, we reference the methods other than rearrangement that are most related to the present paper. Mammen (1991) studies the effect of smoothing on monotonization by isotone regression. He considers two alternative two-step procedures that differ in the ordering of the smoothing and monotonization steps. The resulting estimators are asymptotically equivalent up to first order if an optimal bandwidth choice is used in the smoothing step. Mammen, Marron, Turlach, and Wand (2001) show that most smoothing problems, notably including smoothed isotone regression problems, can be recast as a projection problem with respect to a given norm. Another approach is the one-step procedure of Ramsay (1988), which projects on a class of monotone spline functions called I-splines. Later in the paper we will make both analytical and numerical comparisons of these procedures with the rearrangement.

2. Improving Point Estimates of Monotone Functions by Rearrangement

2.1. Common Estimates of Monotonic Functions. A basic problem in many areas of statistics is the estimation of an unknown function $f_0 : \mathbb{R}^d \to \mathbb{R}$ using the available information. Suppose we know that the target function $f_0$ is monotonic, namely weakly increasing. Suppose further that an original estimate $\hat f$ is available, which is not necessarily monotonic. Many common estimation methods do indeed produce such estimates. Can these estimates always be improved with no harm? The answer provided by this paper is yes: the rearrangement method transforms the original estimate to a monotonic estimate $\hat f^*$, and this estimate is in fact closer to the true curve $f_0$ than the original estimate $\hat f$ in common metrics. Furthermore, the rearrangement is computationally tractable, and thus preserves the computational appeal of the original estimates.

Estimation methods, specifically the ones used in regression analysis, can be grouped into global methods and local methods. An example of a global method is the series estimator of $f_0$ taking the form

$$\hat f(x) = P_{k_n}(x)'\hat b,$$

where $P_{k_n}(x)$ is a $k_n$-vector of suitable transformations of the variable $x$, such as B-splines, polynomials, and trigonometric functions. Section 4 lists specific examples in the context of an empirical example. The estimate $\hat b$ is obtained by solving the regression problem

$$\hat b = \arg\min_{b \in \mathbb{R}^{k_n}} \sum_{i=1}^{n} \rho\big(Y_i - P_{k_n}(X_i)'b\big),$$

where $(Y_i, X_i)$, $i = 1, \ldots, n$ denotes the data. In particular, using the square loss $\rho(w) = w^2$ produces estimates of the conditional mean of $Y_i$ given $X_i$ (Gallant 1981, Andrews 1991, Stone 1994, Newey 1997), while using the asymmetric absolute deviation loss $\rho(w) = (u - 1(w < 0))w$ produces estimates of the conditional $u$-quantile of $Y_i$ given $X_i$ (Koenker and Bassett 1978, Portnoy 1997, He and Shao 2000). The series estimates $x \mapsto \hat f(x) = P_{k_n}(x)'\hat b$ are widely used in data analysis due to their desirable approximation properties and computational tractability. However, these estimates need not be naturally monotone, unless explicit constraints are added into the optimization program (see, for example, Matzkin (1994), Silvapulle and Sen (2005), and Koenker and Ng (2005)).

Examples of local methods include kernel and locally polynomial estimators. A kernel estimator takes the form

$$\hat f(x) = \arg\min_{b \in \mathbb{R}} \sum_{i=1}^{n} w_i\, \rho(Y_i - b), \qquad w_i = K\!\left(\frac{X_i - x}{h}\right),$$

where the loss function $\rho$ plays the same role as above, $K(u)$ is a standard, possibly high-order, kernel function, and $h > 0$ is a vector of bandwidths (see, for example, Wand and Jones (1995) and Ramsay and Silverman (2005)). The resulting estimate $x \mapsto \hat f(x)$ need not be naturally monotone. Dette, Neumeyer, and Pilz (2006) show that the rearrangement transforms the kernel estimate into a monotonic one. We further show here that the rearranged estimate necessarily improves upon the original estimate, whenever the latter is not monotonic. The locally polynomial regression is a related local method (Chaudhuri 1991, Fan and Gijbels 1996).

In particular, the locally linear estimator takes the form

$$(\hat f(x), \hat d(x)) = \arg\min_{b \in \mathbb{R},\, d \in \mathbb{R}} \sum_{i=1}^{n} w_i\, \rho\big(Y_i - b - d(X_i - x)\big), \qquad w_i = K\!\left(\frac{X_i - x}{h}\right).$$

The resulting estimate $x \mapsto \hat f(x)$ may also be non-monotonic, unless explicit constraints are added to the optimization problem. Section 4 illustrates the non-monotonicity of the locally linear estimate in an empirical example.

In summary, there are many attractive estimation and approximation methods in statistics that do not necessarily produce monotonic estimates. These estimates do have other attractive features though, such as good approximation properties and computational tractability. Below we show that the rearrangement operation applied to these estimates produces (monotonic) estimates that improve the approximation properties of the original estimates by bringing them closer to the target curve. Furthermore, the rearrangement is computationally tractable, and thus preserves the computational appeal of the original estimates.
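To make the non-monotonicity of local estimates concrete, the following is a minimal sketch of the kernel estimator under the square loss, in which case the minimizer is a kernel-weighted mean. The Gaussian kernel, the bandwidth, the toy data, and the helper names `kernel_estimate` and `is_weakly_increasing` are all illustrative assumptions and do not come from the paper.

```python
import math

def kernel_estimate(x, xs, ys, h):
    """Local-constant kernel estimate at x under the square loss.

    Minimizing sum_i w_i * (Y_i - b)^2 over b gives the weighted mean of the
    Y_i with weights w_i = K((X_i - x) / h). A Gaussian kernel is assumed here.
    """
    weights = [math.exp(-0.5 * ((xi - x) / h) ** 2) for xi in xs]
    total = sum(weights)
    return sum(w * y for w, y in zip(weights, ys)) / total

def is_weakly_increasing(values, tol=1e-12):
    """True if every value is at least its predecessor (up to tol)."""
    return all(b >= a - tol for a, b in zip(values, values[1:]))

# Hypothetical toy data: an increasing trend plus a deterministic wiggle
# standing in for sampling noise.
xs = [i / 19 for i in range(20)]
ys = [x + 0.3 * math.sin(12 * x) for x in xs]

# Evaluate the estimate on an equidistant grid with a small bandwidth.
grid = [i / 49 for i in range(50)]
fhat = [kernel_estimate(x, xs, ys, h=0.03) for x in grid]

# The estimate follows the wiggle in the data, so it is not monotone.
estimate_is_monotone = is_weakly_increasing(fhat)
```

With a small bandwidth the estimate tracks local dips in the data, which is exactly the situation in which a monotonization step becomes relevant.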

2.2. The Rearrangement and its Approximation Property: The Univariate Case. In what follows, let $\mathcal{X}$ be a compact interval. Without loss of generality, it is convenient to take this interval to be $\mathcal{X} = [0, 1]$. Let $f(x)$ be a measurable function mapping $\mathcal{X}$ to $K$, a bounded subset of $\mathbb{R}$. Let $F_f(y) = \int_{\mathcal{X}} 1\{f(u) \le y\}\,du$ denote the distribution function of $f(X)$ when $X$ follows the uniform distribution on $[0, 1]$. Let

$$f^*(x) := Q_f(x) := \inf\{y \in \mathbb{R} : F_f(y) \ge x\}$$

be the quantile function of $F_f(y)$. Thus,

$$f^*(x) := \inf\left\{y \in \mathbb{R} : \left[\int_{\mathcal{X}} 1\{f(u) \le y\}\,du\right] \ge x\right\}.$$

This function $f^*$ is called the increasing rearrangement of the function $f$.

Thus, the rearrangement operator simply transforms a function $f$ to its quantile function $f^*$. That is, $x \mapsto f^*(x)$ is the quantile function of the random variable $f(X)$ when $X \sim U(0, 1)$. It is also convenient to think of the rearrangement as a sorting operation: given values of the function $f(x)$ evaluated at $x$ in a fine enough net of equidistant points, we simply sort the values in an increasing order. The function created in this way is the rearrangement of $f$.

The first main point of this paper is the following:

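Proposition 1. Let $f_0 : \mathcal{X} \to K$ be a weakly increasing measurable function in $x$. This is the target function. Let $\hat f : \mathcal{X} \to K$ be another measurable function, an initial estimate of the target function $f_0$.

1. For any $p \in [1, \infty]$, the rearrangement of $\hat f$, denoted $\hat f^*$, weakly reduces the estimation error:

$$\left[\int_{\mathcal{X}} \big|\hat f^*(x) - f_0(x)\big|^p dx\right]^{1/p} \le \left[\int_{\mathcal{X}} \big|\hat f(x) - f_0(x)\big|^p dx\right]^{1/p}. \qquad (2.1)$$

2. Suppose that there exist regions $\mathcal{X}_0$ and $\mathcal{X}_0'$, each of measure greater than $\delta > 0$, such that for all $x \in \mathcal{X}_0$ and $x' \in \mathcal{X}_0'$ we have that (i) $x' > x$, (ii) $\hat f(x) > \hat f(x') + \epsilon$, and (iii) $f_0(x') > f_0(x) + \epsilon$, for some $\epsilon > 0$. Then the gain in the quality of approximation is strict for $p \in (1, \infty)$. Namely, for any $p \in (1, \infty)$,

$$\left[\int_{\mathcal{X}} \big|\hat f^*(x) - f_0(x)\big|^p dx\right]^{1/p} \le \left[\int_{\mathcal{X}} \big|\hat f(x) - f_0(x)\big|^p dx - \delta\eta_p\right]^{1/p}, \qquad (2.2)$$

where $\eta_p = \inf\{|v - t'|^p + |v' - t|^p - |v - t|^p - |v' - t'|^p\}$ and $\eta_p > 0$, with the infimum taken over all $v, v', t, t'$ in the set $K$ such that $v' \ge v + \epsilon$ and $t' \ge t + \epsilon$.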

This proposition establishes that the rearranged estimate $\hat f^*$ has a smaller (and often strictly smaller) estimation error in the $L_p$ norm than the original estimate whenever the latter is not monotone. This is a very useful and generally applicable property that is independent of the sample size and of the way the original estimate $\hat f$ is obtained. The first part of the proposition states the weak inequality (2.1), and the second part states the strict inequality (2.2). For example, the inequality is strict for $p \in (1, \infty)$ if the original estimate $\hat f(x)$ is decreasing on a subset of $\mathcal{X}$ having positive measure, while the target function $f_0(x)$ is increasing on $\mathcal{X}$ (by increasing, we mean strictly increasing throughout). Of course, if $f_0(x)$ is constant, then the inequality (2.1) becomes an equality, as the distribution of the rearranged function $\hat f^*$ is the same as the distribution of the original function $\hat f$, that is $F_{\hat f^*} = F_{\hat f}$.

The weak inequality (2.1) is a direct (yet important) consequence of the classical rearrangement inequality due to Lorentz (1953): Let $q$ and $g$ be two functions mapping $\mathcal{X}$ to $K$. Let $q^*$ and $g^*$ denote their corresponding increasing rearrangements. Then,

$$\int_{\mathcal{X}} L(q^*(x), g^*(x))\,dx \le \int_{\mathcal{X}} L(q(x), g(x))\,dx,$$

for any submodular discrepancy function $L : \mathbb{R}^2 \to \mathbb{R}_+$. Set $q(x) = \hat f(x)$, $q^*(x) = \hat f^*(x)$, $g(x) = f_0(x)$, and $g^*(x) = f_0^*(x)$. Now, note that in our case $f_0^*(x) = f_0(x)$ almost everywhere, that is, the target function is its own rearrangement. Let us recall that $L$ is submodular if for each pair of vectors $(v, v')$ and $(t, t')$ in $\mathbb{R}^2$, we have that

$$L(v \wedge v', t \wedge t') + L(v \vee v', t \vee t') \le L(v, t) + L(v', t'). \qquad (2.3)$$

In other words, a function $L$ measuring the discrepancy between vectors is submodular if co-monotonization of vectors reduces the discrepancy. When a function $L$ is smooth, submodularity is equivalent to the condition $\partial_v \partial_t L(v, t) \le 0$ holding for each $(v, t)$ in $\mathbb{R}^2$. Thus, for example, power functions $L(v, t) = |v - t|^p$ for $p \in [1, \infty)$ and many other loss functions are submodular.
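The sorting interpretation of the rearrangement and inequality (2.1) can be checked numerically. The sketch below is only an illustration on an equidistant grid: the target $x^2$, the perturbed estimate, and the helper names `rearrange` and `lp_error` are assumptions made for this example, not objects defined in the paper.

```python
import math

def rearrange(values):
    """Increasing rearrangement on an equidistant grid: sort the values."""
    return sorted(values)

def lp_error(est, target, p):
    """Grid approximation of [ integral |est - target|^p dx ]^(1/p) on [0, 1]."""
    n = len(est)
    return (sum(abs(a - b) ** p for a, b in zip(est, target)) / n) ** (1.0 / p)

# Equidistant grid of midpoints on [0, 1].
grid = [(i + 0.5) / 200 for i in range(200)]

# Hypothetical weakly increasing target and a non-monotone "estimate" of it.
f0 = [x ** 2 for x in grid]
fhat = [x ** 2 + 0.2 * math.sin(25 * x) for x in grid]

# Rearranged estimate: the sorted values of fhat, placed on the same grid.
fstar = rearrange(fhat)

# L_p errors of the original and the rearranged estimate for several p.
errors = {p: (lp_error(fhat, f0, p), lp_error(fstar, f0, p)) for p in (1, 2, 4)}
```

In this discretized setting the target is already sorted on the grid, so the discrete analogue of the Lorentz inequality applies and the second entry of each pair in `errors` should not exceed the first.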

In the Appendix, we provide a proof of the strong inequality (2.2) as well as the direct proof of the weak inequality (2.1). The direct proof illustrates how reductions of the estimation error arise from even a partial sorting of the values of the estimate $\hat f$. Moreover, the direct proof characterizes the conditions for the strict reduction of the estimation error.

It is also worth emphasizing the following immediate asymptotic implication of the above finite-sample result: The rearranged estimate $\hat f^*$ inherits the $L_p$ rates of convergence from the original estimates $\hat f$. For $p \in [1, \infty]$, if $\lambda_n = \left[\int_{\mathcal{X}} |\hat f(x) - f_0(x)|^p dx\right]^{1/p} = O_P(a_n)$ for some sequence of constants $a_n$, then $\left[\int_{\mathcal{X}} |\hat f^*(x) - f_0(x)|^p dx\right]^{1/p} \le \lambda_n = O_P(a_n)$.

2.3. Computation of the Rearranged Estimate. One of the following methods can be used for computing the rearrangement. Let $\{X_j, j = 1, \ldots, B\}$ be either (1) a set of equidistant points in $[0, 1]$ or (2) a sample of i.i.d. draws from the uniform distribution on $[0, 1]$. Then the rearranged estimate $\hat f^*(u)$ at point $u \in \mathcal{X}$ can be approximately computed as the $u$-quantile of the sample $\{\hat f(X_j), j = 1, \ldots, B\}$. The first method is deterministic, and the second is stochastic. Thus, for a given number of draws $B$, the complexity of computing the rearranged estimate $\hat f^*(u)$ in this way is equivalent to the complexity of computing the sample $u$-quantile in a sample of size $B$.

The number of evaluations $B$ can depend on the problem. Suppose that the density function of the random variable $\hat f(X)$, when $X \sim U(0, 1)$, is bounded away from zero over a neighborhood of $\hat f^*(x)$. Then $\hat f^*(x)$ can be computed with the accuracy of $O_P(1/\sqrt{B})$, as $B \to \infty$, where the rate follows from the results of Knight (2002).

2.4. The Rearrangement and Its Approximation Property: The Multivariate Case. In this section we consider multivariate functions $f : \mathcal{X}^d \to K$, where $\mathcal{X}^d = [0, 1]^d$ and $K$ is a bounded subset of $\mathbb{R}$. The notion of monotonicity we seek to impose on $f$ is the following: We say that the function $f$ is weakly increasing in $x$ if $f(x') \ge f(x)$ whenever $x' \ge x$. The notation $x' = (x_1', \ldots, x_d') \ge x = (x_1, \ldots, x_d)$ means that one vector is weakly larger than the other in each of the components, that is, $x_j' \ge x_j$ for each $j = 1, \ldots, d$. In what follows, we use the notation $f(x_j, x_{-j})$ to denote the dependence of $f$ on its $j$-th argument, $x_j$, and all other arguments, $x_{-j}$, that exclude $x_j$. The notion of monotonicity above is equivalent to the requirement that for each $j$ in $1, \ldots, d$ the mapping $x_j \mapsto f(x_j, x_{-j})$ is weakly increasing in $x_j$, for each $x_{-j}$ in $\mathcal{X}^{d-1}$.

Define the rearranged operator $R_j$ and the rearranged function $f_j^*(x)$ with respect to the $j$-th argument as follows:

$$f_j^*(x) := R_j \circ f(x) := \inf\left\{y : \left[\int_{\mathcal{X}} 1\{f(x_j', x_{-j}) \le y\}\,dx_j'\right] \ge x_j\right\}.$$

This is the one-dimensional increasing rearrangement applied to the one-dimensional function $x_j \mapsto f(x_j, x_{-j})$, holding the other arguments $x_{-j}$ fixed. The rearrangement is applied for every value of the other arguments $x_{-j}$.

Let $\pi = (\pi_1, \ldots, \pi_d)$ be an ordering, i.e., a permutation, of the integers $1, \ldots, d$. Let us define the $\pi$-rearrangement operator $R_\pi$ and the $\pi$-rearranged function $f_\pi^*(x)$ as follows:

$$f_\pi^*(x) := R_\pi \circ f(x) := R_{\pi_1} \circ \cdots \circ R_{\pi_d} \circ f(x).$$

For any ordering $\pi$, the $\pi$-rearrangement operator rearranges the function with respect to all of its arguments. As shown below, the resulting function $f_\pi^*(x)$ is weakly increasing in $x$.

In general, two different orderings $\pi$ and $\pi'$ of $1, \ldots, d$ can yield different rearranged functions $f_\pi^*(x)$ and $f_{\pi'}^*(x)$. Therefore, to resolve the conflict among rearrangements done with different orderings, we may consider averaging among them: letting $\Pi$ be any collection of distinct orderings $\pi$, we can define the average rearrangement as

$$f^*(x) := \frac{1}{|\Pi|} \sum_{\pi \in \Pi} f_\pi^*(x), \qquad (2.4)$$

where $|\Pi|$ denotes the number of elements in the set of orderings $\Pi$. Dette and Scheder (2006) also proposed averaging all the possible orderings of the smoothed rearrangement in the context of monotone conditional mean estimation. As shown below, the approximation error of the average rearrangement is weakly smaller than the average of approximation errors of individual $\pi$-rearrangements.

The following proposition describes the properties of multivariate $\pi$-rearrangements:

Proposition 2. Let the target function $f_0 : \mathcal{X}^d \to K$ be weakly increasing and measurable in $x$. Let $\hat f : \mathcal{X}^d \to K$ be a measurable function that is an initial estimate of the target function $f_0$. Let $\bar f : \mathcal{X}^d \to K$ be another estimate of $f_0$, which is measurable in $x$, including, for example, a rearranged $\hat f$ with respect to some of the arguments. Then,

1. For each ordering $\pi$ of $1, \ldots, d$, the $\pi$-rearranged estimate $\hat f_\pi^*(x)$ is weakly increasing in $x$. Moreover, $\hat f^*(x)$, an average of $\pi$-rearranged estimates, is weakly increasing in $x$.

2. (a) For any $j$ in $1, \ldots, d$ and any $p$ in $[1, \infty]$, the rearrangement of $\bar f$ with respect to the $j$-th argument produces a weak reduction in the approximation error:

$$\left[\int_{\mathcal{X}^d} \big|\bar f_j^*(x) - f_0(x)\big|^p dx\right]^{1/p} \le \left[\int_{\mathcal{X}^d} \big|\bar f(x) - f_0(x)\big|^p dx\right]^{1/p}. \qquad (2.5)$$

(b) Consequently, a $\pi$-rearranged estimate $\hat f_\pi^*(x)$ of $\hat f(x)$ weakly reduces the approximation error of the original estimate:

$$\left[\int_{\mathcal{X}^d} \big|\hat f_\pi^*(x) - f_0(x)\big|^p dx\right]^{1/p} \le \left[\int_{\mathcal{X}^d} \big|\hat f(x) - f_0(x)\big|^p dx\right]^{1/p}. \qquad (2.6)$$

3. Suppose that $\bar f(x)$ and $f_0(x)$ have the following properties: there exist subsets $\mathcal{X}_j \subset \mathcal{X}$ and $\mathcal{X}_j' \subset \mathcal{X}$, each of measure $\delta > 0$, and a subset $\mathcal{X}_{-j} \subseteq \mathcal{X}^{d-1}$, of measure $\nu > 0$, such that for all $x = (x_j, x_{-j})$ and $x' = (x_j', x_{-j})$, with $x_j' \in \mathcal{X}_j'$, $x_j \in \mathcal{X}_j$, $x_{-j} \in \mathcal{X}_{-j}$, we have that (i) $x_j' > x_j$, (ii) $\bar f(x) > \bar f(x') + \epsilon$, and (iii) $f_0(x') > f_0(x) + \epsilon$, for some $\epsilon > 0$.

(a) Then, for any $p \in (1, \infty)$,

$$\left[\int_{\mathcal{X}^d} \big|\bar f_j^*(x) - f_0(x)\big|^p dx\right]^{1/p} \le \left[\int_{\mathcal{X}^d} \big|\bar f(x) - f_0(x)\big|^p dx - \eta_p \delta \nu\right]^{1/p}, \qquad (2.7)$$

where $\eta_p = \inf\{|v - t'|^p + |v' - t|^p - |v - t|^p - |v' - t'|^p\}$ and $\eta_p > 0$, with the infimum taken over all $v, v', t, t'$ in the set $K$ such that $v' \ge v + \epsilon$ and $t' \ge t + \epsilon$.

(b) Further, for an ordering $\pi = (\pi_1, \ldots, \pi_k, \ldots, \pi_d)$ with $\pi_k = j$, let $\bar f$ be a partially rearranged function, $\bar f(x) = R_{\pi_{k+1}} \circ \cdots \circ R_{\pi_d} \circ \hat f(x)$ (for $k = d$ we set $\bar f(x) = \hat f(x)$). If the function $\bar f(x)$ and the target function $f_0(x)$ satisfy the condition stated above, then, for any $p \in (1, \infty)$,

$$\left[\int_{\mathcal{X}^d} \big|\hat f_\pi^*(x) - f_0(x)\big|^p dx\right]^{1/p} \le \left[\int_{\mathcal{X}^d} \big|\hat f(x) - f_0(x)\big|^p dx - \eta_p \delta \nu\right]^{1/p}. \qquad (2.8)$$

4. The approximation error of an average rearrangement is weakly smaller than the average approximation error of the individual $\pi$-rearrangements: For any $p \in [1, \infty]$,

$$\left[\int_{\mathcal{X}^d} \big|\hat f^*(x) - f_0(x)\big|^p dx\right]^{1/p} \le \frac{1}{|\Pi|} \sum_{\pi \in \Pi} \left[\int_{\mathcal{X}^d} \big|\hat f_\pi^*(x) - f_0(x)\big|^p dx\right]^{1/p}. \qquad (2.9)$$

This proposition generalizes the results of Proposition 1 to the multivariate case, also demonstrating several features unique to the multivariate case. We see that the $\pi$-rearranged functions are monotonic in all of the arguments. Dette and Scheder (2006), using a different argument, showed that their smoothed rearrangement for conditional mean functions is monotonic in both arguments for the bivariate case in large samples. The rearrangement along any argument improves the approximation properties of the estimate. Moreover, the improvement is strict when the rearrangement with respect to a $j$-th argument is performed on an estimate that is decreasing in the $j$-th argument, while the target function is increasing in the same $j$-th argument, in the sense precisely defined in the proposition. Moreover, averaging different $\pi$-rearrangements is better (on average) than using a single $\pi$-rearrangement chosen at random.

All other basic implications of the proposition are similar to those discussed for the univariate case.
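For a bivariate function tabulated on an equidistant grid, the $\pi$-rearrangements and their average can be sketched by sorting along one argument at a time. Everything in the block below (the grid size, the target $x_1 + x_2$, the perturbation, and the helper names) is an illustrative assumption; it is a discretized sketch of the construction rather than the authors' implementation.

```python
import math
from itertools import permutations

def rearrange_axis(table, axis):
    """Sort a 2-D table along one argument, holding the other argument fixed."""
    if axis == 1:  # rearrange in the second argument: sort within each row
        return [sorted(row) for row in table]
    cols = [sorted(col) for col in zip(*table)]  # first argument: sort each column
    return [list(row) for row in zip(*cols)]

def pi_rearrange(table, ordering):
    """Apply R_{pi_1} o ... o R_{pi_d}; the last entry of `ordering` acts first."""
    out = table
    for axis in reversed(ordering):
        out = rearrange_axis(out, axis)
    return out

def average_rearrangement(table, orderings):
    """Pointwise average of the pi-rearranged tables over the given orderings."""
    results = [pi_rearrange(table, pi) for pi in orderings]
    n, m = len(table), len(table[0])
    return [[sum(r[i][j] for r in results) / len(results) for j in range(m)]
            for i in range(n)]

def is_weakly_increasing(table, tol=1e-12):
    """Check monotonicity in each argument separately."""
    rows_ok = all(a <= b + tol for row in table for a, b in zip(row, row[1:]))
    cols_ok = all(a <= b + tol for col in zip(*table) for a, b in zip(col, col[1:]))
    return rows_ok and cols_ok

def lp_error(est, target, p):
    """Grid approximation of the L_p distance between two tables."""
    cells = [abs(a - b) ** p for re, rt in zip(est, target) for a, b in zip(re, rt)]
    return (sum(cells) / len(cells)) ** (1.0 / p)

# Hypothetical target (increasing in both arguments) and non-monotone estimate.
pts = [(i + 0.5) / 15 for i in range(15)]
f0 = [[x1 + x2 for x2 in pts] for x1 in pts]
fhat = [[x1 + x2 + 0.4 * math.sin(9 * x1) * math.cos(7 * x2) for x2 in pts]
        for x1 in pts]

orderings = list(permutations(range(2)))           # both orderings of the arguments
f_pi = {pi: pi_rearrange(fhat, pi) for pi in orderings}
f_avg = average_rearrangement(fhat, orderings)
```

The two orderings need not give the same table, which is the reason the averaged version `f_avg` is computed as well; its error can then be compared with the mean of the individual errors, in the spirit of (2.9).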

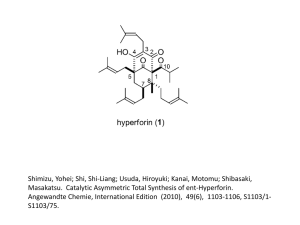

Figure 1. Graphical illustration for the proof of Proposition 1 (left panel) and comparison to isotonic regression (right panel). In the figure, $f_0$ represents the target function, $\hat f$ the original estimate, $\hat f^*$ the rearranged estimate, $\hat f^I$ the isotonized estimate, and $\hat f^{1/2}$ the average of the rearranged and isotonized estimates.

In the left panel $L(v, t) = a^p$, $L(v', t) = b^p$, $L(v', t') = c^p$, and $L(v, t') = d^p$.

2.5. Discussion and Comparisons. In what follows we informally explain why rearrangement provides the improvement property and compare rearrangement to isotonization.

Let us begin by noting that the proof of the improvement property can be first reduced to the case of simple functions or, equivalently, functions with a finite domain, and then to the case of "very simple" functions with a two-point domain. The improvement property for these very simple functions then follows from the submodularity property (2.3). In the left panel of Figure 1 we illustrate this property geometrically by plotting the original estimate $\hat f$, the rearranged estimate $\hat f^*$, and the true function $f_0$. In this example, the original estimate is decreasing and hence violates the monotonicity requirement. We see that the two-point rearrangement co-monotonizes $\hat f^*$ with $f_0$ and thus brings $\hat f^*$ closer to $f_0$. Also, we can view the rearrangement as a projection on the set of weakly increasing functions that have the same distribution as the original estimate $\hat f$.

Next in the right panel of Figure 1 we plot both the rearranged and isotonized estimates. The isotonized estimate $\hat f^I$ is a projection of the original estimate $\hat f$ on the set of weakly increasing functions (that only preserves the mean of the original estimate). We can compute the two values of the isotonized estimate $\hat f^I$ by assigning both of them the average of the two values of the original estimate $\hat f$, whenever the latter violate the monotonicity requirement, and leaving the original values unchanged, otherwise. We see from Figure 1 that in our example this produces a flat function $\hat f^I$. This computational procedure, known as "pool adjacent violators," naturally extends to domains with more than two points by simply applying the procedure iteratively to any pair of points at which the monotonicity requirement remains violated (Ayer, Brunk, Ewing, Reid, and Silverman 1955).

Using the computational definition of isotonization, one can show that, like rearrangement, isotonization also improves upon the original estimate, for any $p \in [1, \infty]$:

$$\left[\int_{\mathcal{X}} \big|\hat f^I(x) - f_0(x)\big|^p dx\right]^{1/p} \le \left[\int_{\mathcal{X}} \big|\hat f(x) - f_0(x)\big|^p dx\right]^{1/p}, \qquad (2.10)$$

see, e.g., Barlow, Bartholomew, Bremner, and Brunk (1972). Therefore, it follows that any function $\hat f^\lambda$ in the convex hull of the rearranged and the isotonized estimate both monotonizes and improves upon the original estimate. The first property is obvious and the second follows from homogeneity and subadditivity of norms, that is for any $p \in [1, \infty]$:

$$\left[\int_{\mathcal{X}} \big|\hat f^\lambda(x) - f_0(x)\big|^p dx\right]^{1/p} \le \lambda \left[\int_{\mathcal{X}} \big|\hat f^*(x) - f_0(x)\big|^p dx\right]^{1/p} + (1 - \lambda) \left[\int_{\mathcal{X}} \big|\hat f^I(x) - f_0(x)\big|^p dx\right]^{1/p} \le \left[\int_{\mathcal{X}} \big|\hat f(x) - f_0(x)\big|^p dx\right]^{1/p}, \qquad (2.11)$$

where $\hat f^\lambda(x) = \lambda \hat f^*(x) + (1 - \lambda) \hat f^I(x)$ for any $\lambda \in [0, 1]$. Before proceeding further, let us also note that, by an induction argument similar to that presented in the previous section, the improvement property listed above extends to the sequential multivariate isotonization and to its convex hull with the sequential multivariate rearrangement.
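The comparison between rearrangement, isotonization, and their convex combinations can be illustrated on a grid. The block below is a sketch under stated assumptions: `isotonize` is a standard stack-based form of pool adjacent violators for equally weighted points, and the target, the estimate, and all function names are hypothetical choices made for this example.

```python
import math

def isotonize(values):
    """Pool adjacent violators for equally weighted points on a grid."""
    blocks = []  # each block stores [sum of values, number of points]
    for v in values:
        blocks.append([v, 1])
        # Pool while the newest block mean falls below the previous block mean.
        while (len(blocks) > 1
               and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]):
            s, c = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += c
    out = []
    for s, c in blocks:
        out.extend([s / c] * c)  # every point in a block gets the block mean
    return out

def rearrange(values):
    """Increasing rearrangement on an equidistant grid: sort the values."""
    return sorted(values)

def lp_error(est, target, p):
    """Grid approximation of the L_p distance on [0, 1]."""
    n = len(est)
    return (sum(abs(a - b) ** p for a, b in zip(est, target)) / n) ** (1.0 / p)

def convex_combination(lam, a, b):
    """Pointwise lam * a + (1 - lam) * b."""
    return [lam * x + (1.0 - lam) * y for x, y in zip(a, b)]

# Hypothetical increasing target and non-monotone estimate.
grid = [(i + 0.5) / 100 for i in range(100)]
f0 = [math.sqrt(x) for x in grid]
fhat = [math.sqrt(x) + 0.15 * math.sin(18 * x) for x in grid]

f_star = rearrange(fhat)   # rearranged estimate
f_iso = isotonize(fhat)    # isotonized estimate
f_half = convex_combination(0.5, f_star, f_iso)  # average of the two
```

Which of `f_star`, `f_iso`, or `f_half` is closest to the target depends on the shape of the target, so the sketch makes no claim about a ranking among them; it only sets up the three monotone candidates for comparison against `fhat`.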

Thus, we see that a rather rich class of procedures (or operators) both monotonizes the original estimate and reduces the distance to the true target function. It is also important to note that there is no single best distance-reducing monotonizing procedure. Indeed, whether the rearranged estimate $\hat f^*$ approximates the target function better than the isotonized estimate $\hat f^I$ depends on how steep or flat the target function is. We illustrate this point via a simple example plotted in the right panel of Figure 1: Consider any increasing target function taking values in the shaded area between $\hat f^*$ and $\hat f^I$, and also the function $\hat f^{1/2}$, the average of the isotonized and the rearranged estimate, that passes through the middle of the shaded area. Suppose first that the target function is steeper than $\hat f^{1/2}$, then $\hat f^*$ has a smaller approximation error than $\hat f^I$. Now suppose instead that the target function is flatter than $\hat f^{1/2}$, then $\hat f^I$ has a smaller approximation error than $\hat f^*$. It is also clear that, if the target function is neither very steep nor very flat, $\hat f^{1/2}$ can outperform either $\hat f^*$ or $\hat f^I$. Thus, in practice we can choose rearrangement, isotonization, or some combination of the two, depending on our beliefs about how steep or flat the target function is in a particular application.

3. Improving Interval Estimates of Monotone Functions by Rearrangement

In this section we propose to directly apply the rearrangement, univariate and multivariate, to simultaneous confidence intervals for functions. We show that our proposal will necessarily improve the original intervals by decreasing their length and increasing their coverage.

Suppose that we are given an initial simultaneous confidence interval

$$[\ell, u] = \big([\ell(x), u(x)],\ x \in \mathcal{X}^d\big), \qquad (3.1)$$

where $\ell(x)$ and $u(x)$ are the lower and upper end-point functions such that $\ell(x) \le u(x)$ for all $x \in \mathcal{X}^d$.

We further suppose that the confidence interval $[\ell, u]$ has either the exact or the asymptotic confidence property for the estimand function $f$, namely, for a given $\alpha \in (0, 1)$,

$$\mathrm{Prob}_P\{f \in [\ell, u]\} = \mathrm{Prob}_P\{\ell(x) \le f(x) \le u(x), \text{ for all } x \in \mathcal{X}^d\} \ge 1 - \alpha, \qquad (3.2)$$

for all probability measures $P$ in some set $\mathcal{P}_n$ containing the true probability measure $P_0$. We assume that property (3.2) holds either in the finite sample sense, that is, for the given sample size $n$, or in the asymptotic sense, that is, for all but finitely many sample sizes $n$ (Lehmann and Romano 2005).

A common type of a confidence interval for functions is one where

$$\ell(x) = \hat f(x) - s(x)c \quad \text{and} \quad u(x) = \hat f(x) + s(x)c, \qquad (3.3)$$

where $\hat f(x)$ is a point estimate, $s(x)$ is the standard error of the point estimate, and $c$ is the critical value chosen so that the confidence interval $[\ell, u]$ in (3.1) covers the function $f$ with the specified probability, as stated in (3.2). There are many well-established methods for the construction of the critical value, ranging from analytical tube methods to the bootstrap, both for parametric and non-parametric estimators (see, e.g., Johansen and Johnstone (1990), and Hall (1993)). The Wasserman (2006) book provides an excellent overview of the existing methods for inference on functions. The problem with such confidence intervals, similar to the point estimates themselves, is that these intervals need not be monotonic. Indeed, typical inferential procedures do not guarantee that the end-point functions $\hat f(x) \pm s(x)c$ of the confidence interval are monotonic. This means that such a confidence interval contains non-monotone functions that can be excluded from it.

In some cases the confidence intervals mentioned above may not contain any monotone functions at all, for example, due to a small sample size or misspecification. We define the case of misspecification or incorrect centering of the confidence interval $[\ell, u]$ as any case where the estimand $f$ being covered by $[\ell, u]$ is not equal to the weakly increasing target function $f_0$, so that $f$ may not be monotone. Misspecification is a rather common occurrence both in parametric and non-parametric estimation. Indeed, correct centering of confidence intervals in parametric estimation requires perfect specification of functional forms and is generally hard to achieve. On the other hand, correct centering of confidence intervals in nonparametric estimation requires the so-called undersmoothing, a delicate requirement, which amounts to using a relatively large number of terms in series estimation and a relatively small bandwidth in kernel-based estimation. In real applications with many regressors, researchers tend to use oversmoothing rather than undersmoothing. In a recent development, Genovese and Wasserman (2008) provide, in our interpretation, some formal justification for oversmoothing: targeting inference on functions $f$, that represent various smoothed versions of $f_0$ and thus summarize features of $f_0$, may be desirable to make inference more robust, or, equivalently, to enlarge the class of data-generating processes $\mathcal{P}_n$ for which the confidence interval property (3.2) holds. In any case, regardless of the reasons for why confidence intervals may target $f$ instead of $f_0$, our procedures will work for inference on the monotonized version $f^*$ of $f$.

Our proposal for improved interval estimates is to rearrange the entire simultaneous confidence intervals into a monotonic interval given by

$$[\ell^*, u^*] = \big([\ell^*(x), u^*(x)],\ x \in \mathcal{X}^d\big), \qquad (3.4)$$

where the lower and upper end-point functions $\ell^*$ and $u^*$ are the increasing rearrangements of the original end-point functions $\ell$ and $u$. In the multivariate case, we use the symbols $\ell^*$ and $u^*$ to denote either $\pi$-multivariate rearrangements $\ell^*_\pi$ and $u^*_\pi$ or average multivariate rearrangements $\ell^*$ and $u^*$, whenever we do not need to specifically emphasize the dependence on $\pi$.

The following proposition describes the formal property of the rearranged confidence intervals.
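The construction in (3.4) can be sketched numerically in the univariate case. The snippet below is a minimal illustration rather than the authors' implementation: it assumes the end-point functions are evaluated on an equally spaced grid, so that the increasing rearrangement reduces to sorting, and the band used here (`center`, `half_width`) is a made-up example. The helper names `rearrange` and `lp_length` are hypothetical.

```python
import numpy as np

def rearrange(values):
    """Increasing rearrangement of a function sampled on an equally spaced grid."""
    return np.sort(np.asarray(values, dtype=float))

def lp_length(lower, upper, p):
    """Discrete analogue of the L^p length of a band on an equally spaced grid."""
    gap = np.abs(np.asarray(upper) - np.asarray(lower))
    if np.isinf(p):
        return gap.max()
    return np.mean(gap ** p) ** (1.0 / p)

# Hypothetical non-monotone band: a wiggly center with a varying positive half-width.
x = np.linspace(0.0, 1.0, 201)
center = x + 0.15 * np.sin(12.0 * x)
half_width = 0.10 + 0.05 * np.cos(9.0 * x)
lower, upper = center - half_width, center + half_width

# Rearranged band: sort each end-point function separately.
lower_star, upper_star = rearrange(lower), rearrange(upper)

for p in (1, 2, np.inf):
    print(p, lp_length(lower, upper, p), lp_length(lower_star, upper_star, p))
```

Sorting the two end-point functions separately keeps the band non-empty, because the k-th smallest value of the lower end-point cannot exceed the k-th smallest value of the upper end-point when the original band is non-empty everywhere.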

Proposition 3. Let $[\ell, u]$ in (3.1) be the original confidence interval that has the confidence interval property (3.2) for the estimand function $f: \mathcal{X}^d \to K$, and let the rearranged confidence interval $[\ell^*, u^*]$ be defined as in (3.4).

1. The rearranged confidence interval $[\ell^*, u^*]$ is weakly increasing and non-empty, in the sense that the end-point functions $\ell^*$ and $u^*$ are weakly increasing on $\mathcal{X}^d$ and satisfy $\ell^* \le u^*$ on $\mathcal{X}^d$. Moreover, the event that $[\ell, u]$ contains the estimand $f$ implies the event that $[\ell^*, u^*]$ contains the rearranged, hence monotonized, version $f^*$ of the estimand $f$:

$$f \in [\ell, u] \ \text{ implies } \ f^* \in [\ell^*, u^*]. \qquad (3.5)$$

In particular, under the correct specification, when $f$ equals a weakly increasing target function $f_0$, we have that $f = f^* = f_0$, so that

$$f_0 \in [\ell, u] \ \text{ implies } \ f_0 \in [\ell^*, u^*]. \qquad (3.6)$$

Therefore, $[\ell^*, u^*]$ covers $f^*$, which is equal to $f_0$ under the correct specification, with a probability that is greater or equal to the probability that $[\ell, u]$ covers $f$.

2. The rearranged confidence interval $[\ell^*, u^*]$ is weakly shorter than the initial confidence interval $[\ell, u]$ in the average $L^p$ length: for each $p \in [1, \infty]$,

$$\Big[\int_{\mathcal{X}^d} |\ell^*(x) - u^*(x)|^p \, dx\Big]^{1/p} \le \Big[\int_{\mathcal{X}^d} |\ell(x) - u(x)|^p \, dx\Big]^{1/p}. \qquad (3.7)$$

3. In the univariate case, suppose that $\ell(x)$ and $u(x)$ have the following properties: there exist subsets $\mathcal{X}_0 \subset \mathcal{X}$ and $\mathcal{X}_0' \subset \mathcal{X}$, each of measure greater than $\delta > 0$, such that for all $x' \in \mathcal{X}_0'$ and $x \in \mathcal{X}_0$, we have that $x' > x$, and either (i) $\ell(x) > \ell(x') + \epsilon$ and $u(x') > u(x) + \epsilon$, for some $\epsilon > 0$, or (ii) $\ell(x') > \ell(x) + \epsilon$ and $u(x) > u(x') + \epsilon$, for some $\epsilon > 0$. Then, for any $p \in (1, \infty)$,

$$\Big[\int_{\mathcal{X}} |\ell^*(x) - u^*(x)|^p \, dx\Big]^{1/p} \le \Big[\int_{\mathcal{X}} |\ell(x) - u(x)|^p \, dx - \eta_p \delta\Big]^{1/p}, \qquad (3.8)$$

where $\eta_p = \inf\{|v - t'|^p + |v' - t|^p - |v - t|^p - |v' - t'|^p\} > 0$, and the infimum is taken over all $v, v', t, t'$ in the set $K$ such that $v' \ge v + \epsilon$ and $t' \ge t + \epsilon$, or such that $v \ge v' + \epsilon$ and $t \ge t' + \epsilon$.

In the multivariate case with $d \ge 2$, for an ordering $\pi = (\pi_1, ..., \pi_k, ..., \pi_d)$ of integers $\{1, ..., d\}$ with $\pi_k = j$, let $\tilde g$ denote the partially rearranged function, $\tilde g(x) = R_{\pi_{k+1}} \circ ... \circ R_{\pi_d} \circ g(x)$, where for $k = d$ we set $\tilde g(x) = g(x)$. Suppose that $\tilde\ell(x)$ and $\tilde u(x)$ have the following properties: there exist subsets $\mathcal{X}_j \subset \mathcal{X}$ and $\mathcal{X}_j' \subset \mathcal{X}$, each of measure greater than $\delta > 0$, and a subset $\mathcal{X}_{-j} \subseteq \mathcal{X}^{d-1}$ of measure $\nu > 0$, such that for all $x = (x_j, x_{-j})$ and $x' = (x_j', x_{-j})$ with $x_j' \in \mathcal{X}_j'$, $x_j \in \mathcal{X}_j$, $x_{-j} \in \mathcal{X}_{-j}$, we have that (i) $x_j' > x_j$, and either (ii) $\tilde\ell(x) > \tilde\ell(x') + \epsilon$ and $\tilde u(x') > \tilde u(x) + \epsilon$, for some $\epsilon > 0$, or (iii) $\tilde\ell(x') > \tilde\ell(x) + \epsilon$ and $\tilde u(x) > \tilde u(x') + \epsilon$, for some $\epsilon > 0$. Then, for any $p \in (1, \infty)$,

$$\Big[\int_{\mathcal{X}^d} |\ell^*_\pi(x) - u^*_\pi(x)|^p \, dx\Big]^{1/p} \le \Big[\int_{\mathcal{X}^d} |\ell(x) - u(x)|^p \, dx - \eta_p \delta \nu\Big]^{1/p}, \qquad (3.9)$$

where $\eta_p$ is defined as above.

The proposition shows that the rearranged confidence intervals are weakly shorter than the original confidence intervals, and also qualifies when the rearranged confidence intervals are strictly shorter. In particular, the inequality (3.7) is necessarily strict for $p \in (1, \infty)$ in the univariate case, if there is a region of positive measure in $\mathcal{X}$ over which the end-point functions $x \mapsto \ell(x)$ and $x \mapsto u(x)$ are not comonotonic. This weak shortening result follows for univariate cases directly from the rearrangement inequality of Lorentz (1953), and the strong shortening follows from a simple strengthening of the Lorentz inequality, as argued in the proof.
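To make the constant $\eta_p$ in part 3 of Proposition 3 more concrete, it may help to work out the case $p = 2$. The calculation below is our own illustration, assuming the definition of $\eta_p$ as an infimum over pairs separated by at least $\epsilon$; it is not taken from the proof.

```latex
\begin{aligned}
(v - t')^2 + (v' - t)^2 - (v - t)^2 - (v' - t')^2
  &= -2vt' - 2v't + 2vt + 2v't' \\
  &= 2\,(v' - v)(t' - t) \\
  &\ge 2\epsilon^2
  \quad \text{whenever } v' \ge v + \epsilon,\ t' \ge t + \epsilon
  \ \text{ or } \ v \ge v' + \epsilon,\ t \ge t' + \epsilon .
\end{aligned}
```

Hence $\eta_2 \ge 2\epsilon^2 > 0$, with equality when the set $K$ is rich enough for both separation constraints to bind at once. The gain in (3.8) for $p = 2$ is therefore at least of order $\epsilon^2 \delta$ under this reading.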

The shortening results for the multivariate case follow by an induction argument. Moreover, the order-preservation property of the univariate and multivariate rearrangements, demonstrated in the proof, implies that the rearranged confidence interval $[\ell^*, u^*]$ has a weakly higher coverage than the original confidence interval $[\ell, u]$. We do not qualify strict improvements in coverage, but we demonstrate them through the examples in the next section.

Our idea of directly monotonizing the interval estimates also applies to other monotonization procedures. Indeed, the proof of Proposition 3 reveals that part 1 of Proposition 3 applies to any order-preserving monotonization operator $T$, such that

$$g(x) \le m(x) \ \text{ for all } x \in \mathcal{X}^d \ \text{ implies } \ T \circ g(x) \le T \circ m(x) \ \text{ for all } x \in \mathcal{X}^d. \qquad (3.10)$$

Furthermore, part 2 of Proposition 3 on the weak shortening of the confidence intervals applies to any distance-reducing operator $T$ such that

$$\Big[\int_{\mathcal{X}^d} |T \circ \ell(x) - T \circ u(x)|^p \, dx\Big]^{1/p} \le \Big[\int_{\mathcal{X}^d} |\ell(x) - u(x)|^p \, dx\Big]^{1/p}. \qquad (3.11)$$

Rearrangements, univariate and multivariate, are one instance of order-preserving and distance-reducing operators. Isotonization, univariate and multivariate, is another important instance (Robertson, Wright, and Dykstra 1988). Moreover, convex combinations of order-preserving and distance-reducing operators, such as the average of rearrangement and isotonization, are also order-preserving and distance-reducing. We demonstrate the inferential implications of these properties further in the computational experiments reported in Section 4.

4. Illustrations

In this section we provide an empirical application of biometric age-height charts. We show how the rearrangement monotonizes and improves various nonparametric point and interval estimates for functions, and then we quantify the improvement in a simulation example that mimics the empirical application. We carried out all the computations using the software R (R Development Core Team 2008), the quantile regression package quantreg (Koenker 2008), and the functional data analysis package fda (Ramsay, Wickham, and Graves 2007).

4.1.

An

Empirical Illustration with Age-Height Reference Charts. Since

duction by Quetelet in the 19th century, reference growth charts have become

to assess an individual's health status.

their intro-

common

tools

These charts describe the evolution of individual an-

thropometric measures, such as height, weight, and body mass index, across different ages.

See Cole (1988)

for

a classical work on the subject, and Wei, Pere, Koenker, and He (2006) for

a recent analysis from a quantile regression perspective, and additional references.

To

illustrate the properties of the

growth charts

ship with age.

for height. It

Our data

is

rearrangement method we consider the estimation of

clear that height should naturally follow an increasing relation-

consist of repeated cross sectional

measurements

of height

and age

from the 2003-2004 National Health and Nutrition Survey collected by the National Center

Health

Statistics.

Height

is

meeisured as standing height in centimeters, and age

is

recorded

for

in

15

months and expressed

in years.

between age and height, we

Our

twenty.

Y

Let

final

and

X

expectation of

where u

is

To avoid confounding

restrict the

sample

factors that

US-born white males of age two through

to

X

given

=

x,

the quantile index.

and Qy{u\X

=

£^[y|j>('

=

indices (5%, 50%.

The population functions

and 95%), and

(3)

denote the conditional

x]

x

is

x

i—>

i—»

fo{x)

is

Qy[u\X =

95%; and, in the third case, the target function {u,x)

i—»

(CQF)

for several

x

i—

x],

fo{u,x)

is

>

(CQP)

=

^[yiJf

for

u

{u,x)

H->

We

x]

Qy[u|X

and the

=

x]

CQF

x

height

in the

Qy\u\X =

X

(u,x)

for

x];

5%, 50%, and

SfyiX =

Qy{u\X =

quantile

=

natural monotonicity requirements for the target functions are the following:

i—>

x,

^-*

The

1-^

X=

given

of interests are (1) the conditional

the entire conditional quantile process

In the first case, the target function

second case, the target function x ^^ fo{x)

Y

x) denote the u-th quantile of

expectation function (CEF), (2) the conditional quantile functions

given age.

affect the relationship

sample consists of 533 subjects almost evenly distributed across these ages.

denote height and age, respectively. Let

Y

might

The CEF

x) should be increasing in age x, and the

should be increasing in both age x and the quantile index

x].

CQP

u.

estimate the target functions using non-parametric ordinary least squares or quantile

regression techniques and then rearrange the estimates to satisfy the monotonicity require-

ments.

We

consider (a) kernel, (b) locally linear,

(c)

regression splines,

and

(d) Fourier series

methods. For the kernel and locally linear methods, we choose a bandwidth of one year and

a box kernel. For the regression splines method, we use cubic B-splines with a knot sequence

{3,5,8,10,11.5,13,14.5,16,18}, following Wei, Pere, Koenker, and He (2006). For the Fourier

method, we employ eight trigonometric terms, with four

the estimation of the conditional quantile process,

we

sines

and four

cosines.

Finally, for

use a net of two hundred quantile indices

In the choice of the parameters for the different methods,

{0.005,0.010, ...,0.995}.

we

select

values that either have been used in the previous empirical work or give rise to specifications

with similar complexities

The

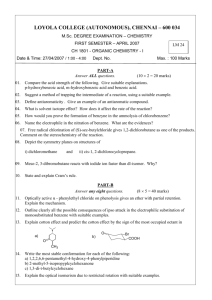

panels

A-D

for the different

of Figure 2

show the

methods.

original

~

.,

:''

'

and rearranged estimates

'

of the conditional

expectation function for the different methods. All the estimated curves have trouble capturing

the slowdown in the growth of height after age fifteen and yield non-monotonic curves for the

highest values of age.

The

Fourier series performs particularly poorly in approximating the

aperiodic age-height relationship and has

many

non-monotonicities.

The rearranged

estimates

correct the non-monotonicity of the original estimates, providing weakly increasing curves

that coincide with the original estimates in the parts where the latter are monotonic. Figure

3 displays similar but

more pronounced non-monotonicity patterns

conditional quantile functions. In

all

cases, the

for the

estimates of the

rearrangement again performs well

in delivering

curves that improve upon the original estimates and that satisfy the natural monotonicity

requirement.

We

quantify this improvement, in the next subsection.
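The estimate-then-rearrange recipe used for the kernel method can be sketched as follows. This is a minimal illustration under stated assumptions, not the computation reported in the paper (which was carried out in R on survey data): the data are simulated from a hypothetical increasing curve `true_curve`, the box kernel is read as a local average over ages within one bandwidth of the evaluation point, and the rearrangement on an equally spaced grid is implemented by sorting.

```python
import numpy as np

def box_kernel_regression(x_obs, y_obs, grid, bandwidth=1.0):
    """Nadaraya-Watson estimate with a box (uniform) kernel: a local average."""
    fitted = np.empty(len(grid))
    for i, x0 in enumerate(grid):
        in_window = np.abs(x_obs - x0) <= bandwidth
        fitted[i] = y_obs[in_window].mean()
    return fitted

def true_curve(a):
    """Hypothetical increasing age-height curve (an assumption, not the survey data)."""
    return 80.0 + 95.0 * (1.0 - np.exp(-0.12 * (a - 2.0)))

rng = np.random.default_rng(0)
age = rng.uniform(2.0, 20.0, size=533)                 # simulated ages, two through twenty
height = true_curve(age) + rng.normal(0.0, 8.0, size=age.size)

grid = np.linspace(2.0, 20.0, 181)                     # equally spaced evaluation grid
original = box_kernel_regression(age, height, grid)
rearranged = np.sort(original)                         # increasing rearrangement on the grid

truth = true_curve(grid)
for p in (1, 2):
    err_original = np.mean(np.abs(original - truth) ** p) ** (1.0 / p)
    err_rearranged = np.mean(np.abs(rearranged - truth) ** p) ** (1.0 / p)
    print(p, err_original, err_rearranged)
```

Because the target curve is increasing, the sorted estimate cannot be farther from it than the original estimate in these discrete norms, which is the grid analogue of the improvement property discussed in the text.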

Figure 2. Nonparametric estimates of the Conditional Expectation Function (CEF) of height given age and their increasing rearrangements. Nonparametric estimates are obtained using kernel regression (A), locally linear regression (B), cubic regression B-splines series (C), and Fourier series (D). Panels: A. CEF (Kernel, h = 1); B. CEF (Loc. Linear, h = 1); C. CEF (Regression Splines); D. CEF (Fourier). Horizontal axis: Age.

Figure 4 illustrates the multivariate rearrangement of the conditional quantile process

along both the age and the quantile index arguments.

inal estimate, its age

rearrangement,

its

We

quantile rearrangement, and

its

average multivariate

rearrangement (the average of the age-quantile and quantile-age rearrangements).

plot the corresponding contour surfaces.

(CQP)

plot, in three dimensions, the orig-

Here, for brevity,

we

We

also

focus on the Fourier series

estimates, which have the most severe non-monotonicity problems.

(Analogous figures

for

the other estimation methods considered can be found in the working paper version Cher-

nozhukov, Fernandez- Val, and Galichon (2006a)). Moreover, we do not show the multivariate

age-quantile and quantile-age rearrangements separately, because they are very similar to the

17

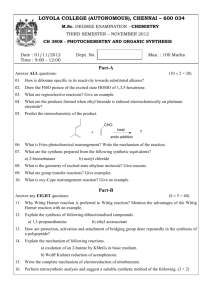

CQF: 5%, 50%, 95%

A.

(Kernel, h = 1)

B.

CQF: 5%, 50%, 95%

(Loc. Linear, h = 1)

Age

C.

CQF: 5%, 50%, 95% (Regression Splines)

D.

CQF: 5%, 50%, 95%

Ags

Figure

(Fourier)

Age

Nonparamotric estimates of the 5%, 50%, and 95% Conditional Quantile

3.

Functions (CQF) of height given age and their increasing rearrangements. Nonparametric

timates are obtained using

Icernel regression (A), locally linear regression (B),

B-splines series (C), and Fourier series (D).

average multivariate rearrangement.

is

non-monotone

index.

The average

,

,,

.

see from the contour plots that the estimated

CQP

age and non-monotone in the quantile index at extremal values of this

in

an estimate of the

We

es-

cubic regression

multivariate rearrangement fixes the non-monotonicity problem delivering

CQP

that

is

monotone

in

both the age and the quantile index arguments.

Furthermore, by the theoretical results of the paper, the multivariate rearranged estimates

upon the

necessarily improve

In Figures 5 and

vals.

6,

Figure 5 shows

we

original estimates.

.

/

illustrate the inference properties of the rearranged confidence inter-

90% uniform

confidence intervals for the conditional expectation function

and three conditional quantile functions

for the

5%, 50%, and 95% quantiles ba,sed on Fourier

.18

A.

C.

CQP

(Fourier)

B.

CQP: Age Rearrangement

D.

"T

0.0

E.

G.

CQP: QuantJIe Rearrangement

CQP: Average

Figure

4.

Multivariate

Rearrangement

F.

H.

CQP: Contour

CQP; Contour (Age Rearrangement)

1

1

1

1

02

04

05

08

CQP: Contour (Quantile Rearrangement)

CQP: Contour (Average

Multivariate Rearrangement)

Fourier series estimates of the Conditional Quantile Process

of height given age

and

T"

10

their increasing rearrangements. Panels

(CQP)

C and E plot

the

one dimensional increasing rearrangement along the age and quantile dimension,

respectively; panel

G

shows the average multivariate rearrangement.

19

A.

CEF

90%

90%

90%

CI Onginal

90Vo CI Rearrangod

C.

CQF; 6%

90%

90%

Figure

5.

90%

60%

B. CQF-.

(Fourier)

(Fourier)

E.

(Fourier)

CI Onginal

CI Rearranged

CQF: 96%

(Fourier)

CI Original

CI Rearranged

confidence intervals for the Conditional Expectation Function (CEF), and

5%, 50% and 95% Conditional Quantile Functions (CQF) of height given age and

their in-

creasing rearrangements. Nonparamotric estimates are based on Fourier series and confidence

bands are obtained by bootstrap with 200 repetitions.

series estimates.

We obtain the

initial

with 200 repetitions to estimate the

confidence intervals of the form (3.3) using the bootstrap

critical values (Hall 1993).

We

then obtain the rearranged

confidence intervals by rearranging the lower and upper end-point functions of the

fidence intervals, following the procedure defined in Section

In Figure

3.

6,

we

90% uniform

We

and

see from the figures that the rearranged confidence inter-

vals correct the non-monotonicity of the original confidence intervals

we

initial

confidence bands for the entire conditional quantile process based on

the Fourier series estimates.

length, as

con-

illustrate the

construction of the confidence intervals in the multidimensional case by plotting the

rearranged

initial

shall verify numerically in the next section.

and reduce

their average

'
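The band construction described above, an interval of the form (3.3) with a bootstrap critical value followed by rearrangement of the end-points, can be sketched as follows. This is only one plausible reading, offered as an assumption: it uses a pairs bootstrap and a sup-t critical value for a least squares Fourier series fit, on simulated data. The function names, the `period` tuning constant, and the data-generating curve are hypothetical and are not taken from the paper.

```python
import numpy as np

def fourier_design(x, n_pairs=4, period=18.0):
    """Constant plus n_pairs sine/cosine pairs (period is an assumed tuning choice)."""
    columns = [np.ones_like(x)]
    for k in range(1, n_pairs + 1):
        columns.append(np.sin(2.0 * np.pi * k * x / period))
        columns.append(np.cos(2.0 * np.pi * k * x / period))
    return np.column_stack(columns)

def ols_fit(design, y, design_grid):
    """Least squares fit evaluated on the grid."""
    coef = np.linalg.lstsq(design, y, rcond=None)[0]
    return design_grid @ coef

def bootstrap_band(x, y, grid, level=0.90, n_boot=200, seed=0):
    """Uniform band fit -/+ c * se, with c from the bootstrap sup-t statistic."""
    rng = np.random.default_rng(seed)
    Z, Z_grid = fourier_design(x), fourier_design(grid)
    fit = ols_fit(Z, y, Z_grid)
    boot = np.empty((n_boot, grid.size))
    for b in range(n_boot):
        idx = rng.integers(0, x.size, size=x.size)   # resample (x, y) pairs
        boot[b] = ols_fit(Z[idx], y[idx], Z_grid)
    se = boot.std(axis=0, ddof=1)                     # pointwise standard errors
    sup_t = np.max(np.abs(boot - fit) / se, axis=1)   # one sup-t draw per replication
    c = np.quantile(sup_t, level)                     # critical value
    return fit - c * se, fit + c * se

rng = np.random.default_rng(1)
age = rng.uniform(2.0, 20.0, size=300)
height = 80.0 + 95.0 * (1.0 - np.exp(-0.12 * (age - 2.0))) + rng.normal(0.0, 8.0, size=300)

grid = np.linspace(2.0, 20.0, 91)
lower, upper = bootstrap_band(age, height, grid)
lower_star, upper_star = np.sort(lower), np.sort(upper)   # rearranged end-points
```

The rearranged band is monotone and non-empty by construction, and its average length on the grid cannot exceed that of the original band.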


4.2. Monte-Carlo Illustration. The following Monte Carlo experiment quantifies the improvement in the point and interval estimation that rearrangement provides relative to the original estimates. We also compare rearrangement to isotonization and to convex combinations of rearrangement and isotonization. Our experiment closely matches the empirical application presented above. Specifically, we consider a design where the outcome variable $Y$ equals a location function plus a disturbance $\epsilon$, $Y = Z(X)'\beta + \epsilon$, and the disturbance is independent of the regressor $X$. The vector $Z(X)$ includes a constant and a piecewise linear transformation of the regressor $X$ with three changes of slope, namely $Z(X) = (1, X, 1\{X > 5\} \cdot (X - 5), 1\{X > 10\} \cdot (X - 10), 1\{X > 15\} \cdot (X - 15))$. This design implies the conditional expectation function

$$E[Y|X] = Z(X)'\beta, \qquad (4.1)$$

and the conditional quantile function

$$Q_Y(u|X) = Z(X)'\beta + Q_\epsilon(u). \qquad (4.2)$$

We select the parameters of the design to match the empirical example of growth charts in the previous subsection. Thus, we set the parameter $\beta$ equal to the ordinary least squares estimate obtained in the growth chart data, namely (71.25, 8.13, -2.72, 1.78, -6.43). This parameter value and the location specification (4.2) imply a model for the CEF and CQP that is monotone in age over the range of ages 2-20. To generate the values of the dependent variable, we draw disturbances from a normal distribution with the mean and variance equal to the mean and variance of the estimated residuals, $\hat\epsilon = Y - Z(X)'\hat\beta$, in the growth chart data. We fix the regressor $X$ in all of the replications to be the observed values of age in the data set. In each replication, we estimate the CEF and CQP using the nonparametric methods described in the previous section, along with a global polynomial and a flexible Fourier method. For the global polynomial method, we fit a quartic polynomial. For the flexible Fourier method, we use a quadratic polynomial and four trigonometric terms, with two sines and two cosines.
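The design in (4.1) can be written down directly from the quoted coefficient vector. The sketch below builds $Z(X)$, evaluates the implied CEF, and checks that it is increasing over ages 2-20. The disturbance standard deviation `sigma` and the zero mean are placeholders chosen for illustration, since the residual moments from the growth chart data are not reported in the text.

```python
import numpy as np

# Coefficient vector quoted in the text for the piecewise linear location function.
BETA = np.array([71.25, 8.13, -2.72, 1.78, -6.43])

def design(x):
    """Z(X) = (1, X, 1{X>5}(X-5), 1{X>10}(X-10), 1{X>15}(X-15))."""
    x = np.asarray(x, dtype=float)
    return np.column_stack([
        np.ones_like(x),
        x,
        np.clip(x - 5.0, 0.0, None),    # equals 1{X > 5} * (X - 5)
        np.clip(x - 10.0, 0.0, None),   # equals 1{X > 10} * (X - 10)
        np.clip(x - 15.0, 0.0, None),   # equals 1{X > 15} * (X - 15)
    ])

def cef(x):
    """Conditional expectation function E[Y|X] = Z(X)'beta."""
    return design(x) @ BETA

def simulate(x, sigma, rng):
    """One Monte Carlo draw of Y; sigma is a hypothetical disturbance scale."""
    return cef(x) + rng.normal(0.0, sigma, size=np.shape(x))

ages = np.linspace(2.0, 20.0, 181)
slopes = np.cumsum(BETA[1:])            # slope on each of the four age segments
y_draw = simulate(ages, sigma=8.0, rng=np.random.default_rng(0))
print(slopes)
```

All four segment slopes are positive, which is why the implied CEF, and the CQP obtained by adding the quantile of the disturbance, are monotone in age.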

In Table 1 we report the average $L^p$ errors (for $p$ = 1, 2, and $\infty$) for the original estimates of the CEF. We also report the relative efficiency of the rearranged estimates, measured as the ratio of the average error of the rearranged estimate to the average error of the original estimate; together with relative efficiencies for an alternative approach based on isotonization of the original estimates, and an approach consisting of averaging the rearranged and isotonized estimates. The two-step approach based on isotonization corresponds to the SI estimator in Mammen (1991), where the isotonization step is carried out using the pool-adjacent violator algorithm (PAVA). For regression splines, we also consider the one-step monotone regression splines of Ramsay (1998).
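For readers unfamiliar with the isotonization step, the following is a minimal sketch of the pool-adjacent-violators idea with equal weights, together with the average of the rearranged and isotonized curves. It is an illustrative implementation written for this note, not the code used for the reported experiments, and the input vector `estimate` is a made-up example.

```python
import numpy as np

def pava(values):
    """Pool-adjacent-violators: least squares increasing fit with equal weights."""
    blocks = []  # each block stores [sum of pooled values, number of pooled values]
    for v in np.asarray(values, dtype=float):
        blocks.append([v, 1])
        # Pool backwards while the previous block mean exceeds the last block mean.
        while len(blocks) > 1 and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]:
            total, count = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += count
    return np.concatenate([np.full(count, total / count) for total, count in blocks])

estimate = np.array([1.0, 3.0, 2.0, 4.0, 3.5, 5.0])   # hypothetical non-monotone estimate
isotonized = pava(estimate)                            # replaces violating runs by their means
rearranged = np.sort(estimate)                         # increasing rearrangement
averaged = 0.5 * (rearranged + isotonized)             # rearranged-isotonized average
print(isotonized, rearranged, averaged)
```

The isotonized curve flattens each violating stretch to its mean, whereas the rearranged curve keeps the original values and only reorders them; the average inherits monotonicity from both.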

Table 1. $L^p$ Estimation Errors of Original, Rearranged, Isotonized, Average Rearranged-Isotonized, and Monotone Estimates of the Conditional Expectation Function, for $p$ = 1, 2, and $\infty$. Univariate Case.

| Method | $p$ | $L^p_O$ | $L^p_R/L^p_O$ | $L^p_I/L^p_O$ | $L^p_{(R+I)/2}/L^p_O$ | $L^p_M/L^p_O$ |
|---|---|---|---|---|---|---|
| A. Kernel | 1 | 1.00 | 0.97 | 0.98 | 0.98 | |
| A. Kernel | 2 | 1.30 | 0.98 | 0.99 | 0.98 | |
| A. Kernel | $\infty$ | 4.54 | 0.99 | 1.00 | 1.00 | |
| B. Locally Linear | 1 | 0.79 | 0.96 | 0.97 | 0.96 | |
| B. Locally Linear | 2 | 0.99 | 0.96 | 0.97 | 0.97 | |
| B. Locally Linear | $\infty$ | 2.93 | 0.95 | 0.95 | 0.95 | |
| C. Regression Splines | 1 | 0.87 | 0.93 | 0.95 | 0.94 | 0.99 |
| C. Regression Splines | 2 | 1.09 | 0.93 | 0.95 | 0.94 | 0.99 |
| C. Regression Splines | $\infty$ | 3.68 | 0.85 | 0.88 | 0.86 | 0.84 |
| D. Quartic | 1 | 1.33 | 0.89 | 0.87 | 0.87 | |
| D. Quartic | 2 | 1.64 | 0.89 | 0.88 | 0.87 | |
| D. Quartic | $\infty$ | 4.38 | 0.86 | 0.86 | 0.86 | |
| E. Fourier | 1 | 6.57 | 0.49 | 0.59 | 0.40 | |
| E. Fourier | 2 | 10.8 | 0.35 | 0.45 | 0.30 | |
| E. Fourier | $\infty$ | 48.9 | 0.16 | 0.34 | 0.20 | |
| F. Flexible Fourier | 1 | 0.73 | 0.97 | 0.99 | 0.98 | |
| F. Flexible Fourier | 2 | 0.91 | 0.98 | 0.99 | 0.98 | |
| F. Flexible Fourier | $\infty$ | 2.40 | 0.98 | 0.98 | 0.98 | |

Notes: The table is based on 1,000 replications. The algorithm for the monotone regression splines stopped with an error message in 6 cases; these cases were discarded for all the estimators. $L^p_O$ is the $L^p$ error of the original estimate; $L^p_R$ is the $L^p$ error of the rearranged estimate; $L^p_I$ is the $L^p$ error of the isotonized estimate; $L^p_{(R+I)/2}$ is the $L^p$ error of the average of the rearranged and isotonized estimates; $L^p_M$ is the $L^p$ error of the monotone regression splines estimates.

We calculate the average $L^p$ error as the Monte Carlo average of

$$L^p := \Big[\int_{\mathcal{X}} |\hat f(x) - f_0(x)|^p \, dx\Big]^{1/p},$$

where the target function $f_0(x)$ is the CEF $E[Y|X = x]$, and the estimate $\hat f(x)$ denotes either the original nonparametric estimate of the CEF or its increasing transformation. For all the methods considered, we find that the rearranged curves estimate the true CEF more accurately than the original curves, providing a 1% to 84% reduction in the average error, depending on the method and the norm (i.e., values of $p$). In this example, there is no uniform winner between rearrangement and isotonic regression. The rearrangement works better than isotonization for Kernel, Local Polynomials, Splines, Fourier, and Flexible Fourier estimates, but it works worse than isotonization for global Quartic polynomials for some norms. Averaging the two procedures seems to be a good compromise for all the estimation methods considered. For regression splines, the performance of the rearrangement is comparable to the computationally more intensive one-step monotone regression splines procedure.

In Table 2 we report the average $L^p$ errors for the original estimates of the conditional quantile process. We also report the ratio of the average error of the multivariate rearranged estimate, with respect to the age and quantile index arguments, to the average error of the original estimate; together with the same ratios for isotonized estimates and average rearranged-isotonized estimates. The isotonized estimates are obtained by sequentially applying the PAVA to the two arguments, and then averaging for the two possible orderings age-quantile and quantile-age.
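The averaging over the two possible orderings can be illustrated for the rearrangement on a rectangular grid. The sketch below is a simplified assumption-laden example: the estimate is stored as a two-dimensional array indexed by quantile index and age, one-dimensional rearrangement along an argument is implemented by sorting along the corresponding axis, and the target surface and noise are invented for the demonstration.

```python
import numpy as np

def rearrange_along(values, axis):
    """One-dimensional increasing rearrangement applied along one argument."""
    return np.sort(values, axis=axis)

def average_multivariate_rearrangement(values):
    """Average of the two sequential rearrangements of a 2-D array."""
    first_ordering = rearrange_along(rearrange_along(values, 0), 1)
    second_ordering = rearrange_along(rearrange_along(values, 1), 0)
    return 0.5 * (first_ordering + second_ordering)

# Hypothetical target surface, increasing in both arguments, plus noise.
u_grid = np.linspace(0.0, 1.0, 20)       # quantile index
x_grid = np.linspace(0.0, 1.0, 30)       # age, rescaled to the unit interval
target = np.add.outer(u_grid, x_grid)
rng = np.random.default_rng(0)
estimate = target + 0.3 * rng.normal(size=target.shape)

averaged = average_multivariate_rearrangement(estimate)

error_original = np.sqrt(np.mean((estimate - target) ** 2))
error_averaged = np.sqrt(np.mean((averaged - target) ** 2))
print(error_original, error_averaged)
```

Sorting along one axis preserves monotonicity already achieved along the other axis, so each sequential rearrangement, and hence their average, is increasing in both arguments.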

Table 2. $L^p$ Estimation Errors of Original, Rearranged, Isotonized, and Average Rearranged-Isotonized Estimates of the Conditional Quantile Process, for $p$ = 1, 2, and $\infty$. Multivariate Case.

| Method | $p$ | $L^p_O$ | $L^p_R/L^p_O$ | $L^p_I/L^p_O$ | $L^p_{(R+I)/2}/L^p_O$ |
|---|---|---|---|---|---|
| A. Kernel | 1 | 1.49 | 0.95 | 0.97 | 0.96 |
| A. Kernel | 2 | 1.99 | 0.96 | 0.98 | 0.97 |
| A. Kernel | $\infty$ | 13.7 | 0.92 | 0.97 | 0.94 |
| B. Locally Linear | 1 | 1.21 | 0.91 | 0.93 | 0.92 |
| B. Locally Linear | 2 | 1.61 | 0.91 | 0.93 | 0.92 |
| B. Locally Linear | $\infty$ | 12.3 | 0.84 | 0.87 | 0.85 |
| C. Splines | 1 | 1.33 | 0.90 | 0.93 | 0.91 |
| C. Splines | 2 | 1.78 | 0.90 | 0.92 | 0.90 |
| C. Splines | $\infty$ | 16.9 | 0.72 | 0.76 | 0.73 |
| D. Quartic | 1 | 1.49 | 0.90 | 0.89 | 0.89 |
| D. Quartic | 2 | 1.87 | 0.90 | 0.89 | 0.89 |
| D. Quartic | $\infty$ | 12.6 | 0.68 | 0.69 | 0.68 |
| E. Fourier | 1 | 6.72 | 0.62 | 0.77 | 0.64 |
| E. Fourier | 2 | 13.7 | 0.39 | 0.58 | 0.44 |
| E. Fourier | $\infty$ | 84.9 | 0.26 | 0.47 | 0.36 |
| F. Flexible Fourier | 1 | 1.05 | 0.96 | 0.97 | 0.96 |
| F. Flexible Fourier | 2 | 1.38 | 0.95 | 0.97 | 0.96 |
| F. Flexible Fourier | $\infty$ | 10.9 | 0.84 | 0.86 | 0.85 |

Notes: The table is based on 1,000 replications. $L^p_O$ is the $L^p$ error of the original estimate; $L^p_R$ is the $L^p$ error of the average multivariate rearranged estimate; $L^p_I$ is the $L^p$ error of the average multivariate isotonized estimate; $L^p_{(R+I)/2}$ is the $L^p$ error of the mean of the average multivariate rearranged and isotonized estimates.

The average $L^p$ error is the Monte Carlo average of

$$L^p := \Big[\int_{\mathcal{U}} \int_{\mathcal{X}} |\hat f(u, x) - f_0(u, x)|^p \, dx \, du\Big]^{1/p},$$

where the target function $f_0(u, x)$ is the conditional quantile process $Q_Y(u|X = x)$, and the estimate $\hat f(u, x)$ denotes either the original nonparametric estimate of the conditional quantile process or its monotone transformation. We present the results for the average multivariate rearrangement only. The age-quantile and quantile-age multivariate rearrangements give errors that are very similar to their average multivariate rearrangement, and we therefore do not report them separately. For all the methods considered, we find that the multivariate rearranged curves estimate the true CQP more accurately than the original curves, providing a 4% to 74% reduction in the approximation error, depending on the method and the norm. As in the univariate case, there is no uniform winner between rearrangement and isotonic regression, and their average estimate gives a good balance.

Table 3 reports Monte Carlo coverage frequencies and integrated lengths for the original and monotonized 90% confidence bands for the CEF. For a measure of length, we used the integrated $L^p$ length, as defined in Proposition 3, with $p$ = 1, 2, and $\infty$. We constructed the original confidence intervals of the form in equations (3.3) by obtaining the pointwise standard errors of the original estimates using the bootstrap with 200 repetitions, and we calibrated the critical value so that the original confidence bands cover the entire true function with the exact frequency of 90%. We constructed monotonized confidence intervals by applying rearrangement, isotonization, and a rearrangement-isotonization average to the end-point functions of the original confidence intervals, as suggested in Section 3. Here, we find that in all cases the rearrangement and other monotonization methods increase the coverage of the confidence intervals while reducing their length. In particular, we see that monotonization increases coverage especially for the local estimation methods, whereas it reduces length most noticeably for the global estimation methods. For the most problematic Fourier estimates, there are both important increases in coverage and reductions in length.

Appendix A. Proofs of Propositions

A.1. Proof of Proposition 1. The first part establishes the weak inequality, following in part the strategy in Lorentz's (1953) proof. The proof focuses directly on obtaining the result stated in the proposition. The second part establishes the strong inequality.

Proof of Part 1. We assume at first that the functions $\hat f(\cdot)$ and $f_0(\cdot)$ are simple functions, constant on intervals $((s - 1)/r, s/r]$, $s = 1, ..., r$. For any simple $f(\cdot)$ with $r$ steps, let $f$ denote the $r$-vector with the $s$-th element, denoted $f_s$, equal to the value of $f(\cdot)$ on the $s$-th interval. Let us define the sorting operator $S(f)$ as follows: Let $\ell$ be an integer in $1, ..., r$ such that $f_\ell > f_m$ for some $m > \ell$. If $\ell$ does not exist, set $S(f) = f$. If $\ell$ exists, set $S(f)$ to be an $r$-vector with the $\ell$-th element equal to $f_m$, the $m$-th element equal to $f_\ell$, and all other elements equal to the corresponding elements of $f$. For any submodular function $L: \mathbb{R}^2 \to \mathbb{R}_+$, by $f_\ell \ge f_m$, $f_{0m} \ge f_{0\ell}$ and the definition of the submodularity,

$$L(f_m, f_{0\ell}) + L(f_\ell, f_{0m}) \le L(f_\ell, f_{0\ell}) + L(f_m, f_{0m}).$$

Therefore, we conclude that

$$\int_{\mathcal{X}} L(S(\hat f)(x), f_0(x)) \, dx \le \int_{\mathcal{X}} L(\hat f(x), f_0(x)) \, dx,$$

using that we integrate simple functions.

Applying the sorting operation a sufficient finite number of times to $\hat f$, we obtain a completely sorted, that is, rearranged, vector $\hat f^*$. Thus, we can express $\hat f^*$ as a finite composition $\hat f^* = S \circ ... \circ S(\hat f)$. By repeating the argument above, each composition weakly reduces the

Table 3. Coverage Probabilities and Integrated Lengths of Original, Rearranged,