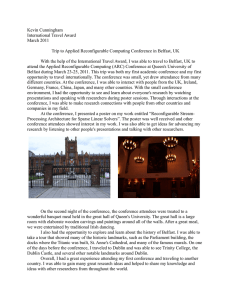

Obtaining Performance and Programmability Using Reconfigurable Hardware for Media Processing
