Software Technology Management, by Michael A. Cusumano

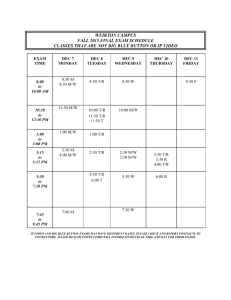

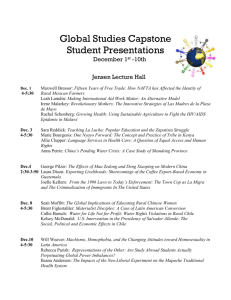

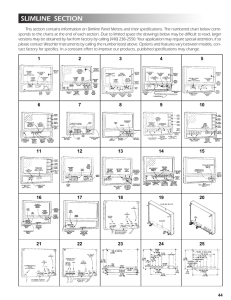
