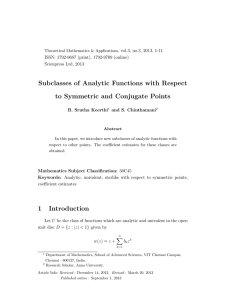

Convergence of the Robbins-Monro Algorithm in Infinite-Dimensional Hilbert Spaces

advertisement

Convergence of the Robbins-Monro Algorithm in Infinite-Dimensional Hilbert Spaces

D.M. Watkins (email: daniel.m.watkins@drexel.edu), G. Simpson (email: simpson@math.drexel.edu)

Department of Mathematics, Drexel University, Philadelphia, PA, USA

Supported by DOE grant DE-SC0012733.

I NTRODUCTION

R OBBINS -M ONRO F ORMULATION (C ONTINUED )

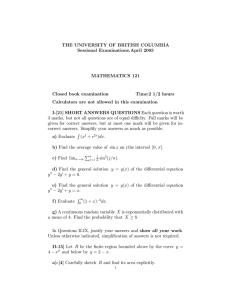

Probability measures on infinite-dimensional Hilbert spaces arises

in applications such as the Bayesian approach to inverse problems

[4]. Obtaining information from such measures is frequently computationally expensive; to ameliorate matters, we wish to find a Gaussian

proposal distribution for MCMC which is as close as possible to the

target distribution, with respect to the KL divergence (image reprinted

from [2]).

Preconditioning by C we obtain f (m) = CJ 0 (m), which will vanish

whenever J 0 vanishes. Finally, with vn ∼ ν0 , we can let

0.12

(3)

0

Yn = CΦ (vn + mn ) + (mn − m0 )

P∞

so that EYn = f (mn ). Let an ≥ 0 be such that

n=1 an = ∞,

P

∞

2

a

n=1 n < ∞. We then define a Robbins-Monro process to find the

zero:

mn+1 = mn − an Yn

(4)

The starting point is arbitrary; we choose to start at m0 .

µε

ν

µ0

0.10

P ROOF OF T HEOREM 1 (C ONTINUED )

µε Samples

ν0

≤ K1 E kvn k < K2 < ∞

If λ is the smallest eigenvalue of C, then λkuk ≤ kuk1 . Using this and

the Cauchy-Schwarz inequality, we find

√

2

2

2

k Bsn k + ksn k1 ≤ K3 kmn − m0 k1 + K4

≤ K3 Xn − K3 log Zµ + K4

P [X ∈ ∆x]

T HEOREM 1

0.06

βn = a2n K3 /2

If Φ : X → R is defined by

0.04

−0.2

0.0

x

0.2

0.4

Let H be a real separable Hilbert space and µ a measure on H, specified with respect to a Gaussian measure µ0 ∼ N (m0 , C):

dµ

1

=

exp (−Φ(u)) .

dµ0

Zµ

(1)

Φ : X → R is continuous on some Banach space X of full measure

with respect to µ0 , exp (−Φ(u)) is integrable with respect to µ0 .

Goal: Find a measure ν ∼ N (m, C) such that the KL divergence

DKL (ν||µ) is minimized.

Since ν and µ0 have the same covariance operators, the requirement

that ν ∼ µ0 will hold if m − m0 is in the Cameron-Martin space H 1 . H 1

has inner product hf, gi1 = hC −1/2 f, C −1/2 gi, where h·, ·i is the inner

product of H and norms k · k and k · k1 , respectively.

The measure ν is parameterized by m. Denoting ν0 = N (0, C), v ∼ ν0 ,

with some work [2] it can be shown that

1

2

DKL (ν||µ) = E [Φ(v + m)] + km − m0 k1 + log Zµ

2

(2)

We set J(m) = DKL (ν(m)||µ). We seek to minimize J, so we should

have that the variational derivative J 0 vanishes at the minimizer. In this

case, J 0 (m) = E ν0 [Φ0 (v + m)] + C −1 (m − m0 ).

ζn = an k(CB + I)mn −

(5)

where B is a self-adjoint, positive, bounded operator, then the RobbinsMonro process (4) converges to a minimizer.

lim k(CB + I)mnk −

k→∞

Let (Ω, F, P ) be a probability space and F1 ⊆ F2 ⊆ · · · be a sequence

of sub σ-fields of F . Let βn , ξn and ζ, n P

= 1, 2, . . . be nonnegative Fn

∞

measurable random variables such that n=1 βn < ∞ and

P

∞

n=1 ξn < ∞. If

(6)

E(Xn+1 |Fn ) ≤ (1 + βn )Xn + ξn − ζn

P∞

n=1 ζn

− an {hC

2

m0 k1

=0

so in particular mnk → (CB + I)−1 m0 = m. Since Xn → X a.s., we

have that mn → m almost surely. Since

ν0

f (m) = E (CB(v + m)) + m − m0 = 0

(8)

m is a critical point, and since J = (CB + I) is positive definite at m,

m is indeed a minimizer.

00

<

P ROOF OF T HEOREM 1

√

Define Xn = J(mn ). With Φ(u) = 21 k Buk2 , we have that Yn = (CB +

I)mn − m0 + CBvn . Expanding,

√

Xn+1 = Xn + a2n {k BYn k2 + kYn k21 }/2

(7)

−1

2

m0 k1

By

Theorem

2,

X

converges

a.s.

to

a

random

variable

X,

and

n

P∞

n=1 ζn < ∞. By choice of the sequence {an }, this implies that there

is a subsequence such that

T HEOREM 2 (R OBBINS -S IEGMUND )

then Xn converges almost surely to a random variable, and

∞. See [3], [1].

R OBBINS -M ONRO F ORMULATION

ν0

ξn = a2n (K2 − K3 log Zµ )

√

1

2

Φ(u) = Bu

2

0.02

−0.4

2

We then gather together these terms and set

0.08

0.00

The operators C and B are bounded, and C is trace-class, so

√

√

√

E ν0 {k Btn k2 + ktn k21 } = E ν0 {k BCBvn k2 + k CBvn k2 }

Yn , mn − m0 i + hBYn , mn i}

Let Fn = σ(X0 , X1 , . . . , Xn ). We write sn = (CB + I)mn − m0 and tn =

CBvn for convenience, so that Yn = sn + tn . Then taking conditional

expectation,

√

E(Xn+1 |Fn ) = Xn + a2n {k Bsn k2 + ksn k21 }/2

√

2 ν0

2

2

2

+ an E {k Btn k + ktn k1 }/2 − an ksn k1

R EFERENCES

References

[1] D. Anbar. An application of a theorem of Robbins and Siegmund.

The Annals of Statistics, 4(5):1018–1021, 1976.

[2] F.J. Pinski, G. Simpson, A.M. Stuart, and H. Weber. Algorithms for

Kullback-Leibler approximation of probability measures in infinite

dimensions. arXiv:http://arxiv.org/abs/1408.1920, 2014.

[3] H. Robbins and D. Siegmund. A convergence theorem for nonnegative almost supermartingales and some applications. Optimizing

Methods in Statistics, pages 233–257, 1971.

[4] A.M. Stuart. Inverse problems: A Bayesian perspective. Acta Numerica, 19:451–559, 2010.