Raspberry HadooPi: A Low-Cost, Hands-On Laboratory in Big Data and Analytics

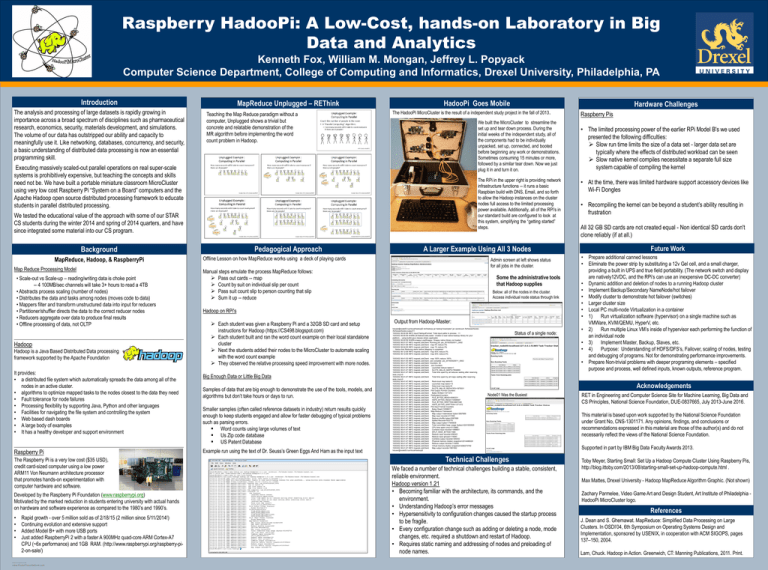

Kenneth Fox, William M. Mongan, Jeffrey L. Popyack
Computer Science Department, College of Computing and Informatics, Drexel University, Philadelphia, PA

Introduction
The analysis and processing of large datasets is rapidly growing in importance across a broad spectrum of disciplines such as pharmaceutical research, economics, security, materials development, and simulation. The volume of our data has outstripped our ability and capacity to use it meaningfully. Like networking, databases, concurrency, and security, a basic understanding of distributed data processing is now an essential programming skill.

MapReduce Unplugged – REThink
Teaching the MapReduce paradigm without a computer, the Unplugged lesson gives a trivial but concrete and relatable demonstration of the MapReduce (MR) algorithm before students implement the word count problem in Hadoop.

HadooPi Goes Mobile
The HadooPi MicroCluster is the result of an independent study project in the fall of 2013. We built the MicroCluster to streamline setup and teardown. During the initial weeks of the independent study, all of the components had to be individually unpacked, set up, connected, and booted before any work or demonstration could begin. This sometimes took 15 minutes or more, followed by a similar teardown. Now we just plug it in and turn it on.

Executing massively scaled-out parallel operations on real super-scale systems is prohibitively expensive, but teaching the concepts and skills need not be. We have built a portable, miniature classroom MicroCluster using very low-cost Raspberry Pi “System on a Board” computers and the Apache Hadoop open-source distributed processing framework to teach students parallel distributed processing.
The RPi in the upper right provides network infrastructure functions: it runs a basic Raspbian build with DNS, email, and similar services, so that the Hadoop instances on the cluster nodes have full access to the limited processing power available. Additionally, all of the RPis in our standard build are configured to point to this system, simplifying the “getting started” steps.

We tested the educational value of the approach with some of our STAR CS students during the winter and spring 2014 quarters, and have since integrated some of the material into our CS program.

Pedagogical Approach

Background: MapReduce, Hadoop, & Raspberry Pi

MapReduce Processing Model
• Scale-out vs. scale-up: reading and writing data is the choke point. Even four 100 MB/s channels need roughly 3 hours to read 4 TB (4,000,000 MB ÷ 400 MB/s = 10,000 s, about 2.8 hours).
• Abstracts process scaling (number of nodes)
• Distributes the data and tasks among nodes (moves code to data)
• Mappers filter and transform unstructured data into input for reducers
• Partitioner/shuffler directs the data to the correct reducer nodes
• Reducers aggregate over the data to produce final results
• Offline (batch) processing of data, not OLTP (online transaction processing)
____________________________________________________________________
Hadoop
Hadoop is a Java-based distributed data processing framework supported by the Apache Software Foundation. It provides:
• a distributed file system which automatically spreads the data among all of the nodes in an active cluster.
• algorithms that assign map tasks to the nodes closest to the data they need
• fault tolerance for node failures
• processing flexibility by supporting Java, Python, and other languages
• facilities for navigating the file system and controlling the system
• web-based dashboards
• a large body of examples
• a healthy developer and support community
____________________________________________________________________
Raspberry Pi
The Raspberry Pi is a very low-cost ($35 USD), credit-card-sized computer using a low-power ARM11 von Neumann architecture processor that promotes hands-on experimentation with computer hardware and software. It was developed by the Raspberry Pi Foundation (www.raspberrypi.org), motivated by the marked decline, compared to the 1980s and 1990s, in students entering university with actual hands-on hardware and software experience.
• Rapid growth: over 5 million sold as of 2/18/15 (2 million since 5/11/2014!)
• Continuing evolution and extensive support
• Added Model B+ with more USB ports
• Just added the Raspberry Pi 2 with a faster 900 MHz quad-core ARM Cortex-A7 CPU (~6x performance) and 1 GB RAM (http://www.raspberrypi.org/raspberry-pi2-on-sale/)

A Larger Example Using All 3 Nodes

Some of the administrative tools that Hadoop supplies:
Admin screen at left shows status for all jobs in the cluster.
Below: all of the nodes in the cluster.

Offline lesson on how MapReduce works using a deck of playing cards. Manual steps emulate the process MapReduce follows:
• Pass out the cards (map).
• Count the cards by suit, writing each suit's count on its own slip.
• Pass each suit-count slip to the person counting that suit.
• Sum it up (reduce).
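The card lesson lines up with the processing model described earlier: each hand is an input split, each person counting a hand is a mapper, each slip is a (suit, count) record, handing a slip to the person responsible for that suit is the partition/shuffle step, and summing the slips is the reduce. The following is a minimal Python sketch of that correspondence, not the lesson's actual materials. It assumes round-robin dealing and assigns suits to counters by suit index modulo the number of counters; all function names are hypothetical and none of them are Hadoop APIs.

```python
import random
from collections import defaultdict

SUITS = ["clubs", "diamonds", "hearts", "spades"]
RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]


def deal(deck, num_people):
    """Pass out the cards round-robin: each hand is one person's input split."""
    return [deck[i::num_people] for i in range(num_people)]


def map_hand(hand):
    """Map: count one hand by suit, producing one (suit, count) slip per suit held."""
    counts = defaultdict(int)
    for _rank, suit in hand:
        counts[suit] += 1
    return list(counts.items())


def partition(suit, num_counters):
    """Partitioner (hypothetical rule): suit index modulo the number of counters."""
    return SUITS.index(suit) % num_counters


def reduce_slips(slips):
    """Reduce: add up every slip a counter received, per suit."""
    totals = defaultdict(int)
    for suit, count in slips:
        totals[suit] += count
    return dict(totals)


def run_lesson(num_people=6, num_counters=2, seed=0):
    """Simulate the whole lesson and return the total number of cards per suit."""
    deck = [(rank, suit) for suit in SUITS for rank in RANKS]
    random.Random(seed).shuffle(deck)  # card shuffling, not the MapReduce shuffle

    # Shuffle phase: each slip goes to the inbox of the counter that owns its suit.
    inboxes = [[] for _ in range(num_counters)]
    for hand in deal(deck, num_people):
        for suit, count in map_hand(hand):
            inboxes[partition(suit, num_counters)].append((suit, count))

    # Each counter reduces only its own inbox. A suit never spans two counters,
    # so the partial results can simply be merged.
    totals = {}
    for inbox in inboxes:
        totals.update(reduce_slips(inbox))
    return totals


if __name__ == "__main__":
    print(run_lesson())
```

Whatever the seed, number of people, or number of counters, every suit should total 13. That gives students a known answer to check against. Changing `num_counters` only changes who sums which suits, which is the same freedom a Hadoop partitioner has.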
Access individual node status through each node's link.
Status of a single node.

Hadoop on RPis
Each student was given a Raspberry Pi, a 32 GB SD card, and setup instructions for Hadoop (https://CS498.blogspot.com). Each student built and ran the word count example on their own local standalone cluster. Next, the students added their nodes to the MicroCluster to scale out the word count example automatically, and observed the relative improvement in processing speed as nodes were added.

Hardware Challenges
Raspberry Pis
• The limited processing power of the earlier RPi Model Bs we used presented the following difficulties:
  - Slow run times limit the size of a data set, yet larger data sets are typically where the effects of a distributed workload can be seen.
  - Slow native kernel compiles necessitate a separate full-size system capable of compiling the kernel.
• At the time, there was limited hardware support for accessory devices such as Wi-Fi dongles.
• Recompiling the kernel can be beyond a student's ability, resulting in frustration.
• Not all 32 GB SD cards are created equal: non-identical SD cards don't clone reliably (if at all).

Big Enough Data, or Little Big Data
We use samples of data that are big enough to demonstrate the tools, models, and algorithms but don't take hours or days to run. Smaller samples (often called reference datasets in industry) return results quickly enough to keep students engaged and allow faster debugging of typical problems such as parsing errors. Examples:
• Word counts over large volumes of text
• US ZIP code database
• US patent database

Example run using the text of Dr. Seuss's Green Eggs and Ham as the input text.

Output from Hadoop-Master:
hduser@node00:/usr/local/hadoop$ bin/hadoop jar hadoop*examples*.jar wordcount /fs/hduser/books /fs/hduser/books-output11
15/03/02 06:00:58 INFO input.FileInputFormat: Total input paths to process : 11
15/03/02 06:00:59 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
15/03/02 06:00:59 WARN snappy.LoadSnappy: Snappy native library not loaded
15/03/02 06:01:07 INFO mapred.JobClient: Running job: job_201503020011_0002
15/03/02 06:01:08 INFO mapred.JobClient: map 0% reduce 0%
15/03/02 06:04:05 INFO mapred.JobClient: map 1% reduce 0%
…
(Notice that the reduce phase starts before mapping is complete: reduce progress includes the copy/shuffle phase, in which reducers fetch finished map output early, although the reduce function itself runs only after all map tasks finish.)
15/03/02 06:12:08 INFO mapred.JobClient: map 42% reduce 0%
15/03/02 06:12:09 INFO mapred.JobClient: map 42% reduce 6%
…
15/03/02 06:41:03 INFO mapred.JobClient: map 100% reduce 100%
15/03/02 06:41:45 INFO mapred.JobClient: Job complete: job_201503020011_0002
15/03/02 06:41:47 INFO mapred.JobClient: Counters: 30
15/03/02 06:41:47 INFO mapred.JobClient: Job Counters
15/03/02 06:41:47 INFO mapred.JobClient: Launched reduce tasks=1
15/03/02 06:41:47 INFO mapred.JobClient: SLOTS_MILLIS_MAPS=7804889
15/03/02 06:41:47 INFO mapred.JobClient: Total time spent by all reduces waiting after reserving slots (ms)=0
15/03/02 06:41:47 INFO mapred.JobClient: Total time spent by all maps waiting after reserving slots (ms)=0
15/03/02 06:41:47 INFO mapred.JobClient: Rack-local map tasks=5
15/03/02 06:41:47 INFO mapred.JobClient: Launched map tasks=13
15/03/02 06:41:47 INFO mapred.JobClient: Data-local map tasks=8
15/03/02 06:41:47 INFO mapred.JobClient: SLOTS_MILLIS_REDUCES=1875031
15/03/02 06:41:47 INFO mapred.JobClient: File Output Format Counters
15/03/02 06:41:47 INFO mapred.JobClient: Bytes Written=1371372
15/03/02 06:41:47 INFO mapred.JobClient: FileSystemCounters
15/03/02 06:41:47 INFO mapred.JobClient: FILE_BYTES_READ=5083231
15/03/02 06:41:47 INFO mapred.JobClient: HDFS_BYTES_READ=10599597
15/03/02 06:41:47 INFO mapred.JobClient: FILE_BYTES_WRITTEN=8807248
15/03/02 06:41:47 INFO mapred.JobClient: HDFS_BYTES_WRITTEN=1371372
15/03/02 06:41:47 INFO mapred.JobClient: File Input Format Counters
15/03/02 06:41:47 INFO mapred.JobClient: Bytes Read=10598017
15/03/02 06:41:47 INFO mapred.JobClient: Map-Reduce Framework
15/03/02 06:41:47 INFO mapred.JobClient: Map output materialized bytes=3067689
15/03/02 06:41:47 INFO mapred.JobClient: Map input records=217458
15/03/02 06:41:47 INFO mapred.JobClient: Reduce shuffle bytes=3067689
15/03/02 06:41:47 INFO mapred.JobClient: Spilled Records=553775
15/03/02 06:41:47 INFO mapred.JobClient: Map output bytes=17945639
15/03/02 06:41:47 INFO mapred.JobClient: Total committed heap usage (bytes)=2241003520
15/03/02 06:41:47 INFO mapred.JobClient: CPU time spent (ms)=3115860
15/03/02 06:41:47 INFO mapred.JobClient: Combine input records=1998651
15/03/02 06:41:47 INFO mapred.JobClient: SPLIT_RAW_BYTES=1580
15/03/02 06:41:47 INFO mapred.JobClient: Reduce input records=208343
15/03/02 06:41:47 INFO mapred.JobClient: Reduce input groups=119283
15/03/02 06:41:47 INFO mapred.JobClient: Combine output records=345432
15/03/02 06:41:47 INFO mapred.JobClient: Physical memory (bytes) snapshot=2014486528
15/03/02 06:41:47 INFO mapred.JobClient: Reduce output records=119283
15/03/02 06:41:47 INFO mapred.JobClient: Virtual memory (bytes) snapshot=4282273792
15/03/02 06:41:47 INFO mapred.JobClient: Map output records=1861562
hduser@node00:/usr/local/hadoop$
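The example run above uses the stock Java wordcount program from Hadoop's examples jar. Hadoop can also run mappers and reducers written in Python, through Hadoop Streaming. The single-process Python sketch below walks through the same phases so the logic can be checked on a laptop before anything is submitted to the cluster. It does not use the Hadoop API. The function names and the two input splits (lines from Green Eggs and Ham) are hypothetical, and tokenization is a plain case-sensitive whitespace split, which approximates the Java example but may not match it exactly. The combiner step corresponds to the "Combine input records" and "Combine output records" counters in the log, where map-side pre-summing reduced 1,998,651 records to 345,432 before the shuffle.

```python
from collections import Counter
from itertools import groupby
from operator import itemgetter

# Two hypothetical input splits built from lines of Green Eggs and Ham.
SAMPLE_SPLITS = [
    ["I do not like green eggs and ham.", "I do not like them, Sam-I-am."],
    ["Would you like them here or there?", "I would not like them here or there."],
]


def map_split(lines):
    """Map: emit (word, 1) for each whitespace-separated token (case-sensitive)."""
    for line in lines:
        for word in line.split():
            yield word, 1


def combine(pairs):
    """Combiner: pre-sum one mapper's output locally, before the shuffle."""
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    return sorted(totals.items())


def shuffle(mapper_outputs):
    """Shuffle/sort: merge every mapper's pairs, sort by word, and group the counts."""
    merged = sorted(pair for output in mapper_outputs for pair in output)
    for word, group in groupby(merged, key=itemgetter(0)):
        yield word, [count for _word, count in group]


def reduce_counts(grouped):
    """Reduce: sum the partial counts for each word."""
    return {word: sum(counts) for word, counts in grouped}


def word_count(splits, use_combiner=True):
    """Run all phases; return (word counts, number of records sent to the shuffle)."""
    outputs = []
    for split in splits:
        pairs = list(map_split(split))
        outputs.append(combine(pairs) if use_combiner else pairs)
    shuffled_records = sum(len(output) for output in outputs)
    return reduce_counts(shuffle(outputs)), shuffled_records


if __name__ == "__main__":
    counts, shuffled = word_count(SAMPLE_SPLITS)
    # prints: 21 records shuffled; 'like' appears 4 times
    print(f"{shuffled} records shuffled; 'like' appears {counts['like']} times")
```

Calling `word_count(SAMPLE_SPLITS, use_combiner=False)` yields the same counts but shuffles 29 records instead of 21. This is the same saving the combiner counters show at full scale. Punctuation stays attached to words ("them," and "them" are counted separately), a typical parsing issue that small reference datasets make easy to spot.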
Future Work
• Prepare additional canned lessons.
• Eliminate the power strip by substituting a 12 V gel cell and a small charger, providing a built-in UPS and true field portability. (The network switch and display run natively on 12 VDC, and the RPis can use an inexpensive DC-DC converter.)
• Dynamic addition and deletion of nodes in a running Hadoop cluster
• Implement a backup/Secondary NameNode/hot failover
• Modify the cluster to demonstrate hot failover (switches)
• Larger cluster size
• Local PC multi-node virtualization in a container:
  1) Run virtualization software (a hypervisor) such as VMware, KVM/QEMU, or Hyper-V on a single machine.
  2) Run multiple Linux VMs inside the hypervisor, each performing the function of an individual node.
  3) Implement master, backup, slaves, etc.
  4) Purpose: understanding HDFS/DFSs, failover, node scaling, and testing and debugging of programs; not for demonstrating performance improvements.
• Prepare non-trivial problems with deeper programming elements: specified purpose and process, well-defined inputs, known outputs, and a reference program.

Node01 Was the Busiest

Technical Challenges
We faced a number of technical challenges building a stable, consistent, reliable environment with Hadoop version 1.2.1:
• Becoming familiar with the architecture, its commands, and the environment
• Understanding Hadoop's error messages
• Hypersensitivity to configuration changes made the startup process fragile.
• Every configuration change, such as adding or deleting a node or changing modes, required a shutdown and restart of Hadoop.
• Hadoop requires static naming and addressing of nodes and preloading of node names.

Acknowledgements
RET in Engineering and Computer Science Site for Machine Learning, Big Data and CS Principles, National Science Foundation, DUE-0837665, July 2013-June 2016. This material is based upon work supported by the National Science Foundation under Grant No. CNS-1301171.
Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation. Supported in part by IBM Big Data Faculty Awards 2013.
Toby Meyer, Starting Small: Set Up a Hadoop Compute Cluster Using Raspberry Pis, http://blog.ittoby.com/2013/08/starting-small-set-up-hadoop-compute.html.
Max Mattes, Drexel University: Hadoop MapReduce algorithm graphic (not shown).
Zachary Parmelee, Video Game Art and Design student, Art Institute of Philadelphia: HadooPi MicroCluster logo.