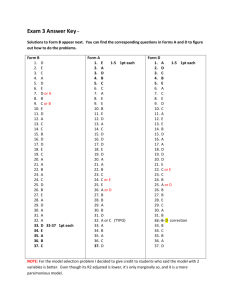

Stat 407 Exam 2 (Fall 2001)

Name SOLUTION

1. Based on the following data

| Treat | X1 | X2 |
|---|---|---|
| 1 | −10 | 7 |
| 1 | −11 | 9 |
| 2 | 5 | −6 |
| 2 | 4 | −2 |
| 2 | 5 | −3 |
| 3 | 6 | 8 |
| 3 | 5 | 9 |

(a) (1pt) Calculate the sample overall mean.

$\bar{X} = (4/7,\ 22/7)' = (0.57,\ 3.14)'$

(b) (1pt) How many groups are there?

3

(c) (1pt) Write down the sample size for each group.

2,3,2

(d) (3pts) Calculate the sample group means.

$\bar{X}_1 = (-10.5,\ 8)'$, $\bar{X}_2 = (4.67,\ -3.67)'$, $\bar{X}_3 = (5.5,\ 8.5)'$

(e) (2pts) Calculate the sample treatment effects.

$\bar{X}_1 - \bar{X} = (-11.07,\ 4.86)'$, $\bar{X}_2 - \bar{X} = (4.10,\ -6.81)'$, $\bar{X}_3 - \bar{X} = (4.93,\ 5.36)'$

(f) (2pts) Calculate the residual for the first observation.

$X_1 - \bar{X}_1 = (0.5,\ -1)'$
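The answers to parts (a) through (f) can be checked with a short Python sketch. It uses only the data table from this question; the variable and function names are illustrative and not part of the exam.

```python
# Data from question 1 as (treatment, X1, X2) rows.
data = [
    (1, -10, 7), (1, -11, 9),
    (2, 5, -6), (2, 4, -2), (2, 5, -3),
    (3, 6, 8), (3, 5, 9),
]


def mean_vector(rows):
    """Component-wise mean of a list of (x1, x2) pairs."""
    n = len(rows)
    return tuple(sum(r[j] for r in rows) / n for j in range(2))


# (a) overall mean
observations = [(x1, x2) for _, x1, x2 in data]
overall = mean_vector(observations)

# (b), (c) groups and their sizes
groups = {}
for treat, x1, x2 in data:
    groups.setdefault(treat, []).append((x1, x2))
sizes = {g: len(rows) for g, rows in groups.items()}

# (d) group means, (e) treatment effects = group mean - overall mean
group_means = {g: mean_vector(rows) for g, rows in groups.items()}
effects = {
    g: tuple(m - o for m, o in zip(gm, overall))
    for g, gm in group_means.items()
}

# (f) residual of the first observation = observation - its group mean
first_treat = data[0][0]
first_residual = tuple(
    x - m for x, m in zip(observations[0], group_means[first_treat])
)

print("overall mean:", tuple(round(v, 2) for v in overall))
print("group sizes:", sizes)
for g in sorted(groups):
    print(g, [round(v, 2) for v in group_means[g]],
          [round(v, 2) for v in effects[g]])
print("first residual:", first_residual)
```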

2. (5pts) Which of the listed methods best matches the problem description. Why?

“Pepsi is currently advertising a new product Pepsi Twist, which is Pepsi with a lemon twist. You are interested to determine if drinkers can taste the difference between the two. You ask 20 subjects to do a smell and taste test. Each subject is given a sample of either Pepsi Twist or Pepsi to smell and then taste. They are asked to use a 10 point scale to rate the amount of lemon they smell and taste. The two variables are smell and taste.”

(a) MANOVA Wilks' $\Lambda^*$

(b) Paired sample Hotelling's $T^2$

(c) Hierarchical Cluster Analysis

(d) Discriminant Analysis

(e) Multivariate Normal Distribution

The answer is (a): there are two groups (Pepsi Twist and Pepsi), and each subject receives only one of the two drinks, so the samples are independent rather than paired.

3. (5pts) Fill in the missing pieces in the bivariate normal density function, based on the population parameters µ1 = 2, µ2 = −5, σ11 = 10, σ22 = 20, σ12 = −5.

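$$
f(X) = \frac{1}{(2\pi)^{p/2} \begin{vmatrix} 10 & -5 \\ -5 & 20 \end{vmatrix}^{1/2}} \exp\left\{ -\frac{1}{2} \left(X - (2,\ -5)'\right)' \begin{bmatrix} 10 & -5 \\ -5 & 20 \end{bmatrix}^{-1} \left(X - (2,\ -5)'\right) \right\}
$$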
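To see the filled-in density in action, the following Python sketch evaluates it with the parameters from this question and p = 2. The function name and the hand-written 2 × 2 inverse are illustrative choices, not part of the exam.

```python
import math

# Population parameters from question 3.
MU = (2.0, -5.0)
SIGMA = ((10.0, -5.0), (-5.0, 20.0))


def bivariate_normal_pdf(x, mu=MU, sigma=SIGMA):
    """Evaluate the bivariate normal density at the point x = (x1, x2)."""
    (a, b), (c, d) = sigma
    det = a * d - b * c  # |Sigma| = 10*20 - (-5)*(-5) = 175
    # Deviation from the mean vector.
    d0, d1 = x[0] - mu[0], x[1] - mu[1]
    # Quadratic form (x - mu)' Sigma^{-1} (x - mu), using the 2x2 inverse
    # Sigma^{-1} = (1/det) * [[d, -b], [-c, a]].
    quad = (d * d0 * d0 - (b + c) * d0 * d1 + a * d1 * d1) / det
    p = 2
    return math.exp(-0.5 * quad) / ((2 * math.pi) ** (p / 2) * math.sqrt(det))


peak = bivariate_normal_pdf(MU)          # density at the mean
elsewhere = bivariate_normal_pdf((0.0, 0.0))
print(peak, elsewhere)
```

The density is largest at the mean, where the exponent is zero and the value reduces to 1 / (2π √175).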

4. (5pts) For the effluent data (n = 14) used in lab, where

$$
\bar{X} = (0.019,\ 0.184,\ 0.565,\ 0.781)', \qquad
S = \begin{bmatrix}
1.107 & -0.038 & 0.146 & -0.560 \\
-0.038 & 0.682 & 0.012 & 0.187 \\
0.146 & 0.012 & 0.594 & 0.059 \\
-0.559 & 0.187 & 0.059 & 0.720
\end{bmatrix}
$$

calculate the 90% Bonferroni-adjusted confidence interval for the first variable’s population mean. Note that $t_{10}(0.1/(2 \times 4)) = 2.63$, $t_{13}(0.1/(2 \times 4)) = 2.53$, $t_{10}(0.05/(2 \times 4)) = 3.03$, $t_{13}(0.05/(2 \times 4)) = 2.89$.

$0.019 \pm 2.53\sqrt{1.107/14} = (-0.69,\ 0.73)$
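The interval uses n − 1 = 13 degrees of freedom and splits the 10% error rate across the p = 4 variables. A minimal Python check of the arithmetic, taking the critical value 2.53 from the question rather than computing it:

```python
import math

n = 14          # sample size
xbar_1 = 0.019  # sample mean of the first variable
s_11 = 1.107    # sample variance of the first variable (first diagonal entry of S)
t_crit = 2.53   # t_13(0.1 / (2 * 4)), as given in the question

half_width = t_crit * math.sqrt(s_11 / n)
lower, upper = xbar_1 - half_width, xbar_1 + half_width
print(f"({lower:.2f}, {upper:.2f})")  # prints (-0.69, 0.73)
```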

5. With the following data, containing 3 groups (“+”,“o”,“x”), answer the following questions.

(a) (2pts) Would you expect to obtain a significant difference between the 3 means? Explain.

No, the 3 group means appear to be the same.

(b) (3pts) This data does NOT satisfy the assumptions to the Wilks’ Λ test. Why? How would this affect

the significance of the test statistic?

The variance-covariance matrices are not the same; they have different orientations.

6. From the following S-Plus output, answer these questions.

*** Agglomerative Hierarchical Clustering ***

Call:

agnes(x = menuModelFrame(data = clx, variables = "<ALL>", subset = NULL,

na.rm = T), diss = F, metric = "euclidean", stand = F, method

= "average", save.x = T, save.diss = T)

Merge:
      [,1] [,2]
 [1,]  -17  -18
 [2,]   -1   -5
 [3,]   -8  -12
 [4,]  -16  -19
 [5,]  -15    1
 [6,]    2   -4
 [7,]   -2   -3
 [8,]  -14    5
 [9,]   -6    3
[10,]    8    4
[11,]  -10  -11
[12,]    6    7
[13,]  -13   10
[14,]    9   -9
[15,]   14   11
[16,]   15   -7
[17,]   13  -20
[18,]   16   17
[19,]   12   18

Order of objects:

[1] 1 5 4 2 3 6 8 12 9 10 11 7 13 14 15 17 18 16 19 20

Height:

[1] 0.8050237 1.5311403 2.4464483 1.7303777 8.3015719 2.0918792

[7] 0.9683186 2.9108459 3.2587285 2.2980136 4.1105591 6.6641699

[13] 2.6504617 1.8072234 1.3242124 0.6813405 2.1984575 1.0224274

[19] 4.6638190

Agglomerative coefficient:

[1] 0.7825644

Available arguments:
[1] "order"     "height"    "ac"        "merge"     "order.lab"
[6] "diss"      "data"      "call"

(a) (1pt) In the hierarchical cluster analysis, what linkage method was used?

average

(b) (2pts) How does this linkage method differ from complete linkage?

Complete linkage uses the maximum interpoint distance between two clusters to define the inter-cluster distance. Average linkage uses the average of all interpoint distances between the two clusters.
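The difference between the two linkage rules can be sketched in Python on two tiny clusters. The points below are made up for illustration and are not from the exam data.

```python
import math
from itertools import product

# Two hypothetical clusters of 2-D points (illustrative values only).
cluster_a = [(0.0, 0.0), (1.0, 0.0)]
cluster_b = [(4.0, 0.0), (6.0, 0.0)]

# All interpoint distances between a point in A and a point in B.
pairwise = [math.dist(p, q) for p, q in product(cluster_a, cluster_b)]

complete_linkage = max(pairwise)                  # farthest pair
average_linkage = sum(pairwise) / len(pairwise)   # mean over all pairs

print(complete_linkage, average_linkage)  # prints 6.0 4.5
```

Complete linkage is driven by the single farthest pair, so it is never smaller than the average linkage distance for the same two clusters.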

(c) (2pts) Based on the dendrogram how many clusters are suggested?

2 or 3, based on the height differences in the dendrogram.

(d) (2pts) At what distance were the last two clusters fused into the final single cluster?

8.3
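Both the final fusion distance in (d) and the cluster counts in (c) can be read from the Height vector in the output. The sketch below assumes, as holds for average linkage, that merge heights never decrease as clusters are combined, so cutting the tree just below the k-th largest height leaves k + 1 clusters. The variable names are illustrative.

```python
# Height vector copied from the S-Plus output above.
heights = [
    0.8050237, 1.5311403, 2.4464483, 1.7303777, 8.3015719, 2.0918792,
    0.9683186, 2.9108459, 3.2587285, 2.2980136, 4.1105591, 6.6641699,
    2.6504617, 1.8072234, 1.3242124, 0.6813405, 2.1984575, 1.0224274,
    4.6638190,
]

ranked = sorted(heights, reverse=True)

# (d) the last merge happens at the largest height.
final_fusion = ranked[0]

# (c) gap between consecutive merge heights, keyed by the number of
# clusters obtained by cutting the tree inside that gap.
gaps = {i + 2: ranked[i] - ranked[i + 1] for i in range(len(ranked) - 1)}
suggested = sorted(gaps, key=gaps.get, reverse=True)[:2]

print(round(final_fusion, 1))  # prints 8.3
print(sorted(suggested))       # prints [2, 3]
```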

(e) (2pts) Based on the scatterplots would you choose the 2 cluster or 3 cluster solution? Why?

Probably 3, because the clusters are nicely separated in the first 2 variables.

(f) (3pts) A k-means cluster analysis was run using k = 3. The following is a table comparing the cluster

membership between k-means and hierarchical. Which clusters are most similar between the two

methods?

km     |hierarchical
       |1      |2      |3      |RowTotl|
-------+-------+-------+-------+-------+
1      |0      |7      |0      |7      |
-------+-------+-------+-------+-------+
2      |8      |0      |0      |8      |
-------+-------+-------+-------+-------+
3      |0      |0      |5      |5      |
-------+-------+-------+-------+-------+
ColTotl|8      |7      |5      |20     |

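k-means cluster 1 matches hierarchical cluster 2, k-means cluster 2 matches hierarchical cluster 1, and k-means cluster 3 matches hierarchical cluster 3. The two methods agree on all 20 cases; only the labels differ.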
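The matching can be read off mechanically by taking, for each k-means cluster, the hierarchical cluster with the largest count in its row. A small Python sketch using the counts from the table (the dictionary layout is an illustrative choice):

```python
# Cross-tabulation from the table: crosstab[km_cluster][hier_cluster] = count.
crosstab = {
    1: {1: 0, 2: 7, 3: 0},
    2: {1: 8, 2: 0, 3: 0},
    3: {1: 0, 2: 0, 3: 5},
}

# For each k-means cluster, pick the hierarchical cluster sharing the most cases.
matches = {km: max(row, key=row.get) for km, row in crosstab.items()}

# Fraction of cases on which the two methods agree after relabelling.
total = sum(sum(row.values()) for row in crosstab.values())
agreement = sum(crosstab[km][h] for km, h in matches.items()) / total

print(matches)    # prints {1: 2, 2: 1, 3: 3}
print(agreement)  # prints 1.0
```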

(g) (3pts) Write down the final cluster means from the k-means analysis, and roughly circle the clusters

in the scatterplot matrix below.

*** K-Means Clustering ***

Centers:
              x1           x2         x3
[1,] -0.06161786  6.020977172  0.8671158
[2,] -0.09378323 -0.007928403 -0.1010429
[3,]  6.25452584 -1.287267400  0.3888878

Clustering vector:

[1] 3 3 3 3 3 2 2 2 2 2 2 2 2 1 1 1 1 1 1 1

Within cluster sum of squares:

[1] 32.094240 34.094225 8.995041

Cluster sizes:

[1] 7 8 5

Available arguments:
[1] "cluster"  "centers"  "withinss" "size"

$\bar{X}_1 = (-0.062,\ 6.02,\ 0.87)'$, $\bar{X}_2 = (-0.094,\ -0.0079,\ -0.10)'$, $\bar{X}_3 = (6.25,\ -1.29,\ 0.39)'$

These means can be roughly plotted on each of the scatterplots, and a circle drawn around each one to mark the clusters.

7. Answer true or false for the following statements.

(a) (1pt) In the LDA classification rule, using the variance-covariance matrix calculated on all the data

is the same as using the pooled variance-covariance matrix. F.

(b) (1pt) A spine plot is a one-variable (1D) mosaic plot. T.

(c) (1pt) Paired comparisons can be done using Hotelling's $T^2$ statistic calculated on the differences of corresponding pairs. T.

(d) (1pt) Hotelling's $T^2$ is the statistical distance between the hypothesized mean and the sample mean. T.

(e) (1pt) A simultaneous confidence ellipse can be used to test hypotheses about a population mean. T.

(f) (1pt) The quadratic discriminant rule is based on an assumption of unequal variance-covariance matrices between groups. T.

(g) (1pt) Classification trees may not generate accurate decision rules when there is strong linear dependency between variables. T.

(h) (1pt) k-means clustering starts with all the cases in individual clusters. F.

(i) (1pt) Hotelling's $T^2$ does not require the two population variance-covariance matrices to be equal. F.

(j) (1pt) Mahalanobis (or statistical) distance derives from the exponent of the multivariate normal density function. T.
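Statements (d) and (j) can be illustrated numerically. The sketch below computes a squared Mahalanobis distance and the one-sample Hotelling's $T^2 = n(\bar{X} - \mu_0)' S^{-1} (\bar{X} - \mu_0)$ for two variables. The sample mean, covariance matrix, hypothesized mean, and sample size are made-up values, not exam data, and the function name is hypothetical.

```python
def mahalanobis_sq(x, center, cov):
    """Squared statistical distance (x - center)' cov^{-1} (x - center), 2-D case."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    d0, d1 = x[0] - center[0], x[1] - center[1]
    # Uses the closed-form inverse of a 2x2 matrix.
    return (d * d0 * d0 - (b + c) * d0 * d1 + a * d1 * d1) / det


# Illustrative values only.
n = 14
xbar = (1.0, 2.0)               # sample mean
mu0 = (0.0, 0.0)                # hypothesized mean
S = ((2.0, 1.0), (1.0, 4.0))    # sample variance-covariance matrix

# T^2 is n times the squared distance measured with S ...
t2 = n * mahalanobis_sq(xbar, mu0, S)

# ... which equals the squared distance measured with S / n,
# the estimated covariance of the sample mean.
S_over_n = tuple(tuple(v / n for v in row) for row in S)
t2_alt = mahalanobis_sq(xbar, mu0, S_over_n)

print(round(t2, 6), round(t2_alt, 6))  # prints 16.0 16.0
```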

8. (2pts) Which of the following is most likely not a sample from a normal distribution? Explain.

(d) The qq-plot curves too low to be considered straight. There is strong skewness in the data.

9. (3pts) The following matrix is a result of a fuzzy c-means cluster analysis where c = 2. Each row corresponds

to a case in the data, where n = 5. Explain what the two columns of numbers mean.

| Case number | Cluster 1 | Cluster 2 |
|---|---|---|
| 1 | 0.2 | 0.8 |
| 2 | 0.6 | 0.4 |
| 3 | 0.1 | 0.9 |
| 4 | 1.0 | 0.0 |
| 5 | 0.8 | 0.2 |

The numbers correspond to a probability of cluster membership: each entry is the degree to which that case belongs to the given cluster, and each row sums to 1.
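A short Python sketch of how the membership matrix might be read: it checks that each row sums to 1, assigns each case to its highest-membership cluster, and flags the least clear-cut case. The dictionary layout and variable names are illustrative.

```python
# Membership values from the table: case -> (cluster 1, cluster 2).
memberships = {
    1: (0.2, 0.8),
    2: (0.6, 0.4),
    3: (0.1, 0.9),
    4: (1.0, 0.0),
    5: (0.8, 0.2),
}

# Each case's memberships across the c = 2 clusters sum to 1.
row_sums_ok = all(abs(sum(u) - 1.0) < 1e-9 for u in memberships.values())

# A "hard" assignment takes the cluster with the largest membership.
hard = {case: u.index(max(u)) + 1 for case, u in memberships.items()}

# The case whose largest membership is smallest is the most ambiguous one.
most_ambiguous = min(memberships, key=lambda case: max(memberships[case]))

print(row_sums_ok)     # prints True
print(hard)            # prints {1: 2, 2: 1, 3: 2, 4: 1, 5: 1}
print(most_ambiguous)  # prints 2
```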

10. (2pts) In MANOVA, the analysis of variance table contains the quantities B, the between-treatment sums of squares matrix, and W, the within sums of squares matrix. What is the dimension of these two matrices?

p × p.
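As an illustration, B and W can be computed for the p = 2 data of question 1, giving two 2 × 2 matrices. This is a sketch using the standard definitions (B sums the weighted outer products of the treatment effects, W sums the outer products of the residuals); the helper names are illustrative.

```python
# Question 1 data grouped by treatment: each row is (X1, X2).
groups = {
    1: [(-10, 7), (-11, 9)],
    2: [(5, -6), (4, -2), (5, -3)],
    3: [(6, 8), (5, 9)],
}
p = 2  # number of variables


def mean_vector(rows):
    """Component-wise mean of a list of p-vectors."""
    return [sum(r[j] for r in rows) / len(rows) for j in range(p)]


def add_outer(acc, u, weight=1.0):
    """Add weight * u u' to the p x p accumulator matrix acc, in place."""
    for i in range(p):
        for j in range(p):
            acc[i][j] += weight * u[i] * u[j]


all_rows = [r for rows in groups.values() for r in rows]
overall = mean_vector(all_rows)

B = [[0.0] * p for _ in range(p)]  # between-treatment SSP matrix
W = [[0.0] * p for _ in range(p)]  # within SSP matrix
T = [[0.0] * p for _ in range(p)]  # total (corrected) SSP matrix

for rows in groups.values():
    gm = mean_vector(rows)
    add_outer(B, [gm[j] - overall[j] for j in range(p)], weight=len(rows))
    for r in rows:
        add_outer(W, [r[j] - gm[j] for j in range(p)])

for r in all_rows:
    add_outer(T, [r[j] - overall[j] for j in range(p)])

print("B =", B)
print("W =", W)
print("T =", T)
```

Both matrices are p × p regardless of the number of groups or cases, and they satisfy B + W = T.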

11. (3pts) You have a data problem where there are 2000 cases and 60 variables. How would you start to

address this problem in terms of finding a manageable number of variables to work with?

You will need to start off by reducing the number of variables, probably using PCA.
