MATH 401: GREEN’S FUNCTIONS AND VARIATIONAL METHODS

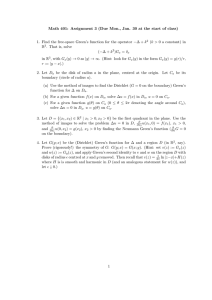

advertisement

MATH 401: GREEN’S FUNCTIONS AND VARIATIONAL METHODS

The main goal of this course is to learn how to “solve” various PDE (= partial differential equation) problems arising in science. Since only very few special problems of this kind have solutions we can write explicitly, “solve” here often means either (a) finding an explicit approximation to the true solution, or (b) learning some important qualitative properties of the solution.

A number of techniques are available for this. The method of separation of variables

(together with Fourier series), perhaps familiar from earlier courses, unfortunately

only applies to very special problems. The related (but more general) method of

eigenfunction expansion is very useful – and will be discussed in places in this course.

Integral transform techniques (such as the Fourier transform and Laplace transform)

will also be touched on here. If time permits, we will also study some perturbation

methods, which are extremely useful if there is a small parameter somewhere in the

problem.

The bulk of this course, however, will be devoted to the method/notion of Green’s

function (a function which, roughly speaking, expresses the effect of the “data” at one

point on the solution at another point), and to variational methods (by which PDE

problems are solved by minimizing (or maximizing) some quantities). Variational

methods are extremely powerful – and even apply directly to some nonlinear problems

(as well as linear ones). Green’s functions are for linear problems, but in fact play a key

role in nonlinear problems when they are treated as – in some sense – perturbations

of linear ones (which is frequently the only feasible treatment!).

We begin with Green’s functions.

A. GREEN’S FUNCTIONS

I. INTRODUCTION

As an introductory example, consider the following initial-boundary value problem

for the inhomogeneous heat equation (HE) in one (space) dimension

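$$\frac{\partial u}{\partial t} = \frac{\partial^2 u}{\partial x^2} + f(x,t), \qquad 0 < x < L,\ t > 0 \qquad \text{(HE)}$$

$$u(0,t) = u(L,t) = 0 \qquad \text{(BC)}$$

$$u(x,0) = u_0(x) \qquad \text{(IC)}$$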

which, physically, describes the temperature u(x, t) at time t and at point x along a

“rod” 0 ≤ x ≤ L of length L subject to (time- and space-varying) heat source f (x, t),

and with the ends held fixed at temperature 0 (boundary condition (BC)) and with

initial (time t = 0) temperature distribution u0 (x) (initial condition (IC)).


This problem is easily solved by the (hopefully) familiar methods of separation of

variables and eigenfunction expansion. Without going into details, the eigenfunctions

of the spatial part of the (homogeneous) PDE (namely $d^2/dx^2$) satisfying (BC) are $\sin(n\pi x/L)$, $n = 1, 2, 3, \dots$ (with corresponding eigenvalues $-n^2\pi^2/L^2$), and so we

seek a solution of the form

$$u(x,t) = \sum_{n=1}^{\infty} a_n(t)\sin(n\pi x/L).$$

We similarly expand the data of the problem – the source term f (x, t) and the initial

condition u0 (x) – in terms of these eigenfunctions; that is, as Fourier series:

$$f(x,t) = \sum_{n=1}^{\infty} f_n(t)\sin(n\pi x/L), \qquad f_n(t) = \frac{2}{L}\int_0^L f(x,t)\sin(n\pi x/L)\,dx,$$

$$u_0(x) = \sum_{n=1}^{\infty} g_n\sin(n\pi x/L), \qquad g_n = \frac{2}{L}\int_0^L u_0(x)\sin(n\pi x/L)\,dx.$$

Plugging the expression for u(x, t) into the PDE (HE) and comparing coefficients

then yields the family of ODE problems

$$a_n'(t) + (n^2\pi^2/L^2)\,a_n(t) = f_n(t), \qquad a_n(0) = g_n,$$

which are easily solved (after all, they are first-order linear ODEs) by using an integrating factor, to get

$$a_n(t) = e^{-(n^2\pi^2/L^2)t}\left[g_n + \int_0^t e^{(n^2\pi^2/L^2)s} f_n(s)\,ds\right],$$

and hence the solution we sought:

$$u(x,t) = \frac{2}{L}\sum_{n=1}^{\infty} e^{-(n^2\pi^2/L^2)t}\left[\int_0^L u_0(y)\sin(n\pi y/L)\,dy + \int_0^t\!\!\int_0^L e^{(n^2\pi^2/L^2)s} f(y,s)\sin(n\pi y/L)\,dy\,ds\right]\sin(n\pi x/L).$$

No problem. But it is instructive to re-write this expression by exchanging the order of integration and summation (note we are not worrying here about the convergence of the sum or the exchange of sum and integral – suffice it to say that for reasonable (say continuous) functions $u_0$ and $f$ all our manipulations are justified and the sum converges beautifully due to the decaying exponential) to obtain

$$u(x,t) = \int_0^L G(x,t;y,0)\,u_0(y)\,dy + \int_0^t\!\!\int_0^L G(x,t;y,s)\,f(y,s)\,dy\,ds \qquad (1)$$

where

$$G(x,t;y,s) = \frac{2}{L}\sum_{n=1}^{\infty} e^{-(n^2\pi^2/L^2)(t-s)}\sin(n\pi y/L)\sin(n\pi x/L).$$

Expression (1) gives the solution as an integral (OK, 2 integrals) of the “data” (the

initial condition u0 (x) and the source term f (x, t)) against the function G which is

called, of course, the Green’s function for our problem (precisely, for the heat equation

on [0, L] with 0 boundary conditions).
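As a quick numerical sanity check of formula (1) (our own illustration, not part of the original notes), the Python sketch below truncates the series for $G$ after `n_terms` terms and evaluates the first integral in (1) with a composite Simpson rule. The cutoff, the helper names `simpson`, `heat_green` and `heat_solution`, and the choice $u_0(y) = \sin(\pi y/L)$ with $f = 0$ are all assumptions made for the illustration; for this data the exact solution is $e^{-\pi^2 t/L^2}\sin(\pi x/L)$.

```python
import math


def simpson(h, a, b, n=400):
    """Composite Simpson's rule for the integral of h over [a, b] (n must be even)."""
    dx = (b - a) / n
    total = h(a) + h(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * h(a + k * dx)
    return total * dx / 3.0


def heat_green(x, t, y, s, L=1.0, n_terms=50):
    """Truncated series for G(x,t;y,s) on [0, L] with zero boundary values (needs t > s)."""
    total = 0.0
    for n in range(1, n_terms + 1):
        k = n * math.pi / L
        total += math.exp(-k * k * (t - s)) * math.sin(k * y) * math.sin(k * x)
    return 2.0 / L * total


def heat_solution(x, t, u0, L=1.0):
    """First integral in (1): u(x,t) = int_0^L G(x,t;y,0) u0(y) dy (source f = 0)."""
    return simpson(lambda y: heat_green(x, t, y, 0.0, L) * u0(y), 0.0, L)


# Initial data sin(pi y / L) is a single eigenfunction, so it should simply decay.
L = 2.0
x, t = 0.7, 0.1
approx = heat_solution(x, t, lambda y: math.sin(math.pi * y / L), L)
exact = math.exp(-(math.pi / L) ** 2 * t) * math.sin(math.pi * x / L)
print(approx, exact)
```

The truncation is only reasonable for $t - s$ bounded away from 0: as $s \uparrow t$ the exponential damping disappears and many more terms are needed, reflecting the fact that $G$ concentrates at $y = x$ there.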

Our computation above suggests a few observations about Green’s functions:

• if we can find the Green’s function for a problem, we have effectively solved the

problem for any data – we just need to plug the data into an integral like (1)

• a Green’s function is a function of 2 sets of variables – one set are the variables

of the solution (x and t above), the other set (y and s above) gets integrated

• one can think of a Green’s function as giving the effect of the data at one point

((y, s) above) on the solution at another point ((x, t))

• the domain of a Green’s function is determined by the original problem: in the

above example, the spatial variables x and y run over the interval [0, L] (the

“rod”), and the time variables satisfy 0 ≤ s ≤ t – here the condition s ≤ t

reflects the fact that the solution at time t is only determined by the data at

previous times (not future times)

The first part of this course will be devoted to a systematic study of Green’s functions,

first for ODEs (where computations are generally easier), and then for PDEs, where

Green’s functions really come into their own.


II. GREEN’S FUNCTIONS FOR ODEs

1. An ODE boundary value problem

Consider the ODE (= ordinary differential equation) boundary value problem

$$Lu := a_0u'' + a_1u' + a_2u = f(x), \quad x_0 < x < x_1, \qquad u(x_0) = u(x_1) = 0. \qquad (2)$$

Here

$$L := a_0(x)\frac{d^2}{dx^2} + a_1(x)\frac{d}{dx} + a_2(x)$$

is a (second-order, linear) differential operator. As motivation for problem (2), one can

think, for example, of u(x) as giving the steady-state temperature along a rod [x0 , x1 ]

with (non-uniform) thermal conductivity ρ(x), subject to a heat source f (x) and with

ends held fixed at temperature 0, which leads to the problem

$$(\rho(x)u')' = f, \qquad u(x_0) = u(x_1) = 0$$

of the form (2). Zero boundary conditions are the simplest, but later we will consider

other boundary conditions (for example, if the ends of the rod are insulated, we should

take $u'(x_0) = u'(x_1) = 0$).

We would like to solve (2) by finding a function G(x; z), the Green’s function, so

that

$$u(x) = \int_{x_0}^{x_1} G(x;z)f(z)\,dz =: (G_x, f)$$

where we have introduced the notations

$$G_x(z) := G(x;z)$$

(when we want to emphasize the dependence of G specifically on the variable z,

thinking of x as fixed), and

$$(g, f) := \int_{x_0}^{x_1} g(z)f(z)\,dz \qquad \text{(inner product).}$$

Then since u solves Lu = f , we want

$$u(x) = (G_x, f) = (G_x, Lu).$$

Next we want to “move the operator L over” from u to Gx on the other side of the

inner-product – for which we need the notion of adjoint.

Definition: The adjoint of the operator L is the operator L∗ such that

$$(v, Lu) = (L^*v, u) + \text{“boundary terms”}$$

for all smooth functions $u$ and $v$.

The following example illustrates the adjoint, and explains what is meant by “boundary terms”.

Example: Let, as above, $L = a_0(x)\frac{d^2}{dx^2} + a_1(x)\frac{d}{dx} + a_2(x)$ acting on functions defined for $x_0 \le x \le x_1$. Then for two such (smooth) functions $u(x)$ and $v(x)$, integration by parts gives (check it!)

$$\begin{aligned}
(v, Lu) &= \int_{x_0}^{x_1} v\,[a_0u'' + a_1u' + a_2u]\,dx \\
&= \int_{x_0}^{x_1} u\,[a_0v'' + (2a_0' - a_1)v' + (a_2 + a_0'' - a_1')v]\,dx + \big[a_0vu' - (a_0v)'u + a_1uv\big]_{x_0}^{x_1} \\
&= \big(a_0v'' + (2a_0' - a_1)v' + (a_2 + a_0'' - a_1')v,\ u\big) + \big[a_0(vu' - v'u) + (a_1 - a_0')uv\big]_{x_0}^{x_1}.
\end{aligned}$$

The terms after the integral (the ones evaluated at the endpoints x0 and x1 ) are what

we mean by “boundary terms”. Hence the adjoint is

$$L^* = a_0\frac{d^2}{dx^2} + (2a_0' - a_1)\frac{d}{dx} + (a_2 + a_0'' - a_1').$$

The differential operator L∗ is of the same form as L, but with (in general) different

coefficients.
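The integration-by-parts identity behind $L^*$ can be spot-checked numerically. In the hedged Python sketch below, the coefficients $a_0 = 1 + x^2$, $a_1 = x$, $a_2 = 3$ and the functions $u = \sin x$, $v = e^x$ on $[0, 1]$ are arbitrary hypothetical choices (with derivatives coded by hand), and `simpson` is a plain composite Simpson rule written for the illustration. The two sides of $(v, Lu) = (L^*v, u) + [a_0(vu' - v'u) + (a_1 - a_0')uv]_{x_0}^{x_1}$ should agree to quadrature accuracy.

```python
import math


def simpson(h, a, b, n=2000):
    """Composite Simpson's rule for the integral of h over [a, b] (n must be even)."""
    dx = (b - a) / n
    total = h(a) + h(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * h(a + k * dx)
    return total * dx / 3.0


# Hypothetical coefficients a0, a1, a2 with hand-coded derivatives.
a0, da0, dda0 = (lambda x: 1 + x * x), (lambda x: 2 * x), (lambda x: 2.0)
a1, da1 = (lambda x: x), (lambda x: 1.0)
a2 = lambda x: 3.0

# Hypothetical smooth functions u = sin, v = exp and their derivatives.
u, du, ddu = math.sin, math.cos, (lambda x: -math.sin(x))
v = dv = ddv = math.exp


def Lu(x):
    """(Lu)(x) = a0 u'' + a1 u' + a2 u."""
    return a0(x) * ddu(x) + a1(x) * du(x) + a2(x) * u(x)


def Lstar_v(x):
    """(L* v)(x) = a0 v'' + (2 a0' - a1) v' + (a2 + a0'' - a1') v."""
    return (a0(x) * ddv(x) + (2 * da0(x) - a1(x)) * dv(x)
            + (a2(x) + dda0(x) - da1(x)) * v(x))


def boundary(x):
    """Boundary-term expression a0 (v u' - v' u) + (a1 - a0') u v at the point x."""
    return a0(x) * (v(x) * du(x) - dv(x) * u(x)) + (a1(x) - da0(x)) * u(x) * v(x)


x0, x1 = 0.0, 1.0
lhs = simpson(lambda x: v(x) * Lu(x), x0, x1)                                  # (v, Lu)
rhs = simpson(lambda x: Lstar_v(x) * u(x), x0, x1) + boundary(x1) - boundary(x0)
print(lhs, rhs)
```

Dropping the boundary terms breaks the agreement for these choices, since neither $u$ nor $v$ vanishes at both endpoints.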

An important class of operators are those which are equal to their adjoints.

Definition: An operator L is called (formally) self-adjoint if L = L∗ .

Example: Comparison of $L$ and $L^*$ for our example $L = a_0\frac{d^2}{dx^2} + a_1\frac{d}{dx} + a_2$ shows that $L$ is formally self-adjoint if and only if $a_0' = a_1$ (the zeroth-order coefficients then agree automatically, since $a_0'' = a_1'$). Note that in this case

$$Lu = a_0u'' + a_0'u' + a_2u = (a_0u')' + a_2u,$$

which is an ordinary differential operator of Sturm-Liouville type.

Now we can return to our search for a Green’s function for problem (2):

$$u(x) = (G_x, f) = (G_x, Lu) = (L^*G_x, u) + BT,$$

where we know from our computations above that the “boundary terms” are

$$BT = \big[a_0G_xu' - (a_0G_x)'u + a_1G_xu\big]_{x_0}^{x_1} = \big[a_0G_xu'\big]_{x_0}^{x_1},$$

where we used the zero boundary conditions u(x0 ) = u(x1 ) = 0 in problem (2). We

can make the remaining boundary term disappear if we also impose the boundary

conditions Gx (x0 ) = Gx (x1 ) = 0 on our Green’s function G. Thus we are led to the

problem

$$u(x) = (L^*G_x, u), \qquad G_x(x_0) = G_x(x_1) = 0$$

for $G$.

So $L^*G_x$ should be a function $g(z)$ which satisfies

$$u(x) = (g, u) = \int_{x_0}^{x_1} g(z)u(z)\,dz$$

for all (nice) functions $u$. What kind of function is this? In fact, it is a (Dirac) delta function, which is not really a function at all! Rather, it is a generalized function, a notion we need to explore further before proceeding with Green’s functions.


2. Generalized functions (distributions).

The precise mathematical definition of a generalized function is:

Definition: A generalized function or distribution is a (continuous) linear functional acting on the space of test functions

$$C_0^\infty(\mathbb{R}) = \{\text{infinitely differentiable functions on } \mathbb{R} \text{ which vanish outside some interval}\}$$

(an example of such a test function is

$$\phi(x) = \begin{cases} e^{-1/(a^2 - x^2)}, & -a < x < a \\ 0, & |x| \ge a \end{cases}$$

for any $a > 0$). That is, a distribution $f$ maps a test function $\phi$ to a real number

$$f(\phi) = (f, \phi) = \text{“}\!\int f(x)\phi(x)\,dx\text{”}$$

which it is useful to think of as an integral (as in the parentheses above) – hence the

common notation (f, φ) for f (φ) – but is not in general an integral (it cannot be –

since f is not in general an actual function!). Further, this map should be linear: for

test functions φ and ψ and numbers α and β,

$$f(\alpha\phi + \beta\psi) = \alpha f(\phi) + \beta f(\psi) \qquad \text{or} \qquad (f, \alpha\phi + \beta\psi) = \alpha(f, \phi) + \beta(f, \psi).$$

Some examples should help clarify.

Example:

1. If f (x) is a usual function (say, a piecewise continuous one), then it is also

a distribution (after all, we wouldn’t call them “generalized functions” if they

didn’t include regular functions), which acts by integration,

$$f(\phi) = (f, \phi) = \int f(x)\phi(x)\,dx$$

which is indeed a linear operation. This is why we use inner-product (and

sometimes even integral) notation for the action of a distribution – when the

distribution is a real function, its action on test functions is integration.

2. The (Dirac) delta function denoted δ(z) (or more generally δx (z) = δ(z − x)

for the delta function centred at a point x) is not a function, but a distribution,

whose action on test functions is defined to be

$$(\delta, \phi) := \phi(0) = \text{“}\!\int \delta(z)\phi(z)\,dz\text{”}$$

$$(\delta_x, \phi) := \phi(x) = \text{“}\!\int \delta(z - x)\phi(z)\,dz\text{”}$$

(where, again, the integral here is just notation). That is, δ acts on test functions

by picking out their value at 0 (and δx acts on test functions by picking out their

value at x).

Generalized functions are so useful because we can perform on them many of the

operations we can perform on usual functions. We can

1. Differentiate them: if f is a usual differentiable function, then for a test function

φ, by integration by parts,

$$(f', \phi) = \int f'(x)\phi(x)\,dx = -\int f(x)\phi'(x)\,dx = (f, -\phi')$$

(there are no boundary terms because φ vanishes outside of some interval). Now

if f is any distribution, these integrals make no sense, but we can use the above

calculation as the definition of how the distribution f 0 acts on test functions:

$$(f', \phi) := (f, -\phi')$$

and by iterating, we can differentiate f n times:

$$(f^{(n)}, \phi) = (f, (-1)^n\phi^{(n)}).$$

Example:

(a) the derivative of a delta function:

$$(\delta', \phi) = (\delta, -\phi') = -\phi'(0)$$

(b) the derivative of the Heaviside function

$$H(x) := \begin{cases} 0, & x \le 0 \\ 1, & x > 0 \end{cases}$$

(which is a usual function, but is not differentiable in the classical sense at $x = 0$):

$$(H', \phi) = (H, -\phi') = \int H(x)(-\phi'(x))\,dx = -\int_0^\infty \phi'(x)\,dx = -\phi(x)\Big|_{x=0}^{x=\infty} = \phi(0)$$

(since $\phi$ vanishes outside an interval). Hence

$$\frac{d}{dx}H(x) = \delta(x).$$


The fact that we can always differentiate a distribution is what makes them so

useful for differential equations.

2. Multiply them by smooth functions: if f is a distribution and a(x) is a smooth

(infinitely differentiable) function we define

$$(a(x)f, \phi) := (f, a(x)\phi)$$

(which makes sense, since $a\phi$ is again a test function). Note this definition coincides with the usual one when $f$ is a usual function.

3. Consider convergence of distributions: we say that a sequence $\{f_j\}_{j=1}^{\infty}$ of distributions converges to another distribution $f$ if

$$\lim_{j\to\infty}(f_j, \phi) = (f, \phi) \qquad \text{for all test functions } \phi.$$

This kind of convergence is called weak convergence.

Example: Let $\psi(x)$ be a smooth, non-negative function with $\int \psi(x)\,dx = 1$, and set $\psi_j(x) := j\psi(jx)$ for $j = 1, 2, 3, \dots$. Note that as $j$ increases, the graph of $\psi_j(x)$ becomes both taller and more concentrated near $x = 0$, while maintaining $\int \psi_j(x)\,dx = 1$. In fact, we have

$$\lim_{j\to\infty}\psi_j(x) = \lim_{j\to\infty} j\psi(jx) = \delta(x)$$

in the weak sense – it is a nice exercise to show this!

4. Compose them with invertible functions: let $g : \mathbb{R} \to \mathbb{R}$ be a one-to-one and onto differentiable function, with $g'(x) > 0$. If $f$ is a usual function, then by changing variables $y = g(x)$ (so $dy = g'(x)\,dx$), we have for the composition $f \circ g(x) = f(g(x))$,

$$(f \circ g, \phi) = \int f(g(x))\phi(x)\,dx = \int f(y)\phi(g^{-1}(y))\,\frac{dy}{g'(g^{-1}(y))} = \Big(f, \frac{1}{g' \circ g^{-1}}\,\phi \circ g^{-1}\Big)$$

and so for $f$ a distribution, we define

$$(f \circ g, \phi) := \Big(f, \frac{1}{g' \circ g^{-1}}\,\phi \circ g^{-1}\Big).$$

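Example: Composing the delta function with $g(x)$ gives

$$(\delta(g(x)), \phi) = \Big(\delta, \frac{1}{g' \circ g^{-1}}\,\phi \circ g^{-1}\Big) = \frac{\phi(g^{-1}(0))}{g'(g^{-1}(0))},$$

and in particular if $g(x) = cx$ (constant $c > 0$)

$$(\delta(cx), \phi) = \frac{1}{c}\phi(0) = \Big(\frac{1}{c}\delta, \phi\Big)$$

and hence $\delta(cx) = \frac{1}{c}\delta(x)$.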
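The operations above can be made concrete with a short numerical experiment. The Python sketch below is an illustration under stated assumptions, not a rigorous check: it uses the bump $\phi(x) = e^{-1/(1-x^2)}$ (the test function above with $a = 1$), takes $\psi(x) = e^{-x^2}/\sqrt{\pi}$ as one admissible choice of the smooth, non-negative $\psi$ with unit integral, and approximates each pairing by a composite Simpson rule (`simpson`, `pair_with_phi` and `delta_cx_pairing` are hypothetical helper names). It checks that $(H, -\phi') \approx \phi(0)$, that $(\psi_j, \phi) \to \phi(0)$ as $j$ grows, and that $\psi_j(cx)$ pairs with $\phi$ to approximately $\phi(0)/c$, consistent with $\delta(cx) = \frac{1}{c}\delta(x)$.

```python
import math


def simpson(h, a, b, n=20000):
    """Composite Simpson's rule for the integral of h over [a, b] (n must be even)."""
    dx = (b - a) / n
    total = h(a) + h(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * h(a + k * dx)
    return total * dx / 3.0


def phi(x):
    """Test function exp(-1/(1 - x^2)) on (-1, 1), zero elsewhere (the example with a = 1)."""
    return math.exp(-1.0 / (1.0 - x * x)) if abs(x) < 1 else 0.0


def dphi(x):
    """phi'(x) = phi(x) * (-2x) / (1 - x^2)^2 inside (-1, 1), zero outside."""
    return phi(x) * (-2.0 * x) / (1.0 - x * x) ** 2 if abs(x) < 1 else 0.0


def H(x):
    """Heaviside function: 0 for x <= 0, 1 for x > 0."""
    return 1.0 if x > 0 else 0.0


def psi_j(j):
    """psi_j(x) = j psi(j x) with the assumed choice psi(x) = exp(-x^2) / sqrt(pi)."""
    return lambda x: j * math.exp(-(j * x) ** 2) / math.sqrt(math.pi)


def pair_with_phi(g):
    """Approximate (g, phi) = int g(x) phi(x) dx; phi vanishes outside [-1, 1]."""
    return simpson(lambda x: g(x) * phi(x), -1.0, 1.0)


# (H', phi) := (H, -phi'), which should equal (delta, phi) = phi(0).
heaviside_pairing = simpson(lambda x: H(x) * -dphi(x), -1.0, 1.0)

# (psi_j, phi) should approach phi(0) as j grows (weak convergence to delta).
mollifier_errors = [abs(pair_with_phi(psi_j(j)) - phi(0.0)) for j in (1, 10, 100)]


def delta_cx_pairing(c, j=400):
    """Approximate (delta(c x), phi) by (psi_j(c x), phi) for large j."""
    g = psi_j(j)
    return pair_with_phi(lambda x: g(c * x))


print(heaviside_pairing, phi(0.0))
print(mollifier_errors)
print(delta_cx_pairing(2.0), phi(0.0) / 2.0)
```

With this particular $\psi$ the error $(\psi_j, \phi) - \phi(0)$ shrinks roughly like $1/j^2$, because $\psi$ is even, so the first-order term in the Taylor expansion of $\phi$ integrates to zero.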


3. Green’s functions for ODEs.

Returning now to the ODE problem (2), we had concluded that we want our Green’s function $G(x;z) = G_x(z)$ to satisfy

$$u(x) = (L^*G_x, u) \quad \forall\, u, \qquad G_x(x_0) = G_x(x_1) = 0.$$

After our discussion of generalized functions, then, we see that what we want is really

$$L^*G_x(z) = \delta(z - x), \qquad G_x(x_0) = G_x(x_1) = 0.$$

Notice that for $z \ne x$, we are simply solving $L^*G_x = 0$. The strategy is to solve this equation for $z < x$ and for $z > x$, and then “glue the two pieces together”. Some examples should help clarify.

Example: use the Green’s function method to solve the problem

$$u'' = f(x), \quad 0 < x < L, \qquad u(0) = u(L) = 0. \qquad (3)$$

(which could, of course, be solved simply by integrating twice).

First note that $L = \frac{d^2}{dx^2} = L^*$ (the operator is self-adjoint), so the problem for our Green’s function $G(x;z) = G_x(z)$ is

$$G_x''(z) = \delta(z - x), \qquad G_x(0) = G_x(L) = 0$$

(here $'$ denotes $\frac{d}{dz}$).

For z < x and z > x, we have simply G00x = 0, and so

$$G_x(z) = \begin{cases} Az + B, & 0 \le z < x \\ Cz + D, & x < z \le L \end{cases}$$

The BC Gx (0) = 0 implies B = 0, and the BC Gx (L) = 0 implies D = −LC, so we

have

$$G_x(z) = \begin{cases} Az, & 0 \le z < x \\ C(z - L), & x < z \le L \end{cases}$$

Now our task is to determine the remaining two unknown constants by using matching

conditions to “glue” the two pieces together:

1. continuity: we demand that $G_x$ be continuous at $z = x$: $G_x(x-) = G_x(x+)$ (the notation here is $g(x\pm) := \lim_{\varepsilon\downarrow 0} g(x \pm \varepsilon)$). This yields $Ax = C(x - L)$.

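2. jump condition: for any $\varepsilon > 0$, integrating the equation $G_x'' = \delta(z - x)$ between $x - \varepsilon$ and $x + \varepsilon$ yields

$$G_x'(x+\varepsilon) - G_x'(x-\varepsilon) = G_x'\Big|_{x-\varepsilon}^{x+\varepsilon} = \int_{x-\varepsilon}^{x+\varepsilon} G_x''(z)\,dz = \int_{x-\varepsilon}^{x+\varepsilon} \delta(z - x)\,dz = 1$$

and letting $\varepsilon \downarrow 0$, we arrive at

$$G_x'(x+) - G_x'(x-) = 1.$$

This jump condition requires $C - A = 1$.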

Solving the two equations for $A$ and $C$ yields $C = x/L$, $A = (x - L)/L$, and so

$$G(x;z) = G_x(z) = \begin{cases} z(x - L)/L, & 0 \le z < x \\ x(z - L)/L, & x < z \le L \end{cases}$$

which gives our solution of problem (3)

$$u(x) = \int_0^L G(x;z)f(z)\,dz = \frac{x - L}{L}\int_0^x zf(z)\,dz + \frac{x}{L}\int_x^L (z - L)f(z)\,dz.$$
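This solution formula is easy to test numerically. The Python sketch below is a hypothetical illustration (`green`, `solve` and the Simpson helper are our own names): it integrates $G(x;z)f(z)$ separately on $[0, x]$ and $[x, L]$, since $G_x$ has a kink at $z = x$, and compares with the solutions obtained by integrating twice, namely $u = x(x - L)/2$ for $f = 1$ and $u = -\sin x + x\sin(L)/L$ for $f = \sin x$.

```python
import math


def simpson(h, a, b, n=2000):
    """Composite Simpson's rule for the integral of h over [a, b] (n must be even)."""
    dx = (b - a) / n
    total = h(a) + h(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * h(a + k * dx)
    return total * dx / 3.0


def green(x, z, L):
    """Green's function for u'' = f on (0, L), u(0) = u(L) = 0, as derived above."""
    return z * (x - L) / L if z < x else x * (z - L) / L


def solve(f, x, L):
    """u(x) = int_0^L G(x; z) f(z) dz, split at the kink z = x for accurate quadrature."""
    integrand = lambda z: green(x, z, L) * f(z)
    return simpson(integrand, 0.0, x) + simpson(integrand, x, L)


L, x = 2.0, 0.7
u_const = solve(lambda z: 1.0, x, L)   # compare with x (x - L) / 2
u_sin = solve(math.sin, x, L)          # compare with -sin x + x sin(L) / L
print(u_const, x * (x - L) / 2)
print(u_sin, -math.sin(x) + x * math.sin(L) / L)
```

Splitting the integral at $z = x$ keeps each Simpson panel on a piece where the integrand is smooth.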

Remark:

1. whatever you may think of the derivation of this expression for the solution, it is easy to check (by direct differentiation and the fundamental theorem of calculus) that it is correct (assuming $f$ is “reasonable” – say, continuous).

2. notice the form of the Green’s function Gx (z) (graph it!) – it has a “singularity”

(in the sense of being continuous, but not differentiable) at the point z = x. This

“singularity” must be there, since differentiating G twice has to yield a delta

function.

Example: use the Green’s function method to solve the problem

$$x^2u'' + 2xu' - 2u = f(x), \quad 0 < x < 1, \qquad u(0) = u(1) = 0. \qquad (4)$$

Remark: Notice that the coefficients $x^2$ and $2x$ vanish at the left endpoint $x = 0$ (this

point is a “regular singular point” in ODE parlance). This suggests that unless u is

very wild as x approaches 0, we will require f (0) = 0 to fully solve the problem. Let’s

come back to this point after we find a solution formula.

Notice the operator $L = x^2\frac{d^2}{dx^2} + 2x\frac{d}{dx} - 2 = \frac{d}{dx}\Big(x^2\frac{d}{dx}\Big) - 2$ is self-adjoint ($L = L^*$), and so the problem for the Green’s function is

$$LG_x = z^2G_x'' + 2zG_x' - 2G_x = \delta(z - x), \qquad G_x(0) = G_x(1) = 0.$$

For $z \ne x$, the equation $z^2G'' + 2zG' - 2G = 0$ is an ODE of Euler type, and so looking for solutions of the form $G = z^r$ yields

$$0 = z^2(rz^{r-1})' + 2z(rz^{r-1}) - 2z^r = (r(r-1) + 2r - 2)z^r = (r + 2)(r - 1)z^r$$

and so we want $r = -2$ or $r = 1$. Thus

$$G_x(z) = \begin{cases} Az + B/z^2, & 0 \le z < x \\ Cz + D/z^2, & x < z \le 1 \end{cases}.$$

The BCs $G_x(0) = G_x(1) = 0$ imply $B = 0$ and $C + D = 0$, so

$$G_x(z) = \begin{cases} Az, & 0 \le z < x \\ C(z - 1/z^2), & x < z \le 1 \end{cases}.$$

The matching conditions are

1. continuity $G_x(x-) = G_x(x+)$ implies $Ax = C(x - 1/x^2)$

2. jump condition

$$1 = \int_{x-}^{x+}\delta(z - x)\,dz = \int_{x-}^{x+}\big[(z^2G_x')' - 2G_x\big]\,dz = z^2G_x'(z)\Big|_{x-}^{x+} = x^2\big(G_x'(x+) - G_x'(x-)\big)$$

implies $x^2C(1 + 2/x^3) - x^2A = 1$.

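Solving the simultaneous linear equations for $A$ and $C$ (substituting $A = C(1 - 1/x^3)$ from the continuity condition into the jump condition) yields

$$1 = C(x^2 + 2/x) - x^2C(1 - 1/x^3) = 3C/x \implies C = x/3, \quad A = (x - 1/x^2)/3$$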

and so the Green’s function is

$$G(x;z) = \frac{1}{3}\begin{cases} z(x - 1/x^2), & 0 \le z < x \\ x(z - 1/z^2), & x < z \le 1 \end{cases}$$

and the corresponding solution formula is

$$u(x) = \int_0^1 G(x;z)f(z)\,dz = \frac{1}{3}\Big(x - \frac{1}{x^2}\Big)\int_0^x zf(z)\,dz + \frac{x}{3}\int_x^1 \Big(z - \frac{1}{z^2}\Big)f(z)\,dz.$$

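Does this really solve (4) (supposing $f$ is, say, continuous)? For $0 < x < 1$, differentiation (and the fundamental theorem of calculus) gives

$$\begin{aligned}
3u'(x) &= \Big(1 + \frac{2}{x^3}\Big)\int_0^x zf(z)\,dz + \Big(x - \frac{1}{x^2}\Big)xf(x) + \int_x^1 \Big(z - \frac{1}{z^2}\Big)f(z)\,dz - x\Big(x - \frac{1}{x^2}\Big)f(x) \\
&= \Big(1 + \frac{2}{x^3}\Big)\int_0^x zf(z)\,dz + \int_x^1 \Big(z - \frac{1}{z^2}\Big)f(z)\,dz,
\end{aligned}$$

so

$$\begin{aligned}
3(x^2u'' + 2xu' - 2u) &= x^2\Big[-\frac{6}{x^4}\int_0^x zf(z)\,dz + \Big(1 + \frac{2}{x^3}\Big)xf(x) - \Big(x - \frac{1}{x^2}\Big)f(x)\Big] \\
&\quad + 2x\Big[\Big(1 + \frac{2}{x^3}\Big)\int_0^x zf(z)\,dz + \int_x^1 \Big(z - \frac{1}{z^2}\Big)f(z)\,dz\Big] \\
&\quad - 2\Big[\Big(x - \frac{1}{x^2}\Big)\int_0^x zf(z)\,dz + x\int_x^1 \Big(z - \frac{1}{z^2}\Big)f(z)\,dz\Big] \\
&= \Big(-\frac{6}{x^2} + 2x + \frac{4}{x^2} - 2x + \frac{2}{x^2}\Big)\int_0^x zf(z)\,dz + (2x - 2x)\int_x^1 \Big(z - \frac{1}{z^2}\Big)f(z)\,dz + (x^3 + 2 - x^3 + 1)f(x) \\
&= 3f(x)
\end{aligned}$$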

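and we see that the ODE is indeed solved. What about the BCs? Well, $u(1) = 0$ obviously holds. As alluded to above, the BC at $x = 0$ is subtler. Since $x\int_0^x zf(z)\,dz$ and $x\int_x^1 zf(z)\,dz$ both tend to 0, we see that

$$3\lim_{x\to 0+}u(x) = -\lim_{x\to 0+}\frac{1}{x^2}\int_0^x zf(z)\,dz - \lim_{x\to 0+} x\int_x^1 \frac{f(z)}{z^2}\,dz.$$

If $f$ is smooth, we have $f(z) = f(0) + O(z)$ for small $z$. The first limit is then $f(0)/2$ (since $\frac{1}{x^2}\int_0^x z\,dz = \frac{1}{2}$), and the second is $f(0)$ (since $x\int_x^1 z^{-2}\,dz = 1 - x$, while the $O(z)$ part contributes only $O(x\log x)$), so

$$3\lim_{x\to 0+}u(x) = -\tfrac{1}{2}f(0) - f(0) = -\tfrac{3}{2}f(0),$$

and so we require $f(0) = 0$ to genuinely satisfy the boundary condition at $x = 0$.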
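The behaviour at $x = 0$ can also be seen numerically. In the hedged Python sketch below (the function name `solve_euler` and the Simpson helper are illustrative assumptions), the solution formula for (4) is evaluated for two sources: $f = 1$, for which the formula reduces in closed form to $u(x) = (x - 1)/2$, so that $u(0+) = -\tfrac{1}{2} \ne 0$; and $f(z) = z$, which has $f(0) = 0$ and for which the formula gives $u(x) = \tfrac{1}{3}x\ln x \to 0$. Both closed forms can be checked directly in the ODE.

```python
import math


def simpson(h, a, b, n=4000):
    """Composite Simpson's rule for the integral of h over [a, b] (n must be even)."""
    dx = (b - a) / n
    total = h(a) + h(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * h(a + k * dx)
    return total * dx / 3.0


def solve_euler(f, x):
    """Solution formula for (4): (1/3)[(x - 1/x^2) int_0^x z f dz + x int_x^1 (z - 1/z^2) f dz]."""
    left = (x - 1.0 / x**2) * simpson(lambda z: z * f(z), 0.0, x)
    right = x * simpson(lambda z: (z - 1.0 / z**2) * f(z), x, 1.0)
    return (left + right) / 3.0


# f = 1 (f(0) = 1): the formula gives u(x) = (x - 1)/2, which tends to -1/2, not 0.
# f(z) = z (f(0) = 0): the formula gives u(x) = x ln(x) / 3, which tends to 0.
for x in (0.5, 0.1, 0.05):
    print(x, solve_euler(lambda z: 1.0, x), (x - 1) / 2,
          solve_euler(lambda z: z, x), x * math.log(x) / 3)
```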


4. Boundary conditions, and self-adjoint problems.

The only BCs we have seen so far have been homogeneous (i.e. 0) Dirichlet

(specifying the value of the function) ones; namely u(x0 ) = u(x1 ) = 0. Let’s make this

more general, first by considering an ODE problem with inhomogeneous Dirichlet

BCs:

$$Lu := a_0u'' + a_1u' + a_2u = f, \quad x_0 < x < x_1, \qquad u(x_0) = u_0, \quad u(x_1) = u_1. \qquad (5)$$

Recall that by integration by parts, for functions u and v,

$$(v, Lu) = (L^*v, u) + \big[a_0(vu' - v'u) + (a_1 - a_0')vu\big]_{x_0}^{x_1}.$$

Suppose we find a Green’s function G(x; z) = Gx (z) solving the problem

$$L^*G_x = \delta(z - x), \qquad G_x(x_0) = G_x(x_1) = 0$$

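with the corresponding homogeneous (i.e. 0) BCs to the BCs in problem (5). Then, since $G_x$ vanishes at both endpoints, the boundary terms in $(G_x, Lu) = (L^*G_x, u) + [\dots]_{x_0}^{x_1}$ reduce to $-\big[a_0G_x'u\big]_{x_0}^{x_1}$, and so

$$\begin{aligned}
u(x) = (L^*G_x, u) &= (G_x, Lu) + \big[a_0G_x'u\big]_{x_0}^{x_1} = (G_x, f) + \big[a_0G_x'u\big]_{x_0}^{x_1} \\
&= \int_{x_0}^{x_1} G(x;z)f(z)\,dz + a_0(x_1)G_x'(x_1)u_1 - a_0(x_0)G_x'(x_0)u_0,
\end{aligned}$$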

a formula which gives the solution of problem (5) in terms of the Green’s function

G(x; z), and the “data” (the source term f (x), and the boundary data u0 and u1 ).
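As a hedged check of the boundary-data terms, the Python sketch below takes the simplest case $u'' = f$ on $(0, 1)$ (so $a_0 = 1$) and uses the Green’s function of example (3) with $L = 1$, for which $G_x'(0) = x - 1$ and $G_x'(1) = x$. It evaluates $u(x) = \int_0^1 G(x;z)f(z)\,dz + a_0(x_1)G_x'(x_1)u_1 - a_0(x_0)G_x'(x_0)u_0$. With $f = 0$ this should reproduce the straight line through the boundary values, and with $f = 2$ the exact solution $x^2 + (u_1 - u_0 - 1)x + u_0$. The helper names are our own.

```python
def simpson(h, a, b, n=2000):
    """Composite Simpson's rule for the integral of h over [a, b] (n must be even)."""
    dx = (b - a) / n
    total = h(a) + h(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * h(a + k * dx)
    return total * dx / 3.0


def green(x, z):
    """Green's function of example (3) with L = 1: u'' = f, u(0) = u(1) = 0."""
    return z * (x - 1.0) if z < x else x * (z - 1.0)


def dgreen_dz(x, z):
    """Derivative of green(x, z) with respect to z, away from z = x."""
    return (x - 1.0) if z < x else x


def solve(f, x, u0, u1):
    """u(x) = int_0^1 G f dz + a0(1) G_x'(1) u1 - a0(0) G_x'(0) u0, with a0 = 1."""
    integrand = lambda z: green(x, z) * f(z)
    integral = simpson(integrand, 0.0, x) + simpson(integrand, x, 1.0)
    return integral + dgreen_dz(x, 1.0) * u1 - dgreen_dz(x, 0.0) * u0


# Exact solution of u'' = 2, u(0) = u0, u(1) = u1 is x^2 + (u1 - u0 - 1) x + u0.
u0, u1, x = 1.5, -0.5, 0.4
print(solve(lambda z: 2.0, x, u0, u1), x**2 + (u1 - u0 - 1) * x + u0)
print(solve(lambda z: 0.0, x, u0, u1), (1 - x) * u0 + x * u1)
```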

The other way to generalize boundary conditions is to include the value of the derivative of u (as well as u itself) at the boundary (i.e. the endpoints of the interval).

For example, if $u$ is the temperature along a rod $[x_0, x_1]$ whose ends are insulated, we should impose the Neumann BCs $u'(x_0) = u'(x_1) = 0$ (no heat flux through the ends).

In general, for the following discussion, think of imposing 2 boundary conditions, each of which is a linear combination of $u(x_0)$, $u'(x_0)$, $u(x_1)$, and $u'(x_1)$ equal to 0 (homogeneous case) or some non-zero number (inhomogeneous case).

Definition:

1. A problem

$$Lu = f, \qquad \text{BCs on } u$$

is called (essentially) self-adjoint if

(a) $L = L^*$ (so the operator is self-adjoint), and

(b) $(v, Lu) = (Lv, u)$ (i.e. with no boundary terms) whenever both $u$ and $v$ satisfy the homogeneous BCs corresponding to the BCs on $u$ in the problem.


2. The homogeneous adjoint boundary conditions for a problem

$$Lu = f, \qquad \text{BCs on } u$$

are the BCs on $v$ which guarantee that $(v, Lu) = (L^*v, u)$ (i.e. no boundary terms) whenever $u$ satisfies the homogeneous BCs corresponding to the BCs in the original problem.

Remark: As in the above example, the Green’s function for a self-adjoint problem should satisfy the homogeneous BCs corresponding to the BCs in the original problem. More generally, the Green’s function for a problem should satisfy the homogeneous adjoint boundary conditions.

Remark:

1. these definitions are quite abstract – it is better to see what is going on by doing

some specific examples

2. a problem can be non-self-adjoint even if $L = L^*$, for example (see homework)

$$u'' + q(x)u = f(x), \qquad u'(0) - u(1) = 0, \quad u'(1) = 0$$

3. if $L \ne L^*$, we can make $Lu = f$ self-adjoint by multiplying by a function (again, see homework).

Example: (Sturm-Liouville problem)

$$\begin{cases} Lu := (p(x)u')' + q(x)u = f(x), & 0 < x < 1 \\ \alpha_0u(0) + \beta_0u'(0) = 0 \\ \alpha_1u(1) + \beta_1u'(1) = 0 \end{cases} \qquad (6)$$

where p(x) > 0, and α0 , α1 , β0 , β1 are numbers with α0 , β0 not both 0, and α1 , β1

not both 0.

First notice that L = L∗ (the operator is self-adjoint), and integration by parts (as

usual) gives

$$(v, Lu) = (Lv, u) + \big[p(vu' - v'u)\big]_0^1.$$

If $u$ and $v$ both satisfy the BCs in problem (6) (which are homogeneous) then

$$\alpha_0[v(0)u'(0) - v'(0)u(0)] = u'(0)(-\beta_0v'(0)) - v'(0)(-\beta_0u'(0)) = 0$$

$$\beta_0[v(0)u'(0) - v'(0)u(0)] = v(0)(-\alpha_0u(0)) - u(0)(-\alpha_0v(0)) = 0$$

and so (since $\alpha_0$ and $\beta_0$ are not both 0), $v(0)u'(0) - v'(0)u(0) = 0$. A similar computation shows that $v(1)u'(1) - v'(1)u(1) = 0$. Hence $(v, Lu) = (Lv, u)$ (boundary terms disappear), and the problem is, indeed, self-adjoint.


Thus a Green’s function G(x; z) = Gx (z) for problem (6) should satisfy

$$\begin{cases} LG_x = (p(z)G_x'(z))' + q(z)G_x(z) = \delta(z - x) \\ \alpha_0G_x(0) + \beta_0G_x'(0) = 0 \\ \alpha_1G_x(1) + \beta_1G_x'(1) = 0 \end{cases}.$$

For $z \ne x$, we have $LG_x = 0$, so

$$G_x(z) = \begin{cases} c_0w_0(z), & 0 \le z < x \\ c_1w_1(z), & x < z \le 1 \end{cases}$$

where for $j = 0, 1$, $w_j$ denotes any fixed, non-zero solution of

$$Lw_j = 0, \qquad \alpha_jw_j(j) + \beta_jw_j'(j) = 0$$

(a second-order linear ODE with a single homogeneous condition imposed at one endpoint, so there is a one-dimensional family of such solutions), and $c_0$, $c_1$ are constants to be determined. Continuity of $G$ at $x$ implies

$$c_0w_0(x) = c_1w_1(x).$$

The jump condition at $x$ is (check it!) $p(x)[G_x'(x+) - G_x'(x-)] = 1$, so

$$c_1w_1'(x) - c_0w_0'(x) = \frac{1}{p(x)}.$$

Hence (multiplying by $w_0(x)$ and using continuity to replace $c_0w_0(x)$ by $c_1w_1(x)$)

$$c_1[w_0(x)w_1'(x) - w_1(x)w_0'(x)] = \frac{w_0(x)}{p(x)},$$

which involves the Wronskian

$$W = W[w_0, w_1](x) = w_0(x)w_1'(x) - w_1(x)w_0'(x).$$

Recall that since $w_0$ and $w_1$ satisfy $Lw = 0$,

$$p(x)W[w_0, w_1](x) \equiv \text{constant}.$$

There are two possibilities:

1. If W ≡ 0, then we cannot satisfy the equations for the coefficients above – there

is no Green’s function! In this case, w0 and w1 are linearly dependent, which

means that w0 and w1 are both actually solutions of the homogeneous equation

Lu = 0 satisfying both BCs in (6). We’ll discuss this case more later.


2. Otherwise, $W$ is non-zero everywhere in $(0, 1)$ and so we have

$$c_1 = \frac{w_0(x)}{p(x)W}, \qquad c_0 = \frac{w_1(x)}{p(x)W}$$

and hence a Green’s function

$$G_x(z) = \frac{1}{W[w_0, w_1]\,p(x)}\begin{cases} w_1(x)w_0(z), & 0 \le z < x \\ w_0(x)w_1(z), & x < z \le 1 \end{cases}$$

and a solution to our Sturm-Liouville problem (6)

$$u(x) = \frac{1}{W[w_0, w_1]\,p(x)}\Big[w_1(x)\int_0^x w_0(z)f(z)\,dz + w_0(x)\int_x^1 w_1(z)f(z)\,dz\Big].$$
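The recipe above translates directly into code. The following Python sketch is our own illustration (`make_green` and `solve` are hypothetical names): it builds $G_x(z)$ from any pair $w_0$, $w_1$ and their derivatives, and applies it to the hypothetical example $u'' - u = f$ on $(0, 1)$ with $u(0) = u(1) = 0$, i.e. $p = 1$, $q = -1$, $\alpha_j = 1$, $\beta_j = 0$. There $w_0 = \sinh z$ and $w_1 = \sinh(z - 1)$ satisfy the left and right BCs, $W = \sinh 1$, and for $f = 1$ the exact solution is $u = -1 + \cosh x + B\sinh x$ with $B = (1 - \cosh 1)/\sinh 1$.

```python
import math


def simpson(h, a, b, n=2000):
    """Composite Simpson's rule for the integral of h over [a, b] (n must be even)."""
    dx = (b - a) / n
    total = h(a) + h(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * h(a + k * dx)
    return total * dx / 3.0


def make_green(w0, dw0, w1, dw1, p):
    """Green's function built from w0 (left BC) and w1 (right BC) as in the formula above."""
    def G(x, z):
        pW = p(x) * (w0(x) * dw1(x) - w1(x) * dw0(x))  # p(x) W[w0, w1](x), constant in x
        return (w1(x) * w0(z) if z < x else w0(x) * w1(z)) / pW
    return G


# Hypothetical example: u'' - u = f on (0, 1), u(0) = u(1) = 0 (p = 1, q = -1).
G = make_green(math.sinh, math.cosh,
               lambda z: math.sinh(z - 1.0), lambda z: math.cosh(z - 1.0),
               lambda x: 1.0)


def solve(f, x):
    """u(x) = int_0^1 G(x; z) f(z) dz, split at z = x."""
    integrand = lambda z: G(x, z) * f(z)
    return simpson(integrand, 0.0, x) + simpson(integrand, x, 1.0)


x = 0.4
B = (1.0 - math.cosh(1.0)) / math.sinh(1.0)
exact = -1.0 + math.cosh(x) + B * math.sinh(x)   # solves u'' - u = 1, u(0) = u(1) = 0
print(solve(lambda z: 1.0, x), exact)
print(G(0.2, 0.7), G(0.7, 0.2))                  # reciprocity G(x; z) = G(z; x)
```

Computing $pW$ inside `G` rather than once up front keeps the sketch general; since $pW$ is constant, either choice gives the same result up to rounding.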

Remark:

1. Using the variation of parameters procedure from ODE theory would result in

precisely the same formula.

2. Notice that the Green’s function here (and for all our examples so far) is symmetric in its variables: Gx (z) = G(x; z) = G(z; x) = Gz (x). This is no accident

– rather, it is a general property of Green’s functions for self-adjoint problems.


5. Modified Green’s functions.

We have seen that we run into trouble in constructing a Green’s function for the

Sturm-Liouville problem

$$\begin{cases} Lu := (p(x)u')' + q(x)u = f(x), & 0 < x < 1 \\ \alpha_0u(0) + \beta_0u'(0) = 0 \\ \alpha_1u(1) + \beta_1u'(1) = 0 \end{cases} \qquad (7)$$

(where p > 0, q, and f smooth functions, and α0 , α1 , β0 , β1 numbers with α0 , β0 not

both 0, and α1 , β1 not both 0) if the corresponding homogeneous problem ((7) with

f ≡ 0) has a non-trivial solution u∗ :

$$Lu^* = 0, \qquad \alpha_0u^*(0) + \beta_0u^{*\prime}(0) = 0, \qquad \alpha_1u^*(1) + \beta_1u^{*\prime}(1) = 0. \qquad (8)$$

In fact in this case, a simple integration by parts

$$0 = (u, Lu^*) = (Lu, u^*) = (f, u^*)$$

leads to the solvability condition

$$(f, u^*) = \int_0^1 f(x)u^*(x)\,dx = 0 \qquad (9)$$

which the source term f must satisfy, to have any hope of a solution u. In fact:

Theorem: [Fredholm alternative (for the Sturm-Liouville problem)] Either

1. Problem (7) has exactly one solution; or,

2. There is a non-zero solution u∗ of the corresponding homogeneous problem (8).

In this case, problem (7) has a solution if and only if the solvability condition (9)

holds (and the solution is not unique – you can add any multiple of u∗ to get

another).

Proof:

We’ve already seen that if there is no such u∗ , we have a solution (constructed above

via Green’s function). Also, it is unique, since the difference of any two solutions

would be a solution of the homogeneous problem.

If there is a non-zero u∗ , we’ve seen already that the solvability condition (9) is

required. If it is satisfied, we will show below how to construct a solution u using a

modified Green’s function. So suppose now that u∗ is a non-zero solution to (8), and that the solvability condition (9) on f holds. A function G̃(x; z) = G̃x (z) satisfying

$$L\tilde G_x(z) = \delta(z - x) + c(x)u^*(z), \qquad \text{same BCs on } \tilde G_x \text{ as in (7)}$$

is called a modified Green’s function. Notice that we can choose the “constant”

c(x) here so that the solvability condition

$$0 = (\tilde G_x, Lu^*) = (L\tilde G_x, u^*) = (\delta_x + c(x)u^*, u^*) = u^*(x) + c(x)(u^*, u^*)$$

holds – namely $c(x) = -u^*(x)/(u^*, u^*)$. This allows the problem for $\tilde G_x$ to be solved (though we won’t do it here in general – you can use the variation of parameters method to do it). Given such a $\tilde G$, if we have a solution $u$ of (7), then

$$u(x) = (\delta_x, u) = (L\tilde G_x - c(x)u^*, u) = (\tilde G_x, f) + \frac{(u^*, u)}{(u^*, u^*)}\,u^*(x)$$

is a solution formula for u in terms of f . Note that the constant in front of u∗ doesn’t

matter – remember we can add any multiple of u∗ and still have a solution. In fact

using reciprocity (G̃ is symmetric in x and z), we can check this formula indeed solves

problem (7):

$$Lu = (L_x\tilde G_z(x), f) = (\delta_z(x), f) + (c(z)u^*(x), f) = (\delta_x(z), f) - \frac{u^*(x)}{(u^*, u^*)}(u^*(z), f) = f(x)$$

(the inner products here are taken in the variable $z$)

where we had to use (of course!) the solvability condition (9) on f . Similarly, the

boundary conditions hold.

Hopefully an example will clarify the use of modified Green’s functions.

Example: Solve

$$u''(x) = f(x), \quad 0 < x < 1, \qquad u'(0) = u'(1) = 0.$$

Obviously u∗ (x) ≡ 1 solves the corresponding homogeneous problem, leading to the

solvability condition

$$0 = (f, u^*) = \int_0^1 f(x)\,dx$$

(physically: there is no steady-state temperature distribution in a uniform rod with

insulated ends unless the (spatial) average heat source is zero).

Noticing that $(1, 1) = \int_0^1 1\,dx = 1$, the modified Green’s function $\tilde G_x(z)$ should solve

$$\tilde G_x'' = \delta_x(z) - 1, \qquad \tilde G_x'(0) = \tilde G_x'(1) = 0,$$

leading (since $\tilde G'' = -1 \implies \tilde G = -z^2/2 + c_1z + c_2$, and using the BCs) to

$$\tilde G_x(z) = \begin{cases} -z^2/2 + A, & 0 \le z < x \\ -z^2/2 + z + B, & x < z \le 1 \end{cases}.$$

Continuity at $x$ requires $A = x + B$. The jump condition $\tilde G_x'\big|_{x-}^{x+} = 1$ already holds. (This leaves one free parameter, which is characteristic of modified Green’s function problems, since a multiple of $u^*$ (in this case a constant) can always be added.) So, assuming the solvability condition $\int_0^1 f(x)\,dx = 0$ holds, we arrive at a formula for the general solution

$$\begin{aligned}
u(x) &= (\tilde G_x, f) + C \\
&= -\frac{1}{2}\int_0^1 z^2f(z)\,dz + (B + x)\int_0^x f(z)\,dz + B\int_x^1 f(z)\,dz + \int_x^1 zf(z)\,dz + C \\
&= x\int_0^x f(z)\,dz + \int_x^1 zf(z)\,dz + C
\end{aligned}$$

where we simplified using the solvability condition on $f$, and we absorbed $-\frac{1}{2}\int_0^1 z^2f(z)\,dz$ into the general constant $C$. It is very easy to check that this expression solves our

original problem (of course, we could have solved this particular problem just by

integrating).
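A quick numerical check of this example, as a hedged Python sketch: with the free constant set to $B = 0$ (an arbitrary choice) and the hypothetical source $f(z) = \cos(\pi z)$, which satisfies $\int_0^1 f = 0$, the pairing $(\tilde G_x, f)$ should differ from the particular solution $-\cos(\pi x)/\pi^2$ of $u'' = f$, $u'(0) = u'(1) = 0$, by an amount independent of $x$ (for this $f$ the offset happens to be 0). The helpers `modified_green`, `pair` and `simpson` are our own names.

```python
import math


def simpson(h, a, b, n=2000):
    """Composite Simpson's rule for the integral of h over [a, b] (n must be even)."""
    dx = (b - a) / n
    total = h(a) + h(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * h(a + k * dx)
    return total * dx / 3.0


def modified_green(x, z, B=0.0):
    """G~_x(z) for u'' = f, u'(0) = u'(1) = 0; A = x + B by continuity, B is free."""
    return -z * z / 2.0 + x + B if z < x else -z * z / 2.0 + z + B


def pair(x, f, B=0.0):
    """(G~_x, f), split at the kink z = x."""
    integrand = lambda z: modified_green(x, z, B) * f(z)
    return simpson(integrand, 0.0, x) + simpson(integrand, x, 1.0)


f = lambda z: math.cos(math.pi * z)                           # int_0^1 f dz = 0
particular = lambda x: -math.cos(math.pi * x) / math.pi**2    # u'' = f, u'(0) = u'(1) = 0

offsets = [pair(x, f) - particular(x) for x in (0.1, 0.3, 0.8)]
print(offsets)   # expected to agree with one another up to quadrature error
```

Changing `B` adds $B\int_0^1 f\,dz$ to the pairing, which vanishes exactly when the solvability condition holds.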

Remark: So far in this section we discussed the issues of solvability conditions, modified Green’s functions, and so on, only for self-adjoint problems. For a general problem, the obstacle to solvability comes from considering the homogeneous adjoint problem:

$$L^*u^* = 0, \qquad \text{hom. adj. BCs on } u^*.$$

If this problem has a non-trivial solution $u^*$, then the solvability condition for $Lu = f$ with homogeneous BCs is

$$0 = (u, L^*u^*) = (Lu, u^*) = (f, u^*).$$

One can also construct a modified Green’s function in this case, but we won’t go into it here.

6. Green’s functions and eigenfunction expansion.

Consider again the Sturm-Liouville problem (with homogeneous BCs)

$$Lu := (p(x)u')' + q(x)u = f(x), \qquad x_0 < x < x_1 \qquad (10)$$

$$\alpha_0u(x_0) + \beta_0u'(x_0) = 0, \qquad \alpha_1u(x_1) + \beta_1u'(x_1) = 0 \qquad \text{(BC)}$$

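($p > 0$, $q$, and $f$ smooth functions). Recall that the Sturm-Liouville problem has eigenvalues

$$\lambda_0 > \lambda_1 > \lambda_2 > \lambda_3 > \cdots \to -\infty$$

(with our sign convention $Lu = (pu')' + qu$ the eigenvalues decrease, just as the eigenvalues $-n^2\pi^2/L^2$ of $d^2/dx^2$ in the introductory example do)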

and corresponding eigenfunctions φj (x) such that

$$L\phi_j = \lambda_j\phi_j, \qquad \phi_j \text{ satisfies (BC)}$$

which can be taken orthonormal:

$$(\phi_j, \phi_k) = \delta_{jk} = \begin{cases} 1, & j = k \\ 0, & j \ne k \end{cases}.$$

Furthermore, the eigenfunctions are complete, meaning that “any” function g(x) on

[x0 , x1 ] can be expanded

$$g(x) = \sum_{j=0}^{\infty} c_j\phi_j(x), \qquad c_j = (\phi_j, g) = \text{“Fourier coefficient”}.$$

To be more precise, this series does not (in general) converge at every point $x$ in $[x_0, x_1]$ (for example, with Dirichlet conditions every $\phi_j$ vanishes at the endpoints, so the series cannot converge to $g$ there unless $g$ does too!), but rather converges in the “$L^2$-sense”: provided $\int_{x_0}^{x_1} g^2(x)\,dx < \infty$,

$$\lim_{N\to\infty}\int_{x_0}^{x_1}\Big[g(x) - \sum_{j=0}^{N} c_j\phi_j(x)\Big]^2\,dx = 0.$$

So let’s express the Green’s function G(x; z) = Gx (z) for problem (10) as such an

eigenfunction expansion

$$G(x;z) = G_x(z) = \sum_{j=0}^{\infty} c_j(x)\phi_j(z)$$

and try to find the coefficients cj (x). Using LGx = δx , and applying the linear

operator L to the expression for Gx , we find

$$\delta_x = LG_x = \sum_{j=0}^{\infty} c_j(x)(L\phi_j)(z) = \sum_{j=0}^{\infty} c_j(x)\lambda_j\phi_j(z)$$

and so the coefficients cj (x)λj of this expansion should satisfy

$$c_j(x)\lambda_j = (\phi_j, \delta_x) = \phi_j(x),$$

and hence we arrive at an expression for the Green’s function as an eigenfunction

expansion:

$$G_x(z) = \sum_{j=0}^{\infty}\frac{1}{\lambda_j}\phi_j(x)\phi_j(z).$$
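For the simplest case $L = d^2/dx^2$ on $(0, 1)$ with $u(0) = u(1) = 0$, the orthonormal eigenfunctions are $\phi_j(z) = \sqrt{2}\sin((j+1)\pi z)$ with $\lambda_j = -((j+1)\pi)^2$, so the expansion can be compared with the closed-form $G_x(z)$ from example (3) with $L = 1$. The Python sketch below does this, with hypothetical helper names and truncation after `n_terms` terms as an assumption. The neglected tail is bounded by $\sum_{n > N} 2/(n^2\pi^2) \approx 2/(\pi^2 N)$, so agreement is only to about $10^{-4}$ for $N = 2000$.

```python
import math


def green_series(x, z, n_terms=2000):
    """Truncated sum over j of phi_j(x) phi_j(z) / lambda_j for d^2/dz^2 on (0, 1),
    u(0) = u(1) = 0, with phi_j = sqrt(2) sin((j+1) pi z), lambda_j = -((j+1) pi)^2."""
    total = 0.0
    for n in range(1, n_terms + 1):   # n = j + 1
        lam = -(n * math.pi) ** 2
        total += 2.0 * math.sin(n * math.pi * x) * math.sin(n * math.pi * z) / lam
    return total


def green_closed(x, z):
    """Closed-form Green's function from example (3) with L = 1."""
    return z * (x - 1.0) if z < x else x * (z - 1.0)


for x, z in [(0.3, 0.6), (0.5, 0.5), (0.8, 0.1)]:
    print(x, z, green_series(x, z), green_closed(x, z))
```

The slow $1/N$ convergence reflects the kink of $G_x$ at $z = x$: its sine coefficients decay only like $1/n^2$.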

Remark:

1. The reciprocity (symmetry in x and z of G) is very clearly displayed by this

formula.

2. If, for some j, λj = 0, this expression is ill-defined. Indeed, in this case, the

eigenfunction φj is a non-trivial solution to the homogeneous problem (i.e. it is

a “u∗ ”), and we have already seen there is no (usual) Green’s function in this

case.

3. The corresponding solution formula for problem (10) is

$$
u(x) = (G_x, f) = \int_{x_0}^{x_1} \sum_{j=0}^{\infty} \frac{1}{\lambda_j}\phi_j(x)\phi_j(z) f(z)\,dz = \sum_{j=0}^{\infty} \frac{1}{\lambda_j}\phi_j(x)\,(\phi_j, f).
$$

Notice that if $\lambda_j = 0$ (for some $j$), the only way for this expression to make sense is if also $(\phi_j, f) = 0$, which is exactly the solvability condition on $f$ we have seen earlier.
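A quick numerical check of the eigenfunction expansion, as a minimal sketch. The example is our own choice, not taken from the notes: $p \equiv 1$, $q \equiv 0$ on $[0, \pi]$ with $u(0) = u(\pi) = 0$, so $Lu = u''$, the orthonormal eigenfunctions are $\phi_j(z) = \sqrt{2/\pi}\,\sin(jz)$ with $\lambda_j = -j^2$ (indexed from $j = 1$ here), and no eigenvalue vanishes. In this case the Green’s function is also known in closed form, $G(x;z) = -\min(x,z)\,(\pi - \max(x,z))/\pi$, so the truncated series can be compared against it. The function names, truncation levels and the midpoint-rule quadrature are illustrative assumptions.

```python
import numpy as np

def phi(j, z):
    """Orthonormal Dirichlet eigenfunctions of d^2/dz^2 on [0, pi]."""
    return np.sqrt(2.0 / np.pi) * np.sin(j * z)

def green_series(x, z, n_terms=2000):
    """Truncated expansion G(x; z) = sum_j phi_j(x) phi_j(z) / lambda_j, lambda_j = -j^2."""
    j = np.arange(1, n_terms + 1)
    lam = -(j.astype(float) ** 2)
    return float(np.sum(phi(j, x) * phi(j, z) / lam))

def green_exact(x, z):
    """Closed form for L u = u'' with u(0) = u(pi) = 0 (dG/dz jumps by 1 at z = x)."""
    lo, hi = min(x, z), max(x, z)
    return -lo * (np.pi - hi) / np.pi

def solve_by_expansion(f, x, n_terms=200, n_quad=4000):
    """u(x) = sum_j phi_j(x) (phi_j, f) / lambda_j, with (phi_j, f) by the midpoint rule."""
    z = (np.arange(n_quad) + 0.5) * (np.pi / n_quad)
    dz = np.pi / n_quad
    u = 0.0
    for j in range(1, n_terms + 1):
        coeff = np.sum(phi(j, z) * f(z)) * dz      # Fourier coefficient (phi_j, f)
        u += phi(j, x) * coeff / (-(j ** 2))
    return float(u)

print(green_series(1.0, 2.0), green_exact(1.0, 2.0))
# u'' = 1 with u(0) = u(pi) = 0 has the exact solution u = x (x - pi) / 2
print(solve_by_expansion(lambda z: np.ones_like(z), 1.0), 1.0 * (1.0 - np.pi) / 2)
```

The symmetry of the summand in $x$ and $z$ makes the reciprocity of Remark 1 visible in the code as well: swapping the two arguments of `green_series` gives the same sum.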

III. GREEN’S FUNCTIONS FOR ELLIPTIC (STEADY-STATE) PROBLEMS

We turn now from ODEs to PDEs (partial differential equations).

1. Green’s functions for the Poisson equation.

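As a motivating example, consider the electrostatic potential $u(x)$ in a uniformly conducting two-dimensional (or three-dimensional) region $D \subset \mathbb{R}^2$ (or $D \subset \mathbb{R}^3$), which satisfies

$$
\begin{cases}
\Delta u = f(x), & x \in D \quad \text{(Poisson equation)} \\
u = g(x), & x \in S = \partial D
\end{cases}
\tag{11}
$$

where $f(x)$ is the charge density on the region $D$, $g(x)$ is the potential applied on the boundary $S = \partial D$ of $D$, and

$$
\Delta u := \frac{\partial^2 u}{\partial x_1^2} + \frac{\partial^2 u}{\partial x_2^2} \; \Big( + \frac{\partial^2 u}{\partial x_3^2} \Big)
$$

is the Laplacian of $u$ (the last term is present only in three dimensions). (Notice also our notation for vectors in $\mathbb{R}^2$ and $\mathbb{R}^3$: $x = (x_1, x_2)$ (or $x = (x_1, x_2, x_3)$).) The Poisson problem (11) arises in other physical contexts as well, for example: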

• u(x) is the steady-state temperature distribution inside the (uniform) region

D subject to heat source f (x) and with the temperature fixed at g(x) on the

boundary

• u is the steady-state (small) deformation of an elastic membrane/solid from its

reference position D, when it is subject to applied force f (x) and held at fixed

deformation g(x) on the boundary.

If we are looking to solve problem (11) by finding a Green’s function $G(x;y) = G_x(y)$ ($x, y \in D$), then by analogy with the self-adjoint ODE problems studied earlier, we might seek $G$ which solves

$$
\begin{cases}
\Delta G_x(y) = \delta_x(y), & y \in D \\
G_x(y) = 0, & y \in S
\end{cases}
\tag{12}
$$

where here $\delta_x(y) = \delta(y - x)$ is a 2- or 3-dimensional delta function centred at $x$, defined in the same way as in 1 dimension: for a test function $\phi(x)$,

$$
(\delta_x, \phi) = \text{“}\int_{\mathbb{R}^{2 \text{ or } 3}} \delta_x(y)\,\phi(y)\,dy\text{”} = \phi(x)
$$

(one can think of $\delta(y - x) = \delta(y_1 - x_1)\,\delta(y_2 - x_2)$ in 2 dimensions, and $\delta(y - x) = \delta(y_1 - x_1)\,\delta(y_2 - x_2)\,\delta(y_3 - x_3)$ in 3 dimensions).

Supposing we can find a Green’s function solving (12), we would like to derive a formula for the solution $u$ of (11) in terms of $G$. To do this, we will use a simple “integration by parts” formula, for which we need to define the normal derivative of a function $u$ at the boundary $S = \partial D$:

$$
\frac{\partial u}{\partial n} := \hat n \cdot \nabla u
$$

where $\hat n$ denotes the outward unit normal vector to the curve/surface $S$.

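Lemma 1 (Green’s second identity) Let $D \subset \mathbb{R}^2$ or $\mathbb{R}^3$ be bounded by a smooth curve/surface $S$. Then if $u_1$ and $u_2$ are twice continuously differentiable functions on $\bar D = D \cup S$,

$$
\int_D (u_1 \Delta u_2 - u_2 \Delta u_1)\,dV = \int_S \left( u_1 \frac{\partial u_2}{\partial n} - u_2 \frac{\partial u_1}{\partial n} \right) dS.
\tag{13}
$$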

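Proof: subtracting the relations

$$
\nabla \cdot (u_1 \nabla u_2) = \nabla u_1 \cdot \nabla u_2 + u_1 \Delta u_2, \qquad
\nabla \cdot (u_2 \nabla u_1) = \nabla u_1 \cdot \nabla u_2 + u_2 \Delta u_1
$$

and using the divergence theorem, we find

$$
\int_D (u_1 \Delta u_2 - u_2 \Delta u_1)\,dV = \int_S \hat n \cdot (u_1 \nabla u_2 - u_2 \nabla u_1)\,dS = \int_S \left( u_1 \frac{\partial u_2}{\partial n} - u_2 \frac{\partial u_1}{\partial n} \right) dS.
$$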

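Taking $u_1 = u$ (solution of (11)) and $u_2 = G_x$ (solution of (12)) in (13), and using $G_x = 0$ on $S$, we find

$$
\int_D \big( u(y)\,\delta_x(y) - G_x(y)\,f(y) \big)\,dy = \int_S g(y)\,\frac{\partial G_x}{\partial n}\,dS(y)
$$

or

$$
u(x) = \int_D G(x;y)\,f(y)\,dy + \int_S \frac{\partial G(x;y)}{\partial n(y)}\,g(y)\,dS(y)
\tag{14}
$$

which is the solution formula we sought.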

Free-space Green’s functions. For a general region $D$, we have no chance of finding an explicit Green’s function solving (12). One case we can easily solve explicitly is the whole space $D = \mathbb{R}^2$ or $D = \mathbb{R}^3$, where there is no boundary at all.

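• $\mathbb{R}^2$: for a given $x \in \mathbb{R}^2$, we want to find $G_x(y)$ solving $\Delta G_x(y) = \delta(y - x)$. It is reasonable to try a $G$ which depends only on the distance from the singularity: $G = h(r)$, $r := |y - x|$. So for $y \neq x$ ($r > 0$), we need

$$
0 = \Delta G = \left( \frac{\partial^2}{\partial r^2} + \frac{1}{r}\frac{\partial}{\partial r} \right) h(r) = \frac{1}{r}\big( r h'(r) \big)'
$$

and so

$$
h' = c_1 / r \quad \Longrightarrow \quad h = c_1 \log r + c_2
$$

for some constants $c_1$ and $c_2$. Including $c_2$ simply adds an overall constant to the Green’s function, so we will drop it: $c_2 = 0$. The constant $c_1$ is determined by the condition $\Delta G_x = \delta_x$: for any $\varepsilon > 0$, we have, by the divergence theorem,

$$
\begin{aligned}
1 &= \int_{|y-x| \le \varepsilon} \delta_x(y)\,dy = \int_{|y-x| \le \varepsilon} \nabla \cdot \nabla G_x(y)\,dy = \int_{|y-x| = \varepsilon} \nabla G_x(y) \cdot \hat n\,dS(y) \\
&= \int_{|y-x| = \varepsilon} \frac{c_1 (y - x)}{|y - x|^2} \cdot \frac{y - x}{|y - x|}\,dS(y) = \frac{c_1}{\varepsilon}\,2\pi\varepsilon = 2\pi c_1
\end{aligned}
$$

and so $c_1 = (2\pi)^{-1}$, and our expression for the two-dimensional free-space Green’s function is

$$
G_{\mathbb{R}^2}(x;y) = \frac{1}{2\pi} \log |y - x|.
$$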

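• $\mathbb{R}^3$: we play the same game in three dimensions. Try $G_x(y) = h(r)$, $r := |y - x|$, so for $r > 0$

$$
0 = \Delta G_x = \left( \frac{\partial^2}{\partial r^2} + \frac{2}{r}\frac{\partial}{\partial r} \right) h(r) = \frac{1}{r^2}\big( r^2 h'(r) \big)'
$$

and so

$$
h' = c_1 / r^2 \quad \Longrightarrow \quad h = -c_1 / r + c_2
$$

and again we set $c_2 = 0$. The constant $c_1$ is determined by

$$
\begin{aligned}
1 &= \int_{|y-x| \le \varepsilon} \delta_x(y)\,dy = \int_{|y-x| \le \varepsilon} \nabla \cdot \nabla G_x(y)\,dy = \int_{|y-x| = \varepsilon} \nabla G_x(y) \cdot \hat n\,dS(y) \\
&= \int_{|y-x| = \varepsilon} c_1 \frac{y - x}{|y - x|^3} \cdot \frac{y - x}{|y - x|}\,dS(y) = \frac{c_1}{\varepsilon^2}\,4\pi\varepsilon^2 = 4\pi c_1
\end{aligned}
$$

and so $c_1 = (4\pi)^{-1}$, and our expression for the three-dimensional free-space Green’s function is

$$
G_{\mathbb{R}^3}(x;y) = \frac{-1}{4\pi |y - x|}.
$$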
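The two conditions that fixed $c_1$ (harmonic away from $x$, unit flux of $\nabla G_x$ through a small circle or sphere) can be checked numerically. Below is a minimal NumPy sketch under our own assumptions: the flux integrals use the trapezoid rule in the angle (2D) and a Gauss-Legendre rule in $\cos\theta$ times the trapezoid rule in $\varphi$ (3D), and harmonicity is tested with a central-difference Laplacian at a point away from the singularity. All names and parameters are illustrative.

```python
import numpy as np

def grad_G2(x, y):
    """Gradient in y of G(x; y) = log|y - x| / (2 pi), for points y of shape (..., 2)."""
    d = y - x
    return d / (2 * np.pi * np.sum(d**2, axis=-1, keepdims=True))

def grad_G3(x, y):
    """Gradient in y of G(x; y) = -1 / (4 pi |y - x|), for points y of shape (..., 3)."""
    d = y - x
    r = np.linalg.norm(d, axis=-1, keepdims=True)
    return d / (4 * np.pi * r**3)

def flux_circle(x, eps, n=400):
    """Integral of grad G . n over |y - x| = eps (trapezoid rule in the angle)."""
    t = 2 * np.pi * np.arange(n) / n
    normal = np.stack([np.cos(t), np.sin(t)], axis=-1)
    flux = np.sum(grad_G2(x, x + eps * normal) * normal, axis=-1)
    return float(np.sum(flux) * eps * 2 * np.pi / n)       # dS = eps dt

def flux_sphere(x, eps, n_mu=32, n_phi=64):
    """Same on |y - x| = eps in R^3: Gauss-Legendre in cos(theta), trapezoid in phi."""
    mu, w = np.polynomial.legendre.leggauss(n_mu)
    phi = 2 * np.pi * np.arange(n_phi) / n_phi
    MU, PHI = np.meshgrid(mu, phi, indexing="ij")
    s = np.sqrt(1 - MU**2)
    normal = np.stack([s * np.cos(PHI), s * np.sin(PHI), MU], axis=-1)
    flux = np.sum(grad_G3(x, x + eps * normal) * normal, axis=-1)
    weights = w[:, None] * (2 * np.pi / n_phi) * eps**2     # dS = eps^2 d(mu) d(phi)
    return float(np.sum(weights * flux))

def laplacian_fd(G, y, h=1e-4):
    """Central-difference Laplacian of a scalar function G at the point y."""
    total = 0.0
    for k in range(len(y)):
        e = np.zeros_like(y)
        e[k] = h
        total += (G(y + e) - 2 * G(y) + G(y - e)) / h**2
    return total

x2, x3 = np.array([0.3, -0.2]), np.array([0.1, 0.2, 0.3])
print(flux_circle(x2, 0.1), flux_sphere(x3, 0.05))        # both close to 1
G3 = lambda y: -1.0 / (4 * np.pi * np.linalg.norm(y - x3))
print(laplacian_fd(G3, np.array([1.0, 0.5, -0.4])))       # close to 0 away from y = x
```

Because the integrands are constant on the circle and the sphere, the flux comes out as 1 for any radius $\varepsilon$, matching the fact that the computation above did not depend on $\varepsilon$.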


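Knowing the free-space Green’s functions, we are led immediately to solution formulas for the Poisson equation $\Delta u(x) = f(x)$ in $\mathbb{R}^2$ and $\mathbb{R}^3$:

$$
u(x) = \begin{cases}
\dfrac{1}{2\pi} \displaystyle\int_{\mathbb{R}^2} \log|x - y|\,f(y)\,dy, & \mathbb{R}^2 \\[2ex]
-\dfrac{1}{4\pi} \displaystyle\int_{\mathbb{R}^3} \frac{f(y)}{|x - y|}\,dy, & \mathbb{R}^3.
\end{cases}
\tag{15}
$$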

The following theorem makes precise the claim that these formulas indeed solve Poisson’s equation:

Theorem: Let f (x) be a twice continuously differentiable function on R2 (respectively

R3 ) with compact support (i.e. it vanishes outside of some ball). Then u(x) defined

by (15) is a twice continuously differentiable function, and ∆u = f (x) for all x.

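Proof: we’ll do the $\mathbb{R}^3$ case (the $\mathbb{R}^2$ case is analogous). Notice first that by the change of variable $y \mapsto x - y$ in the integral,

$$
u(x) = -\frac{1}{4\pi} \int_{\mathbb{R}^3} \frac{f(y)}{|x - y|}\,dy = -\frac{1}{4\pi} \int_{\mathbb{R}^3} \frac{f(x - y)}{|y|}\,dy.
$$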

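If $\hat e_j$ denotes the $j$-th standard basis vector, then

$$
\frac{u(x + h\hat e_j) - u(x)}{h} = -\frac{1}{4\pi} \int_{\mathbb{R}^3} \frac{f(x + h\hat e_j - y) - f(x - y)}{h}\,\frac{dy}{|y|},
$$

and since $\frac{f(x + h\hat e_j - y) - f(x - y)}{h} \to \frac{\partial f}{\partial x_j}(x - y)$ as $h \to 0$ uniformly in $y$ (since $f$ is continuously differentiable and compactly supported),

$$
\frac{\partial u}{\partial x_j}(x) = \lim_{h \to 0} \frac{u(x + h\hat e_j) - u(x)}{h} = -\frac{1}{4\pi} \int_{\mathbb{R}^3} \frac{\partial f}{\partial x_j}(x - y)\,\frac{dy}{|y|}.
$$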

Similarly,

$$
\frac{\partial^2 u}{\partial x_j \partial x_k}(x) = -\frac{1}{4\pi} \int_{\mathbb{R}^3} \frac{\partial^2 f}{\partial x_j \partial x_k}(x - y)\,\frac{dy}{|y|}
$$

which is a continuous function of $x$ (again, because $f$ is twice continuously differentiable and compactly supported); hence $u$ is twice continuously differentiable. Now, to find $\Delta u$ we want to “integrate by parts” twice. But the Green’s function $1/|y|$ is not twice continuously differentiable (indeed not even continuous) at $y = 0$, so to do this legally, we remove a small neighbourhood of the origin: for $\varepsilon > 0$,

$$
\Delta u(x) = -\frac{1}{4\pi} \int_{|y| > \varepsilon} \Delta f(x - y)\,\frac{dy}{|y|} - \frac{1}{4\pi} \int_{|y| \le \varepsilon} \Delta f(x - y)\,\frac{dy}{|y|} =: A + B.
$$

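Now,

$$
|B| \le \frac{1}{4\pi} \max_x |\Delta f(x)| \int_{|y| \le \varepsilon} \frac{dy}{|y|} = \frac{1}{4\pi} \max_x |\Delta f(x)| \cdot 4\pi \int_0^{\varepsilon} r\,dr = \frac{\varepsilon^2}{2} \max_x |\Delta f(x)| \to 0 \text{ as } \varepsilon \to 0
$$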

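($\Delta f$ is bounded because it is continuous and compactly supported). For the other term, we apply Green’s second identity on the domain $\{|y| > \varepsilon\}$, and use the fact that $\Delta(1/|y|) = 0$ for $|y| > 0$ to find

$$
A = \frac{1}{4\pi} \int_{|y| = \varepsilon} \left[ \frac{\partial}{\partial n}\Big(\frac{1}{|y|}\Big) f(x - y) - \frac{1}{|y|} \frac{\partial}{\partial n} f(x - y) \right] dS(y) =: C + D,
$$

where the normal derivative is taken with respect to the outward normal of $\{|y| > \varepsilon\}$, i.e. $\hat n = -y/|y|$ on $|y| = \varepsilon$ (there is no contribution from infinity, since $f$ has compact support).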

For the second term,

$$
|D| \le \frac{1}{4\pi} \max |\nabla f| \int_{|y| = \varepsilon} \frac{1}{|y|}\,dS(y) = \frac{1}{4\pi} \max |\nabla f| \cdot \frac{1}{\varepsilon} \cdot 4\pi\varepsilon^2 \to 0 \text{ as } \varepsilon \to 0.
$$

Finally, using $\frac{\partial}{\partial n}\frac{1}{|y|} = \frac{1}{|y|^2}$ on $|y| = \varepsilon$,

$$
C = \frac{1}{4\pi} \int_{|y| = \varepsilon} \frac{1}{|y|^2} f(x - y)\,dS(y) = \frac{1}{4\pi\varepsilon^2} \int_{|y| = \varepsilon} f(x - y)\,dS(y) = f(x - y^*)
$$

for some $y^*$ with $|y^*| = \varepsilon$, by the mean-value theorem for integrals. So as $\varepsilon \to 0$, $y^* \to 0$, and so sending $\varepsilon \to 0$ in all terms yields $\Delta u(x) = f(x)$, as required.

Remark:

1. for the above theorem to hold true, we don’t really need $f$ to be compactly supported (just with enough spatial decay so that the integral defining $u$ makes sense), or twice continuously differentiable (actually, $f$ just needs to be a “little better than continuous”, although just continuous is not quite enough)

2. the free space Green’s functions are not just useful for solving Poisson’s equation

on R2 or R3 , but in fact play a key role in boundary value problems – as we will

soon see

3. a physical interpretation: the three-dimensional free-space Green’s function

−(4π|x − y|)−1 is the electrostatic potential generated by a point charge located

at x (i.e. the Coulomb potential).
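As a numerical illustration of the theorem, here is a minimal SciPy sketch for the radial source $f(x) = e^{-|x|^2}$ in $\mathbb{R}^3$ (our own example; in the spirit of Remark 1 it decays rapidly but is not compactly supported). It evaluates the $\mathbb{R}^3$ formula in (15) using one extra fact not derived in these notes, Newton’s shell theorem: the average of $1/|x - y|$ over the sphere $|y| = s$ equals $1/\max(|x|, s)$. For radial $f$ this reduces (15) to $u(r) = -\frac{1}{r}\int_0^r f(s)s^2\,ds - \int_r^\infty f(s)\,s\,ds$, and the script checks $\Delta u = u'' + \frac{2}{r}u' \approx f$ by central differences. The step size $h$, the quadrature tolerances and the names are illustrative choices.

```python
import math
from scipy.integrate import quad

def f(s):
    """Radial source f(|x|) = exp(-|x|^2): decays rapidly but is not compactly supported."""
    return math.exp(-s * s)

def newton_potential(r):
    """u = -(1/4 pi) int f(y)/|x - y| dy at |x| = r > 0, reduced via the shell theorem:
    u(r) = -(1/r) int_0^r f(s) s^2 ds - int_r^inf f(s) s ds."""
    inner, _ = quad(lambda s: f(s) * s**2, 0.0, r, epsabs=1e-12, epsrel=1e-12)
    outer, _ = quad(lambda s: f(s) * s, r, math.inf, epsabs=1e-12, epsrel=1e-12)
    return -inner / r - outer

def radial_laplacian(func, r, h=1e-3):
    """Delta u = u'' + (2/r) u' for a radial function, by central differences."""
    d2 = (func(r + h) - 2.0 * func(r) + func(r - h)) / h**2
    d1 = (func(r + h) - func(r - h)) / (2.0 * h)
    return d2 + 2.0 * d1 / r

for r in (0.5, 1.0, 2.0):
    print(r, radial_laplacian(newton_potential, r), f(r))   # the last two columns agree
```

For this particular $f$ the integrals can also be done by hand, giving $u(r) = -\sqrt{\pi}\,\mathrm{erf}(r)/(4r)$, which is a convenient independent check of the quadrature.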


2. The method of images.

Example: Solve the boundary value problem for Laplace’s equation on the half-plane $\{ (x_1, x_2) \mid x_2 > 0 \}$ by the Green’s function method:

$$
\begin{cases}
\Delta u = 0, & x_2 > 0 \\
u(x_1, 0) = g(x_1), & x_2 = 0.
\end{cases}
\tag{16}
$$

The corresponding Green’s function problem is: for $x$ with $x_2 > 0$,

$$
\begin{cases}
\Delta G_x(y) = \delta_x(y), & y_2 > 0 \\
G_x(y) \equiv 0, & y_2 = 0.
\end{cases}
$$

We know that the two-dimensional free-space Green’s function $G^f(x;y) = \log|y - x|/2\pi$ will generate the right delta function at $x$, but most certainly does not satisfy the boundary conditions. The idea of the method of images is to balance $G^f(x;y)$ with other copies of $G^f$ with singularities at different points in hopes of satisfying the boundary conditions. For this problem, the geometry suggests placing an “image charge” (the name comes from the interpretation of free-space Green’s functions as potentials generated by point electric charges) at $\bar x := (x_1, -x_2)$, the reflection of $x$ about the $x_1$ axis. So we set

$$
G_x(y) := G^f(x;y) - G^f(\bar x;y) = \frac{1}{2\pi}\big( \log|y - x| - \log|y - \bar x| \big).
$$

Notice that

$$
y_2 = 0 \implies |y - x| = |y - \bar x| \implies G_x(y) = 0,
$$

so we have satisfied the boundary conditions. But have we satisfied the right PDE? Yes, since for $y$ with $y_2 > 0$,

$$
\Delta G_x(y) = \delta(y - x) - \delta(y - \bar x) = \delta(y - x)
$$

since the singularity introduced at $\bar x$ lies outside the domain, and so does not contribute. So we have our Green’s function. To write the corresponding solution formula, we need to compute its normal derivative on the boundary $y_2 = 0$: for $y$ with $y_2 = 0$ (using $|y - x| = |y - \bar x|$),

$$
\nabla G_x(y) = \frac{1}{2\pi |y - x|^2}\big( y - x - (y - \bar x) \big) = \frac{1}{2\pi |y - x|^2}(\bar x - x)
$$

and since the outward unit normal on the boundary is $\hat n = (0, -1)$,

$$
\frac{\partial}{\partial n} G_x(y) = \frac{1}{2\pi |y - x|^2}(x_2 - \bar x_2) = \frac{x_2}{\pi\big( (y_1 - x_1)^2 + x_2^2 \big)}.
$$

Hence our formula for the solution of problem (16) is

$$
u(x) = \frac{x_2}{\pi} \int_{-\infty}^{\infty} \frac{g(y_1)}{(y_1 - x_1)^2 + x_2^2}\,dy_1.
$$

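Now, does this expression really yield a solution to problem (16)? For $x_2 > 0$, the function $x_2/(x_1^2 + x_2^2)$ is harmonic:

$$
\begin{aligned}
\Delta \frac{x_2}{x_1^2 + x_2^2} &= \frac{\partial}{\partial x_1} \frac{-2x_1 x_2}{(x_1^2 + x_2^2)^2} + \frac{\partial}{\partial x_2} \frac{x_1^2 - x_2^2}{(x_1^2 + x_2^2)^2} \\
&= \frac{-2x_2(x_1^2 + x_2^2) + 8x_1^2 x_2 - 2x_2(x_1^2 + x_2^2) - 4x_2(x_1^2 - x_2^2)}{(x_1^2 + x_2^2)^3} = 0,
\end{aligned}
$$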

and hence so is $x_2/((y_1 - x_1)^2 + x_2^2)$ for any $y_1$. So supposing the function $g$ is bounded and continuous, we may differentiate under the integral sign to conclude that $\Delta u(x) = 0$ for $x_2 > 0$. What about the boundary conditions? Since $\int_{-\infty}^{\infty} (s^2 + 1)^{-1}\,ds = \pi$, and changing variables $y_1 = x_1 + x_2 s$, we have for fixed $x_1$, and for $x_2 > 0$,

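$$
\begin{aligned}
|u(x_1, x_2) - g(x_1)| &= \left| \frac{1}{\pi} \int_{-\infty}^{\infty} \big[ g(x_1 + x_2 s) - g(x_1) \big] \frac{ds}{s^2 + 1} \right| \\
&\le \frac{4 \max|g|}{\pi} \int_M^{\infty} \frac{ds}{s^2 + 1} + \frac{1}{\pi} \int_{-M}^{M} \frac{|g(x_1 + x_2 s) - g(x_1)|}{1 + s^2}\,ds.
\end{aligned}
$$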

Let $\varepsilon > 0$ be given, and then choose $M$ large enough so that the first term above is $< \varepsilon/2$. Then since a continuous function on a compact set is uniformly continuous, we may choose $x_2$ small enough so that the second term above is $< \varepsilon/2$. Thus we have shown that

$$
\lim_{x_2 \downarrow 0} u(x_1, x_2) = g(x_1),
$$

i.e., that the boundary conditions in problem (16) are also satisfied.
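A minimal SciPy sketch of the half-plane formula. As an implementation choice of ours (not from the notes), it goes one step beyond the substitution $y_1 = x_1 + x_2 s$ used above and sets $s = \tan\theta$; since $ds/(1 + s^2) = d\theta$, the formula becomes $u(x_1, x_2) = \frac{1}{\pi}\int_{-\pi/2}^{\pi/2} g(x_1 + x_2\tan\theta)\,d\theta$, a bounded integrand on a finite interval that stays well behaved as $x_2 \downarrow 0$. For the test datum $g(t) = 1/(1 + t^2)$ the harmonic extension is $(1 + x_2)/(x_1^2 + (1 + x_2)^2)$, which serves as a reference.

```python
import math
from scipy.integrate import quad

def half_plane_solution(g, x1, x2):
    """u(x1, x2) = (x2/pi) int g(y1) / ((y1 - x1)^2 + x2^2) dy1 for x2 > 0,
    evaluated after substituting y1 = x1 + x2 * tan(theta)."""
    val, _ = quad(lambda th: g(x1 + x2 * math.tan(th)), -math.pi / 2, math.pi / 2)
    return val / math.pi

g = lambda t: 1.0 / (1.0 + t * t)
exact = lambda x1, x2: (1.0 + x2) / (x1**2 + (1.0 + x2) ** 2)

for x1, x2 in [(0.3, 0.5), (-1.0, 2.0), (0.7, 1e-4)]:
    print(x1, x2, half_plane_solution(g, x1, x2), exact(x1, x2))
```

The last sample point has $x_2$ close to $0$, where the computed value is already close to $g(x_1)$, as the limit above predicts.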

In practice, the method of images can only be used to compute the Green’s function

for very special, highly symmetric geometries. Examples include:

• half-plane, quarter-plane, half-space, octant, etc.

• disks, balls

• some combinations of the above, such as a half-disk

Example: Solve the boundary value problem for Laplace’s equation in the 3D ball of radius $a$, $\{ x \in \mathbb{R}^3 \mid |x| < a \}$, using the method of images:

$$
\begin{cases}
\Delta u = 0, & |x| < a \\
u = g, & |x| = a.
\end{cases}
\tag{17}
$$

The Green’s function should solve

$$
\begin{cases}
\Delta G_x(y) = \delta(y - x), & |y| < a \\
G_x(y) \equiv 0, & |y| = a.
\end{cases}
$$

Given $x$ in the ball ($|x| < a$), a natural place to put an “image charge” is on the ray from the origin through $x$. In fact, we choose the point

$$
x^* := \frac{a^2}{|x|^2}\,x
$$

and notice that $|x^*| > a$, so $x^*$ is not in our domain, and hence $\Delta G^f(x^*;y)$ will contribute nothing for $|y| < a$. Now suppose that $y$ is on the boundary ($|y| = a$), and notice that

$$
|y - x^*|^2 = a^2 - 2\frac{a^2}{|x|^2}\,x \cdot y + \frac{a^4}{|x|^2} = \frac{a^2}{|x|^2}\big( |x|^2 - 2x \cdot y + a^2 \big) = \frac{a^2}{|x|^2}\,|x - y|^2
$$

so $|y - x^*| = (a/|x|)\,|x - y|$. Thus if we define

$$
G_x(y) = G^f(x;y) - \frac{a}{|x|}\,G^f(x^*;y) = -\frac{1}{4\pi}\left( \frac{1}{|y - x|} - \frac{a}{|x|\,|y - x^*|} \right),
$$

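we have satisfied the boundary condition: $|y| = a \implies G_x(y) = 0$. The normal derivative of $G$ on the boundary is

$$
\frac{\partial G_x}{\partial n} = \frac{y}{a} \cdot \frac{1}{4\pi}\left( \frac{y - x}{|y - x|^3} - \frac{a\,(y - x^*)}{|x|\,|y - x^*|^3} \right) = \frac{a^2 - |x|^2}{4\pi a\,|y - x|^3}.
$$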

Our solution formula is then

$$
u(x) = \frac{a^2 - |x|^2}{4\pi a} \int_{|y| = a} \frac{g(y)}{|y - x|^3}\,dS(y),
\tag{18}
$$

which is Poisson’s formula for harmonic functions in a 3D ball. Again, it is possible to

prove rigorously that for g a continuous function on the sphere |y| = a, this formula

produces a function u which is harmonic in the ball, and takes on the values g on

the sphere. Furthermore, any function harmonic on the ball and with continuous

boundary values g is given by formula (18) (we will address this issue later on).
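Poisson’s formula (18) is easy to evaluate numerically. The following minimal NumPy sketch assumes a product quadrature on the sphere (a Gauss-Legendre rule in $\mu = \cos\theta$ and equally spaced points in the azimuth $\varphi$; our choice, not from the notes). For boundary data $g(y) = y_3$ or $g(y) = y_1^2 - y_2^2$, whose harmonic extensions are $x_3$ and $x_1^2 - x_2^2$, the formula should reproduce those values inside the ball; at $x = 0$ it returns the average of $g$ over the sphere, which is exactly the mean value formula discussed next.

```python
import numpy as np

def poisson_ball(g, x, a=1.0, n_mu=64, n_phi=128):
    """Evaluate u(x) = (a^2 - |x|^2)/(4 pi a) * int_{|y|=a} g(y)/|y - x|^3 dS(y)."""
    mu, w = np.polynomial.legendre.leggauss(n_mu)        # nodes in cos(theta) on [-1, 1]
    phi = 2 * np.pi * np.arange(n_phi) / n_phi             # periodic azimuthal grid
    MU, PHI = np.meshgrid(mu, phi, indexing="ij")
    s = np.sqrt(1 - MU**2)
    y = a * np.stack([s * np.cos(PHI), s * np.sin(PHI), MU], axis=-1)   # points with |y| = a
    weights = w[:, None] * (2 * np.pi / n_phi) * a**2                  # dS = a^2 d(mu) d(phi)
    x = np.asarray(x, dtype=float)
    dist3 = np.linalg.norm(y - x, axis=-1) ** 3
    integral = np.sum(weights * g(y) / dist3)
    return (a**2 - x @ x) / (4 * np.pi * a) * integral

x = np.array([0.1, 0.2, 0.3])
print(poisson_ball(lambda y: y[..., 2], x))                     # harmonic extension of y3 is x3
print(poisson_ball(lambda y: y[..., 0]**2 - y[..., 1]**2, x))   # ... of y1^2 - y2^2 is x1^2 - x2^2
print(poisson_ball(lambda y: y[..., 2]**2, np.zeros(3)))        # mean of y3^2 over the sphere: 1/3
```

The boundary data `g` is passed as a vectorised function of the points $y$ (last axis holding the three coordinates); this calling convention is an assumption of the sketch.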

A nice consequence of Poisson’s formula (though there are also direct proofs) is the:

Mean value formula for harmonic functions: let u be harmonic (∆u ≡ 0) in

the ball |y| < a in Rn and continuous for |y| ≤ a. Then the value of u at the centre

of the ball is equal to its average over the boundary of the ball.


Proof (for the $\mathbb{R}^3$ case): take $x = 0$ in (18) to find

$$
u(0) = \frac{a}{4\pi} \int_{|y| = a} \frac{g(y)}{|y|^3}\,dS(y) = \frac{1}{4\pi a^2} \int_{|y| = a} g(y)\,dS(y)
$$

as required.

Solvability conditions and modified Green’s functions.

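Example: Consider the problem

$$
\begin{cases}
\Delta u = f & \text{in } D \subset \mathbb{R}^2 \\
\dfrac{\partial u}{\partial n} = g & \text{on } \partial D
\end{cases}
\tag{19}
$$

with so-called Neumann boundary conditions. Notice that the corresponding homogeneous problem

$$
\begin{cases}
\Delta u^* = 0 & \text{in } D \subset \mathbb{R}^2 \\
\dfrac{\partial u^*}{\partial n} = 0 & \text{on } \partial D
\end{cases}
$$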

has the non-trivial solution u∗ (x) ≡ 1. As a result, there is a solvability condition on

the data f and g in problem (19), which can be derived using Green’s second identity:

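$$
0 = \int_D (\Delta u^*)\,u\,dx = \int_D u^* \Delta u\,dx + \int_{\partial D} \left( \frac{\partial u^*}{\partial n} u - u^* \frac{\partial u}{\partial n} \right) dS
$$

and since $\partial u^*/\partial n \equiv 0$ and $u^* \equiv 1$, we arrive at the solvability condition

$$
\int_D f(x)\,dx = \int_{\partial D} g(x)\,dS(x).
$$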

Problem (19) will not have a Green’s function, but will have a modified Green’s function, satisfying

$$
\begin{cases}
\Delta \tilde G_x(y) = \delta(y - x) + C, & y \in D \\
\dfrac{\partial}{\partial n} \tilde G_x(y) = 0, & y \in \partial D
\end{cases}
$$

for some constant $C$, which can be determined using the divergence theorem:

$$
1 = \int_D \delta(y - x)\,dy = \int_D (\Delta \tilde G_x - C)\,dy = \int_{\partial D} \frac{\partial \tilde G_x}{\partial n}\,dS - C|D| = -C|D|
$$

(where $|D|$ denotes the area/volume of $D$), and hence $C = -1/|D|$. If we can find the modified Green’s function, we can construct the family of solutions of problem (19) (assuming the solvability condition holds) as

$$
u(x) = \int_D \tilde G_x(y)\,f(y)\,dy - \int_{\partial D} \tilde G_x(y)\,g(y)\,dS(y) + A
$$

for any constant $A$.
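The solvability condition and the free constant $A$ also show up in a discretisation. The following is a minimal one-dimensional finite-volume sketch (our own illustration; the problem in the notes is two-dimensional): on $[0, 1]$ with $n$ cells we solve $u'' = f$ with outward normal derivatives $g_0 = -u'(0)$ and $g_1 = u'(1)$. The matrix annihilates the constant vector (the discrete $u^* \equiv 1$), and its rows sum to zero, so the cell balances are consistent only when $h\sum_i f_i = g_0 + g_1$, the discrete form of $\int f = \int g$; when this holds, solutions are determined only up to a constant. The function names and the use of `numpy.linalg.lstsq` are assumptions of the sketch.

```python
import numpy as np

def neumann_matrix(n):
    """Finite-volume matrix for u'' on [0, 1] with n cells: row i is the net outward flux
    of cell i through its interior faces, with face flux (u_{i+1} - u_i) / h."""
    h = 1.0 / n
    A = np.zeros((n, n))
    for i in range(n - 1):              # face between cells i and i+1
        A[i, i] -= 1.0 / h
        A[i, i + 1] += 1.0 / h
        A[i + 1, i + 1] -= 1.0 / h
        A[i + 1, i] += 1.0 / h
    return A, h

def neumann_solve(f_vals, g0, g1):
    """Least-squares solve of the cell balances; g0, g1 are the outward normal derivatives
    at x = 0 and x = 1. Returns (u, residual norm)."""
    n = len(f_vals)
    A, h = neumann_matrix(n)
    rhs = h * np.asarray(f_vals, dtype=float)
    rhs[0] -= g0                         # prescribed flux through the face at x = 0
    rhs[-1] -= g1                        # prescribed flux through the face at x = 1
    u, *_ = np.linalg.lstsq(A, rhs, rcond=None)
    return u, float(np.linalg.norm(A @ u - rhs))

n = 50
x = (np.arange(n) + 0.5) / n                 # cell centres
f_vals = np.ones(n)                          # integral of f over [0, 1] is 1
A, _ = neumann_matrix(n)
print(np.abs(A @ np.ones(n)).max())          # discrete u* = 1 lies in the kernel
u, res = neumann_solve(f_vals, 0.25, 0.75)   # 0.25 + 0.75 = 1: condition holds
print(res)                                   # essentially 0
exact = x**2 / 2 - 0.25 * x                  # u'' = 1, u'(0) = -0.25, u'(1) = 0.75
print(np.ptp(u - exact))                     # u differs from exact only by a constant
_, res_bad = neumann_solve(f_vals, 0.25, 1.0)
print(res_bad)                               # condition fails: no exact solution
```

`lstsq` returns the minimum-norm solution, which picks one particular value of the constant $A$; any other constant shift solves the discrete system equally well.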

3. Green’s functions for Laplacian: some general theory.

So far we have mainly discussed methods for explicit computation of Green’s functions and solutions for certain problems involving the Laplacian operator. For general

regions, of course, explicit computations are not possible. Nevertheless, some general

theory tells us that Green’s functions exist, and that the corresponding solution formulas do yield solutions to the problems of interest. We describe some of this theory

here.

Let $D$ be a region in $\mathbb{R}^2$ or $\mathbb{R}^3$ bounded by a smooth curve/surface $S = \partial D$. Let $G^f(x;y)$ denote the free-space Green’s function (i.e. $\log|x - y|/(2\pi)$ for $\mathbb{R}^2$ and $-(4\pi|y - x|)^{-1}$ for $\mathbb{R}^3$).

Definition: a (Dirichlet) Green’s function $G(x;y)$ for the operator $\Delta$ and the region $D$ is a function continuous for $x, y \in \bar D := D \cup S$, $x \neq y$, satisfying

1. the function $H_x(y) := G_x(y) - G^f_x(y)$ is harmonic (i.e. $\Delta_y H_x(y) = 0$) for $y \in D$

2. $G_x(y) \equiv 0$ for $y \in S$

Remark:

• the first property implies that $G_x$ solves $\Delta G_x = \delta_x$, and also implies that $G_x(y)$ is a smooth function on $D \setminus \{x\}$ (since $G^f_x$ is, and since harmonic functions are smooth).

• the terminology “Dirichlet Green’s function” refers to the “Dirichlet boundary

conditions” – specifying the value of the function (rather than, say, its normal

derivative) on the boundary.

Properties of Green’s functions:

1. Existence: a Green’s function as above exists. (The proof of this fact calls for some mathematics a little beyond the level of this course, e.g. some functional analysis, so we will have to simply take it as given.)

2. Uniqueness: the Green’s function is unique – in particular, we may refer to

“the” Green’s function (rather than “a” Green’s function).

3. Symmetry (“reciprocity”): G(x; y) = G(y; x).

4. Relation to solution of BVP for Poisson: let $f$ be a smooth function on $\bar D$, and let $g$ be a smooth function on $S$. Then $u$ solves the boundary value problem for Poisson’s equation

$$
\begin{cases}
\Delta u = f & D \\
u = g & \partial D
\end{cases}
\tag{20}
$$

if and only if

$$
u(x) = \int_D G(x;y)\,f(y)\,dy + \int_{\partial D} \frac{\partial G_x(y)}{\partial n}\,g(y)\,dS(y).
\tag{21}
$$

Some (sketches of ) proofs:

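• Symmetry: a formal (i.e. “cheating”) argument giving the symmetry is:

$$
\begin{aligned}
G(x;y) - G(y;x) &= \int_D \big( G(x;z)\,\delta_y(z) - G(y;z)\,\delta_x(z) \big)\,dz \\
&= \int_D \big( G_x(z)\,\Delta G_y(z) - G_y(z)\,\Delta G_x(z) \big)\,dz \\
&= \int_S \left( G_x(z) \frac{\partial G_y(z)}{\partial n(z)} - G_y(z) \frac{\partial G_x(z)}{\partial n(z)} \right) dS(z) = 0.
\end{aligned}
$$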

This is not a rigorous argument, because we applied Green’s second identity

to functions (i.e. the Green’s functions) which are NOT twice continuously

differentiable (indeed, not even continuous). To make the argument rigorous,

try applying this identity on the domain D with balls of (small) radius ε around

the points x and y removed – the Green’s functions are smooth on this new

domain. Then take ε ↓ 0. This is left as an exercise.

• Representation of solution of Poisson: suppose $u$ is twice continuously differentiable on $D$, continuous on $\bar D := D \cup S$, and satisfies (20). We will show that $u$ is given by formula (21). Assume first that $f = 0$, so that $u$ is harmonic in $D$. We claim then that

$$
u(x) = \int_S \left( u \frac{\partial G^f_x}{\partial n} - G^f_x \frac{\partial u}{\partial n} \right) dS.
\tag{22}
$$

Assuming this for a moment, applying Green’s second identity to the harmonic functions $u$ and $H_x := G_x - G^f_x$ in $D$, we arrive at

$$
0 = \int_S \left( u \frac{\partial H_x}{\partial n} - H_x \frac{\partial u}{\partial n} \right) dS
$$

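which we add to (22) to arrive at

$$
u(x) = \int_S \left( u \frac{\partial G_x}{\partial n} - G_x \frac{\partial u}{\partial n} \right) dS = \int_S \frac{\partial G_x}{\partial n}\,g\,dS
$$

(using $u = g$ and $G_x = 0$ on $S$), as required. Now let’s prove (22) (we will just do the three-dimensional case here, for simplicity). Let $D_\varepsilon$ be $D$ with a ball of (small) radius $\varepsilon$ about $x$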

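removed, and apply Green’s second identity to $u$ and $G^f_x$ on $D_\varepsilon$ (both functions are smooth and harmonic here) to find

$$
\begin{aligned}
0 &= \int_{\partial D_\varepsilon} \left( u \frac{\partial G^f_x}{\partial n} - G^f_x \frac{\partial u}{\partial n} \right) dS \\
&= \int_{\partial D} \left( u \frac{\partial G^f_x}{\partial n} - G^f_x \frac{\partial u}{\partial n} \right) dS - \int_{|y-x| = \varepsilon} \frac{y - x}{|y - x|} \cdot \left( u\,\frac{y - x}{4\pi|y - x|^3} + \frac{\nabla u}{4\pi|y - x|} \right) dS.
\end{aligned}
$$

(On the small sphere the outward normal of $D_\varepsilon$ is $\hat n = -(y - x)/|y - x|$.)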

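Now the second term in the second integral is bounded by

$$
\frac{1}{4\pi\varepsilon} \max|\nabla u| \cdot 4\pi\varepsilon^2 = \big( \max|\nabla u| \big)\,\varepsilon \to 0 \text{ as } \varepsilon \downarrow 0,
$$

while the first term is, in the limit as $\varepsilon \downarrow 0$,

$$
\lim_{\varepsilon \downarrow 0} \frac{1}{4\pi\varepsilon^2} \int_{|y-x| = \varepsilon} u(y)\,dS(y) = u(x)
$$

(by the mean value theorem for integrals), and we recover (22), as desired.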

Finally, suppose $f \neq 0$ in (20). Let

$$
w(x) := \int_D G(x;y)\,f(y)\,dy.
$$

Notice that for $x \in S$, $G(x;y) = G(y;x) = 0$ (reciprocity), and so $w(x) = 0$. Just as we did for the Poisson equation in $\mathbb{R}^3$, we can show that $\Delta w = f$, and so

$$
\Delta(u - w) = \Delta u - f = f - f = 0,
$$

and we may write

$$
u(x) - w(x) = \int_{\partial D} \frac{\partial G_x}{\partial n}\,\big[ g(y) - w(y) \big]\,dS(y) = \int_{\partial D} \frac{\partial G_x}{\partial n}\,g(y)\,dS(y)
$$

and we recover (21) as needed.

• Formula (21) solves the Poisson BVP: it remains to show (21) really does solve

the BVP (20). We already argued that the first term in (21) (what we called

w above) solves ∆w = f and has zero boundary conditions. It remains to show

that the boundary integral is harmonic, and has boundary values g. We won’t

do this here – the proof can be found in rigorous PDE texts.

• Uniqueness of the Green’s function: we will prove this using the “maximum

principle” in the next section.


4. The maximum principle.

Let $D$ be an (open, connected) region in $\mathbb{R}^n$, bounded by a smooth surface $\partial D$.

Theorem: [maximum principle for harmonic functions]. Let $u$ be a function which is harmonic in $D$ ($\Delta u = 0$) and continuous on $\bar D = D \cup \partial D$. Then

1. $u$ attains its maximum and minimum values on the boundary $\partial D$

2. if $u$ also attains its maximum or minimum value at an interior point of $D$, it must be a constant function

Remark:

1. $u$ must attain its max. and min. values somewhere on $\bar D$, since it is a continuous function on a closed, bounded set

2. the “maximum” part of the theorem extends to subharmonic functions ($\Delta u \ge 0$), and the “minimum” part to superharmonic functions ($\Delta u \le 0$).

We’ll give two proofs of the maximum principle.

Proof #1: suppose $u$ does, in fact, attain its maximum (say) at an interior point $x_0 \in D$. Then for any $r > 0$ such that the ball $B_r$ of radius $r$ about $x_0$ lies in $D$, the mean-value property of harmonic functions implies that

$$
u(x_0) = \frac{1}{|\partial B_r|} \int_{\partial B_r} u\,dS \le u(x_0)
$$

since $u(x_0)$ is the maximum value of $u$, and so we must have equality above, and $u$ must be equal to $u(x_0)$ everywhere on $\partial B_r$. Hence $u$ is constant on $B_r$. Repeating this argument, we can “fill out” all of $D$, and conclude $u$ is constant on $D$. (A slick argument for this: the set where $u(x) = u(x_0)$ is both closed and open in $D$, hence is all of $D$, since $D$ is connected.)

Our second proof does not rely so heavily on the mean-value property (at least for the first statement of the maximum principle), and hence generalizes to other “Laplacian-like” (a.k.a. elliptic) operators, for which the mean-value property does not hold.

Proof #2: again, suppose $u$ were to have a maximum (say) at an interior point $x_0 \in D$. Then we know from vector calculus that $\Delta u(x_0) \le 0$, which is almost a contradiction to $\Delta u = 0$, but not quite (because it is $\le$ rather than $<$). We can “fix” this by introducing a new function, for any $\varepsilon > 0$,

$$
v(x) := u(x) + \varepsilon |x|^2.
$$

Notice that

$$
\nabla v = \nabla u + 2\varepsilon x, \qquad \Delta v = \Delta u + 2\varepsilon n = 2\varepsilon n > 0
$$

($n$ is the space dimension), and so $v$ really cannot have a maximum at an interior point of $D$ (there we would need $\Delta v \le 0$). That means that for any $x \in D$,

$$
u(x) \le v(x) < \max_{y \in \partial D} v(y) = \max_{y \in \partial D} \big[ u(y) + \varepsilon|y|^2 \big] \le \max_{y \in \partial D} u(y) + \varepsilon \max_{y \in \partial D} |y|^2
$$

and now letting $\varepsilon \downarrow 0$, we see

$$
u(x) \le \max_{y \in \partial D} u(y),
$$

which is the first statement of the maximum principle (sometimes called the weak maximum principle). For the second statement (sometimes called the strong maximum principle), we can use the mean value property as before.

Consequences of the maximum principle:

• Uniqueness of solutions of the Dirichlet problem for the Poisson equation:

Theorem: Let $f$ and $g$ be continuous functions on $D$ and $\partial D$ respectively. There is at most one function $u$ which is twice continuously differentiable in $D$, continuous on $\bar D$, and solves

$$
\begin{cases}
\Delta u = f & D \\
u = g & \partial D.
\end{cases}
$$

Proof: If $u_1(x)$ and $u_2(x)$ are both solutions, then their difference $w(x) := u_1(x) - u_2(x)$ solves

$$
\begin{cases}
\Delta w = 0 & D \\
w = 0 & \partial D.
\end{cases}
$$

The maximum principle says that $w$ attains its maximum and minimum on the boundary $\partial D$. Since $w \equiv 0$ on $\partial D$, we must have $w \equiv 0$ in $D$, and hence $u_1 \equiv u_2$ in $D$.

• Uniqueness of the (Dirichlet) Green’s function:

Theorem: There is at most one Dirichlet Green’s function for a given region $D$.

Proof: if $G(x;y)$ is a (Dirichlet) Green’s function for $D$, then the function $H_x(y) := G(x;y) - G^f(x;y)$ (where $G^f$ denotes the free-space Green’s function) solves the problem

$$
\begin{cases}
\Delta H_x(y) = 0, & y \in D \\
H_x(y) = -G^f(x;y), & y \in \partial D
\end{cases}
$$

and so is unique, by the previous theorem.
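A discrete analogue of the maximum principle is easy to observe numerically. In the following minimal NumPy sketch (our own illustration, not part of the notes), the 5-point finite-difference Laplacian on a square grid makes each interior value the average of its four neighbours, a discrete version of the mean-value property used in Proof #1. Solving the discrete Dirichlet problem with random boundary data, the interior values stay within the range of the boundary values. Grid size, random seed and function names are illustrative choices.

```python
import numpy as np

def discrete_harmonic(boundary):
    """Solve the 5-point discrete Laplace equation on an n x n grid whose outer ring
    holds prescribed Dirichlet values (interior entries of `boundary` are ignored)."""
    n = boundary.shape[0]
    m = n - 2
    idx = lambda i, j: (i - 1) * m + (j - 1)        # interior point -> unknown index
    A = np.zeros((m * m, m * m))
    b = np.zeros(m * m)
    for i in range(1, n - 1):
        for j in range(1, n - 1):
            k = idx(i, j)
            A[k, k] = 4.0                           # 4 u_ij - (sum of neighbours) = 0
            for ii, jj in ((i + 1, j), (i - 1, j), (i, j + 1), (i, j - 1)):
                if 1 <= ii <= n - 2 and 1 <= jj <= n - 2:
                    A[k, idx(ii, jj)] = -1.0
                else:
                    b[k] += boundary[ii, jj]        # known boundary neighbour
    u = boundary.astype(float).copy()
    u[1:-1, 1:-1] = np.linalg.solve(A, b).reshape(m, m)
    return u

rng = np.random.default_rng(0)
n = 20
u = discrete_harmonic(rng.normal(size=(n, n)))
ring = np.ones((n, n), dtype=bool)
ring[1:-1, 1:-1] = False
print(u[~ring].max(), "<=", u[ring].max())
print(u[~ring].min(), ">=", u[ring].min())
```

The same argument as in the uniqueness theorem works here: two discrete solutions with the same boundary values differ by a discrete harmonic function vanishing on the ring, which must then vanish everywhere.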

5. Green’s functions by eigenfunction expansion.

We will just do one example.

Example: Find the Dirichlet Green’s function in the infinite wedge of angle $\alpha$, described in polar coordinates $(r, \theta)$ by $0 \le \theta \le \alpha$, $r \ge 0$.

So given a point $x$ in the wedge, we are looking for a function $G_x$ solving $\Delta G_x = \delta_x$ inside the wedge, and $G_x = 0$ on the rays $\theta = 0$ and $\theta = \alpha$. It is natural to work in polar coordinates. Let $(r_0, \theta_0)$ be the polar coordinates of $x$. Then the delta function centred at $x$ is written in polar coordinates as $\frac{1}{r_0}\delta(r - r_0)\,\delta(\theta - \theta_0)$ (the factor $1/r_0$ is there since, integrating in polar coordinates, $dy$ becomes $r\,dr\,d\theta$; check it). Also, the Laplacian in polar coordinates is

$$
\Delta = \frac{\partial^2}{\partial r^2} + \frac{1}{r}\frac{\partial}{\partial r} + \frac{1}{r^2}\frac{\partial^2}{\partial \theta^2}
$$

and so we are looking for $G(r, \theta)$ which solves

$$
\begin{cases}
G_{rr} + \frac{1}{r} G_r + \frac{1}{r^2} G_{\theta\theta} = \frac{1}{r_0}\,\delta(r - r_0)\,\delta(\theta - \theta_0) \\
G(r, 0) = G(r, \alpha) = 0.
\end{cases}
$$

The eigenfunctions of $\frac{\partial^2}{\partial \theta^2}$ (the angular part of the Laplacian) which satisfy zero boundary conditions at $\theta = 0$ and $\theta = \alpha$ are $\sin(n\pi\theta/\alpha)$, $n = 1, 2, 3, \ldots$, so we will try to find $G$ in the form of an eigenfunction expansion

$$
G(r, \theta) = \sum_{n=1}^{\infty} c_n(r) \sin(n\pi\theta/\alpha)
$$

(in fact, a Fourier sine series since the eigenfunctions are sines), and our job is to find the coefficients $c_n(r)$.

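Applying $\Delta$ term-by-term to the expansion, we find

$$
\sum_{n=1}^{\infty} \left( c_n'' + \frac{1}{r} c_n' - \frac{n^2\pi^2}{\alpha^2 r^2} c_n \right) \sin(n\pi\theta/\alpha) = \frac{1}{r_0}\,\delta(r - r_0)\,\delta(\theta - \theta_0),
$$

and integrating (in $\theta$) both sides against $\sin(k\pi\theta/\alpha)$, then multiplying by $2/\alpha$ (since $\int_0^\alpha \sin^2(k\pi\theta/\alpha)\,d\theta = \alpha/2$), yields

$$
c_k'' + \frac{1}{r} c_k' - \frac{k^2\pi^2}{\alpha^2 r^2} c_k = \frac{2}{\alpha r_0}\,\delta(r - r_0) \int_0^\alpha \sin(k\pi\theta/\alpha)\,\delta(\theta - \theta_0)\,d\theta = \frac{2}{\alpha r_0} \sin(k\pi\theta_0/\alpha)\,\delta(r - r_0).
$$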

For $r \neq r_0$, this is

$$
c_k'' + \frac{1}{r} c_k' - \frac{k^2\pi^2}{\alpha^2 r^2} c_k = 0,
$$

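an equation of “Euler type” whose solutions are of the form $r^b$, where plugging $r^b$ in yields

$$
0 = b(b - 1) + b - \frac{k^2\pi^2}{\alpha^2} = (b - k\pi/\alpha)(b + k\pi/\alpha),
$$

so $b = \pm k\pi/\alpha$. Thus we find

$$
c_k(r) = \begin{cases}
A r^{k\pi/\alpha} + B r^{-k\pi/\alpha}, & 0 < r < r_0 \\
C r^{k\pi/\alpha} + D r^{-k\pi/\alpha}, & r_0 < r < \infty.
\end{cases}
$$

For finiteness at $r = 0$, we take $B = 0$, and to avoid growth as $r \to \infty$, we take $C = 0$, hence

$$
c_k(r) = \begin{cases}
A r^{k\pi/\alpha}, & 0 < r < r_0 \\
D r^{-k\pi/\alpha}, & r_0 < r < \infty.
\end{cases}
$$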

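We will find $A$ and $D$ through matching conditions at $r = r_0$. Continuity at $r = r_0$ implies $A r_0^{k\pi/\alpha} = D r_0^{-k\pi/\alpha}$, and so defining a new constant $\tilde A = A r_0^{k\pi/\alpha}$, we have

$$
c_k(r) = \tilde A \begin{cases}
(r/r_0)^{k\pi/\alpha}, & 0 < r < r_0 \\
(r_0/r)^{k\pi/\alpha}, & r_0 < r < \infty
\end{cases}
\;=\; \tilde A\,\rho^{k\pi/\alpha}, \qquad \rho := \frac{\min(r, r_0)}{\max(r, r_0)}.
$$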

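The jump condition, obtained by multiplying the equation for $c_k$ by $r$ and integrating across $r = r_0$, is

$$
\frac{2}{\alpha} \sin\frac{k\pi\theta_0}{\alpha} = \int_{r_0^-}^{r_0^+} \left[ (r c_k')' - \frac{k^2\pi^2}{\alpha^2 r} c_k \right] dr = r_0\,c_k' \Big|_{r_0^-}^{r_0^+} = r_0 \left( -\frac{2k\pi\tilde A}{\alpha r_0} \right) = -\frac{2k\pi\tilde A}{\alpha},
$$

so $\tilde A = -\sin(k\pi\theta_0/\alpha)/(k\pi)$, and $c_k(r) = -\sin(k\pi\theta_0/\alpha)\,\rho^{k\pi/\alpha}/(k\pi)$. So our expression for the Green’s function is

$$
G = -\frac{1}{\pi} \sum_{n=1}^{\infty} \frac{1}{n} \sin(n\pi\theta_0/\alpha) \sin(n\pi\theta/\alpha)\,\rho^{n\pi/\alpha},
$$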

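and using the cosine summation law to write

$$
\sin(n\pi\theta_0/\alpha) \sin(n\pi\theta/\alpha) = \frac{1}{2}\left[ \cos\Big(\frac{n\pi}{\alpha}(\theta - \theta_0)\Big) - \cos\Big(\frac{n\pi}{\alpha}(\theta + \theta_0)\Big) \right],
$$

we find

$$
\begin{aligned}
G &= -\frac{1}{2\pi} \sum_{n=1}^{\infty} \frac{1}{n} \left[ \cos\Big(\frac{n\pi}{\alpha}(\theta - \theta_0)\Big) \rho^{n\pi/\alpha} - \cos\Big(\frac{n\pi}{\alpha}(\theta + \theta_0)\Big) \rho^{n\pi/\alpha} \right] \\
&= -\frac{1}{2\pi}\,\mathrm{Re} \sum_{n=1}^{\infty} \frac{1}{n} \left[ \big(\rho e^{i(\theta - \theta_0)}\big)^{n\pi/\alpha} - \big(\rho e^{i(\theta + \theta_0)}\big)^{n\pi/\alpha} \right].
\end{aligned}
$$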

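Now setting $z_1 := \big(\rho e^{i(\theta - \theta_0)}\big)^{\pi/\alpha}$, $z_2 := \big(\rho e^{i(\theta + \theta_0)}\big)^{\pi/\alpha}$, noting that $|z_1| = |z_2| = \rho^{\pi/\alpha} < 1$ (for $r \neq r_0$), and recalling that the Taylor series

$$
-\log(1 - z) = \sum_{n=1}^{\infty} \frac{1}{n} z^n
$$

is convergent for $|z| < 1$, we arrive at

$$
\begin{aligned}
G(r, \theta; r_0, \theta_0) &= \frac{1}{2\pi}\,\mathrm{Re} \log\frac{1 - z_1}{1 - z_2} = \frac{1}{2\pi} \log\left| \frac{1 - z_1}{1 - z_2} \right| = \frac{1}{4\pi} \log\frac{|1 - z_1|^2}{|1 - z_2|^2} \\
&= \frac{1}{4\pi} \log\frac{1 + \rho^{2\pi/\alpha} - 2\rho^{\pi/\alpha}\cos(\pi(\theta - \theta_0)/\alpha)}{1 + \rho^{2\pi/\alpha} - 2\rho^{\pi/\alpha}\cos(\pi(\theta + \theta_0)/\alpha)} \\
&= \frac{1}{4\pi} \log\frac{(\rho^{\pi/\alpha} + \rho^{-\pi/\alpha})/2 - \cos(\pi(\theta - \theta_0)/\alpha)}{(\rho^{\pi/\alpha} + \rho^{-\pi/\alpha})/2 - \cos(\pi(\theta + \theta_0)/\alpha)}
\end{aligned}
$$

and so finally

$$
G(r, \theta; r_0, \theta_0) = \frac{1}{4\pi} \log\frac{\cosh\big(\frac{\pi}{\alpha}\log\rho\big) - \cos\big(\frac{\pi}{\alpha}(\theta - \theta_0)\big)}{\cosh\big(\frac{\pi}{\alpha}\log\rho\big) - \cos\big(\frac{\pi}{\alpha}(\theta + \theta_0)\big)}, \qquad \rho := \frac{\min(r, r_0)}{\max(r, r_0)},
$$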

a rather attractive formula for the (Dirichlet) Green’s function in the wedge of angle

α. (And we should pause and appreciate it when we are lucky enough to find an

explicit formula – it is pretty rare!)

Amusing exercise: check that we recover the formulas that we previously derived (using the method of images) for the half-plane ($\alpha = \pi$) and the quarter-plane ($\alpha = \pi/2$).
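The following minimal NumPy sketch compares the truncated sine series for $G$ with the closed form and then, for $\alpha = \pi$, compares the closed form with the half-plane Green’s function obtained earlier by the method of images (the first half of the exercise above). Function names and the truncation level are our own choices; the series is only used for $\rho < 1$, i.e. $r \neq r_0$.

```python
import numpy as np

def wedge_green_series(r, th, r0, th0, alpha, n_terms=400):
    """G = -(1/pi) sum_n (1/n) sin(n pi th0/alpha) sin(n pi th/alpha) rho^(n pi/alpha)."""
    rho = min(r, r0) / max(r, r0)
    n = np.arange(1, n_terms + 1)
    k = n * np.pi / alpha
    return float(-np.sum(np.sin(k * th0) * np.sin(k * th) * rho**k / n) / np.pi)

def wedge_green_closed(r, th, r0, th0, alpha):
    """Closed form (1/4pi) log[(cosh(p log rho) - cos(p(th - th0))) /
    (cosh(p log rho) - cos(p(th + th0)))], with p = pi/alpha."""
    rho = min(r, r0) / max(r, r0)
    p = np.pi / alpha
    c = np.cosh(p * np.log(rho))
    return float(np.log((c - np.cos(p * (th - th0))) / (c - np.cos(p * (th + th0)))) / (4 * np.pi))

def half_plane_images(x, y):
    """Method-of-images Green's function for the upper half-plane."""
    xbar = np.array([x[0], -x[1]])
    return float((np.log(np.linalg.norm(y - x)) - np.log(np.linalg.norm(y - xbar))) / (2 * np.pi))

# Wedge of angle pi/3: series versus closed form
print(wedge_green_series(1.0, 0.4, 2.0, 0.7, np.pi / 3),
      wedge_green_closed(1.0, 0.4, 2.0, 0.7, np.pi / 3))

# alpha = pi (half-plane): closed form versus images, x = (r0, th0) and y = (r, th) in polar form
r, th, r0, th0 = 1.0, 0.4, 2.0, 0.7
x = r0 * np.array([np.cos(th0), np.sin(th0)])
y = r * np.array([np.cos(th), np.sin(th)])
print(wedge_green_closed(r, th, r0, th0, np.pi), half_plane_images(x, y))
```

For $\alpha = \pi$ the agreement is an identity: multiplying numerator and denominator by $2rr_0$ turns them into $|y - x|^2$ and $|y - \bar x|^2$.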


IV. GREEN’S FUNCTIONS FOR TIME-DEPENDENT PROBLEMS

1. Green’s functions for the heat equation.

Suppose we measure the temperature $u(x, t)$ at each point $x$ in a bounded region $V$ in $\mathbb{R}^n$ (for us, usually $n = 1$, $2$, or $3$), and at each time $t$. The region is subjected to heat sources $f(x, t)$, and at its boundary, it is held at temperature $g(x, t)$ ($x \in \partial V$). Initially (at time $t = 0$, say), the temperature distribution in $V$ is $u_0(x)$. The following initial-boundary value problem for the heat equation describes the temperature distribution $u(x, t)$ at later times $t > 0$:

$$
\begin{cases}
\text{heat equation:} & \dfrac{\partial u}{\partial t} - D\Delta u = f(x, t), \quad x \in V,\ t > 0 \\
\text{boundary value:} & u(x, t) = g(x, t), \quad x \in \partial V,\ t > 0 \\
\text{initial condition:} & u(x, 0) = u_0(x), \quad x \in V
\end{cases}
\tag{23}
$$

where $D > 0$ is the diffusion rate constant.

We would like to represent the solution to this problem using a Green’s function. The first observation is that the differential operator appearing in the equation is not self-adjoint:

$$
L := \frac{\partial}{\partial t} - D\Delta \quad \Longrightarrow \quad L^* = -\frac{\partial}{\partial t} - D\Delta.
$$

Next, some notation. We fix a time interval [0, T ] (some T > 0), and let CT denote

the space-time cylinder CT = V × [0, T ].

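As before, a Green’s function for our problem should be a function of two sets of variables: $G(x, t; y, \tau)$, $x, y \in V$, $t, \tau \ge 0$. To determine the problem that $G$ should solve, we suppose $u(y, \tau)$ solves problem (23), integrate $G$ against $Lu$ over the space-time cylinder, and integrate by parts:

$$
\begin{aligned}
\int_{C_T} G\,Lu\,dy\,d\tau &= \int_{C_T} G\,(u_\tau - D\Delta u)\,dy\,d\tau \\
&= \int_{C_T} (L^*G)\,u\,dy\,d\tau + D\int_0^T\!\!\int_{\partial V} \left( u\frac{\partial G}{\partial n} - G\frac{\partial u}{\partial n} \right) dS(y)\,d\tau \\
&\quad + \int_V \big( Gu|_{\tau = T} - Gu|_{\tau = 0} \big)\,dy.
\end{aligned}
$$

So supposing we demand that our Green’s function $G(x, t; y, \tau)$ solves

$$
\begin{cases}
-\dfrac{\partial G}{\partial \tau} - D\Delta G = \delta(y - x)\,\delta(\tau - t) \\
G \equiv 0 \text{ for } y \in \partial V \\
G \equiv 0 \text{ for } \tau > t \quad (\text{“causality”}),
\end{cases}
\tag{24}
$$

we arrive at a representation formula for $u(x, t)$, $x \in V$, $0 \le t < T$,

$$
u(x, t) = \int_0^T\!\!\int_V G(x, t; y, \tau)\,f(y, \tau)\,dy\,d\tau + \int_V G(x, t; y, 0)\,u_0(y)\,dy - D\int_0^T\!\!\int_{\partial V} \frac{\partial G}{\partial n}\,g(y, \tau)\,dS(y)\,d\tau.
\tag{25}
$$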

Note that the above “causality” condition implies that the solution at time t should

not depend on any of its values at later times – i.e. we are solving forward in time.

Problem (24) for the Green’s function looks a little odd. It is a backwards heat equation, which would be nasty, except that it is also solved backwards in time (starting from time $\tau = t$ and going down to $\tau = 0$). So, to straighten it out, it is useful to change the time variable from $\tau$ to $\sigma := t - \tau$. Problem (24) then becomes

$$
\begin{cases}
\dfrac{\partial G}{\partial \sigma} - D\Delta G = \delta(y - x)\,\delta(\sigma) \\
G = 0 \text{ for } y \in \partial V \\
G = 0 \text{ for } \sigma < 0.
\end{cases}
\tag{26}
$$

This is the problem we will try to solve in various situations. The simplest case is

when there is no boundary – the free-space case.


2. Free-space Green’s function for the heat equation.

We will find the free-space Green’s function for the heat equation by using the Fourier

transform, so let us first recall the definition and some key properties of it.

Definition: Let $f$ be an integrable function on $\mathbb{R}^n$ (that means $\int_{\mathbb{R}^n} |f(x)|\,dx < \infty$). The Fourier transform of $f$ is another function, $\hat f$, defined by

$$
\hat f(\xi) := (2\pi)^{-n/2} \int_{\mathbb{R}^n} e^{-i\xi \cdot x} f(x)\,dx.
$$

Here are some useful properties of the Fourier transform. Let $f$, $g$ be smooth functions with rapid decay at $\infty$.

1. F.T. is linear: $\widehat{(\alpha f + \beta g)}(\xi) = \alpha \hat f(\xi) + \beta \hat g(\xi)$

2. F.T. is (almost) its own inverse: $\check{\hat f} = f$, where

$$
\check g(x) := (2\pi)^{-n/2} \int_{\mathbb{R}^n} e^{i\xi \cdot x} g(\xi)\,d\xi
$$

is the inverse Fourier transform.

3. F.T. is unitary (its inverse is its adjoint): $(\hat f, g) = (f, \check g)$, and in particular (taking $g = \hat f$) it preserves the “$L^2$-norm”:

$$
\int_{\mathbb{R}^n} |\hat f(\xi)|^2\,d\xi = \int_{\mathbb{R}^n} |f(x)|^2\,dx.
$$

4. F.T. “interchanges differentiation and coordinate multiplication”:

$$
\widehat{(x_j f(x))}(\xi) = i\,\frac{\partial \hat f}{\partial \xi_j}(\xi), \qquad \widehat{\frac{\partial f}{\partial x_j}}(\xi) = (i\xi_j)\,\hat f(\xi).
$$

5. F.T. “interchanges convolution and multiplication”:

$$
\widehat{f * g}(\xi) = (2\pi)^{n/2}\,\hat f(\xi)\,\hat g(\xi), \qquad (f * g)(x) := \int_{\mathbb{R}^n} f(x - y)\,g(y)\,dy.
$$

6. F.T. interchanges coordinate translation and multiplication by an exponential: for $a \in \mathbb{R}^n$,

$$
\widehat{f(x - a)}(\xi) = e^{-ia \cdot \xi}\,\hat f(\xi).
$$

7. F.T. maps Gaussians to Gaussians: for $a > 0$,

$$
\widehat{e^{-a|x|^2/2}}(\xi) = a^{-n/2}\,e^{-|\xi|^2/(2a)}.
$$

It is property 4 which makes the Fourier transform so useful for differential equations

– it converts differential equations into algebraic ones.

Property 2 is deep, and difficult to prove. See an analysis textbook for this. The

other properties are easy to show. We will do 4 and 7 – two that we will use shortly.

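Proof of property 4: integrating by parts (the boundary terms vanish because $f$ decays rapidly at ∞),

$$\widehat{\frac{\partial f}{\partial x_j}}(\xi) = (2\pi)^{-n/2}\int_{\mathbb{R}^n} e^{-ix\cdot\xi}\,\frac{\partial f}{\partial x_j}(x)\,dx = -(2\pi)^{-n/2}\int_{\mathbb{R}^n} (-i\xi_j)\,e^{-ix\cdot\xi} f(x)\,dx = i\xi_j\,\hat f(\xi).$$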

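And for the other one,

$$i\frac{\partial \hat f}{\partial \xi_j}(\xi) = i(2\pi)^{-n/2}\frac{\partial}{\partial \xi_j}\int_{\mathbb{R}^n} e^{-ix\cdot\xi} f(x)\,dx = i(2\pi)^{-n/2}\int_{\mathbb{R}^n} (-ix_j)\,e^{-ix\cdot\xi} f(x)\,dx = \widehat{(x_j f(x))}(\xi).$$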
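Both identities can be spot-checked numerically for $n = 1$ with $f(x) = e^{-x^2/2}$. In this sketch, `ft` is a hypothetical trapezoidal-rule helper on a truncated interval, and the $\xi$-derivative in the second identity is approximated by a central difference with an assumed step `d`, so agreement holds only up to discretization error.

```python
import cmath
import math


def ft(f, xi, L=20.0, N=4000):
    """Trapezoidal approximation of (2*pi)**(-1/2) * integral over [-L, L] of exp(-i*xi*x) f(x) dx."""
    h = 2 * L / N
    total = 0j
    for k in range(N + 1):
        x = -L + k * h
        total += (0.5 if k in (0, N) else 1.0) * cmath.exp(-1j * xi * x) * f(x)
    return total * h / math.sqrt(2 * math.pi)


def f(x):
    return math.exp(-x * x / 2)


def df(x):
    # exact derivative of f
    return -x * math.exp(-x * x / 2)


def derivative_rule_error(xi):
    """|FT(df/dx)(xi) - i*xi*f_hat(xi)|, which property 4 says is zero."""
    return abs(ft(df, xi) - 1j * xi * ft(f, xi))


def multiplication_rule_error(xi, d=1e-4):
    """|FT(x f)(xi) - i * d f_hat/d xi|, with the xi-derivative by central difference."""
    dfhat = (ft(f, xi + d) - ft(f, xi - d)) / (2 * d)
    return abs(ft(lambda x: x * f(x), xi) - 1j * dfhat)


if __name__ == "__main__":
    for xi in (0.0, 0.5, 2.0):
        print(xi, derivative_rule_error(xi), multiplication_rule_error(xi))
```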

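Proof of property 7: we do the case $n = 1$; the higher-dimensional cases follow easily, since $e^{-\frac a2 |x|^2}$ and $e^{-ix\cdot\xi}$ both factor into products over the coordinates, making the $n$-dimensional integral a product of $n$ one-dimensional ones. Completing the square,

$$\widehat{e^{-\frac a2 |x|^2}}(\xi) = (2\pi)^{-1/2}\int_{-\infty}^{\infty} e^{-ix\xi - \frac a2 x^2}\,dx = e^{-\frac{\xi^2}{2a}}\,(2\pi)^{-1/2}\int_{-\infty}^{\infty} e^{-\frac a2 (x+i\xi/a)^2}\,dx.$$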

The last integral is the integral of the entire complex function $f(z) = e^{-az^2/2}$ along the contour $z = x + i\xi/a$, $-\infty < x < \infty$, in the complex plane. We can “shift” the contour to the real axis using Cauchy’s theorem.
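The claim that the contour can be shifted is easy to sanity-check numerically (this is not a proof): the sketch below evaluates $\int_{-\infty}^{\infty} e^{-\frac a2 (x+i\xi/a)^2}\,dx$ by the trapezoidal rule on an assumed truncation $[-L, L]$ and compares it with $\sqrt{2\pi/a}$ for several $\xi$. The function name `shifted_line_integral` is hypothetical.

```python
import cmath
import math


def shifted_line_integral(a, xi, L=20.0, N=4000):
    """Trapezoidal approximation of the integral of f(z) = exp(-a z^2 / 2)
    along the horizontal line z = x + i*xi/a, -L <= x <= L."""
    h = 2 * L / N
    total = 0j
    for k in range(N + 1):
        z = complex(-L + k * h, xi / a)
        total += (0.5 if k in (0, N) else 1.0) * cmath.exp(-a * z * z / 2)
    return total * h


if __name__ == "__main__":
    for a in (0.5, 2.0):
        for xi in (0.0, 1.0, 3.0):
            # |f| is larger by a factor exp(xi^2 / (2a)) on the shifted line,
            # but the oscillations cancel and the integral stays at sqrt(2*pi/a)
            print(a, xi, shifted_line_integral(a, xi), math.sqrt(2 * math.pi / a))
```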

$$\int_{-\infty}^{\infty} e^{-\frac a2 (x+i\xi/a)^2}\,dx = \lim_{R\to\infty}\int_{[-R,R]+i\xi/a} f(z)\,dz = \lim_{R\to\infty}\left(\int_{[-R,R]} f(z)\,dz + \int_{A_R\cup B_R} f(z)\,dz\right),$$

where $A_R$ denotes the contour $z = -R + iy$, $y : \xi/a \to 0$, and $B_R$ denotes the contour $z = R + iy$, $y : 0 \to \xi/a$ (draw a picture!). Along $A_R$ and $B_R$, we have

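$$|f(z)| = e^{-\frac a2 \mathrm{Re}(z^2)} \le e^{-\frac a2 (R^2 - \xi^2/a^2)},$$

since $\mathrm{Re}(z^2) = R^2 - y^2$ and $|y| \le |\xi|/a$ there. Each of $A_R$, $B_R$ has length $|\xi|/a$, and so

$$\left|\int_{A_R\cup B_R} f(z)\,dz\right| \le 2\,\frac{|\xi|}{a}\, e^{\frac{\xi^2}{2a}}\, e^{-\frac a2 R^2} \to 0 \quad \text{as } R \to \infty.$$

Hence

$$\int_{-\infty}^{\infty} e^{-\frac a2 (x+i\xi/a)^2}\,dx = \int_{-\infty}^{\infty} e^{-\frac a2 x^2}\,dx = \sqrt{\frac{2\pi}{a}}$$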

(the last equality is a standard fact which can be proved, for example, by squaring

the integral, interpreting it as a two-dimensional integral, and changing to polar

coordinates). Finally, then, we arrive at

$$\widehat{e^{-\frac a2 |x|^2}}(\xi) = a^{-1/2}\, e^{-\frac{\xi^2}{2a}}$$


as needed.
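The “standard fact” used above, $\int_{-\infty}^{\infty} e^{-\frac a2 x^2}\,dx = \sqrt{2\pi/a}$ for $a > 0$, follows from the squaring argument mentioned in the parenthetical remark: square the integral, read it as an integral over $\mathbb{R}^2$, and switch to polar coordinates $(r, \theta)$:

$$\left(\int_{-\infty}^{\infty} e^{-\frac a2 x^2}\,dx\right)^2 = \int_{\mathbb{R}^2} e^{-\frac a2 (x^2+y^2)}\,dx\,dy = \int_0^{2\pi}\!\!\int_0^{\infty} e^{-\frac a2 r^2}\,r\,dr\,d\theta = 2\pi\left[-\frac1a\,e^{-\frac a2 r^2}\right]_0^{\infty} = \frac{2\pi}{a}.$$

Since the integrand is positive, taking the positive square root gives $\sqrt{2\pi/a}$.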

Now let’s return to the problem of finding the free-space Green’s function for the heat equation, that is, solving (26) when $V = \mathbb{R}^n$. Let $\hat G(x, t; \xi, \sigma)$ denote the Fourier transform of $G(x, t; y, \sigma)$ in the variable $y$. Property 4 of the Fourier transform (applied twice in each coordinate, since $(i\xi_j)^2 = -\xi_j^2$) shows that $\Delta$ in the variable $y$ corresponds to multiplication by $-|\xi|^2$. Also, note that

$$\widehat{\delta_x(y)}(\xi) = (2\pi)^{-n/2}\int_{\mathbb{R}^n} e^{-iy\cdot\xi}\,\delta(y-x)\,dy = (2\pi)^{-n/2}\, e^{-ix\cdot\xi},$$

and so we have to solve

∂