OPERATIONS RESEARCH CENTER

Working Paper

Solving Variational Inequality and Fixed Point Problems by

Averaging and Optimizing Potentials

by

T.L. Magnanti

G. Perakis

OR 324-97

December 1997

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

Solving Variational Inequality and Fixed Point Problems by

Averaging and Optimizing Potentials

Thomas L. Magnanti* and Georgia Perakis†

December 1997

Abstract

We introduce a general adaptive averaging framework for solving fixed point and variational

inequality problems. Our goals are to develop schemes that (i) compute solutions when the

underlying map satisfies properties weaker than contractiveness (for example, weaker forms of

nonexpansiveness), (ii) are more efficient than the classical methods even when the underlying

map is contractive, and (iii) unify and extend several convergence results from the fixed point

and variational inequality literatures.

To achieve these goals, we consider line searches that

optimize certain potential functions. As a special case, we introduce a modified steepest descent

method for solving systems of equations that does not require a previous condition from the

literature (the square of the Jacobian matrix is positive definite). Since the line searches we

propose might be difficult to perform exactly, we also consider inexact line searches.

Key Words:

Fixed Point Problems, Variational Inequalities, Averaging Schemes, Nonexpansive Maps, Strongly-f-Monotone Maps.

*Sloan School of Management and Department of Electrical Engineering and Computer Science, MIT, Cambridge, MA 02139.

†Operations Research Center, Cambridge, MA 02139.

‡Preparation of this paper was supported, in part, by NSF Grant 9634736-DMI from the National Science Foundation.

1 Introduction

Fixed point and variational inequality problems define a wide class of problems that arise in optimization as well as areas as diverse as economics, game theory, transportation science, and regional

science. Their widespread applicability motivates the need for developing and studying efficient

algorithms for solving them.

Often algorithms for solving problems in these various settings establish an algorithmic map $T : K \subseteq R^n \to K$, defined over a closed, convex (constraint) set $K$ in $R^n$, whose fixed point solution solves the original problem:

$$FP(T, K): \text{ Find } x^* \in K \subseteq R^n \text{ satisfying } T(x^*) = x^*. \qquad (1)$$

The algorithmic map T might be defined through the solution of a subproblem. For variational

inequality problems, examples of an algorithmic map T include a projection operator (see Proposition

1) or the solution of a simpler variational inequality subproblem (see, for example, [12] and [44]).

A standard method for solving fixed point problems for contractive maps $T(\cdot)$ in $R^n$ is to apply the iterative procedure

$$x_{k+1} = T(x_k). \qquad (2)$$

The classical Banach fixed point theorem shows that this method converges from any starting point

to the unique fixed point of T. When the map is nonexpansive instead of contractive, this algorithm

need not converge and, indeed, the map T need not have a fixed point (or it might have several). For

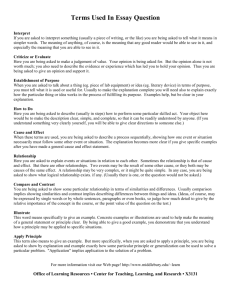

example, the sequence that the classical iteration (2) induces for the 900 degree rotation mapping

shown in Figure 1 does not converge to the solution x*.

x

T(x)

Figure 1: 90 ° Degree Rotation

2

In these situations, researchers (see [2], [10], [13], [27], [38], [39], [46]) have established convergence of recursive averaging schemes of the type

$$x_{k+1} = x_k + a_k(T(x_k) - x_k),$$

assuming that $0 \le a_k \le 1$ and $\sum_{k=1}^{\infty} a_k(1 - a_k) = +\infty$. Equivalently, this condition states that

$$\sum_{k=1}^{\infty} \min(a_k, 1 - a_k) = +\infty,$$

implying that the iterates lie far "enough" from the previous iterates as well as from the image of the previous iterates under the fixed point mapping. For variational inequality problems, averaging schemes of this type give rise to convergent algorithms using algorithmic maps $T$ that are nonexpansive rather than contractive (see Magnanti and Perakis [38]).
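The contrast between the classical iteration (2) and recursive averaging can be seen on the 90 degree rotation of Figure 1. The following is a minimal sketch, assuming the Euclidean norm in the plane and a constant step size $a_k = 1/2$ (a Dunn sequence, since $\sum_k a_k(1 - a_k) = +\infty$); the helper names `rotate90`, `iterate`, and `norm` are illustrative and do not come from the paper.

```python
import math

def rotate90(x):
    """Rotate a point in the plane by 90 degrees about the origin."""
    return (-x[1], x[0])

def iterate(T, x0, step, n):
    """Apply x <- x + step * (T(x) - x) n times and return the final point."""
    x = x0
    for _ in range(n):
        tx = T(x)
        x = (x[0] + step * (tx[0] - x[0]), x[1] + step * (tx[1] - x[1]))
    return x

def norm(x):
    return math.hypot(x[0], x[1])

x0 = (1.0, 0.0)
classical = iterate(rotate90, x0, 1.0, 101)  # iteration (2): a_k = 1
averaged = iterate(rotate90, x0, 0.5, 60)    # averaging with a_k = 1/2

print(norm(classical))  # distance from x* = 0 under the classical iteration
print(norm(averaged))   # distance from x* = 0 under averaging
```

Under these assumptions the classical iterates keep circling at their initial distance from $x^* = 0$, while each averaged step multiplies the distance by $1/\sqrt{2}$.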

Even though recursive averaging methods converge to a solution whenever the underlying fixed

point map is nonexpansive, they might converge very slowly. In fact, when the underlying map T

is a contraction, recursive averaging might converge more slowly than the classical iterative method

(2).

Motivated by these observations, in this paper we introduce and study a recursive averaging

framework for solving fixed point and variational inequality problems. Our goals are to (i) design

methods permitting a larger range of step sizes with better rates of convergence than the classical

iterative method (2) even when applied to contractive maps, (ii) impose assumptions on the map T

that are weaker than contractiveness, (iii) understand the role of nonexpansiveness, and (iv) unify

and extend several convergence results from the fixed point and variational inequality literature. To

achieve these goals, we introduce a general adaptive averaging framework that determines step sizes

by "intelligently" updating them dynamically as we apply the algorithm. For example, to determine

step sizes for nonexpansive maps, we adopt the following idea: unless the current iterate lies close

to a solution, the next iterate should not lie close to either the current iterate or its image under the

fixed point map T. As a special case, we introduce a modification of the classical steepest descent

method for solving fixed point problems (or equivalently, unconstrained asymmetric variational

inequality problems). This new method does not require the square of the Jacobian matrix to be

positive definite as does the classical steepest descent method, but rather that the Jacobian matrix

be positive semidefinite. Moreover, it is a globally convergent method even in the general nonlinear

case.

1.1 Preliminaries: Fixed Points and Variational Inequalities

Fixed point problems are closely related to variational inequality problems

$$VI(f, K): \text{ Find } x^* \in K \subseteq R^n : \; f(x^*)^t (x - x^*) \ge 0, \quad \forall x \in K \qquad (3)$$

defined over a closed, convex (constraint) set $K$ in $R^n$. In this formulation, $f : K \subseteq R^n \to R^n$ is a given function.

The following well-known proposition provides a connection between the two problems. In stating

this result and in the remainder of this paper, we let G be a positive definite and symmetric matrix.

We also let $Pr_K^G$ denote the projection operator on the set $K$ with respect to the norm $\|x\|_G = (x^t G x)^{1/2}$.

Proposition 1 ([25]): Let $\rho$ be a positive constant and $T$ be the map $T = Pr_K^G(I - \rho G^{-1} f)$. Then the solutions of the fixed point problem $FP(T, K)$ are the same as the solutions of the variational inequality problem $VI(f, K)$, if any.

Corollary 1 : Let $f = I - T$. Then the fixed point problem $FP(T, K)$ has the same solutions, if any, as the variational inequality problem $VI(f, K)$.

To state the results in this paper, we need to impose several conditions on the underlying fixed

point map T or the variational inequality map f. We first introduce a definition that captures many

well known concepts from the literature.

Definition 1 : A map $T : K \to K$ is $A$-domain and $B$-range coercive on the set $K$ with coercive constants $A \in R$ and $B \in R$ if

$$\|T(x) - T(y)\|_G^2 \le A\|x - y\|_G^2 + B\|x - T(x) - y + T(y)\|_G^2 \quad \forall x, y \in K. \qquad (4)$$

$T$ is a (range) coercive map on $K$ relative to the $\|\cdot\|_G$ norm if $A > 0$ and $B = 0$.

$T$ is a (range) contraction on $K$, relative to the $\|\cdot\|_G$ norm, if $0 < A < 1$ and $B = 0$, and is (range) nonexpansive on $K$, relative to the $\|\cdot\|_G$ norm, if $A = 1$ and $B = 0$.

$T$ is nonexpansive-contractive if $A = 1$ and $B < 1$,

$T$ is contractive-nonexpansive if $A < 1$ and $B = 1$,

$T$ is nonexpansive-nonexpansive if $A = 1$ and $B = 1$,

$T$ is firmly contractive on $K$ relative to the $\|\cdot\|_G$ norm if $0 < A < 1$ and $B = -1$,

and $T$ is firmly nonexpansive on $K$ relative to the $\|\cdot\|_G$ norm if $A = 1$ and $B = -1$.

Observe that any firmly contractive map is a contraction and any firmly nonexpansive map is

nonexpansive.

When $y$ in the definition of a nonexpansive map is restricted to be a fixed point solution, researchers have referred to the map $T$ as pseudo nonexpansive relative to the $\|\cdot\|_G$ norm (see [55]).

Observe that any nonexpansive map is also nonexpansive-contractive. The following example shows that the converse isn't necessarily true.

Example:

If $T(x) = -3x$ then $x^* = 0$. $T$ is an expansive map about $x^*$ since $\|T(x) - T(x^*)\| > \|x - x^*\|$ for all $x \ne x^*$. Nevertheless, the map $T$ satisfies the nonexpansive-contractive condition with $B = \frac{1}{2}$, since

$$9\|x\|^2 = \|T(x) - T(x^*)\|^2 = \|x - x^*\|^2 + B\|x - T(x)\|^2 = \|x\|^2 + \tfrac{1}{2} \cdot 16\|x\|^2 = 9\|x\|^2.$$
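The arithmetic in this example can be checked directly. The sketch below assumes the scalar case with the absolute value as norm and evaluates the condition of Definition 1 with $A = 1$, $B = 1/2$ and $y = x^*$ at a few sample points; the function `gap` and the list `samples` are illustrative names only.

```python
def T(x):
    return -3.0 * x

B = 0.5
x_star = 0.0

def gap(x):
    """Right-hand side minus left-hand side of the condition with A = 1, y = x*."""
    lhs = (T(x) - T(x_star)) ** 2
    rhs = (x - x_star) ** 2 + B * (x - T(x)) ** 2
    return rhs - lhs

samples = [-2.0, -0.5, 0.25, 1.0, 7.0]
gaps = [gap(x) for x in samples]

# T moves every sampled point farther from x* than it started.
expansive = all(abs(T(x) - T(x_star)) > abs(x - x_star) for x in samples)

print(gaps)
print(expansive)
```

A zero gap at every sample reflects that the condition holds with equality for this map, even though the map is expansive about $x^*$.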

Definition 2 : For variational inequality problems $VI(f, K)$, the following notions are useful.

$f$ is $b$-domain and $d$-range monotone (on the set $K$) with monotonicity constants $b$ and $d$ if

$$[f(x) - f(y)]^t G [x - y] \ge b\|x - y\|_G^2 + d\|f(x) - f(y)\|_G^2, \quad \forall x, y \in K.$$

As special cases:

(i) f is strongly domain and range monotone if b > 0 and d > 0.

(ii) f is strongly monotone if b > 0 and d = 0.

(iii) f is strongly-f-monotone if b = 0 and d > 0.

(iv) f is monotone if b = d = 0.

Contraction, nonexpansiveness, firm nonexpansiveness, pseudo nonexpansiveness, monotonicity, and

strong monotonicity are standard conditions in the literature (especially when G = I). Some authors

refer to strong monotonicity and strong-f-monotonicity as coercivity and co-coercivity (see [54], [57]).

Lemma 1: Let $T : R^n \to R^n$ and $f : R^n \to R^n$ be two given maps with $T = I - f$. Then $T$ is domain and range coercive with coercivity constants $A$ and $B$ if and only if $f$ is domain and range monotone with monotonicity constants $b = \frac{1-A}{2}$ and $d = \frac{1-B}{2}$. (We permit the coercivity and monotonicity constants to be negative.) That is,

$$\|T(x) - T(y)\|_G^2 \le A\|x - y\|_G^2 + B\|x - T(x) - y + T(y)\|_G^2$$

if and only if

$$[f(x) - f(y)]^t G [x - y] \ge \frac{1-A}{2}\|x - y\|_G^2 + \frac{1-B}{2}\|f(x) - f(y)\|_G^2.$$

Proof: Substitute $I - f = T$, expand $\|T(x) - T(y)\|_G^2$, and rearrange terms.
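The expansion behind Lemma 1 can be checked numerically. The sketch below assumes $G = I$ in $R^2$ and a hypothetical map `f` chosen only for illustration. It compares the slack in the coercivity inequality for $T = I - f$ with the slack in the monotonicity inequality for $f$, using $b = (1-A)/2$ and $d = (1-B)/2$; expanding the square shows that the first slack equals $-2$ times the second, so the two inequalities hold or fail together.

```python
import math
import random

def f(x):
    # Hypothetical nonlinear map, used only to exercise the identity.
    return (x[0] + math.sin(x[1]), x[1] ** 3)

def T(x):
    fx = f(x)
    return (x[0] - fx[0], x[1] - fx[1])

def sub(u, v):
    return (u[0] - v[0], u[1] - v[1])

def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]

def residuals(x, y, A, B):
    """Return (coercivity LHS - RHS for T, monotonicity LHS - RHS for f)."""
    dx, df, dT = sub(x, y), sub(f(x), f(y)), sub(T(x), T(y))
    coercive = dot(dT, dT) - A * dot(dx, dx) - B * dot(df, df)
    monotone = dot(df, dx) - 0.5 * (1 - A) * dot(dx, dx) - 0.5 * (1 - B) * dot(df, df)
    return coercive, monotone

random.seed(0)
max_err = 0.0
for _ in range(1000):
    x = (random.uniform(-2, 2), random.uniform(-2, 2))
    y = (random.uniform(-2, 2), random.uniform(-2, 2))
    A, B = random.uniform(-1, 2), random.uniform(-1, 2)
    c, m = residuals(x, y, A, B)
    max_err = max(max_err, abs(c + 2.0 * m))

print(max_err)  # deviation from the identity, up to rounding error
```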

Corollary 2 :

1. $T$ is nonexpansive relative to the $\|\cdot\|_G$ norm if and only if $f$ is strongly-f-monotone relative to the matrix $G$ with a monotonicity constant $d = \frac{1}{2}$.

2. If $T$ is contractive relative to the $\|\cdot\|_G$ norm, then $f$ is strongly monotone relative to the matrix $G$ with a monotonicity constant $b = \frac{1-A}{2}$.

3. $T$ is nonexpansive-nonexpansive (nonexpansive-contractive) relative to the $\|\cdot\|_G$ norm with a coercivity constant $B \in [0, 1]$ ($B < 1$ in the nonexpansive-contractive case) if and only if $f$ is strongly-f-monotone with constant $d = \frac{1-B}{2}$.

4. $T$ is firmly contractive relative to the $\|\cdot\|_G$ norm with constant $A \in [0, 1)$ if and only if $f$ is domain and range monotone with constants $b = \frac{1-A}{2}$ and $d = 1$.

Proof of the Corollary: In Lemma 1, take $A = 1$ and $B = 0$ for part 1, $A < 1$ and $B = 0$ for part 2, $A = 1$ and $B = 1$ (or $B < 1$) for part 3, and $B = -1$ for part 4.

The following related result is also useful.

Proposition 2 ([8]): Let $\rho > 0$ be a given constant, $G$ a positive definite and symmetric matrix, and $f : K \subseteq R^n \to R^n$ a given function. Then the map $T = I - \rho G^{-1} f$ is nonexpansive with respect to the $\|\cdot\|_G$ norm if and only if $f$ is strongly-f-monotone.

We structure the remainder of this paper as follows. In Section 2 we introduce a general adaptive

framework for solving fixed point problems that evaluates step sizes through the optimization of a

potential. In Section 3 we introduce and study several choices of potentials as special cases. At each

step, we optimize these potentials to dynamically update the step sizes of the recursive averaging

scheme. We also examine rates of convergence for the various choices of potentials. To make the

computation of the step sizes easier to perform, in Section 4 we attempt to understand when the

schemes have a better rate of convergence than the classic iteration (2). In Section 5 we consider

inexact line searches. Finally, in Section 6 we address some open questions.

2 Potential Optimizing Methods: A General Case

2.1 An Adaptive Averaging Framework

We are interested in finding "good" step sizes $a_k$ for the following general iterative scheme

$$x_{k+1} = x_k(a_k) = x_k + a_k(T(x_k) - x_k).$$

For any positive definite and symmetric matrix $G$, the fixed point problem $FP(T, K)$ is equivalent to the minimization problem

$$\min_{x \in K} \|x - T(x)\|_G^2. \qquad (5)$$

The difficulty in using the equivalent optimization formulation (5) is that even when $T$ is a contractive map relative to the $\|\cdot\|_G$ norm, the potential $\|x - T(x)\|_G^2$ need not be a convex function.

Example:

Let $T(x) = x^{1/2}$ and $K = [1/2, 1]$. Then the fixed point problem becomes

$$\text{Find } x^* \in [1/2, 1] : \; (x^*)^{1/2} = x^*,$$

whose solution is $x^* = 1$. The mapping $T$ is contractive on $K$ since

$$\|T(x) - T(y)\| = |x^{1/2} - y^{1/2}| = \frac{|x - y|}{|x^{1/2} + y^{1/2}|} \le \frac{\sqrt{2}}{2}\,|x - y|$$

for all $x, y \ge 1/2$. The potential $g(x) = (x - T(x))^2 = (x - x^{1/2})^2$ is not convex on $[1/2, 9/16)$. In fact, since $g''(x) = 2 - \frac{3}{2\sqrt{x}} < 0$ for all $x < 9/16$, $g(x)$ is concave in the interval $[1/2, 9/16)$.
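The sign change of the second derivative at $x = 9/16$ can be confirmed numerically. This sketch assumes the closed form $g''(x) = 2 - \frac{3}{2\sqrt{x}}$, obtained by differentiating $g(x) = x^2 - 2x^{3/2} + x$ twice, and cross-checks it against a central finite difference; `second_difference` and its step `h` are illustrative choices.

```python
def g(x):
    """Potential (x - T(x))^2 for T(x) = x**0.5."""
    return (x - x ** 0.5) ** 2

def g2(x):
    """Closed-form second derivative of g."""
    return 2.0 - 1.5 / x ** 0.5

def second_difference(x, h=1e-4):
    """Central finite-difference approximation of g''(x)."""
    return (g(x + h) - 2.0 * g(x) + g(x - h)) / h ** 2

for x in (0.5, 0.55, 9.0 / 16.0, 0.8, 1.0):
    print(x, g2(x), second_difference(x))
```

The values are negative to the left of $9/16$, vanish at $9/16$, and are positive to the right, so $g$ is concave on $[1/2, 9/16)$ and convex on the rest of $K$.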

This example shows that the minimization problem (5) need not be a convex programming

problem.

Nevertheless, the equivalent minimization problem does motivate a class of potential

functions and a general scheme for computing the step sizes ak.

Adaptive Averaging Framework

$$a_k = \mathrm{argmin}_{a \in S}\; g(x_k(a)).$$

Later, we consider several choices for the potential $g(x_k(a))$. We assume the step size search set $S$ is a subset of $R$. Examples include $S = [0,1]$, $S = R_+$ and $S = [-1,0]$.
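One way to picture the framework is the following sketch. It assumes the potential $g(x) = \|x - T(x)\|^2$ in the Euclidean norm (the objective in (5)), replaces the exact line search by a search over a finite grid in $S = [0, 1]$, and uses the 90 degree rotation as the map $T$. The grid search and all function names are illustrative assumptions, not the paper's prescription.

```python
def rotate90(x):
    return (-x[1], x[0])

def residual_sq(T, x):
    """Potential ||x - T(x)||^2 in the Euclidean norm (G = I)."""
    tx = T(x)
    return (x[0] - tx[0]) ** 2 + (x[1] - tx[1]) ** 2

def averaged_point(T, x, a):
    """x(a) = x + a (T(x) - x)."""
    tx = T(x)
    return (x[0] + a * (tx[0] - x[0]), x[1] + a * (tx[1] - x[1]))

def adaptive_averaging(T, x0, grid, n_iter):
    """At each step pick the grid point a minimizing the potential at x(a)."""
    x, steps = x0, []
    for _ in range(n_iter):
        a = min(grid, key=lambda s: residual_sq(T, averaged_point(T, x, s)))
        steps.append(a)
        x = averaged_point(T, x, a)
    return x, steps

grid = [i / 100 for i in range(101)]  # finite stand-in for S = [0, 1]
x_final, steps = adaptive_averaging(rotate90, (1.0, 0.0), grid, 40)

print(steps[:5])
print(residual_sq(rotate90, x_final))
```

For the rotation, $\|x(a) - T(x(a))\|^2 = ((1-a)^2 + a^2)\,\|x - T(x)\|^2$, so the line search selects $a = 1/2$ at every step and the potential halves each iteration.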

We first make several observations, beginning with a relationship between $\|x - x^*\|_G^2$ and $\|x - T(x)\|_G^2$.

Preliminary Observations

Proposition 3 : If $T$ is a coercive map relative to the $\|\cdot\|_G$ norm with a coercive constant $A > 0$ and if $x \ne x^*$, then the following inequality is valid,

$$(1 + \sqrt{A})^2 \ge \frac{\|x - T(x)\|_G^2}{\|x - x^*\|_G^2} \ge (1 - \sqrt{A})^2. \qquad (6)$$

Proof: Lemma 1 with $y = x^*$ and $B = 0$ together with the inequality $|(x - T(x))^t G (x - x^*)| \le \|x - T(x)\|_G \, \|x - x^*\|_G$ imply that

$$\|x - T(x)\|_G \, \|x - x^*\|_G \ge \frac{1-A}{2}\|x - x^*\|_G^2 + \frac{1}{2}\|x - T(x)\|_G^2.$$

Dividing by $\|x - x^*\|_G^2$ on both sides and setting $y = \frac{\|x - T(x)\|_G}{\|x - x^*\|_G}$, we obtain

$$y^2 - 2y + (1 - A) \le 0.$$

This inequality holds for the values of $y$ lying between the two roots $y_1 = 1 + \sqrt{A}$ and $y_2 = 1 - \sqrt{A}$ of the quadratic. This result implies the inequality (6). Q.E.D.

Definition 3 : The sequence $\{x_k\}$ is asymptotically regular (with respect to the map $T$) if $\lim_{k \to \infty} \|x_k - T(x_k)\|_G^2 = 0$.

Proposition 4 :

i. If $T$ is a continuous map and the sequence $\{x_k\}$ converges to some fixed point $x^*$, then the sequence $\{x_k\}$ is asymptotically regular.

ii. If the sequence $\{x_k\}$ is bounded and asymptotically regular, then every limit point of this sequence is also a fixed point solution.

Proof: Property (i) is a direct consequence of continuity since if the sequence $\{x_k\}$ converges to some fixed point $x^*$, then the continuity of $T$ implies that $\lim_{k \to \infty} \|x_k - T(x_k)\|_G^2 = 0$.

Result (ii) follows from the observation that if the sequence $\{x_k\}$ is bounded, then it has at least one limit point. Asymptotic regularity then implies that every limit point is also a fixed point solution. Q.E.D.

Remark: If the sequence $\|x_k - x^*\|_G$ is convergent for every fixed point solution $x^*$, then the entire sequence $\{x_k\}$ converges to a fixed point solution.

This remark follows from property (ii) of Proposition 4 since every limit point $\bar{x}$ is also a fixed point solution $x^*$ and the sequence $\|x_k - x^*\|_G$ is convergent for every limit point $x^* = \bar{x}$. This result further implies that the limit point $\bar{x}$ of the sequence $\{x_k\}$ must be unique. Therefore, the entire sequence $\{x_k\}$ converges to a fixed point solution.

Corollary 3 ([38]): If $T$ is a nonexpansive map and for each $k = 1, 2, \ldots$, $x_{k+1} = x_k + a_k(T(x_k) - x_k)$, with $a_k \in [0, 1]$, then the following two statements are equivalent:

i. For some fixed point $x^*$, $\lim_{k \to \infty} \|x_k - x^*\|_G = 0$.

ii. The sequence $\{x_k\}$ is asymptotically regular.

Corollary 4 ([38]): Let $T$ be any coercive map with coercive constant $A$. Let $x_k(a) = x_k + a(T(x_k) - x_k)$ for any $0 \le a \le 1$ and let $x^*$ be any fixed point of $T$. Then,

i. $\|x_k(a) - x^*\|_G \le [1 - a(1 - \sqrt{A})]\,\|x_k - x^*\|_G$, and

ii. $\|x_k(a) - T(x_k(a))\|_G \le [1 - a(1 - \sqrt{A})]\,\|x_k - T(x_k)\|_G$.

Remark:

Corollary 4 implies that for any potential function $g(x_k(a))$ with $a \in [0, 1]$, if $T$ is nonexpansive and $x^*$ is any fixed point of $T$, then the iterates $x_k$ of the general scheme satisfy the following conditions:

i. $\|x_k - x^*\|_G$ is nonincreasing and, therefore, a convergent sequence.

ii. $\|x_k - T(x_k)\|_G$ is nonincreasing and, therefore, a convergent sequence.

In analyzing the convergence of the adaptive averaging framework, we will impose several conditions on the map $T$ and on the step sizes $\{a_k\}$ in the iterative scheme $x_{k+1} = x_k + a_k(T(x_k) - x_k)$. For this purpose, we will select the step sizes from a class $C$ of sequences $\{a_k\}$. For example, the class $C$ might be the set of all sequences generated by a family of potential functions $g$, that is, $a_k = \mathrm{argmin}_{a \in S}\, g(x_k(a))$, for some step size search set $S$, some potential function $g$ and $x_k(a) = x_k + a(T(x_k) - x_k)$. Alternatively, $C$ might be the set of all Dunn sequences, that is, sequences satisfying the conditions $0 \le a_k \le 1$ and $\sum_{k=1}^{\infty} a_k(1 - a_k) = +\infty$.

Convergence Analysis

For some positive definite, symmetric matrix $G$ and any sequence of step sizes $\{a_k\}$ from class $C$, for a fixed point solution $x^*$ of the map $T$, we define the quantity

$$A_k(x^*) = \frac{\|x_k - x^*\|_G^2 - \|T(x_k) - T(x^*)\|_G^2}{\|x_k - T(x_k)\|_G^2} + (1 - a_k).$$

Observe that if $T$ is nonexpansive, then the first term in the definition of $A_k(x^*)$ is nonnegative. If, additionally, $0 < a_k \le 1$, then the quantity $A_k(x^*)$ is nonnegative. Therefore, when $0 < a_k \le 1$, the condition $A_k(x^*) \ge 0$ provides a generalization of the condition that $T$ is a nonexpansive map.

We use a generalization of this condition by imposing the following assumptions in our convergence analysis.

A1. For any fixed point $x^*$ of the map $T$, $a_k A_k(x^*)$ is nonnegative.

A2. For any fixed point $x^*$ of the map $T$, if $a_k A_k(x^*)$ converges to zero, then every limit point of the sequence $\{x_k\}$ is a fixed point solution.

As we have already observed, whenever $T$ is nonexpansive and $0 < a_k \le 1$, assumption A1 is valid.

The following theorem establishes a convergence result for the general adaptive averaging framework.

Theorem 1 : Consider iterates of the type $x_{k+1} = x_k + a_k(T(x_k) - x_k)$, with step sizes $a_k$ chosen from a class $C$. Assume that the map $T$ has a fixed point solution $x^*$. If the map $T$ and the sequence $\{a_k\}$ in the class $C$ generated by the adaptive averaging framework satisfy conditions A1 and A2, then the sequence of iterates $\{x_k\}$ converges to a fixed point solution.

Proof: Let $F(x) = x - T(x)$. Consider any fixed point $x^*$ of map $T$. Observe that

$$A_k(x^*) = \frac{\|x_k - x^*\|_G^2 - \|T(x_k) - T(x^*)\|_G^2}{\|x_k - T(x_k)\|_G^2} + (1 - a_k) = \frac{2(F(x_k) - F(x^*))^t G (x_k - x^*)}{\|F(x_k) - F(x^*)\|_G^2} - a_k.$$

Suppose $F(x_k) \ne 0$. Since $x^*$ is a fixed point solution of the map $T$, $F(x^*) = 0$ and, therefore,

$$\|x_{k+1} - x^*\|_G^2 = \|x_k - a_k F(x_k) - x^*\|_G^2 = \|x_k - x^*\|_G^2 + a_k^2\|F(x_k) - F(x^*)\|_G^2 - 2a_k(F(x_k) - F(x^*))^t G (x_k - x^*)$$

$$= \|x_k - x^*\|_G^2 - a_k\|x_k - T(x_k)\|_G^2 \left[\frac{\|x_k - x^*\|_G^2 - \|T(x_k) - T(x^*)\|_G^2}{\|x_k - T(x_k)\|_G^2} + (1 - a_k)\right].$$

That is,

$$\|x_{k+1} - x^*\|_G^2 = \|x_k - x^*\|_G^2 - a_k A_k(x^*)\,\|x_k - T(x_k)\|_G^2. \qquad (7)$$

Relation (7) and assumption A1 imply that the sequence $\|x_k - x^*\|_G^2$ is nonincreasing and, therefore, is convergent. This result implies that either (i) $\|x_k - T(x_k)\|_G^2$ converges to zero and, therefore, Proposition 4 implies that every limit point of the sequence of iterates $\{x_k\}$ is also a fixed point solution, or (ii) $a_k A_k(x^*)$ converges to zero and then assumption A2 implies that every limit point of the sequence of iterates $\{x_k\}$ is a fixed point solution. If either (i) or (ii) holds, then the entire sequence of iterates $\{x_k\}$ converges to a fixed point solution since as we already established in (7), $\|x_k - x^*\|_G^2$ is convergent for every fixed point $x^*$. Q.E.D.
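Relation (7) is an algebraic identity that holds for any map with $T(x^*) = x^*$ and any step size, so it can be checked numerically. The sketch below assumes $G = I$ in $R^2$ and a hypothetical nonlinear map `T` with fixed point $x^* = 0$; the sampling ranges are arbitrary and chosen so that $x_k - T(x_k)$ stays away from zero.

```python
import math
import random

def T(x):
    # Hypothetical map with T(0) = 0, used only for illustration.
    return (math.sin(x[1]), 0.5 * x[0])

x_star = (0.0, 0.0)

def sq_dist(u, v):
    return (u[0] - v[0]) ** 2 + (u[1] - v[1]) ** 2

def A_k(x, a):
    """The quantity A_k(x*) defined before assumptions A1 and A2."""
    first = (sq_dist(x, x_star) - sq_dist(T(x), T(x_star))) / sq_dist(x, T(x))
    return first + (1.0 - a)

def relation_7_gap(x, a):
    """Left-hand side minus right-hand side of relation (7)."""
    tx = T(x)
    x_next = (x[0] + a * (tx[0] - x[0]), x[1] + a * (tx[1] - x[1]))
    lhs = sq_dist(x_next, x_star)
    rhs = sq_dist(x, x_star) - a * A_k(x, a) * sq_dist(x, tx)
    return lhs - rhs

random.seed(1)
max_gap = 0.0
for _ in range(1000):
    x = (random.uniform(0.5, 2.0), random.uniform(0.5, 2.0))
    a = random.uniform(-1.0, 2.0)  # step sizes need not lie in [0, 1]
    max_gap = max(max_gap, abs(relation_7_gap(x, a)))

print(max_gap)  # deviation from relation (7), up to rounding error
```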

This result extends Banach's fixed point theorem since when $T$ is a contraction, a choice of $a_k = 1$ for all $k$ satisfies assumptions A1 and A2 (see also Lemma 2).

As we next show, this theorem also includes as a special case Dunn's averaging results (see [13], [38]).

Theorem 2 : Suppose that $T$ is a nonexpansive map and the step sizes $a_k$ from the class $C$ are a Dunn sequence and that $T$ has a fixed point solution $x^*$. Then the map $T$ and the step sizes $a_k$ in the class $C$ satisfy assumptions A1 and A2.

Proof: Assume as in Dunn's Theorem that $T$ is a nonexpansive map and that $a_k \in [0, 1]$ satisfies the condition $\sum_k a_k(1 - a_k) = +\infty$. Since $T$ is a nonexpansive map and $a_k \in [0, 1]$, $A_k(x^*) \ge 0$ (that is, A1 holds).

To establish the validity of assumption A2, we suppose that it does not hold: $a_k A_k(x^*)$ converges to zero, but some limit point of the sequence $\{x_k\}$ is not a solution. In the proof of Theorem 1, we showed that

$$\|x_{k+1} - x^*\|_G^2 - \|x_k - x^*\|_G^2 \le -a_k A_k(x^*)\,\|x_k - T(x_k)\|_G^2,$$

which implies (since $a_k A_k(x^*) \ge 0$) that the sequence $\{\|x_k - x^*\|_G^2\}$ converges for every fixed point solution $x^*$. Corollary 4 implies that since $a_k \in [0, 1]$, the sequence $\{\|x_k - T(x_k)\|_G\}$ converges. Since we assumed that some limit point of the sequence $\{x_k\}$ is not a solution, $\|x_k - T(x_k)\|_G^2 \ge B > 0$ for all $k \ge k_0$, for a sufficiently large constant $k_0$. Then

$$\lim_{k \to \infty} \|x_{k+1} - x^*\|_G^2 - \|x_{k_0} - x^*\|_G^2 \le -\sum_{k=k_0}^{\infty} a_k A_k(x^*) B.$$

But since $T$ is nonexpansive, $\sum_k a_k A_k(x^*) \ge \sum_k a_k(1 - a_k) = +\infty$. Therefore, $\sum_k a_k A_k(x^*) = +\infty$. This result is a contradiction since it implies that

$$-\infty < \lim_{k \to \infty} \|x_{k+1} - x^*\|_G^2 - \|x_{k_0} - x^*\|_G^2 \le -\sum_{k=k_0}^{\infty} a_k A_k(x^*) B = -\infty.$$

Therefore, if $a_k A_k(x^*)$ converges to zero, then every limit point of the sequence $\{x_k\}$ is a fixed point solution (that is, assumption A2 is valid). We conclude that the assumptions of Dunn's theorem imply the assumptions of Theorem 1 and, therefore, Dunn's theorem becomes a special case. Q.E.D.

Example:

Consider the map $T(x) = x\sqrt{1 - \|x\|}$ over the set $\{x : \|x\| \le 1\}$. The fixed point solution $x^* = 0$ is unique. The map $T$ is nonexpansive around solutions, since

$$\|T(x) - T(x^*)\| = \|x\|\sqrt{1 - \|x\|} \le \|x\| = \|x - x^*\|.$$

If we choose step sizes $a_k = 1$ for all $k$, then Dunn's averaging result does not apply since $\sum_k a_k(1 - a_k) = 0 < +\infty$. Banach's fixed point theorem also does not apply since $T$ is a nonexpansive but not a contractive map. Nevertheless, Theorem 1 ensures convergence since $a_k = 1$ for all $k$ is bounded away from zero and

$$A_k(x^*) = \frac{\|x_k - x^*\|^2 - \|T(x_k) - x^*\|^2}{\|x_k - T(x_k)\|^2} + 1 - a_k = \frac{\|x_k\|^3}{\|x_k - T(x_k)\|^2} > 0$$

whenever $x_k \ne 0$, and therefore, assumptions A1 and A2 hold. Therefore, Theorem 1 applies for this choice of the map $T$ and step sizes.
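The behavior in this example can be observed by running the classical iteration ($a_k = 1$) directly. The sketch below assumes the Euclidean norm in $R^2$ and an arbitrary starting point with $\|x_0\| = 0.5$; it records $\|x_k\|$ and the value of $A_k(x^*)$ along the trajectory. The names `norms` and `a_values` are illustrative.

```python
import math

def T(x):
    """T(x) = x * sqrt(1 - ||x||) on the unit disk."""
    r = math.hypot(x[0], x[1])
    s = math.sqrt(1.0 - r)
    return (s * x[0], s * x[1])

def A_k(x, a=1.0):
    """A_k(x*) for x* = 0, computed from its definition."""
    tx = T(x)
    num = math.hypot(*x) ** 2 - math.hypot(*tx) ** 2
    den = (x[0] - tx[0]) ** 2 + (x[1] - tx[1]) ** 2
    return num / den + (1.0 - a)

x = (0.3, 0.4)  # ||x|| = 0.5
norms = [math.hypot(*x)]
a_values = []
for _ in range(10000):
    a_values.append(A_k(x))
    x = T(x)
    norms.append(math.hypot(*x))

print(norms[-1])      # distance to x* = 0 after 10000 steps
print(min(a_values))  # smallest A_k(x*) seen along the trajectory
```

The distance to $x^* = 0$ decreases at every step but only slowly, consistent with a map that is nonexpansive without being a contraction, while $A_k(x^*)$ stays bounded away from zero.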

Remarks:

1. Observe that the iterates we considered in Theorem 1 do not necessarily require that the step sizes lie between zero and one.

2. Theorem 1 does not require the map $T$ to be nonexpansive. Rather it requires assumptions A1 and A2. The convergence result depends upon not only the step sizes $a_k$, but also the quantity

$$A_k(x^*) = \frac{\|x_k - x^*\|_G^2 - \|T(x_k) - T(x^*)\|_G^2}{\|x_k - T(x_k)\|_G^2} + (1 - a_k),$$

which measures both how far the step size $a_k$ lies from one and "how far" the map $T$ lies from "nonexpansiveness" relative to some $G$ norm. Next we examine how restrictive assumptions A1 and A2 are and how we can replace them with the various versions of coerciveness we defined in Definition 1.

(a) If $S \subseteq [0, 1]$ and $T$ is a nonexpansive map relative to a $G$ norm around fixed point solutions, then assumptions A1 and A2 are valid, that is,

$$a_k A_k(x^*) = a_k\,\frac{\|x_k - x^*\|_G^2 - \|T(x_k) - T(x^*)\|_G^2}{\|x_k - T(x_k)\|_G^2} + a_k(1 - a_k) \ge a_k(1 - a_k) \ge 0. \qquad (8)$$

Conclusion from (a):

If $T$ is a nonexpansive map relative to a $G$ norm and the set $S \subseteq [0, 1]$, then all schemes that "repel" $a_k$ from zero and one, unless at a solution, induce a sequence of iterates that converges to a solution.

(b) As a special case of subcase (a) assume that the step size search set is $S = [c_1, c_2]$ for some $0 < c_1 \le c_2 < 1$ (see, for example, scheme III in the next section) and that $T$ is a nonexpansive map. Relation (8) becomes $a_k A_k(x^*) \ge c_1(1 - c_2) > 0$. Then assumptions A1 and A2 follow easily. This observation is valid regardless of the choice of potential $g$.

(c) Theorem 1 is valid for maps $T$ that are weaker than nonexpansive. What happens if a map $T$ satisfies the nonexpansive-contractiveness condition around solutions $x^*$? Then for step sizes $0 < a_k \le c < 1 - B$, $A_k(x^*) \ge 1 - B - a_k \ge 1 - B - c > 0$. Assumptions A1 and A2 follow for all schemes that "repel" $a_k$ from zero unless at a solution. It is important to observe that there are maps $T$ satisfying the nonexpansive-contractiveness condition that are weaker than nonexpansive.

Conclusion from (c):

If $S = [0, c] \subset [0, 1 - B)$ and $T$ is a nonexpansive-contractive map with coercivity constant $0 < B < 1$, then schemes that "repel" $a_k$ from zero unless at a solution induce a sequence of iterates that converges to a solution.
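The expansive map $T(x) = -3x$ from Section 1.1, which is nonexpansive-contractive with $B = 1/2$, gives a concrete instance of case (c). The sketch below assumes a constant step size $a_k = 0.4 < 1 - B$ and compares it with the classical iteration $a_k = 1$; the function `run` and its defaults are illustrative.

```python
def T(x):
    return -3.0 * x

def run(step, x0=1.0, n=50):
    """Iterate x <- x + step * (T(x) - x) n times."""
    x = x0
    for _ in range(n):
        x = x + step * (T(x) - x)
    return x

classical = run(1.0, n=10)  # a_k = 1: each step multiplies x by -3
averaged = run(0.4)         # a_k = 0.4 < 1 - B: each step multiplies x by -0.6

print(classical)
print(averaged)
```

With $a_k = 0.4$ the update reads $x_{k+1} = (1 - 4a_k)\,x_k = -0.6\,x_k$, so the iterates contract toward $x^* = 0$ even though $T$ itself is expansive.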

(d) Another class of maps $T$ weaker than nonexpansive that satisfy Theorem 1 are maps that satisfy the following condition

$$\|T(x) - T(x^*)\|_G^2 \ge \|x - x^*\|_G^2 + B\|x - T(x)\|_G^2 \quad \forall x \in K, \text{ for some constant } B > 1. \qquad (9)$$

Maps $T$ satisfying this condition are expansive. Then for step sizes $0 > a_k \ge c > 1 - B$, $A_k(x^*) \le 1 - B - a_k \le 1 - B - c < 0$. Assumptions A1 and A2 follow for all schemes that "repel" $a_k$ from zero unless at a solution.

Conclusion from (d):

If $S = [c, 0] \subset (1 - B, 0]$ and $T$ is a map satisfying condition (9), then schemes that "repel" $a_k$ from zero unless at a solution induce a sequence of iterates that converges to a solution.

(e) Finally, we observe that for contractive maps, if we consider step sizes in $S = [0, 1]$ and schemes that "repel" $a_k$ from zero unless at a solution, then assumptions A1 and A2 are also valid. The following lemma illustrates this observation.

Lemma 2 : Let $T$ be a contractive map relative to a $\|\cdot\|_G$ norm. Then for any choice of step sizes $a_k \in [0, 1]$ and schemes that "repel" $a_k$ from zero unless at a solution, the sequence $\{x_k\}$ satisfies assumptions A1 and A2.

Proof: If $T$ is a contractive map relative to the $G$ norm with a contractive constant $A \in (0, 1)$, then Corollary 2 implies that

$$A_k(x^*) = \frac{2(F(x_k) - F(x^*))^t G (x_k - x^*)}{\|F(x_k) - F(x^*)\|_G^2} - a_k \ge \frac{(1 - A)\|x_k - x^*\|_G^2}{\|F(x_k) - F(x^*)\|_G^2} + 1 - a_k \ge \frac{(1 - A)\|x_k - x^*\|_G^2}{\|F(x_k) - F(x^*)\|_G^2} > 0. \qquad (10)$$

It is easy to see that expression (10) and the fact that the sequence $\|x_k - x^*\|_G$ is nonincreasing imply assumptions A1 and A2. Q.E.D.

Conclusion from (e):

If $S = [0, 1]$ and $T$ is a contractive map, then algorithmic schemes that "repel" $a_k$ from zero unless at a solution induce a sequence of iterates that converges to a solution.

The discussion in (a)-(e) suggests that in order to satisfy assumptions A1 and A2 in Theorem 1, we may either (i) restrict the line searches we perform to a set $S \subseteq [0, 1)$ or $(-1, 0]$, so that our results apply for maps that satisfy a condition weaker than nonexpansiveness, or (ii) extend the line searches to a set $S \supseteq [0, 1]$ or $[-1, 0]$ but as a result we might need to impose stronger assumptions either on the map $T$ or on the step sizes $a_k$.

Generalized Norms

Our analysis so far applies if we consider the conditions of contractiveness, nonexpansiveness and strictly weak nonexpansiveness relative to a $\|\cdot\|_G$ norm. We observe that the analysis also applies if we impose versions of the conditions with respect to a generalized norm $P$.

In particular, we might consider a generalized norm $P : K \times K \to R$ satisfying the following properties:

1. $P(x^*, T(x^*)) = 0$ if and only if $x^*$ is a solution.

2. $P(x, x) = 0$.

3. $P(x, T(x)) \ge 0$, $P(x, x^*) \ge 0$.

4. $P$ is convex relative to the first component, that is, $P(\cdot, y)$ is convex for all fixed $y$, and for all points $x_1$, $z_1$ and $a \in R$, some constant $D_1 \ge 0$ satisfies the condition

$$P(a x_1 + (1 - a) z_1, y) \le a P(x_1, y) + (1 - a) P(z_1, y) - D_1 a(1 - a) P(z_1, x_1).$$

(If $D_1 > 0$, then $P$ is strictly convex).

Some Examples of Generalized Norms:

1) $P(x, y) = \|x - y\|_G$ or $P(x, y) = \|x - y\|_G^2$ for some positive definite and symmetric matrix $G$.

2) For variational inequality problems $VI(f, K)$, let $P(x, y) = f(x)^t(x - y)$. Suppose $f(x) = Mx - c$ and the matrix $M$ is positive semidefinite. Then a possible choice of a map $T$ could be $T(x) \in \mathrm{argmin}_{y \in K} f(x)^t y$.

3) For variational inequality problems $VI(f, K)$, let $P(x, y) = (f(x) - f(y))^t(x - y)$.

Using generalized norms, we can extend the notions of coerciveness as follows.

Definition 4 : A map $T$ is coercive around solutions $x^*$ relative to a generalized norm $P$, if for some constant $A > 0$ and for all $x$

$$P(T(x), x^*) \le \sqrt{A}\, P(x, x^*).$$

If $A = 1$, then $T$ is a nonexpansive map relative to the generalized norm $P$ around solutions.

If $A < 1$, then $T$ is a contractive map relative to the generalized norm $P$ around solutions.

It will be useful in our subsequent analysis to observe that if $P(x, x^*) = 0$ and $P(T(x), x^*) = 0$ then $P(x, T(x)) = 0$. This observation follows from property 4 of the generalized norm conditions. Property 1 then also implies that $x$ is a solution.

Let us now consider a generalized norm $P$ with the following two additional assumptions.

5. The generalized norm $P$ is convex with respect to the second component. That is, for a fixed $x$ and for all points $z_2$, $y_2$ and $a \in R_+$, some constant $D_2 \ge 0$ satisfies

$$P(x, a y_2 + (1 - a) z_2) \le a P(x, y_2) + (1 - a) P(x, z_2) - D_2 a(1 - a) P(z_2, y_2).$$

(If $D_2 > 0$, then $P$ is strictly convex).

6. The generalized norm $P$ satisfies the triangle inequality,

$$P(x, y) \le P(x, z) + P(z, y).$$

Lemma 3 : Under assumptions 1-6 on the generalized norm $P$ and for a map $T$ that is coercive relative to $P$, for all $a \in [0, 1]$,

$$P(x_k(a), T(x_k(a))) \le [1 - a(1 - \sqrt{A})]\, P(x_k, T(x_k)).$$

Proof: The convexity assumption 4 implies that for all $a \in [0, 1]$,

$$P(x_k(a), T(x_k(a))) \le a P(T(x_k), T(x_k(a))) + (1 - a) P(x_k, T(x_k(a)))$$

(then the triangle inequality 6 implies that)

$$\le P(T(x_k), T(x_k(a))) + (1 - a) P(x_k, T(x_k))$$

(the definition of coerciveness further implies that)

$$\le \sqrt{A}\, P(x_k, x_k(a)) + (1 - a) P(x_k, T(x_k))$$

(the convexity assumption 5 implies that)

$$\le a\sqrt{A}\, P(x_k, T(x_k)) + (1 - a)\sqrt{A}\, P(x_k, x_k) + (1 - a) P(x_k, T(x_k))$$

(finally, assumption 2 implies that)

$$\le a\sqrt{A}\, P(x_k, T(x_k)) + (1 - a) P(x_k, T(x_k)).$$

Q.E.D.
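The bound of Lemma 3 can be illustrated in the simplest setting. The sketch below assumes $P(x, y) = \|x - y\|$ in the Euclidean norm and a hypothetical map that rotates by 90 degrees and shrinks by $\sqrt{A} = 0.5$, so that $\|T(x) - T(y)\| = \sqrt{A}\,\|x - y\|$. It compares both sides of the inequality on a grid of step sizes in $[0, 1]$; `SQRT_A`, `x_k`, and `slack` are illustrative names.

```python
import math

SQRT_A = 0.5  # assumed contraction modulus of the hypothetical map below

def T(x):
    """Rotate by 90 degrees and shrink by SQRT_A."""
    return (-SQRT_A * x[1], SQRT_A * x[0])

def residual(x):
    """P(x, T(x)) with P(x, y) = ||x - y||."""
    tx = T(x)
    return math.hypot(x[0] - tx[0], x[1] - tx[1])

def averaged_point(x, a):
    tx = T(x)
    return (x[0] + a * (tx[0] - x[0]), x[1] + a * (tx[1] - x[1]))

x_k = (1.0, 2.0)
slack = []
for i in range(11):
    a = i / 10
    bound = (1.0 - a * (1.0 - SQRT_A)) * residual(x_k)
    slack.append(bound - residual(averaged_point(x_k, a)))

print(slack)  # bound minus actual residual for a = 0, 0.1, ..., 1
```

For this map the bound is tight at $a = 0$ and $a = 1$ and strict in between.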

In the previous analysis, we presented choices of maps $T$ and step sizes $a_k$ that imply assumptions A1 and A2. Next we illustrate a specific class of potentials satisfying assumptions A1 and A2 as well. This class of potentials is only a special case. Theorem 1 also applies to other potential functions as we will show in detail in Section 3. To state these results in a more general form, we use the generalized norm concept that we have introduced in this section.

2.2 A Class of Potential Functions

The previous discussion related assumptions A1 and A2 to the notions of contractiveness, nonexpansiveness, and strictly weak nonexpansiveness. We next address the following natural question:

Is there a class of potentials satisfying assumptions A1 and A2?

To provide a partial answer to this question, we consider potentials of the form

$$g(x_k(a)) = P(x_k(a), T(x_k(a)))^2 - \beta h(a) P(x_k, T(x_k))^2.$$

As we will see, these potentials satisfy assumptions A1 and A2 if we impose the following conditions: $P : K \times K \to R$ is a continuous function, $h : R \to R_+$ is a given continuous function, and $\beta > 0$ is a given constant satisfying the following assumptions

(i) $P$ is a generalized norm (that is, satisfies assumptions 1-6 from the last section).

(ii) $h(0) = 0$.

(iii) For some point $a \in S \cap (0, 1)$, $h(a) > 0$.

(iv) $h$ is bounded from above on $S \cap [0, 1]$, that is, $0 < C = \sup_{a \in S \cap [0,1]} h(a) < +\infty$.

(v) 1 < C.

The following result provides a bound on the rate of convergence of the adaptive averaging framework for choices of potentials satisfying properties (i)-(v).

Proposition 5 : Consider the class of potentials g satisfying the properties (i)-(iv). The general scheme converges at a rate

$$P(x_{k+1}, T(x_{k+1}))^2 \le [1 - \beta(C - h(a_k))] P(x_k, T(x_k))^2. \qquad (11)$$

Furthermore, for step sizes $a_k \in [0, 1] \cap S$,

$$P(x_{k+1}, T(x_{k+1}))^2 \le [1 - a_k(1 - \sqrt{A})]^2 P(x_k, T(x_k))^2. \qquad (12)$$

Proof: Select $a^* \in S \cap [0, 1]$. The iteration of the general averaging framework implies that

$$g(x_{k+1}) = P(x_{k+1}, T(x_{k+1}))^2 - \beta h(a_k) P(x_k, T(x_k))^2 \le P(x_k(a^*), T(x_k(a^*)))^2 - \beta h(a^*) P(x_k, T(x_k))^2.$$

Lemma 3 implies that $P(x_k(a^*), T(x_k(a^*)))^2 \le P(x_k, T(x_k))^2$ and so

$$g(x_{k+1}) = P(x_{k+1}, T(x_{k+1}))^2 - \beta h(a_k) P(x_k, T(x_k))^2 \le P(x_k, T(x_k))^2 - \beta h(a^*) P(x_k, T(x_k))^2,$$

which, by letting $h(a^*)$ approach C, implies the inequality (11).

On the other hand, if $a_k \in [0, 1] \cap S$, then Lemma 3 implies that

$$0 \le P(x_{k+1}, T(x_{k+1}))^2 \le (1 - a_k(1 - \sqrt{A}))^2 P(x_k, T(x_k))^2.$$

Q.E.D.

Remarks:

(1) Relation (12) is valid for any choice of $\beta$ (negative as well as positive).

(2) If C is not a limit point of $h(a_k)$, then for some constant $\bar h < C$, $h(a_k) \le \bar h$ for all $k \ge k_0$. Therefore, for all $k \ge k_0$,

$$P(x_{k+1}, T(x_{k+1}))^2 \le [1 - \beta(C - \bar h)] P(x_k, T(x_k))^2. \qquad (13)$$

(3) If the step sizes $a_k$ are bounded away from zero, i.e., $a_k \ge c > 0$ for all $k \ge k_0$, then

$$P(x_{k+1}, T(x_{k+1}))^2 \le [1 - c(1 - \sqrt{A})]^2 P(x_k, T(x_k))^2. \qquad (14)$$

The following proposition shows that potentials satisfying properties (i)-(v) also satisfy assumptions A1 and A2.

Proposition 6 : Consider potentials of the type

$$g(x_k(a)) = P(x_k(a), T(x_k(a)))^2 - \beta h(a) P(x_k, T(x_k))^2.$$

Assume that $P : K \times K \to \mathbb{R}$ is a continuous function, $h : \mathbb{R} \to \mathbb{R}_+$ is a given continuous function, and $\beta > 0$ is a given constant satisfying properties (i)-(v). Any such potential satisfies the following properties:

a. $\lim_{k \to \infty} a_k = 0$ implies that every limit point of $\{x_k\}$ is a fixed point solution (that is, assumption A1 is valid).

b. $h(a_k) \le 0$ implies that $x_k$ is a fixed point solution.

c. $\lim_{k \to \infty} h(a_k) = 0$ implies that every limit point of $\{x_k\}$ is a fixed point solution.

d. If $h(a_k) \le a_k A_k(x^*)$ and $a_k \ge 0$, then assumptions A1 and A2 are valid.

Proof: Before proving parts a-d, we observe that Proposition 5 implies that $\lim_{k \to \infty} P(x_k, T(x_k))$ exists.

a. If $\lim_{k \to \infty} a_k = 0$, then the iteration of the general scheme implies that

$$\lim_{k \to \infty} g(x_{k+1}) = \lim_{k \to \infty} \left[ P(x_{k+1}, T(x_{k+1}))^2 - \beta h(a_k) P(x_k, T(x_k))^2 \right] = \lim_{k \to \infty} P(x_k, T(x_k))^2 [1 - \beta h(a_k)]$$

$$= \lim_{k \to \infty} P(x_k, T(x_k))^2 \le \lim_{k \to \infty} \left[ P(x_k(a), T(x_k(a)))^2 - \beta h(a) P(x_k, T(x_k))^2 \right],$$

for any a. Therefore,

$$\lim_{k \to \infty} P(x_k(a), T(x_k(a)))^2 \ge (1 + \beta h(a)) \lim_{k \to \infty} P(x_k, T(x_k))^2.$$

Select $\bar a \in (0, 1] \cap S$ satisfying $h(\bar a) > 0$. Then Proposition 5 implies that $P(x_k(\bar a), T(x_k(\bar a)))^2 \le [1 - \bar a(1 - \sqrt{A})]^2 P(x_k, T(x_k))^2$. Therefore,

$$[1 - \bar a(1 - \sqrt{A})]^2 \lim_{k \to \infty} P(x_k, T(x_k))^2 \ge (1 + \beta h(\bar a)) \lim_{k \to \infty} P(x_k, T(x_k))^2.$$

Consequently, either $\lim_{k \to \infty} P(x_k, T(x_k)) = 0$, implying (by property (i) and the continuity of P) that every limit point of $\{x_k\}$ is a fixed point solution, or

$$[1 - \bar a(1 - \sqrt{A})]^2 \ge 1 + \beta h(\bar a).$$

But $h(\bar a) > 0$ implies that $[1 - \bar a(1 - \sqrt{A})]^2 > 1$, which is a contradiction. We conclude that for this class of potentials, assumption A1 is valid.

b. Select an $\bar a$ so that $1/\beta < h(\bar a)$. Since $1 < \beta C$, such an $\bar a$ exists. If $x_k$ is not a fixed point solution, then $P(x_k, T(x_k)) \ne 0$. The generalized nonexpansive property and the choice of $\bar a$ imply that

$$g(x_k(\bar a)) = P(x_k(\bar a), T(x_k(\bar a)))^2 - \beta h(\bar a) P(x_k, T(x_k))^2 < P(x_k, T(x_k))^2 - P(x_k, T(x_k))^2 = 0.$$

But if $h(a_k) \le 0$, then

$$g(x_{k+1}) = P(x_{k+1}, T(x_{k+1}))^2 - \beta h(a_k) P(x_k, T(x_k))^2 \ge 0.$$

Therefore, $0 \le g(x_{k+1}) \le g(x_k(\bar a)) < 0$, which is a contradiction.

c. Suppose that $\lim_{k \to \infty} h(a_k) = 0$ and that $\lim_{k \to \infty} P(x_k, T(x_k)) \ne 0$. Therefore, as we have shown in part a,

$$\lim_{k \to \infty} g(x_{k+1}) = \lim_{k \to \infty} P(x_{k+1}, T(x_{k+1}))^2 = \lim_{k \to \infty} P(x_k, T(x_k))^2 > 0.$$

The condition $1 - \beta C < 0$ implies that for some $\bar a \in [0, 1] \cap S$, $1 - \beta h(\bar a) < 0$. Consequently, since Lemma 3 implies that $P(x_k(\bar a), T(x_k(\bar a))) \le P(x_k, T(x_k))$,

$$\lim_{k \to \infty} g(x_k(\bar a)) = \lim_{k \to \infty} P(x_k(\bar a), T(x_k(\bar a)))^2 - \beta h(\bar a) \lim_{k \to \infty} P(x_k, T(x_k))^2 < \lim_{k \to \infty} P(x_k, T(x_k))^2 - \lim_{k \to \infty} P(x_k, T(x_k))^2 = 0.$$

Therefore, $0 < \lim_{k \to \infty} g(x_{k+1}) \le \lim_{k \to \infty} g(x_k(\bar a)) < 0$, which is a contradiction. This result implies that $\lim_{k \to \infty} P(x_k, T(x_k)) = 0$ and, therefore, that every limit point is a fixed point solution.

d. Part b and the fact that $h(0) = 0$ imply that $h(a_k) > 0$ and $a_k > 0$ whenever $x_k$ is not a solution. Consequently, if $x_k$ is not a solution, then $A_k(x^*) > 0$ as well (that is, assumption A2 is valid). Moreover, if $\lim_{k \to \infty} A_k(x^*) = 0$, then $\lim_{k \to \infty} h(a_k) = 0$. Then part c implies that every limit point of $\{x_k\}$ is a fixed point solution (that is, assumption A2 is valid). Q.E.D.

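Remark:

For example, if $h(a) = a(1 - a)$ and $\beta > 4$, then we can choose any $\bar a$ with

$$\tfrac{1}{2} - \sqrt{\tfrac{1}{4} - \tfrac{1}{\beta}} < \bar a < \tfrac{1}{2} + \sqrt{\tfrac{1}{4} - \tfrac{1}{\beta}}$$

so that $1/\beta < h(\bar a)$.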
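As a small numerical illustration of this remark, the following sketch computes the open interval of values $\bar a$ for which $\bar a(1 - \bar a) > 1/\beta$. The function name `admissible_interval` and the sample value $\beta = 8$ are assumptions made for illustration only; they do not appear in the paper.

```python
import math

def admissible_interval(beta):
    """Return (lo, hi) such that a * (1 - a) > 1 / beta exactly when lo < a < hi.

    Hypothetical helper: solves a(1 - a) = 1/beta, which requires beta > 4
    because the maximum of a(1 - a) on [0, 1] is 1/4.
    """
    if beta <= 4:
        raise ValueError("need beta > 4 so that 1/beta < max a(1-a) = 1/4")
    radius = math.sqrt(0.25 - 1.0 / beta)
    return 0.5 - radius, 0.5 + radius

lo, hi = admissible_interval(8.0)
midpoint = 0.5 * (lo + hi)
print(lo, hi, midpoint * (1 - midpoint) > 1 / 8.0)
```

Any value strictly inside the returned interval can play the role of $\bar a$ in part b of Proposition 6 for $h(a) = a(1-a)$.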

3 Potential Optimizing Methods: Specific Cases

In this section we apply the results from the previous section to special cases. We consider several choices for the potential function g and examine the convergence behavior for the sequence they generate. Table I summarizes some of these results.

Let $\beta \in \mathbb{R}$ be a given constant and

$$g(x_k(a)) = \|x_k(a) - T(x_k(a))\|_G^2 + \beta \|x_k(a) - x_k(0)\|_G^2. \qquad (15)$$

We consider several choices for the constant $\beta$.

Scheme I: Let $S = [0, 1]$ and $\beta > 0$; then (15) becomes $g_1(x_k(a)) = \|x_k(a) - T(x_k(a))\|_G^2 + \beta \|x_k(a) - x_k(0)\|_G^2$.

This potential stems from (5) and the observation that we do not allow the iterates to lie "far away" from each other.

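Lemma 4 : Suppose T is a contraction relative to the $\|\cdot\|_G$ norm and $\beta < 1 - A$. Then if $\lim_{k \to \infty} a_k = 0$, the sequence $\{x_k\}$ converges to a solution (that is, assumption A1 is valid).

Proof: If $\lim_{k \to \infty} a_k = 0$, then

$$\lim_{k \to \infty} \|x_k - T(x_k)\|_G^2 \le \lim_{k \to \infty} \|T(x_k) - T^2(x_k)\|_G^2 + \beta \lim_{k \to \infty} \|x_k - T(x_k)\|_G^2 \le (A + \beta) \lim_{k \to \infty} \|x_k - T(x_k)\|_G^2.$$

Since $\beta < 1 - A$, the right-hand side is strictly smaller than $\lim_{k \to \infty} \|x_k - T(x_k)\|_G^2$ unless this limit is zero. Therefore, $\lim_{k \to \infty} \|x_k - T(x_k)\|_G^2 = 0$. Proposition 4 and Corollary 4 imply that the sequence $\{x_k\}$ converges to a fixed point solution. Q.E.D.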

Theorem 3 : If T is a contractive map relative to the $\|\cdot\|_G$ norm, with a contraction constant $0 < A < 1$, then the sequence induced by scheme I converges to a solution for any choice $0 < \beta < 1 - A$.

Proof:

· First, we restrict the line searches so that $a_k \in [0, 1]$. In this case, the proof follows from Theorem 1. In fact, Lemma 4 implies that for any choice $\beta < 1 - A$, the step sizes $a_k$ satisfy assumption A1. Moreover, Lemma 2 implies that $A_k(x^*)$ satisfies assumptions A1 and A2.

· More generally, we perform unrestricted line searches in the sense of choosing step sizes $a_k \in \mathbb{R}_+$. Then the proof follows since the general iteration of the algorithm implies that

$$\|x_{k+1} - T(x_{k+1})\|_G^2 + \beta a_k^2 \|x_k - T(x_k)\|_G^2 \le \|x_k - T(x_k)\|_G^2.$$

Then

$$\|x_{k+1} - T(x_{k+1})\|_G^2 - \|x_k - T(x_k)\|_G^2 \le -\beta a_k^2 \|x_k - T(x_k)\|_G^2 \le 0.$$

Therefore,

$$\lim_{k \to \infty} a_k^2 \|x_k - T(x_k)\|_G^2 = 0.$$

This result implies that (i) either $\lim_{k \to \infty} a_k = 0$, or (ii) $\lim_{k \to \infty} \|x_k - T(x_k)\|_G^2 = 0$. In the former case, Lemma 4 implies that for any choice $\beta < 1 - A$, the sequence $\{x_k\}$ converges to a solution. In case (ii), Proposition 3 implies that the sequence $\{x_k\}$ converges to a solution. Q.E.D.

As the next corollary shows, scheme I extends to also include nonexpansive affine maps relative to the $\|\cdot\|_G$ norm.

Corollary 5 : If T is an affine, nonexpansive map, then the iterates $\{x_k\}$ of scheme I converge to a fixed point solution.

Proof: Let $d_k = T(x_k) - x_k$. For affine problems, the map $M = I - T$ is a positive semidefinite matrix, and since $x_k - T(x_k) = -d_k$,

$$a_k = \frac{d_k^t M^t G d_k}{\|M d_k\|_G^2 + \beta \|d_k\|_G^2}. \qquad (16)$$

Observe that if we let $\bar G = M^t G M + \beta G$ in the definition of $A_k(x^*)$, then substituting this choice of $\bar G$ and accounting for the fact that $M^t G$ is positive semidefinite, we see that

Ak(X*) >

MdMtGdk +

(Xk - x*)tM'ItG(xk - x*)

-IIMdkll +

(F(xk) - F(x*))tG(xk - x*)

Ad

dk1 + ,311dkil

dlldkl

2

Strong-f-monotonicity of f (or, equivalently, nonexpansiveness of T) easily implies that $A_k(x^*) \ge 0$ and that if either $a_k$ or $A_k(x^*)$ converges to zero, then $\{x_k\}$ converges to a solution (that is, assumptions A1 and A2 hold). Theorem 1 implies the conclusion. Q.E.D.
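The closed-form step size (16) for affine maps can be checked numerically. The sketch below assumes $G = I$ and uses a hypothetical $2 \times 2$ matrix M, direction d, and constant $\beta = 0.5$ chosen only for illustration; it compares the formula $a_k = d_k^t M^t d_k / (\|M d_k\|^2 + \beta \|d_k\|^2)$ with a brute-force grid minimization of the scheme I potential along the segment $x_k(a) = x_k + a d_k$.

```python
def matvec(M, v):
    """Product of a matrix (list of rows) with a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def scheme1_step(M, d, beta):
    """Closed-form minimizer from (16), assuming G = I."""
    Md = matvec(M, d)
    return dot(d, Md) / (dot(Md, Md) + beta * dot(d, d))

def g1(M, d, beta, a):
    """Scheme I potential along d for T(x) = x - Mx + c.

    The residual is x_k(a) - T(x_k(a)) = -d + a * M d, and
    x_k(a) - x_k(0) = a * d.
    """
    Md = matvec(M, d)
    residual = [a * m - di for m, di in zip(Md, d)]
    return dot(residual, residual) + beta * a * a * dot(d, d)

# Hypothetical data, not taken from the paper.
M = [[1.0, -1.0], [1.0, 1.0]]
d = [0.0, -2.0]
beta = 0.5

a_closed = scheme1_step(M, d, beta)
a_grid = min((i / 1000 for i in range(2001)), key=lambda a: g1(M, d, beta, a))
print(a_closed, a_grid)
```

The grid search is only a sanity check; in practice the closed form is what makes the affine case inexpensive.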

Lemma 5 : If T is affine and is domain and range coercive with coercivity constants A and B, then all iterates with $d_k \ne 0$ satisfy the inequality

$$a_k \ge \frac{1 - B}{2} + \frac{1 - A - \beta(1 - B)}{2((1 + \sqrt{A})^2 + \beta)}.$$

If T is nonexpansive, that is, $0 < A \le 1$ and $B = 0$, then for any choice of $\beta < 1 - A$, $a_k$ is bounded away from 1/2.

Proof: Lemma 1 implies, since T is domain and range coercive with coercivity constants A and B, that

$$w^t G M w \ge \frac{1 - A}{2} \|w\|_G^2 + \frac{1 - B}{2} \|M w\|_G^2.$$

Moreover, if $d_k \ne 0$, then Proposition 3 implies that

$$a_k = \frac{d_k^t M^t G d_k}{\|M d_k\|_G^2 + \beta \|d_k\|_G^2} \ge \frac{1 - B}{2} + \frac{1 - A - \beta(1 - B)}{2((1 + \sqrt{A})^2 + \beta)}. \qquad (17)$$

Q.E.D.

The following proposition provides a rate of convergence for scheme I.

Proposition 7 : For maps T that are contractive relative to the G norm with a contraction constant A satisfying the condition $\beta < 1 - A$, the iterates generated by scheme I satisfy the estimates:

$$\|x_{k+1} - T(x_{k+1})\|_G^2 \le [A + \beta(1 - a_k^2)] \|x_k - T(x_k)\|_G^2. \qquad (18)$$

Proof: The iteration of scheme I implies the inequality

$$\|x_{k+1} - T(x_{k+1})\|_G^2 + \beta a_k^2 \|x_k - T(x_k)\|_G^2 \le \|T(x_k) - T^2(x_k)\|_G^2 + \beta \|x_k - T(x_k)\|_G^2.$$

Since the map T is a contraction,

$$\|x_{k+1} - T(x_{k+1})\|_G^2 \le (A + \beta(1 - a_k^2)) \|x_k - T(x_k)\|_G^2,$$

implying (18). If $\beta < 1 - A$, then $I - T$ is a contraction with coercivity constant $0 < A + \beta < 1$. Q.E.D.

Remark:

If $a_k > 1$, then scheme I has a strictly better rate of convergence than the classical iteration (2). Observe that when T is an affine, firmly contractive map, a choice of $0 < \beta < \frac{1 - A}{2}$ and Lemma 5 imply that

$$a_k = \frac{d_k^t M^t G d_k}{\|M d_k\|_G^2 + \beta \|d_k\|_G^2} > 1.$$

Requiring the iterates to lie close to each other might be too much of a restriction. In fact, Proposition

7 shows that the rate of convergence of scheme I is, in the nonlinear contractive case, worse than the

rate of convergence of the classic iteration (2) unless the step size ak is greater than 1. Therefore,

motivated as before by the minimization problem (5), we consider the following potential.

Scheme II: Let $\beta = 0$ in expression (15). Then $g_2(x_k(a)) = \|x_k(a) - T(x_k(a))\|_G^2$ and $S = \mathbb{R}_+$.

Itoh, Fukushima and Ibaraki [28] have studied a line search scheme of this type in the context

of unconstrained variational inequality problems. They consider only strongly monotone problem

functions, which correspond to contractive fixed point maps.

Remark:

Let $T(x) = x - Mx + c$ be an affine, nonexpansive map relative to the $\|\cdot\|_G$ norm and let $d_k = T(x_k) - x_k$. Then

$$a_k = \operatorname{argmin}_{a \ge 0}\, g_2(x_k(a)) = \operatorname{argmin}_{a \ge 0} \|M x_k(a) - c\|_G^2 = \frac{d_k^t M^t G d_k}{\|M d_k\|_G^2}.$$

Lemma 6 : If T is an affine, contractive map relative to the $\|\cdot\|_G$ norm, with coercivity constant $0 < A \le 1$, then for all iterates k with $d_k \ne 0$,

$$a_k \ge \frac{1}{2} + \frac{1 - A}{2(1 + \sqrt{A})^2}.$$

Therefore, if $A < 1$, then $a_k$ is bounded away from 1/2.

Proof: The proof follows from Lemma 5 with $\beta = B = 0$. Q.E.D.

These results show that $a_k \ge \frac{1}{2}$ in the nonexpansive case (when $A = 1$).
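To make the bound of Lemma 6 concrete, the following sketch evaluates the scheme II step size $a_k = d_k^t M^t d_k / \|M d_k\|^2$ for a hypothetical affine contraction and compares it with the lower bound $\frac{1}{2} + \frac{1-A}{2(1+\sqrt{A})^2}$ as reconstructed here from Lemma 5 with $\beta = B = 0$. The map (half of a 90-degree rotation, so that $\|T(x) - T(y)\|^2 = \frac{1}{4}\|x - y\|^2$), the choice $G = I$, and the direction d are all assumptions made for illustration.

```python
import math

def T_linear(x):
    """Linear part of a hypothetical affine contraction: half of a 90-degree rotation."""
    return (0.5 * x[1], -0.5 * x[0])

def scheme2_step(d):
    """Scheme II step a_k = d^t M^t d / ||M d||^2 with M = I - T_linear and G = I."""
    Td = T_linear(d)
    Md = (d[0] - Td[0], d[1] - Td[1])
    return (d[0] * Md[0] + d[1] * Md[1]) / (Md[0] ** 2 + Md[1] ** 2)

# Contraction constant in the squared norm: ||T(x) - T(y)||^2 = A ||x - y||^2.
A = 0.25
lower_bound = 0.5 + (1 - A) / (2 * (1 + math.sqrt(A)) ** 2)
step = scheme2_step((3.0, -1.0))
print(step, lower_bound)
```

For this particular map the step size does not depend on the direction d, because d and its rotation are orthogonal.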

Lemma 7 : If T is a contraction with coercivity constant A, then $\lim_{k \to \infty} a_k = 0$ implies that every limit point of the sequence of iterates $\{x_k\}$ is a fixed point solution (that is, assumption A1 holds).

Proof: Suppose that $\lim_{k \to \infty} a_k = 0$, but $\lim_{k \to \infty} \|x_k - T(x_k)\|_G \ne 0$. Then,

$$\lim_{k \to \infty} \|x_k - T(x_k)\|_G^2 \le \lim_{k \to \infty} \|T(x_k) - T(T(x_k))\|_G^2.$$

Since T is a contraction mapping, $\|T(x_k) - T(T(x_k))\|_G^2 \le A \|x_k - T(x_k)\|_G^2$ and, therefore, $\lim_{k \to \infty} \|x_k - T(x_k)\|_G^2 \le A \lim_{k \to \infty} \|x_k - T(x_k)\|_G^2$, which is a contradiction. Consequently, $\lim_{k \to \infty} \|x_k - T(x_k)\|_G = 0$ and so Proposition 4 implies the conclusion. Q.E.D.

Remark:

The proof of this lemma also follows from Proposition 6 part a with $h = 0$. The class of potentials described in that proposition needs to satisfy properties (i)-(v) and, in particular, the map needs to be contractive with constant $0 < A < 1$.

The following theorem shows when scheme II works.

Theorem 4 : If T is an affine, nonexpansive map relative to the $\|\cdot\|_G$ norm, or if T is a nonlinear, contractive map relative to the $\|\cdot\|_G$ norm with coercivity constant A, then the sequence that scheme II induces converges to a fixed point solution.

Proof: First, we illustrate how the proof of this theorem follows from Theorem 1. Lemma 7 implies that the step sizes $a_k$ satisfy assumption A1. Moreover,

1) If T is a contractive map relative to the $\|\cdot\|_G$ norm and our line search is restricted to [0, 1], then Lemma 2 implies assumptions A1 and A2.

2) In the affine, nonexpansive case, we do not need to restrict the line search. In fact,

$$a_k = \frac{d_k^t M^t G d_k}{\|M d_k\|_G^2} \ge \frac{1}{2},$$

and so a choice of $\bar G = M^t G M$ implies, using strong-f-monotonicity, that $A_k(x^*) = a_k \ge \frac{1}{2}$. That is, assumptions A1 and A2 are valid.

In both cases, Theorem 1 implies the result.

Alternatively, for nonlinear, contractive mappings the result follows from the observation that

$$\|x_{k+1} - T(x_{k+1})\|_G^2 \le \|T(x_k) - T(T(x_k))\|_G^2 \le A \|x_k - T(x_k)\|_G^2.$$

This result implies that the sequence $\{\|x_k - T(x_k)\|_G^2\}$ converges to zero. Proposition 3 then implies the conclusion. Q.E.D.

The following proposition provides a rate of convergence for scheme II.

Proposition 8 : For nonlinear, contractive maps relative to the $\|\cdot\|_G$ norm, with contraction constant $A \in (0, 1)$, the line search we considered in scheme II gives rise to the estimates:

$$\|x_{k+1} - T(x_{k+1})\|_G^2 \le A \|x_k - T(x_k)\|_G^2. \qquad (19)$$

Proof: For nonlinear, contractive mappings T,

$$\|x_{k+1} - T(x_{k+1})\|_G^2 \le \|T(x_k) - T(T(x_k))\|_G^2 \le A \|T(x_k) - x_k\|_G^2.$$

Q.E.D.

In the following discussion, to improve on the rate of convergence of the general scheme, we

consider potentials that involve a penalty term that pulls the iterates away from zero unless they

are approaching a solution.

Scheme III: Let $\beta < 0$ in expression (15). This is equivalent to replacing $\beta$ with $-\beta$ in (15) and letting $\beta > 0$. Then $g_3(x_k(a)) = \|x_k(a) - T(x_k(a))\|_G^2 - \beta \|x_k(a) - x_k(0)\|_G^2$. Moreover, we set $S = [0, c_1]$, with $0 < c_1 \le 1$.

Alternatively, letting $P(x, T(x)) = \|x - T(x)\|_G$, $h(a) = a^2$ and $\beta > 0$,

$$g_3(x_k(a)) = P(x_k(a), T(x_k(a)))^2 - \beta h(a) P(x_k, T(x_k))^2.$$

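Remarks:

1) For affine, nonexpansive maps relative to the $\|\cdot\|_G$ norm, we compute $a_k = \operatorname{argmin}_{a \in [0, c_1]}\, g_3(x_k(a))$ as follows. Let

$$\bar a_k = \frac{d_k^t M^t G d_k}{\|M d_k\|_G^2 - \beta \|d_k\|_G^2}.$$

If $\|M d_k\|_G^2 \le \beta \|d_k\|_G^2$ or $\bar a_k > c_1$, then $a_k = c_1$; otherwise, $a_k = \bar a_k$.

2) Observe that when T is a contractive map with a contraction constant $A < 1$, Proposition 2 implies that for a choice of $\beta < (1 - \sqrt{A})^2$,

$$\frac{\|M d_k\|_G^2}{\beta \|d_k\|_G^2} > 1.$$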

Lemma 8 : Let T be an affine, contractive map, with a contraction constant $0 < A \le 1$. Then if $\|M d_k\|_G^2 > \beta \|d_k\|_G^2$ and $\beta < 4$,

$$\bar a_k \ge \frac{1}{2} + \frac{\beta + 1 - A}{2((1 + \sqrt{A})^2 - \beta)}.$$

Proof: The proof follows from Lemma 5 with $-\beta$ replacing $\beta$ and $B = 0$. Q.E.D.

In the nonexpansive case, $A = 1$. Therefore, $\bar a_k \ge \frac{1}{2} + \frac{\beta}{2(4 - \beta)}$.

Lemma 9 : If T is a nonexpansive map relative to the $\|\cdot\|_G$ norm, then A1 holds.

Proof: Observe that in scheme III, $h(a) = a^2$, $a \in [0, c_1]$. Consequently, this lemma becomes a special case of part a in Proposition 6. Q.E.D.

Theorem 5 : If T is a nonexpansive map relative to the $\|\cdot\|_G$ norm with a coercivity constant $0 < A \le 1$ and $a_k \le c_1 < 1$, then the sequence induced by scheme III converges to a fixed point solution.

Proof: First, let $c_1 < 1$. The facts that $a_k \le c_1 < 1$ and T is a nonexpansive map imply that $A_k(x^*) \ge 1 - c_1 > 0$ and, as a result, that assumptions A1 and A2 are valid. Moreover, Lemma 9 implies assumption A1 and Theorem 1 implies the result.

If we let $c_1 = 1$, then we need to assume that T is a contractive map, that is, $0 < A < 1$. Assumptions A1 and A2 are valid because

$$A_k(x^*) \ge (1 - A) \frac{\|x_k - x^*\|_G^2}{\|F(x_k) - F(x^*)\|_G^2}.$$

Theorem 1 again implies the result. Q.E.D.

The following proposition provides a rate of convergence for scheme III.

Proposition 9 : For a contractive (or nonexpansive) map T relative to the G norm with a coercivity constant $0 < A \le 1$, the line search we consider in scheme III gives rise to the estimates:

$$\|x_{k+1} - T(x_{k+1})\|_G^2 \le (A - \beta(c_1^2 - a_k^2)) \|x_k - T(x_k)\|_G^2, \qquad 0 < c_1 \le 1. \qquad (20)$$

Proof: In scheme III, $P(x, y) = \|x - y\|_G$ and $h(a) = a^2$, $a \in [0, c_1]$, with $0 < c_1 \le 1$. Then $a^* = c_1$ and $C = c_1^2$. Then, as in the proof of Proposition 5, the iteration of scheme III implies that

$$\|x_{k+1} - T(x_{k+1})\|_G^2 - \beta a_k^2 \|x_k - T(x_k)\|_G^2 \le \|x_k(c_1) - T(x_k(c_1))\|_G^2 - \beta c_1^2 \|x_k - T(x_k)\|_G^2.$$

Therefore,

$$\|x_{k+1} - T(x_{k+1})\|_G^2 \le (A - \beta(c_1^2 - a_k^2)) \|x_k - T(x_k)\|_G^2.$$

Q.E.D.

Remarks:

1. Observe that when the map T is contractive, 0 < A < 1, scheme III is at least as good as the

classical iterative method (2). Scheme III has a better rate of convergence than the classical

iterative method (2) when, for example, the step sizes ak converge to zero.

2. The choice of potential in scheme III requires that for nonexpansive maps, we perform a line

search with step sizes bounded away from 1. "How far" should the step sizes we consider lie

away from 1 ? Perhaps considering step sizes bounded away from 1 is too much of a restriction.

For this reason, we modify the potential function of scheme III as follows,

Scheme IV:

$$g_4(x_k(a)) = \|x_k(a) - T(x_k(a))\|_G^2 - \beta \|x_k(a) - x_k(0)\|_G \, \|x_k(a) - x_k(1)\|_G,$$

and set $S = \mathbb{R}_+$.

Proposition 10 : For nonexpansive maps T relative to the G norm,

$$\|x_{k+1} - T(x_{k+1})\|_G^2 \le \|x_k(\tfrac{1}{2}) - T(x_k(\tfrac{1}{2}))\|_G^2 - \beta (a_k - \tfrac{1}{2})^2 \|x_k - T(x_k)\|_G^2 \le [1 - \beta (a_k - \tfrac{1}{2})^2] \|x_k - T(x_k)\|_G^2. \qquad (21)$$

Proof: Observe that $P(x, y) = \|x - y\|_G$ and $a^* = \frac{1}{2}$. Therefore, the result follows from Proposition 5. Q.E.D.

Remarks:

1. The sequence $\{\|x_k - T(x_k)\|_G\}$ is nonincreasing and, therefore, converges despite the fact that we did not restrict the line search to the set $S = [0, 1]$.

2. Proposition 10 provides a convergence rate for scheme IV.

Lemma 10 : If T is a nonexpansive map relative to the $\|\cdot\|_G$ norm, then A1 is valid. Moreover, if $\lim_{k \to \infty} a_k = 1$, then every limit point of the sequence of iterates $\{x_k\}$ is a fixed point solution.

Proof: The proof follows from parts a and c in Proposition 6. Q.E.D.

The following theorem provides a convergence result as well as a convergence rate for this choice

of potential function.

Theorem 6 : For nonexpansive maps T relative to the G norm, the sequence $\{x_k\}$ that scheme IV generates converges to a fixed point solution.

Proof: Lemma 10 implies that assumption A1 is valid. Proposition 10 implies that if a limit point of $\{x_k\}$ is not a solution, then $a_k$ converges to $\frac{1}{2}$. This result, combined with the nonexpansiveness of the map T, implies that for $k_0$ large enough, for all $k \ge k_0$, $A_k(x^*) \ge 1 - a_k > 0$, that is, assumption A2 applies. The proof then follows from Theorem 1. Q.E.D.

Remark:

For affine, nonexpansive maps T relative to the $\|\cdot\|_G$ norm,

$$a_k = \frac{\beta \|d_k\|_G^2 + 2 d_k^t G M d_k}{2\beta \|d_k\|_G^2 + 2 \|M d_k\|_G^2}.$$
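The affine step-size formula for scheme IV can be tried on a simple nonexpansive map for which the classical iteration $x_{k+1} = T(x_k)$ does not converge. The sketch below assumes $G = I$ and takes T to be a 90-degree rotation in the plane (an isometry with fixed point (0, 0)); the choice $\beta = 1$ and all function names are hypothetical. For this map the formula returns $a_k = 1/2$ at every iterate, so the scheme averages $x_k$ and $T(x_k)$.

```python
import math

def T(x):
    """Rotation by 90 degrees: nonexpansive, with unique fixed point (0, 0)."""
    return (x[1], -x[0])

def scheme4_step(x, beta):
    """Affine scheme IV step (beta*||d||^2 + 2 d^t M d) / (2 beta*||d||^2 + 2 ||M d||^2), G = I."""
    tx = T(x)
    d = (tx[0] - x[0], tx[1] - x[1])
    dd = d[0] ** 2 + d[1] ** 2
    if dd == 0.0:
        return 0.0  # x is already a fixed point
    td = T(d)  # T is linear here, so M d = d - T(d)
    md = (d[0] - td[0], d[1] - td[1])
    d_md = d[0] * md[0] + d[1] * md[1]
    md_md = md[0] ** 2 + md[1] ** 2
    return (beta * dd + 2.0 * d_md) / (2.0 * beta * dd + 2.0 * md_md)

def run_scheme4(x, beta=1.0, iters=40):
    steps = []
    for _ in range(iters):
        a = scheme4_step(x, beta)
        tx = T(x)
        x = (x[0] + a * (tx[0] - x[0]), x[1] + a * (tx[1] - x[1]))
        steps.append(a)
    return x, steps

x_final, steps = run_scheme4((1.0, 1.0))
print(steps[0], math.hypot(*x_final))
```

Each averaged step halves the squared distance to the fixed point for this map, whereas the unaveraged iteration keeps the distance constant.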

Lemma 11 : Suppose T is an affine, nonexpansive map relative to the $\|\cdot\|_G$ norm, with a coercivity constant $0 < A \le 1$. Then

$$a_k \ge \frac{1}{2} + \frac{1 - A}{2\beta + 2(1 + \sqrt{A})^2}.$$

If T is a contraction, that is, $0 < A < 1$, then $a_k$ is bounded away from $\frac{1}{2}$.

Proof:

$$a_k \ge \frac{\beta \|d_k\|_G^2 + \|M d_k\|_G^2 + (1 - A) \|d_k\|_G^2}{2\beta \|d_k\|_G^2 + 2 \|M d_k\|_G^2}.$$

Therefore, whenever $d_k \ne 0$, $a_k \ge \frac{1}{2} + \frac{1 - A}{2\beta + 2(1 + \sqrt{A})^2}$. Q.E.D.

Observe that in the contractive case, a choice of 3 > 1 - (1A)2 implies that all ak

<

1.

Corollary 6 : For affine, nonexpansive maps T relative to the G norm, the sequence $\{x_k\}$ that scheme IV generates converges to a fixed point solution.

Proof: In the affine case of scheme IV,

$$a_k = \frac{\beta \|d_k\|_G^2 + 2 d_k^t G M d_k}{2\beta \|d_k\|_G^2 + 2 \|M d_k\|_G^2} \ge \frac{1}{2}.$$

Then if we choose $\bar G = 2 M^t G M + 2\beta G$ in the definition of $A_k(x^*)$, then

$$A_k(x^*) = \frac{2 d_k^t M^t G d_k + \beta \|d_k\|_G^2}{2\beta \|d_k\|_G^2 + 2 \|M d_k\|_G^2} \ge \frac{1}{2},$$

since f is strongly-f-monotone relative to the $\|\cdot\|_G$ norm. Therefore, assumptions A1 and A2 are valid and so Theorem 1 implies the result. Q.E.D.

Remarks:

1. The previous analysis does not restrict the line search to the set $S = [0, 1]$. If we do restrict the search to $S = [0, 1]$, then the following theorem implies convergence.

Theorem 7 : If T is a nonexpansive map relative to the G norm, then the sequence that scheme IV generates converges to a fixed point solution.

Proof: The proof follows from Theorem 1. Lemma 10 implies assumption A1. Moreover, assumptions A1 and A2 follow from Lemma 2. Q.E.D.

2. Observe that the previous analysis did not restrict the choice of the constant $\beta$. If we choose $\beta > 4$, then the line search in scheme IV always yields a step length $a_k < 1$ unless the current point is a solution. This result follows from property (2) of Proposition 6.

3. We can view scheme III as a form of scheme IV if we can find $0 \le a_4(k) \le 1$ for which $a_3(k) = \sqrt{a_4(k)(1 - a_4(k))}$. Then

$$x_k(a_3) = x_k(a_4) = x_k + \sqrt{a_4(k)(1 - a_4(k))}\,(T(x_k) - x_k)$$

and the potential

$$g_3(x_k(a_3)) = g_3(x_k(a_4)) = \|x_k(a_4) - T(x_k(a_4))\|_G^2 - \beta a_4(k)(1 - a_4(k)) \|x_k - T(x_k)\|_G^2.$$

Note that we can find these values $a_4(k)$ only if $a_3(k) \le \frac{1}{2}$. More generally, if $a_3(k) \le c_1 < 1$, then we can find $0 \le a_4(k) \le 1$ and a positive integer m, so that $a_3(k)$ is the mth root of $a_4(k)(1 - a_4(k))$.

4. Suppose $a_k$ is bounded away from $\frac{1}{2}$ by a constant $c > 0$. If T is a contractive map, then for a choice of $\beta > \frac{1 - A}{c^2}$, the rate of convergence for scheme IV is better than that of the classical iterative method (2).

For example, in the contractive case, if the sequence of the step sizes ak converges either to

zero or to a constant

, then scheme IV strictly improves the rate of convergence of the

classical iterative method (2).

Scheme V: $g_5(x_k(a)) = [(x_k(a) - T(x_k(a)))^t (x_k - T(x_k))]^2$, and $S = \mathbb{R}_+$.

This method is the classical steepest descent method (see [47]) as studied by Hammond and Magnanti [23], as applied to solving asymmetric systems of equations: find $x^* \in K$ satisfying $F(x^*) = x^* - T(x^*) = 0$.

Remark:

If $T(x) = x - Mx + c$ is an affine map and $d_k = -(M x_k - c)$, then

$$\min_{a \ge 0} [(x_k(a) - T(x_k(a)))^t (x_k - T(x_k))]^2 = \min_{a \ge 0} [(M x_k(a) - c)^t (M x_k - c)]^2$$

implies that

$$a_k = \frac{\|d_k\|^2}{d_k^t M d_k}.$$

Lemma 12 : If T is a contraction mapping, then $\lim_{k \to \infty} a_k = 0$ implies that every limit point of the sequence of iterates $\{x_k\}$ is a fixed point solution.

Proof: Assume T is a contraction map with coercivity constant $A \in (0, 1)$. If $\lim_{k \to \infty} a_k = 0$, then

$$\lim_{k \to \infty} [(x_k - T(x_k))^t (x_k - T(x_k))]^2 \le \lim_{k \to \infty} [(T(x_k) - T^2(x_k))^t (x_k - T(x_k))]^2.$$

This result implies that $\lim_{k \to \infty} \|x_k - T(x_k)\|^4 \le \lim_{k \to \infty} A \|x_k - T(x_k)\|^4$, but since $A < 1$, this is a contradiction, unless $\lim_{k \to \infty} \|x_k - T(x_k)\| = 0$. Q.E.D.

Theorem 8 : If $T(x) = x - Mx + c$ is an affine map, with M and $M^2$ positive definite matrices, then the sequence $\{x_k\}$ that scheme V induces converges to the solution.

Proof: See [23].

This result also follows from Theorem 1. Lemma 12 implies assumption A1. Moreover, since $a_k = \frac{d_k^t d_k}{d_k^t M d_k}$, assumptions A1 and A2 hold when $M^2$ and $\bar M$ are positive definite matrices, where $\bar M = \frac{M + M^t}{2}$.

To show that assumptions Al and A2 are valid. we select

Ak(x*)

-

(xk - X*)tA/ 2 (Xk - x*) + (k

- x*)tAItMI(xk - x*) _ ak

(xk - x*)tMt( MM)(t

(replacing

ak =

Then Ak(x*) becomes

xk

x*)

-

d,d

(xk - x*)tM

2(

(xk - x*)tM

xk - x*) + dkdk

2(

xk - x*)

dtMdk

dt Mdk

Therefore, whenever MI2 is a positive definite matrix,

Ak (x*) = (xk - x*)tM 2 (xk - x*)

dt Mdk

(for xk

# x*),

t

, i2+(A/2)

_2

Amin(

Amax (MtGMI)

) = c> 0.

Q.E.D.

The following proposition characterizes the rate of convergence of scheme V.

Proposition 11 : If T = I - MI is an affine mapping and M and M 2 are positive definite matrices

and I= M+M

a then

the sequence induced by scheme V contracts to a solution through the estimate,

Xk+1 -X*

• [1-

Amin((M

n~~~ma(I


)]lihX

-

x*111.

(22)

Proof: See [23].

Example:

Let $K = \mathbb{R}^n$ and $T(x) = [x_2, -x_1]$. Then $x^* = (0, 0)$ is the solution of the fixed point problem FP(T, $\mathbb{R}^n$). The steepest descent algorithm starting from the point $x^0 = (1, 1)$ generates the iterates $x^1 = (1, -1)$, $x^2 = (-1, -1)$, $x^3 = (-1, 1)$, $x^4 = x^0 = (1, 1)$ and, therefore, the algorithm cycles.
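The cycling in this example can be reproduced directly. The sketch below applies the affine scheme V step $a_k = \|d_k\|^2 / (d_k^t M d_k)$ with $M = I - T$ to the rotation $T(x) = (x_2, -x_1)$, starting from (1, 1). The function names are hypothetical, and the code relies on T being linear so that $M d = d - T(d)$.

```python
def T(x):
    """The map of the example: T(x) = (x2, -x1), with fixed point (0, 0)."""
    return (x[1], -x[0])

def scheme5_iterate(x):
    """One steepest descent (scheme V) step for this linear T.

    Uses a_k = ||d||^2 / (d^t M d) with d = T(x) - x and M d = d - T(d).
    """
    tx = T(x)
    d = (tx[0] - x[0], tx[1] - x[1])
    td = T(d)
    md = (d[0] - td[0], d[1] - td[1])
    a = (d[0] ** 2 + d[1] ** 2) / (d[0] * md[0] + d[1] * md[1])
    return (x[0] + a * d[0], x[1] + a * d[1]), a

iterates = [(1.0, 1.0)]
steps = []
for _ in range(4):
    nxt, a = scheme5_iterate(iterates[-1])
    iterates.append(nxt)
    steps.append(a)

print(iterates)
print(steps)
```

Every step length equals 1 here, so the method reduces to the classical iteration $x_{k+1} = T(x_k)$, which simply rotates the starting point around the solution.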

Remark:

In this example

$$M = I - T = \begin{bmatrix} 1 & -1 \\ 1 & 1 \end{bmatrix}$$

is positive definite, but

$$M^2 = \begin{bmatrix} 0 & -2 \\ 2 & 0 \end{bmatrix}$$

is positive semidefinite. This example illustrates that the choice of potential we considered in scheme V could produce iterates that cycle unless the matrices M and $M^2$ are positive definite.

[Figure 2: Cycling Example for Scheme V, showing the iterates cycling around $x^* = (0, 0)$]

Scheme VI: To remedy this cycling behavior, we modify the potential function in scheme V by introducing a penalty term as follows,

$$g_6(x_k(a)) = [(x_k(a) - T(x_k(a)))^t G (x_k - T(x_k))]^2 - \beta [(x_k(a) - x_k(0))^t G (x_k - T(x_k))] \cdot [(x_k(a) - x_k(1))^t G (x_k - T(x_k))].$$

We choose $S = \mathbb{R}_+$.

We first make the following observation.

Lemma 13 : If T is a nonlinear, nonexpansive map relative to the $\|\cdot\|_G$ norm, then a choice of $\beta > 4$ implies that if $a_k \ge 1$, then $x_k$ is a fixed point solution.

Proof: The remark at the end of Section 2 implies that potential $g_6$ satisfies (i)-(v) in Subsection 2.2. Then this lemma follows from part b of Proposition 6. Q.E.D.

Remark:

Consider an affine map $T(x) = x - Mx + c$, and let

$$\bar a_k = \frac{\beta \|d_k\|_G^4 + 2 \|d_k\|_G^2 (d_k^t M^t G d_k)}{2[\beta \|d_k\|_G^4 + (d_k^t M^t G d_k)^2]}.$$

Then the step length solution $a_k$ to the problem $\min_{a \in [0, 1]} g_6(x_k(a))$ is

$$a_k = \min\{1, \bar a_k\}. \qquad (23)$$

We observe the following:

(1) If $d_k \ne 0$, then $a_k \ge 1/2$.

(2) If $\bar a_k \ge 1$, then a choice of $\beta > 1$ implies that

$$0 \le (d_k^t M^t G d_k)^2 + (\beta - 1) \|d_k\|_G^4 + (\|d_k\|_G^2 - d_k^t M^t G d_k)^2 \le 0.$$

This result implies that $d_k = 0$ and, therefore, that $x_k$ is a fixed point solution.

Lemma 14 : Let T be a nonexpansive map relative to the G norm and suppose $\beta > 4$ in the general nonlinear case (or $\beta > 1$ in the affine case). If either $a_k$ converges to zero or to one, then every limit point of the sequence $\{x_k\}$ is a fixed point solution.

Proof: If $a_k$ converges to zero, then

$$\lim_{k \to \infty} g(x_k(0)) = \lim_{k \to \infty} \|d_k\|_G^4 \le \lim_{k \to \infty} g(x_k(a)) \le [1 - \beta a(1 - a)] \lim_{k \to \infty} \|d_k\|_G^4. \qquad (24)$$

If $\lim_{k \to \infty} d_k \ne 0$, then for all $a \in (0, 1)$, $\lim_{k \to \infty} g(x_k(0)) > [1 - \beta a(1 - a)] \lim_{k \to \infty} \|d_k\|_G^4$, contradicting (24). We conclude that $\lim_{k \to \infty} d_k = 0$ and, therefore, every limit point of $\{x_k\}$ is a fixed point solution.

Moreover, if $a_k$ converges to one and T is an affine map, then for a choice of $\beta > 1$,

$$0 \le \lim_{k \to \infty} (d_k^t M^t G d_k)^2 + (\beta - 1) \lim_{k \to \infty} \|d_k\|_G^4 + \lim_{k \to \infty} (\|d_k\|_G^2 - d_k^t M^t G d_k)^2 = 0.$$

This result implies that $\|d_k\|_G$ converges to zero. Therefore, Proposition 4 implies that every limit point of the sequence $\{x_k\}$ is a fixed point solution.

If T is a nonlinear, nonexpansive map and $a_k$ converges to one, then property c of Proposition 6 implies that for a choice of $\beta > 4$, every limit point of the sequence $\{x_k\}$ is a fixed point solution. Q.E.D.

Theorem 9 : If T is a nonexpansive map relative to the $\|\cdot\|_G$ norm and $\beta > 4$ (or $\beta > 1$ in the affine case), then the sequence that scheme VI generates converges to a fixed point solution.

Proof: Lemma 14 together with the nonexpansiveness of T imply that assumptions A1 and A2 are valid. The proof follows from Theorem 1. Q.E.D.

Remarks:

1) Observe that scheme VI extends the steepest descent method to include nonexpansive maps and does not require any positive definiteness condition on the square of the Jacobian matrix. Moreover, scheme VI provides a global convergence result even when the map T is nonlinear.

2) In this section we have considered special cases of the general scheme we introduced in Theorem 1. Several other schemes that we have not presented in this section are also special cases of our general scheme. In particular, consider a variational inequality problem. Then Proposition 1 states that variational inequality VI(f, K) is equivalent to a fixed point problem FP(T, K). The operator T might be a projection operator $T = Pr_K^G(I - \rho G^{-1} f)$ or perhaps be defined so that $T(x) = y$, where y is a solution of a simpler variational inequality or minimization subproblem (see for example [12], [20], [44], [56]).

Fukushima [20] considered the variational inequality problem VI(f, K). He considered the projection operator

$$T(x) = Pr_K^G(x - \rho G^{-1} f(x)) = \operatorname{argmin}_{y \in K} \left[ f(x)^t (y - x) + \frac{1}{2\rho} \|y - x\|_G^2 \right].$$

The fixed point solutions corresponding to this map T are in fact the solutions of variational inequality VI(f, K) (see Proposition 1). Using the potential

$$g(x) = -f(x)^t (T(x) - x) - \frac{1}{2\rho} \|T(x) - x\|_G^2, \qquad (25)$$

Fukushima established a scheme that, when f is strongly monotone, computes a variational inequality solution. Namely, each iteration of the scheme computes a point $x_{k+1} = x_k(a_k) = x_k + a_k(T(x_k) - x_k)$, with $a_k = \operatorname{argmin}_{a \in [0, 1]}\, g(x_k(a))$. We observe that this scheme is in fact a special case of our general scheme for the choice of potential g that we described in expression (25). Observe that when $f(x) = x - T(x)$, the potential in (25) is the same as the potential we considered in scheme II.
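The following sketch illustrates an averaging iteration driven by the potential (25) on a toy problem. Everything specific in it is an assumption made for illustration: the feasible set is the box $K = [0, 1]^2$, $G = I$, $\rho = 0.5$, the map is a hypothetical strongly monotone affine $f(x) = Ax + b$ with an asymmetric A, and the exact line search is replaced by a search over a uniform grid on [0, 1]. It is not Fukushima's implementation.

```python
def f(x):
    """Hypothetical strongly monotone affine map f(x) = A x + b with asymmetric A."""
    return (2.0 * x[0] + x[1] - 1.0, -x[0] + 2.0 * x[1] - 3.0)

def T(x, rho=0.5):
    """Projection of x - rho * f(x) onto the box K = [0, 1]^2 (taking G = I)."""
    fx = f(x)
    return tuple(min(1.0, max(0.0, xi - rho * fi)) for xi, fi in zip(x, fx))

def gap(x, rho=0.5):
    """Potential (25): -f(x)^t (T(x) - x) - ||T(x) - x||^2 / (2 rho)."""
    fx, tx = f(x), T(x, rho)
    step = [t - xi for t, xi in zip(tx, x)]
    linear = sum(fi * s for fi, s in zip(fx, step))
    quadratic = sum(s * s for s in step) / (2.0 * rho)
    return -linear - quadratic

def averaged_iteration(x, iters=20, grid=100, rho=0.5):
    """x_{k+1} = x_k + a_k (T(x_k) - x_k), with a_k chosen on a grid in [0, 1]."""
    for _ in range(iters):
        tx = T(x, rho)
        candidates = [
            tuple(xi + (i / grid) * (t - xi) for xi, t in zip(x, tx))
            for i in range(grid + 1)
        ]
        x = min(candidates, key=lambda y: gap(y, rho))
    return x

x0 = (1.0, 0.0)
x_final = averaged_iteration(x0)
print(x_final, gap(x0), gap(x_final))
```

Because the grid contains $a = 0$, the potential cannot increase from one iterate to the next; on this toy problem the iterates reach the variational inequality solution (0, 1), where $f(x)^t (y - x) = 1 - y_2 \ge 0$ for every y in the box.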

Taji, Fukushima and Ibaraki [52] established an alternative scheme for strongly monotone variational inequality problems, using an operator T that generates points through a Newton procedure. Zhu and Marcotte [58] modified Fukushima's scheme [20] to also include monotone problems. Wu, Florian and Marcotte [56] have generalized Fukushima's scheme. They considered as T the operator that maps a point x to the point $T(x) = \operatorname{argmin}_{y \in K} [f(x)^t (y - x) + \frac{1}{\rho} \phi(x, y)]$. The function $\phi : K \times K \to \mathbb{R}$ has the following properties:

(a) $\phi$ is continuously differentiable,

(b) $\phi$ is nonnegative,

(c) $\phi$ is uniformly strongly convex with respect to y,

(d) $\phi(x, y) = 0$ is equivalent to $x = y$,

(e) $\nabla_x \phi(x, y)$ is uniformly Lipschitz continuous on K with respect to x.

Observe that when $\phi(x, y) = \frac{1}{2} \|x - y\|_G^2$, then T(x) becomes the projection operator as in Fukushima [20]. The fixed point solutions corresponding to this map T are also solutions of the variational inequality problem VI(f, K) (see [56] for more details).

Furthermore, Wu, Florian and Marcotte [56] considered the potential

$$g(x) = -f(x)^t (T(x) - x) - \frac{1}{\rho} \phi(T(x), x). \qquad (26)$$

Notice that, as in the previous cases, this scheme becomes a special case of our general scheme for the choice of potential g given in (26).

The convergence of these schemes follows from Theorem 1, where we established the convergence of the general scheme. Observe that, as we have established in Lemma 2, when F is strongly monotone (which the developers of these schemes impose) assumptions A1 and A2 hold for schemes which "repel" $a_k$ from zero unless at a solution. Furthermore, observe that this assumption indeed holds for these schemes (as in Lemma 7), using the descent property of the potential functions involved in each of the previous schemes. For a proof of the latter, see [20] and [56].

Finally, for all these schemes (see also Section 5), we can apply an Armijo-type procedure to compute inexact solutions to the line search procedures. This computation is easy to perform.

4 On the Rate of Convergence

In this section, we examine the following question: when does the general scheme we introduced in

this paper and its special cases exhibit a better rate of convergence than the classic iteration (2)?

To illustrate this possibility, we first establish a "best" step size under which the adaptive averaging

scheme will achieve a better convergence rate.

Proposition 12 : Let

$$a_k^* = \frac{(F(x_k) - F(x^*))^t G (x_k - x^*)}{\|F(x_k) - F(x^*)\|_G^2} = \frac{(x_k - T(x_k))^t G (x_k - x^*)}{\|x_k - T(x_k)\|_G^2}.$$

If $a_k^* \ne 1$, then for some step size, namely $a_k^*$, the general iteration

$$x_{k+1} = x_k(a_k^*) = x_k + a_k^* (T(x_k) - x_k)$$

has a rate of convergence at least as good as the classic iteration (2). Moreover, if $|a_k^* - 1| \ge c > 0$, then it has a better rate of convergence.

Proof: First observe that

$$\|x_k(a) - x^*\|_G^2 = \|x_k - x^*\|_G^2 - 2a (x_k - T(x_k))^t G (x_k - x^*) + a^2 \|x_k - T(x_k)\|_G^2. \qquad (27)$$

If $x_k \ne T(x_k)$, then $\|x_k(a) - x^*\|_G^2$ is a strictly convex function of a. Moreover,

$$\operatorname{argmin}_a \|x_k(a) - x^*\|_G^2 = \frac{(x_k - T(x_k))^t G (x_k - x^*)}{\|x_k - T(x_k)\|_G^2} = a_k^*.$$

Therefore, if $a_k^* = 1$, then

$$\|x_k(a_k^*) - x^*\|_G^2 = \|T(x_k) - T(x^*)\|_G^2 \le A \|x_k - x^*\|_G^2.$$

Moreover, expression (27) implies that

$$\|x_k(a_k^*) - x^*\|_G^2 = \|T(x_k) - T(x^*)\|_G^2 - (a_k^* - 1)^2 \|x_k - T(x_k)\|_G^2.$$

Proposition 3 implies that

$$\|x_k(a_k^*) - x^*\|_G^2 \le (A - (a_k^* - 1)^2 (1 - \sqrt{A})^2) \|x_k - x^*\|_G^2.$$

Therefore, if $|a_k^* - 1| \ge c > 0$, then

$$\|x_k(a_k^*) - x^*\|_G^2 \le (A - c^2 (1 - \sqrt{A})^2) \|x_k - x^*\|_G^2,$$

implying that the sequence $\{x_k(a_k^*)\}$ has a better rate of convergence than the sequence $\{T(x_k)\}$. Q.E.D.
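The identity used in this proof, namely that the squared distance from $x_k(a^*)$ to $x^*$ equals $\|T(x_k) - x^*\|^2 - (a^* - 1)^2 \|x_k - T(x_k)\|^2$, can be verified numerically. The sketch below assumes $G = I$ and uses a hypothetical contraction (half of a 90-degree rotation plus a shift, with fixed point $x^* = (1, 2)$) and a hypothetical point $x_k = (3, -1)$; none of these values come from the paper.

```python
def T(x):
    """Hypothetical contraction with fixed point x* = (1, 2): half rotation plus shift."""
    return (0.5 * x[1], -0.5 * x[0] + 2.5)

def sqdist(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))

def best_step(x, x_star):
    """a* = (x - T(x))^t (x - x*) / ||x - T(x)||^2, taking G = I."""
    tx = T(x)
    r = (x[0] - tx[0], x[1] - tx[1])
    num = r[0] * (x[0] - x_star[0]) + r[1] * (x[1] - x_star[1])
    return num / (r[0] ** 2 + r[1] ** 2)

x_star = (1.0, 2.0)
x_k = (3.0, -1.0)
t_k = T(x_k)

a_star = best_step(x_k, x_star)
x_avg = tuple(xi + a_star * (ti - xi) for xi, ti in zip(x_k, t_k))

lhs = sqdist(x_avg, x_star)
rhs = sqdist(t_k, x_star) - (a_star - 1.0) ** 2 * sqdist(x_k, t_k)
print(a_star, lhs, rhs)
```

Since $a^*$ differs from 1 in this instance, the averaged point is strictly closer to $x^*$ than $T(x_k)$ is, which is the improvement the proposition describes. Computing $a^*$ requires $x^*$, so the sketch is a check of the algebra rather than a usable algorithm.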

Remark:

Which maps T give rise to step sizes a* ~ 1, and which to step sizes a* - 11 > c? We next examine

this question.

1. Let T be a nonexpansive map that is tight, that is,

$$\|T(x) - T(x^*)\|_G^2 = \|x - x^*\|_G^2.$$

Then

$$a_k^* = \frac{(x_k - T(x_k))^t G (x_k - x^*)}{\|x_k - T(x_k)\|_G^2} = \frac{1}{2},$$

and so $|a_k^* - 1| = \frac{1}{2}$. Observe that in this case, we can achieve the "best" rate of convergence by moving half way at each step.

2. Let T be a firmly nonexpansive map that is not tight, that is,

$$\|T(x) - T(x^*)\|_G^2 < \|x - x^*\|_G^2 - \|x - T(x) - x^* + T(x^*)\|_G^2.$$

Setting A = 1, B = -1, and y = x* in Lemma 1, stated as a strict inequality, implies that this condition is equivalent to

$$(x - T(x) - x^* + T(x^*))^t G (x - x^*) > \|x - T(x)\|_G^2.$$

Consequently,

$$a_k^* = \frac{(x_k - T(x_k))^t G (x_k - x^*)}{\|x_k - T(x_k)\|_G^2} > 1.$$

3. Let T be a firmly contractive map. Then setting y = x* and B = -1 in Lemma 1 implies that

$$(x - T(x) - x^* + T(x^*))^t G (x - x^*) \ge \frac{1 - A}{2}\,\|x - x^*\|_G^2 + \|x - T(x)\|_G^2.$$

Then Proposition 3 implies that

$$a_k^* - 1 \ge \frac{1 - A}{2(1 + \sqrt{A})^2}.$$

4. Finally, if T is contractive but with a constant A "close" to 1, that is, if for some constant $A \in (0, 1)$,

$$A\,\|x - x^*\|_G^2 \ge \|T(x) - T(x^*)\|_G^2 > \|x - x^*\|_G^2 - A\,\|x - T(x)\|_G^2,$$

then

$$1 - a_k^* > \frac{1 - A}{2} > 0.$$
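Case 1 of the remark can be illustrated with a plane rotation, which is nonexpansive and tight about its fixed point x* = 0. The sketch below assumes G = I and a hypothetical rotation angle `theta`; it is meant only to show that the best step is 1/2 and that the halfway point contracts even though the classic iteration does not.

```python
import numpy as np

theta = 0.7  # hypothetical rotation angle (radians)
R = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])

x_star = np.zeros(2)          # the only fixed point of the rotation T(x) = R x
x = np.array([2.0, -1.0])
Tx = R @ x

# Best step a* = (x - T(x))^t (x - x*) / ||x - T(x)||^2 with G = I.
r = x - Tx
a_star = float(r @ (x - x_star)) / float(r @ r)

x_half = x + a_star * (Tx - x)   # averaged iterate

# Error ratios relative to ||x - x*||.
ratio_classic = np.linalg.norm(Tx - x_star) / np.linalg.norm(x - x_star)
ratio_avg = np.linalg.norm(x_half - x_star) / np.linalg.norm(x - x_star)

print("a* =", a_star)
print("classic ratio:", ratio_classic)
print("averaged ratio:", ratio_avg, "cos(theta/2):", np.cos(theta / 2))
```

For a rotation the averaged step shrinks the distance to the fixed point by the factor cos(theta/2), while the classic step leaves it unchanged.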

The previous analysis shows that for certain types of maps and for some step sizes, adaptive

averaging provides a better rate of convergence than the classical iterative scheme $x_{k+1} = T(x_k)$.

We have shown that to achieve the improved convergence rate, we need to choose the step size $a_k^*$.

Since $a_k^*$ involves a fixed point solution x*, it cannot be computed in practice. Accordingly, we extend

our choice of step sizes to establish an allowable range of step sizes for which adaptive averaging

schemes have a better rate of convergence. Moreover, we show that the schemes we studied in the

previous section have step sizes within this range.

Proposition 13: For fixed point problems with maps T satisfying the condition

$$|a^* - 1| = \left| \frac{(x - T(x))^t G (x - x^*)}{\|x - T(x)\|_G^2} - 1 \right| > c > 0 \quad \text{for all } x \in K, \tag{28}$$

adaptive averaging schemes with choices of step size $a_k$ lying within the range

$$a_k^* - |a_k^* - 1| + d \le a_k \le a_k^* + |a_k^* - 1| - d, \tag{29}$$

with 0 < d < c, have a better rate of convergence than the classic iteration (2).

Proof: Using expression (27) we see that

$$\|x_k(a) - x^*\|_G^2 = \|T(x_k) - T(x^*)\|_G^2 + (a^2 - 2a\,a_k^* + 2a_k^* - 1)\,\|x_k - T(x_k)\|_G^2.$$

The quadratic $a^2 - 2a\,a_k^* + 2a_k^* - 1$ is at most $-d^2$ for choices of step sizes a satisfying the condition

$$a_k^* - |a_k^* - 1| + d \le a \le a_k^* + |a_k^* - 1| - d,$$

with 0 < d < c. Consequently, we need $|a_k^* - 1| > c > 0$ (that is, condition (28)). Functions of the type we discussed in the previous remark satisfy this inequality. Then a choice of step sizes $a_k$ lying within the range (29) provides a rate of convergence

$$\|x_k(a_k) - x^*\|_G^2 \le \|T(x_k) - T(x^*)\|_G^2 - d^2\,\|x_k - T(x_k)\|_G^2.$$

Using the fact that T is contractive and Proposition 3, we obtain

$$\|x_k(a_k) - x^*\|_G^2 \le \left[A - d^2 (1 - \sqrt{A})^2\right] \|x_k - x^*\|_G^2. \tag{30}$$

Q.E.D.
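The bound on the quadratic used in this proof follows by completing the square. The worked step below is a sketch that relies only on condition (28), the range (29), and 0 < d < c; it is offered as a check on the argument rather than as the authors' own derivation.

```latex
\begin{align*}
a^2 - 2a\,a_k^* + 2a_k^* - 1
  &= (a - a_k^*)^2 - (a_k^* - 1)^2 \\
  &\le \bigl(|a_k^* - 1| - d\bigr)^2 - (a_k^* - 1)^2
     && \text{since } |a - a_k^*| \le |a_k^* - 1| - d \text{ by (29)} \\
  &= d^2 - 2d\,|a_k^* - 1| \\
  &< d^2 - 2dc
     && \text{since } |a_k^* - 1| > c \text{ by (28)} \\
  &< -d^2
     && \text{since } 0 < d < c .
\end{align*}
```

The second line uses $|a_k^* - 1| - d > 0$, which holds because $|a_k^* - 1| > c > d$.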

Corollary 7: For fixed point problems with maps T satisfying expression (28), a choice of step sizes within (29) guarantees a better rate of convergence than the classic iteration (2). Furthermore,

$$\|x_{k+1} - x^*\|_G^2 \le \|T(x_k) - T(x^*)\|_G^2 - (a_k - 1)(A_k(x^*) - 1)\,\|x_k - T(x_k)\|_G^2 \le \left[A - (a_k - 1)(A_k(x^*) - 1)(1 - \sqrt{A})^2\right] \|x_k - x^*\|_G^2. \tag{31}$$

Proof: Expression (31) follows similarly to our development of (7). Moreover, observe that $A_k(x^*) = 2a_k^* - a_k$. Therefore, to obtain a better rate of convergence than the classical iteration (2), we need $(a_k - 1)(A_k(x^*) - 1)$ bounded away from 0. Since $(a_k - 1)(A_k(x^*) - 1) = -(a_k^2 - 2a_k a_k^* + 2a_k^* - 1)$, the conclusion follows as in Proposition 13. Q.E.D.

Remarks:

1) Condition (29) is equivalent to assuming that $(a_k - 1)(A_k(x^*) - 1) \ge d^2 > 0$. Moreover, it is equivalent to assuming that

$$A_k(x^*) \in \left[\min\{1, 2a_k^* - 1\} + d,\ \max\{1, 2a_k^* - 1\} - d\right]. \tag{32}$$

Observe that when (29) (or (32)) holds, then assumptions A1 and A2 also follow.

2) In the following discussion, we show that the step sizes that we used in the schemes of Section 3 satisfy (29). Therefore, the schemes we studied in Section 3 exhibit better rates of convergence than the classical iteration (2). In discussing these methods, we let $a_k^j$ and $A_k^j(x^*)$ with j = I, ..., VI denote the step size and the quantity $A_k(x^*)$ for scheme j at the kth iteration.

In particular, consider the affine problem with I - T = M. We need to keep in mind that $T(x_k) - x_k = d_k$ and

$$a_k^* = \frac{(x^* - x_k)^t G\, d_k}{\|d_k\|_G^2}.$$

· Consider scheme I. If T is firmly contractive, then relation (29) follows. If we set $G = M^t M + \beta I$, then

$$a_k^* = \frac{d_k^t M d_k + \beta\,(x^* - x_k)^t d_k}{\|M d_k\|^2 + \beta \|d_k\|^2}.$$

Furthermore, for G = I,

$$a_k^{I} = \frac{d_k^t M d_k}{\|M d_k\|^2 + \beta \|d_k\|^2}.$$

Observe that for firmly contractive maps T, a choice of $\beta < \frac{1 - A}{2}$ implies that

$$a_k^{I} - 1 \ge \frac{\frac{1 - A - 2\beta}{2}\,\|d_k\|^2}{\|M d_k\|^2 + \beta \|d_k\|^2},$$

which implies, using Proposition 3,

$$|a_k^{I} - 1| \ge \frac{1 - A - 2\beta}{2\left(\beta + (1 + \sqrt{A})^2\right)} = d > 0.$$

Therefore,

$$(a_k^{I} - 1)(A_k^{I}(x^*) - 1) = (a_k^{I} - 1)(2a_k^* - a_k^{I} - 1) \ge (a_k^{I} - 1)^2 \ge d^2,$$

with $d = \frac{1 - A - 2\beta}{2(\beta + (1 + \sqrt{A})^2)}$, that is, relation (29).

· Consider scheme II. If T is contractive, then relation (29) follows. Observe that a choice of $G = M^t M$ in $a_k^*$ and G = I in $a_k^{II}$ implies that $a_k^{II} = a_k^*$, which satisfies relation (29).
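For the affine case, taking $G = M^t M$ in the definition of $a_k^*$ and using $d_k = M(x^* - x_k)$ gives $a_k^* = d_k^t M d_k / \|M d_k\|^2$, which can be computed without knowing x*. The sketch below compares this step with the classic iteration on a small example. It assumes the affine map has the form T(x) = x - (Mx - q); the names `M`, `q`, `classic`, and `averaged` are hypothetical and chosen only for illustration.

```python
import numpy as np

def classic(M, q, x, iters):
    """Classic iteration x_{k+1} = T(x_k) with T(x) = x - (M x - q)."""
    for _ in range(iters):
        x = x + (q - M @ x)
    return x

def averaged(M, q, x, iters):
    """Averaged iteration x_{k+1} = x_k + a_k d_k with
    d_k = T(x_k) - x_k and a_k = d_k^t M d_k / ||M d_k||^2."""
    for _ in range(iters):
        d = q - M @ x
        Md = M @ d
        denom = float(Md @ Md)
        if denom == 0.0:      # d_k = 0 means x_k is already a fixed point
            break
        a = float(d @ Md) / denom
        x = x + a * d
    return x

# Hypothetical data: I - M has spectral norm 0.6, so T is contractive.
M = np.array([[1.0, 0.6], [-0.6, 1.0]])
q = np.array([1.0, 2.0])
x_star = np.linalg.solve(M, q)        # fixed point of T solves M x = q
x0 = np.array([5.0, -3.0])

err_cls = np.linalg.norm(classic(M, q, x0, 10) - x_star)
err_avg = np.linalg.norm(averaged(M, q, x0, 10) - x_star)
print("classic error:", err_cls, "averaged error:", err_avg)
```

On this example the averaged iterate ends closer to x* than the classic iterate after the same number of steps, which is consistent with the rate comparison in this section, although a single instance is not a proof.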

· Consider scheme III. If T is contractive, then relation (29) follows. If we set G =

akak ==

ak

t

2

Mdt

All(kjIIMdkII12-k-3(xk-x)

-0Idk1I

d

-

d

t

IIAMdkl12I -311dk 112

Observe that 1 - a'

1-

AIII(x*) =

)

.

-

I31

then

-

Furthermore, for G = I,