Numerical Approaches for Sequential Bayesian Optimal Experimental Design Xun Huan

Numerical Approaches for

Sequential Bayesian Optimal Experimental Design

by

Xun Huan

B.A.Sc., University of Toronto (2008)

S.M., Massachusetts Institute of Technology (2010)

Submitted to the Department of Aeronautics and Astronautics

in partial fulfillment of the requirements for the degree of

Doctor of Philosophy in Computational Science and Engineering

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

September 2015

© Massachusetts Institute of Technology 2015. All rights reserved.

Author . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Department of Aeronautics and Astronautics

August 20, 2015

Certified by . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Youssef M. Marzouk

Class of 1942 Associate Professor of Aeronautics and Astronautics

Thesis Supervisor

Certified by . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

John N. Tsitsiklis

Clarence J. Lebel Professor of Electrical Engineering

Thesis Committee

Certified by . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Mort D. Webster

Associate Professor of Energy Engineering, Pennsylvania State University

Thesis Committee

Certified by . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Karen E. Willcox

Professor of Aeronautics and Astronautics

Thesis Committee

Accepted by . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Paulo C. Lozano

Associate Professor of Aeronautics and Astronautics

Chair, Graduate Program Committee

Numerical Approaches for

Sequential Bayesian Optimal Experimental Design

by

Xun Huan

Submitted to the Department of Aeronautics and Astronautics

on August 20, 2015, in partial fulfillment of the

requirements for the degree of

Doctor of Philosophy in Computational Science and Engineering

Abstract

Experimental data play a crucial role in developing and refining models of physical systems.

Some experiments can be more valuable than others, however. Well-chosen experiments can

save substantial resources, and hence optimal experimental design (OED) seeks to quantify

and maximize the value of experimental data. Common current practice for designing a

sequence of experiments uses suboptimal approaches: batch (open-loop) design that chooses

all experiments simultaneously with no feedback of information, or greedy (myopic) design that optimally selects the next experiment without accounting for future observations

and dynamics. In contrast, sequential optimal experimental design (sOED) is free of these

limitations.

With the goal of acquiring experimental data that are optimal for model parameter

inference, we develop a rigorous Bayesian formulation for OED using an objective that incorporates a measure of information gain. This framework is first demonstrated in a batch

design setting, and then extended to sOED using a dynamic programming (DP) formulation. We also develop new numerical tools for sOED to accommodate nonlinear models

with continuous (and often unbounded) parameter, design, and observation spaces. Two

major techniques are employed to make solution of the DP problem computationally feasible. First, the optimal policy is sought using a one-step lookahead representation combined

with approximate value iteration. This approximate dynamic programming method couples backward induction and regression to construct value function approximations. It also

iteratively generates trajectories via exploration and exploitation to further improve approximation accuracy in frequently visited regions of the state space. Second, transport maps are

used to represent belief states, which reflect the intermediate posteriors within the sequential

design process. Transport maps offer a finite-dimensional representation of these generally

non-Gaussian random variables, and also enable fast approximate Bayesian inference, which

must be performed millions of times under nested combinations of optimization and Monte

Carlo sampling.

The overall sOED algorithm is demonstrated and verified against analytic solutions on a

simple linear-Gaussian model. Its advantages over batch and greedy designs are then shown

via a nonlinear application of optimal sequential sensing: inferring contaminant source location from a sensor in a time-dependent convection-diffusion system. Finally, the capability of

the algorithm is tested for multidimensional parameter and design spaces in a more complex

setting of the source inversion problem.

Thesis Supervisor: Youssef M. Marzouk

Title: Class of 1942 Associate Professor of Aeronautics and Astronautics

Committee Member: John N. Tsitsiklis

Title: Clarence J. Lebel Professor of Electrical Engineering

Committee Member: Mort D. Webster

Title: Associate Professor of Energy Engineering, Pennsylvania State University

Committee Member: Karen E. Willcox

Title: Professor of Aeronautics and Astronautics

Acknowledgments

First and foremost, I would like to thank my advisor Youssef Marzouk, for giving me the

opportunity to work with him, and for his constant guidance and support. Youssef has

been a great mentor, friend, and inspiration to me throughout my graduate school career. I

find myself incredibly lucky to have crossed paths with him right as he started as a faculty

member at MIT. I would also like to thank all my committee members, John Tsitsiklis,

Mort Webster, and Karen Willcox, and my readers Peter Frazier and Omar Knio. I have

benefited greatly from their support and insightful discussions, and I am honored to have

each of them in the making of this thesis.

There are many friends and colleagues that helped me through graduate school and

enriched my life: Huafei Sun, with a friendship that started all the way back in Toronto,

who has been like a big brother to me; Masayuki Yano, a great roommate of 3 years,

with whom I had many interesting discussions about research and life; Hemant Chaurasia,

together we endured through quals and classes, enjoyed MIT $100K events, and played many

intramural hockey games side-by-side; Matthew Parno for graciously sharing his MUQ code;

Tarek El Moselhy for the fun times in exploring Vancouver and Japan; Tiangang Cui for

many enjoyable outings outside of research; Chi Feng, Alessio Spantini, and Sergio Amaral

for performing “emergency surgery” on my desktop computer when its power supply died the

week before my defense; and many others that I cannot name all here. I want to thank the

entire UQ group and ACDL, all the students, post-docs, faculty and staff, past and present.

Special thanks go to Sophia Hasenfus, Beth Marois, Meghan Pepin, and Jean Sofronas, for

all the help behind the scenes. I am also grateful for all my friends from Toronto for making

my visits back home extra fun and memorable.

Last but not least, I want to thank my parents, who have always been there for me,

through tough and happy times. I would not have made it this far without their constant love,

support, and encouragement, and I am very proud to be their son.

My research was generously supported by funding from the BP-MIT Energy Fellowship,

the KAUST Global Research Partnership, the U.S. Department of Energy, Office of Science,

Office of Advanced Scientific Computing Research (ASCR), the Air Force Office of Scientific

Research (AFOSR) Computational Mathematics Program, the National Science Foundation

(NSF), and the Natural Sciences and Engineering Research Council of Canada (NSERC).

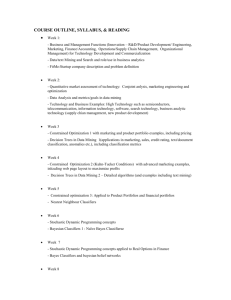

Contents

1 Introduction
  1.1 Literature review
    1.1.1 Batch (open-loop) optimal experimental design
    1.1.2 Sequential (closed-loop) optimal experimental design
  1.2 Thesis objectives

2 Batch Optimal Experimental Design
  2.1 Formulation
  2.2 Stochastic optimization
    2.2.1 Robbins-Monro stochastic approximation
    2.2.2 Sample average approximation
    2.2.3 Challenges in optimal experimental design
  2.3 Polynomial chaos expansions
  2.4 Infinitesimal perturbation analysis
  2.5 Numerical results: 2D diffusion source inversion problem
    2.5.1 Problem setup
    2.5.2 Results

3 Formulation for Sequential Design
  3.1 Problem definition
  3.2 Dynamic programming form
  3.3 Information-based Bayesian experimental design
  3.4 Notable suboptimal sequential design methods

4 Approximate Dynamic Programming for Sequential Design
  4.1 Approximation approaches
  4.2 Policy representation
  4.3 Policy construction via approximate value iteration
    4.3.1 Backward induction and regression
    4.3.2 Exploration and exploitation
    4.3.3 Iterative update of state measure and policy approximation
  4.4 Connection to the rollout algorithm (policy iteration)
  4.5 Connection to POMDP

5 Transport Maps for Sequential Design
  5.1 Background
  5.2 Bayesian inference using transport maps
  5.3 Constructing maps from samples
    5.3.1 Optimization objective
    5.3.2 Constraints
    5.3.3 Convexity and separability of the optimization problem
    5.3.4 Map parameterization
  5.4 Relationship between quality of joint and conditional maps
  5.5 Sequential design using transport maps
    5.5.1 Joint map structure
    5.5.2 Distributions on design variables
    5.5.3 Generating samples in sequential design
    5.5.4 Evaluating the Kullback-Leibler divergence

6 Full Algorithm Pseudo-code for Sequential Design

7 Numerical Results
  7.1 Linear-Gaussian problem
    7.1.1 Problem setup
    7.1.2 Results
  7.2 1D contaminant source inversion problem
    7.2.1 Case 1: comparison with greedy (myopic) design
    7.2.2 Case 2: comparison with batch (open-loop) design
    7.2.3 Case 3: sOED grid and map methods
  7.3 2D Contaminant source inversion problem
    7.3.1 Problem setup
    7.3.2 Results

8 Conclusions
  8.1 Summary and conclusions
  8.2 Future work
    8.2.1 Computational advances
    8.2.2 Formulational advances

A Analytic Derivation of the Unbiased Gradient Estimator

B Analytic Solution to the Linear-Gaussian Problem
  B.1 Derivation from batch optimal experimental design
  B.2 Derivation from sequential optimal experimental design

List of Figures

1-1 The learning process can be characterized as an iteration between theory and

practice via deductive and inductive reasoning. . . . . . . . . . . . . . . . . . 19

2-1 Example forward model solution and realizations from the likelihood. The

solid line represents the time-dependent contaminant concentration w(x, t; x_src)

at x = x_sensor = (0, 0), given a source centered at x_src = (0.1, 0.1), source

strength s = 2.0, width h = 0.05, and shutoff time τ = 0.3. Parameters are

defined in Equation 2.18. The five crosses represent noisy measurements at

five designated measurement times. . . . . . . . . . . . . . . . . . . . . . . . . 47

2-2 Surface plots of independent Û_{N,M} realizations, evaluated over the entire design space [0, 1]^2 ∋ d = (x, y). Note that the vertical axis ranges and color

scales vary among the subfigures. . . . . . . . . . . . . . . . . . . . . . . . . . 49

2-3 Contours of posterior densities for the source location, given different sensor

placements. The true source location, marked with a blue circle, is x_src =

(0.09, 0.22). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

2-4 Sample paths of the RM algorithm with N = 1, overlaid on Û_{N,M} surfaces

from Figure 2-2 with the corresponding M values. The large is the starting

position and the large × is the final position. . . . . . . . . . . . . . . . . . . 52

2-5 Sample paths of the RM algorithm with N = 11, overlaid on Û_{N,M} surfaces

from Figure 2-2 with the corresponding M values. The large is the starting

position and the large × is the final position. . . . . . . . . . . . . . . . . . . 53

2-6 Sample paths of the RM algorithm with N = 101, overlaid on Û_{N,M} surfaces

from Figure 2-2 with the corresponding M values. The large is the starting

position and the large × is the final position. . . . . . . . . . . . . . . . . . . 54

2-7 Realizations of the objective function surface using SAA, and corresponding

steps of BFGS, with N = 1. The large is the starting position and the

large × is the final position. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

2-8 Realizations of the objective function surface using SAA, and corresponding

steps of BFGS, with N = 11. The large is the starting position and the

large × is the final position. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

2-9 Realizations of the objective function surface using SAA, and corresponding

steps of BFGS, with N = 101. The large is the starting position and the

large × is the final position. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

2-10 Mean squared error, defined in Equation 2.22, versus average run time for

each optimization algorithm and various choices of inner-loop and outer-loop

sample sizes. The highlighted curves are “optimal fronts” for RM (light red)

and SAA-BFGS (light blue). . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

3-1 Batch design exhibits an open-loop behavior, where no feedback of information

is involved, and the observations yk from any experiment do not affect the

design of any other experiments. Sequential design exhibits a closed-loop

behavior, where feedback of information takes place, and the data yk from an

experiment can be used to guide the design of future experiments. . . . . . . . 68

5-1 A log-normal random variable z can be mapped to a standard Gaussian random variable ξ via ξ = T(z) = ln(z). . . . . . . . . . . . . . . . . . . . . . . 88

5-2 Example 5.3.1: samples and density contours. . . . . . . . . . . . . . . . . . . 99

5-3 Example 5.3.1: posterior density functions using different map polynomial

basis orders and sample sizes. . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

5-4 Illustration of exact map and perspectives of approximate maps. Contour

plots on the left reflect the reference density, and on the right the target

density. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

5-5 Example 5.5.1: posteriors from joint maps constructed under different d distributions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

5-6 Example 5.5.1: additional examples of posteriors from joint maps constructed

under different d distributions. The same legend in Figure 5-5 applies. . . . . 115

7-1 Linear-Gaussian problem: J̃_1 surfaces and regression points used to build

them. The left, middle, and right columns correspond to ℓ = 1, 2, and 3,

respectively. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

7-2 Linear-Gaussian problem: d0 histograms from 1000 simulated trajectories.

The left, middle, and right columns correspond to ℓ = 1, 2, and 3, respectively. . . 125

7-3 Linear-Gaussian problem: d1 histograms from 1000 simulated trajectories.

The left, middle, and right columns correspond to ℓ = 1, 2, and 3, respectively. . . 126

7-4 Linear-Gaussian problem: (d0 , d1 ) pair scatter plots from 1000 simulated trajectories superimposed on top of the analytic expected utility surface. The

left, middle, and right columns correspond to ℓ = 1, 2, and 3, respectively. . . 127

7-5 Linear-Gaussian problem: total reward histograms from 1000 simulated trajectories. The left, middle, and right columns correspond to ℓ = 1, 2, and 3,

respectively. The plus-minus quantity is 1 standard error. . . . . . . . . . . . 128

7-6 Linear-Gaussian problem: samples used to construct the exploration map and

samples generated from the resulting map. . . . . . . . . . . . . . . . . . . . . 129

7-7 1D contaminant source inversion problem, case 1: physical state and belief

state density progression of a sample trajectory. . . . . . . . . . . . . . . . . . 132

7-8 1D contaminant source inversion problem, case 1: (d0 , d1 ) pair scatter plots

from 1000 simulated trajectories for greedy design and sOED. . . . . . . . . . 133

7-9 1D contaminant source inversion problem, case 1: total reward histograms

from 1000 simulated trajectories for greedy design and sOED. The plus-minus

quantity is 1 standard error. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

7-10 1D contaminant source inversion problem, case 2: d0 and d1 pair scatter plots

from 1000 simulated trajectories for batch design and sOED. Roughly 55% of

the sOED trajectories qualify for the precise device in the second experiment.

However, there is no particular pattern or clustering of these designs, thus we

do not separately color-code them in the scatter plot. . . . . . . . . . . . . . . 135

7-11 1D contaminant source inversion problem, case 2: total reward histograms

from 1000 simulated trajectories for batch design and sOED. The plus-minus

quantity is 1 standard error. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

7-12 1D contaminant source inversion problem, case 3: d0 histograms from 1000

simulated trajectories for the sOED grid and map methods. The left, middle,

and right columns correspond to ℓ = 1, 2, and 3, respectively. . . . . . . . . . 138

7-13 1D contaminant source inversion problem, case 3: d1 histograms from 1000

simulated trajectories for the sOED grid and map methods. The left, middle,

and right columns correspond to ℓ = 1, 2, and 3, respectively. . . . . . . . . . 139

7-14 1D contaminant source inversion problem, case 3: total reward histograms

from 1000 simulated trajectories for the sOED grid and map methods. The

left, middle, and right columns correspond to ℓ = 1, 2, and 3, respectively.

The plus-minus quantity is 1 standard error. . . . . . . . . . . . . . . . . . . . 140

7-15 1D contaminant source inversion problem: samples used to construct exploration map and samples generated from the resulting map. . . . . . . . . . . . 141

7-16 1D contaminant source inversion problem, case 3: (d0 , d1 ) pair scatter plots

from 1000 simulated trajectories. The sOED result here is for ℓ = 1. . . . . . 142

7-17 1D contaminant source inversion problem, case 3: total reward histograms

from 1000 simulated trajectories using batch and greedy designs. The plus-minus quantity is 1 standard error. . . . . . . . . . . . . . . . . . . . . . . . . 142

7-18 2D contaminant source inversion problem: plume signal and physical state

progression of sample trajectory 1. . . . . . . . . . . . . . . . . . . . . . . . . 146

7-19 2D contaminant source inversion problem: belief state posterior density contour progression of sample trajectory 1. . . . . . . . . . . . . . . . . . . . . . 147

7-20 2D contaminant source inversion problem: plume signal and physical state

progression of sample trajectory 2. . . . . . . . . . . . . . . . . . . . . . . . . 148

7-21 2D contaminant source inversion problem: belief state posterior density contour progression of sample trajectory 2. . . . . . . . . . . . . . . . . . . . . . 149

7-22 2D contaminant source inversion problem: dk histograms from 1000 simulated

trajectories. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

7-23 2D contaminant source inversion problem: total reward histograms from 1000

simulated trajectories. The left, middle, and right columns correspond to

ℓ = 1, 2, and 3, respectively. The plus-minus quantity is 1 standard error. . . 150

7-24 2D contaminant source inversion problem: samples used to construct exploration map. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 151

7-25 2D contaminant source inversion problem: samples generated from the resulting map. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 152

7-26 2D contaminant source inversion problem: samples used to construct exploration map between dk and yk , with θ. The columns from left to right

correspond to d0,0 , d0,1 , y0 , d1,0 , d1,1 , y1 , d2,0 , d2,1 , y2 , and the marginals

for the row variables, and the rows from top to bottom correspond to the

marginal for the column variables, θ0 , θ1 , θ0 , θ1 , θ0 , θ1 , where each pair of

rows corresponds to θ for inference after 1, 2, and 3 experiments, respectively. . . 153

7-27 2D contaminant source inversion problem: samples generated from the resulting map between dk and yk , with θ. The columns from left to right correspond

to d0,0 , d0,1 , y0 , d1,0 , d1,1 , y1 , d2,0 , d2,1 , y2 , and the marginals for the row variables, and the rows from top to bottom correspond to the marginal for the

column variables, θ0 , θ1 , θ0 , θ1 , θ0 , θ1 , where each pair of rows corresponds

to θ for inference after 1, 2, and 3 experiments, respectively. . . . . . . . . . . 154

B-1 Linear-Gaussian problem: analytic expected utility surface, with the “front”

of optimal designs in dotted black line. . . . . . . . . . . . . . . . . . . . . . . 172

List of Tables

2.1 Histograms of final search positions resulting from 1000 independent runs

of RM (top subrows) and SAA (bottom subrows) over a matrix of N and

M sample sizes. For each histogram, the bottom-right and bottom-left axes

represent the sensor coordinates x and y, respectively, while the vertical axis

represents frequency. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

2.2 High-quality expected information gain estimates at the final sensor positions resulting from 1000 independent runs of RM (top subrows, blue) and

SAA-BFGS (bottom subrows, red). For each histogram, the horizontal axis

represents values of Û_{M=1001,N=1001} and the vertical axis represents frequency. 62

2.3 Histograms of optimality gap estimates for SAA-BFGS, over a matrix of sample sizes M and N . For each histogram, the horizontal axis represents value

of the gap estimate and the vertical axis represents frequency. . . . . . . . . . 63

2.4 Number of iterations in each independent run of RM (top subrows, blue) and

SAA-BFGS (bottom subrows, red), over a matrix of sample sizes M and N .

For each histogram, the horizontal axis represents iteration number and the

vertical axis represents frequency. . . . . . . . . . . . . . . . . . . . . . . . . . 64

5.1 Different levels of scope are available when transport maps are used as the

belief state in the sOED context. n_iter represents the number of stochastic

optimization iterations from numerically evaluating Equation 5.38, and n_MC

represents the Monte Carlo size of approximating its expectation. In our

implementation, these values are typically around 50 and 100, respectively. . . 105

5.2 Structure of joint maps needed to perform inference under different number

of experiments. For simplicity of notation, we omit the conditioning in the

subscript of map components; please see Equation 5.40 for the full subscripts.

The same pattern is repeated for higher number of experiments. The components grouped by the red rectangular boxes are identical. . . . . . . . . . . . . 108

5.3 Marginal distributions of d used to construct joint map. . . . . . . . . . . . . 110

7.1 Linear-Gaussian problem: total reward mean values (of histograms in Figure 7-5) from 1000 simulated trajectories. Monte Carlo standard errors are

all ±0.02. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 124

7.2 Contaminant source inversion problem: problem settings. . . . . . . . . . . . 130

7.3 Contaminant source inversion problem: algorithm settings. . . . . . . . . . . . 130

7.4 1D contaminant source inversion problem, case 3: total reward mean values

from 1000 simulated trajectories; the Monte Carlo standard errors are all

±0.02. The grid and map cases are all from sOED. . . . . . . . . . . . . . . . 138

Chapter 1

Introduction

Experiments play an essential role in the learning process. As George E. P. Box points out,

“. . . science is a means whereby learning is achieved, not by mere theoretical speculation on

the one hand, nor by the undirected accumulation of practical facts on the other, but rather

by a motivated iteration between theory and practice . . . ” [29]. As illustrated in Figure 1-1,

theory is used to deduce what is expected to be observed in practice, and observations from

experiments are used in turn to induce how theory may be further improved. In science

and engineering, experiments are a fundamental building block of the scientific method, and

crucial in the continuing development and refinement of models of physical systems.

[Figure 1-1 diagram: Theory and Practice (experimental observations), linked by Deduction and Induction.]

Figure 1-1: The learning process can be characterized as an iteration between theory and

practice via deductive and inductive reasoning.

Whether obtained through field observations or laboratory experiments, experimental

data may be difficult and expensive to acquire. Even controlled experiments can be time-consuming or delicate to perform. Experiments are also not equally useful, with some

providing valuable information while others are perhaps irrelevant to the investigation goals. It

is therefore important to quantify the trade-off between costs and benefits, and maximize

the overall value of experimental data—to design experiments to be “optimal” by some appropriate measure. Not only is this an important economic consideration, it can also greatly

accelerate the advancement of scientific understanding. Experimental design thus encompasses questions of where and when to measure, which variables to interrogate, and what

experimental conditions to employ (some examples of real-life experimental design situations

are shown below). In this thesis, we develop a systematic framework for experimental design

that can help answer these questions.

Example 1.0.1. Combustion kinetics: Alternative fuels, such as biofuels [139] and synthetic fuels [86], are becoming increasingly popular [36]. They are attractive for safeguarding against volatile petroleum prices, ensuring energy security, and promoting new and desirable

properties that traditional fossil fuels might not offer. The development of these new fuels

relies on a deep understanding of the underlying chemical combustion process, which is often

modeled by complicated, nonlinear chemical mechanisms composed of many elementary reactions. Parameters governing the rates of these reactions, known as kinetic rate parameters,

are usually inferred from experimental measurements such as ignition delay times [72, 58].

Many of these kinetic parameters have large uncertainties even today [12, 11, 141, 133],

and more data are needed to reduce the uncertainties. Combustion experiments, often conducted using shock tubes, are usually expensive and difficult to set up, and need to be

carefully planned. Furthermore, one may choose to carry out these experiments under different temperatures and pressures, with different initial concentrations of reactants, and

with different output quantities observed at different times. Experimental design

provides guidance in making these choices such that the most information may be gained

on the kinetic rate parameters [89, 90].

Example 1.0.2. Optimal sensor placement: The United States government has initiated a number of terrorism prevention measures since the events of 9/11. For example,

the BioWatch program [152] focuses on the prevention of and response to scenarios where a

biological pathogen is released in a city. One of its main goals is to find and intercept the

contaminant source and eliminate it as soon as possible. It is often too dangerous to dispatch personnel into the contamination zone, but a limited number of measurements may be

available from remote-controlled robotic vehicles. It is thus crucial for these measurements

to yield the most information on the location of the contaminant source [91]. This problem

will be revisited in this thesis, with particular focus on situations that allow a sequential

selection of measurement locations.

1.1 Literature review

Systematic design of experiments has received much attention in the statistics community

and in many science and engineering applications. Early design approaches primarily relied

on heuristics and experience, with the traditional factorial, composite, and Latin hypercube

designs all based on the concepts of space-filling and blocking [69, 31, 56, 32]. While these

methods can produce good designs in relatively simple situations involving a few design

variables, they generally neither take into account nor take advantage of knowledge

of the underlying physical process. Simulation-based experimental design uses a model

to guide the choice of experiments, and optimal experimental design (OED) furthermore

incorporates specific and relevant metrics to design experiments for a particular purpose,

such as parameter inference, prediction, or model discrimination.

The design of multiple experiments can be pursued via two broad classes of approaches:

• Batch (open-loop) design involves the design of all experiments concurrently as a batch.

The outcome of any experiment would not affect the design of others. In some situations, this approach may be necessary, such as under certain scheduling constraints.

• Sequential (closed-loop) design allows experiments to be conducted in sequence, thus

permitting newly acquired data to help guide the design of future experiments.

1.1.1 Batch (open-loop) optimal experimental design

Extensive theory has been developed for OED of linear models, where the quantities probed

in the experiments depend linearly on the model parameters of interest. Common solution criteria for the OED problem are written as functionals of the Fisher information

matrix [66, 9]. These criteria include the well-known “alphabetic optimality” conditions,

e.g., A-optimality to minimize the average variance of parameter estimates, or G-optimality

to minimize the maximum variance of model predictions. The derivations may also adopt

a Bayesian perspective [94, 156], which provides a rigorous foundation for inference from

noisy, indirect, and incomplete data and a natural mechanism for incorporating physical

constraints and heterogeneous sources of information. Bayesian analogues of alphabetic optimality, reflecting prior and posterior uncertainty in the model parameters, can be attained

from a decision-theoretic point of view [18, 146, 130], with the formulation of an expected

utility quantity. For instance, Bayesian D-optimality can be obtained from a utility function containing Shannon information while Bayesian A-optimality may be derived from a

squared error loss. In the case of linear-Gaussian models, the criteria of Bayesian alphabetic

optimality reduce to mathematical forms that parallel their non-Bayesian counterparts [43].
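As a small illustration of criteria written as functionals of the Fisher information matrix, the sketch below evaluates classical (non-Bayesian) A- and D-type criteria for a straight-line regression model. The model, the noise level, the two candidate designs, and all function names are assumptions made for this example only.

```python
import numpy as np

def fisher_information(X, sigma=1.0):
    """Fisher information X^T X / sigma^2 for y = X @ theta + Gaussian noise."""
    X = np.asarray(X, dtype=float)
    return X.T @ X / sigma**2

def a_criterion(X, sigma=1.0):
    """Trace of the inverse information (sum of estimator variances); lower is better."""
    return np.trace(np.linalg.inv(fisher_information(X, sigma)))

def d_criterion(X, sigma=1.0):
    """Log-determinant of the information matrix; higher is better."""
    _, logdet = np.linalg.slogdet(fisher_information(X, sigma))
    return logdet

def design_matrix(points):
    """Straight-line model: one row [1, x] per measurement location x."""
    points = np.asarray(points, dtype=float)
    return np.column_stack([np.ones_like(points), points])

# Two hypothetical four-point designs on the interval [-1, 1].
spread = design_matrix([-1.0, -1.0, 1.0, 1.0])
clustered = design_matrix([-0.2, -0.1, 0.1, 0.2])

print("A-criterion:", a_criterion(spread), a_criterion(clustered))
print("D-criterion:", d_criterion(spread), d_criterion(clustered))
```

Both criteria prefer the design that places points at the ends of the interval, which is the expected behavior for estimating a slope and intercept.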

For nonlinear models, however, exact evaluation of optimal design criteria is much more

challenging. More tractable design criteria can be obtained by imposing additional assumptions, effectively changing the form of the objective; these assumptions include linearizations

of the forward model, Gaussian approximations of the posterior distribution, and additional

assumptions on the marginal distribution of the data [33, 43]. In the Bayesian setting, such

assumptions lead to design criteria that may be understood as approximations of an expected utility. Most of these involve prior expectations of the Fisher information matrix [49].

Cruder “locally optimal” approximations require selecting a “best guess” value of the unknown model parameters and maximizing some functional of the Fisher information evaluated at this point [70]. None of these approximations, though, is suitable when the parameter

distribution is broad or when it departs significantly from normality [51]. A more general

design framework, free of these limiting assumptions, is preferred [118, 80]. With recent

advances in algorithm development and computational power, OED for nonlinear systems

can now be tackled directly using numerical simulation [109, 145, 169, 108, 158, 89, 90, 91].

Information-based objectives

Our work accommodates nonlinear experimental design from a Bayesian perspective (e.g., [118]).

We focus on experiments described by a continuous design space, with the goal of choosing experiments that are optimal for Bayesian parameter inference. Rigorous information-theoretic criteria have been proposed throughout the literature (e.g., [75]). The seminal

paper of Lindley [105] suggests using the expected information gain in model parameters

from prior to posterior—or equivalently, the mutual information between parameters and

observations, conditioned on the design variables—as a measure of the information provided by an experiment. This objective can also be derived using the Kullback-Leibler

divergence from posterior to prior as a utility function [60, 43]. Sebastiani and Wynn [148]

propose selecting experiments for which the marginal distribution of the data has maximum

Shannon entropy; this may be understood as a special case of Lindley’s criterion. Maximum entropy sampling (MES) has seen use in applications ranging from astronomy [109] to

geophysics [169], and is well suited to nonlinear models. Reverting to Lindley’s criterion,

Ryan [145] introduces a Monte Carlo estimator of expected information gain to design experiments for a model of material fatigue. Terejanu et al. [166] use a kernel estimator of

mutual information to identify parameters in a chemical kinetic model. The latter two studies

evaluate their criteria on every element of a finite set of possible designs (on the order of

ten designs in these examples), and thus sidestep the challenge of optimizing the design

criterion over general design spaces. Both studies also report significant limitations due to computational expense; [145] concludes that “full blown search” over the design space is infeasible,

and that two order-of-magnitude gains in computational efficiency would be required even

to discriminate among the enumerated designs.

The application of optimization methods to experimental design has thus favored simpler design objectives. The chemical engineering community, for example, has tended to use

linearized and locally optimal [117] design criteria or other objectives [144] for which deterministic optimization strategies are suitable. But in the broader context of decision-theoretic

design formulations, sampling is required. [120] proposes a curve fitting scheme wherein the

expected utility is fit with a regression model, using Monte Carlo samples over the design

space. This scheme relies on problem-specific intuition about the character of the expected

utility surface. Clyde et al. [52] explore the joint design, parameter, and data space with a

Markov chain Monte Carlo (MCMC) sampler, while Amzal et al. [6] expand this concept

to multiple MCMC chains in a sequential Monte Carlo framework; this strategy combines

integration with optimization, such that the marginal distribution of sampled designs is proportional to the expected utility. This idea is extended with simulated annealing in [121] to

achieve more efficient maximization of the expected utility. [52, 121] use expected utilities

as design criteria but do not pursue information-theoretic design metrics. Indeed, direct optimization of information-theoretic metrics has seen much less development. Building on the

enumeration approaches of [169, 145, 166] and the one-dimensional design space considered

in [109], [80] iteratively finds MES designs in multi-dimensional spaces by greedily choosing

one component of the design vector at a time. Hamada et al. [84] also find “near-optimal”

designs for linear and nonlinear regression problems by maximizing expected information

gain via genetic algorithms. Guestrin, Krause and others [81, 99, 100] find near-optimal

placement of sensors in a discretized domain by iteratively solving greedy subproblems, taking advantage of the submodularity of mutual information. More recently, the author has

made several contributions addressing the coupling of rigorous information-theoretic design

criteria, complex nonlinear physics-based models, and efficient optimization strategies on

continuous design spaces [89, 90, 91].

Stochastic optimization

There are many approaches for solving continuous optimization problems with stochastic

objectives. While some do not require the direct evaluation of gradients (e.g., Nelder-Mead [124], Kiefer-Wolfowitz [95], and simultaneous perturbation stochastic approximation [161]), other algorithms can use gradient evaluations to great advantage. Broadly,

these algorithms involve either stochastic approximation (SA) [102] or sample average approximation (SAA) [149], where the latter approach must also invoke a gradient-based deterministic optimization algorithm. Hybrids of the two approaches are possible as well. The

Robbins-Monro algorithm [142] is one of the earliest and most widely used SA methods,

and has become a prototype for many subsequent algorithms. It involves an iterative update that resembles steepest descent, except that it uses stochastic gradient information.

SAA (also referred to as the retrospective method [85] and the sample-path method [82]) is a more

recent approach, with theoretical analysis initially appearing in the 1990s [149, 82, 97].

Convergence rates and stochastic bounds, although useful, do not necessarily reflect empirical performance under finite computational resources and imperfect numerical optimization

schemes. To the best of our knowledge, extensive numerical testing of SAA has focused on

stochastic programming problems with special structure (e.g., linear programs with discrete

design variables) [3, 170, 16, 79, 147]. While numerical improvements to SAA have seen

continual development (e.g., estimators of optimality gap [127, 111] and sample size adaptation [46, 47]), the practical behavior of SAA in more general optimization settings is largely

unexplored. SAA is frequently compared to stochastic approximation methods such as Robbins-Monro (RM).

For example, [150] suggests that SAA is more robust than SA because of sensitivity to step

size choice in the latter. On the other hand, variants of SA have been developed that, for

certain classes of problems (e.g., [125]), reach solution quality comparable to that of SAA

in substantially less time. In this thesis, we also make comparisons between SA and SAA,

but from a practical and numerical perspective and in the context of OED.
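The structural difference between the two families can be seen on a toy problem. The sketch below is an illustration only: the objective (maximize the expectation of -(d - ξ)² with ξ ~ N(1, 1), whose maximizer is d = 1), the step-size constants, and the function names are all assumed here. Plain gradient ascent stands in for the deterministic optimizer inside SAA; it is not the optimizer used elsewhere in this thesis.

```python
import numpy as np

def noisy_gradient(d, xi):
    """Gradient with respect to d of the sample utility -(d - xi)^2."""
    return -2.0 * (d - xi)

def robbins_monro(d0, rng, n_iter=2000, a0=0.5):
    """Stochastic approximation: a fresh noise draw at every iteration."""
    d = d0
    for k in range(1, n_iter + 1):
        xi = rng.normal(loc=1.0, scale=1.0)
        d = d + (a0 / k) * noisy_gradient(d, xi)  # step size decays as 1/k
    return d

def sample_average_approximation(d0, rng, n_samples=2000, n_iter=200, step=0.25):
    """SAA: freeze one noise sample, then optimize the resulting deterministic objective."""
    xi = rng.normal(loc=1.0, scale=1.0, size=n_samples)
    d = d0
    for _ in range(n_iter):
        d = d + step * np.mean(noisy_gradient(d, xi))  # deterministic gradient ascent
    return d

rng = np.random.default_rng(0)
d_rm = robbins_monro(d0=5.0, rng=rng)
d_saa = sample_average_approximation(d0=5.0, rng=rng)
print("RM estimate:", d_rm, " SAA estimate:", d_saa)
```

In this sketch RM consumes one new sample per step and relies on a decaying step size, while SAA fixes its samples up front, so its answer is the exact optimum of a perturbed problem.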

Surrogates for computationally intensive models

With either Robbins-Monro or SAA, information-based OED requires the

gradients of an information gain objective. Typically, this objective function involves nested

integrations over possible model outputs and over the input parameter space, where the

model output may be a functional of the solution of a partial differential equation. In

many practical cases, the model may be essentially a black box; while in other cases, even

if gradients can be evaluated with adjoint methods, using the full model to evaluate the

expected information gain or its gradient is computationally prohibitive. To make these

calculations tractable, one would like to replace the forward model with a cheaper “surrogate”

model that is accurate over the entire region of the model input parameters.

Surrogates can be generally categorized into three classes [65, 71]: data-fit models,

reduced-order models, and hierarchical models. Data-fit models capture the input-output

relationship of a model from available data points, and assume regularity by imposing interpolation or regression. Given the data points, it does not matter how the original model

functions, and it may be treated as a black box. One common approach for constructing

data-fit models is Gaussian process regression [94, 140]; other approaches rely on so-called

polynomial chaos expansions (PCE) and related stochastic spectral methods [178, 74, 180,

59, 123, 179, 104, 53]. In the context of OED, the former can be used to replace the likelihood altogether, allowing quick inferences and objective evaluations from this statistical

model of much simpler structure [177]. The latter builds a subspace from a set of orthogonal

polynomial basis functions, and exploits the regularity in the dependence of model outputs

on uncertain input parameters. PCE capturing dependencies jointly on parameters and

design conditions further accelerates the overall OED process [90], and can be constructed

using dimension-adaptive sparse quadrature [73] that identifies and exploits anisotropic dependencies for efficiency in high dimensions.
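A minimal sketch of a polynomial surrogate in one uncertain parameter is given below. It fits Legendre coefficients by least-squares regression, which is swapped in here for simplicity in place of the sparse quadrature construction mentioned above. The stand-in model `expensive_model`, the uniform parameter range, the polynomial degree, and the training size are all hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre

def expensive_model(theta):
    """Hypothetical stand-in for a costly forward model evaluated at theta."""
    return np.exp(0.5 * theta) * np.sin(2.0 * theta)

def fit_legendre_surrogate(model, degree, n_train, rng):
    """Least-squares Legendre coefficients for theta ~ Uniform(-1, 1)."""
    theta = rng.uniform(-1.0, 1.0, size=n_train)
    basis = legendre.legvander(theta, degree)  # shape (n_train, degree + 1)
    coeffs, *_ = np.linalg.lstsq(basis, model(theta), rcond=None)
    return coeffs

rng = np.random.default_rng(0)
coeffs = fit_legendre_surrogate(expensive_model, degree=8, n_train=50, rng=rng)

# The surrogate is now a cheap polynomial evaluation.
theta_test = np.linspace(-1.0, 1.0, 201)
surrogate = legendre.legval(theta_test, coeffs)
max_error = np.max(np.abs(surrogate - expensive_model(theta_test)))
print("maximum surrogate error on test grid:", max_error)
```

Legendre polynomials are the orthogonal family associated with a uniform input distribution; a different input distribution would call for a different basis.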

Reduced-order models are based on a projection of the output space onto a smaller, lower-dimensional subspace. One example is the proper orthogonal decomposition (POD), where

a set of “snapshots” of the model outputs are used to construct a basis for the subspace [19,

155, 38]. Finally, hierarchical models are those where simplifications are performed based on

the underlying physics. Techniques based on grid coarsening, simplification of mechanics,

or addition of assumptions are of this type, and are often the basis of multifidelity analysis

and optimization [28, 4].

1.1.2 Sequential (closed-loop) optimal experimental design

Compared to batch OED, sequential optimal experimental design (sOED) has seen much less

development and use. The value of feedback through sequential design has been recognized

early on, with original approaches typically involving a heuristic partitioning of experiments

into batches. For instance, in the context of experimental design for improving chemical

plant filtration rate [30], an initial “empirical feedback” stage involving space-filling designs

is administered to “pick the winner” and find designs that best fix the problem, and a subsequent “scientific feedback” stage with adapted designs follows to better understand the

reasons for what went wrong or why a solution worked. Initial attempts in finding optimal

sequential designs relied heavily on results from batch OED, by simply repeating its design

methodology in a greedy manner. Some work made use of linear design theory by iteratively

alternating between parameter estimation and applications of linear optimality (e.g., [2]).

Since many physically realistic models involve output quantities that have nonlinear dependencies on model parameters, it is desirable to employ nonlinear OED tools. The key

challenge, then, is to represent and propagate general non-Gaussian posteriors beyond the

first experiment.

Various representation techniques have been tested within the greedy design framework,

with a large body of research based on sample representations of the posterior. For instance, posterior importance sampling has been employed for variance-based utility [158]

and in greedy augmentations of generalized linear models [61]. Sequential Monte Carlo

methods have also been utilized both in experimental design for parameter inference [62]

and model discrimination [42, 63]. Even grid-based discretizations/representations of posterior density functions have shown success in adaptive design optimization that makes use

of hierarchical models in visual psychophysics [96]. While these developments provide a

convenient and intuitive avenue of extending existing batch OED tools, greedy design is

ultimately suboptimal. A truly optimal sequential design framework needs to account for

all relevant future effects in making every decision, but such considerations are dampened

by challenges in computational feasibility. With recent advances in numerical algorithms

and computing power, sOED can now be made practical.

sOED is often posed in a dynamic programming (DP) form, a framework widely used to

describe sequential decision-making under uncertainty. While the DP description of sOED

has gained traction in recent years [119, 172], implementations and applications of this framework remain few, due to notoriously large computational requirements. The few existing

attempts have mostly focused on optimal stopping problems [18], stemming predominantly

from applications of clinical trial designs. Under simple situations, direct backward induction with tabular storage may be used, but is only feasible for discrete variables that can

take on a few possible outcomes [37, 174]. Applications of more involved numerical solution

techniques all rely on special structures of the problem with careful choices of loss functions.

For example, Carlin et al. [41] propose a forward sampling method that directly optimizes

a Monte Carlo estimate of the expected utility, but targets monotonic loss functions and

certain conjugate priors that result in threshold policies based on the posterior mean. Continued developments in backward induction also find feasible numerical implementations

owing to policies that depend only on lower-dimensional sufficient statistics such as the posterior mean and standard deviation [21, 48]. Other approaches replace the simulation model

altogether, and instead use statistical models with assumed distribution forms [122]. None

of these works, however, uses an information-based objective. Incorporation of utilities that

reflect information gain induces quantities that are much more challenging to evaluate, and

has been attempted only for simple situations. For instance, Ben-Gal and Caramanis [15]

find near-optimal stopping policies in multidimensional design spaces by deriving and making use of diminishing return (submodularity) on the expected incremental information gain;

however, this is possible only for linear-Gaussian problems, where mutual information does

not depend on the observations.

With the current state-of-the-art in sOED heavily relying on special problem structures

and often feasible only for discrete variables that can take on a few values, we seek to

contribute to its development with a more general framework and numerical tools that can

accommodate broader classes of problems.

Dynamic programming

The solution to the sOED problem directly relates to the solution of a DP problem. As

DP is a broad subject accompanied by a vast sea of literature from many different fields of

research, including control theory [24, 22, 23], operations research [138, 137], and machine

learning [93, 164], we do not attempt to make a comprehensive review. Instead, we make

a brief introduction and only describe parts that are most relevant and promising to the

sOED problem, while referring readers to the references above.

Central to DP is the famous Bellman equation [13, 14], which relates the cost or reward incurred immediately to the expected cost or reward in the uncertain

future, as a consequence of a decision. Its recursive definition leads to an exponential explosion of scenarios, and this “curse of dimensionality” is the fundamental

challenge of DP. Typically, only special classes of problems have analytic solutions, such as

those described by linear dynamics and quadratic cost [8]. As a result, substantial research

has been devoted to developing efficient numerical strategies for accurately capturing DP

solutions—this field is known as approximate dynamic programming (ADP) (also referred

to as neuro-dynamic programming and reinforcement learning) [137, 24, 93, 164].
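For a problem small enough to enumerate, the Bellman recursion can be solved exactly by backward induction. The sketch below uses a hypothetical two-state, two-action problem with a horizon of three stages; the transition matrices, rewards, and function name are assumptions chosen so that a myopic decision-maker behaves differently from the optimal one.

```python
import numpy as np

def backward_induction(P, R, terminal_value, horizon):
    """Finite-horizon Bellman recursion.

    P: (A, S, S) array of transition probabilities P[a, s, s_next].
    R: (S, A) array of immediate rewards.
    """
    n_states = P.shape[1]
    values = np.empty((horizon + 1, n_states))
    policy = np.empty((horizon, n_states), dtype=int)
    values[horizon] = terminal_value
    for k in range(horizon - 1, -1, -1):
        # q[s, a] = immediate reward + expected value of the next state
        q = R + np.einsum("ast,t->sa", P, values[k + 1])
        policy[k] = np.argmax(q, axis=1)
        values[k] = np.max(q, axis=1)
    return values, policy

# Action 0 stays in the current state; action 1 switches state at a cost of 0.5.
P = np.array([[[1.0, 0.0], [0.0, 1.0]],
              [[0.0, 1.0], [1.0, 0.0]]])
# Staying earns 0 in state 0 and 1 in state 1.
R = np.array([[0.0, -0.5],
              [1.0, -0.5]])

values, policy = backward_induction(P, R, terminal_value=np.zeros(2), horizon=3)
print("optimal values at stage 0:", values[0])
print("optimal actions per stage (rows) and state (columns):")
print(policy)
```

Starting in state 0, the optimal first action is to pay the switching cost, because of the rewards it unlocks later; a rule that maximizes only the immediate reward would never switch. Tabular storage of this kind is feasible only for small discrete problems.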

With the goal of finding the (near) optimal policy, one must first be able to represent a

policy. While direct approximations can be made, a policy is more often portrayed implicitly,

such as by the limited lookahead forms. These forms eventually relegate the approximation

to their associated value functions by probing their values at different states, leading to

broad branches of ADP strategy in approximate value iteration (AVI) and approximate policy iteration (API). The key difference between AVI and API is that the former updates

the policy immediately and maintains as good an approximation to the optimal policy as

possible, while the latter makes an accurate assessment of the value from a fixed policy (i.e.,

policy evaluation or learning) in an inner loop before improvements are made. Both of these

strategies have stimulated the development of a host of learning (policy evaluation) techniques based on the well-known temporal-differencing method (e.g., [163, 164, 34]), and API

further sparked the expansion of policy improvement methods such as least squares policy

iteration [103], actor-critic methods (e.g., [24]), and policy-gradient algorithms (e.g., [165]).

Finally, representation of value functions can be replaced by “model-free” Q-factors that capture the values in state-action pairs—this leads to the widely used reinforcement learning

technique of Q-learning [175, 176].

1.2 Thesis objectives

Current research in OED has seen rapid advances in the design of batch experiments.

Progress towards the optimal design of sequential experiments, however, remains in relatively early stages. Direct applications of batch OED methods to sequential settings are

suboptimal, and initial explorations of the optimal framework have been limited to problems

with discrete spaces of very few states and with special problem and solution structures. We

aim to extend the optimal sequential design framework to much more general settings.

The objectives of this thesis are:

• To advance the numerical methods for batch OED from the author’s previous work [89, 90]

in order to accommodate nonlinear and computationally intensive models with an

information gain objective. This involves deriving and accessing gradient information

via the use of polynomial chaos and infinitesimal perturbation analysis in order to

enable the application of gradient-based optimization methods.

• To formulate the sOED problem in a rigorous manner, for a finite number of experiments, accommodating nonlinear and physically realistic models, under continuous

parameter, design, and observation spaces of multiple dimensions, using a Bayesian

treatment of uncertainty with general non-Gaussian distributions and an information

measure design objective. This goal includes formulating the DP form of the sOED

problem that is central to the subsequent development of numerical methods.

• To develop numerical methods for solving the sOED problem in a computationally

practical manner. This is achieved via the following sub-objectives.

– To implement ADP techniques based on a one-step lookahead policy representation, combined with approximate value iteration (in particular backward induction and regression) for constructing value function approximations.

– To represent continuous belief states numerically for general multivariate non-Gaussian random variables using transport maps.

– To construct and utilize transport maps in the joint design, observation, and

parameter space, in a form that enables fast and approximate Bayesian inference

by conditioning; this capability is necessary to achieve computational feasibility

in the ADP methods.

• To demonstrate the computational effectiveness of our sOED numerical tools on realistic design applications with multiple experiments and multidimensional parameters.

These applications include contaminant source inversion problems in both one- and

two-dimensional physical domains.

More broadly speaking, this thesis seeks to develop a rigorous mathematical framework

and a set of numerical tools for performing sequential optimal experimental design in a

computationally feasible manner.

The thesis is organized as follows. Chapter 2 begins with the formulation, numerical

methods, and results for the batch OED method, particularly focusing on the development

of gradient information. It also provides a foundation of understanding in the relatively

simpler batch design setting before extending to sequential designs for the rest of the thesis. Chapter 3 then presents the formulation of the sOED problem, including the DP form

that is the basis for developing our numerical methods. We also demonstrate the frequently

used batch and greedy design methods to be simplifications from the sOED problem, and

thus suboptimal for sequential settings. Chapter 4 details the ADP techniques we employ

to numerically solve the DP form of the sOED problem, including the development of an

adaptive strategy to refine the policy-induced state space. Chapter 5 introduces and describes the use of transport maps as belief states, along with the framework for using joint

maps to enable fast and approximate Bayesian inference. The full algorithm for the sOED

problem is summarized in Chapter 6. It is then applied to several numerical examples in

Chapter 7. We first illustrate the solution on a simple linear-Gaussian problem to provide

intuitive insights and establish comparisons with analytic references. We then demonstrate

these tools on contaminant source inversion problems of 1D and 2D convection-diffusion

scenarios. Finally, Chapter 8 provides concluding remarks and future work.

Chapter 2

Batch Optimal Experimental Design

Batch (open-loop) optimal experimental design (OED) involves the design of all experiments

concurrently as a batch, where the outcome of any experiment would not affect the design

of others.¹ This self-contained chapter introduces the framework of batch OED, assuming

the goal of the experiments is to infer uncertain model parameters from noisy and indirect observations. The framework developed here, however, can be used to accommodate

other experimental goals as well. Furthermore, it uses a Bayesian treatment of uncertainty,

employs an information measure objective, and accommodates nonlinear models under continuous parameter, design, and observation spaces. We pay particular attention to the use

of gradient information and the overall computational behavior of the method, and demonstrate its feasibility with a partial differential equation (PDE)-based 2D diffusion source

inversion problem. We then extend this foundation to sequential (closed-loop) OED in

subsequent chapters.

The content of this chapter is a continuation of the author’s previous work [89, 90],

and draws heavily from the author’s recent publication [91].

2.1 Formulation

Let $(\Omega, \mathcal{F}, \mathbb{P})$ be a probability space, where $\Omega$ is a sample space, $\mathcal{F}$ is a σ-field, and $\mathbb{P}$
is a probability measure on $(\Omega, \mathcal{F})$. Let the vector of real-valued random variables² $\theta : \Omega \to \mathbb{R}^{n_\theta}$
denote the uncertain model parameters of interest (referred to as “parameters” in this thesis), i.e., they are
the parameters to be conditioned on experimental data. Here $n_\theta$ is the dimension of the parameters.
The parameters $\theta$ are associated with a measure $\mu$ on $\mathbb{R}^{n_\theta}$, such that
$\mu(A) = \mathbb{P}(\theta^{-1}(A))$ for measurable sets $A \subseteq \mathbb{R}^{n_\theta}$. We then define $f(\theta) = d\mu/d\theta$ to be the density of $\theta$
with respect to the Lebesgue measure. For the present purposes, we will assume that such
a density always exists. Similarly, we treat the observations from the experiment, $y \in \mathcal{Y}$
(referred to as “observations”, “noisy measurements”, or “data” in this thesis), as a real-valued
random vector endowed with an appropriate density, and $d \in \mathcal{D}$ as the vector of continuous
design variables (referred to as “design” in this thesis). If one performs an experiment under
design $d$ and observes a realization of the data $y$, then the change in one’s state of knowledge
about the parameters is given by Bayes’ rule:

\[
f(\theta|y,d) = \frac{f(y|\theta,d)\, f(\theta|d)}{f(y|d)} = \frac{f(y|\theta,d)\, f(\theta)}{f(y|d)}. \tag{2.1}
\]

¹ For simplicity of terminology, we refer to the entire batch of experiments as a single entity “experiment” in this chapter.

² For simplicity, we will use lower case to represent both the random variables and their realizations.

For simplicity of notation, we shall use f (·) to represent all density functions, and which

specific distribution it corresponds to is reflected by its arguments (when needed for clarity,

we will explicitly include a subscript of the associated random variable). Here, f (θ|d) is

the prior density, f (y|θ, d) is the likelihood function, f (θ|y, d) is the posterior density, and

f (y|d) is the evidence. The second equality is due to the assumption that knowing the

design of an experiment without knowing its observations does not affect our belief about

the parameters (i.e., the prior would not change based on what experiment we plan to

do)—thus f (θ|d) = f (θ). The likelihood function is assumed to be given, and describes

the discrepancy between the observations and a forward model prediction in a probabilistic

way. The forward model, denoted by G(θ, d), is a function that maps both the parameters

and design into the observation space, and usually describes the outcome of some (possibly

computationally expensive, such as PDE-based) simulation process. For example, y can be

from, but not limited to, an additive Gaussian likelihood model: $y = G(\theta, d) + \epsilon$, where

$\epsilon \sim \mathcal{N}(0, \sigma_\epsilon^2)$, leading to a likelihood function of $f(y|\theta, d) = f_\epsilon(y - G(\theta, d))$.
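To make the update in Bayes’ rule concrete, the sketch below evaluates the posterior on a grid for a scalar parameter with a standard normal prior and the additive Gaussian noise model. The linear forward model G(θ, d) = dθ, the grid bounds, and the numerical values of y, d, and the noise level are assumptions for illustration; the linear choice makes the posterior mean available in closed form as a check.

```python
import numpy as np

def forward_model(theta, d):
    """Hypothetical forward model G(theta, d); linear so the result can be verified."""
    return d * theta

def grid_posterior(theta_grid, y, d, sigma_eps):
    """Posterior density f(theta | y, d) on a uniform grid via Bayes' rule."""
    prior = np.exp(-0.5 * theta_grid**2) / np.sqrt(2.0 * np.pi)  # N(0, 1) prior
    resid = y - forward_model(theta_grid, d)
    likelihood = np.exp(-0.5 * (resid / sigma_eps) ** 2) / (sigma_eps * np.sqrt(2.0 * np.pi))
    dtheta = theta_grid[1] - theta_grid[0]
    evidence = np.sum(likelihood * prior) * dtheta  # f(y | d) by a Riemann sum
    return likelihood * prior / evidence

theta_grid = np.linspace(-6.0, 6.0, 4001)
dtheta = theta_grid[1] - theta_grid[0]
y_obs, d, sigma_eps = 1.0, 2.0, 0.5

posterior = grid_posterior(theta_grid, y_obs, d, sigma_eps)
post_mean = np.sum(theta_grid * posterior) * dtheta
exact_mean = d * y_obs / (d**2 + sigma_eps**2)  # conjugate Gaussian result for this toy
print("grid posterior mean:", post_mean, " closed form:", exact_mean)
```

Grid evaluation of this kind is practical only in one or two parameter dimensions.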

We take a decision-theoretic approach and follow the concept of expected utility (or expected reward) to quantify the value of experiments [18, 146, 130]. While utility functions

are quite flexible and can be based on loss functions defined for specific goals or tasks, we

focus on utility functions that lead to valid measures of information gain of experiments [75].

Taking an information-theoretic approach, we choose utility functions that reflect the

expected information gain on the parameters θ [105, 106]. In particular, we use the relative

entropy, or the Kullback-Leibler (KL) divergence, from the posterior to the prior, and take

its expectation under the prior predictive distribution of the data to obtain an expected

utility U (d):

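\[
U(d) = \int_{\mathcal{Y}} \int_{\mathcal{H}} f(\theta|y,d) \ln\left[ \frac{f(\theta|y,d)}{f(\theta)} \right] d\theta \, f(y|d) \, dy
     = \mathbb{E}_{y|d}\left[ D_{\mathrm{KL}}\left( f_{\theta|y,d}(\cdot|y,d) \,\|\, f_{\theta}(\cdot) \right) \right], \tag{2.2}
\]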

where $\mathcal{H} \subseteq \mathbb{R}^{n_\theta}$ is the support of the prior. Because the observations y cannot be known

before the experiment is performed, taking the expectation over the prior predictive f (y|d)

lets the resulting utility function reflect the information gain on average, over all anticipated

outcomes of the experiment. The expected utility U (d) is thus the expected information gain

due to an experiment performed at design d. A more detailed derivation of the expected

utility can be found in [89, 90].

We choose to use the KL divergence for several reasons. First, KL is a special case of

a wide range of divergence measures that satisfy the minimal set of requirements to be a

valid measure of information on a set of experiments [75]. These requirements are based on

the sufficient ordering (or “always at least as informative” ordering) of experiments, and are

developed rigorously from likelihood ratio statistics, in a general setting without specifically

targeting decision-theoretic or Bayesian perspectives. Second, KL gives an intuitive indication of information gain in the sense of Shannon information [55]. Since KL reflects the

difference between two distributions, a large KL divergence from posterior to prior implies

that the observations y decrease entropy in θ by a large amount, and hence those observations are more informative for parameter inference. Indeed, the KL divergence reflects

the difference in information carried by two distributions in units of nats [55, 110], and the

expected information gain is also equivalent to the mutual information between the parameters θ and the observations y, given the design d. Third, such a formulation for general

nonlinear forward models (where G(θ, d) are nonlinear functions in the parameters θ) is

consistent with linear optimal design theory based on the Fisher information matrix [66, 9].

When a linear model is used in this formulation, it simplifies to the linear D-optimality design, which is an attractive design approach due to, for example, its invariance under smooth

model reparameterization [45]. Finally, the use of an information measure contrasts with a loss

function in that, while the former does not target a particular task (such as estimation)

in the context of a decision problem, it provides a general guidance of learning about the

uncertain environment, and gaining information that performs well for a wide range of tasks

albeit not best for any particular task.
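As a worked check of the third reason, consider a linear-Gaussian special case. The symbols $G(d)$ (a design-dependent matrix), $\theta_0$, $\Gamma_\theta$, $\Gamma_\epsilon$, and $\Gamma_{\mathrm{post}}$ are notation assumed here for illustration and are not part of the formulation above.

```latex
% Assumed model: y = G(d)\theta + \epsilon,
% with \theta \sim N(\theta_0, \Gamma_\theta) and \epsilon \sim N(0, \Gamma_\epsilon).
\begin{align*}
\Gamma_{\mathrm{post}}(d) &= \left( \Gamma_\theta^{-1} + G(d)^\top \Gamma_\epsilon^{-1} G(d) \right)^{-1}, \\
U(d) &= \tfrac{1}{2} \ln\det \Gamma_\theta - \tfrac{1}{2} \ln\det \Gamma_{\mathrm{post}}(d) \\
     &= \tfrac{1}{2} \ln\det\left( I + \Gamma_\theta \, G(d)^\top \Gamma_\epsilon^{-1} G(d) \right).
\end{align*}
```

In this case the posterior covariance does not depend on the observed data, so the expectation over y is trivial, and maximizing U(d) amounts to maximizing the determinant of the posterior precision matrix, which is the Bayesian D-optimality criterion.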

Typically, the expected utility in Equation 2.2 has no closed form (even if the forward

model is, for example, a polynomial function of θ). Instead, it must be approximated

numerically. By applying Bayes’ rule to the quantities inside and outside the logarithm in

Equation 2.2, and then introducing Monte Carlo approximations for the resulting integrals,

we obtain the nested Monte Carlo estimator proposed by Ryan [145]:

\[
U(d) \approx \hat{U}_{N,M}(d, \theta_s, y_s) \equiv \frac{1}{N} \sum_{i=1}^{N} \left\{ \ln f(y^{(i)} \mid \theta^{(i)}, d) - \ln \left[ \frac{1}{M} \sum_{j=1}^{M} f(y^{(i)} \mid \tilde{\theta}^{(i,j)}, d) \right] \right\}, \tag{2.3}
\]

where θ_s ≡ {θ^(i)} ∪ {θ̃^(i,j)}, i = 1 . . . N, j = 1 . . . M, are i.i.d. samples from the prior f(θ);

and y_s ≡ {y^(i)}, i = 1 . . . N, are independent samples from the likelihoods f(y|θ^(i), d). The

variance of this estimator is approximately A(d)/N + B(d)/(NM) and its bias is (to leading

order) C(d)/M [145], where A, B, and C are terms that depend only on the distributions

at hand. While the estimator ÛN,M is biased for finite M , it is asymptotically unbiased.
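
As an illustration of Equation 2.3, the following is a minimal Python sketch of the nested Monte Carlo estimator. It assumes a hypothetical linear-Gaussian toy model (prior θ ~ N(0, 1), observation y = dθ + σε with ε ~ N(0, 1)), chosen only because its expected information gain has the closed form ½ ln(1 + d²/σ²) to compare against; the names `SIGMA`, `log_likelihood`, and `nested_mc_utility` are illustrative and do not come from the thesis. The inner average is evaluated with a log-sum-exp shift for numerical stability, which is an implementation choice of this sketch.

```python
import math
import random

SIGMA = 0.5  # assumed noise standard deviation of the toy model


def log_likelihood(y, theta, d):
    """ln f(y | theta, d) for the assumed toy model y = d * theta + SIGMA * eps."""
    r = (y - d * theta) / SIGMA
    return -0.5 * r * r - math.log(SIGMA * math.sqrt(2.0 * math.pi))


def nested_mc_utility(d, n_outer, n_inner, rng):
    """Nested Monte Carlo estimate of U(d) in the form of Equation 2.3."""
    total = 0.0
    for _ in range(n_outer):
        theta_i = rng.gauss(0.0, 1.0)                    # theta^(i) drawn from the prior
        y_i = d * theta_i + SIGMA * rng.gauss(0.0, 1.0)  # y^(i) drawn from f(y | theta^(i), d)
        # Inner loop: (1/M) * sum_j f(y^(i) | theta~^(i,j), d), with fresh prior samples.
        logs = [log_likelihood(y_i, rng.gauss(0.0, 1.0), d) for _ in range(n_inner)]
        shift = max(logs)
        log_evidence = shift + math.log(sum(math.exp(v - shift) for v in logs) / n_inner)
        total += log_likelihood(y_i, theta_i, d) - log_evidence
    return total / n_outer


rng = random.Random(0)
d = 1.0
estimate = nested_mc_utility(d, n_outer=1000, n_inner=100, rng=rng)
exact = 0.5 * math.log(1.0 + d * d / SIGMA ** 2)  # closed form for this toy model only
```

Increasing `n_inner` reduces the bias of the estimate, while increasing `n_outer` reduces its variance, in line with the A(d)/N, B(d)/(NM), and C(d)/M terms above.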

Finally, the expected utility must be maximized over the design space D to find the

optimal design:

\[
d^* = \operatorname*{argmax}_{d \in \mathcal{D}} U(d). \tag{2.4}
\]

Since U can only be approximated by Monte Carlo estimators such as ÛN,M , optimization

methods for stochastic objective functions are needed.

2.2 Stochastic optimization

Optimization methods can be broadly categorized as gradient-based and non-gradient-based.

While gradient-based methods require additional gradient information, they are also generally more efficient than their non-gradient counterparts. With the intention to solve Equation 2.4, we make the gradient information available for the batch OED problem in this

chapter. In particular, we consider two gradient-based stochastic optimization approaches:

Robbins-Monro (RM) stochastic approximation, and sample average approximation (SAA)

combined with the Broyden-Fletcher-Goldfarb-Shanno (BFGS) method. Both approaches

require some flavor of gradient information, but they do not use the exact gradient of U (d).

Calculating the latter is generally not possible, given that we only have a Monte Carlo

estimator of U (d). The use of non-gradient optimization methods in the context of batch

OED, namely simultaneous perturbation stochastic approximation [160, 161] and the Nelder-Mead

simplex method [124], has been previously investigated by the author [89, 90].

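We recast the optimization problem in the convention of a minimization statement:

\[
d^* = \operatorname*{argmin}_{d \in \mathcal{D}} \left[ -U(d) \right] = \operatorname*{argmin}_{d \in \mathcal{D}} \left\{ h(d) \equiv \mathbb{E}_{y|d}\left[ \hat{h}(d, y) \right] \right\}, \tag{2.5}
\]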

where ĥ(d, y) is the underlying unbiased estimator of the unavailable objective function

h(d) ≡ −U (d). We note that y is generally dependent on d.

2.2.1 Robbins-Monro stochastic approximation

The iterative update of the RM method is

\[
d_{j+1} = d_j - a_j \, \hat{g}(d_j, y'), \tag{2.6}
\]

where j is the optimization iteration index and ĝ(d_j, y′) is an unbiased estimator of the

gradient (with respect to d) of h(d) evaluated at d_j. In other words, E_{y′|d}[ĝ(d, y′)] = ∇_d h(d),

but ĝ is not necessarily equal to ∇_d ĥ. Also, y′ and y may, but need not, be related. The

gain sequence aj should satisfy the following properties:

\[
\sum_{j=0}^{\infty} a_j = \infty \quad \text{and} \quad \sum_{j=0}^{\infty} a_j^2 < \infty. \tag{2.7}
\]

One natural choice, used in this work, is the harmonic step size sequence aj = β/j, where

β is some appropriate scaling constant. For example, in the diffusion application problem

of Section 2.5, β is chosen to be 1.0 since the design space is [0, 1]^2. With various technical assumptions on ĝ and g, it can be shown that RM converges to the exact solution of

Equation 2.5 almost surely [102].
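
As a quick check that the harmonic sequence meets the conditions in Equation 2.7, the two sums can be evaluated directly. This sketch assumes β > 0 and starts the index at j = 1, since β/j is undefined at j = 0.

```latex
\[
\sum_{j=1}^{\infty} \frac{\beta}{j} = \infty
\qquad \text{and} \qquad
\sum_{j=1}^{\infty} \frac{\beta^2}{j^2} = \frac{\beta^2 \pi^2}{6} < \infty .
\]
```

The first sum is the divergent harmonic series scaled by β, so the steps remain large enough to reach any point in the design space; the second converges, so the accumulated noise in the iterates stays bounded.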

Choosing the sequence aj is often viewed as the Achilles’ heel of RM, as the algorithm’s

performance can be very sensitive to the step size. We acknowledge this fact and do not

downplay the difficulty of choosing an appropriate gain sequence, but there exist logical

approaches to selecting aj that yield reasonable performance. More sophisticated strategies, such as search-then-converge learning rate schedules [57], adaptive stochastic step size

rules [17], and iterate averaging methods [135, 102], have been developed and successfully

demonstrated in applications.

We will also use relatively simple stopping criteria for the RM iterations: the algorithm

will be terminated when changes in d_j stall (e.g., ‖d_j − d_{j−1}‖ falls below some designated

tolerance for 5 successive iterations) or when a maximum number of iterations has been

reached (e.g., 50 iterations for the results of Section 2.5).
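
The update of Equation 2.6, the harmonic gain sequence, and the stopping criteria above can be sketched together in a few lines of Python. This is a minimal illustration under stated assumptions rather than the implementation used for the results: the toy objective h(d) = ½ E‖d − y′‖² with y′ ~ N((0.3, 0.7), 0.2² I), the function names, and the tolerance value are all hypothetical.

```python
import math
import random


def noisy_gradient(d, rng):
    """Unbiased gradient estimate for the assumed toy objective h(d) = 0.5 * E||d - y'||^2."""
    target = (0.3, 0.7)                                   # hypothetical minimizer
    y = [t + 0.2 * rng.gauss(0.0, 1.0) for t in target]   # y' ~ N(target, 0.2^2 I)
    return [di - yi for di, yi in zip(d, y)]


def robbins_monro(d0, grad_est, rng, beta=1.0, tol=1e-3, patience=5, max_iter=50):
    """Iterate d_{j+1} = d_j - a_j * g_hat(d_j, y') with harmonic gains a_j = beta / j."""
    d = list(d0)
    stalled = 0
    for j in range(1, max_iter + 1):
        a_j = beta / j
        g = grad_est(d, rng)
        d_new = [di - a_j * gi for di, gi in zip(d, g)]
        step = math.sqrt(sum((x - z) ** 2 for x, z in zip(d_new, d)))
        d = d_new
        # Count successive iterations whose change in d is below the tolerance.
        stalled = stalled + 1 if step < tol else 0
        if stalled >= patience:
            break
    return d, j


rng = random.Random(1)
d_final, n_iter = robbins_monro([0.5, 0.5], noisy_gradient, rng, max_iter=2000)
```

For this particular toy objective with β = 1, the iterate d_j is simply the running mean of the samples y′, which makes the averaging effect of the harmonic gains easy to see.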

2.2.2 Sample average approximation

Transformation to design-independent noise

The central idea of SAA is to reduce the stochastic optimization problem to a deterministic

problem, by fixing the noise throughout the entire optimization process. In practice, if the

noise y is design-dependent, it is first transformed to a design-independent random variable

by effectively moving all the design dependence into the function ĥ. (An example of this

transformation is given in Section 2.4.) The noise variables at different d then share a

common distribution, and a common set of realizations is employed at all values of d.

Such a transformation is always possible in practice, since the random numbers in any

computation are fundamentally generated from uniform random (more precisely, pseudorandom) numbers. Thus one can always transform y back into these uniform random variables,

which are of course independent of d.³ For the remainder of this section (Section 2.2.2) we

shall, without loss of generality, assume that y has been transformed to a random variable

w that is independent of d and, with a slight abuse of notation, continue to write ĥ(d, w).
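
A minimal Python sketch of such a transformation follows, assuming an additive Gaussian noise model y = G(θ, d) + σε. The forward model `forward_model`, the value of σ, and the choice w = (θ, ε) are hypothetical and serve only to show how the design dependence moves out of the random variables and into a deterministic mapping.

```python
import random


def forward_model(theta, d):
    """Hypothetical forward model G(theta, d); any deterministic function would do."""
    return theta * d ** 2


def observation_from_noise(d, w, sigma=0.1):
    """Map design-independent noise w = (theta, eps) to the design-dependent observation y."""
    theta, eps = w
    return forward_model(theta, d) + sigma * eps


rng = random.Random(42)
# Draw the design-independent variables once: theta ~ N(0, 1) (assumed prior), eps ~ N(0, 1).
w_samples = [(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(5)]

# The same realizations w are reused at every design; only the mapping changes with d.
y_at_d1 = [observation_from_noise(0.5, w) for w in w_samples]
y_at_d2 = [observation_from_noise(0.8, w) for w in w_samples]
```

Because `w_samples` is drawn without reference to d, any function of (d, w) built on top of it can be evaluated at different designs with identical noise realizations.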

Reduction to a deterministic problem

SAA approximates the optimization problem of Equation 2.5 with

\[
\hat{d}_s = \operatorname*{argmin}_{d \in \mathcal{D}} \left\{ \hat{h}_N(d, w_s) \equiv \frac{1}{N} \sum_{i=1}^{N} \hat{h}(d, w_i) \right\}, \tag{2.8}
\]

³ One does not need to go all the way to the uniform random variables; any higher-level “transformed”

random variables, as long as they remain independent of d, suffice.

where d̂_s and ĥ_N(d̂_s, w_s) are the optimal design and objective values under a particular set

of N realizations of the random variable w, w_s ≡ {w_i}_{i=1}^{N}. The same set of realizations is

used for different values of d during the optimization process, thus making the minimization

problem in Equation 2.8 deterministic. (One can view this approach as an application of

common random numbers.) A deterministic optimization algorithm can then be chosen to

find dˆs as an approximation to d∗ .
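
The reduction to a deterministic problem can be illustrated with a short Python sketch. It assumes a hypothetical scalar noisy objective ĥ(d, w) = (d − 0.4 − 0.3w)² with w ~ N(0, 1), and uses a golden-section search on [0, 1] purely as a stand-in deterministic optimizer; neither is taken from the thesis.

```python
import random


def h_hat(d, w):
    """Hypothetical noisy objective; its mean over w ~ N(0, 1) is (d - 0.4)^2 + 0.09."""
    return (d - 0.4 - 0.3 * w) ** 2


def make_saa_objective(w_s):
    """Freeze the realizations w_s so that h_hat_N(d, w_s) is a deterministic function of d."""
    def h_hat_N(d):
        return sum(h_hat(d, w) for w in w_s) / len(w_s)
    return h_hat_N


def golden_section_minimize(f, lo, hi, tol=1e-6):
    """Simple deterministic 1-D minimizer on [lo, hi], used here as a stand-in optimizer."""
    inv_phi = (5 ** 0.5 - 1) / 2
    a, b = lo, hi
    while b - a > tol:
        c = b - inv_phi * (b - a)
        e = a + inv_phi * (b - a)
        if f(c) < f(e):
            b = e   # minimum lies in [a, e]
        else:
            a = c   # minimum lies in [c, b]
    return 0.5 * (a + b)


rng = random.Random(7)
w_s = [rng.gauss(0.0, 1.0) for _ in range(200)]   # N = 200 fixed realizations
objective = make_saa_objective(w_s)
d_hat = golden_section_minimize(objective, 0.0, 1.0)
```

Since `w_s` is fixed, repeated calls to `objective` at the same d return the same value, so any deterministic optimizer can be applied; the resulting `d_hat` minimizes the sample average rather than the true h(d).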

Estimates of h(d̂_s) can be improved by using ĥ_N′(d̂_s, w_s′) instead of ĥ_N(d̂_s, w_s), where

ĥ_N′(d̂_s, w_s′) is computed from a larger set of realizations w_s′ ≡ {w_m}_{m=1}^{N′} with N′ > N,

in order to attain a lower variance. Finally, multiple (say R) optimization runs are often

performed to obtain a sampling distribution for the optimal design values and the optimal

objective values, i.e., dˆrs and ĥN (dˆrs , wsr ), for r = 1 . . . R. The sets wsr are independently

chosen for each optimization run, but remain fixed within each run. Under certain assumptions on the objective function and the design space, the optimal design and objective

estimates in SAA generally converge to their respective true values in distribution at a rate

of 1/√N [149, 97].⁴

For the solution of a particular deterministic problem dˆrs , stochastic bounds on the true

optimal value can be constructed by estimating the optimality gap h(dˆrs ) − h(d∗ ) [127, 111].

The first term can simply be approximated using the unbiased estimator ĥN ′ (dˆrs , wsr′ ) since

E_{w_s′}[ĥ_N′(d̂_s^r, w_s′)] = h(d̂_s^r). The second term may be estimated using the average of the

approximate optimal objective values across the R replicate optimization runs (based on

wsr , rather than wsr′ ):

\[
\bar{h}_N = \frac{1}{R} \sum_{r=1}^{R} \hat{h}_N(\hat{d}_s^r, w_s^r). \tag{2.9}
\]

This is a negatively biased estimator and hence a stochastic lower bound on h(d∗) [127,

111, 151].⁵,⁶ The difference ĥ_N′(d̂_s^r, w_s′^r) − h̄_N is thus a stochastic upper bound on the true

optimality gap h(d̂_s^r) − h(d∗). The variance of this optimality gap estimator can be derived

from the Monte Carlo standard error formula [3]. One could then use the optimality gap

estimator and its variance to decide whether more runs are required, or which approximate

optimal designs are most trustworthy.

⁴ More precise properties of these asymptotic distributions depend on properties of the objective and

the set of optimal solutions to the true problem. For instance, in the case of a singleton optimum d∗,

the SAA estimates ĥ_N(d̂_s, ·) converge to a Gaussian with variance Var_w[ĥ(d∗, w)]/N. Faster convergence

to the optimal objective value may be obtained when the objective satisfies stronger regularity conditions.

The SAA solutions d̂_s are not in general asymptotically normal, however. Furthermore, discrete probability

distributions lead to entirely different asymptotics of the optimal solutions.

Pseudo-code for the SAA method is presented in Algorithm 1. At this point, we have

reduced the stochastic optimization problem to a series of deterministic optimization problems; a suitable deterministic optimization algorithm is still needed to solve them.

Algorithm 1: Pseudo-code for SAA.

    Set optimality gap tolerance η and number of replicate optimization runs R;
    r = 1;
    while optimality gap estimate > η and r ≤ R do
        Sample the set w_s^r = {w_i^r}_{i=1}^{N};
        Perform a deterministic optimization run and find d̂_s^r (see Algorithm 2);
        Sample the larger set w_s′^r = {w_m^r}_{m=1}^{N′} where N′ > N;
        Compute ĥ_N′(d̂_s^r, w_s′^r) = (1/N′) Σ_{m=1}^{N′} ĥ(d̂_s^r, w_m^r);
        Estimate the optimality gap and its variance;
        r = r + 1;
    end
    Output the sets {d̂_s^r}_{r=1}^{R} and {ĥ_N′(d̂_s^r, w_s′^r)}_{r=1}^{R} for post-processing;
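
The loop of Algorithm 1 can be sketched in Python as follows. This is an illustration under several assumptions, not the thesis implementation: the noisy objective `h_hat` is a hypothetical scalar toy problem, a golden-section search stands in for the deterministic optimizer of Algorithm 2, and the variance of the optimality gap estimate is omitted for brevity.

```python
import random


def h_hat(d, w):
    """Hypothetical noisy objective; E_w[h_hat(d, w)] = (d - 0.4)^2 + 0.09 for w ~ N(0, 1)."""
    return (d - 0.4 - 0.3 * w) ** 2


def sample_average(d, ws):
    """Sample average of h_hat(d, w) over the fixed realizations ws."""
    return sum(h_hat(d, w) for w in ws) / len(ws)


def minimize_1d(f, lo=0.0, hi=1.0, tol=1e-6):
    """Golden-section search on [lo, hi]; a stand-in for the deterministic optimizer."""
    inv_phi = (5 ** 0.5 - 1) / 2
    a, b = lo, hi
    while b - a > tol:
        c = b - inv_phi * (b - a)
        e = a + inv_phi * (b - a)
        if f(c) < f(e):
            b = e
        else:
            a = c
    return 0.5 * (a + b)


def saa(n, n_prime, max_runs, gap_tol, rng):
    """Replicated SAA runs with the optimality gap estimate h_hat_N' - h_bar_N."""
    designs, h_small, h_large = [], [], []
    gap = float("inf")
    r = 1
    while gap > gap_tol and r <= max_runs:
        w_s = [rng.gauss(0.0, 1.0) for _ in range(n)]            # fixed within this run
        d_r = minimize_1d(lambda d: sample_average(d, w_s))      # deterministic problem
        w_s_prime = [rng.gauss(0.0, 1.0) for _ in range(n_prime)]
        designs.append(d_r)
        h_small.append(sample_average(d_r, w_s))        # h_hat_N(d_r, w_s^r)
        h_large.append(sample_average(d_r, w_s_prime))  # h_hat_N'(d_r, w_s'^r)
        h_bar = sum(h_small) / len(h_small)             # Equation 2.9 over the runs so far
        gap = h_large[-1] - h_bar                       # stochastic upper bound on the gap
        r += 1
    return designs, h_large, gap


rng = random.Random(3)
designs, h_large, gap = saa(n=200, n_prime=2000, max_runs=10, gap_tol=0.0, rng=rng)
```

Because the gap estimate is itself noisy, it can fall below the tolerance (or even below zero) after only a few runs, so the number of replicates actually performed may be smaller than `max_runs`.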

Broyden-Fletcher-Goldfarb-Shanno method

The BFGS method [126] is a gradient-based method for solving deterministic nonlinear

optimization problems, widely used for its robustness, ease of implementation, and efficiency.

It is a quasi-Newton method, iteratively updating an approximation to the (inverse) Hessian

matrix from objective and gradient evaluations at each stage. Pseudo-code for the BFGS

method is given in Algorithm 2. In the present implementation, a simple backtracking

line search is used to find a step size that satisfies the first (Armijo) Wolfe condition only.

The algorithm can be terminated according to many commonly used criteria: for example,

when the gradient stalls, the line search step size falls below a prescribed tolerance, the

⁵ Short proof from [151]: For any d ∈ D, we have that E_{w_s}[ĥ_N(d, w_s)] = h(d), and that ĥ_N(d, w_s^r) ≥

min_{d′∈D} ĥ_N(d′, w_s^r). Then h(d) = E_{w_s}[ĥ_N(d, w_s)] ≥ E_{w_s}[min_{d′∈D} ĥ_N(d′, w_s)] = E_{w_s}[ĥ_N(d̂_s^r, w_s)] =

E_{w_s}[h̄_N].

6