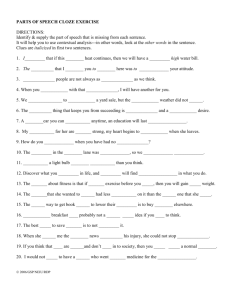

Extracting Rich Event Structure from Text: Models and Evaluations
Evaluations and More


Nate Chambers
US Naval Academy

Experiments
1. Schema Quality – Did we learn valid schemas/frames?
2. Schema Extraction – Do the learned schemas prove useful?

Experiments
1. Schema Quality
   – Human judgments
   – Comparison to other knowledgebases
2. Schema Extraction
   – Narrative Cloze
   – MUC-4
   – TAC
   – Summarization

Schema Quality: Humans
“Generating Coherent Event Schemas at Scale” – Balasubramanian et al., 2013
Relation coherence:
1) Are the relations in a schema valid?
2) Do the relations belong to the schema topic?
Actor coherence:
3) Do the actors have a useful role within the schema?
4) What fraction of instances fit the role?

Schema Quality: Humans
Amazon Turk Experiment: Relation Coherence
1. Ground the arguments with a single entity.
   – Randomly sample the head word for each argument, weighted by frequency.
2. Present the schema as a grounded list of tuples.
Grounded Schema:
   Carey veto legislation
   Legislation be sign by Carey
   Legislation be pass by State Senate
   Carey sign into law
   …

Schema Quality: Humans
Amazon Turk Questions: Relation Coherence
1. Is each of the grounded tuples valid (i.e., meaningful in the real world)?
2. Do the majority of relations form a coherent topic?
3. Does each tuple belong to the common topic?
* Turkers told to ignore grammar
* Five annotators per schema

Schema Quality: Humans
Actor Coherence
1. Ground ONE argument with a single entity.
2. Show the top 5 head words for the second argument.
Grounded Schema:
   Carey veto legislation, bill, law, measure
   Legislation be sign by Carey, John, Chavez, She
   Legislation be pass by State Senate, Assembly, House, …
   Carey sign into law …
“Do the actors represent a coherent set of arguments?”
(A yes/no question? It is unclear what answers were allowed.)
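The two Turk setups above can be sketched in a few lines of Python. This is a rough illustration, not Balasubramanian et al.'s actual procedure, and everything concrete in it is an assumption: the schema is represented as hypothetical (arg1 role, relation, arg2 role) triples plus a `Counter` of head words per role, the function names `ground_schema` and `actor_view` are made up, and the counts in the toy example are invented. The slides do not say how the single entity for actor coherence is chosen, so the sketch simply uses the role's most frequent head word.

```python
import random
from collections import Counter


def ground_schema(relations, role_heads, rng=random):
    """Relation coherence (sketch): ground each role with ONE head word,
    sampled in proportion to its frequency, and reuse that word in every
    tuple of the schema."""
    grounding = {}
    for role, counts in role_heads.items():
        words = list(counts)
        weights = [counts[w] for w in words]
        grounding[role] = rng.choices(words, weights=weights, k=1)[0]
    return [(grounding[a1], rel, grounding[a2]) for a1, rel, a2 in relations]


def actor_view(relations, role_heads, k=5):
    """Actor coherence (sketch): ground the first argument with a single
    entity (assumption: the role's most frequent head word) and list the
    top-k head words for the second argument."""
    rows = []
    for a1, rel, a2 in relations:
        entity = role_heads[a1].most_common(1)[0][0]
        top_k = [word for word, _ in role_heads[a2].most_common(k)]
        rows.append((entity, rel, top_k))
    return rows


# Hypothetical toy schema loosely modeled on the slides; counts are made up.
relations = [
    ("GOV", "veto", "BILL"),
    ("BILL", "be sign by", "GOV"),
    ("BILL", "be pass by", "BODY"),
]
role_heads = {
    "GOV": Counter({"Carey": 5, "John": 2, "Chavez": 1}),
    "BILL": Counter({"legislation": 6, "bill": 3, "law": 2, "measure": 1}),
    "BODY": Counter({"State Senate": 4, "Assembly": 2, "House": 1}),
}

# Relation-coherence view: one grounded tuple per line.
for tup in ground_schema(relations, role_heads, random.Random(0)):
    print(*tup)

# Actor-coherence view: grounded first argument plus top-k alternatives.
for entity, rel, heads in actor_view(relations, role_heads):
    print(entity, rel, ", ".join(heads))
```

Sampling once per role, rather than once per tuple, is what keeps the grounded list reading as a single situation (the same entity vetoes and signs), which matches the "single entity" instruction on the slide.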
Results

Schema Quality: Knowledgebases
• FrameNet events and roles
• MUC-3 templates
Chambers and Jurafsky, 2009

FrameNet (Baker et al., 1998)

Comparison to FrameNet
• Narrative Schemas
   – Focus on events that occur together in a narrative.
   – Schemas represent larger situations.
• FrameNet (Baker et al., 1998)
   – Focuses on events that share core roles.
   – Frames typically represent single events.

Comparison to FrameNet
1. How similar are schemas to frames?
   – Find the “best” FrameNet frame by event overlap.
2. How similar are schema roles to frame elements?
   – Evaluate argument types as FrameNet frame elements.

FrameNet Schema Similarity
1. How many schemas map to frames?
   – 13 of 20 schemas mapped to a frame
   – 26 of 78 (33%) verbs are not in FrameNet
2. Verbs present in FrameNet
   – 35 of 52 (67%) matched the frame
   – 17 of 52 (33%) did not match

FrameNet Schema Similarity
• Why were 33% unaligned?
   – FrameNet represents subevents as separate frames.
   – Schemas model sequences of events.
One schema: trade, rise, fall
Two FrameNet frames: Exchange; Change Position on a Scale

FrameNet Argument Similarity
2. Argument role mapping to frame elements
   – 72% of arguments appropriate as frame elements
FrameNet frame: Enforcing
Frame element: Rule
law, ban, rule, constitutionality, conviction, ruling, lawmaker, tax
INCORRECT

FrameNet to MUC?
• FrameNet represents more atomic events, not larger scenarios.
• Do we have a resource with larger scenarios?
   – Not really
   – MUC-4?

Schema Quality
MUC-4 templates: 1. Attack 2. Bombing 3. Kidnapping 4. Arson
Slots: Perp, Victim, Target, Instrument, Location, Time
Recall: 71%

MUC-4 Issues
• MUC-4 is a very limited domain
• 6 template types
• No good way to evaluate the learned knowledge except through the extraction task.
   – PROBLEM: You can do extraction without learning an event representation.

Can we label more MUC-style data?
• Extremely time-consuming
• Still domain-dependent
One possibility: crowd-sourcing
• Regneri et al. (2010)
   – Used Turk for 22 scenarios
   – Asked Turkers to list the events of each scenario in order

Regneri Example

Experiments
1. Schema Quality
   – Human judgments
   – Comparison to other knowledgebases
2. Schema Extraction
   – Narrative Cloze
   – MUC-4
   – TAC
   – Turkers

Cloze Evaluation
Taylor, Wilson. Cloze Procedure: a new tool for measuring readability. Journalism Quarterly. 1953.
• Predict the missing event, given a set of observed events.
Text:
   McCann threw two interceptions early…
   Toledo pulled McCann aside and told him he’d start…
   McCann quickly completed his first two passes…
Gold events: X threw, pulled X, told X, X start, X completed
Cloze test: X threw, pulled X, told X, X ?????, X completed

Narrative Cloze Results
36.5% improvement

Narrative Cloze Evaluation
What was the original goal of this evaluation?
1. “comparative measure to evaluate narrative knowledge”
2. “never meant to be solvable by humans”
Do you need narrative schemas to perform well?
As with all things in NLP, the community optimized for evaluation performance rather than the big-picture goal.

Narrative Cloze Evaluation
Jans et al. (2012)
Use the text-order information in a cloze evaluation. The test is no longer a bag of events that occurred but a specific order, and you know where in that order the missing event occurred in the text.
This has developed into events as language models:
P(x | previousEvent) * P(nextEvent | x)

Narrative Cloze Evaluation
Two Major Changes
• The cloze includes the text order.
• Cloze tests are auto-generated from parsers and coreference systems. The event chains aren’t manually verified as gold (as they were in the original Narrative Cloze).
Jans et al. (2012); Pichotta and Mooney (2014); Rudinger et al. (2015)

Narrative Cloze Evaluation
Language Modeling with Jans et al. (2012)
• Event: (verb, dependency)
• Pointwise Mutual Information between events with coreferring arguments (Chambers and Jurafsky, 2009)
• Event bigrams, in text order
• Event bigrams with one intervening event (skip-grams)
• Event bigrams with two intervening events (skip-grams)
• Varied which coreference chains they trained on: all chains, a subset, or just the single longest event chain.

Narrative Cloze Evaluation
Language Modeling with Jans et al. (2012)
• Introduced the scoring metric Recall@N
   – The proportion of cloze tests in which the system guesses the missing event in the top N of its ranked list.
• PMI events scored worse than the bigram/skip-gram approaches.
• Skip-grams outperformed vanilla bigrams; 2-skip-grams and 1-skip-grams performed similarly.
• Training on a subset of chains (the long ones) performed best.

Narrative Cloze Evaluation
Pichotta and Mooney (2014)
• Extended and reproduced much of Jans et al. (2012)
• Main contribution: multi-argument bigrams
Cloze evaluation:
   Single-argument events: arrested _Y_, convicted _Y_
   Multi-argument events: _X_ arrested _Y_, _Z_ convicted _Y_
• Fun finding: multi-argument bigrams improve performance in single-argument cloze tests
• Not so fun: unigrams are an extremely high baseline

Narrative Cloze Evaluation
Rudinger et al. (2015)
• Reproduced Jans et al. skip-grams and Pichotta/Mooney unigrams
• Contribution: log-bilinear language model (Mnih and Hinton, 2007)
• Single-argument events, not multi-argument: arrested _Y_, convicted _Y_

Narrative Cloze Evaluation
Rudinger et al. (2015)
• Main finding: unigrams are essentially as good as the bigram models (confirming Pichotta and Mooney)
• Main finding: the log-bilinear language model reaches ~36% recall in the top 10 ranking, compared to ~30% with bigrams

Narrative Cloze Evaluation
Remaining Observations
1. Language modeling is better than PMI on the Narrative Cloze.
2. PMI and other learners appear to learn attractive representations that LMs do not.
Remaining Questions
1. Does this mean the Narrative Cloze is useless?
   • Do we care about predicting “X said”?
2. Should text order be part of the test?
   • Originally, it was not.
   • Real-world order is what we care about.
3. Perhaps it is one of a bag of evaluations…

IE as an Evaluation
• MUC-4
• TAC

MUC-4 Extraction
MUC-4 corpus, as before
Experiment Setup:
• Train on all 1700 documents
• Evaluate the inferred labels in the 200 test documents

Evaluations
1. Flat Mapping
2. Schema Mapping
Mapping choice leads to very different extraction performance.

Evaluations
1. Flat Mapping
   – Map each learned slot to any MUC-4 slot
(Diagram: learned Schemas 1 to 3, each with Roles 1 to 4, alongside the Bombing and Arson templates, each with Perpetrator, Victim, Target, and Instrument slots.)

Evaluations
2. Schema Mapping
   – Slots bound to a single MUC-4 template
(Diagram: the same learned schemas and MUC-4 templates.)

MUC-4 Evaluations
• Cheung et al. (2013)
   – Learned schemas
   – Flat mapping
• Chambers (2013)
   – Learned schemas
   – Flat and schema mapping
• Nguyen et al. (2015)
   – Learned a bag of slots, not schemas
   – Flat mapping (unable to do schema mapping)

Evaluations
1. Flat Mapping
   – Didn’t learn schema structure
(Diagram: a flat set of Roles 1 to 12 alongside the Bombing and Arson templates, each with Perpetrator, Victim, Target, and Instrument slots.)

MUC-4 Evaluation
Optimizing to the Evaluation
1. Latest efforts appear to be optimizing to the evaluation again.
2. Don’t evaluate with structure, so don’t learn structure (this gives higher evaluation results).
   – Similar to Narrative Cloze.
   – The best rankings occur with a model that doesn’t learn good sets of events.
3. But if the goal is learning rich event structure, perhaps the flat mapping is inappropriate?
   – But if we extract better with it, why does it matter?

MUC-4 Evaluation
A way forward?
1. Yes, perform the MUC-4 extraction task.
2. Also compare to the knowledgebase of templates.
This prevents a specialized extractor from “winning” if it does not represent any useful knowledge beyond the task. It also keeps a cute way of learning event knowledge that has no practical utility from winning.

TAC 2010
Cheung et al. (2013)
TAC 2010 Guided Summarization
• Write a 100-word summary for 10 newswire articles.
• Documents come from the AQUAINT datasets.
• http://nist.gov/tac/2010/Summarization/GuidedSumm.2010.guidelines.html
• KEY: each topic comes with a “topic statement”, essentially an event template.

TAC 2010
Example TAC Template: Accidents and Natural Disasters
WHAT: what happened
WHEN: date, time, other temporal placement markers
WHERE: physical location
WHY: reasons for accident/disaster
WHO_AFFECTED: casualties (death, injury), or individuals otherwise negatively affected by the accident/disaster
DAMAGES: damages caused by the accident/disaster
COUNTERMEASURES: countermeasures, rescue efforts, prevention efforts, other reactions to the accident/disaster

TAC 2010
Example TAC Summary Text
(WHEN During the night of July 17,) (WHAT a 23-foot <WHAT tsunami) hit the north coast of Papua New Guinea (PNG)>, (WHY triggered by a 7.0 undersea earthquake in the area).
You can map this data to a MUC-style evaluation.
BENEFIT: another domain beyond the niche MUC-4 domain

Summary of Evaluations
• Chambers and Jurafsky (2008) – Narrative cloze and FrameNet
• Regneri et al. (2010) – Turkers
• Chambers and Jurafsky (2011) – MUC-4
• Chen et al. (2011) – Custom annotation of docs for relations
• Jans et al. (2012) – Narrative Cloze
• Cheung et al. (2013) – MUC-4, TAC-2010 Summarization
• Chambers (2013) – MUC-4
• Bamman et al. (2013) – Learned actor roles, gold movie clusters
• Balasubramanian et al. (2013) – Turkers
• Pichotta and Mooney (2014) – Narrative Cloze
• Rudinger et al. (2015) – Narrative Cloze
• Nguyen et al. (2015) – MUC-4

References
Niranjan Balasubramanian, Stephen Soderland, Mausam, and Oren Etzioni. Generating Coherent Event Schemas at Scale. EMNLP 2013.
David Bamman, Brendan O’Connor, and Noah Smith. Learning Latent Personas of Film Characters. ACL 2013.
Nathanael Chambers. Event Schema Induction with a Probabilistic Entity-Driven Model. EMNLP 2013.
Nathanael Chambers and Dan Jurafsky. Template-Based Information Extraction without the Templates. ACL 2011.
Harr Chen, Edward Benson, Tahira Naseem, and Regina Barzilay. In-domain Relation Discovery with Meta-constraints via Posterior Regularization. ACL 2011.
Jackie Cheung, Hoifung Poon, and Lucy Vanderwende. Probabilistic Frame Induction. ACL 2013.
Bram Jans, Ivan Vulic, and Marie Francine Moens. Skip N-grams and Ranking Functions for Predicting Script Events. EACL 2012.
Kiem-Hieu Nguyen, Xavier Tannier, Olivier Ferret, and Romaric Besançon. Generative Event Schema Induction with Entity Disambiguation. ACL 2015.
Karl Pichotta and Raymond J. Mooney. Statistical Script Learning with Multi-Argument Events. EACL 2014.
Michaela Regneri, Alexander Koller, and Manfred Pinkal. Learning Script Knowledge with Web Experiments.
Rachel Rudinger, Pushpendre Rastogi, Francis Ferraro, and Benjamin Van Durme. Script Induction as Language Modeling. EMNLP 2015.