Document 11074825

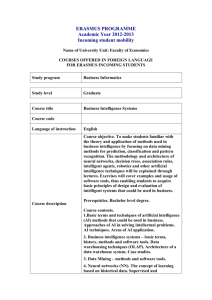
