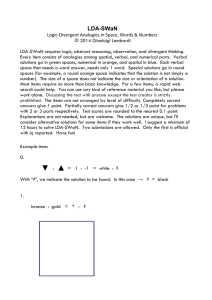

Recommendations, Credits and Discounts: by Boris Maciejovsky
