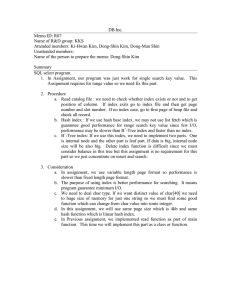

Optimizing Live Virtual Machine Migrations using Content-based
