2012 Research Digest 2012 Highlights

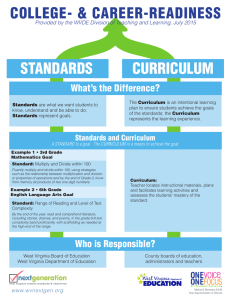


Office of Research

Brief Reviews of the Research Literature
Research and Evaluation Studies
Our Staff
Milestones

During 2012, the Office of Research continued to evolve as an independent agency within the West Virginia Department of Education, strengthening our ability to provide objective and rigorous data collection and reporting. Here are some of our accomplishments for the year.

Creation of an Institutional Review Board (IRB). Now that the Department has brought most of its research and evaluation projects in-house, we can no longer rely on external IRBs to provide oversight for the protection of human research participants. So we got to work forming a board, writing guidelines, training our research staff and others on the board, developing forms and recordkeeping procedures, and performing our first research application reviews. When we began these activities, our focus was primarily on making sure we were in compliance with federal guidelines. As we went along, we developed an even stronger respect for the process of thinking through and implementing protections for the people we study in such a structured and formal way.

Consolidation of our team. The Office of Research was established as a separate entity as recently as 2010. Under the leadership of Larry White, we finished staffing our office in 2012. Collectively, our office has a full set of the specialized skills needed to provide focused reviews of the research literature; conduct needs assessments; analyze demographic, student achievement, and other data to report on trends; and provide informative and actionable program evaluations. We aim to be a high-impact and objective research and evaluation resource for West Virginia’s educational system.

Refinement of our methods and procedures.
In 2012 we put together a powerful set of tools and processes that guide how we
• plan our research—by using logic models, client collaboration, reviews of the research literature, formal evaluation plans, and internal reviews;
• manage our projects—by using electronic project tracking and management tools;
• collect data—by using efficient online survey tools, representative sampling, validated instruments, existing data sources, and structured interviews;
• analyze data—by using an array of statistical models and interpretative techniques; and
• communicate findings and recommendations—by developing high-quality reports and research briefs through a rigorous writing and review process, and disseminating them through multiple channels (online, in print, and in presentations) to their intended audiences.

Development of a procedure for creating research and data disclosure agreements for external research projects. We worked with the Office of Information Systems and the Office of Legal Services to develop a two-step process that enables the WVDE to safely and efficiently enter into agreements with external research organizations and individuals to conduct research on behalf of the Department. In the first step, the external researcher completes a Research Proposal Application, which the Office of Research reviews to determine the scientific merit of the proposal and its usefulness to the WVDE. In the second step, once the proposal is approved, the Office of Information Systems develops a legally binding data disclosure agreement with the researcher to ensure that any personally identifiable information provided is protected. Data are then released to the researcher for analysis.

Along the way, we also engaged in more than 30 projects, 16 of which culminated in findings and reports by the end of 2012 and are described in this Research Digest. We also produced two brief reviews of the research literature, also described here.
All reports and reviews are available on our website at http://wvde.state.wv.us/research. We look forward to our work with students, educators, communities, and Department staff in 2013, and we invite your comments about how we can better serve you and the students of West Virginia.

Nate Hixson, Assistant Director

Brief Reviews of the Research Literature

Effects of Disability Labels on Students with Exceptionalities
Patricia Cahape Hammer, 2012

Researchers have studied the effects of disability labels on students from several angles, including labels as a basis of stigma and lower teacher expectations and the mitigating effects that labels can sometimes have—especially for students with dyslexia and high-functioning autism. Other research has looked at the behavior of students with disabilities to see how it may affect both teacher and peer expectations and relationships. Lastly, researchers point out the need to address issues of school culture, especially stigma and mistreatment of students with disabilities by their nondisabled peers.

This brief research review was originally published as part of a larger study, West Virginia Alternate Identification and Reporting Program: An Exploratory Analysis by Yetty A. Shobo, Anduamlak Meharie, Patricia Cahape Hammer, and Nate Hixson, available at http://wvde.state.wv.us/research/reports2012/EvaluationofAIR2011Final062812.pdf.

Response to Intervention: An Introduction
Patricia Cahape Hammer, 2012

This review provides an introduction to response to intervention (RTI), including how it is defined, reasons for its growing popularity, an introduction to an emerging body of research, a brief discussion of what it all means, and suggestions about directions for future research.

This brief research review was originally published as part of a larger study, West Virginia Alternate Identification and Reporting Program: An Exploratory Analysis by Yetty A. Shobo, Anduamlak Meharie, Patricia Cahape Hammer, and Nate Hixson, available at http://wvde.state.wv.us/research/reports2012/EvaluationofAIR2011Final062812.pdf.

Research and Evaluation Studies

The West Virginia School Climate Index: A Measure of School Engagement, Safety, and Environment
Andy Whisman, January 2012

The WV School Climate Index is a multidimensional measure developed by the WVDE Office of Research and Office of Healthy Schools as part of the Safe and Supportive Schools grant program (S3). The index was developed in alignment with a model put forth by the U.S. Department of Education, Office of Safe and Drug Free Schools (OSDFS). The OSDFS based its model on a synthesis of available research and expert and stakeholder opinion, which points to school climate as consisting of three primary domains, each with corresponding subdomains, as follows:
• Engagement - the quality of relationships, including respect for diversity, among students, staff, and families; the level of school participation and involvement by families, staff, and students in school activities; and efforts by schools to connect with the larger community.
• Safety - the physical and emotional security of the school setting and school-related activities as perceived, experienced, and created by students, staff, families, and the community. The use and trade of illicit substances in the school setting and during school-related activities also is included in this domain.
• Environment - the physical and mental health supports available that promote student wellness, the physical condition of school facilities, the academic environment, and the disciplinary tone of the school—i.e., the fairness and adequacy of disciplinary procedures.
The WV School Climate Index—derived from 20 indicators drawing from student and staff survey data and selected discipline incident data reported in the West Virginia Education Information System (WVEIS)—provides (a) an overall measure of school climate that measures all domains and subdomains in the OSDFS model; (b) a straightforward, easily understood scale that can be readily interpreted by those working to improve school climate; and (c) information about component parts of the index to help schools rapidly identify areas for programmatic interventions. The measure is a requirement of the S3 program, which awarded WVDE a grant in 2010. To date, the WV School Climate Index has been deployed in 42 high schools in 18 counties that are part of West Virginia’s S3 grant program. This Office of Research publication fulfills West Virginia’s obligation to publicly document the composition of the index.

The West Virginia Special Education Technology Integration Specialist Program: Examining Reported Expected and Actual Use by School Administrators Report
Yetty A. Shobo and Nate Hixson, February 2012

The WVDE Office of Assessment, Accountability and Research, in collaboration with the Office of Instructional Technology; Office of Special Programs, Extended and Early Learning; and the Office of Career and Technical Instruction, developed an evaluation plan to assist the WVDE in determining the impact of the Technology Integration Specialist (TIS) program on selected TIS applicants, participating schools, teachers, and students. This study, which focuses on the experience of school administrators in terms of both their expected use and their actual use of the SE TIS program during the 2010-2011 school year, was conducted as one component of that plan.
It is the first examination of administrator perceptions about the program in its 6-year history.

The West Virginia Special Education Technology Integration Specialist Program: Examining Reported Expected and Actual Use by Teachers Report
Yetty A. Shobo and Nate Hixson, February 2012

The West Virginia Department of Education (WVDE) Office of Assessment, Accountability and Research, in collaboration with the Office of Instructional Technology; Office of Special Programs, Extended and Early Learning; and the Office of Career and Technical Instruction, developed an evaluation plan to assist the WVDE in determining the impact of the Technology Integration Specialist (TIS) program on selected TIS applicants, participating schools, teachers, and students. This study focuses on how other teachers with whom an SE TIS has cotaught or had some degree of influence perceive the program, and how having an SE TIS in their school has changed their technology use in their teaching. It was conducted during the 2010-2011 school year as one component of that plan, and is the first examination of teacher perceptions about the program in its 6-year history.

The West Virginia School Climate Index: Validity and Associations With Academic Outcome Measures
Andy Whisman, April 2012

The term school climate refers to the character and quality of school life. The validity of a new index designed to measure school climate—the WV School Climate Index—was tested in this study, and it was used to show the impact of school climate in West Virginia schools.

Validity of the index. The Index was developed in alignment with a model for school climate measurement put forth by the U.S. Department of Education, Office of Safe and Healthy Students. The Index was tested based on the assumptions that a valid measure should (a) differentiate between favorable and unfavorable climate conditions and (b) based on other research, be correlated with and predictive of academic outcomes.
Evidence of the Index’s ability to differentiate climate conditions was provided by School Climate Specialists working in intervention schools, who reported that the Index reflected conditions they had observed. Further, statistically significant differences in Index scores were found between intervention and nonintervention schools. The Index also was shown to correlate at moderate to moderately strong levels with school-level proficiency rates in four content areas and median growth percentiles for mathematics and reading/language arts (RLA)—accounting for noteworthy proportions of variation in these measures.

Demonstrated relationship of school climate with student outcomes. Factors such as high poverty rates, large proportions of students with disabilities, larger school size, and certain grade-span configurations of schools are associated with poorer academic outcomes. Even when these conditions were present, this study showed the positive effect of school climate remained strong for four of six academic outcome measures tested. School climate was the most influential predictor in the social studies proficiency and mathematics growth percentile regression models, and was the second and third most influential predictor for RLA proficiency and growth percentile. Further, the study showed positive school climate substantially moderated the effect of poverty as well as the other factors included in the model. For social studies proficiency and mathematics growth percentile, the effects of poverty were entirely moderated by school climate. With all measures considered together, positive school climate lessened the cumulative negative impact of poverty, disability rate, school size, and grade-span configuration by between 6% and 100%.

Conclusions.
Schools have virtually no control of the demographic characteristics of the students and communities they serve, and decisions about school size and grade-span configuration reside at much higher political and administrative levels. The results reported in this study suggest that by addressing a factor that is within their sphere of influence—improving school climate—schools may substantially diminish the unfavorable effects of matters over which they have little control. Accordingly, schools should focus their improvement efforts on the needs of their students and staff as they relate to school climate. The WV School Climate Index can help schools identify areas of needed improvement and measure their progress.

Educator Evaluation Pilot Project: Results from a Midyear Survey of Teachers in All Participating Schools
Anduamlak Meharie, April 2012

This is an interim, midyear report of a more comprehensive evaluation study of the Educator Evaluation Pilot Project. As such, the report is not intended to present summative conclusions about the efficacy or outcomes of the program under study. The purpose of this report is to present stakeholder feedback for program staff to consider as they make implementation decisions.

Method of study. We conducted an online survey of teachers participating in the Pilot Project during January and February of 2012. A total of 421 teachers representing all 25 schools in the project responded, for a response rate of 55%. At the 95% confidence level, this gives a margin of error of ±3.2% for treating the survey results as representative of the larger population.

Findings. Large majorities of respondents indicated they had received adequate training to participate in the new evaluation system, as well as adequate support, and constructive and beneficial feedback from their school administrators.
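The ±3.2% margin of error reported for the teacher survey can be checked with a short calculation. The sketch below is an assumption about how such a figure is typically derived, not the Office of Research's documented method: it uses the normal approximation for a proportion at the most conservative value (p = 0.5), applies a finite population correction, and infers a population of about 765 teachers from the 421 responses and the 55% response rate. The function name and its parameters are illustrative.

```python
import math
from statistics import NormalDist


def margin_of_error(n, population, confidence=0.95, p=0.5):
    """Margin of error for a proportion, with finite population correction.

    Assumes simple random sampling and the normal approximation;
    p = 0.5 gives the widest (most conservative) interval.
    """
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    standard_error = math.sqrt(p * (1 - p) / n)
    # The correction shrinks the error when the sample is a large share
    # of a small population, as it is here.
    fpc = math.sqrt((population - n) / (population - 1))
    return z * standard_error * fpc


respondents = 421
response_rate = 0.55
# Population size is not stated in the digest; it is inferred here.
population = round(respondents / response_rate)

moe = margin_of_error(respondents, population)
print(f"Inferred population: {population}")
print(f"Margin of error: {moe:.1%}")
```

Under these assumptions the result rounds to the reported 3.2%. Without the finite population correction the same inputs give roughly 4.8%, which suggests the reported figure accounts for the respondents making up more than half of the teachers surveyed.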
Of the 158 respondents who encountered technology issues, half (79) indicated the issues had been adequately addressed; the remaining half were still encountering them. The student learning goal process was the system component with the highest reported fidelity of implementation. About two thirds of respondents reported it took them less than 60 minutes to establish student learning goals and identify strategies, measures, and evidence. This system component was also viewed as having contributed the most to respondents’ professional growth. Large majorities indicated that conferences with school administrators to discuss student learning goals and classroom observations had at least a moderate impact on their professional growth. Nearly two thirds or more of respondents indicated that they believe (a) they play an active role in their own evaluation; (b) the new evaluation system promotes professional growth; (c) the new system clarifies what is expected from teachers; and (d) the district/school has provided enough time for them to collaborate with other teachers in their school. Approximately half or more of respondents indicated the belief that the new evaluation system empowers teachers and is fair to all teachers regardless of tenure, role, and other factors.

Limitations of study. Feedback from participants was gathered at the halfway point of the first year of implementation of the pilot project. Therefore, data from this interim evaluation report should not be used to pass judgment on the merit of the system but rather to identify the strengths and weaknesses of the system during the early phases of implementation.

Recommendations.
Project staff should consider (a) identifying teachers in need of training at the beginning of the school year and providing ongoing supplemental training; (b) providing extensive training specifically on the online system to individuals either at the RESA or district level to serve as contact persons for schools; (c) making WVEIS on the Web accessible to educators outside of the school building; (d) making the self-assessment instrument available for the less experienced teachers and encouraging them to use the process for their own purposes; (e) providing onsite technical assistance to provide clarification on the process of setting student learning goals; and (f) elucidating further the process for compiling additional evidence for use during conferences.

ESEA LEA Consolidated Monitoring 2011-2012: Feedback from Subrecipients
Anduamlak Meharie, June 2012

The Consolidated Monitoring Survey was designed by the West Virginia Department of Education (WVDE) Office of Research in consultation with representatives from the WVDE Division of Educator Quality and System Support. The purpose of the survey was to gather feedback regarding the quality, relevance, and usefulness of the federal consolidated monitoring process for improving the WVDE’s efforts to assist districts with school improvement initiatives and to build capacity.

Method of study. The link to an online survey was distributed to district superintendents, federal program directors and coordinators, and school principals following monitoring visits during the course of the 2011-2012 school year.
A total of 35 respondents completed the survey. Data from the survey were tabulated and descriptive statistics were interpreted.

Findings. Overall, feedback from respondents suggests the monitoring process has been highly successful in ensuring that grantees comply with federal requirements, and in aiding LEAs and schools working to bring about county- and school-wide improvement. Monitoring team members were appreciated for their professionalism and level of expertise in helping LEAs and schools overcome obstacles and identify solutions, in a process that respondents characterized as collaborative. Based on the nature and tone of comments from respondents, LEAs view the SEA as a partner in their improvement efforts. Based on survey responses collected later versus earlier in the school year, use of the Electronic Document Storage System seemed to increase, although it remained low.

Limitations of study. Due to the process by which the online survey was distributed, coupled with the need to ensure confidentiality, we were not able to calculate a response rate and confidence level for the results. In other words, without knowledge of the exact size of the population, we cannot be confident that feedback from a sample of 35 respondents is representative of the larger population.

Recommendations. The increase in usage of the Electronic Document Storage System throughout the 2011-2012 school year, although encouraging, is not yet ideal. The intent of the system appears to be a very good one, as it would allow monitoring teams to dedicate more time during on-site visits to conversations with LEA and school staff, which respondents seem to value above time spent reviewing documents. Some respondents expressed the need for training in use of the system.
Respondents’ comments and the fact that near the end of the 2011-2012 school year only a little over a third of respondents indicated having begun using the system suggest that this is an aspect of the monitoring process that program staff can target for improvement—one that can enrich the overall process for all stakeholders.

The West Virginia Alternate Identification and Reporting Program: An Exploratory Analysis
Yetty A. Shobo, Anduamlak Meharie, Patricia Cahape Hammer, and Nate Hixson, June 2012

This study examines the results of the Alternate Identification and Reporting (AIR) program, which promoted the nonuse of disability labels for students receiving special education under the Individuals with Disabilities Education Act (IDEA) in a group of 26 elementary and middle schools in West Virginia. The AIR program was founded on the premise that the determination of a specific disability category and subsequent labeling is not necessary for providing students needed instructional and behavioral services. Instead, staff were to focus on the instructional and behavioral needs of the students.

Method of study. Survey and assessment data were used to examine the results of the AIR program. In response to e-mail invitations, 273 teachers, 20 principals, 12 assistant principals, and 11 psychologists completed online questionnaires. Additionally, an analysis of WESTEST/WESTEST 2 assessment data examined whether students with disabilities attending AIR schools outperformed students with similar disabilities in non-AIR schools in mathematics and reading/language arts.

Findings.
The AIR program made limited progress toward the first of its four goals, to establish and reinforce the commonality of instructional and behavioral needs for students. The AIR program made some progress toward its second goal of transitioning teachers, administrators, and parents toward a model of support that is based on the student’s instructional and behavioral needs and not a defined area of disability. Survey results also suggest that the AIR process made progress in diminishing the burden that a label appears to place on students emotionally, and the associated low expectations. Addressing the third goal, analyses in this study reveal some higher, though not statistically significant, gains in test scores for AIR students in mathematics and reading compared with students with similar disabilities in non-AIR schools. The fourth goal, accomplished with this report, was to contribute to the national dialogue associated with early intervention, response to intervention (RTI), and appropriate instruction and support for students who demonstrate the need for the protections of IDEA.

Limitations of study. Limitations included (a) a lack of direct contact with potential survey participants, resulting in inconsistency across schools regarding who responded to the survey; (b) variation in the length of experience with the AIR program among survey respondents; (c) shortcomings in a survey with multiple choice response options; (d) some confusion about the differences between RTI and AIR; (e) the availability of only posttest responses for educators, precluding the ability to detect changes in attitudes over time; and (f) the exclusion of students too young to take WESTEST 2.

Recommendations.
Although the AIR program did not fully achieve its first three goals, it appears it is well on its way to doing so. Recommendations are to (a) provide more resources and support for school personnel; (b) reduce negative perceptions of the program held by some school personnel; (c) encourage parents’ engagement with the AIR model of support based on needs; (d) improve documentation and aim to increase the fidelity of the AIR program; (e) include general education students as part of the AIR program; (f) focus specifically on school culture in the AIR program; and (g) thoroughly review the literature at the program planning stage.

A Cohort Study of Arts Participation and Academic Performance
Andy Whisman and Nate Hixson, August 2012

In West Virginia, one arts credit is required for graduation. This study examined whether participation in arts instruction beyond the one-credit minimum correlated with improved academic proficiency.

Method of study. We studied a cohort of 14,653 public high school students who stayed at grade level during the four-year period from 2007 through 2010. Arts participation was defined as the total number of credits in all arts disciplines earned during students’ tenure from Grade 9 through Grade 12. We examined the presence and magnitude of associations between arts participation and proficiency (Mastery or above) in mathematics and reading/language arts (RLA) on the WESTEST 2 and for scoring at or above the national average composite score on ACT PLAN. We also calculated the odds of achieving these outcomes relative to the level of arts participation.

Findings. Students who earned two or more arts credits during high school were about 1.3 and 1.6 times more likely to score at proficient levels for mathematics and RLA, respectively.
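Because the method describes calculating the odds of each outcome relative to arts participation, figures such as "1.3 times more likely" can be read as odds ratios. The sketch below shows that calculation for a single two-by-two comparison. The counts are hypothetical and chosen only to make the arithmetic easy to follow; the digest does not report the underlying counts, and the function name is illustrative.

```python
def odds_ratio(exposed_yes, exposed_no, reference_yes, reference_no):
    """Odds of the outcome in the exposed group divided by the odds
    of the outcome in the reference group."""
    exposed_odds = exposed_yes / exposed_no
    reference_odds = reference_yes / reference_no
    return exposed_odds / reference_odds


# Hypothetical counts, NOT taken from the study:
# students with two or more arts credits: 600 proficient, 400 not
# students with one arts credit:          500 proficient, 500 not
ratio = odds_ratio(600, 400, 500, 500)
print(f"Odds ratio: {ratio:.1f}")
```

An odds ratio compares odds rather than probabilities, so with these made-up counts the odds are 1.5 times higher while the proficiency rate itself rises from 50% to 60%. As the study's own limitations note, a ratio of this kind describes an association and does not establish causation.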
Students who earned two or more arts credits also were about 1.5 times more likely to have scored at or above the national average composite score on the ACT PLAN.

Significant associations between arts participation and RLA proficiency held across subgroups of students with and without disabilities and/or economic disadvantage; however, for mathematics, we observed significant associations only for nondisabled students. While few students with disabilities reach proficiency in reading/language arts, they were up to twice as likely to do so if they exceeded the minimum number of arts credits required for graduation.

Compared to earning a single arts credit, for mathematics there was no advantage in earning a second arts credit; however, students were about 1.3 times more likely to reach proficiency when earning three arts credits and 1.6 times more likely when earning four or more. The odds were better for RLA. There was a slight advantage in proficiency for students earning two credits, but the advantage rose to 1.6 and 2.2 times when students earned three, or four or more arts credits, respectively.

While few students reached Above Mastery and Distinguished status, their odds of doing so increased somewhat if they earned additional arts credits. The odds of achieving Mastery in mathematics were only modestly improved (1.3 times greater when students earned two or more arts credits, and 1.5 times greater for achieving Distinguished), but the improvement was slightly larger for reading/language arts, rising from about 1.4 times for Mastery to 2.0 times for Distinguished.

Limitations of study. The highest grade in which WESTEST 2 is administered is 11th grade, yet arts participation was measured through students’ 12th-grade year. Likewise, the students completed ACT PLAN testing in 10th grade, two years prior to their scheduled graduation. Finally, the study was correlational and no claims were made as to causation.

Supplemental Educational Services in the State of West Virginia: Evaluation Report for 2011-2012
Anduamlak Meharie, August 2012

This report presents findings of an evaluation of the 2011-2012 supplemental educational services (SES) program in West Virginia. The primary purpose of the evaluation was to examine SES provider effectiveness by analyzing (a) achievement outcomes of students who received SES and (b) the perceptions of key stakeholders in participating school districts in West Virginia.

Method of study. We compared math and reading/language arts (RLA) scores of SES-participating students with scores of students in four comparison groups: (a) students at SES-eligible schools where some students took advantage of SES services; (b) students at SES-eligible schools where no students took advantage of SES services; (c) all other Title I schools across WV; and (d) all remaining (non-Title I) schools. This comparison was limited to low socioeconomic status students in Grades 3 through 8 from schools with 10 or more students tested. We also investigated stakeholder perceptions about SES implementation and outcomes statewide, through surveys administered to SES providers and four stakeholder groups: district coordinators, principals/site coordinators, teachers, and parents of students receiving SES.

Findings.
Only RESA 1 and RESA 3 had at least 10 students available for analysis in math and RLA; these students had lower proficiency rates than the four comparison groups. However, students who received both math and RLA tutoring had higher rates of proficiency in those subjects than students who received math or RLA tutoring alone. Stakeholders held positive views about providers’ performance, including making services available, having a positive impact on student achievement, adapting materials, and aligning with local and state standards to meet student needs, including those of special education and ELL students. Stakeholders had less favorable views about the levels of collaboration and communication with providers.

Limitations of study. The analyses were based on small sample sizes for many providers, which reduced the number of providers available for reliable evaluation. In RLA as well as math, only two providers had 10 or more students available with 2011-2012 test data. When limiting the analysis to students with at least 50% attendance rates, these numbers were even smaller. Such small samples may not reliably represent the quality of services provided across the state. Additionally, students attended SES services an average of 19.05 hours, a utilization rate of 61.12%. This number of hours, spread over the course of a school year, is much lower than that reported by providers in the previous academic year, and it raises the question of whether dramatic improvements in proficiency should be expected.

Recommendations. The primary areas for program improvement as identified by respondent stakeholder groups were to (a) increase the frequency with which providers communicate with principals/site coordinators, teachers, and parents; (b) increase the frequency with which providers collaborate with district and school personnel to set goals for student growth; and (c) increase the rate of attendance and utilization of SES services.
All stakeholder groups should be encouraged to participate in the evaluation at higher levels than observed this year.

District coordinator, principal/site coordinator, teacher, and parent stakeholder groups had large majorities who agreed or strongly agreed they were satisfied with provider services.

Extended Professional Development in Project-Based Learning: Impacts on 21st Century Skills Teaching and Student Achievement
Nate K. Hixson, Jason Ravitz, and Andy Whisman, September 2012

From 2008 to 2010, project-based learning (PBL) was a major focus of the Teacher Leadership Institute (TLI), undertaken by the West Virginia Department of Education (WVDE), as a method for teaching 21st century skills. Beginning in January 2011, a summative evaluation was conducted to investigate the effect of PBL implementation on teachers’ perceived ability to teach and assess 21st century skills and on student achievement.

Method of study. We conducted a survey of teachers who (a) were trained in PBL at TLI by Buck Institute for Education (BIE), (b) had been identified as experienced users because they had successfully published a project in the state’s peer-reviewed project library, and (c) used PBL during the spring semester of the 2010-2011 school year. The survey responses of the final sample of 24 trained PBL-using teachers were compared with those of a matched group of teachers with similar backgrounds and teaching assignments who did not use PBL or who had used it but had limited or no professional development and had not participated in the BIE training. WESTEST 2 achievement gains in English/language arts, mathematics, science, and social studies were compared for students of the two groups of teachers.

Findings.
Overall, there were substantial and statistically significant effect size differences between teachers who used PBL with extended professional development and other teachers in the sample. Compared with the matching group, the extensively trained PBL-using teachers taught 21st century skills more often and more extensively. This finding applied across the four content areas, in classrooms serving students with a range of performance levels, and whether or not their schools had block scheduling. The study also found that teachers did not feel as successful at assessing the skills as they did teaching them. Students of these teachers performed no differently on WESTEST 2 than a matched set of students taught by non-PBL-using teachers or teachers who had not received extensive training. Although these results did not show significantly different gains, they should serve to mitigate the concern among some educators that engaging in PBL will impede standardized test preparation. This study also provided evidence of the potential of PBL to become part of the larger educational landscape by working in different types of schools.

Limitations of study. All studies of this nature that involve voluntary teacher participation in professional development and implementation have a risk of self-selection bias. Survey responses were based on teacher perceptions regarding a “target class”; consequently they do not necessarily represent the breadth of instruction provided by the sampled teachers in all of their course offerings. Because of relatively low sample sizes and small effect sizes, the achievement test analyses had low statistical power. When we aggregated our data across content areas, the result approached significance, but the difference between groups was still quite small in practical terms.
The Middle School Algebra Readiness Initiative: An Evaluation of Teacher Outcomes and Student Mathematics Achievement and Gains
Nate K. Hixson, October 2012

We conducted an evaluation study of the Middle School Algebra Readiness Initiative (MSARI), a mathematics intervention that was implemented in two West Virginia school districts during the 2011–2012 school year. In participating middle schools, the Carnegie Learning MATHia® software intervention and classroom curriculum were used as a total replacement for the districts’ alternative mathematics curriculum for Grades 6, 7, and 8. A cohort of teachers was trained by Carnegie Learning in mathematical content and pedagogy as well as in the proper implementation of the software and classroom curriculum materials.

Method of study. Our evaluation tested five hypotheses. The first investigated the impact of the initiative on teacher-level outcomes, specifically teachers’ content and pedagogical knowledge in the areas of patterns, functions, and algebra on the research-validated Learning Mathematics for Teaching (LMT) assessment. The remaining four study hypotheses tested the impact of the initiative on students’ mathematical achievement and year-to-year mathematics gains as measured by the Grade 6, 7, or 8 mathematics subtest of the West Virginia Educational Standards Test 2 (WESTEST 2). We used propensity score matching (PSM) to select a comparison group of students for each of a variety of implementation scenarios. In all cases, we rigorously matched the two groups of students using a variety of covariates.
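
As a rough illustration of the matching step described above, the following Python sketch estimates a propensity score with a simple logistic regression and then pairs each treated student with the nearest unmatched comparison student. It is not the procedure used in the evaluation: the report does not specify the matching algorithm or the covariates, so the synthetic data, the two covariates (a standardized prior score and a low-SES indicator), the caliper of 0.05, and all function names are assumptions made for this example.

```python
import math
import random


def fit_propensity(X, treated, lr=0.5, epochs=300):
    """Fit logistic regression P(treated | covariates) by batch gradient descent.

    Returns the weights, with w[0] as the intercept.
    """
    n, k = len(X), len(X[0])
    w = [0.0] * (k + 1)
    for _ in range(epochs):
        grad = [0.0] * (k + 1)
        for xi, ti in zip(X, treated):
            err = propensity(w, xi) - ti
            grad[0] += err
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj
        w = [wj - lr * gj / n for wj, gj in zip(w, grad)]
    return w


def propensity(w, xi):
    """Predicted probability of being in the treatment group."""
    z = w[0] + sum(wj * xj for wj, xj in zip(w[1:], xi))
    return 1.0 / (1.0 + math.exp(-z))


def match_nearest(scores, treated, caliper=0.05):
    """Greedy 1:1 nearest-neighbor matching without replacement.

    A treated unit is left unmatched when no remaining comparison unit
    has a propensity score within the caliper.
    """
    controls = {i for i, t in enumerate(treated) if t == 0}
    pairs = []
    for i, t in enumerate(treated):
        if t != 1 or not controls:
            continue
        j = min(controls, key=lambda c: abs(scores[c] - scores[i]))
        if abs(scores[j] - scores[i]) <= caliper:
            pairs.append((i, j))
            controls.remove(j)
    return pairs


# Synthetic students (hypothetical data, not from the report).
random.seed(0)
X, treated = [], []
for _ in range(200):
    prior = random.gauss(0, 1)                      # standardized prior score
    low_ses = 1.0 if random.random() < 0.5 else 0.0  # low-SES indicator
    p_treat = 1.0 / (1.0 + math.exp(-(-1.0 - 0.5 * prior + 0.5 * low_ses)))
    X.append([prior, low_ses])
    treated.append(1 if random.random() < p_treat else 0)

weights = fit_propensity(X, treated)
scores = [propensity(weights, xi) for xi in X]
pairs = match_nearest(scores, treated)
print(f"matched {len(pairs)} of {sum(treated)} treated students")
```

Matching without replacement keeps each comparison student in at most one pair, and the caliper drops treated students who have no close counterpart. Both choices trade sample size for closer matches, which matters when interpreting the mean differences that follow.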
We then examined mean differences in students’ standardized test scores and mathematics gains to determine whether the treatment and comparison group scores differed by a statistically significant margin. Next, we used linear regression to determine, after controlling for covariates, what level of impact the treatment had on student achievement and gains.

Findings. Our statistical analysis of teacher pretest/posttest differences on the LMT assessment revealed that, for the 20 teachers who completed both a pretest and posttest, there was only a marginal gain, which was not statistically significant. As for student results, in most cases students in the treatment group underperformed on the WESTEST 2 when compared with their grade-level peers who used an alternate curriculum. With a few exceptions, the differences were statistically significant. However, the results of the linear regressions illustrated that, after controlling for important covariates, the negative relationship between treatment and student achievement/gains was relatively small, but still statistically significant.

Limitations of study. Several limitations impair our ability to draw conclusions from these results. Most critically, we had very little information about the degree to which the teachers and students implemented the intervention components with fidelity. We recommend strongly against using our report as an evaluation of MATHia in general. It should be seen as an evaluation of an entire initiative rather than any curriculum or software program alone.

Recommendations. In light of these and other limitations described in this report, we make only two recommendations. First, we suggest future program implementations of this type take substantial measures to collect qualitative implementation data so that the results of quantitative analyses can be more readily interpreted.
Second, in districts where similar programs are currently underway or in the planning stages, we recommend continuous monitoring and technical assistance to ensure that the program components are delivered as intended.

Available data indicate that few students met the required 1 hour/week of program use.

Findings from the 2011 West Virginia Online Writing Scoring Comparability Study
Nate Hixson and Vaughn Rhudy, October 2012

To provide an opportunity for teachers to better understand the automated scoring process used by the state of West Virginia on our annual WESTEST 2 Online Writing Assessment, the WVDE Office of Assessment and Accountability and the Office of Research conduct an annual comparability study. Each year educators from throughout West Virginia receive training from the Office of Assessment and Accountability and then hand score randomly selected student compositions. The educators’ hand scores are then compared to the operational computer (engine) scores, and the comparability of the two scoring methods is examined.

Method of study. A scoring group made up of 43 participants representing all eight regions scored a randomly selected set of student essays using the appropriate grade-level WV Writing Rubrics. A total of 2,550 essays were each scored by two different human scorers to allow for comparison of human-to-human scores as well as human-to-engine scores. Four hypotheses were tested.

Findings. We first sought to determine the extent to which human scorers calibrated their scoring process to align with the automated scoring engine via a series of training papers. We found that calibration was generally quite good in Grades 5-11, but there was room for improvement in Grades 3 and 4.
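
The human-to-human and human-to-engine comparisons in this study rest on two agreement measures: the share of essays on which two scores match exactly, and the share on which they match exactly or fall within 1 point of each other. The short Python sketch below shows one way such rates could be computed. The scores are invented for illustration and the function name is hypothetical; the study's actual computation is not described in this digest.

```python
def agreement_rates(scores_a, scores_b):
    """Return (exact, exact_or_adjacent) agreement rates for paired scores.

    "Adjacent" means the two scores differ by exactly 1 point, so the
    second rate counts pairs that differ by at most 1 point.
    """
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("score lists must be non-empty and the same length")
    n = len(scores_a)
    exact = sum(a == b for a, b in zip(scores_a, scores_b))
    within_one = sum(abs(a - b) <= 1 for a, b in zip(scores_a, scores_b))
    return exact / n, within_one / n


# Hypothetical scores for eight essays on an assumed 1-6 scale.
human_1 = [4, 3, 5, 2, 6, 3, 4, 1]
human_2 = [4, 4, 5, 2, 4, 3, 3, 1]
engine = [4, 3, 4, 3, 6, 3, 4, 2]

print(agreement_rates(human_1, human_2))  # (0.625, 0.875)
print(agreement_rates(human_1, engine))   # (0.625, 1.0)
```

Reporting both rates is useful because exact agreement alone can understate how close two scorers are when most disagreements are only 1 point apart.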
We also found that calibration rates were relatively static across the set of training papers. We next sought to determine the comparability of human-to-human and human-to-engine agreement rates. We examined both exact and exact/adjacent agreement rates (i.e., scores that were exactly matched or within 1 point of each other on a 6-point scale). Looking at well-calibrated human scorers, our analyses showed that, with few exceptions, both exact and exact/adjacent agreement rates were comparable for the human-to-human and human-to-engine pairs. Finally, we examined the average essay scores assigned by the automated scoring engine and those assigned by a sufficiently calibrated human scorer. Our analyses revealed that for four of the available grade levels there were no significant differences. However, for the remaining grades and for all grades in aggregate, differences were statistically significant. In these cases, the differences observed between calibrated human scorers and the automated scoring engine were equivalent to or less than approximately three tenths of a point (.310) on a 5-point scale or approximately 2% to 5% of the available points, with human scorers typically scoring papers higher. This difference was deemed to be practically insignificant.

Limitations of study. In similar studies of automated essay scoring, the human essay scores are generated by scorers for whom there is strong empirical evidence that they can apply a validated scoring rubric in a consistent and valid manner. In our case, employing 10 training papers is likely not enough training to ensure our scorers become expert raters. Until the calibration process and measure are improved upon, agreement rates and differences in human and engine scores should be interpreted cautiously.

Recommendations.
We recommend improving the calibration process; examining new measures of calibration among scorers to assist in interpreting results; using multiple and different measures to examine agreement between scoring methods; and adding a qualitative research component to next year’s online writing comparability study to examine teacher outcomes.

Implementation of the Master Plan for Statewide Professional Staff Development for 2011-2012: An Evaluation Study
Patricia Cahape Hammer, October 2012

This evaluation study examined the formation and implementation of the West Virginia Board of Education’s Master Plan for Professional Staff Development for 2011-2012 (PD Master Plan).

Method of study. We examined the performance of professional development (PD) providers included in the PD Master Plan: Marshall University, all eight regional education service agencies (RESAs), the WV Center for Professional Development (CPD), and eight WV Department of Education (WVDE) offices. We used two main data sources: PD session reports from the providers, and an online survey sent to 6,312 participants who attended the sessions, of whom 4,281 responded (68% response rate).

Findings. Of the sessions planned, 77.5% were implemented, down slightly from the previous year. Attendance was also down, dropping nearly 42% from about 37,000 in 2010-2011 to under 22,000 in 2011-2012. The RESAs, CPD, and Marshall all saw lower attendance, while WVDE providers’ attendance was slightly up. Overall, participants agreed that the sessions they attended adhered to research-based practices for high quality PD. The strongest ratings were given to the relevance and specificity (content focus) of the PD. The weakest ratings were for two follow-up items: follow-up discussion and collaboration, and related follow-up PD.
Only 51.2% of respondents agreed that the sessions they attended were helpful in moving them toward the Board goals they were intended to support. Participants reported greatest impacts on their knowledge and behaviors, with less impact on their attitudes and beliefs. Collectively, we estimate participants spent more than 20,000 hours traveling to attend the PD, with participants from some counties traveling twice as long as others. As for formation of the plan, only one of the 11 public institutions of higher education (IHEs) with teacher preparation programs participated in the PD Master Plan and only eight of 15 WVDE offices. All eight RESAs participated. The ability of some providers to participate meaningfully is hampered by the schedule that must be kept in formulating the PD Master Plan. It is unknown how much of the PD offered by IHEs, RESAs, and the WVDE falls outside of that which is included in the PD Master Plan.

Limitations of study. While a 68% response rate is high for this type of survey, there remained a portion of the sample from whom we did not hear, whose perceptions of the PD are unknown.

Recommendations. The Board may wish to consider urging providers to (a) support more follow-up to their PD, (b) better align their PD with the Board’s goals, and (c) reduce travel time by using more online PD formats; and to consider (d) other methods for including IHEs in the PD Master Plan, (e) reopening the PD Master Plan in early October to allow providers to revise their plans, and (f) conducting a study of the PD offered outside of the PD Master Plan by the four main groups of providers (i.e., CPD, RESAs, IHEs, and WVDE).
21st Century Community Learning Centers: A Descriptive Evaluation for 2011-2012
Patricia Cahape Hammer and Larry J. White, December 2012

This evaluation study provides descriptive information about the implementation and outcomes of the 21st Century Community Learning Centers (CCLC) program in West Virginia, from September 2011 through May 2012.

Method of study. The report draws on information from online surveys of directors of 24 CCLC programs and from school teachers of nearly 4,000 participating students.

Findings. Most participating students were in the elementary grades. The mean number of days students attended ranged from about 14 to 96 days, depending on the program. Teachers perceived the greatest improvements in participating students’ behaviors related to promptness and quality of homework turned in, overall academic performance, and participation in class. Regarding CCLC program volunteers, the largest sources were K-12 service learning programs, parents and faculty members, local businesses, and postsecondary service learning programs. Although AmeriCorps was not the largest source of volunteers, it was the group with which program directors reported the greatest level of success. Regarding work with partners, the two most frequent types of support received from partners were programming and resources. Partnerships engaged in funding, programming, resources, and training were reported to be the most effective. Regarding professional development, the topics best attended by program directors were programming, STEM/STEAM, and program evaluation.
Regarding parent and community involvement, more than half of program directors indicated they either had no family components in their programs or that they were, at best, well below target goals. Of those who reported success in this area, three main themes emerged as reasons for their successes: (a) the right types of activities, (b) ongoing, even daily contact with parents, and (c) a shared commitment to the program, which involved engaging parents in meaningful work toward program goals. Program directors reported offering more than 300 substance abuse prevention activities, involving more than 11,000 students and nearly 900 adults. Nearly three quarters of program directors found the continuous improvement process for after school moderately or very helpful. Likewise, the great majority found the WVDE monitoring visits moderately or very helpful.

Limitations of study. We cannot assume that the CCLC attendance was a key factor in the improvement of behaviors perceived by teachers. We did not hear from all program directors, so we lack information about at least three of the programs.

Recommendations. Topics for which program directors reported needing additional professional development include programming, staff development, and STEM/STEAM; technical assistance topics include program evaluation, program sustainability, and project management. Parent involvement, too, seems to need attention. Avoid requiring major effort from program staff for program monitoring and evaluation at the beginning of the school year and look for ways to streamline reporting and data collection requirements. Continue with current practices for WVDE site visits, which program directors seem to greatly appreciate.
Involve program directors in providing input when planning takes place for program improvements. Consider publishing a calendar for the full year, at the beginning of the school year.

Our Staff

Our expert staff is trained and experienced in applying state-of-the-art qualitative and quantitative social science and assessment methodologies.

Nathaniel “Nate” K. Hixson, Assistant Director
Monica Beane, Assistant Director
Patricia Cahape Hammer, Coordinator, Research Writer
Anduamlak “Andu” Meharie, Coordinator, Research and Evaluation
Jason E Perdue, Manager, Technology and Data
Amber D. Stohr, Coordinator, Research and Evaluation
Steven A. “Andy” Whisman, Coordinator, Research and Evaluation

We are supported by two staff associates:

Cathy Moles, Secretary III
Kristina Smith, Secretary II

Milestones

In November 2012, Larry J. White, our executive director, retired after 38 years in state government, 27 of them in the West Virginia Department of Education. He began in the Office of Technology, where he led the development of the first local area network, which eventually expanded to serve all Department employees. He became the first psychometrician in the Office of Assessment, and worked with the top psychometricians and statisticians of the Department’s assessment vendor, CTB-McGraw Hill, work he considered to be the highlight of his career. He considered his biggest accomplishment, however, to be the formation of the Office of Research. We are grateful to Larry for his leadership in forming our office and in setting a very high bar for our performance.

Amber Stohr joined our staff in September, filling a position vacated by Yetty Shobo, who followed her husband’s career to Australia.
Prior to joining the Department, Amber was an epidemiologist with the West Virginia Bureau for Public Health, Health Statistics Center. Additionally, she has over five years of teaching experience in the United States and in China. Her professional and educational focus areas include evaluation; policy, systems and environmental change; social determinants of health; equity studies in race/ethnicity, gender, and socioeconomic status; and Chinese politics and economics.

The Office of Research was especially productive last year, creating lots of new research knowledge and even producing a new human! Coordinator Anduamlak (Andu) Meharie and his wife Michelle welcomed a new daughter, Selaam, in January.

Educate Enhancing Learning. For Now. For the Future.
James B. Phares, Ed.D., State Superintendent of Schools