West Virginia Revised Educator Evaluation System for Teachers 2011-2012

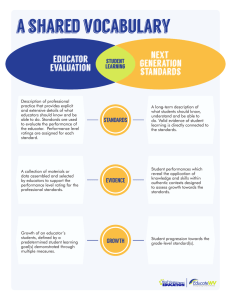
