Analysis of Control Mechanisms in a Re-entrant Manufacturing System

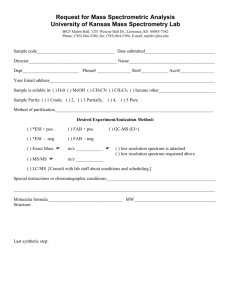
