Design of an Improved Electronics

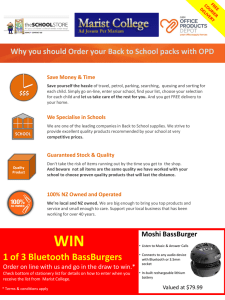
