
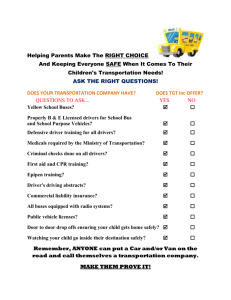

advertisement

Optimizing a Start-Stop System to Minimize Fuel Consumption using Machine Learning


by


Noel Hollingsworth


S.B., C.S. M.I.T., 2012


Submitted to the Department of Electrical Engineering and Computer Science

in partial fulfillment of the requirements for the degree of

Master of Engineering in Electrical Engineering and Computer Science

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

February 2014

© Massachusetts Institute of Technology 2014. All rights reserved.

Author: Signature redacted

Department of Electrical Engineering and Computer Science

December 10, 2013

Certified by: Signature redacted

Leslie Pack Kaelbling

Panasonic Professor of Computer Science and Engineering

Thesis Supervisor

Accepted by: Signature redacted

Albert R. Meyer

Chairman, Masters of Engineering Thesis Committee

Optimizing a Start-Stop System to Minimize Fuel Consumption using Machine Learning

by

Noel Hollingsworth

Submitted to the Department of Electrical Engineering and Computer Science on December 10, 2013, in partial fulfillment of the requirements for the degree of Master of Engineering in Electrical Engineering and Computer Science

Abstract

Many people are working on improving the efficiency of car engines. One approach to maximizing efficiency has been to create start-stop systems. These systems shut the car's engine off when the car comes to a stop, saving fuel that would otherwise be used to keep the engine running. However, these systems introduce additional energy costs associated with restarting the engine, and the system must balance these costs against the fuel it saves.

In this thesis I describe my work with Ford to improve the performance of their start-stop controller. I discuss optimizing a controller both for the general population and for individual drivers, and in both cases I use reinforcement-learning techniques to find the best-performing controller. I find a 27% improvement over Ford's current controller when optimizing for the general population, and an additional 1.6% improvement over the improved controller when optimizing for an individual.

Thesis Supervisor: Leslie Pack Kaelbling

Title: Panasonic Professor of Computer Science and Engineering


Contents

1 Introduction
  1.1 Problem Overview
  1.2 Outline
2 Background
  2.1 Reinforcement Learning
  2.2 Policy Search Methods
    2.2.1 Policy Gradient Algorithms
    2.2.2 Kohl-Stone Policy Gradient
    2.2.3 Evolutionary Algorithms
    2.2.4 Covariance Matrix Adaptation Evolution Strategy
  2.3 Model Based Policy Search
    2.3.1 Gaussian Processes
    2.3.2 Nearest Neighbors
  2.4 Related Work
    2.4.1 Machine Learning for Adaptive Power Management
    2.4.2 Wind Turbine
    2.4.3 Reinforcement Learning for Energy Conservation in Buildings
    2.4.4 Adaptive Data Centers
  2.5 Conclusion
3 Learning a Policy for a Population of Drivers
  3.1 Problem Overview
  3.2 Simulator Model
    3.2.1 Simulator Output
  3.3 Optimizing For a Population
    3.3.1 Experimental Results
    3.3.2 Optimizing For a Subset of a Larger Population
    3.3.3 Experimental Results
  3.4 Conclusion
4 Optimizing a Policy for an Individual Driver
  4.1 Problem Overview
    4.1.1 Issues
  4.2 Kohl-Stone Optimization
    4.2.1 Initial Results
    4.2.2 Finding Policies in Parallel
    4.2.3 Improving the Convergence Speed of the Algorithm
    4.2.4 Determining the Learning Rate for Kohl-Stone
    4.2.5 Conclusion
  4.3 Cross-Entropy Methods
    4.3.1 Experiment
    4.3.2 Summary
  4.4 Model Based Policy Search
    4.4.1 Experiment With Normally Distributed Output Variables
    4.4.2 Experiment with Output Variable Drawn from Logistic Distribution
    4.4.3 Nearest Neighbors Regression
    4.4.4 Summary
  4.5 Conclusion
5 Conclusion
  5.1 Future Work

List of Figures

2.1 Kohl-Stone Policy Search Pseudo-Code
2.2 CEM Pseudo-code
3.1 Hypothetical rule-based policy
3.2 Vehicle Data Trace
3.3 Simulator State Machine
3.4 Simulator Speed Comparison
3.5 Simulator Brake Pressure Comparison
3.6 Simulator Stop Length Comparison
3.7 Kohl-Stone Convergence Graph
4.1 Results of Kohl-Stone for Individual Drivers
4.2 Moving Average of Kohl-Stone for Individuals
4.3 Kohl-Stone Result Histogram
4.4 Parallel Kohl-Stone Results
4.5 Kohl-Stone Results When Sped Up
4.6 Results from Kohl-Stone with a Slower Learning Rate
4.7 Results from Kohl-Stone with a Faster Learning Rate
4.8 Cost Improvement from CMAES with an Initial Covariance of 0.001
4.9 Average Covariance Values for CMAES
4.10 Cost Improvement from CMAES with an Initial Covariance of 0.005 and 0.008
4.11 Stop Length Histogram
4.12 Error from Gaussian Processes as Function of Training Set Size
4.13 Comparison of Gaussian Processes Predictions with Actual Results
4.14 Comparison of Gaussian Processes Predictions with Actual Results with Laplace Output Variable
4.15 Comparison of Nearest Neighbors Predictions with Actual Results

Chapter 1

Introduction

Energy consumption is one of the most important problems facing the world today. Most current energy sources pollute and are not renewable, which makes minimizing their use critical. Driving is the most notable way that Americans use non-renewable sources of energy, so any improvement to the efficiency of the cars being driven could have a major effect on total energy consumption.

This reduction can be significant even if the gain in efficiency is small. In 2009 Ford sold over 4.8 million automobiles [1], with an average fuel efficiency of 27.1 miles per gallon [2]. Assuming each of these vehicles is driven 15,000 miles a year, a 0.01 miles per gallon improvement in efficiency would save more than 980,000 gallons of gasoline per year.
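The arithmetic behind this estimate can be checked directly: annual fuel use per car is miles driven divided by fuel economy, so the fleet-wide saving is the difference between the two quotients multiplied by the number of cars. A minimal sketch using the figures quoted above (the function name is illustrative):

```python
def gallons_saved(num_vehicles, miles_per_year, base_mpg, mpg_gain):
    """Fleet-wide gallons saved per year from a small fuel-economy gain."""
    gallons_before = miles_per_year / base_mpg
    gallons_after = miles_per_year / (base_mpg + mpg_gain)
    return num_vehicles * (gallons_before - gallons_after)


saved = gallons_saved(4.8e6, 15_000, 27.1, 0.01)
print(f"{saved:,.0f} gallons per year")
```

The result is just over 980,000 gallons (about 0.2 gallons per car per year), consistent with the figure in the text.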

One approach that automobile manufacturers have used to improve fuel efficiency is to build start-stop systems into their cars. These systems turn off the vehicle's engine when it comes to a halt, saving fuel that would otherwise be expended running the engine while the car is stationary. Start-stop systems have primarily been deployed in hybrid vehicles, but are now being deployed in automobiles with pure combustion engines as well [3]. These systems have resulted in a 4-10% improvement in fuel efficiency in hybrid cars, but even greater gains should be possible. Although the systems are generally efficient, shutting the car's engine down for short periods of time loses more energy in restarting the engine than is gained while the engine is off. By turning the engine off less often for short stops and more often for long stops, it may be possible to conserve substantially more energy. My thesis explores several methods of improving a controller's performance by making more accurate predictions of drivers' stopping behavior.
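The trade-off described above can be made concrete with a break-even stop length: shutting the engine off pays only when the fuel saved by not idling exceeds the energy cost of the restart. The sketch below assumes a constant idle fuel rate and a fixed restart cost expressed in the same units; both constants are hypothetical placeholders, not Ford's values.

```python
IDLE_FUEL_RATE_ML_PER_S = 0.25  # hypothetical fuel burned per second of idling
RESTART_COST_ML = 1.0           # hypothetical fuel-equivalent cost of one restart


def net_saving_ml(stop_length_s):
    """Fuel saved (positive) or lost (negative) by shutting off for a whole stop."""
    return IDLE_FUEL_RATE_ML_PER_S * stop_length_s - RESTART_COST_ML


def break_even_stop_s():
    """Stop length above which turning the engine off saves fuel."""
    return RESTART_COST_ML / IDLE_FUEL_RATE_ML_PER_S


for stop in (2.0, 4.0, 10.0):
    print(stop, net_saving_ml(stop))
```

With these placeholder numbers the break-even point is 4 seconds: a 2-second stop loses 0.5 mL and a 10-second stop saves 1.5 mL. The sketch ignores driver annoyance, which the cost function described in Section 1.1 also penalizes.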

1.1 Problem Overview

In this thesis I describe my work with Ford to improve the performance of their start-stop controller, examining several methods of doing so. Performance was measured by a cost function defined by Ford that combined three factors: the energy saved by turning the car's engine off, the energy cost of restarting the engine after turning it off, and the annoyance felt by drivers who attempted to accelerate while the engine was still shut down.

My goal was to improve the performance of the controller by altering its policy for shutting the engine down and turning it back on. I did not define the controller's entire policy. Instead, I was given a black-box controller whose rule-based policy, which determined when to turn the car's engine off and when to turn it back on, was governed by five parameters. I adjusted these parameters in order to maximize the performance of the system.

I was given fewer than ten hours of driving data with which to optimize the policy. This is not enough data to determine the effectiveness of the various approaches I used, so I created a simulator to measure their effectiveness. The simulator generates custom drivers, sampled from a distribution whose mean behavior matches the behavior in Ford's data. This allowed me to measure the performance of the algorithms without using real data. It is still important to test the algorithms on real data before implementing them in production vehicles, in order to determine how drivers react to different policies. When I discuss the effectiveness of each algorithm, I describe what data will need to be collected next.

I took two broad approaches to optimizing the policy. First, in chapter three, I discuss optimizing a single policy for all drivers in a population. This approach makes it easy for Ford to ensure that the chosen policy is sensible, and requires no computing resources in the car beyond those already in use. Second, in chapter four, I discuss optimizing a policy for the car's driver on the car's own computer. This has the potential for better results but requires additional computing resources online. In addition, Ford would have no way of knowing in advance which policies the controller would use. It is up to Ford to balance the trade-off between complexity and performance, but in each chapter I explain the advantages and disadvantages of the approach I used.

1.2 Outline

After the introduction, this thesis consists of four additional chapters.

* In chapter two I discuss the background of the problem. First I describe the approaches I used to optimize the controller's performance. I then discuss several other applications of machine learning to energy conservation, comparing and contrasting the algorithms they used with the ones I experimented with.

* In chapter three I discuss the problem setup in more detail and describe optimizing a single policy for a population of drivers. I first give a formal overview of the problem before describing the simulator I built to generate synthetic data. I then discuss using the Kohl-Stone policy gradient algorithm [4] to minimize the expected cost of a policy over a population of drivers.

* In chapter four I discuss determining a policy online for each driver. I describe three approaches: the Kohl-Stone policy gradient algorithm, a cross-entropy method, and model based reinforcement learning using Gaussian processes. For each approach I describe the performance gain compared to a policy found for the population of drivers the individual was drawn from, and discuss potential difficulties in implementing the algorithms in a production vehicle.

* In chapter five I conclude the thesis, summarizing my results and discussing potential future work.

Now I will proceed to the background chapter, where I introduce the methods used in the thesis.

Chapter 2

Background

This chapter provides an overview of the knowledge needed to understand the methods used

in the thesis. The primary focus is on the reinforcement learning techniques that will be

used to optimize the start-stop controller, though I also give a brief overview of alternative

methods of optimizing the controller. The chapter concludes with a look at related work

that applies machine learning in the service of energy conservation.

2.1 Reinforcement Learning

Reinforcement learning is a subfield of machine learning concerned with optimizing the performance of agents that interact with their environment and attempt to maximize an accumulated reward or minimize an accumulated cost. Formally, given a summed discounted reward

$$R = \sum_{t=0}^{\infty} \gamma^{t} r_{t}, \tag{2.1}$$

where $r_t$ denotes the reward gained at time step $t$ and $\gamma$ is a discount factor in the range $[0, 1)$, the goal is to maximize

$$E[R \mid \pi], \tag{2.2}$$

where $\pi$ corresponds to a policy that determines the agent's actions.
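Equations 2.1 and 2.2 can be illustrated with a short sketch: the return of one episode is a discounted sum of its rewards (for a finite episode the infinite sum simply stops at the last step), and $E[R \mid \pi]$ can be estimated by averaging the returns of episodes collected while following $\pi$. The reward sequences below are made up for illustration.

```python
def discounted_return(rewards, gamma):
    """R = sum_t gamma**t * r_t for one finite episode (equation 2.1)."""
    total = 0.0
    for t, r in enumerate(rewards):
        total += (gamma ** t) * r
    return total


def estimate_expected_return(episodes, gamma):
    """Monte Carlo estimate of E[R | pi]: average return over sampled episodes."""
    returns = [discounted_return(ep, gamma) for ep in episodes]
    return sum(returns) / len(returns)


# Hypothetical reward sequences from two episodes run under the same policy.
episodes = [[1.0, 1.0, 1.0], [0.0, 2.0, 0.0]]
print(estimate_expected_return(episodes, gamma=0.5))  # prints 1.375
```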

In this setting the agent often does not know what reward its actions will generate, so it must take an action in order to determine the reward associated with that action. The need to take exploratory actions makes reinforcement learning a very different problem from supervised learning, where the goal is to predict an output from an input given a training set of input-output pairs. As a consequence, one of the most important aspects of a reinforcement learning problem is balancing exploration and exploitation. An agent that exploits by always taking the best known action may never try an unknown action that could produce higher long-term reward, while an agent that constantly explores will take suboptimal actions and obtain much less reward.

Another key difference between supervised learning and reinforcement learning is that in reinforcement learning the states the agent observes depend on both the states the agent previously observed and the actions executed in those states. This means that certain actions may generate large short-term rewards but lead to states that perform worse in the long run.

Reinforcement learning problems are typically formulated as a Markov decision process, or MDP, defined by a 4-tuple

$$(S, A, P, R), \tag{2.3}$$

where $S$ is the set of states that the agent may be in; $A$ is the set of all possible actions the agent may take; $P$ gives a probability distribution over next states for each state-action pair, with $P(s' \mid s, a)$ specifying the probability of entering state $s'$ having been in state $s$ and executed action $a$; and $R$ is the reward function, with $R(s, a)$ specifying the reward received by the agent for executing action $a$ in state $s$. The goal is to find a policy $\pi$ mapping states to actions that leads to the highest long-term reward. As shown in equation 2.1, the reward is usually described as a discounted sum of the rewards received at all future steps. A key element of the MDP is the Markov property: given an agent's history of states $\{s_0, s_1, s_2, \ldots, s_{n-1}, s_n\}$, the agent's reward and transition probabilities depend only on the latest state $s_n$, that is,

$$P(s' \mid s_0, \ldots, s_n, a_0, \ldots, a_n) = P(s' \mid s_n, a_n). \tag{2.4}$$

This is a simplifying assumption that makes MDPs much easier to solve. There are several algorithms that are guaranteed to converge to a solution for an MDP, but unfortunately the start-stop problem deals with a state space that is not directly observable, and Ford required the use of a black-box policy parameterized by five predetermined parameters. I therefore use a more general framework that searches through the space of parameterized policies.
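For contrast, the sketch below runs value iteration, a standard example of the MDP algorithms mentioned above, on a hypothetical two-state, two-action MDP. It needs the full tuple $(S, A, P, R)$ and an observable state, which is exactly what the start-stop problem does not provide. The toy MDP is an illustration only: action 0 stays put, action 1 switches state, and staying in state 1 earns a reward of 1.

```python
import numpy as np

# Hypothetical toy MDP: P[a, s, s'] is the transition probability, R[s, a] the reward.
P = np.array([
    [[1.0, 0.0], [0.0, 1.0]],  # action 0: stay in the current state
    [[0.0, 1.0], [1.0, 0.0]],  # action 1: move to the other state
])
R = np.array([
    [0.0, 0.0],  # state 0: no reward for either action
    [1.0, 0.0],  # state 1: reward 1 for staying
])


def value_iteration(P, R, gamma=0.9, tol=1e-10):
    """Return optimal state values and a greedy policy for a finite MDP."""
    V = np.zeros(R.shape[0])
    while True:
        # Q[s, a] = R(s, a) + gamma * sum_{s'} P(s' | s, a) * V(s')
        Q = R + gamma * np.einsum("ast,t->sa", P, V)
        V_new = Q.max(axis=1)
        if np.max(np.abs(V_new - V)) < tol:
            return V_new, Q.argmax(axis=1)
        V = V_new


V, policy = value_iteration(P, R)
print(V, policy)
```

The optimal policy moves from state 0 to state 1 and then stays there, giving values of 9 and 10 with $\gamma = 0.9$.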

2.2 Policy Search Methods

Policy search methods are used to solve problems in which a predefined policy with open parameters is used. In policy search, there is a parameterized policy $\pi(\theta)$ and, as in an MDP, a cost function $C(\theta)$ that is being minimized, which can equivalently be thought of as maximizing a reward equal to the negative of the cost function. Instead of choosing actions directly, the goal is to determine a parameter vector for the policy, which then chooses actions based on the state of the system. This can allow for a much more compact representation, because the parameter vector $\theta$ may have far fewer parameters than a corresponding value function. Additionally, background knowledge can be used to pre-configure the policy, which restricts it to sensible classes. A policy search algorithm is any algorithm from this family, which searches over $\theta$ directly.

2.2.1 Policy Gradient Algorithms

Policy gradient algorithms are a subset of policy search algorithms that work by finding the derivative of the reward function with respect to the parameter vector, or some approximation of the derivative, and then moving in the direction of lower cost. This is repeated until the algorithm converges to a local minimum. If the derivative can be computed analytically and the problem is an MDP, then equation 2.5, known as the policy gradient theorem, holds:

$$\frac{\partial R}{\partial \theta} = \sum_{s} d^{\pi}(s) \sum_{a} \frac{\partial \pi(s, a)}{\partial \theta} Q^{\pi}(s, a). \tag{2.5}$$

Here, $\pi(s, a)$ is the probability that the policy chooses action $a$ in state $s$, $Q^{\pi}(s, a)$ is the expected long-term reward of taking action $a$ in state $s$ and following $\pi$ thereafter, and $d^{\pi}(s)$ is the summed probability of reaching state $s$ using the policy $\pi$, with a discount factor applied. It can be expressed as follows, where $s_0$ is the initial state and $\gamma$ is the discount factor:

$$d^{\pi}(s) = \sum_{t=0}^{\infty} \gamma^{t} \Pr\{s_t = s \mid s_0, \pi\}. \tag{2.6}$$
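When the policy is fixed, the dynamics reduce to a Markov chain with transition matrix $P_\pi$, and equation 2.6 has a closed form: stacking $d^{\pi}(s)$ into a vector gives $d^{\pi} = (I - \gamma P_\pi^{\top})^{-1} e_{s_0}$, where $e_{s_0}$ is the indicator vector of the initial state. The sketch below checks this against a truncated version of the sum on a hypothetical three-state chain. The entries of $d^{\pi}$ sum to $1/(1-\gamma)$, because each term of the sum is a probability distribution scaled by $\gamma^t$.

```python
import numpy as np

# Hypothetical transition matrix of the chain induced by a fixed policy:
# P_pi[s, s'] = Pr{s_{t+1} = s' | s_t = s}.
P_pi = np.array([
    [0.5, 0.5, 0.0],
    [0.0, 0.5, 0.5],
    [0.5, 0.0, 0.5],
])
gamma = 0.9
s0 = 0


def discounted_visitation_closed_form(P_pi, gamma, s0):
    """d_pi = (I - gamma * P_pi^T)^{-1} e_{s0}, the closed form of equation 2.6."""
    n = P_pi.shape[0]
    e0 = np.zeros(n)
    e0[s0] = 1.0
    return np.linalg.solve(np.eye(n) - gamma * P_pi.T, e0)


def discounted_visitation_truncated(P_pi, gamma, s0, horizon=500):
    """Sum gamma**t * Pr{s_t = s | s_0} for t = 0 .. horizon - 1."""
    dist = np.zeros(P_pi.shape[0])
    dist[s0] = 1.0
    d = np.zeros_like(dist)
    for t in range(horizon):
        d += (gamma ** t) * dist
        dist = dist @ P_pi  # propagate the state distribution one step
    return d


d_exact = discounted_visitation_closed_form(P_pi, gamma, s0)
d_approx = discounted_visitation_truncated(P_pi, gamma, s0)
print(d_exact, d_exact.sum())
```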

Many sophisticated algorithms for policy search rely on the policy gradient theorem. Unfortunately, the theorem relies on the assumption that the policy is differentiable. This is not true for the start-stop problem, as Ford requires that we use a black-box policy, which allows me to observe the results of applying the policy but not to view the policy itself in order to obtain a derivative. The policy also uses a rule-based system, so it is unlikely that a smooth analytic gradient exists. Because of this I used a relatively simple policy gradient algorithm published by Kohl and Stone [4].

2.2.2 Kohl-Stone Policy Gradient

The primary advantage of the Kohl-Stone algorithm for determining an optimal policy is that it can be used when an analytical gradient of the policy is not available. In place of an analytical gradient, it obtains an approximation of the gradient by sampling, an approach that was first used by Williams [14].

The algorithm starts with an arbitrary initial parameter vector $\theta$. It then loops, repeatedly creating random perturbations of the parameters and determining the cost of using those policies. A gradient is then estimated from the costs of the random perturbations. Next, the algorithm adds a vector of length $\eta$, the learning rate, in the direction of the negative gradient to the policy. The process repeats until the parameter vector converges to a local minimum, at which point the algorithm halts. Pseudo-code for the algorithm is given in Figure 2.1.

    θ ← initialParameters
    while not finished do
        create R_1, ..., R_m where each R_j is θ with every value θ[i] randomly perturbed by −ε, 0, or +ε
        determine C(R_1), C(R_2), ..., C(R_m)
        for i ← 1 to |θ| do
            // Find the cost function values after perturbing θ, grouped by the sign of the perturbation to parameter i
            AvgPos ← average of C(R_j) where R_j[i] > θ[i]
            AvgNone ← average of C(R_j) where R_j[i] = θ[i]
            AvgNeg ← average of C(R_j) where R_j[i] < θ[i]
            // Determine the value of the differential for parameter i
            if AvgNone < AvgPos and AvgNone < AvgNeg then
                change_i ← 0
            else
                change_i ← AvgPos − AvgNeg
        // Move the parameters a distance η in the direction of the negative differential (lower cost)
        change ← (change / |change|) · η
        θ ← θ − change

Figure 2.1: Pseudo-code for the Kohl-Stone policy search algorithm
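A runnable Python version of Figure 2.1, offered as a sketch rather than the implementation used in this thesis. It assumes a cost function that can be evaluated for any parameter vector (a hypothetical quadratic stands in for the simulator), perturbs every parameter by −ε, 0, or +ε as in Kohl and Stone's algorithm, and runs for a fixed number of iterations in place of the unspecified "finished" test. The values of `epsilon`, `eta`, `num_policies`, and `iterations` are illustrative.

```python
import numpy as np


def kohl_stone(cost, theta0, epsilon=0.05, eta=0.02, num_policies=15,
               iterations=300, seed=0):
    """Minimize cost(theta) with the Kohl-Stone finite-difference policy gradient."""
    rng = np.random.default_rng(seed)
    theta = np.asarray(theta0, dtype=float).copy()
    n = theta.size
    for _ in range(iterations):
        # Each row perturbs every parameter by -epsilon, 0 or +epsilon at random.
        signs = rng.integers(-1, 2, size=(num_policies, n))
        policies = theta + epsilon * signs
        costs = np.array([cost(p) for p in policies])

        change = np.zeros(n)
        for i in range(n):
            pos = costs[signs[:, i] > 0]
            none = costs[signs[:, i] == 0]
            neg = costs[signs[:, i] < 0]
            if pos.size == 0 or none.size == 0 or neg.size == 0:
                continue  # a group is empty, so skip this component this round
            avg_pos, avg_none, avg_neg = pos.mean(), none.mean(), neg.mean()
            if avg_none < avg_pos and avg_none < avg_neg:
                change[i] = 0.0  # leaving theta[i] unchanged looks best
            else:
                change[i] = avg_pos - avg_neg  # approximate partial derivative

        norm = np.linalg.norm(change)
        if norm > 0:
            # Step of length eta in the direction of decreasing cost.
            theta -= eta * change / norm
    return theta


def quadratic_cost(theta):
    # Hypothetical stand-in for the simulator; minimum at (1.0, -0.5).
    return float(np.sum((theta - np.array([1.0, -0.5])) ** 2))


theta = kohl_stone(quadratic_cost, [0.0, 0.0])
print(theta)
```

Because every step has length η, the parameters end up hovering within a few steps of the minimum rather than settling exactly on it, one symptom of the learning-rate trade-off discussed below.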

Another advantage of the algorithm, aside from not needing an analytic gradient, is that only three parameters must be tuned. The first of these is the learning rate. If the learning rate is too low the algorithm converges very slowly, while if it is too high the algorithm may diverge, or it may skip over a better local minimum and settle in a minimum with higher cost. The usual way to choose the learning rate is to pick an arbitrary value and decrease it if divergence is observed. If the algorithm is converging very slowly, the learning rate may be increased. However, this approach may not be suitable when a user cannot adjust the algorithm's settings online, although there are methods that adapt the learning rate online automatically.

The second parameter chosen by the user is the amount by which each parameter is randomly perturbed. If the value is set too low, the differences in cost due to noise may be much greater than the differences in cost due to the perturbation. If it is set too high, the algorithm's approximation of the gradient may no longer be accurate. This value is sometimes set arbitrarily, though I performed experiments on the start-stop problem to determine which values worked best.

The last parameter that must be set is the initial policy vector. The policy gradient algorithm converges to a local minimum, and the starting policy vector determines which local

minimum the algorithm will converge to. If the problem is convex there will only be one

minimum, so the choice of starting policy will not matter, but in most practical cases the

problem will be non-convex. One solution to this problem is to restart the search from many

different locations, and choose the best minimum that is found. When the policy is found

offline this adds computation time, but is otherwise relatively simple to implement. Unfortunately, like the choice of learning rate, it is much more difficult to set when it needs to be

chosen on-line, as the cost of using different policy starting locations manifests itself in the

real world cost being optimized.
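Restarting from several locations can be sketched on a hypothetical one-dimensional cost with two local minima, $f(x) = x^4 - 3x^2 + x$. Plain gradient descent started at $x = 2$ settles in the shallower minimum near $x \approx 1.13$, while taking the best of several restarts finds the deeper minimum near $x \approx -1.30$. The analytic derivative only keeps the example short; in the start-stop setting the local search would be a derivative-free method such as Kohl-Stone.

```python
import numpy as np


def cost(x):
    # Hypothetical non-convex cost with local minima near x = -1.30 and x = 1.13.
    return x**4 - 3 * x**2 + x


def cost_derivative(x):
    return 4 * x**3 - 6 * x + 1


def local_descent(x, learning_rate=0.01, steps=2000):
    """Plain gradient descent; converges to the local minimum of its basin."""
    for _ in range(steps):
        x = x - learning_rate * cost_derivative(x)
    return x


def multi_start(starts):
    """Run a local search from each start and keep the lowest-cost result."""
    results = [local_descent(x0) for x0 in starts]
    return min(results, key=cost)


single = local_descent(2.0)
best = multi_start(np.linspace(-2.0, 2.0, 9))
print(f"single start: x={single:.3f}, cost={cost(single):.3f}")
print(f"multi-start:  x={best:.3f}, cost={cost(best):.3f}")
```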

2.2.3 Evolutionary Algorithms

Kohl-Stone and related methods are policy gradient approaches: given a policy, the next policy is chosen by taking the gradient, or some approximation of it, and moving the policy in the direction of the negative gradient. A different approach is a sampling-based evolutionary approach, which works by starting at a point, sampling around the point, and then moving in the direction of better samples. Even though this approach and Kohl-Stone both use sampling, they are somewhat different. Kohl-Stone uses sampling in order to compute a gradient, and then uses the gradient to move to a better location. The evolutionary approach uses the sampled results directly to move to a better location. As a result, the samples may be taken much further away from the current policy than the samples used in Kohl-Stone, and the algorithms move to a weighted average of the good samples, not in the direction of the gradient.

One example of an evolutionary approach is the cross-entropy method, or CEM [11]. CEM has the same basic framework as Kohl-Stone, starting at an initial policy $\theta_0$ and then iterating through updates until converging to a minimum. In addition to a vector for the initial location, it also needs an initial covariance matrix $\Sigma_0$. In each update it generates $k$ samples from the multivariate normal distribution with $\theta_t$ as the mean and $\Sigma_t$ as the covariance matrix. It then evaluates the cost of using each sampled policy and moves to the average of the best sampled results, where only the $K_e$ best results are used. It also updates the covariance matrix to match the covariance of those best sampled results.

    θ ← initialPolicyVector
    Σ ← initialCovariance
    while not finished do
        create R_1, ..., R_k where each R_i is randomly sampled from NORMAL(θ, Σ)
        determine C(R_1), C(R_2), ..., C(R_k)
        sort R_1, R_2, ..., R_k in increasing order of cost
        θ ← (1/K_e) · sum over i = 1..K_e of R_i
        Σ ← (1/K_e) · sum over i = 1..K_e of (R_i − θ)(R_i − θ)^T

Figure 2.2: Pseudo-code for CEM.
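A minimal NumPy sketch of Figure 2.2, again minimizing a hypothetical quadratic cost in place of the simulator. The sample, elite, and iteration counts are illustrative. The covariance is computed around the updated mean, and a small multiple of the identity is added to keep it positive definite; both are choices made for this sketch rather than details taken from the thesis.

```python
import numpy as np


def cem(cost, theta0, cov0, num_samples=50, num_elite=10, iterations=50, seed=0):
    """Cross-entropy method: refit a Gaussian to the lowest-cost samples."""
    rng = np.random.default_rng(seed)
    theta = np.asarray(theta0, dtype=float)
    cov = np.asarray(cov0, dtype=float)
    for _ in range(iterations):
        samples = rng.multivariate_normal(theta, cov, size=num_samples)
        costs = np.array([cost(s) for s in samples])
        elite = samples[np.argsort(costs)[:num_elite]]  # K_e lowest-cost samples
        theta = elite.mean(axis=0)
        diffs = elite - theta
        cov = diffs.T @ diffs / num_elite
        # Symmetrize and add a small ridge so the covariance stays valid.
        cov = 0.5 * (cov + cov.T) + 1e-6 * np.eye(theta.size)
    return theta, cov


def quadratic_cost(theta):
    # Hypothetical stand-in for the simulator; minimum at (1.0, -0.5).
    return float(np.sum((theta - np.array([1.0, -0.5])) ** 2))


theta, cov = cem(quadratic_cost, [0.0, 0.0], np.eye(2))
print(theta, np.diag(cov))
```

Unlike the fixed-length Kohl-Stone step, the sampling spread shrinks as the elite samples cluster, so the search narrows automatically around the minimum.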

2.2.4 Covariance Matrix Adaptation Evolution Strategy

The covariance matrix adaptation evolution strategy, or CMAES, is an improvement over the cross-entropy method [12]. It works similarly, except that the procedure is more configurable and an evolution path is maintained. The path stores information about previous updates of the covariance matrix and mean, so that the adaptation of the covariance matrix is based on the entire history of the search, producing better results. CMAES also has many more parameters that can be tweaked to produce better results for a specific problem, though all of them may also be left at sensible default settings.

CMAES and CEM are very general algorithms for function maximization, but they are used quite often in reinforcement learning because they provide a natural way of determining what information to gather next. One example is Stulp and Sigaud's use of the evolution-path adaptation of the covariance matrix from CMAES in the Path Integral Policy Improvement algorithm, or PI² [11]. The PI² algorithm has similar goals to CEM and CMAES, except that instead of aiming to maximize a function it aims to maximize the result of a trajectory. This is a very different goal from function maximization, because in trajectories earlier steps are more important than later ones. PI²'s application to trajectories makes it an important algorithm for robotics. In my case I used a standard CMAES library, because it is most natural to approach the start-stop problem as a function to be optimized.

2.3 Model Based Policy Search

Although I have been addressing approaches that use reinforcement learning to optimize the start-stop controller, an alternative approach is to first learn a model using supervised learning, and then use policy search on the model to find the optimal policy. In a supervised learning problem the user is given pairs of values $(x^{(1)}, y^{(1)}), (x^{(2)}, y^{(2)}), \ldots, (x^{(n)}, y^{(n)})$, where each $x^{(i)}$ is a vector of real values and each $y^{(i)}$ is a real value, and the goal is to predict the value of $y^{(i)}$ for a new example given only $x^{(i)}$. The system can then use a policy search algorithm to determine the policy vector $\theta$ that performs best given the predicted output $y^{(i)}$. This approach assumes that the policy chosen has no effect on the output, and that the policy chosen will not affect future states of the world. For the start-stop controller, these assumptions amount to assuming that the driver will drive the same way no matter how the start-stop controller behaves.

When $y^{(i)}$ is real valued, as it is when trying to predict the length of a car's stop, the supervised learning problem is called regression. I make use of two regression techniques in this thesis: Gaussian processes and nearest neighbors regression.

2.3.1 Gaussian Processes

The first regression method I used was Gaussian processes, a framework for nonlinear regression that gives probabilistic predictions given the inputs [7]. This often takes the form of a normal distribution $\mathcal{N}(y \mid \mu(x), \Sigma(x))$, where the value of $y$ is normally distributed with a mean and variance determined by $x$. The probabilistic prediction is helpful in cases where the uncertainty associated with the answer is useful, as it may be in the start-stop prediction problem. For example, the controller may choose to behave differently if it is relatively sure that the driver is coming to a stop 5 seconds long than when it believes the driver has a roughly equal chance of coming to a stop anywhere between 1 and 9 seconds long.

Gaussian processes work by placing a prior distribution over the functions that could represent the input-to-output mapping. This is more flexible than linear regression, a simpler form of regression that only allows linear combinations of a fixed set of basis functions applied to the input to be used to predict the output. Overfitting is avoided by using a Bayesian approach, in which smoother functions are given higher prior probability. This corresponds with the view that many functions are somewhat smooth, and that the smoother a function is, the more likely it is to explain just the underlying process creating the data and not the noise associated with the data.
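A minimal NumPy sketch of Gaussian process regression, for illustration only. It assumes a squared-exponential kernel, whose length scale controls how smooth the prior functions are, with hyperparameters fixed by hand rather than fitted; the thesis does not specify its kernel or hyperparameters here. It returns the predictive mean and variance of the latent function at each test input (the variance of a noisy observation would add `noise_var`).

```python
import numpy as np


def sq_exp_kernel(a, b, length_scale=1.0, signal_var=1.0):
    """k(x, x') = signal_var * exp(-|x - x'|^2 / (2 * length_scale^2))."""
    sq_dist = (np.sum(a**2, axis=1)[:, None] + np.sum(b**2, axis=1)[None, :]
               - 2.0 * a @ b.T)
    return signal_var * np.exp(-0.5 * np.maximum(sq_dist, 0.0) / length_scale**2)


def gp_predict(x_train, y_train, x_test, length_scale=1.0, signal_var=1.0,
               noise_var=1e-2):
    """Posterior mean and variance of the latent function at x_test."""
    K = sq_exp_kernel(x_train, x_train, length_scale, signal_var)
    K += noise_var * np.eye(len(x_train))  # observation noise on the diagonal
    K_s = sq_exp_kernel(x_train, x_test, length_scale, signal_var)
    K_ss = sq_exp_kernel(x_test, x_test, length_scale, signal_var)

    L = np.linalg.cholesky(K)  # K = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = np.diag(K_ss) - np.sum(v**2, axis=0)
    return mean, np.maximum(var, 0.0)


# Toy data: noise-free samples of sin(x) on [0, 5].
x_train = np.linspace(0.0, 5.0, 20)[:, None]
y_train = np.sin(x_train).ravel()
x_test = np.array([[2.5], [10.0]])
mean, var = gp_predict(x_train, y_train, x_test)
print(mean, var)
```

The variance is small at $x = 2.5$, inside the training data, and returns to the prior value of about 1 at $x = 10$, far from any data; this is the kind of uncertainty information the start-stop example above could exploit.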

Once the Gaussian process has been used to predict the value of $y^{(i)}$, the optimal value for the policy parameter $\theta$ can be found. This can be done with one of the search methods described previously, as long as the reward of using a specified policy can be calculated for any value of $y^{(i)}$. This may present problems if a decision must be made quickly and it is not easy to search over the policy space, because a new policy must be searched for every time a decision is made. If a near-optimal policy can be found quickly this may be a good approach, because the policy can be dynamically determined for each decision that must be made.
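A sketch of this predict-then-search step, under loudly hypothetical assumptions: the policy is a single parameter $\theta$, the number of seconds the controller waits after the car stops before shutting the engine off (not Ford's five-parameter black box), and the cost of a stop is the fuel burned idling plus a fixed restart cost if the engine was shut off, with made-up constants and no annoyance term. Given samples of the stop length $y$ drawn from a predictive distribution, such as a Gaussian process would provide, the sketch grid-searches $\theta$ for the lowest expected cost.

```python
import numpy as np

IDLE_FUEL_RATE = 0.25  # hypothetical fuel per second of idling
RESTART_COST = 1.0     # hypothetical fuel-equivalent cost of one restart


def stop_cost(theta, y):
    """Cost of waiting theta seconds before shutting off, for stops lasting y seconds."""
    y = np.asarray(y, dtype=float)
    engine_shut_off = y > theta
    idle_time = np.where(engine_shut_off, theta, y)
    return IDLE_FUEL_RATE * idle_time + RESTART_COST * engine_shut_off


def best_delay(y_samples, candidates):
    """Choose the delay that minimizes the average cost over predicted stop lengths."""
    expected = [stop_cost(theta, y_samples).mean() for theta in candidates]
    return candidates[int(np.argmin(expected))]


rng = np.random.default_rng(0)
candidates = np.arange(0.0, 11.0)  # delays of 0, 1, ..., 10 seconds
long_stop = rng.normal(8.0, 0.5, size=1000)   # confident prediction of a long stop
short_stop = rng.normal(2.0, 0.5, size=1000)  # confident prediction of a short stop
print(best_delay(long_stop, candidates), best_delay(short_stop, candidates))
```

With a confident prediction of an 8-second stop the sketch shuts the engine off immediately ($\theta = 0$), while with a confident prediction of a 2-second stop it picks a delay longer than the predicted stop, which in effect keeps the engine running.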

2.3.2

Nearest Neighbors

The second regression technique I used was nearest neighbors regression. Given some point $x^{(i)}$, this technique predicts the corresponding output $y^{(i)}$ by averaging the outputs of the training examples closest to $x^{(i)}$. The number of training examples to average is a parameter of the algorithm and can be chosen using cross-validation. Unlike Gaussian processes, nearest neighbors regression gives a point estimate instead of a probabilistic prediction. The advantage of nearest neighbors regression is that it is simple to implement and makes no distributional assumptions. That makes it ideal as a simple check that a more sophisticated approach, such as Gaussian processes, is not making errors due to incorrect distributional assumptions or a poor choice of hyperparameters. A policy can be found as soon as a prediction for $y^{(i)}$ is made, using search methods similar to those described earlier.
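Nearest neighbors regression, with the number of neighbors chosen by cross-validation, fits in a few lines. This is a minimal sketch. It assumes Euclidean distance, squared-error loss, and leave-one-out cross-validation; the thesis does not specify any of these. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Example = Tuple[Tuple[float, ...], float]  # (input vector, output)


def knn_predict(train: Sequence[Example], x: Sequence[float], k: int) -> float:
    """Average the outputs of the k training examples closest to x."""
    # sorted() is stable, so distance ties keep the training-set order
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], x))[:k]
    return sum(y for _, y in nearest) / len(nearest)


def choose_k(train: List[Example], candidates: Sequence[int]) -> int:
    """Pick k by leave-one-out cross-validation on squared error."""
    best_k, best_err = candidates[0], math.inf
    for k in candidates:
        err = 0.0
        for i, (x, y) in enumerate(train):
            held_out = train[:i] + train[i + 1:]
            err += (knn_predict(held_out, x, k) - y) ** 2
        if err < best_err:
            best_k, best_err = k, err
    return best_k
```

On data where $y = x$ for $x = 0, \ldots, 9$, the procedure picks $k = 2$ from the candidates 1, 2, and 3. With two neighbors, each interior point is predicted from its left and right neighbors, which average exactly to the held-out value.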

2.4 Related Work

I will now discuss examples of machine learning being used for energy conservation. Machine learning is useful for energy conservation because, instead of creating simple systems that must be designed for the entire space of users, or even a subset of users, the system can be adapted to the current user. This can enable higher energy savings than are possible with systems that are not specific to the user. I will discuss four examples of machine learning for energy conservation, to illustrate where it can be applied and what methods are used: the work by Theocharous et al. on conserving laptop battery using supervised learning [6]; Kolter, Jackowski, and Tedrake's work on optimizing a wind turbine's performance using reinforcement learning [8]; the work by Dalamagkidis et al. on minimizing the power consumption of a building [9]; and lastly, the work of Bodik et al. on creating an adaptive data center to minimize power consumption [10].

2.4.1 Machine Learning for Adaptive Power Management

One area where machine learning is often used for power conservation is minimizing the power used by a computer. By turning off components of the computer that have not been used recently, power can be conserved, provided the user does not begin using the components again shortly thereafter. Theocharous et al. applied supervised learning to this area, using a method similar to the model-based policy approach described earlier [6]. A key difference was that their approach was classification-based: the goal was to classify whether the current state was a suitable time to turn the components of the laptop off. Another notable difference from the approach I described is that they created custom contexts and trained a separate classifier for each context. This is an interesting area to explore, especially if the contexts can be determined automatically. One method for determining these contexts is a Hidden Markov Model, which I investigated, but which was too complex for Ford to implement in their vehicles.

2.4.2 Design, Analysis, and Learning Control of a Fully Actuated Micro Wind Turbine

Another interesting application of machine learning to energy maximization is the work of Kolter et al. on maximizing the energy production of a wind turbine [8]. This can be treated similarly to energy conservation, except that instead of minimizing the energy spent, the goal is to maximize the energy gained by choosing the settings of the wind turbine. Their work uses a sampling-based approach to approximate a gradient. This approach is more complex than the Kohl-Stone algorithm: they form a second-order approximation of the objective function in a small area around the current policy, known as the trust region. They then solve the problem exactly within the trust region and repeat. This approach takes advantage of second-order information about the function, with the aim of faster convergence of the policy parameters. The approach has two disadvantages. It is more complex, requiring additional time for semidefinite programming to solve for the optimal solution in the trust region. It also needs more samples to form the second-order approximation. In their case the calculations could be done before the wind turbine was deployed, so the increased computation time was not an issue. In our case we would like the approach to be feasible online. It is unclear whether enough computational power will be allocated to our system in the car to perform the additional computation their approach requires.
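The following is a deliberately simplified one-dimensional sketch of a second-order model inside a trust region. It is not Kolter et al.'s algorithm. Their method works in several dimensions and uses semidefinite programming. This sketch instead fits a quadratic by least squares to costs sampled around the current parameter $\theta$ and minimizes that quadratic over $[\theta - \Delta, \theta + \Delta]$. It is written as cost minimization, so the sign is flipped relative to maximizing energy production. All names are hypothetical.

```python
import random
from typing import Callable, List, Sequence


def _det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_quadratic(xs: Sequence[float], ys: Sequence[float]) -> List[float]:
    """Least-squares coefficients (a, b, c) of y = a + b*x + c*x**2."""
    s = [sum(x ** p for x in xs) for p in range(5)]
    t = [sum(y * x ** p for x, y in zip(xs, ys)) for p in range(3)]
    normal = [[s[0], s[1], s[2]], [s[1], s[2], s[3]], [s[2], s[3], s[4]]]
    d = _det3(normal)
    coeffs = []
    for col in range(3):  # Cramer's rule on the normal equations
        m = [row[:] for row in normal]
        for r in range(3):
            m[r][col] = t[r]
        coeffs.append(_det3(m) / d)
    return coeffs


def trust_region_step(cost: Callable[[float], float], theta: float, radius: float,
                      num_samples: int, rng: random.Random) -> float:
    """Sample costs near theta, fit a quadratic model, minimize it in the region."""
    xs = [theta + rng.uniform(-radius, radius) for _ in range(num_samples)]
    a, b, c = fit_quadratic(xs, [cost(x) for x in xs])
    lo, hi = theta - radius, theta + radius

    def model(x: float) -> float:
        return a + b * x + c * x * x

    candidates = [lo, hi]
    if c > 0 and lo <= -b / (2 * c) <= hi:
        candidates.append(-b / (2 * c))  # interior minimum of a convex model
    return min(candidates, key=model)
```

The cost samples are the expense this approach trades for second-order information. Each step needs enough of them to determine the model, which in higher dimensions grows with the number of quadratic terms.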

2.4.3 Reinforcement learning for energy conservation in buildings

Another example of reinforcement learning being used to minimize energy consumption is in buildings. Dalamagkidis et al. sought to minimize the power consumption of a building subject to an annoyance penalty that was paid if the temperature in the building was too cold or too hot, or if the air quality inside the building was not good enough [9]. This is very similar to the start-stop problem, where I am seeking a policy that minimizes both the fuel cost and the annoyance the driver feels. Their approach was to use temporal-difference learning, a method for solving reinforcement learning problems. Using this approach, they were able to learn a policy whose performance was close to that of a hand-tuned policy after 4 years of simulated time.

2.4.4 Adaptive Data Centers

The last example of machine learning being used to minimize power consumption is in data centers. Data centers have machines that can be turned off depending on the workload expected in the near future. The goal is to meet the demands of the data center's users while keeping as many machines turned off as possible. Bodik et al. used linear regression to predict the workload that would be demanded in the next two minutes [10]. Based on this prediction, the parameters of a policy vector were changed. This resulted in 75% power savings compared with not turning any machines off, with service-level violations in only 0.005% of cases.

2.5 Conclusion

There are a variety of machine learning approaches that can be used to find the optimal start-stop controller. This chapter summarized the techniques that I applied to the problem. I also briefly summarized other areas where machine learning has been used to conserve energy, and discussed the advantages and disadvantages of the approaches used. In the next two chapters I will study the results of applying these techniques to two problems: determining a policy that performs well for a population of drivers, and finding a policy that adapts to the driver of the car in which it is used.

Chapter 3

Learning a Policy for a Population of Drivers

In this chapter I will discuss optimizing a single policy for a population of drivers in order to minimize the average cost. This is similar to the approach Ford currently takes, except that their policy is hand-tuned to work well on average, rather than optimized with a machine learning or statistical method. This approach has two benefits. The policy can be examined before it is used in vehicles to check that it is reasonable. No additional computation is required inside the car's computer beyond computing the actions to take under the policy. The disadvantage of this approach is that a single policy used for all drivers will probably use more energy than policies specialized to each driver.

3.1 Problem Overview

In this approach the goal is to select a start-stop controller that performs best across all users. Formally, the goal is to pick a parameter vector $\theta$ for the policy that minimizes

$$\mathbb{E}[C \mid \theta] \qquad (3.1)$$

over all drivers in the population, where the cost $C$ is composed of three factors,

$$C = c_1 + c_2 + c_3. \qquad (3.2)$$

The first factor, $c_1$, represents the fuel lost from keeping the car's engine running while the car is stopped. It is equal to the time the car has spent stopped minus the time the combustion engine was turned off during a driving session. The second factor, $c_2$, represents the fixed energy cost of restarting the car's engine. It is equal to 2 times the number of times the engine has been restarted during a period of driving; the cost of 2 per restart was specified by Ford. The last factor, $c_3$, is the driver annoyance penalty. It is paid based on the length of time between the driver pressing the accelerator pedal and the car actually accelerating. Each time the driver accelerates, $c_3$ is incremented by

$$10 \times \max(0,\ \text{restartDelay} - 0.25),$$

where restartDelay is the time elapsed between the driver pressing the accelerator pedal and the engine turning back on.
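The cost of one driving session follows directly from these definitions. This is a minimal Python sketch. The function and constant names are hypothetical, and the inputs are assumed to be already aggregated per session. Times are taken to be in seconds, which matches reading $c_1$ as seconds of idling.

```python
from typing import Sequence

RESTART_COST = 2.0    # c2 charge per engine restart (value specified by Ford)
DELAY_GRACE_S = 0.25  # restart delays up to 0.25 s incur no annoyance penalty
DELAY_WEIGHT = 10.0   # c3 multiplier on the delay beyond the grace period


def session_cost(time_stopped_s: float, time_engine_off_s: float,
                 num_restarts: int, restart_delays_s: Sequence[float]) -> float:
    """C = c1 + c2 + c3 for one driving session."""
    c1 = time_stopped_s - time_engine_off_s  # time spent idling while stopped
    c2 = RESTART_COST * num_restarts
    c3 = sum(DELAY_WEIGHT * max(0.0, d - DELAY_GRACE_S) for d in restart_delays_s)
    return c1 + c2 + c3
```

For example, 100 s stopped with the engine off for 80 s, 3 restarts, and restart delays of 0.1, 0.5, and 0.3 s gives $c_1 = 20$, $c_2 = 6$, and $c_3 = 10 \times (0 + 0.25 + 0.05) = 3$, for a total of 29.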

The goal is to minimize the expected cost $\mathbb{E}[C \mid \theta]$ by choosing an appropriate parameterized policy $\pi(\theta)$. Ford uses a rule-based policy, a hypothetical example of which is shown in Figure 3.1. Here the parameter vector corresponds to values in the rules.

    if CarStopped then
        if EngineOn and BrakePedal > θ0 then
            TURN ENGINE OFF;
        if EngineOn and BrakePedal > θ1 and AverageSpeed < θ2 then
            TURN ENGINE OFF;
        if EngineOff and BrakePedal < θ3 then
            TURN ENGINE ON;
        if EngineOff and BrakePedal < θ4 and TimeStopped > 20 then
            TURN ENGINE ON;

Figure 3.1: Hypothetical rule-based policy

This policy is called 100 times a second during a stop to determine how to proceed. There are two important things to note about this rule-based policy. First, it is an example of what the policy might look like, not the actual policy. Ford gave us an outline similar to this, but did not tell us what the actual rules are, so we do not know what the parameters correspond to. Second, the controller is responsible both for turning the engine off when the car comes to a stop and for turning the engine back on when the user is about to accelerate. This second part helps to minimize $c_3$ in the cost function. It may also lead to more complicated behavior: the controller may turn the engine off and on multiple times during a stop if it believes the car is about to accelerate but the car does not.
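The 100 Hz control loop can be made concrete by transcribing the hypothetical policy of Figure 3.1 into Python. This is only a sketch of that illustrative figure, not Ford's controller. It makes several assumptions: the class and function names are hypothetical, the car is assumed to be stopped for the whole trace, and the rules are evaluated against the engine state at the start of each 10 ms tick. The constant 20 is copied from the figure, and its units are assumed to be seconds. The loop also counts restarts, which feed $c_2$.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class StopState:
    engine_on: bool
    brake_pedal: float
    average_speed: float
    time_stopped_s: float


def rule_based_policy(s: StopState, theta: Sequence[float]) -> bool:
    """Return True if the engine should be on after this tick (car stopped)."""
    if s.engine_on:
        turn_off = (s.brake_pedal > theta[0]
                    or (s.brake_pedal > theta[1] and s.average_speed < theta[2]))
        return not turn_off
    turn_on = (s.brake_pedal < theta[3]
               or (s.brake_pedal < theta[4] and s.time_stopped_s > 20))
    return turn_on


def run_stop(brake_trace: Sequence[float], average_speed: float,
             theta: Sequence[float], hz: int = 100) -> Tuple[float, int]:
    """Call the policy once per sample over a stop.

    Returns (seconds with the engine off, number of restarts)."""
    dt = 1.0 / hz
    engine_on, off_time, restarts = True, 0.0, 0
    for i, brake in enumerate(brake_trace):
        state = StopState(engine_on, brake, average_speed, i * dt)
        new_on = rule_based_policy(state, theta)
        if new_on and not engine_on:
            restarts += 1  # each restart costs 2 in c2
        engine_on = new_on
        if not engine_on:
            off_time += dt
    return off_time, restarts
```

With $\theta = (0.5, 0.3, 5.0, 0.2, 0.4)$, consider a stop with the brake held at 0.8 for 2 s and then released. The engine goes off on the first tick and comes back on once, when the pedal drops below $\theta_3$, giving 2 s of engine-off time and one restart.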

Given the black-box nature of the policy, we are not allowed to add or remove parameters, and may only change the vector $\theta$ to achieve the best results. Our inputs from any driver are three data traces: the vehicle's speed, the accelerator pedal pressure, and the brake pedal pressure, each sampled at 100 Hz. Figure 3.2 shows an example of the input data.

3.2 Simulator Model

To help determine the best policy, Ford gave us a small amount of data. It consisted of under 5 hours of driving from a driver on an Ann Arbor highway loop and about an hour of data from drivers in stop-and-go traffic. This data was insufficient to run the experiments we hoped to perform, so to create more data similar to Ford's I built a simulator. The simulator is based on a state machine, which is shown in Figure 3.3.

The simulator has three states: Stopped, Creeping, and Driving. In the Stopped state the driver has come to a stop. In the Driving state the driver is driving at a constant speed, with Gaussian noise added. The Creeping state represents the driver moving at a low speed with their foot on neither pedal. The driver stays in their current state for a predetermined amount of time. The driver then transitions to another state by either accelerating or decelerating at a relatively constant rate.

Figure 3.2: A slice of the data (Ford Data; horizontal axes: Time (seconds)). This graph shows a driver coming to a stop, and then accelerating out of it.

The simulator is parameterized to allow the creation of many different drivers. For example, the average stopping time is a parameter of the simulator, as is the maximum speed a driver will accelerate to after being stopped. There are 40 parameters, allowing me to create a population of drivers with varying behavior.
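The three-state model can be sketched as a small generator of 100 Hz speed traces. This Python sketch is illustrative only and rests on several assumptions:

- `DriverProfile` holds a hypothetical handful of parameters standing in for the simulator's 40.
- Holding times are drawn from an exponential distribution; the text only says they are set by the profile.
- Every state is assumed to connect to both other states.
- Ramps between states use a single constant acceleration.

Pedal pressures are not generated here, since a separate program derives them from the speed trace.

```python
import random
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DriverProfile:
    """Hypothetical subset of the simulator's per-driver parameters."""
    mean_stop_s: float = 10.0      # average stopping time
    mean_creep_s: float = 4.0
    mean_drive_s: float = 40.0
    creep_speed_kmh: float = 6.0
    max_speed_kmh: float = 60.0    # top speed reached after a stop
    speed_noise_kmh: float = 1.0   # std. dev. of Gaussian noise while Driving
    accel_kmh_per_s: float = 8.0   # constant rate when accelerating/decelerating


# Assumed: each state can move to either of the other two states.
TRANSITIONS: Dict[str, List[str]] = {
    "Stopped": ["Creeping", "Driving"],
    "Creeping": ["Stopped", "Driving"],
    "Driving": ["Stopped", "Creeping"],
}


def simulate_speed_trace(p: DriverProfile, duration_s: float,
                         rng: random.Random, hz: int = 100) -> List[float]:
    """Speed samples (km/h) at `hz` from the three-state model."""
    n_total = int(duration_s * hz)
    step = p.accel_kmh_per_s / hz  # speed change per sample while ramping
    mean_hold = {"Stopped": p.mean_stop_s, "Creeping": p.mean_creep_s,
                 "Driving": p.mean_drive_s}
    trace: List[float] = []
    state, speed = "Stopped", 0.0
    while len(trace) < n_total:
        if state == "Stopped":
            target = 0.0
        elif state == "Creeping":
            target = p.creep_speed_kmh
        else:
            target = rng.uniform(0.5, 1.0) * p.max_speed_kmh
        # Accelerate or decelerate toward the new state's speed at a constant rate.
        while abs(target - speed) > step and len(trace) < n_total:
            speed += step if target > speed else -step
            trace.append(speed)
        speed = target
        # Hold the state for a random duration (exponential: an assumption).
        hold = int(rng.expovariate(1.0 / mean_hold[state]) * hz)
        for _ in range(min(hold, n_total - len(trace))):
            noise = rng.gauss(0.0, p.speed_noise_kmh) if state == "Driving" else 0.0
            trace.append(max(0.0, target + noise))
        state = rng.choice(TRANSITIONS[state])
    return trace
```

Varying the fields of `DriverProfile` plays the role of the simulator's parameters in producing drivers with different stopping and speed behavior.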

The main weakness of the simulator is the state machine model. It is unlikely that a driver's actions are determined only by their current state. For example, a driver on the highway would be expected to have a pattern of actions different from a driver in stop-and-go traffic in an urban environment. To alleviate this issue, and to help me conduct my experiments, I created 2000 driver profiles. The parameters of 1000 of them were centered around those of a driver whose behavior matched the Ann Arbor data. The other 1000 were centered around a simulated driver who matched the stop-and-go data. Every driver uses four randomly picked driver profiles: one 50% of the time, two others 20% of the time each, and the last 10% of the time. This lets a driver's behavior depend on their recent actions, as a driver will use a profile for a period of time before switching to another profile.

Figure 3.3: The state machine used by the simulator, with states Stopped, Creeping, and Driving connected by Accelerating and Decelerating transitions. Circles represent states and arrows represent possible transitions between states.
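The construction of the driver population can be sketched as follows. This is a minimal Python sketch under these assumptions:

- A profile is a plain list of parameter values.
- "Centered around" is modelled as multiplicative Gaussian jitter around a base profile.
- A driver picks one of its four profiles for each driving segment, with weights 0.5, 0.2, 0.2, and 0.1.

The base vectors, jitter size, and function names are hypothetical.

```python
import random
from typing import List, Sequence

# Share of driving segments given to each of a driver's four profiles.
PROFILE_WEIGHTS = (0.5, 0.2, 0.2, 0.1)


def make_profile_pool(highway_base: Sequence[float], stop_and_go_base: Sequence[float],
                      n_each: int, rel_jitter: float,
                      rng: random.Random) -> List[List[float]]:
    """n_each profiles centered on each base vector (multiplicative Gaussian jitter)."""
    def jitter(base: Sequence[float]) -> List[float]:
        return [v * (1.0 + rng.gauss(0.0, rel_jitter)) for v in base]
    return ([jitter(highway_base) for _ in range(n_each)]
            + [jitter(stop_and_go_base) for _ in range(n_each)])


def make_driver(pool: Sequence[List[float]], rng: random.Random) -> List[List[float]]:
    """A driver is four profiles drawn at random, without replacement, from the pool."""
    return rng.sample(list(pool), len(PROFILE_WEIGHTS))


def profile_schedule(num_segments: int, rng: random.Random) -> List[int]:
    """Index of the driver's profile used for each successive driving segment."""
    return rng.choices(range(len(PROFILE_WEIGHTS)), weights=PROFILE_WEIGHTS,
                       k=num_segments)
```

A driver's trace is then generated segment by segment with the scheduled profile. Over many segments, about half use the driver's first profile.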

3.2.1 Simulator Output

The simulator that I have described outputs the speed trace of the vehicle. After the speed trace is generated, a second program takes the speed values and generates appropriate values for the brake and accelerator pedal pressures. Ford created this program in Matlab and I ported it to Java. Together, the two programs produce a data trace in a format similar to data from the real car, giving values for all three signals at 100 Hz for a drive cycle. The only difference in output format is that the synthetic data never has missing values, while the data from the real car occasionally lacks values for certain time steps. This occurs rarely and only causes a millisecond of data to be missing at a time, so it was not modelled in the simulator.

The highway driving data was given to me first, so I created the highway driver profiles first. Figures 3.4, 3.5, and 3.6 show that I was able to match coarse statistics from the highway driving data.

Figure 3.4: The percentage of the time the driver spends at different speeds (horizontal axis: speed in km/h). On the left is real data from the Ann Arbor highway loop, and on the right is simulated data with a profile designed to match the highway loops.

Figures 3.4, 3.5, and 3.6 summarize traces generated from the real data and the simulated data. Aside from analyzing aggregate statistics computed from the traces, I analyzed small subsections of traces to ensure that individual outputs also behaved similarly. I did this by examining specific events, such as a driver coming to a stop and then accelerating out of it. I checked that the traces the simulator produced for these events behaved similarly to the real data.

Figure 3.5: The percentage of the time the driver spends with different brake pressures when at a stop (horizontal axis: brake pressure in NM). On the left is real data from the Ann Arbor highway loop, and on the right is simulated data with a profile designed to match the highway loops.

About six months later I was given the stop-and-go data. This data comprised eight smaller driving episodes from stop-and-go traffic. I created a driving profile, different from the highway profile, whose summary statistics were similar to those of the data, as shown in the following table.

                                  Sim - Highway   Sim - Stop-And-Go   Ford Stop-And-Go
    Average Speed (km/h)          23.2            33.8                33.5
    Average Stop Time (s)         19.24           10.32               9.72
    Average Time Between Stops    102.78          41.48               40.83
    % of Segments below 10 km/h   4               76                  77

A driving segment is defined as the period of driving between instances of the vehicle coming to a halt. The statistics show that the profile behaves similarly to the real stop-and-go data: the vehicle stops for shorter lengths of time, and often does not accelerate to a high speed between stops. Combined with the highway simulator profile, it enables me to create data similar to all the data that Ford has given me.

The largest concern with the simulator is that aspects of real-world behavior may not be modelled. One example is that the simulated driver does not change their behavior based on the start-stop policy in use. Without implementing a controller in a vehicle, it is hard to know whether drivers will change their actions in response to different controller behavior. Because the Ford data was collected from a vehicle using a static controller, I have no evidence that drivers change their behavior, or of how they would change it if they did. Therefore, I opted to use simulated drivers that act the same regardless of the policy in place. There may be other areas where the simulated behavior does not match actual behavior, but there is no apparent reason why the methods described would not work just as well with real data.

Figure 3.6: A histogram showing the length of stops in a trace (horizontal axis: length of stop in seconds). On the left is real data from the Ann Arbor highway loop, and on the right is simulated data with a profile designed to match the highway loops. The right trace has a much larger number of stops, but the distribution of stopping times is similar.

The simulator will be used for all tests described in the following two chapters. It allowed me to quickly test how the algorithms performed using hours of synthetic data, and should help avoid overfitting to the small amount of data provided by Ford.

3.3 Optimizing For a Population

I will now describe the procedures used to optimize for a population, as well as the results obtained from the experiments. To optimize for a population I used a modified version of the Kohl-Stone policy gradient algorithm described in Section 2.2.2. I chose this algorithm rather than an evolutionary algorithm for two reasons: Kohl-Stone is simple, and the runtime of the algorithm was not a concern because it did not need to run on a car's computer.

The modified algorithm is the same as the algorithm described in Section 2.2.2, except for how $C(R_1), \ldots, C(R_t)$ are determined at each iteration. These are the costs of using policies that are perturbations of the current policy $\theta$. In the original Kohl-Stone algorithm, each is evaluated with a completely new trial using $R_i$ as the policy, because differences in the policy may affect the actions of the driver. In my modified algorithm I evaluated the cost of every perturbation on a single drive trace, generated from a driver chosen at random from the population. I did this to reduce both the time needed to run the algorithm and the amount of noise in the results.

Most of the runtime of the algorithm is spent creating the drive traces, and I create 50 perturbations of $\theta$ for each drive trace. Creating only one drive trace per iteration therefore speeds up the algorithm by a factor of almost 50. Using only one drive trace also reduces the noise in the costs. If I selected a new random driver for each perturbation, the differences in cost would mainly arise from differences between the drive traces rather than differences between the parameters. For example, certain drive traces, such as one with very few stops, will always have a low cost. Conversely, a drive trace with many short stops will always have a relatively high cost no matter what the controller's policy is. Using a new drive trace for each perturbation would require more perturbations to reduce the noise, extending the runtime of the algorithm even further.

Using one random trace to evaluate all perturbations is an example of a variance-reduction technique that is also employed by the PEGASUS algorithm. PEGASUS is another policy search method. It searches for the policy that performs best on random trials that are made deterministic by fixing the random number generation [13].

The main negative side effect of using only one drive trace per iteration is that the modified algorithm assumes small perturbations to the start-stop controller have no effect on driver behavior. This is true for the simulator, because the start-stop controller never has any effect on the driving behavior. It is probably true in real life as well. I would expect drivers to change their behavior with drastically different controllers, such as a controller that always turned the engine off versus one that never turned it off. However, because the algorithm only needs to approximate a gradient, the policies measured are similar enough that drivers might not even notice the difference. This does mean that the learning rate may need to be relatively small, so that the change at any iteration does not cause drivers to drastically change their behavior.

The other negative side effect is that on each iteration the value of $\theta$ changes based on the driving trace drawn from one driver. This can move $\theta$ away from the optimum if that driver is far from the mean of the population. This should not be an issue over time, as I expect the value of $\theta$ to approach the population mean.
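The modified algorithm can be sketched in Python. This is a minimal sketch under stated assumptions. It uses the parameter-wise averaging and fixed-length normalized step of the original Kohl-Stone algorithm, rewritten for cost minimization. The names `kohl_stone_fixed_trace`, `sample_trace`, and `cost` are hypothetical stand-ins for the simulator and the cost evaluation. The modification described above shows up as one sampled trace per iteration, on which every perturbation is scored. The defaults follow the values reported in Section 3.3.1: perturbations of ±0.25, a learning rate of 0.025, 50 perturbations, and 200 iterations. Clipping $\theta$ to [0, 1] after each step is an added assumption based on the normalized parameters.

```python
import math
import random
from typing import Any, Callable, List, Sequence


def kohl_stone_fixed_trace(
    theta0: Sequence[float],
    sample_trace: Callable[[random.Random], Any],
    cost: Callable[[Sequence[float], Any], float],
    iterations: int = 200,
    num_perturbations: int = 50,
    epsilon: float = 0.25,
    step_size: float = 0.025,
    seed: int = 0,
) -> List[float]:
    """Minimize cost(theta, trace) with Kohl-Stone, one shared trace per iteration."""
    rng = random.Random(seed)
    theta = list(theta0)
    dims = len(theta)
    for _ in range(iterations):
        # One drive trace per iteration: every perturbation is scored on it,
        # so cost differences reflect the parameters rather than the trace.
        trace = sample_trace(rng)
        signs = [[rng.choice((-1, 0, 1)) for _ in range(dims)]
                 for _ in range(num_perturbations)]
        costs = [cost([t + s * epsilon for t, s in zip(theta, row)], trace)
                 for row in signs]
        adjustment = [0.0] * dims
        for n in range(dims):
            groups = {-1: [], 0: [], 1: []}
            for row, c in zip(signs, costs):
                groups[row[n]].append(c)
            if not all(groups.values()):
                continue  # some group is empty: no estimate for this parameter
            avg = {k: sum(v) / len(v) for k, v in groups.items()}
            if avg[0] < avg[1] and avg[0] < avg[-1]:
                continue  # leaving this parameter unperturbed scored best
            adjustment[n] = avg[1] - avg[-1]  # > 0: increasing it raises cost
        norm = math.sqrt(sum(a * a for a in adjustment))
        if norm > 0.0:
            # Fixed-length step against the estimated cost gradient,
            # then clip to the normalized parameter range [0, 1].
            theta = [min(1.0, max(0.0, t - step_size * a / norm))
                     for t, a in zip(theta, adjustment)]
    return theta
```

The toy usage below adds a large random per-trace offset to a quadratic cost, mimicking an easy or hard drive trace. Because every perturbation in an iteration shares that offset, it cancels in the comparisons and the parameters still converge.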

3.3.1 Experimental Results

For my experiment I used the modified version of the Kohl-Stone algorithm. I normalized each of the parameters in the controller to the range [0, 1], and set a learning rate of 0.025. For each iteration of the algorithm I created 50 perturbations, perturbing each value randomly by either -0.25, 0, or 0.25. Each driving trace used was 6 hours long.

I performed 35 trials of the Kohl-Stone algorithm, each running from a random starting location for 200 iterations. After 200 iterations I measured the performance of each trial on 25 random drivers drawn from the population. Figure 3.7 shows the average cost of all 35 trials over time, demonstrating that the algorithm had stopped improving many iterations before the 200-iteration limit was reached. I chose the best-performing parameters, and then measured their performance on another sample of 25 random drivers to get the expected cost of a 6-hour driving segment.

I found that the best parameters had an average cost of 1329, while Ford's original parameters had a cost of 1810, an improvement of 481, or 27%. This cost saving is equivalent to the car's engine being off instead of idling for 80 seconds every hour. The actual savings, however, may arise from any combination of the annoyance and energy factors discussed previously.

Figure 3.7: A graph of the average cost of the 35 trials over time (horizontal axis: iterations).
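The headline numbers follow from simple arithmetic on the reported costs. The text treats one unit of cost as one second of idling when it converts the saving into 80 seconds per hour, and this sketch does the same. The function name is hypothetical.

```python
def improvement(baseline_cost: float, optimized_cost: float, hours: float):
    """Return (absolute saving, fractional saving, saving per hour of driving)."""
    saved = baseline_cost - optimized_cost
    return saved, saved / baseline_cost, saved / hours


saved, fraction, per_hour = improvement(1810, 1329, hours=6)
print(f"saved {saved}, {fraction:.0%} improvement, {per_hour:.0f} s of idling per hour")
# prints: saved 481, 27% improvement, 80 s of idling per hour
```

The fractional saving is 481 / 1810 ≈ 0.266, which rounds to 27%. The same calculation applies to any pair of policy costs, such as the totals on the real data below.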

I also compared the results of the policy learned by my algorithm with the results of Ford's policy on the real data from Ford. There was not much data from Ford, so this comparison is less informative than the comparison on the simulated data. Ideally, though, the policy should also perform better than Ford's policy on the real data.

    Data Set               Ford Policy   Optimized Policy   Percentage Improvement
    I-94 Data              56.84         55.61              2%
    Ann Arbor Loop Data    199.33        177.75             11%
    W 154 Data             67.61         63.92              5%
    Urban Profile 1        36.1          34.05              6%
    Urban Profile 2        50.35         47.2               6%
    Urban Profile 3        698.12        634.95             9%
    Urban Profile 4        115.72        111.57             4%
    Urban Profile 5        116.02        100.53             13%
    Urban Profile 6        64.45         57.32              11%
    Urban Profile 7        62.83         53.9               14%
    Total                  1467.37       1336.8             9%

The optimized policy does not improve on the Ford policy as much as it did on the simulated data. It still outperforms Ford's policy by 9% overall, and it outperforms Ford's policy on every data set. Beyond the small amount of data available, there are two caveats to these results. First, no brake pedal or accelerator pedal data were available for most of the samples, so those values were generated from the speed data using the program Ford gave me. It is unknown how accurate these generated values are. Second, there is no way of knowing whether using different controllers would have changed the actions of the drivers in the experiments. This is impossible to test without implementing a modified controller in real vehicles. These factors may cause the optimized controller to perform relatively worse or better than these results suggest.

3.3.2 Optimizing For a Subset of a Larger Population

In the previous section I demonstrated that policy search can produce a policy that performs better than the hand-tuned policy created by Ford. The rest of my thesis will discuss methods of improving on this policy.

One way to improve on a policy generated for the entire population of drivers is to create separate policies for subsets of the population. This is beneficial in two ways.

First, if Ford can subdivide the population ahead of time, they can install different policies in different types of vehicles. For instance, drivers in Europe and the United States might behave differently, or drivers of compact cars and pickup trucks might have different stopping behavior. If the populations can be identified ahead of time and data can be collected from each population, a different policy can be installed for each set of drivers.

Second, optimizing for a smaller population might also be useful if the car can classify the driver online as driving in a certain way. If the car's computer can classify the driver's style, it can swap in the policy that works best for the situation. For instance, drivers on the highway and drivers in stop-and-go traffic in an urban environment might behave very differently and have different optimal policies. It should be possible to obtain better results by using a policy optimized for a specific pattern of driving when the driver's style fits that pattern.
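Online policy swapping can be sketched with the statistic that best separated the two simulator profiles in the earlier table: the share of driving segments below 10 km/h (4% for highway, 76 to 77% for stop-and-go). This is a hypothetical Python sketch and several choices are assumptions:

- A segment counts as "below 10 km/h" if its top speed stays under 10 km/h.
- A trace with no moving samples is treated as stop-and-go.
- The 0.5 threshold sits between the two profiles' values.
- The function names and the policy dictionary are made up for illustration.

```python
from typing import Dict, List, Sequence, TypeVar

Policy = TypeVar("Policy")


def split_segments(speeds: Sequence[float]) -> List[List[float]]:
    """Split a speed trace into driving segments separated by stops (speed 0)."""
    segments: List[List[float]] = []
    current: List[float] = []
    for v in speeds:
        if v <= 0.0:
            if current:
                segments.append(current)
                current = []
        else:
            current.append(v)
    if current:
        segments.append(current)
    return segments


def classify_mode(speeds: Sequence[float], slow_kmh: float = 10.0,
                  threshold: float = 0.5) -> str:
    """Label recent driving 'stop_and_go' or 'highway' from slow-segment share."""
    segments = split_segments(speeds)
    if not segments:
        return "stop_and_go"  # no movement at all: assumed stop-and-go
    slow = sum(1 for seg in segments if max(seg) < slow_kmh)
    return "stop_and_go" if slow / len(segments) > threshold else "highway"


def select_policy(recent_speeds: Sequence[float],
                  policies: Dict[str, Policy]) -> Policy:
    """Swap in the policy optimized for the detected mode of driving."""
    return policies[classify_mode(recent_speeds)]
```

In a car, `recent_speeds` would be a sliding window of the 100 Hz speed trace. Its length trades responsiveness against stability of the classification.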

In addition to providing results for optimizing for a smaller population of drivers, this section acts as a middle ground between the previous section and the next chapter, where I will discuss optimizing a policy for a specific driver. Optimizing for a more specific group is expected to give better results, because the policy only needs to match the behavior of that group. It does not need to account for absent drivers who behave differently. As long as the drivers in the smaller subsets differ from the larger population in a meaningful way, results should improve as the group becomes smaller, with the best results expected from policies optimized for a single driver. The key is to divide the population into subsets that are expected to differ in some way. If the population is subdivided randomly into large subsets, the policies are expected to be identical. There must also be enough data for each subset to create policies that perform well.

The methods used in this subsection may even result in lower costs than optimizing policies for a single driver. This may happen if policies are optimized for modes of driving and then swapped in during a drive based on the driver's behavior. Lower costs are likely if an individual driver's behavior in a particular setting, such as highway driving, is closer to the average behavior of all drivers in that setting than to the individual driver's own average across all settings. In this case the policy found for all drivers in a particular mode will probably perform better than the policy found for a single driver across all modes of driving.

3.3.3 Experimental Results

The most natural way to divide the driver profiles from the simulator is into two sets of drivers: the first generated from the Ann Arbor data, and the second generated from the urban data. This does not represent a division of car buyers that Ford can identify ahead of time, but the profiles do represent modes of driving that would benefit from separate policies. I will compare the results of optimizing for the smaller populations with the results of optimizing for a larger population made up of both sets of drivers.

To determine the best policy for each population I repeated the experiments from Section 3.3. I ran the experiment 35 times from random starting locations, tested on 25 random drivers to determine the best policy, and finally tested on 25 other random drivers to determine the expected cost of the policy. For the profiles matching the Ann Arbor data, the best policy had an average cost of 1233; for the profiles matching the urban data, the best policy had a cost of 1399. Averaged together the cost was 1321, representing the average cost of six hours of driving if three hours are spent in each mode and the best policy is used for each mode. I found that the best single policy for the combined population had a cost of 1398. Therefore, optimizing for the subsets of the population resulted in an improvement of 5.5%, or 13 seconds of idling removed per hour in real-world terms. This is less than the improvement from going from Ford's policy to an optimized policy. It would still represent a large energy saving if an improvement of this scale could be implemented on all hybrid cars produced by Ford.

3.4 Conclusion

In this chapter I discussed policy-search methods that were able to find a single policy that performs better than Ford's hand-tuned policy. I discussed the simulator that generated the data necessary for policy search, as well as the Kohl-Stone policy gradient algorithm used for the search. The optimized policy found by policy search performs 27% better on synthetic data and 9% better on the limited real data available.

This policy could probably be implemented in Ford's vehicles immediately, with better results. There are two main concerns with these results. The simulated data may not capture unknown characteristics of the real-world data. Drivers might also modify their behavior in response to the new policy and produce worse results. The optimized policy does not perform as well on the real data as on the synthetic data, but it outperforms Ford's policy on every data set available. This suggests that the simulator captures at least some of the important features of the real data. I cannot test how drivers would behave differently with the optimized policy. The only way to test this is to implement the policy on test vehicles driven under normal conditions and observe how the drivers behave.

In order to use this policy on production vehicles, a logical next step would be to implement

the policy in vehicles where the user's driving behavior can be measured.

This could be

tested against vehicles running the original hand-tuned policy. If the newly optimized policy

performs better than the old policy, it could then be implemented in vehicles sold by Ford.

Along with testing the effects of the policy, another option to improve the performance of the

policy would be to collect more real world data. Though the simulator was constructed to

match the real-world data there was not enough real data to be sure that it matches the larger

population of driving behavior from drivers in Ford vehicles.

39

Collecting much more data would allow a policy-search algorithm to be run directly on the real data, eliminating the need for synthetic data. Unfortunately, there would still be no way to test the effect of a policy on a driver's behavior. Each time a policy is optimized, it should be tested in real vehicles. This ensures that drivers do not change their behavior under the optimized policy in a way that negatively affects performance.

Alternatively, a large group of random policies could be assigned to random drivers in an effort to show that policies have no effect on drivers' actions. If this were true, there would be no need to test the effect of each individual policy on drivers. However, it seems unlikely that completely different controllers would have no effect whatsoever on drivers' actions.

The approach outlined in this chapter has two main benefits. It is simple, producing a single policy that Ford can examine, and it requires no computation inside the car. However, optimizing for a smaller subset of drivers will likely produce better results than creating a single policy that every driver must use. I partially demonstrated this in this chapter: optimizing for subsets of a larger population produced better results than creating a single policy for the entire population.

In the next chapter I will discuss optimizing for a single driver, which should produce even better results.


Chapter 4

Optimizing a Policy for an Individual Driver

In this chapter I will discuss optimizing the start-stop policy for each individual driver. This

should result in a lower cost on average than the previous approach of optimizing a single

policy for a population of drivers.

The primary disadvantage of this approach is that it

requires more computation in the car's internal computer, which may be an issue if the

computational power of the car is limited. It also requires time to find an optimized solution

and is more complex, needing much more debugging before it can be implemented in a real

car.

4.1 Problem Overview

In the previous chapter I sought to optimize

$$E[C \mid \theta], \qquad (4.1)$$

for the entire population by varying the policy $\theta$. Here $C$ was the cost function, composed of factors representing the fuel cost of the controller's actions and the annoyance those actions cause the driver of the vehicle. In this chapter I will instead seek to

optimize

$$E[C \mid \theta, d], \qquad (4.2)$$

where $d$ is the individual driver, again by varying $\theta$. A policy created for a single driver need only work well for that driver. It should therefore give better results than a single policy that must work well for all drivers.

In this chapter I will discuss experiments to find the best policy using two methods of policy search. The first is the Kohl-Stone policy gradient algorithm. The second is CMAES (covariance matrix adaptation evolution strategy), an algorithm closely related to the cross-entropy method. In addition to these two methods of policy search, I will also discuss experiments using a model-based approach, in which I attempt to minimize

$$E[C \mid \theta, d, r], \qquad (4.3)$$

where $r$ is the recent behavior of the driver. I will do this by creating a model of the driver's behavior using Gaussian processes.
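A toy Monte Carlo example can illustrate the difference between optimizing Equation (4.1) and Equation (4.2). The sketch assumes a hypothetical one-parameter policy and a made-up `episode_cost` function in which each driver has a preferred parameter value. It is not the thesis's simulator or cost function. The code estimates expected cost by averaging simulated drives, then picks the best parameter from a grid, either for the whole population or for a single driver.

```python
import random
from typing import Sequence


def episode_cost(theta: float, driver_pref: float, rng: random.Random) -> float:
    """Hypothetical stand-in for one simulated drive: cost is lowest when the
    policy parameter matches this driver's preferred setting, plus noise."""
    return (theta - driver_pref) ** 2 + rng.gauss(0.0, 0.05)


def expected_cost(theta: float, drivers: Sequence[float], rng: random.Random,
                  episodes: int = 200) -> float:
    """Monte Carlo estimate of expected cost, averaged over the given drivers.
    Passing one driver approximates E[C | theta, d]; passing the whole
    population approximates E[C | theta]."""
    samples = [episode_cost(theta, d, rng) for d in drivers for _ in range(episodes)]
    return sum(samples) / len(samples)


def best_theta(drivers: Sequence[float], candidates: Sequence[float],
               rng: random.Random) -> float:
    """Grid search: the candidate with the lowest estimated expected cost."""
    return min(candidates, key=lambda th: expected_cost(th, drivers, rng))


if __name__ == "__main__":
    rng = random.Random(1)
    population = [0.2, 0.5, 0.9]        # hypothetical driver preferences
    grid = [i / 20 for i in range(21)]  # candidate parameter values 0.0 .. 1.0
    theta_pop = best_theta(population, grid, rng)
    for d in population:
        theta_d = best_theta([d], grid, rng)
        print(d, theta_d, expected_cost(theta_d, [d], rng),
              expected_cost(theta_pop, [d], rng))
```

In this toy setting, each driver's own optimum costs that driver no more than the population optimum, up to sampling noise. That is the intuition behind optimizing Equation (4.2). The price is that the search must be run separately for every driver.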

As before, I will use the simulator to measure the performance of my algorithms, because there was not enough real data to test any of the approaches on it. The disadvantages of the simulator were discussed in Chapter 3. One of its main disadvantages is that simulated drivers do not change their behavior in response to different start-stop policies, but this is less of an issue here. For most of the approaches in this chapter, the effectiveness of a policy would be determined by how that policy, or a similar one, performed with the actual driver. In a real vehicle, the results would therefore incorporate any change in the driver's behavior in response to a change in policy. I will examine this further when discussing the results of each technique and its expected effectiveness when implemented in a vehicle.

I used the same simulator, cost function, and rule configuration as before. The main changes are that I assumed that computation is allowed inside the car's computer, and that the car's computer can switch policies at will. This allows me to use data from the driver to find better policies. This approach introduces several complications: the computational expense, the possibility that the algorithms converge to suboptimal solutions, and the additional complexity of running algorithms on the car's computer.

4.1.1 Issues

The additional computational load is the most notable effect of finding policies customized

for each driver.

In my experiments I did not impose an explicit limit on the amount of

computation available inside the car, though I will discuss the computational expense of

each method.

The other main disadvantage of optimizing the policy for each driver is that it will produce many different policies. These cannot be predicted ahead of time and therefore cannot be checked manually. The algorithms may also converge to policies that perform worse than the population policy, for instance if the optimization overfits a policy to a recent driving period. I will experimentally evaluate how often the tested algorithms converge to poorly performing policies by comparing their performance with that of the general policy found in Chapter 3.
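The comparison against the general policy can be summarized per driver. The sketch below shows one hypothetical way to do so. It assumes each driver's average cost has already been measured, for example in the simulator, under both the personalized policy and the population policy from Chapter 3. The function and field names are illustrative, and costs are assumed to be positive.

```python
from dataclasses import dataclass
from typing import Mapping


@dataclass
class Comparison:
    fraction_worse: float        # share of drivers whose personalized policy did worse
    mean_relative_change: float  # average of (personal - population) / population cost


def compare_to_population_policy(personal: Mapping[str, float],
                                 population: Mapping[str, float]) -> Comparison:
    """Compare per-driver costs under personalized and population policies.
    A negative mean_relative_change means personalization lowered cost on average."""
    if set(personal) != set(population) or not personal:
        raise ValueError("need matching, non-empty sets of drivers")
    drivers = sorted(personal)
    worse = sum(1 for d in drivers if personal[d] > population[d])
    changes = [(personal[d] - population[d]) / population[d] for d in drivers]
    return Comparison(worse / len(drivers), sum(changes) / len(changes))
```

Reporting both numbers matters. A good average improvement can hide a minority of drivers for whom the personalized search converged to a worse policy.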

An even worse situation than the algorithm finding a suboptimal policy would be a bug in the code, leading to crashes or to the controller always returning a bad policy. It is almost certainly possible to isolate the policy-optimization algorithm from the rest of the computation in the car's computer. A failure in the optimization algorithm would then not cause all of the car's computation to fail, but a bug could still lead to worse policies being used. The controller returning a bad policy because of a bug would be worse than the controller failing completely. If the controller failed, the car could fall back to the default policy values found for the population. If the controller instead had a bug that produced nonsensical values, such as settings that would prevent the car's engine from ever turning off, the car would execute the flawed policy.
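One way to make such bugs fail safe is to validate every policy the optimizer produces before the controller uses it, falling back to the population defaults otherwise. The sketch below assumes a policy is a dictionary of named parameters with engineer-chosen bounds. The parameter names and bounds are hypothetical. This check only catches missing, non-finite, or out-of-range values; it cannot detect a policy that is valid but simply poor.

```python
import math
from typing import Dict, Mapping, Optional, Tuple


def select_policy(candidate: Optional[Mapping[str, float]],
                  default: Mapping[str, float],
                  bounds: Mapping[str, Tuple[float, float]]) -> Dict[str, float]:
    """Return the optimizer's candidate policy only if every bounded parameter is
    present, finite and within its allowed range; otherwise return the default
    population policy."""
    if candidate is None:  # the optimizer crashed or produced nothing
        return dict(default)
    for name, (lo, hi) in bounds.items():
        value = candidate.get(name)
        # Reject missing or non-numeric values, then NaN or infinite values.
        if not isinstance(value, (int, float)) or not math.isfinite(value):
            return dict(default)
        if not lo <= value <= hi:
            return dict(default)
    return dict(candidate)


# Hypothetical parameters and bounds, for illustration only.
DEFAULT_POLICY = {"engine_off_delay_s": 1.0, "max_off_time_s": 60.0}
BOUNDS = {"engine_off_delay_s": (0.0, 5.0), "max_off_time_s": (5.0, 300.0)}
```

Because the fallback is the same population policy the car would use if the optimizer failed outright, a detectable bug degrades to the Chapter 3 behavior rather than to an arbitrary policy.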

There is no way for me to guarantee that the final algorithms used in a production vehicle will be free of bugs. This is especially true because I believe that any algorithms used in consumer vehicles would need to be recoded to the standards required for code executing in the car. The only way for me to limit the number of bugs is to use relatively simple algorithms, which are much easier to debug than more complicated ones. Because this was a goal, the two direct policy search algorithms chosen for analysis are simple to implement, though the Kohl-Stone policy gradient algorithm is simpler than CMAES. The model-based approach using Gaussian processes is more complicated, however, and I relied on an external library for my implementation. I found that Gaussian processes were not effective, so they appear to be a suboptimal solution even before complexity is considered. If a more complicated solution did obtain better results, the tradeoff between cost and complexity would need to be weighed. A solution with 0.001% more efficiency might not be worth greatly increased complexity. This is in direct contrast to finding a policy for the population, where the complexity of the approach does not need to be considered because none of the code runs inside a vehicle.

The final difference when optimizing for an individual rather than for a population is that the speed of reaching an optimal solution must be considered. Previously

this did not matter, because I was optimizing for a static population offline. Here, if it takes

10,000 hours to find the best solution the person might have sold their car before an optimal