Character Animation for Real-Time Natural Gesture ...

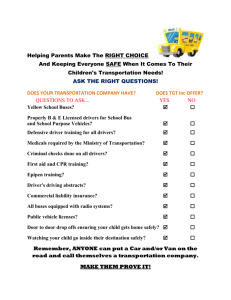
