Integration of a Bayesian Net Solver ... KBCW Comlink System and a ... Diagnosis System

advertisement

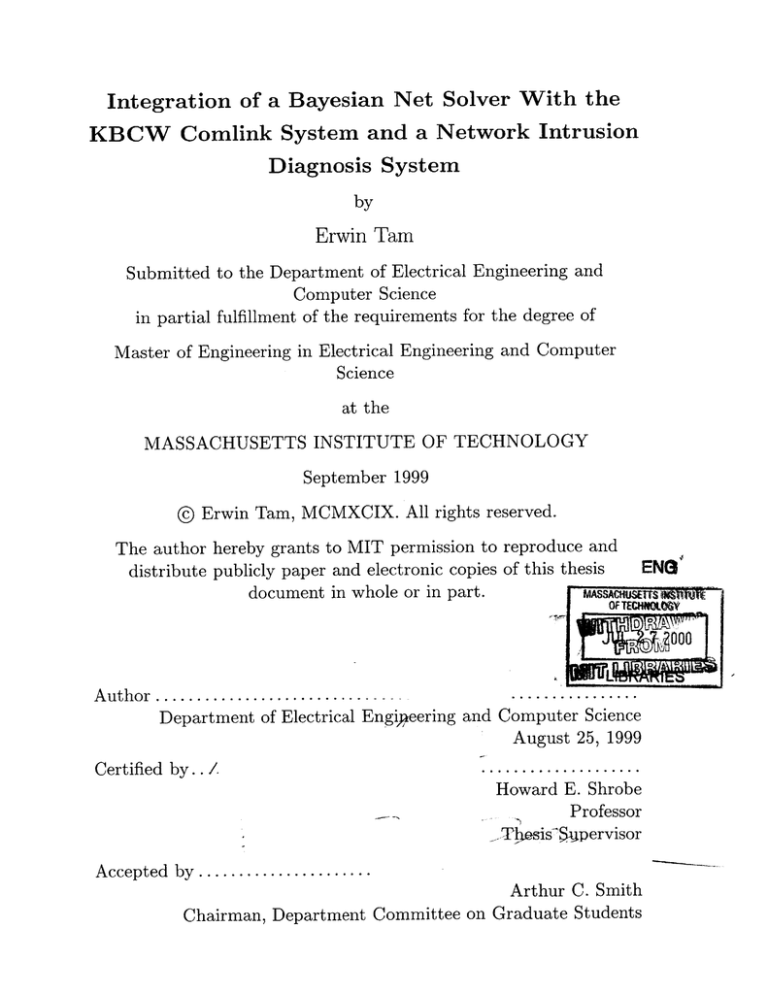

Integration of a Bayesian Net Solver With the

KBCW Comlink System and a Network Intrusion

Diagnosis System

by

Erwin Tam

Submitted to the Department of Electrical Engineering and

Computer Science

in partial fulfillment of the requirements for the degree of

Master of Engineering in Electrical Engineering and Computer

Science

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

September 1999

© Erwin Tam, MCMXCIX. All rights reserved.

The author hereby grants to MIT permission to reproduce and

ENG

distribute publicly paper and electronic copies of this thesis

MASSACHUSEIStW1AT1IM

document in whole or in part.

OF TECHN00 Y~ is

0*

--.-.-.-.-.-.-.

Author ........................

Department of Electrical Engipjeering and Computer Science

August 25, 1999

Certified by..

----.

.........

Howard E. Shrobe

Professor

Thesis-5pervisor

Accepted by.................

Arthur C. Smith

Chairman, Department Committee on Graduate Students

Integration of a Bayesian Net Solver With the KBCW

Comlink System and a Network Intrusion Diagnosis System

by

Erwin Tam

Submitted to the Department of Electrical Engineering and Computer Science

on August 25, 1999, in partial fulfillment of the

requirements for the degree of

Master of Engineering in Electrical Engineering and Computer Science

Abstract

This thesis attempts to demonstrate the practical aspect of applying probabilistic

methods, namely Bayesian nets, towards solving a variety of applications. Uncertainty comes into play when dealing with real world applications. Rather than trying

to solve these problems exactly, a probabilistic approach is more feasible and can provide useful results. For this project, a Bayes net solver was integrated with a network

intrusion detector with resource stealing the primary attack that was focused upon.

A Bayes net solver was also integrated into the Knowledge Based Collaboration Webs

(KBCW) Comlink system. The results show that such an integration between different ideas can be both useful and productive, providing functionality that neither

alone could accomplish. The project is a successful demonstration of the potential

benefits that Bayesian nets can provide.

Thesis Supervisor: Howard E. Shrobe

Title: Professor

2

Acknowledgments

I would like to thank Professor Howard E. Shrobe for his endless patience and support.

Without him, there could have been no thesis. His understanding and consideration

helped me get through tough times both academically and personally. I can honestly

say that at least half of the credit for finishing this thesis should be attributed to

him. My eternal thanks and gratitude go out to him.

I would also like to thank the other members of the KBCW group in the Al lab

that helped me with my daily questions, always with a friendly ear. Getting work

done and trying to find research ideas was never easy but it was so much better being

able to draw upon the creative ideas and suggestions by everyone in the lab.

Lastly I would like to thank my family and close friends who have kept me sane

enough emotionally to maintain the focus needed to finally finish this thesis. I needed

some help in overcoming this hurdle and for that, I am thankful. Times can be tough,

life can seem gloom, and the last thing on one's mind is work. Without the love and

support of those around you, things would be nearly impossible to cope with alone.

My love goes out to them. Thanks and God Bless.

3

Contents

8

1 Introduction

. . . . . . . . . . . . .

8

. . . . . . . .

9

1.3

Applications and Scope . . . . . . .

10

1.4

Objectives . . . . . . . . . . . . . .

11

1.1

Uncertainty

1.2

Probabilistic Models

1.5

1.4.1

MBT for Network Intrusion

11

1.4.2

KBCW Comlink System . .

12

. . . . . . . . . . . . . .

13

Roadmap

14

2 Bayesian Nets

2.1

H istory . . . . . . . . . . . . . . . .

14

2.2

Description

. . . . . . . . . . . . .

18

2.2.1

Simple Example . . . . . . .

18

2.2.2

Independence Assumptions .

20

2.2.3

Consistent Probabilities

. .

21

2.2.4

Exact Solutions . . . . . . .

22

2.2.5

Approximate Solutions . . .

23

. . . . . . . . . . . . .

24

. . . . . . . .

24

2.3

2.4

Advantages

2.3.1

Computation

2.3.2

Structure

. . . . . . . . . .

24

2.3.3

Human reasoning . . . . . .

24

Disadvantages . . . . . . . . . . . .

25

Scaling . . . . . . . . . . . .

25

2.4.1

4

3

6

25

2.4.3

Conflicting model . . . . . . . . . . . . . . . . . . . . . . . . .

25

26

3.1

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

26

3.2

Basic Task . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

27

3.3

Alternate Approaches . . . . . . . . . . . . . . . . . . . . . . . . . . .

28

3.3.1

Diagnostics . . . . . . . . . . . . . . . . . . . . . . . . . . . .

28

3.3.2

Fault Dictionaries and Diagnostics

. . . . . . . . . . . . . . .

28

3.3.3

Rule based Systems . . . . . . . . . . . . . . . . . . . . . . . .

29

3.3.4

W hen not to use the model-based approach

. . . . . . . . . .

29

Three Fundamental Tasks . . . . . . . . . . . . . . . . . . . . . . . .

30

3.4.1

Hypothesis Generation . . . . . . . . . . . . . . . . . . . . . .

30

3.4.2

Hypothesis Testing . . . . . . . . . . . . . . . . . . . . . . . .

31

3.4.3

Hypothesis Discrimination . . . . . . . . . . . . . . . . . . . .

31

Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

33

3.5

5

Probability values . . . . . . . . . . . . . . . . . . . . . . . . .

Model Based Troubleshooting

3.4

4

2.4.2

34

Design

4.1

Problem Statement . . . . . . . . . . . . . . . . . . . . . . . . . . . .

34

4.2

Process modeling . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

36

4.3

Input/Output Black Box modeling

. . . . . . . . . . . . . . . . . . .

37

4.4

Fault modeling

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

39

4.5

Probabilistic Integration . . . . . . . . . . . . . . . . . . . . . . . . .

40

46

Implementation

5.1

Linear Process. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

46

5.2

Branch/Fan process.

. . . . . . . . . . . . . . . . . . . . . . . . . . .

49

5.3

Branch and Join

. . . . . . . . . . . . . . . . . . . . . . . . . . . . .

53

58

Conclusion

6.1

System critique . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

58

6.2

System limitations

. . . . . . . . . . . . . . . . . . . . . . . . . . . .

59

5

7

6.2.1

Lack of correctness detection . . . . . . . . . . . . . . . . . . .

60

6.2.2

Lack of probabilistic links between components

. . . . . . . .

60

6.2.3

Lack of descriptive model states . . . . . . . . . . . . . . . . .

60

6.3

Future work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

61

6.4

Lessons learned .......

..............................

62

64

KBCW Comlink System

7.1

D escription

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

64

7.2

Integrating a Bayes Net Solver . . . . . . . . . . . . . . . . . . . . . .

66

7.3

Implementation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

67

Exam ple . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

68

C onclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

80

7.4.1

System critique . . . . . . . . . . . . . . . . . . . . . . . . . .

80

7.4.2

Lessons learned . . . . . . . . . . . . . . . . . . . . . . . . . .

80

Future work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

81

7.5.1

Sensitivity Analysis . . . . . . . . . . . . . . . . . . . . . . . .

82

7.5.2

Multiple Viewpoints

. . . . . . . . . . . . . . . . . . . . . . .

83

7.3.1

7.4

7.5

8 Summary

84

A delay-simulator code

85

B comlink-ideal code

96

6

List of Figures

. . . . . . . . . . . . . .

19

. . . . . . . . . . . . .

21

4-1

A simple sample component. . . . . . . . . . . . . . . . .

38

4-2

A second example of a component module. . . . . . . . .

39

4-3

Completely specified component with probabilistic model included.

43

5-1

Linear process model. . . . . . . . . . . . . . . . . . . . . . . . . . . .

47

5-2

Linear process apriori probabilistic model.

. . . . . . . . . . . . . . .

48

5-3

Linear process post evidential probabilistic model.....

. . . . . . .

49

5-4

Branch/Fan process model.

. . . . . . . . . . . . . . . . . . . . . . .

50

5-5

Branch/Fan process probabilistic model.

. . . . . . . . . . . . . . . .

51

5-6

Branch and Join model.

. . . . . . . . . . . . . . . . . . . . . . . . .

55

5-7

Branch and Join probabilistic model. . . . . . . . . . . . . . . . . . .

55

7-1

The Burger Problem before any evidence. . . . . . . . . . . . . . . . .

75

7-2

Cheap cream cheese developed.

. . . . . . . . . . . . . . . . . . . . .

76

7-3

Germany goes bankrupt and liquidates pickled cabbage to the world.

76

7-4

McDonald's survey show customers value variety the most. . . . . . .

77

7-5

Medical results say that you need to eat meat. . . . . . . . . . . . . .

77

7-6

Estimated cost of pickled cabbage quadruple burger too high.....

78

7-7

New McDonald's survey also shows that customers love the cheeses.

79

7-8

Final result putting together all the evidence.

79

2-1

A simple causal graph network.

2-2

Fully specified Bayesian network.

7

. . . . . . . . . . . . .

Chapter 1

Introduction

1.1

Uncertainty

Uncertainty is a central motivating force behind many aritificial intelligence topics.

How can we make intelligent decisions in the face of limited data? One might say

that learning itself is merely a process of reducing uncertainty in our world. How

can we ever be entirely certain of something? Given that we know a small limited

amount of information, what conclusions or hypotheses can we reason about from

that? How likely are these conclusions to be true? There are many situations where

tough decisions need to be made. They usually involve a subjective process whereby

one person's experience and intuition guide them in the decision making. Ideally,

we'd like to have a more formal process, especially when confronted with a lot of data

or varying opinions from many people. How much do we really believe something to

be true and what effect does our level of belief have on the conclusions we come up

with? To be able to answer this, we need some model of uncertainty that allows a

systematic procedure for chaining together information and coming up with a viable

hypothesis.

Understanding uncertainty and how the human brain deals with it is a very important question. Right now researchers have no idea how to model the way the

human senses work or the way the brain works. We know that the senses send signals

containing information to the brain, but how this data is processed is still a mystery.

8

Like all things, data is often incomplete, yet the human mind is capable of piecing

things together to form a complete picture. Is it possible that by learning more about

this mysterious process, we can garner practical knowledge that can be readily applied to other fields and problems? The answer appears to be a resounding "yes".

There are so many applications that have to deal with limited data yet still need to

generate some form of useful representation or hypothesis from thatt. Coming up

with a model that can handle such conditions has such wide ranging applicability.

This is an area of research that is starting to expand. As computer technology gets

faster and better, we should also come up with smarter and better ways to utilize

such computing power. Even if we eventually reach the raw computing power of the

human brain, if we cannot make efficient usage of it, the outcome will be severely

limited.

1.2

Probabilistic Models

One of the most useful models for dealing with uncertainty is the probabilistic model.

Probability theory is a well understood and mature field. When dealing with unknown

quantities and attempting to combine and reason with them, probability theory serves

as an obvious choice to serve this purpose. Probability theory has had a resurgence in

the past decade and is the current most popular tool for analyzing uncertainty. This

was due mainly to the increased computing power and newer models and algorithms

for probability theory. There are other formalisms for reasoning about uncertainty

such as the Dempster-Shafer theory of evidence[10] or the large body of work based

on Zadeh's fuzzy set theory[8). However, the model of choice that will be used is the

probabilistic model.

Analyzing uncertainty using formal probability theory provides us with a structured and accurate method to handle evidence and partial information. It allows us to

merge together sources of information with imperfect reliability to generate hypotheses, each with their own relative likelihood or probability of being true. Probability

theory is a well understood idea that is proven to be a correct way for dealing with

9

uncertainty. This is why we decided to use the probabilistic model.

1.3

Applications and Scope

There are three main areas where uncertainty and probability theory can be applied.

They are: diagnosis, data interpretation, and decision making. Diagnosis is a broad

area that covers medical decision making and model based troubleshooting for systems. It is widely used in medicine for making decisions about patient treatment,

interpreting test results, and helping patients understand the rationale behind alternative treatments. This fast growing area is known as medical informatics. Model

based troubleshooting is another form of diagnosis. Here the problem deals with

some system that can fail for a number of reasons. The objective is to diagnose the

condition and reason about possible faults given observations about how the system

is behaving or misbehaving as is usually the case. This form of diagnosis began in

manufacturing where faults in circuits needed to be diagnosed to find out where the

problem lay. Since there is a limited supply of experts who can properly troubleshoot

a given system, automated troubleshooting is a viable and very desirable goal to attain. The range of applications this applies to is immense given the vast amounts of

complex machinery and technology available which often break down.

Data interpretation is widely used by the military when they have to interpret

intelligence data and determine the likelihood of different hypotheses. This is critical

in evaluating the capabilities of the enemy in order to make smarter, more strategic

decisions. Gathering data is often a very costly process both in finacial resources

and human resources. As such one would like to find the optimal ratio of value of

information to cost of information. There is a wide range of oft incomplete data.

The military strategist needs to be able to piece together all of the information in

order to get an idea what the data is suggesting. Having a systematic approach to

data interpretation would take a lot of the guess work out of this process. In turn

it can help to minimize the effects of human error. This is a common problem as

demonstrated by the lack of military tact sometimes displayed by governments, ours

10

included.

The final application area deals with collaborative decision making. Oftentimes

when a large group of people is trying to come up with a decision, they need to decide

between various alternatives. There is no systematic process of debating. Moreover,

there is no structured quantitataive way of weighing arguments including how they

might support or deny another statement. Without a systematic way to piece together

arguments and other data, the decision making process can become very disorganized

and non-optimal. The "might makes right" approach whereby the top CEO or head

of the group makes the final decision isn't ideal. None of the ideas or arguments of

the others are taken into account. If it happens that someone has a really good idea

but is very low on the corporate ladder scale, their idea will get squashed by someone

higher up even though it is a superior idea. This is the type of problem we are trying

to solve when using probabilistic methods in collaborative decision making.

1.4

Objectives

The goal of this thesis is to demonstrate the feasibility of applying probabilitistic

methods towards solving two applications. The first one deals with model based

trouble shooting of network intrusion detection. The second deals with collaborative decision making, namely integrating probabilistic functionality into the KBCW

Comlink system. I will talk more about each of these two projects in the proceeding

sections. The overall objective is to integrate probability into different domains to

show that probability allows for very useful functionality. These two domains are just

a small sample of the many wide ranging applications that probabilistic methods can

be incorportated in. The success of these two projects will demonstrate that this is

an important emerging idea whose potential is not yet fully realized.

1.4.1

MBT for Network Intrusion

The first task/application to be dealt with is model based troubleshooting for network intrusion. Network intrusion is a big problem especially with today's type of

11

distributed systems. Hackers can attack the system in many ways. One difficult problem is resource stealing. This is a hard problem because it is passive. It is difficult to

know if resources are being stealed since nothing really goes wrong. The only visible

sign of a problem is that processes that use the same resource may run a bit slower.

Since the loads on resources vary throughout the day and is not constant, this is difficult to monitor and determine definitively if there is indeed a problem. What we have

done is to model a network of processes and resources and allow timing information

to propagate throughout the system. That is, we model the inputs and outputs of

processes and how they flow between components in the system while making note

of arrival and departure times of the input/output. Together with a prior knowledge

of the range of times it usually takes for a process to complete, we will be able to

monitor and troubleshoot if there seems to be a resource stealing problem. Thus we

will know if the network has been compromised with and more specifically, which

particular resource is likely to be compromised. This is a self monitoring probabilistic system that is a good beginning point for a network traffic monitoring/trouble

shooting system. The main bulk of the thesis will be focused on this problem.

1.4.2

KBCW Comlink System

The second task/application deals with the KBCW Comlink System. The Knowledge Based Collaboration Webs Comlink System allows people from all over the net

to be able to communicate in a collaborative forum. There they can voice their

opinions/arguments about topics to be debated upon. Currently the system allows

arguments to be linked together. That is, there is a graphical structure to the way in

which a person's argument can either agree/disagree or support/deny another statement given by another person. This system allows people from different locations to

be able to come to a group decision based on the arguments of each person. However,

one thing lacking currently is a way in which to quantify things. That is, how much

does one statement/argument support another? Do certain claims/hypotheses seem

more likely to be true than others? What we did was to basically integrate a probabilistic system into the Comlink system to provide this functionality. This would

12

give Comlink the ability to more precisely quantify how likely certain hypotheses are.

In a collaborative decision making process, this would allow the system to be able to

come up with a relative likelihood for all of the competing ideas. It would provide

a systematic way to distinguish between them to see which is more likely to succeed

given all of the arguments given by the group.

1.5

Roadmap

A roadmap of the direction this thesis takes will be given. First the probabilistic

model of choice, Bayesian nets will be described and discussed briefly to provide

the reader with a good basic idea of what Bayesian nets are and what they can do.

Next a quick summary of the field of model based troubleshooting will be given to

allow the reader to obtain a good idea what the issue/problems are in the area of

troubleshooting. This section is included for completeness and is in no way meant to

replace other superior sources on the topic. Next the problem of the network intrusion

troubleshooter will be discussed with an explanation of how things are modeled and

what functionality is available. Several walk through examples of the system will be

given to demonstrate what it can do. A critique of the system and it's limitations

will then be discussed. Issues such as future work and important lessons learned

from designing/implementing such a system will be discussed. Next I will talk about

the second project dealing with the integration of a Bayesian net solver with the

KBCW Comlink system. Issues such as design, implementation, critique, limitations,

and future work will be discussed. Finally a summary of the relative success of this

project will be given. Issues about what went wrong and what went well will be

talked about.

13

Chapter 2

Bayesian Nets

2.1

History

A Bayesian network is a compact, expressive representation of uncertain relationships

among parameters in a domain[3]. They are based on the probability theorem named

after the reverend Bayes.

Bayes' Theorem is a very powerful tool for determining

how to use evidence contained in data to determine the likelihood of hypotheses. It

can be shown to be the only coherent way to pass from specific evidence to general

hypotheses. In other words, it is a method for combining evidence which is provably

correct and well understood formally.

Thus it is of great value when combining

evidence and choosing between competing hypotheses.

Bayes theorem allows one to update the probability of a hypothesis given new

information or evidence. Bayes' Theorem is:

P(H|E) = P(EIH)P(H)/P(E)

Here, P(H) is the prior probability of a hypothesis H. P(H IE) is the posterior probability of a hypothesis, given that we observed evidence E. This allows us to constantly

update the probability of a hypothesis in the face of new evidence. Since this is an

example where new information changes our degree of certainty or belief in an event,

it may also be considered a type of learning.

14

Reducing uncertainty about a topic

is essentially equivalent to learning more about the topic. In Bayes' original paper,

published only after his death, he wanted to figure out how to go from the usual deduction of the probability of a specific result given a general hypothesis, P(E|H)and

turn it around, showing how to pass from a specific evidential result to the probability

of the general case, P(HIE). Bayes was a nonconformist minister and he developed

his theory as a formal means of arguing for the existence of God. The role of evidence

in this argument is taken by the occurrence of miracles and other manifestations of

God's good works. The two hypotheses he was comparing are the affirmation and

denial of God. Bayes wanted to prove formally that it was more likely that God

existed rather than the contrary.

Probability theory, including Bayes theorem is the oldest and best understood

theory for representing and reasoning about situations with uncertainty involved.

However, early artificial intelligence experimental efforts at applying probability theory was unsuccessful and disappointing. The main quip against probability theory

was the complaint that those who were worried about numbers were missing the

main point, that structure was the key and not the numeric details. At that time,

the only way to use probability theory in solving these problems was through the

joint probability distribution, also known as the JPD. For domains described by a set

of discrete parameters, the size of the JPD and the complexity of reasoning with it

directly can both be exponential in the number of parameters[3]. The method was

provably correct but infeasible to use. There was no efficient model that made it simpler or easier to understand or reason about quickly. Plus it was nearly impossible

computational-wise to use the probabilistic method reasonably. Calculations simply

took an exorbitant amount of time even for domains that contained only a small but

reasonable amount of parameters.

An approach to simplify things was the naive Bayes' model. This model assumes

that the probability distribution for each observable parameter depends only on the

root cause and not on the other parameters. This simplification allowed tractable

reasoning and computation. However the model was too extreme and oversimplified.

It did not provide the desired results. Thus given the computing power and probability

15

models at that time, probabilistic methods were not a feasible way to deal with

uncertainty problems.

However, about 5-10 years ago, there was renewed interest in probability, especially decision theory. The reason for this was the result of dramatic new developments in computational probability and decision theory which directly addressed the

perceived shortcomings of probability theory. The key idea was the discovery that

a relationship could be established between a well-defined notion of conditional independence in probability theory and the absence of arcs in a directed acyclic graph

(DAG). This relationship made it possible to express much of the structural information in a domain independently of the detailed numeric information, in a way that

both simplifies knowledge acquisition and reduces the computational complexity of

reasoning. The resulting graphical models have come to be known as Bayesian networks. This directly addressed the two major shortcomings of probability theory.

First that it was structure that was important and not just the numeric details in the

domain. Second that it was intractable to reason about and compute with probability models. The current work is in developing better algorithms to speed up belief

updating in Bayesian nets. This is currently a very active field and has helped to

grow the importance of Bayesian nets in the Al community as well as in industry.

The Bayesian network formalism is the single development most responsible for

progress in building practical systems capable of handling uncertain information[6].

The first book on Bayesian networks was published by Pearl in 1988[9]. The reader

is referred to this text as it is regarded as the authority in Bayesian nets. A Bayesian

network is a directed acyclic graph that represents a probability distribution. Nodes

represent random variables, and arcs represent probabilistic correlation between the

variables. The types of path and lack thereof between variables indicate probabilistic independence.

Quantitative probability information is specified in the form of

conditional probability tables. For each node, the table specifies the probability of

each possible state of the node given each possible combination of states of its parent nodes. The tables for root nodes just contain unconditional probabilities. This

formalism is very intuitive to reason with. It has been claimed that the human mind

16

works in very similar ways when reasoning about uncertainty. Since this model is

theorized to be similar to how we think, it is easy and intuitive to use.

The important feature of Bayesian networks is the fact that they provide a method

for decomposing a probability distribution into a set of local distributions. The independence semantics associated with the network topology specifies how to combine

these local distributions to obtain the complete joint-probability distribution over all

the random variables represented by the nodes in the network. The only probability

values that need to be specified for each node is the conditional probability of the

node being in one of its possible states given all combinations of its parents and their

respective states. The structure of Bayesian networks allow three important features.

First, by naively specifying a joint probability distribution with a table, it requires

an exponential amount of values in the number of variables. However if the graph is

sparse, the number of values needed is drastically reduced. As the network gets larger,

the savings become very substantial. Second, there are efficient inference algorithms

which work by transmitting information between the local distributions rather than

working with the full joint distribution. In essense the algorithms divide up the graph

into several smaller pieces, solves each smaller piece, and combines them together to

get the final result. Much quicker computation can come about by using such optimizing strategies. Third, the separation of the qualitative structure of the domain

and variables with the quantitative specification of the relative strengths of influence

between variables is extremely beneficial. This breaks the problem of modeling a

domain into two distinct stages. The process makes the knowledge engineering task

much easier and more tractable. The first step is in coming up with the qualitative

structure of the graph to see how the variables in the domain influence each other.

The second step is in coming up with the numbers and quantifying the strengths of

these relationships. Once both steps are complete, we are left with a model that

completely specifies the joint probability distribution in an intuitive and easy to use

graphical model.

17

2.2

2.2.1

Description

Simple Example

Now that we have discussed theoretically about what a Bayesian net is and why it is

so useful, we will go through a simple example to demonstrate how a Bayesian net

works. The best way to learn about Bayesian nets is to see an example of one. Note

that the example discussed below is borrowed from Charniak's paper[1] as it is a good

simple example of a Bayesian net. The following will briefly summarize some of the

issues and ideas discussed in his paper.

Bayesian nets are best at modeling situations where causality plays a role but

where our understanding of what is actually going on is incomplete. Therefore we

need to describe things probabilistically.

Suppose that when I go home, I will only take out my keys to open the door if

my family is out. Otherwise if I know that they're in I would just ring the doorbell.

In my house there is a light that my family turns on whenever they leave the house.

However, the light is also turned on if my family is expecting guests. This doesn't

happen all the time though. I also have a dog at home and he is outside in the yard

sometimes. Whenever nobody is at home, the dog is put in the backyard. The same

thing also happens if the dog has bowel-problems. Nobody at home wants the dog

in the house if that is the case. If my dog is outside in the backyard, he will bark

from time to time. There is a chance that I will hear him bark when I get home.

This is the situation when I get home. Since I am lazy and don't want to expend

the effort of taking out my keys if I don't have to, I'd like to be able to figure out

if my family is home or not. The two observations I can make are to see if the light

is on and if I hear the dog barking or not. I can't see if the dog is actually outside

in the backyard since it is in the back of the house. I could walk back there and

check though that would defeat the purpose of conserving my energy. So based on

my two observations, what conclusion can I draw about whether or not my family is

home? This situation is depicted in figure 2-1. The nodes in the graph signify random

variables which can be thought of as states of affairs. Each of the variables can have

18

Figure 2-1: A simple causal graph network.

a multitude of possible values. In our case, they are binary, either true or false. In

the more general case, each node or random variable can be N-ary, having N amount

of discrete states. Bayesian nets also extend to the non-discrete or continuous states.

This is an interesting area of research though for most practical everyday cases, it

isn't as good a model as discrete states.

The directed arcs in the graph signify causal relationships between variables. For

example if my family is out, then it has a causal effect on the light being turned on.

Similarly if my dog has bowel problems, then that directly affects the likelihood of

the dog being put out in the backyard. The important thing to note is that the causal

connections are not absolute. If the dog is out in the backyard, that doesn't mean that

I will definitely hear him bark. Sometimes he might be sleeping in the backyard or is

just being a good quiet dog and isn't making a ruckus. In any case, this is the first

stage of using Bayesian nets to model a real world problem with uncertainty. Note

that no numbers have been quantified yet though much information can be obtained

through the qualitative structure of the model. The arcs in the Bayesian network

specify the independence assumptions that must hold between the random variables.

Nodes that are not connected by arcs are conditionally independent of each other.

For example, suppose that I have observed that my dog is out in the backyard. I

19

went around back and checked and the dog was there. Now that I know for sure that

the dog is out in the backyard, it makes no difference whether he has bowel problems

or the family is out when determining if I will hear him bark or not. These variables

are conditionally independent.

The next step involves specifying the probability distribution of the Bayesian

network. In order to do this, one must first give the prior probabilities of all root

nodes, nodes with no predecessors and the conditional probabilities of all the other

nodes given all possible combinations of their direct predecessors or parent nodes.

Figure 2-2 shows a completely specified Bayesian network. Now that the model is

completed, we can deal with evidence. For example, let's say that I observe the light

to be on and I don't hear the dog barking. These nodes are then set to be in a specific

state with definite probability. I can calculate the conditional probability of familyout given these pieces of evidence. This is known as evaluating the Bayesian network

given the evidence. As more evidence comes in, the probabilities of the belief of each

node changes. It is important to note that it isn't the probabilities of the nodes that

are changing. What is changing is the conditional probability of the nodes given the

emerging evidence. In this case, belief is defined as the conditional probability given

the evidence.

2.2.2

Independence Assumptions

One important feature of Bayesian nets is the implied independence assumptions.

This feature saves a lot on computation for sparse graphs. One objection to the use

of probability theory is that the complete specification of a probability distribution

requires an exponential amount of numbers. If there are n binary random variables,

the complete distribution is specified by 2' - 1 joint probabilities. Thus the complete

distribution for figure 2-2 would require 31 values, yet we only needed to specify 10.

If we doubled the size of our example by grafting a copy of the graph onto the existing

graph, the number of values needed to specify the joint probability distribution would

be 2' - 1 which is 1023, but we would only need to give it 21 with the Bayesian net

formalism. The savings we get as opposed to the brute force probabilistic method

20

.01

.15

P(do

P(do

P(do

P(do

| fo bp) = .99

| fo !bp) = .90

!fo bp) = .97

!fo !bp)= .3

P(lo I fo) = .6

P(lo I !fo) = .05

P(hb I do) =.7

P(hb I !do) = .01

Figure 2-2: Fully specified Bayesian network.

comes from the independence assumptions implied in the graph. See Charniak[1] or

Pearl[9] for a mathematically precise definition of dependence and independence in

Bayesian networks.

2.2.3

Consistent Probabilities

One problem with a naive probabilistic scheme is inconsistent probabilities whereby

the individual conditional probabilities seem legitimate. However, when you combine

them, you can get probabilities which are not consistent, i.e. probabilities which are

greater than 1. There is no such problem with a Bayesian network. They provide

consistent probabilities and are provably equivalent to a full joint probability distribution. The numbers specified by the Bayesian network formalism define a single

unique joint distribution. Furthermore, if the numbers for each local distribution are

consistent, then the global distribution is consistent. A short proof of this claim is

found in Charniak[1] or Pearl[9].

21

2.2.4

Exact Solutions

The basic computation on a belief network is the computation of every node's belief

given the evidence that has been observed so far. This updating process is also known

as belief propagation. One of the biggest constraint on the use of Bayesian networks is

that in general, this computation is NP-hard[2]. The exponential time limitation often

does show up in many real world Bayesian net models. This is a real issue since many

real-world problems we would like to solve take an unacceptable amount of time to

evaluate. Finding a general algorithm that can solve any Bayesian network exactly is

NP-hard. This means that it is very unlikely to find a Bayesian net algorithm that will

work equally well for all cases. The algorithms for solving a Bayesian network employ

one of two strategies. The first is to factor the joint probability distribution based

on the independencies in the graph. The seceond method is to partition the graph

into several smaller parts and solve each separate part individually before combining

the results together to get the final answer. It can be shown that these two methods

are identical to each other. Some algorithms are very good for solving certain classes

of graphs while they are terrible for other types. One solution might be to have a

library of Bayesian network solver algorithms and be able to identify which algorithm

would work best given the particular problem. This would be a good way around the

NP-hard issue. This idea hasn't been that widespread most likely due to the cost of

implementing several algorithms instead of just one.

The algorithms for solving Bayesian networks exactly work well on a restricted

class of networks.

They can efficiently be solved in time linear to the number of

nodes. The class is that of singly connected networks. A singly connected network,

also known as a polytree, is one in which the underlying undirected graph has no more

than one path between any two nodes. There are techniques that can also transform

a multiply connected network into a singly connected one. There are a few ways to

do this but the most common ways are variation on a technique called clustering. In

clustering, one combines nodes until the resulting graph is singly connected. There

are well understood techniques for producing the necessary local probabilities for the

22

clustered network. Once the network has been converted into a singly connected one,

the previous algorithms can be applied.

2.2.5

Approximate Solutions

There are times when the Bayesian network is just too large that no exact algorithm

is capable of solving it in an acceptable amount of time. As is the case when trying to

solve NP-hard problems, one can opt for an approximate answer. That is, an answer

that is not exact but guaranteed to have a certain amount of error depending on how

many iterations of the algorithm is made. There are many approximation algorithms

available but the common approach they take is sampling. The basic approach is to

start at the root nodes and choose a value for its state based on its probabilities. Next

assume that those nodes are in that particular state and progress on to the children.

Again choose a state for the child node to be in based on its conditional probabilities

with the assumption that the parent nodes are in the states specified by the previous

iteration. Once you've gone through the entire network, record the value of the

node that you are trying to query the probability of given the evidence.

Repeat

this operation several times and the distribution that you record should approach

the actual exact distribution had you solved it exactly. The more iterations you

do the closer your solution will be. However, there are a couple of problems. The

first is that sometimes the solution takes a while to converge, i.e. it'll take a lot

of iterations before the answer you get approaches the exact answer. Secondly, and

this is related to the first problem, depending on where you start, you might get

stuck at a local maxima/minima. The solution you get could be quite different from

the actual answer though your answer wouldn't change for a while through several

iterations simply because you are at a local maxima/minima. Regardless, there is

a greater possibility that there exists an approximation algorithm which works well

for all kinds of Bayesian networks since an exact solution is NP-hard. With the ever

growing level of computing power, this might be the most feasible approach towards

solving Bayesian networks.

23

2.3

2.3.1

Advantages

Computation

There are a few advantages of Bayesian networks that make them very attractive

for solving a lot of Al problems. The first is computation. Given the independence

assumptions in the formalism, computation can be sped up greatly as compared to

computing the joint probability distribution the brute force way. For sparse singly

connected networks, they can be solved very efficiently, even if there are a large

amount of variables/parameters.

2.3.2

Structure

The focus of Bayesian networks is more on structure rather than numbers. That was

one of the key arguments against using probability theory in decision making and

other uncertainty problems. Now one can see visually how ideas are linked together.

A good model that is accepted by all the parties involved can be created first. This

process is the more intuitive step and is easier to come to a group agreement on what

the proper model of the problem should be.

2.3.3

Human reasoning

Bayes nets are theorized to be similar to how humans think. The graph model where

new ideas can be linked in quite easily is a good formalism for human thinking. One

of the reason for the success of Bayesian networks is that they are easy to reason

with. Simply using numbers with the joint probability distribution was shown to be

very intractable to reason about. However with the Bayesian formalism, it has been

shown to be very intuitive to reason with. How the probability propagates as the

result of evidence can be depicted visually lending more belief/credence to the results

generated.

24

2.4

2.4.1

Disadvantages

Scaling

There are still problems with using Bayesian networks. They are not perfect and do

not work equally well for all situations. The general problem is still NP hard to solve

exactly. This definitely does not scale well. It is more likely for larger models with

more variables to be more difficult to solve in a reasonable amount of time. NP-hard

problems are very unlikely to ever be solved efficiently as this is a problem that has

plagued algorithm theorists for many years.

2.4.2

Probability values

Another problem is that one still needs to come up with subjective values for the

conditional probabilities. Even though the structure can be decided upon independently, probability values still need to be given to fully specify the model. Now the

question of where these numbers come from arises. People will very likely argue over

the exact values. One fear is that by just changing the numbers, one can come about

with whatever result one desires.

2.4.3

Conflicting model

Model generation is still subjective. There can be conflicting opinions about causality.

Not everyone will come up with the same exact Bayesian network to model a particular

problem. The issue then becomes, which model is more correct. This is a difficult

problem to address as there is no formal way to quantify how correct a particular

model is. Once again this comes down to subjective viewpoints and the fear becomes

that a not-so-correct model is chosen instead of a more accurate one.

25

Chapter 3

Model Based Troubleshooting

We will now briefly go over the important points and issues that arise when discussing

model based troubleshooting. This chapter is just a summary of the excellent article

written by Davis and Hamscher[4] in chapter 8 of the 11th International Joint Conference on Al. It is by no means meant to be a substitute for that. For a more complete

description of model based reasoning and its current state, consult the aforementioned

reference. This chapter is included for completeness to provide the reader with a fair

understanding of the ideas involved in model based reasoning and troubleshooting.

3.1

Introduction

An oft occurring problem that plagues all of us from time to time is that something

stops working. We would like to know why it stopped working and to figure out

how to fix it. A good first step is to understand how it was supposed to work in the

first place. That is the main idea behind model based reasoning. The rest of this

chapter will discuss what the nature of the troubleshooting task is, exploring what

is given and what is the desired result. Models of the structure and behavior of the

system in question is very useful for diagnosis and reasoning. Most of this chapter will

talk about how to use these representations to do model based diagnosis. The basic

procedure is to witness the interaction between prediction and observation, that is we

predict what should happen and observe what actually happens. Thus when there is

26

a contradiction between the two, we attempt to solve the problem. This is broken

down into three fundamental subproblems: generating hypotheses by reasoning from

the symptoms to components that may possibly be at fault, testing each hypotheses

to see if it is consistent with all the observations, and discriminating among all the

valid hypotheses to see which one is the most likely. What we will find is that there

are well known methods for model based diagnosis once a tractable model for the

problem has been given. However, the harder problem to solve is to figure out how

to come up with a good model. This is an open research topic and presents many

problems.

3.2

Basic Task

As stated earlier, the basic paradigm of model based reasoning is to analyze the

interaction between observation and prediction. Typically there is a physical device or

software that operates in an expected normal manner. However when the observation

or how the device/system is actually operating differs from that which is predicted,

there is a discrepancy. One fundamental assumption is that if the model is assumed

to be correct, then a discrepancy must mean that there is a defect somewhere in

the system. The type of faults and location of the faults that occur are clues that

provide some information about where the defect in the system might occur. This

raises some issues though since the assumption might not always be true. In any

case, given a model, the basic task is to determine which of the components in the

system could have failed in such a way as to account for the discrepancies observed.

The model contains information about the structure and correct behavior of the

components in the system. This information is used to reason with. This approach

to troubleshooting has been called a multitude of names such as model based and

reasoning from first principles. This is because the method is based on a few basic

principles about causality and "deep reasoning".

27

3.3

Alternate Approaches

There are several other approaches to trouble shooting besides the model based

method. They each have their own strengths and weaknesses. They will be discussed

below.

3.3.1

Diagnostics

Diagnostics involve running test programs on devices/systems after they have been

manufactured/created to ensure that the system is capable of doing everything it is

supposed to do. The problem is that this approach is not diagnosis but verification.

The tests make sure that the system is supposed to behave in expected ways. There

is no misbehavior to diagnose since none have come up yet. Model based diagnosis on

the other hand is diagnostic because it is symptom directed. Whenever a fault occurs,

the observed symptom is analyzed and used to work backwards to the underlying

components that might be faulty. This approach is more efficient working backwards

from faults that have already occurred rather than trying to find out what all the

possible faults are.

3.3.2

Fault Dictionaries and Diagnostics

Similarly to diagnostics is the idea of fault dictionaries. Here the fault dictionary is

built by using simulation and a list of the kind of faults anticipated. Once a test

has been simulated, the resulting symptoms/faults are recorded. The list is then

inverted so that one can go backwards from symptoms to faults to find the reason for

failure. This is not broad enough since the only possible symptoms it can recognize

are those that come from the prespecified faults at the time of the fault dictionary's

creation. If a new fault occurs which the designers had not anticipated, the dictionary

becomes useless and is unable to correctly diagnose the problem. Using fault models

like this is useful if the library of faults is very broad since there is a high degree of

specificity to the diagnosis. However it is difficult to be certain that the fault models

are comprehensive enough.

28

3.3.3

Rule based Systems

Rule based systems are built upon the knowledge of experts who know the potential

problems that may arise and what the symptoms may be. The problem is that it may

take a while before there is enough expert knowledge to be able to efficiently diagnose

problems. This is important in systems today since the design cycle is so short. There

is no time to be an expert on a system because by the time you are proficient and

knowledgeable about it, the product becomes obsolete. This approach is also very

device dependent. The knowledge and diagnostic methods used are only applicable

to that particle device or system. In contrast, the model based approach is strongly

device independent. It reasons about from first principles and just needs to know

the basic structure/behavior of the system and its components. This information is

often supplied by the description used to build the device in the first place. The

model based approach is also more methodical and comprehensive. It is less likely to

miss something as opposed to the rule based approach which relies on a subjective

expert's knowledge. Finally rule based systems offer very little help in thinking about

or representing structure and behavior. It does not use that for diagnosis and does

not lead us to think in such terms. This makes the diagnosis harder to understand

or follow for those who are not experts about the system.

3.3.4

When not to use the model-based approach

The model based approach does offer significant advantages compared to other trouble shooting approaches. However it is not the best approach to use in all situations.

If the system that is to be modeled is too complex, the model based approach is

unsuccessful. There are too many unknown variables that just aren't modeled. It

would be too complicated to include all of the information needed to correctly predict and understand the behavior of the system. Conversely, if the system is very

simple, the model based approach isn't optimal. For simple systems, we can model

its behavior completely and exhaustively. The faults considered are well known and

can be enumerated beforehand reliably. Thus a fault model approach such as a fault

29

dictionary would be the optimal approach here.

3.4

Three Fundamental Tasks

The task of model based diagnosis can be broken down into three fundamental task.

Once a fault or discrepancy is observed, a set of hypotheses must be generated to try

to explain what went wrong. Each of these hypothese must be tested to see if it is

consistent with the discrepancies observed. Finally all of the consistent hypotheses

must be discriminated between to find the best, most likely answer.

3.4.1

Hypothesis Generation

A hypothesis generator should typically have three desired qualities. A good generator

should be complete, it should be able to produce all plausible hypotheses. It should be

non-redundant,only unique hypotheses should be generated. It should be informed,

only a small fraction of the hypotheses generated should be proven incorrect after

the testing process. We assume that the device/system in question is modeled as

a collection of several interacting components each with inputs and outputs. We

also postulate that there is a stream of data that flows through the system from one

component to another with each component processing the inputted data in some way.

The first simplification is that we only need to consider components that are upstream

of the discrepancy to be suspects for faultiness. Another idea is that not every input

to a component influences the output. There is thus no need to follow irrelevant inputs

upstream for the same reason for not following components downstream. If there is

more than one discrepancy, we can generate a set of suspect components for each and

intersect them. This may further reduce the amount of suspect components to test.

Hypothesis generation thus becomes a process of following the paths backwards from

the discrepancies.

30

3.4.2

Hypothesis Testing

The second fundamental task of model based diagnosis is to test each of the potential

hypotheses generated and see if it can account for all the observations made. One

simple method is to enumerate all the ways each component in the device can malfunction, then simulate the behavior of the entire device on the original set of inputs

under the assumption that the suspect component is malfunctioning in the specified

way. If the simulated results match the observed results, then that hypothesis is

consistent with the observations and is retained, else it is discarded. The problem

with this is that one must have a complete description of the way in which every

single component can misbehave, otherwise the simulation is not accurate. A more

advanced technique is to use constraint suspension. The basic idea behind this technique is to model the behavior of each component as a set of constraints, and test

suspects by determining whether it is consistent to believe that only the suspect is

malfunctioning. Thus given the known inputs and observed outputs, is it consistent

to believe that all components other than the suspect are working properly? The

traditional method to handling inconsistencies in a contraint network is to find a

value to retract. In the hypothesis testing case though, we want to consider which

contraint rather than value to retract in order to remove the inconsistency.

3.4.3

Hypothesis Discrimination

Now that we have a set of hypotheses that all satisfy the observed discrepancies, we

must have a method to choose or discriminate among them. There are a couple of

approaches and each will be discussed briefly in the proceeding sections. The first

method involves variations on probing while the second involves testing.

Probing

Probing involves running the system again with the same inputs but this time, gather

data that was not present before by probing values within the system itself. With

this new data, not all of the hypotheses will be consistent with it and some will have

31

to be discarded.

Using Structure and Behavior

Just probing at random locations is not very optimal. A smarter approach would

be to use the structure and behavior of the system to choose locations where the

information probed would be more discriminatory towards the possible hypotheses.

By choosing locations which are upstream of the discrepancy, we can improve our

chances of finding out more useful information which can further discriminate among

the hypotheses.

Using Failure Probabilities

When probing for locations which are more informative than others, it may be the

case that there are several locations which are equally informative. It would be easy

though more costly to just probe all of these locations but if we can only probe once

or a small amount of times, we'd want to pick the best one. With the use of failure

probabilities, we can know which components are more likely to fail, thus it would be

better to probe those equally informative locations which are near the more likely to

fail components. This would further improve the chances of finding useful definitive

information for hypothesis discrimination.

Testing

The second basic technique for hypothesis discrimination is testing. Here we select

new inputs and once again observe the outputs. The set of possible hypotheses thus

must also satisfy the observations given these new inputs and observed outputs. This

can be done a multiple number of times if allowable to continually trim down the set

of possible hypotheses.

Cost Considerations

One consideration when choosing between the various techniques described is cost.

Not all techniques are equal cost-wise. For example, using an optimal probe is more

32

accurate but it might be very costly to find the optimal probe. It might have been

cheaper to use non-optimal probes a multiple amount of times. Similarly, testing

is a good approach for hypothesis discrimination. However it might be too costly

or even impossible to run a new set of inputs on the system. This is a real world

constraint that must be taken into account when designing a model based trouble

shooting system.

3.5

Conclusion

In summary, model based troubleshooting is based on the interaction between observation and prediction. It is symptom directed and reasons backwards from first

principles given a good model of the system. Model based troubleshooting is device

and domain independent.

The ideas can be equally extended towards other non-

related fields. There are three fundamental tasks that comprise the process. They

are hypothesis generation, hypothesis testing, and hypothesis discrimination. There

are many well understood techniques for reasoning about a model to diagnose the

fault. However the harder problem is in coming up with a good model. There is

an inherent tradeoff between completeness and complexity. A good model needs to

model everything about the system taking into account every single minor detail.

However such a large model can often be too complex and might contain too much

information which is not useful for trouble shooting. These are the problems that

many researchers are currently striving to find solutions to.

33

Chapter 4

Design

We have discussed the viability of using Bayesian nets in a variety of AI applications

such as diagnosis, data interpretation, and trouble shooting. Bayesian nets are a

powerful tool that can be integrated into many existing systems to provide additional

functionality which can prove to be extremely useful. Model based trouble shooting

has also been discussed. This is a very practical area that has broad applications.

The fusion of two such powerful ideas and the results will be examined.

4.1

Problem Statement

Why is network intrusion a problem? As computer networks get larger and larger,

the level of coordination needed to organize such a structure increases dramatically.

Computer networks have grown substantially at such a rapid pace with no signs of

slowing down. Unfortunately, as the computer network has grown, so too has the

art of computer hacking. Keeping a network secure from outside intruders is very

important. In sensitive applications such as those involving company trade secrets or

military knowledge, it is of the utmost priority to make sure such information is kept

secure. The current trend is to have a large network of distributed systems sharing

a pool of common resources. A distributed system is inherently harder to protect

against hackers as opposed to one single supercomputer mainframe. There are more

areas to attack, either blatantly or discretely.

34

In order to design a system that is resistant to such attacks, one would like to be

able to assume a framework of absolute trust requiring a provably impenetrable and

incorruptible trusted computing base[7]. This is not a reasonable or realistic task to

accomplish so the question thus becomes, how do we perform computations in the face

of unreliable resources? How can we model such a system effectively? The problem

becomes very complex as a result of the dynamic nature of networks, distributed

computations, lack of monitoring on all desired inputs/outpus from processes, etc..

Additional complexities arise from the fact that not all hacks are obvious. There are

some that are more passive in nature, e.g. resource stealing. There are also different

levels of "hackedness". For example, if a hacker sniffs a password for a user of a server

but that user doesn't have any root access, the hacker is very limited in what he/she

can do to harm the server. However if a hacker was able to gain root access, that

server has been totally compromised and is not to be trusted as well. Additionally,

different computations can have varying levels of sensitivity. For example if a user

wanted to send a file to be printed out to a printer and the file was just a scanned

image of his dog, that is a very low sensitivity process. However, if a military general

was sending an email to his captains to give them orders about what targets to strike,

that would be an extremely sensitive process. That must be taken into account when

assessing the risk of doing such a computation on a resource that is not totally trusted.

As we can see, this is a very difficult problem that must be addressed nonetheless.

There are many security issues to attempt to solve but in this project, the focus will

be on resource stealing. Resource stealing is difficult to detect since it is passive.

Nothing completely wrong occurs. Some processes might take longer to compute but

the time to compute is hardly constant. It depends on the level of network traffic,

the system load, the amount of resources required for the process, the priority of the

computation in the resource's queue, etc.. Thus we can never be sure if the system

has been compromised in such a way. Normal troubleshooting methods are ineffective

at dealing with this problem since there hasn't really been a definite fault. However

we would still like to get some information about the relative likelihood that some

system resource has been compromised. Probabilistic methods are the obvious tool

35

of choice here. The following sections will describe the model used to describe this

situation of a general network system.

4.2

Process modeling

Model based troubleshooting is a good approach to use for solving this problem. One

of the benefits of model based reasoning is the fact that no specific fault models

need to be specified. We only need to model what a component is supposed to do,

how it is supposed to work. A property of model based reasoning is: something is

malfunctioning if it's not doing what it's supposed to do, no matter what else it may

be doing. Thus it isn't required to prespecify how the component might fail since

a fault is defined as any behavior that doesn't match expectations. This is a very

desirable property in the network instrusion problem. We are unsure of exactly how

the system components can be compromised or even what the particular behavior will

be if it is indeed hacked. There are a lot of unknowns in that respect, which is why

it is ideal to use model based reasoning. All these details are swept underneath the

carpet as they are not necessary. Valuable information can still be garnered through

this process.

The model we will be using is as follows. The computations are modeled as component nodes. A given computation can take input from another computation or can

have it's output be linked to another computation. In our model, a computation is an

abstract term describing anything that takes in input information, processes it using

some prespecified resources, and outputs the result. Thus all of these computation

nodes are linked together in a network with each component containing information

about which resource it uses. Note that resources can be shared among components.

Resources are also modeled as nodes and contain information about which components executes on it.

Similar to the GDE/Sherlock circuit fault troubleshooter, an assumptions baseds

truth maintenance system will be used to maintain consistency in the model. Thus

troubleshooting a fault will be a matter of deciding which constraint to retract through

36

the process of constraint suspension. In our system, we use Joshua, a knowledge based

reasoning system built in Symbolics Common Lisp. This will provide the framework

and infrastructure to allow us to have truth maintenance in the system in order to

detect if there is an inconsistency or not given the inputs and outputs of the system.

Joshua is an extensible software product for building and delivering expert system

applications. It is a very compact system, implemented in the Symbolics Genera

environment. It has a statement oriented Lisp-like syntax. Joshua is at its core a

rule-based inference language. It has five major components: predications, database,

rules, protocol of inference, and truth maintenance system. Our application will draw

on a few of these components.

4.3

Input/Output Black Box modeling

Each component is modeled as a black box node with input and output ports. This is

a very abstract view of the component. We do not specify how it does the computation

nor what particular faults it might have. All we specify is what type of inputs it takes

in, which resources it uses to compute with, and what outputs it has. The component

can be thought of as a factory. It waits for its resources, the inputs to come in. When

it has enough of the resources to start one of its processes, it begins. When the process

is done, it outputs the result as the product. Now note that it is possible that there are

multiple inputs and outputs related in any arbitrary way with regards to which inputs

are needed to create the corresponding outputs. For our problem domain of resource

stealing, it is necessary to model the computation times needed for that component.

We do this by specifying a range of time units that the component needs to complete

the computation when it is operating normally. For example, component A has a

normal computation time range of [1,5]. This means that at best, the computation

takes 1 unit of time. At worse it takes 5 units of time to complete during the normal

operating state. We can thus specify the arrival and departure times of the inputs and

outputs. These can be exact times or ranges depending on how things are specified.

All of the components are modeled as such. The data pathways are then completed so

37

A

FOO

[3,5] NORMAL

C

[7,10] HACKED

B

D

Resource: WILSON

Figure 4-1: A simple sample component.

that the outputs of components go into the inputs of other components as the model

would dictate. Thus we have now modeled the dataflow between the components

as well as the timing information for computation. An important point to note is

that nothing is being said about the correctness of the information passed. Indeed

it is virtually an assumption that all values that get passed are correct and do not

affect the computation time of components. That is, even if a component receives

an erroneous input, it will still take the same amount of time to process that input

as compared to a correct expected input. We assume this because the problem we

are trying to tackle is resource stealing where we assume that the system hasn't been

hacked into blatantly, i.e. all the processes produce the same correct values, only the

computation time is affected.

In figure 4-1, the component is named FOO. It has two inputs, A and B and two

outputs C and D. Inputs A and B combine and get processed to produce outputs C

and D. Thus process FOO would have to wait until both inputs A and B are there

before it can start computing. FOO executes on resource WILSON. FOO has two

possible states, a NORMAL and a HACKED state. In the NORMAL state, FOO

takes time [3,5] once both inputs A and B are present to produce outputs C and D.

If FOO is in the hacked state, it takes time [7,10] to produce C and D.

In figure 4-2, the component is named BAR. It has two inputs A and B and two

outputs C and D. Here inputs A and B are independent of each other and do not

interact at all to produce the outputs. Input A is used to produce output X while

input B is used to produce output Y. The two inputs do not need to wait till the

38

A

BAR

B

X

[2,7]

NORMAL

[3,61

[5,10]

HACKED

Y

Resource: ATHENA

Figure 4-2: A second example of a component module.

other one gets there before they can start processing since they are independent of

each other. The first process of input A to output X takes time [1,5] in the NORMAL

state and time [3,6] in the HACKED state. The second process of input B to output

Y takes time [2,7] in the NORMAL state and time [5,10] in the HACKED state.

Component BAR operates on resource ATHENA.

These two figures are indicative of the types of components that will be present

in the process models. A more complex example would have many more such components linked together in more complicated ways.

4.4

Fault modeling

Similar to the GDE[11] and Sherlock[5] circuit fault troubleshooter, each component

and resource module has several fault states.

Now instead of having states that

describe exact types of faults that can occur, only the behavior of the system in fault

states are given. This covers up a lot of the specific details about how a particular

module could have been compromised. For example, exhaustively enumerating the

ways in which a server could be hacked is intractable.

Possibilities include user

accounts being hacked into, root access being compromised, printer resources being

compromised, etc.. Each module has the obvious NORMAL operating state. There

can be several other state of operations.

Possibilities include HACKED, SLOW,

FAST, etc.. One good idea to use is to also include an OTHER state. This is to

include the miscellaneous conditions that we don't take into account; think of it as

39

a leak probability. Our model of the system is necessarily incomplete and simplified.

As completeness increases, so too does complexity. To keep things tractable, we

use a more simplified view of the system but must allow for an OTHER state for

completeness. The Sherlock system[5] contains a lot of similar ideas that our system

borrows from. The interested reader is encouraged to take a look at the reference. Our

approach towards modeling is similar to the Sherlock circuit fault troubleshooter. The

main idea from the perspective of diagnosis is to identify consistent modes of behavior,

correct or faulty. Thus we are assuming that if a resource is hacked, even though we

don't know the details or specifics of what exactly happened, the behavior of it,

namely the computation time will be consistent. Therefore, we can group all of it up

into the timing range information for the HACKED state. Similar arguments goes for

the other states as well. For our application, we allow only the component modules to

have different consistent states of behavior. The resources are in a separate set from

the components. The reason for this is because the focus is on the component level.

Resources can be thought of as the root nodes in this graph model. They are base

resources that do not depend on other modules for operation. Thus instead of having

a conditional probability dependence on other modules, they will just have a prior

probability distribution for the modes of operation. In some sense, they still contain

the mutltiple states of behavior idea but is executed in a different fasion. Resource

modules will have prior probabilities of being in the NORMAL, HACKED, OTHER,

etc.. state.

4.5