

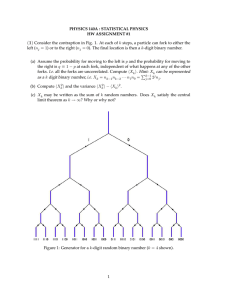

PHYSICS 140A : STATISTICAL PHYSICS
HW ASSIGNMENT #1 SOLUTIONS

(1) Consider the contraption in Fig. 1. At each of $k$ steps, a particle can fork to either the left ($n_j = 1$) or to the right ($n_j = 0$). The final location is then a $k$-digit binary number.

(a) Assume the probability for moving to the left is $p$ and the probability for moving to the right is $q \equiv 1 - p$ at each fork, independent of what happens at any of the other forks. I.e. all the forks are uncorrelated. Compute $\langle X_k \rangle$. Hint: $X_k$ can be represented as a $k$-digit binary number, i.e. $X_k = n_{k-1} n_{k-2} \cdots n_1 n_0 = \sum_{j=0}^{k-1} 2^j n_j$.

(b) Compute $\langle X_k^2 \rangle$ and the variance $\langle X_k^2 \rangle - \langle X_k \rangle^2$.

(c) $X_k$ may be written as the sum of $k$ random numbers. Does $X_k$ satisfy the central limit theorem as $k \to \infty$? Why or why not?

Figure 1: Generator for a $k$-digit random binary number ($k = 4$ shown).

Solution:

(a) The position after $k$ forks can be written as a $k$-digit binary number: $n_{k-1} n_{k-2} \cdots n_1 n_0$. Thus,

$$X_k = \sum_{j=0}^{k-1} 2^j n_j ,$$

where $n_j = 0$ or $1$ according to $P_n = p\,\delta_{n,1} + q\,\delta_{n,0}$. Now it is clear that $\langle n_j \rangle = p$, and therefore

$$\langle X_k \rangle = p \sum_{j=0}^{k-1} 2^j = p \cdot \left( 2^k - 1 \right) .$$

(b) The variance in $X_k$ is

$$\mathrm{Var}(X_k) = \langle X_k^2 \rangle - \langle X_k \rangle^2 = \sum_{j=0}^{k-1} \sum_{j'=0}^{k-1} 2^{j+j'} \Big( \langle n_j n_{j'} \rangle - \langle n_j \rangle \langle n_{j'} \rangle \Big) = p(1-p) \sum_{j=0}^{k-1} 4^j = p(1-p) \cdot \tfrac{1}{3} \left( 4^k - 1 \right) ,$$

since $\langle n_j n_{j'} \rangle - \langle n_j \rangle \langle n_{j'} \rangle = p(1-p)\,\delta_{jj'}$. It follows that $\langle X_k^2 \rangle = \mathrm{Var}(X_k) + \langle X_k \rangle^2 = \tfrac{1}{3}\, p(1-p) \left( 4^k - 1 \right) + p^2 \left( 2^k - 1 \right)^2$.

(c) Clearly the distribution of $X_k$ does not obey the CLT, since $\langle X_k \rangle$ scales exponentially with $k$. Also note

$$\lim_{k \to \infty} \frac{\sqrt{\mathrm{Var}(X_k)}}{\langle X_k \rangle} = \sqrt{\frac{1-p}{3p}} ,$$

which is a constant. For distributions obeying the CLT, the ratio of the rms fluctuations to the mean scales as the inverse square root of the number of trials. The reason that this distribution does not obey the CLT is that the variance of the individual terms is increasing with $j$.

(2) Let $P(x) = (2\pi\sigma^2)^{-1/2}\, e^{-(x-\mu)^2/2\sigma^2}$. Compute the following integrals:

(a) $I = \int_{-\infty}^{\infty} dx\, P(x)\, x^3$.

(b) $I = \int_{-\infty}^{\infty} dx\, P(x) \cos(Qx)$.

(c) $I = \int_{-\infty}^{\infty} dx \int_{-\infty}^{\infty} dy\, P(x)\, P(y)\, e^{\kappa^2 xy}$. You may set $\mu = 0$ to make this somewhat simpler.
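Under what conditions does this expression converge?

Solution:

(a) Write $x^3 = (x - \mu + \mu)^3 = (x-\mu)^3 + 3(x-\mu)^2 \mu + 3(x-\mu)\mu^2 + \mu^3$, so that, with $t \equiv x - \mu$,

$$\langle x^3 \rangle = \frac{1}{\sqrt{2\pi\sigma^2}} \int_{-\infty}^{\infty} dt\, e^{-t^2/2\sigma^2} \left\{ t^3 + 3t^2\mu + 3t\mu^2 + \mu^3 \right\} .$$

Since $\exp(-t^2/2\sigma^2)$ is an even function of $t$, odd powers of $t$ integrate to zero. We have $\langle t^2 \rangle = \sigma^2$, so $\langle x^3 \rangle = \mu^3 + 3\mu\sigma^2$.

A nice trick for evaluating $\langle t^{2k} \rangle$:

$$\langle t^{2k} \rangle = \frac{\int_{-\infty}^{\infty} dt\, e^{-\lambda t^2}\, t^{2k}}{\int_{-\infty}^{\infty} dt\, e^{-\lambda t^2}} = \frac{(-1)^k \dfrac{d^k}{d\lambda^k} \int_{-\infty}^{\infty} dt\, e^{-\lambda t^2}}{\int_{-\infty}^{\infty} dt\, e^{-\lambda t^2}} \Bigg|_{\lambda = 1/2\sigma^2} = (-1)^k \sqrt{\lambda}\, \frac{d^k}{d\lambda^k} \frac{1}{\sqrt{\lambda}} \Bigg|_{\lambda = 1/2\sigma^2} = \tfrac{1}{2} \cdot \tfrac{3}{2} \cdots \tfrac{(2k-1)}{2}\, \lambda^{-k} \Big|_{\lambda = 1/2\sigma^2} = \frac{(2k)!}{2^k\, k!}\, \sigma^{2k} .$$

(b) We have

$$\langle \cos(Qx) \rangle = \mathrm{Re}\, \langle e^{iQx} \rangle = \mathrm{Re} \left[ \frac{e^{iQ\mu}}{\sqrt{2\pi\sigma^2}} \int_{-\infty}^{\infty} dt\, e^{-t^2/2\sigma^2}\, e^{iQt} \right] = \mathrm{Re} \left[ e^{iQ\mu}\, e^{-Q^2\sigma^2/2} \right] = \cos(Q\mu)\, e^{-Q^2\sigma^2/2} .$$

Here we have used the result

$$\int_{-\infty}^{\infty} dt\, e^{-\alpha t^2 - \beta t} = \sqrt{\frac{\pi}{\alpha}}\; e^{\beta^2/4\alpha}$$

with $\alpha = 1/2\sigma^2$ and $\beta = -iQ$. Another way to do it is to use the general result derived above in part (a) for $\langle t^{2k} \rangle$ and do the sum. Odd powers of $t$ average to zero, so

$$\langle \cos(Qx) \rangle = \mathrm{Re} \left[ \frac{e^{iQ\mu}}{\sqrt{2\pi\sigma^2}} \int_{-\infty}^{\infty} dt\, e^{-t^2/2\sigma^2}\, e^{iQt} \right] = \cos(Q\mu) \sum_{k=0}^{\infty} \frac{(-Q^2)^k}{(2k)!}\, \langle t^{2k} \rangle = \cos(Q\mu) \sum_{k=0}^{\infty} \frac{1}{k!} \left( -\tfrac{1}{2} Q^2 \sigma^2 \right)^k = \cos(Q\mu)\, e^{-Q^2\sigma^2/2} .$$

(c) We have

$$I = \int_{-\infty}^{\infty} dx \int_{-\infty}^{\infty} dy\, P(x)\, P(y)\, e^{\kappa^2 xy} = \frac{e^{-\mu^2/\sigma^2}}{2\pi\sigma^2} \int d^2x\; e^{-\frac{1}{2} A_{ij} x_i x_j}\, e^{b_i x_i} ,$$

where $\boldsymbol{x} = (x, y)$,

$$A = \begin{pmatrix} \sigma^{-2} & -\kappa^2 \\ -\kappa^2 & \sigma^{-2} \end{pmatrix} , \qquad \boldsymbol{b} = \begin{pmatrix} \mu/\sigma^2 \\ \mu/\sigma^2 \end{pmatrix} .$$

Using the general formula for the Gaussian integral,

$$\int d^n x\; e^{-\frac{1}{2} A_{ij} x_i x_j}\, e^{b_i x_i} = \frac{(2\pi)^{n/2}}{\sqrt{\det(A)}}\, \exp\!\left( \tfrac{1}{2} A^{-1}_{ij}\, b_i b_j \right) ,$$

we obtain

$$I = \frac{1}{\sqrt{1 - \kappa^4\sigma^4}}\, \exp\!\left( \frac{\mu^2 \kappa^2}{1 - \kappa^2\sigma^2} \right) .$$

Convergence requires $\kappa^2\sigma^2 < 1$.

(3) The binomial distribution,

$$B_N(n, p) = \binom{N}{n}\, p^n (1-p)^{N-n} ,$$

tells us the probability for $n$ successes in $N$ trials if the individual trial success probability is $p$. The average number of successes is $\nu = \sum_{n=0}^{N} n\, B_N(n, p) = Np$. Consider the limit $N \to \infty$.

(a) Show that the probability of $n$ successes becomes a function of $n$ and $\nu$ alone. That is, evaluate

$$P_\nu(n) = \lim_{N \to \infty} B_N(n, \nu/N) .$$

This is the Poisson distribution.

(b) Show that the moments of the Poisson distribution are given by

$$\langle n^k \rangle = e^{-\nu} \left( \nu \frac{\partial}{\partial \nu} \right)^{\!k} e^{\nu} .$$

(c) Evaluate the mean and variance of the Poisson distribution.

The Poisson distribution is also known as the law of rare events since $p = \nu/N \to 0$ in the $N \to \infty$ limit. See http://en.wikipedia.org/wiki/Poisson_distribution#Occurrence for some amusing applications of the Poisson distribution.

Solution:

(a) We have

$$P_\nu(n) = \lim_{N \to \infty} \frac{N!}{n!\,(N-n)!} \left( \frac{\nu}{N} \right)^{\!n} \left( 1 - \frac{\nu}{N} \right)^{\!N-n} .$$

Note that

$$(N-n)! \simeq (N-n)^{N-n}\, e^{n-N} = N^{N-n} \left( 1 - \frac{n}{N} \right)^{\!N-n} e^{n-N} \to N^{N-n}\, e^{-N} ,$$

where we have used the result $\lim_{N \to \infty} \left( 1 + \frac{x}{N} \right)^{N} = e^x$. Since likewise $N! \simeq N^N e^{-N}$, the ratio $N!/(N-n)!$ tends to $N^n$, while $\left( 1 - \nu/N \right)^{N-n} \to e^{-\nu}$. Thus, we find

$$P_\nu(n) = \frac{1}{n!}\, \nu^n\, e^{-\nu} ,$$

the Poisson distribution. Note that $\sum_{n=0}^{\infty} P_\nu(n) = 1$ for any $\nu$.

(b) We have

$$\langle n^k \rangle = \sum_{n=0}^{\infty} P_\nu(n)\, n^k = \sum_{n=0}^{\infty} \frac{1}{n!}\, n^k\, \nu^n\, e^{-\nu} = e^{-\nu} \left( \nu \frac{d}{d\nu} \right)^{\!k} \sum_{n=0}^{\infty} \frac{\nu^n}{n!} = e^{-\nu} \left( \nu \frac{\partial}{\partial \nu} \right)^{\!k} e^{\nu} .$$

(c) Using the result from (b), we have $\langle n \rangle = \nu$ and $\langle n^2 \rangle = \nu + \nu^2$, hence $\mathrm{Var}(n) = \nu$.

(4) Consider a $D$-dimensional random walk on a hypercubic lattice. The position of a particle after $N$ steps is given by

$$\boldsymbol{R}_N = \sum_{j=1}^{N} \hat{\boldsymbol{n}}_j ,$$

where $\hat{\boldsymbol{n}}_j$ can take on one of $2D$ possible values: $\hat{\boldsymbol{n}}_j \in \{ \pm\hat{\boldsymbol{e}}_1, \ldots, \pm\hat{\boldsymbol{e}}_D \}$, where $\hat{\boldsymbol{e}}_\mu$ is the unit vector along the positive $x_\mu$ axis. Each of these possible values occurs with probability $1/2D$, and each step is statistically independent from all other steps.

(a) Consider the generating function $S_N(\boldsymbol{k}) = \langle e^{i\boldsymbol{k} \cdot \boldsymbol{R}_N} \rangle$. Show that

$$\langle R_N^{\alpha_1} \cdots R_N^{\alpha_J} \rangle = \left( \frac{1}{i} \frac{\partial}{\partial k_{\alpha_1}} \right) \cdots \left( \frac{1}{i} \frac{\partial}{\partial k_{\alpha_J}} \right) S_N(\boldsymbol{k}) \bigg|_{\boldsymbol{k}=0} .$$

For example, $\langle R_N^{\alpha} R_N^{\beta} \rangle = -\,\partial^2 S_N(\boldsymbol{k}) / \partial k_\alpha\, \partial k_\beta \big|_{\boldsymbol{k}=0}$.

(b) Evaluate $S_N(\boldsymbol{k})$ for the case $D = 3$ and compute the quantities $\langle X_N^4 \rangle$ and $\langle X_N^2 Y_N^2 \rangle$.

Solution:

(a) The result follows immediately from

$$\frac{1}{i} \frac{\partial}{\partial k_\alpha}\, e^{i\boldsymbol{k} \cdot \boldsymbol{R}} = R_\alpha\, e^{i\boldsymbol{k} \cdot \boldsymbol{R}} , \qquad \frac{1}{i} \frac{\partial}{\partial k_\alpha}\, \frac{1}{i} \frac{\partial}{\partial k_\beta}\, e^{i\boldsymbol{k} \cdot \boldsymbol{R}} = R_\alpha R_\beta\, e^{i\boldsymbol{k} \cdot \boldsymbol{R}} ,$$

et cetera. Keep differentiating with respect to the various components of $\boldsymbol{k}$.

(b) For $D = 3$, there are six possibilities for $\hat{\boldsymbol{n}}_j$: $\pm\hat{\boldsymbol{x}}$, $\pm\hat{\boldsymbol{y}}$, and $\pm\hat{\boldsymbol{z}}$. Each occurs with a probability $\tfrac{1}{6}$, independent of all the other $\hat{\boldsymbol{n}}_{j'}$ with $j' \neq j$. Thus,

$$S_N(\boldsymbol{k}) = \prod_{j=1}^{N} \langle e^{i\boldsymbol{k} \cdot \hat{\boldsymbol{n}}_j} \rangle = \left[ \tfrac{1}{6} \left( e^{ik_x} + e^{-ik_x} + e^{ik_y} + e^{-ik_y} + e^{ik_z} + e^{-ik_z} \right) \right]^{N} = \left( \frac{\cos k_x + \cos k_y + \cos k_z}{3} \right)^{\!N} .$$

We have

$$\langle X_N^4 \rangle = \frac{\partial^4 S(\boldsymbol{k})}{\partial k_x^4} \bigg|_{\boldsymbol{k}=0} = \frac{\partial^4}{\partial k_x^4} \left( 1 - \tfrac{1}{6} k_x^2 + \tfrac{1}{72} k_x^4 + \ldots \right)^{\!N} \bigg|_{k_x=0}$$

$$= \frac{\partial^4}{\partial k_x^4} \left[ 1 + N \left( -\tfrac{1}{6} k_x^2 + \tfrac{1}{72} k_x^4 + \ldots \right) + \tfrac{1}{2} N(N-1) \left( -\tfrac{1}{6} k_x^2 + \ldots \right)^{2} + \ldots \right]_{k_x=0}$$

$$= \frac{\partial^4}{\partial k_x^4} \left[ 1 - \tfrac{1}{6} N k_x^2 + \tfrac{1}{72} N^2 k_x^4 + \ldots \right]_{k_x=0} = \tfrac{1}{3} N^2 .$$

Similarly, we have

$$\langle X_N^2 Y_N^2 \rangle = \frac{\partial^4 S(\boldsymbol{k})}{\partial k_x^2\, \partial k_y^2} \bigg|_{\boldsymbol{k}=0} = \frac{\partial^4}{\partial k_x^2\, \partial k_y^2} \left( 1 - \tfrac{1}{6} (k_x^2 + k_y^2) + \tfrac{1}{72} (k_x^4 + k_y^4) + \ldots \right)^{\!N} \bigg|_{k_x=k_y=0}$$

$$= \frac{\partial^4}{\partial k_x^2\, \partial k_y^2} \left[ 1 + N \left( -\tfrac{1}{6} (k_x^2 + k_y^2) + \tfrac{1}{72} (k_x^4 + k_y^4) + \ldots \right) + \tfrac{1}{2} N(N-1) \left( -\tfrac{1}{6} (k_x^2 + k_y^2) + \ldots \right)^{2} + \ldots \right]_{k_x=k_y=0}$$

$$= \frac{\partial^4}{\partial k_x^2\, \partial k_y^2} \left[ 1 - \tfrac{1}{6} N (k_x^2 + k_y^2) + \tfrac{1}{72} N^2 (k_x^4 + k_y^4) + \tfrac{1}{36} N(N-1)\, k_x^2 k_y^2 + \ldots \right]_{k_x=k_y=0} = \tfrac{1}{9} N(N-1) .$$

(5) A rare disease is known to occur in $f = 0.02\%$ of the general population. Doctors have designed a test for the disease with $\nu = 99.90\%$ sensitivity and $\rho = 99.95\%$ specificity.

(a) What is the probability that someone who tests positive for the disease is actually sick?

(b) Suppose the test is administered twice, and the results of the two tests are independent. If a random individual tests positive both times, what are the chances he or she actually has the disease?

(c) For a binary partition of events, find an expression for $P(X|A \cap B)$ in terms of $P(A|X)$, $P(B|X)$, $P(A|\neg X)$, $P(B|\neg X)$, and the priors $P(X)$ and $P(\neg X) = 1 - P(X)$. You should assume $A$ and $B$ are independent, so $P(A \cap B|X) = P(A|X) \cdot P(B|X)$.

Solution:

(a) Let $X$ indicate that a person is infected, and $A$ indicate that a person has tested positive. Then $\nu = P(A|X) = 0.9990$ is the sensitivity and $\rho = P(\neg A|\neg X) = 0.9995$ is the specificity. From Bayes' theorem, we have

$$P(X|A) = \frac{P(A|X) \cdot P(X)}{P(A|X) \cdot P(X) + P(A|\neg X) \cdot P(\neg X)} = \frac{\nu f}{\nu f + (1-\rho)(1-f)} ,$$

where $P(A|\neg X) = 1 - P(\neg A|\neg X) = 1 - \rho$ and $P(X) = f$ is the fraction of infected individuals in the general population. With $f = 0.0002$, we find $P(X|A) = 0.2856$.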
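The arithmetic in part (a) is easy to check numerically. The following is a minimal Python sketch, not part of the original solution; the function name `posterior_given_positive` and its argument names are illustrative assumptions, while the numbers are those given in the problem.

```python
def posterior_given_positive(prior, sensitivity, specificity):
    """Bayes' theorem for one positive test: P(X|A) = nu f / (nu f + (1 - rho)(1 - f))."""
    true_positive = sensitivity * prior                    # P(A|X) P(X)
    false_positive = (1.0 - specificity) * (1.0 - prior)   # P(A|not X) P(not X)
    return true_positive / (true_positive + false_positive)


f = 0.0002    # prevalence, 0.02%
nu = 0.9990   # sensitivity, P(A|X)
rho = 0.9995  # specificity, P(not A|not X)

p_sick = posterior_given_positive(f, nu, rho)
print(f"P(X|A) = {p_sick:.4f}")  # prints P(X|A) = 0.2856
```

Even with a highly accurate test, the small prior $f$ means false positives from the healthy majority outnumber true positives, which is why the posterior is below 30%.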
(b) We now need

$$P(X|A^2) = \frac{P(A^2|X) \cdot P(X)}{P(A^2|X) \cdot P(X) + P(A^2|\neg X) \cdot P(\neg X)} = \frac{\nu^2 f}{\nu^2 f + (1-\rho)^2 (1-f)} ,$$

where $A^2$ indicates two successive, independent tests. We find $P(X|A^2) = 0.9987$.

(c) Assuming $A$ and $B$ are independent, we have

$$P(X|A \cap B) = \frac{P(A \cap B|X) \cdot P(X)}{P(A \cap B|X) \cdot P(X) + P(A \cap B|\neg X) \cdot P(\neg X)} = \frac{P(A|X) \cdot P(B|X) \cdot P(X)}{P(A|X) \cdot P(B|X) \cdot P(X) + P(A|\neg X) \cdot P(B|\neg X) \cdot P(\neg X)} .$$

This is exactly the formula used in part (b).

(6) Compute the entropy of the F08 Physics 140A grade distribution (in bits). The distribution is available from http://physics.ucsd.edu/students/courses/fall2008/physics140. Assume 11 possible grades: A+, A, A-, B+, B, B-, C+, C, C-, D, F.

Solution:

Assuming the only possible grades are A+, A, A-, B+, B, B-, C+, C, C-, D, F (11 possibilities), then from the chart we produce the entries in Tab. 1. We then find

$$S = -\sum_{n=1}^{11} p_n \log_2 p_n = 2.82 \text{ bits} .$$

The maximum information content would be for $p_n = \tfrac{1}{11}$ for all $n$, leading to $S_{\max} = \log_2 11 = 3.46$ bits.

| Grade | $N_n$ | $-p_n \log_2 p_n$ |
|-------|-------|-------------------|
| A+    | 2     | 0.224             |
| A     | 9     | 0.492             |
| A-    | 7     | 0.450             |
| B+    | 3     | 0.289             |
| B     | 9     | 0.492             |
| B-    | 3     | 0.289             |
| C+    | 1     | 0.138             |
| C     | 2     | 0.224             |
| C-    | 0     | 0                 |
| D     | 2     | 0.224             |
| F     | 0     | 0                 |

Table 1: F08 Physics 140A final grade distribution ($\sum_n N_n = 38$).
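The entropy quoted in problem (6) can be reproduced directly from the grade counts in Table 1. The sketch below is an illustrative check, not part of the original solution; the names `grade_counts` and `entropy_bits` are assumptions, and empty grade bins are skipped using the convention $0 \log_2 0 = 0$.

```python
import math

# Grade counts N_n taken from Table 1 (38 students in total).
grade_counts = {
    "A+": 2, "A": 9, "A-": 7,
    "B+": 3, "B": 9, "B-": 3,
    "C+": 1, "C": 2, "C-": 0,
    "D": 2, "F": 0,
}


def entropy_bits(counts):
    """Shannon entropy S = -sum_n p_n log2 p_n, with p_n = N_n / sum(N_n)."""
    total = sum(counts.values())
    # Bins with N_n = 0 contribute nothing (0 * log 0 is taken to be 0).
    return -sum((n / total) * math.log2(n / total)
                for n in counts.values() if n > 0)


S = entropy_bits(grade_counts)
S_max = math.log2(len(grade_counts))  # uniform distribution over 11 grades

print(f"S     = {S:.2f} bits")      # prints S     = 2.82 bits
print(f"S_max = {S_max:.2f} bits")  # prints S_max = 3.46 bits
```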