

PROJECTION METHODS FOR LINEAR AND

NONLINEAR EQUATIONS

Dissertation submitted to the

Hungarian Academy of Sciences

for the degree "MTA Doktora"

Aurél Galántai

University of Miskolc

2003


Contents

1 Preface

2 Triangular decompositions
   2.1 Monotonicity of LU and LDU factorizations
   2.2 The geometry of LU decomposability
   2.3 Perturbations of triangular matrix factorizations
      2.3.1 Exact perturbation terms for the LU factorization
      2.3.2 Exact perturbation terms for the LDU and Cholesky factorizations
      2.3.3 Bounds for the projection $P_k(B)$
      2.3.4 Norm bounds for the perturbations of LU and LDU factorizations
      2.3.5 Norm bounds for the Cholesky factorizations
      2.3.6 Componentwise perturbation bounds
      2.3.7 Iterations for upper bounds

3 The rank reduction procedure of Egerváry
   3.1 The rank reduction operation
   3.2 The rank reduction algorithm
   3.3 Rank reduction and factorizations
   3.4 Rank reduction and conjugation
   3.5 Inertia and rank reduction

4 Finite projection methods for linear systems
   4.1 The Galerkin-Petrov projection method
   4.2 The conjugate direction methods
   4.3 The ABS projection methods
   4.4 The stability of conjugate direction methods
   4.5 The stability of the rank reduction conjugation

5 Projection methods for nonlinear algebraic systems
   5.1 Extensions of the Kaczmarz method
   5.2 Nonlinear conjugate direction methods
   5.3 Particular methods
      5.3.1 Methods with fixed direction matrices
      5.3.2 The nonlinear ABS methods
      5.3.3 Quasi-Newton ABS methods
   5.4 Monotone convergence in partial ordering
   5.5 Special applications of the implicit LU ABS method
      5.5.1 The block implicit LU ABS method on linear and nonlinear systems with block arrowhead structure
      5.5.2 Constrained minimization with implicit LU ABS methods

6 Convergence and error estimates
   6.1 A posteriori error estimates for linear and nonlinear equations
      6.1.1 Derivation and geometry of the Auchmuty estimate
      6.1.2 Comparison of estimates
      6.1.3 Probabilistic analysis
      6.1.4 The extension of Auchmuty's estimate to nonlinear systems
      6.1.5 Numerical testing
   6.2 Bounds for the convergence rate of the alternating projection method
      6.2.1 The special case of the alternating projection method
      6.2.2 A new estimate for the convergence speed
      6.2.3 An extension of the new estimate
      6.2.4 A simple computational experiment
      6.2.5 Final remarks

7 Appendices
   7.1 Notation
   7.2 Unitarily invariant matrix norms and projector norms
   7.3 Variable size test problems
   7.4 A FORTRAN program of Algorithm QNABS3

8 References

Chapter 1

PREFACE

This thesis contains the author’s work concerning the numerical solution of systems of

linear and nonlinear algebraic equations. This activity includes the development, application and testing of new and efficient algorithms (ABS methods), general algorithmic

frameworks, a special projection based local convergence theory, monotone (and global)

convergence theory in partial ordering and a perturbation theory of the linear conjugate

direction methods and rank reduction conjugation.

The investigated algorithms are essentially projection methods that are also featured as conjugate direction methods. The conjugate directions are generated using

Egerváry’s rank reduction procedure [76]. This fact and the requirements of the monotone

convergence theory led to the investigation of the triangular decompositions and the rank

reduction procedure. Concerning the triangular decompositions we obtained a monotonicity theorem in partial ordering, a geometric characterization and a complete perturbation

theory. Concerning the rank reduction algorithm we obtained several basic results that include the necessary and sufficient condition for the breakdown free computation, canonical

forms, the characterization of the related full rank factorization, characterizations of different obtainable factorizations and the inherent conjugation properties. In fact, it turned

out that all full-rank factorizations are related to triangular factorizations in a specified

way and so the conjugation procedure is triangular factorization based. Our triangular

factorization and rank reduction results are the basis for many results presented in the

thesis. These results however are useful in other subjects of numerical linear algebra as

well.

For any numerical method it is important to know the quality of the obtained

numerical solution. Here we investigate and show the practical value of the a posteriori

estimate of Auchmuty [17]. It is also important to estimate the speed of iterative algorithms. Here we give a computable ($O(n^3)$) bound for the convergence speed of the

alternating projection method whose convergence was proved by von Neumann, Halperin

and others [70].

The unscaled scalar nonlinear ABS method was developed by Abaffy, Galántai

and Spedicato [8]. The block linear and nonlinear ABS method was developed by Abaffy

and Galántai [6]. Except for the algorithm none of the joint results are included in the

thesis. The development and testing of the quasi-Newton ABS method of Section 5.3.3

is a joint work with A. Jeney. The presented convergence results are the author’s own

results.

The Appendices also contain some of the author’s results necessary in earlier

sections.


Chapter 2

TRIANGULAR DECOMPOSITIONS

The importance and usefulness of the Gaussian elimination (GE) became apparent in

the 1940’s, when the activity of Hotelling, von Neumann and Goldstine, Turing and Fox,

Huskey and Wilkinson resulted in the observation that Gaussian elimination is the right

method for digital computers for solving linear equations of the form

$$Ax = b \qquad (A \in \mathbb{R}^{m\times m})$$

(for details see [143], [233]). In fact, von Neumann and Goldstine [196] and Turing [239] discovered that GE computes an LU (LDU) factorization for general $A$ and an $LDL^T$ factorization for positive definite symmetric $A$. They also made the first error analysis of the method and the involved triangular factorizations. The Cholesky factorization and method were discovered and used first in geodesy around the 1920's. Since then the triangular factorizations and the triangular factorization based algorithms (GE and Cholesky and variants, LR method for eigenvalue computations) became important in many fields of applied mathematics and the number of related problems and papers is ever increasing (see, e.g., [143], [233]).

Here we make three contributions to the theory of triangular factorizations. We

prove the monotonicity of the LU and LDU factorizations of M-matrices in partial ordering. We also give a geometric characterization of the LU decomposability in terms of

subspace angles and gap. Finally we give a complete perturbation theory for all known

triangular decompositions. We also mention that all three subjects were originally required by the development of nonlinear ABS methods. These results however are useful

elsewhere on their own.

Definition 1 The matrix $A \in \mathbb{F}^{n\times n}$ is said to be lower triangular if $a_{ij} = 0$ for all $i < j$. If $a_{ij} = 0$ for all $i \le j$, then $A$ is strictly lower triangular. If $a_{ij} = 0$ for $i < j$ and $a_{ii} = 1$ ($i = 1, \dots, n$), then $A$ is unit lower triangular.

Definition 2 The matrix $A \in \mathbb{F}^{n\times n}$ is said to be upper triangular if $a_{ij} = 0$ for all $i > j$. If $a_{ij} = 0$ for all $i \ge j$, then $A$ is strictly upper triangular. If $a_{ij} = 0$ for $i > j$ and $a_{ii} = 1$ ($i = 1, \dots, n$), then $A$ is unit upper triangular.

The LU decomposition of a matrix $A \in \mathbb{F}^{n\times n}$ is defined by $A = LU$, where $L$ is lower triangular and $U$ is upper triangular. The LU decomposition, if it exists, is not unique. For any nonsingular diagonal matrix $D$, the decomposition $A = (LD)(D^{-1}U)$ is also an LU factorization of the matrix. The sufficient part of the following result was proved by Turing (see, e.g., [143]).

Theorem 3 Let $A \in \mathbb{F}^{n\times n}$ be a nonsingular matrix. The matrix $A$ has a unique LU decomposition

$$A = LU, \tag{2.1}$$

where $L$ is unit lower triangular and $U$ is upper triangular, if and only if the leading principal submatrices are all nonsingular.
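The criterion of Theorem 3 can be tried out on small matrices. The following is only a minimal sketch in exact rational arithmetic, not code from the thesis: the helper names `lu_unit_lower`, `det` and `leading_minors` and both test matrices are illustrative assumptions. It runs elimination without pivoting and compares the outcome with the leading principal minors.

```python
from fractions import Fraction

def lu_unit_lower(A):
    """Elimination without pivoting. Returns (L, U) with unit lower triangular L
    and upper triangular U, or None as soon as a zero pivot is met."""
    n = len(A)
    U = [[Fraction(x) for x in row] for row in A]
    L = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    for k in range(n - 1):
        if U[k][k] == 0:
            return None
        for i in range(k + 1, n):
            L[i][k] = U[i][k] / U[k][k]
            for j in range(k, n):
                U[i][j] -= L[i][k] * U[k][j]
    return L, U

def det(M):
    """Determinant by cofactor expansion (adequate for tiny examples only)."""
    if len(M) == 1:
        return Fraction(M[0][0])
    return sum((-1) ** j * M[0][j] * det([r[:j] + r[j + 1:] for r in M[1:]])
               for j in range(len(M)))

def leading_minors(A):
    """Determinants of the leading principal submatrices of orders 1, ..., n."""
    return [det([row[:k] for row in A[:k]]) for k in range(1, len(A) + 1)]

# Hypothetical example: all leading minors are nonzero, so the factors exist.
A = [[2, 1, 1], [4, 3, 3], [8, 7, 9]]
L, U = lu_unit_lower(A)

# Hypothetical example: nonsingular, but the 1 x 1 leading minor vanishes.
P = [[0, 1], [1, 0]]
no_factors = lu_unit_lower(P)
```

For the first matrix the minors are nonzero and the factors are returned; for the permutation matrix the first minor is zero and the elimination stops at the first pivot.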

Definition 4 The block LU decomposition of the partitioned matrix

$$A = [A_{ij}]_{i,j=1}^{k} \in \mathbb{F}^{n\times n} \qquad (A_{ij} \in \mathbb{F}^{l_i \times l_j})$$

is defined by $A = LU$, where $L$ and $U$ are block triangular matrices of the form

$$L = [L_{ij}]_{i,j=1}^{k} \qquad (L_{ij} \in \mathbb{F}^{l_i \times l_j};\ L_{ij} = 0,\ i < j)$$

and

$$U = [U_{ij}]_{i,j=1}^{k} \qquad (U_{ij} \in \mathbb{F}^{l_i \times l_j};\ U_{ij} = 0,\ i > j).$$

Theorem 5 The matrix $A$ has a block LU decomposition if and only if the first $k - 1$ leading principal block submatrices of $A$ are nonsingular.

For proofs of the above theorems, see e.g., [122], [144], [143].

A block triangular matrix is unit block triangular if its diagonal elements are unit

matrices. If L or U is unit block triangular, then the block LU decomposition is unique.

Definition 6 A partitioned nonsingular matrix $A \in \mathbb{F}^{n\times n}$ is said to be (block) strongly nonsingular, if $A$ has a (block) LU decomposition.

The conditions of strong nonsingularity are clearly the most restrictive in the

case when all blocks are scalars. Such a case implies block strong nonsingularity for any

allowed partition. A geometric characterization of the strong nonsingularity will be given

in Section 2.2.

Occasionally we denote the unique LU factorization of $A$ by $A = L_1 U$, where $L_1$ stands for the unit lower triangular component. The unique LDU factorization of $A$ is defined by $A = L_1 D U_1$, where $L_1$ is unit lower triangular, $D$ is diagonal and $U_1$ is unit upper triangular ($U = DU_1$). A special case of the triangular factorizations is the Cholesky factorization.

Theorem 7 (Cholesky decomposition). Let $A \in \mathbb{C}^{n\times n}$ be Hermitian and positive definite. Then $A$ can be written in the form

$$A = LL^H, \tag{2.2}$$

where $L$ is lower triangular with positive diagonal entries. If $A$ is real, $L$ may be taken to be real.

2.1 Monotonicity of LU and LDU factorizations

The following result establishes the monotonicity of the triangular decompositions of M-matrices [103], [104].

Definition 8 A matrix $A$ is reducible if there is a permutation matrix $\Pi$ such that

$$\Pi^T A \Pi = \begin{bmatrix} X & 0 \\ Y & Z \end{bmatrix}$$

with $X$ and $Z$ both square blocks. Otherwise, the matrix is called irreducible.


The following result is related to the fact ([23], [84], [219], [241]) that a nonsingular

matrix A is an M-matrix if and only if there exist lower and upper triangular M-matrices

R and S, respectively, such that A = RS.

Theorem 9 Let $A$ and $B$ be two M-matrices such that $A \le B$ and assume that $A$ is nonsingular or irreducible singular and $B$ is nonsingular. Let $A = L_A V_A$ and $B = L_B V_B$ be the LU factorizations of $A$ and $B$ such that both $L_A$ and $L_B$ are unit lower triangular. Then

$$L_A \le L_B, \qquad V_A \le V_B. \tag{2.3}$$

In addition, if $V_A = D_A U_A$ and $V_B = D_B U_B$, where $U_A$ and $U_B$ are unit upper triangular, $D_A$ and $D_B$ are diagonal matrices, then

$$D_A \le D_B, \qquad U_A \le U_B. \tag{2.4}$$

Proof. We prove the result by induction. Assume that $k \times k$ matrices $A$ and $B$ are nonsingular such that $L_A \le L_B$ and $V_A \le V_B$ hold. Let

$$A' = \begin{bmatrix} A & c \\ r^T & a \end{bmatrix}, \qquad B' = \begin{bmatrix} B & p \\ q^T & b \end{bmatrix} \qquad (c, r, p, q \in \mathbb{R}^k).$$

Assume that $A'$ and $B'$ are also nonsingular M-matrices. They have the LU-factorizations

$$A' = \begin{bmatrix} L_A & 0 \\ r^T V_A^{-1} & 1 \end{bmatrix} \begin{bmatrix} V_A & L_A^{-1} c \\ 0 & a - r^T A^{-1} c \end{bmatrix},$$

$$B' = \begin{bmatrix} L_B & 0 \\ q^T V_B^{-1} & 1 \end{bmatrix} \begin{bmatrix} V_B & L_B^{-1} p \\ 0 & b - q^T B^{-1} p \end{bmatrix}.$$

By assumption $L_A \le L_B$, $V_A \le V_B$, $c \le p \le 0$, $r \le q \le 0$ and $0 < a \le b$. Relation $L_A^{-1} \ge L_B^{-1} \ge 0$ implies $L_A^{-1} c \le L_B^{-1} p \le 0$. Inequality $V_A^{-1} \ge V_B^{-1} \ge 0$ implies $r^T V_A^{-1} \le q^T V_B^{-1} \le 0$. Notice that $a - r^T A^{-1} c = \det(A')/\det(A) > 0$ and $b - q^T B^{-1} p = \det(B')/\det(B) > 0$. Finally $0 < a - r^T A^{-1} c \le b - q^T B^{-1} p$ follows from $A^{-1} \ge B^{-1} \ge 0$. Thus we proved the first part of the theorem for the nonsingular case. If $A'$ is an irreducible singular matrix of order $m$, then by Theorem 4.16 of Berman and Plemmons [23] (p. 156) $A'$ has rank $m - 1$, each principal submatrix of $A'$ other than $A'$ itself is a nonsingular M-matrix, $a = r^T A^{-1} c$ and $A'$ has the LU-factorization

$$A' = \begin{bmatrix} L_A & 0 \\ r^T V_A^{-1} & 1 \end{bmatrix} \begin{bmatrix} V_A & L_A^{-1} c \\ 0 & 0 \end{bmatrix}$$

with singular upper triangular matrix $V_{A'}$. As $0 \le b - q^T B^{-1} p$ the theorem also holds in this case. Let $V_A = D_A U_A$ and $V_B = D_B U_B$. As $A^T$ and $B^T$ are also M-matrices that satisfy $A^T \le B^T$, we have the LU-factorizations $A^T = U_A^T (D_A L_A^T)$ and $B^T = U_B^T (D_B L_B^T)$ with $U_A^T \le U_B^T$ and $D_A L_A^T \le D_B L_B^T$. This implies $U_A \le U_B$. The inequality $0 \le D_A \le D_B$ follows from the relations $V_A \le V_B$, $\mathrm{diag}(V_A) = D_A$ and $\mathrm{diag}(V_B) = D_B$. The same reasoning applies to $A'$ and $B'$ if they are nonsingular. If $A'$ is irreducible singular, then

$$D_{A'} U_{A'} = \begin{bmatrix} D_A & 0 \\ 0 & 0 \end{bmatrix} \begin{bmatrix} U_A & D_A^{-1} L_A^{-1} c \\ 0 & 0 \end{bmatrix},$$

$$D_{B'} U_{B'} = \begin{bmatrix} D_B & 0 \\ 0 & b - q^T B^{-1} p \end{bmatrix} \begin{bmatrix} U_B & D_B^{-1} L_B^{-1} p \\ 0 & 1 \end{bmatrix},$$

from which $0 \le D_{A'} \le D_{B'}$ immediately follows. As $D_A^{-1} L_A^{-1} c \le D_B^{-1} L_B^{-1} p \le 0$ the rest of the theorem also follows.
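The inequalities (2.3) and (2.4) can be checked on a concrete pair of M-matrices. The sketch below is an illustration only: the two matrices are assumptions chosen here (the tridiagonal matrix with stencil $(-1, 2, -1)$ and a strictly diagonally dominant matrix with nonpositive off-diagonal entries), and the helper names `lu_unit_lower`, `split_du` and `leq` are hypothetical.

```python
from fractions import Fraction

def lu_unit_lower(A):
    """Elimination without pivoting; assumes all pivots are nonzero."""
    n = len(A)
    U = [[Fraction(x) for x in row] for row in A]
    L = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    for k in range(n - 1):
        for i in range(k + 1, n):
            L[i][k] = U[i][k] / U[k][k]
            for j in range(k, n):
                U[i][j] -= L[i][k] * U[k][j]
    return L, U

def split_du(V):
    """Write upper triangular V as D*U with U unit upper triangular.
    Returns the diagonal of D as a list, and U."""
    n = len(V)
    d = [V[i][i] for i in range(n)]
    return d, [[V[i][j] / d[i] for j in range(n)] for i in range(n)]

def leq(X, Y):
    """Entrywise partial ordering X <= Y."""
    return all(x <= y for rx, ry in zip(X, Y) for x, y in zip(rx, ry))

# Assumed example matrices: both are nonsingular M-matrices and A <= B.
A = [[2, -1, 0], [-1, 2, -1], [0, -1, 2]]
B = [[2, -1, 0], [-1, 3, -1], [0, 0, 2]]

LA, VA = lu_unit_lower(A)
LB, VB = lu_unit_lower(B)
dA, UA = split_du(VA)
dB, UB = split_du(VB)

monotone_LU = leq(LA, LB) and leq(VA, VB)          # relation (2.3)
monotone_DU = leq([dA], [dB]) and leq(UA, UB)      # relation (2.4)
```

For this pair both flags come out true, in agreement with Theorem 9.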

Remark 10 From Theorem 4.16 of Berman and Plemmons [23] (p. 156) it follows that

Theorem 9 does not hold if $B$ is irreducible singular and $A \ne B$.

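Theorem 9 is not true for general matrices. Using the results of Jain and Snyder [162] we define matrices $A$ and $B$ as follows:

$$A = \begin{bmatrix} 1 & -4 & 9 & -12 \\ -4 & 17 & -40 & 57 \\ 9 & -40 & 98 & -148 \\ -12 & 57 & -148 & 242 \end{bmatrix}, \qquad B = \begin{bmatrix} 2 & -4 & 9 & -12 \\ -4 & 17 & -40 & 57 \\ 9 & -40 & 98 & -148 \\ -12 & 57 & -148 & 242 \end{bmatrix}.$$

These matrices are monotone, $A \le B$ and

$$L_A = \begin{bmatrix} 1 & 0 & 0 & 0 \\ -4 & 1 & 0 & 0 \\ 9 & -4 & 1 & 0 \\ -12 & 9 & -4 & 1 \end{bmatrix}, \qquad L_B = \begin{bmatrix} 1 & 0 & 0 & 0 \\ -2 & 1 & 0 & 0 \\ 9/2 & -22/9 & 1 & 0 \\ -6 & 11/3 & -240/67 & 1 \end{bmatrix}.$$

$L_A$ and $L_B$ are not comparable, implying that Theorem 9 does not hold for monotone matrices. The reverse of Theorem 9 is also not true. Let

$$L_A = \begin{bmatrix} 1 & 0 & 0 & 0 \\ -4 & 1 & 0 & 0 \\ -9 & -4 & 1 & 0 \\ -12 & -9 & -4 & 1 \end{bmatrix}, \qquad L_B = \begin{bmatrix} 1 & 0 & 0 & 0 \\ -4 & 1 & 0 & 0 \\ 0 & -4 & 1 & 0 \\ 0 & 0 & -4 & 1 \end{bmatrix}.$$

Matrices $L_A$ and $L_B$ are M-matrices and $L_A \le L_B$. The product matrix $L_A L_A^T$ is not an M-matrix. Furthermore $L_A L_A^T$ and $L_B L_B^T$ are not comparable. We remark however that if $L$ and $R$ are lower triangular M-matrices such that $LL^T \le RR^T$ holds, then $L \le R$ also holds. This result is due to Schmidt and Patzke [216].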
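Both counterexamples can be recomputed exactly. The sketch below uses the matrices stated above; the helper names `lu_unit_lower`, `leq`, `comparable` and `gram` are hypothetical and the code is only a verification aid, not part of the original argument.

```python
from fractions import Fraction

def lu_unit_lower(A):
    """Elimination without pivoting; assumes all pivots are nonzero."""
    n = len(A)
    U = [[Fraction(x) for x in row] for row in A]
    L = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    for k in range(n - 1):
        for i in range(k + 1, n):
            L[i][k] = U[i][k] / U[k][k]
            for j in range(k, n):
                U[i][j] -= L[i][k] * U[k][j]
    return L, U

def leq(X, Y):
    """Entrywise partial ordering X <= Y."""
    return all(x <= y for rx, ry in zip(X, Y) for x, y in zip(rx, ry))

def comparable(X, Y):
    return leq(X, Y) or leq(Y, X)

def gram(L):
    """The product L * L^T."""
    return [[sum(a * b for a, b in zip(r, s)) for s in L] for r in L]

# First counterexample: A <= B, yet the unit lower triangular factors
# are not comparable.
A = [[1, -4, 9, -12], [-4, 17, -40, 57], [9, -40, 98, -148], [-12, 57, -148, 242]]
B = [row[:] for row in A]
B[0][0] = 2
LA, _ = lu_unit_lower(A)
LB, _ = lu_unit_lower(B)

# Second counterexample: L2A <= L2B, yet the products L*L^T are not comparable.
L2A = [[1, 0, 0, 0], [-4, 1, 0, 0], [-9, -4, 1, 0], [-12, -9, -4, 1]]
L2B = [[1, 0, 0, 0], [-4, 1, 0, 0], [0, -4, 1, 0], [0, 0, -4, 1]]
GA, GB = gram(L2A), gram(L2B)
```

The entry `GA[2][1]` is positive, which is why $L_A L_A^T$ fails to be an M-matrix in the second example.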

A symmetric nonsingular M-matrix is called a Stieltjes matrix. The Stieltjes matrices are positive definite. An easy consequence of Theorem 9 is the following.

Corollary 11 If $A = LL^T$ and $B = \tilde{L}\tilde{L}^T$ are Stieltjes matrices with their corresponding Cholesky factorizations and $A \le B$, then $L \le \tilde{L}$.

Theorem 9 will be used in the monotone convergence proof of nonlinear conjugate

direction methods in Section 5.4.

2.2 The geometry of LU decomposability

We give a geometric characterization of the LU decomposability or strong nonsingularity

[110], which plays a key role in many cases and especially in our investigations. The result

is rather different from the algebraic characterization given by Theorem 3.

We recall that for any nonsingular matrix $A \in \mathbb{R}^{n\times n}$ there is a unique QR-factorization $A = Q_A R_A$ such that the main diagonal of $R_A$ contains only positive entries. If the Cholesky decomposition $A^T A = R^T R$ is known, then $Q_A = AR^{-1}$ and $R_A = R$.


Proposition 12 A nonsingular matrix $A \in \mathbb{R}^{n\times n}$ has an LU factorization if and only if its orthogonal factor $Q_A$ has an LU factorization.

Proof. If the LU factorization $A = L_A U_A$ exists, then $L_A U_A = Q_A R_A$, from which $L_A (U_A R_A^{-1}) = Q_A$ follows. As $U_A R_A^{-1}$ is upper triangular, this proves the only if part. In turn, if $Q_A = LU$, then $A = Q_A R_A = L(UR_A)$ proving the LU decomposability of matrix $A$.

As $\kappa_2(A) = \kappa_2(R_A)$ we can say that the orthogonal part $Q_A$ of matrix $A$ is responsible for the LU decomposability, while the upper triangular part $R_A$ of the QR-factorization determines the spectral condition number of $A$ (for the use of this fact see [52] or [143]).

We now recall the following known results on angles between subspaces (see, e.g.,

[128]).

Definition 13 Let $M, N \subseteq \mathbb{R}^n$ be two subspaces such that $p = \dim(M) \ge \dim(N) = q$. The principal angles $\theta_1, \dots, \theta_q \in [0, \pi/2]$ between $M$ and $N$ are defined recursively for $k = 1, \dots, q$ by

$$\cos(\theta_k) = \max_{u \in M} \max_{v \in N} u^T v = u_k^T v_k, \tag{2.5}$$

subject to the constraints:

$$\|u\|_2 = 1, \quad \|v\|_2 = 1, \quad u_i^T u = 0, \quad v_i^T v = 0, \quad i = 1, \dots, k - 1. \tag{2.6}$$

Note that $0 \le \theta_1 \le \theta_2 \le \dots \le \theta_q \le \pi/2$. Let $U = [u_1, \dots, u_n] \in \mathbb{R}^{n\times n}$ be orthogonal such that $U_1 = [u_1, \dots, u_p]$ is a basis for $M$, and $U_2 = [u_{p+1}, \dots, u_n]$ is a basis for $M^\perp$. Similarly, let $V = [v_1, \dots, v_n] \in \mathbb{R}^{n\times n}$ be an orthogonal matrix such that $V_1 = [v_1, \dots, v_q]$ is a basis for $N$, and $V_2 = [v_{q+1}, \dots, v_n]$ is a basis for $N^\perp$. Let us consider the partitioned orthogonal matrix

$$U^T V = \begin{bmatrix} U_1^T V_1 & U_1^T V_2 \\ U_2^T V_1 & U_2^T V_2 \end{bmatrix} \qquad (U_1^T V_1 \in \mathbb{R}^{p\times q}). \tag{2.7}$$

Let $\theta_i = \theta_i(M, N)$ be the $i$th principal angle between the subspaces $M$ and $N$. It follows from a result of Björck and Golub [26] (see also [128]) that

$$\sigma_i(U_1^T V_1) = \cos(\theta_i(M, N)) \qquad (i = 1, \dots, q).$$

Let us consider now the orthogonal factor $Q_A$ of matrix $A \in \mathbb{R}^{n\times n}$ in $2 \times 2$ partitioned form:

$$Q_A = \begin{bmatrix} Q_{11}^{(k)} & Q_{12}^{(k)} \\ Q_{21}^{(k)} & Q_{22}^{(k)} \end{bmatrix} \qquad (Q_{11}^{(k)} \in \mathbb{R}^{k\times k}). \tag{2.8}$$

The matrix $Q_A$ has an LU factorization, if and only if $Q_{11}^{(k)}$ is nonsingular for all $k = 1, \dots, n - 1$. The matrix $Q_{11}^{(k)}$ is nonsingular, if and only if its singular values are positive. Letting $U = Q_A^T$, $V = I$, $U_1 = (Q_A^T)^{|k}$, $U_2 = (Q_A^T)^{n-k|}$, $V_1 = I^{|k}$ and $V_2 = I^{n-k|}$ in partition (2.7) we obtain partition (2.8). Thus

$$\sigma_i(Q_{11}^{(k)}) = \cos\left(\theta_i\left(R\left((Q_A^T)^{|k}\right), R\left(I^{|k}\right)\right)\right), \qquad i = 1, \dots, k. \tag{2.9}$$

Hence the matrix $Q_{11}^{(k)}$ is nonsingular, if $\cos(\theta_i) > 0$ holds for all $i$. This happens, if and only if $\theta_i < \pi/2$ for all $i = 1, \dots, k$. In other words, the angles between the subspaces $R(Q_A^k)$ (row space) and $R(I^{|k})$ must be less than $\pi/2$.

This observation can be expressed in terms of subspace distance or gap, which is defined for subspaces $M, N \subset \mathbb{R}^n$ by

$$d(M, N) = \|P_M - P_N\|_2, \tag{2.10}$$

where $P_M$ and $P_N$ are the orthogonal projectors onto $M$ and $N$, respectively. It is also known (see, e.g., [234], [109]) that

$$\|P_M - P_N\|_2 = \begin{cases} \sin(\theta_q), & \dim(M) = \dim(N) = q \\ 1, & \dim(M) \ne \dim(N) \end{cases} \tag{2.11}$$

Thus if all $\theta_i < \pi/2$, then $\sin(\theta_i) < 1$ and $\|P_M - P_N\|_2 < 1$.

Proposition 14 The orthogonal matrix $Q_A \in \mathbb{R}^{n\times n}$ has an LU factorization, if and only if $\theta_k\left(R(Q_A^k), R(I^{|k})\right) < \pi/2$ holds for all $k = 1, \dots, n - 1$. The equivalent condition is

$$d\left(R(Q_A^k), R(I^{|k})\right) < 1 \tag{2.12}$$

for all $k = 1, \dots, n - 1$.

Theorem 15 A nonsingular matrix $A \in \mathbb{R}^{n\times n}$ has an LU factorization if and only if for $Q_A$ the condition $\theta_k\left(R(Q_A^k), R(I^{|k})\right) < \pi/2$ holds for all $k = 1, \dots, n - 1$. The equivalent condition is

$$d\left(R(Q_A^k), R(I^{|k})\right) < 1 \tag{2.13}$$

for all $k = 1, \dots, n - 1$.
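A small exact experiment may make the angle condition more concrete. By (2.9) the singular values of $Q_{11}^{(k)}$ are the cosines of the principal angles, and since the product of the singular values of a square matrix equals the absolute value of its determinant, $|\det Q_{11}^{(k)}| = \prod_{i=1}^{k} \cos(\theta_i)$. This product vanishes exactly when the largest angle equals $\pi/2$. The sketch below evaluates these determinants for two orthogonal matrices chosen here as assumptions; the helper names are hypothetical.

```python
from fractions import Fraction

def det(M):
    """Determinant by cofactor expansion (adequate for tiny examples only)."""
    if len(M) == 1:
        return Fraction(M[0][0])
    return sum((-1) ** j * M[0][j] * det([r[:j] + r[j + 1:] for r in M[1:]])
               for j in range(len(M)))

def leading_block_dets(Q):
    """|det Q11^(k)| for k = 1, ..., n-1, i.e. the product of the cosines
    of the k principal angles appearing in (2.9)."""
    n = len(Q)
    return [abs(det([row[:k] for row in Q[:k]])) for k in range(1, n)]

def has_lu(Q):
    """LU decomposability test: no leading block may be singular."""
    return all(d != 0 for d in leading_block_dets(Q))

# Assumed example: an orthogonal matrix with rational entries.
Q = [[Fraction(a, 3) for a in row] for row in [[1, 2, 2], [2, 1, -2], [2, -2, 1]]]

# Assumed example: a permutation matrix whose first row is orthogonal to e_1,
# so the first principal angle equals pi/2.
P = [[0, 1, 0], [1, 0, 0], [0, 0, 1]]

cos_products_Q = leading_block_dets(Q)
cos_products_P = leading_block_dets(P)
```

For `Q` both products equal 1/3, so all angles stay below $\pi/2$ and an LU factorization exists; for `P` the first product is zero and the factorization fails at the first step.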

If a nonsingular matrix $A$ has no LU factorization, then a permutation matrix $P$ exists such that $PA$ has an LU factorization. As $PA = (PQ_A)R_A$ is also a QR-factorization, the multiplication of $A$ by $P$ (a change of rows) improves the orthogonal part $Q_A$ of the matrix. In other words, the change of row vectors removes $\pi/2$ as a principal angle.

2.3 Perturbations of triangular matrix factorizations

It was recognized very early that the perturbation of the triangular factorizations affects

the stability of the Gaussian elimination type methods. Von Neumann and Goldstine

[196], [197], and Turing [239] gave the first perturbation analysis, although in implicit form. In fact, they investigated the inversion of matrices via the Gaussian elimination and the effect of rounding errors. For example, von Neumann and Goldstine [196] show that if the positive definite and symmetric $A \in \mathbb{R}^{n\times n}$ satisfies certain conditions and $\tilde{L}$ and $\tilde{D}$ are the computed $LDL^T$ factorization of $A$, then $\|A - \tilde{L}\tilde{D}\tilde{L}^T\| \le 0.42 n^2 u$ holds, where $u$ is the machine unit. Most of the error analysis works on Gaussian elimination type methods is related to some kind of floating point arithmetic and essentially backward error analysis. This is due to Wilkinson, who proved the following important and famous result.


Theorem 16 (Wilkinson). Let $A \in \mathbb{R}^{n\times n}$ and suppose GE with partial pivoting produces a computed solution $\hat{x}$ to $Ax = b$. Then

$$(A + \Delta A)\hat{x} = b, \qquad \|\Delta A\|_\infty \le 8n^3 \rho_n \|A\|_\infty u + O(u^2),$$

where $\rho_n$ is the growth factor of the pivot elements.

Wilkinson also proved that the triangular factors L and U found when performing

GE are the exact triangular factors of A + F , where the error matrix F is bounded

essentially in terms of machine word-length and the increase in magnitude of the elements

of A as the calculation proceeded.

The true meaning of the Wilkinson theorem is that the computed solution exactly satisfies a perturbed equation. It does not give any answer however, if one is interested in the solution error $x - \hat{x}$, or the error influencing factors.

The study of the perturbations of triangular factorizations started relatively late. Broyden [35] was perhaps the first researcher who showed that the triangular factors can be badly conditioned even for well-conditioned matrices. Since then many authors

investigated the perturbations of triangular matrix factorizations [19], [25], [46], [47], [48],

[74], [112], [231], [232], [235], [236], [237] (see also [143]).

We note that the stability of triangular factorizations is also important in rank

testing [126], [204] (for rank testing see Stewart [233]).

The earlier perturbation results are true upper estimates for the solution of certain

nonlinear perturbation equations or approximate bounds based on linearization. Here we

solve these perturbation equations and derive the exact perturbation expressions for the

LU, LDU, $LDL^T$ and Cholesky factorizations. The exact perturbation terms are valid

for all matrix perturbations keeping nonsingularity and factorability. The results are then

used to give upper bounds that improve the known bounds in most of the cases. Certain

componentwise upper bounds can be obtained by monotone iterations as well. The results

of this section were published in papers [112] and [117].

Assume that $A \in \mathbb{R}^{n\times n}$ has an LU factorization. We consider the unique LU and LDU factorizations $A = L_1 U$ and $A = L_1 D U_1$, respectively, where $L_1$ is unit lower triangular, $D$ is diagonal and $U_1$ is unit upper triangular ($U = DU_1$). If $A \in \mathbb{R}^{n\times n}$ is a symmetric, positive definite matrix, then it has a unique $LDL^T$ factorization $A = L_1 D L_1^T$, where $L_1$ is unit lower triangular and $D$ is diagonal with positive diagonal entries. The Cholesky factorization of this $A$ is denoted by $A = R^T R$, where $R = D^{1/2} L_1^T$ is upper triangular.

Let $\delta_A \in \mathbb{R}^{n\times n}$ be a perturbation such that $A + \delta_A$ also has LU factorization. Then

$$A + \delta_A = (L_1 + \delta_{L_1})(U + \delta_U) \tag{2.14}$$

and

$$A + \delta_A = (L_1 + \delta_{L_1})(D + \delta_D)(U_1 + \delta_{U_1}) \tag{2.15}$$

are the corresponding unique LU and LDU factorizations. The perturbation matrices $\delta_{L_1}$, $\delta_U$, $\delta_D$ and $\delta_{U_1}$ are strict lower triangular, upper triangular, diagonal and strict upper triangular, respectively. For technical reasons we also use the unique LU factorization $A = LU_1$, where $L = L_1 D$ is lower triangular and $U_1$ is unit upper triangular. In this case the LU factorization of the perturbed matrix will be given by

$$A + \delta_A = (L + \delta_L)(U_1 + \delta_{U_1}), \tag{2.16}$$

where $\delta_L$ is lower triangular. We refer to this particular LU factorization as the $LU_1$ factorization, while the first one will be called the $L_1 U$ factorization.

If $A$ is symmetric and positive definite and the perturbation $\delta_A$ is symmetric such that $A + \delta_A$ remains positive definite, then the perturbed $LDL^T$ factorization is given by

$$A + \delta_A = (L_1 + \delta_{L_1})(D + \delta_D)(L_1 + \delta_{L_1})^T, \tag{2.17}$$

where $\delta_{L_1}$ is strict lower triangular and $\delta_D$ is diagonal. The $LDL^T$ factorization is a special case of the LDU factorization ($U_1 = L_1^T$, $\delta_{U_1} = \delta_{L_1}^T$). Hence any statement on the perturbed LDU factorization is also a statement on the perturbed $LDL^T$ factorization provided that $A$ is symmetric and positive definite. Therefore we do not formulate separate theorems on the perturbation of the $LDL^T$ factorization.

The perturbed Cholesky decomposition is given by

$$A + \delta_A = (R + \delta_R)^T (R + \delta_R), \tag{2.18}$$

where $\delta_R$ is upper triangular.

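We use the following notation. Let $A = [a_{ij}]_{i,j=1}^{n}$. Then

$$\mathrm{tril}(A, l) = [\alpha_{ij}]_{i,j=1}^{n}, \qquad \alpha_{ij} = \begin{cases} a_{ij}, & i \ge j - l \\ 0, & i < j - l \end{cases} \qquad (0 \le |l| < n) \tag{2.19}$$

and

$$\mathrm{triu}(A, l) = [\beta_{ij}]_{i,j=1}^{n}, \qquad \beta_{ij} = \begin{cases} a_{ij}, & i \le j - l \\ 0, & i > j - l \end{cases} \qquad (0 \le |l| < n). \tag{2.20}$$

Related special notations are $\mathrm{tril}(A) = \mathrm{tril}(A, 0)$, $\mathrm{tril}^*(A) = \mathrm{tril}(A, -1)$, $\mathrm{triu}(A) = \mathrm{triu}(A, 0)$ and $\mathrm{triu}^*(A) = \mathrm{triu}(A, 1)$. Let $e_i \in \mathbb{R}^n$ denote the $i$th unit vector and let $I^{(k)} = \sum_{i=1}^{k} e_i e_i^T$ for $1 \le k \le n$ and $I^{(k)} = 0$ for $k \le 0$. Similarly, let $I_{(k)} = \sum_{i=k+1}^{n} e_i e_i^T$ for $0 \le k < n$ and $I_{(k)} = 0$ for $k \ge n$. Note that $I^{(n)} = I = I_{(0)}$ and $I_{(k)} + I^{(k)} = I$. We also use the notation $I_k$ for the $k \times k$ unit matrix.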
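The notation can be mirrored in a few lines of code, which also makes the two column identities used later in this section easy to check: $\mathrm{triu}(W, l) e_k = I^{(k-l)} W e_k$ and $\mathrm{tril}^*(F) e_k = I_{(k)} F e_k$. This is a minimal sketch only; the function names `I_upper` and `I_lower` (for $I^{(k)}$ and $I_{(k)}$), the helper `col` and the test matrix are assumptions made for illustration, with $k$ kept 1-based as in the text.

```python
def tril(A, l=0):
    """tril(A, l) of (2.19): keep a_ij where i >= j - l, zero elsewhere."""
    n = len(A)
    return [[A[i][j] if i >= j - l else 0 for j in range(n)] for i in range(n)]

def triu(A, l=0):
    """triu(A, l) of (2.20): keep a_ij where i <= j - l, zero elsewhere."""
    n = len(A)
    return [[A[i][j] if i <= j - l else 0 for j in range(n)] for i in range(n)]

def I_upper(n, k):
    """I^(k): ones in the first k diagonal positions (zero matrix if k <= 0)."""
    return [[int(i == j and i < k) for j in range(n)] for i in range(n)]

def I_lower(n, k):
    """I_(k): ones in diagonal positions k+1, ..., n (zero matrix if k >= n)."""
    return [[int(i == j and i >= k) for j in range(n)] for i in range(n)]

def col(M, k):
    """The column M e_k, with k counted from 1 as in the text."""
    return [row[k - 1] for row in M]

def matvec(M, v):
    return [sum(a * b for a, b in zip(row, v)) for row in M]

W = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]   # hypothetical test matrix
n = len(W)

# triu(W, l) e_k = I^(k-l) W e_k for l = 0, 1 and all k
id_triu = all(col(triu(W, l), k) == matvec(I_upper(n, k - l), col(W, k))
              for l in (0, 1) for k in range(1, n + 1))

# tril*(W) e_k = I_(k) W e_k for all k, where tril*(W) = tril(W, -1)
id_tril = all(col(tril(W, -1), k) == matvec(I_lower(n, k), col(W, k))
              for k in range(1, n + 1))
```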

Lemma 17 Let $C, B \in \mathbb{R}^{n\times n}$ and assume that $I - B$ is nonsingular and has LU factorization. Then the solution of the equation

$$W = C + B\,\mathrm{triu}(W, l) \qquad (l \ge 0) \tag{2.21}$$

is given by

$$W e_k = \left(I - BI^{(k-l)}\right)^{-1} C e_k \qquad (k = 1, \dots, n). \tag{2.22}$$

Proof. Relations $W e_k = C e_k + B\,\mathrm{triu}(W, l) e_k$ and $\mathrm{triu}(W, l) e_k = I^{(k-l)} W e_k$ imply $\left(I - BI^{(k-l)}\right) W e_k = C e_k$ which gives the result. The nonsingularity of $I - BI^{(k-l)}$ follows from the assumptions that $I - B$ is nonsingular and has LU factorization.

Note that $I^{(j)} = 0$ ($j \le 0$) implies that $W e_k = C e_k$ for $k \le l$. Equation (2.21) can be transformed into one single system by using the vec operation:

$$\mathrm{diag}\left(I - BI^{(1-l)}, \dots, I - BI^{(n-l)}\right) \mathrm{vec}(W) = \mathrm{vec}(C). \tag{2.23}$$
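Formula (2.22) translates directly into a column-by-column solver. The sketch below is illustrative only: the function names `solve`, `solve_triu_equation` and `satisfies_2_21`, and the matrices `B` and `C`, are assumptions chosen so that $I - B$ is nonsingular with nonzero leading minors. Each column is obtained from one exact linear solve, and the result is substituted back into (2.21).

```python
from fractions import Fraction

def solve(M, b):
    """Solve M x = b exactly by Gauss-Jordan elimination with row swaps."""
    n = len(M)
    aug = [[Fraction(x) for x in row] + [Fraction(v)] for row, v in zip(M, b)]
    for c in range(n):
        p = next(r for r in range(c, n) if aug[r][c] != 0)
        aug[c], aug[p] = aug[p], aug[c]
        aug[c] = [x / aug[c][c] for x in aug[c]]
        for r in range(n):
            if r != c:
                aug[r] = [x - aug[r][c] * y for x, y in zip(aug[r], aug[c])]
    return [row[n] for row in aug]

def triu(A, l=0):
    n = len(A)
    return [[A[i][j] if i <= j - l else 0 for j in range(n)] for i in range(n)]

def solve_triu_equation(B, C, l=0):
    """Columns of W from (2.22): (I - B I^(k-l)) W e_k = C e_k, k = 1..n."""
    n = len(B)
    cols = []
    for k in range(1, n + 1):
        m = max(0, min(n, k - l))   # B I^(k-l) keeps only the first m columns of B
        M = [[int(i == j) - (B[i][j] if j < m else 0) for j in range(n)]
             for i in range(n)]
        cols.append(solve(M, [C[i][k - 1] for i in range(n)]))
    return [[cols[j][i] for j in range(n)] for i in range(n)]

def satisfies_2_21(B, C, W, l):
    """Check W = C + B triu(W, l) entry by entry."""
    n = len(B)
    T = triu(W, l)
    return W == [[C[i][j] + sum(B[i][m] * T[m][j] for m in range(n))
                  for j in range(n)] for i in range(n)]

h, q = Fraction(1, 2), Fraction(1, 4)
B = [[h, 0, q], [q, h, 0], [0, q, h]]      # assumed data
C = [[1, 2, 3], [4, 5, 6], [7, 8, 10]]     # assumed data
W0 = solve_triu_equation(B, C, l=0)
W1 = solve_triu_equation(B, C, l=1)
```

With `l=1` the first column of `W1` coincides with the first column of `C`, as noted after the proof.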

Corollary 18 Let $C, B \in \mathbb{R}^{n\times n}$ and assume that $I - B$ is nonsingular and has LU factorization. Then the solution of the equation

$$W = C + \mathrm{tril}(W, -l)\,B \qquad (l \ge 0) \tag{2.24}$$

is given by

$$e_k^T W = e_k^T C \left(I - I^{(k-l)} B\right)^{-1} \qquad (k = 1, \dots, n). \tag{2.25}$$

Proof. As $W^T = C^T + B^T\,\mathrm{tril}(W, -l)^T = C^T + B^T\,\mathrm{triu}(W^T, l)$, Lemma 17 implies the requested result.

We first give the exact perturbations in terms of $A$, the original factors and the perturbation matrix $\delta_A$. The perturbations will be derived from the perturbations of

certain lower triangular factors. It will be shown that a certain projection plays a key

role in the perturbation of the components. Then we derive and analyze corresponding

perturbation bounds which are compared with the existing ones.

2.3.1 Exact perturbation terms for the LU factorization

We first derive the perturbations of the lower triangular factors in the LU factorizations $A = L_1 U$ and $A = LU_1$, respectively. We assume that $A$ and $A + \delta_A$ are nonsingular. Let $X_1 = L_1^{-1} \delta_{L_1}$, $Y = \delta_U U^{-1}$ and write

$$L_1^{-1}(A + \delta_A)U^{-1} = L_1^{-1}(L_1 + \delta_{L_1})(U + \delta_U)U^{-1} \tag{2.26}$$

in the form $I + B = (I + X_1)(I + Y) = I + X_1 + Y + X_1 Y$, where $B = L_1^{-1} \delta_A U^{-1}$. Observe that $X_1$ is strict lower triangular and $Y$ is upper triangular. Hence $I + X_1$ is unit lower triangular and $I + Y$ is upper triangular and provide the unique $L_1 U$ factorization of $I + B$. Note that $I + B$ and $I + Y$ are nonsingular by the initial assumptions. From the identity

$$B = X_1 + Y + X_1 Y \tag{2.27}$$

it follows that $F := B(I + Y)^{-1} = Y(I + Y)^{-1} + X_1$, where $Y(I + Y)^{-1}$ is upper triangular. Hence $\mathrm{tril}^*(F) = X_1$ and $\mathrm{triu}(F) = Y(I + Y)^{-1}$. The relation $(I + Y)^{-1} = I - Y(I + Y)^{-1}$ implies $B(I + Y)^{-1} = B - BY(I + Y)^{-1}$ which can be written as

$$F = B - B\,\mathrm{triu}(F). \tag{2.28}$$

Hence the exact perturbation term is given by $\delta_{L_1} = L_1 X_1 = L_1\,\mathrm{tril}^*(F)$. Lemma 17 and relation $\mathrm{tril}^*(F) e_k = I_{(k)} F e_k$ yield

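$$F = \left[\left(I + BI^{(1)}\right)^{-1} Be_1, \dots, \left(I + BI^{(n)}\right)^{-1} Be_n\right] \tag{2.29}$$

and

$$X_1 = \left[I_{(1)}\left(I + BI^{(1)}\right)^{-1} Be_1, \dots, I_{(n)}\left(I + BI^{(n)}\right)^{-1} Be_n\right]. \tag{2.30}$$

Hence the $k$th column of $\delta_{L_1}$ is given by

$$\delta_{L_1} e_k = L_1 X_1 e_k = L_1 P_k(B) B e_k \qquad (k = 1, \dots, n), \tag{2.31}$$

where

$$P_k(B) = I_{(k)}\left(I + BI^{(k)}\right)^{-1} \qquad (k = 1, \dots, n) \tag{2.32}$$

is a projection of rank $n - k$. If $B$ is partitioned in the form

$$B = \begin{bmatrix} B_k^1 & B_k^4 \\ B_k^2 & B_k^3 \end{bmatrix} \qquad (B_k^1 \in \mathbb{R}^{k\times k}), \tag{2.33}$$

then

$$P_k(B) = \begin{bmatrix} 0 & 0 \\ -B_k^2\left(I + B_k^1\right)^{-1} & I_{n-k} \end{bmatrix}. \tag{2.34}$$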
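The definition (2.32) and the block form (2.34) can be compared numerically, together with the projection property $P_k(B)^2 = P_k(B)$. The following is a sketch under assumptions: the helper names `inverse`, `matmul`, `P` and `P_block` and the test matrix `B` are hypothetical, and exact rational arithmetic is used so that equalities can be tested literally.

```python
from fractions import Fraction

def matmul(X, Y):
    return [[sum(a * b for a, b in zip(row, c)) for c in zip(*Y)] for row in X]

def inverse(M):
    """Exact inverse by Gauss-Jordan elimination with row swaps."""
    n = len(M)
    aug = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(M)]
    for c in range(n):
        p = next(r for r in range(c, n) if aug[r][c] != 0)
        aug[c], aug[p] = aug[p], aug[c]
        aug[c] = [x / aug[c][c] for x in aug[c]]
        for r in range(n):
            if r != c:
                aug[r] = [x - aug[r][c] * y for x, y in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]

def P(B, k):
    """P_k(B) = I_(k) (I + B I^(k))^{-1}, following (2.32)."""
    n = len(B)
    M = [[int(i == j) + (B[i][j] if j < k else 0) for j in range(n)]
         for i in range(n)]
    Minv = inverse(M)
    # Left multiplication by I_(k) zeroes the first k rows.
    return [[Minv[i][j] if i >= k else 0 for j in range(n)] for i in range(n)]

def P_block(B, k):
    """The block form (2.34), built from the partition (2.33), for 1 <= k <= n."""
    n = len(B)
    B1 = [row[:k] for row in B[:k]]
    B2 = [row[:k] for row in B[k:]]
    low = matmul(B2, inverse([[int(i == j) + B1[i][j] for j in range(k)]
                              for i in range(k)]))
    top = [[0] * n for _ in range(k)]
    bottom = [[-x for x in low[i]] + [int(i == j) for j in range(n - k)]
              for i in range(n - k)]
    return top + bottom

B = [[1, 2, 0], [3, 1, 1], [0, 1, 2]]   # assumed test matrix
P1, P2 = P(B, 1), P(B, 2)
agree = all(P(B, k) == P_block(B, k) for k in (1, 2, 3))
idempotent = all(matmul(P(B, k), P(B, k)) == P(B, k) for k in (1, 2, 3))
```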

We do now a similar calculation for the $LU_1$ factorization. Let $X = L^{-1} \delta_L$, $Y_1 = \delta_{U_1} U_1^{-1}$ and write

$$L^{-1}(A + \delta_A)U_1^{-1} = L^{-1}(L + \delta_L)(U_1 + \delta_{U_1})U_1^{-1} \tag{2.35}$$

in the form $I + \tilde{B} = (I + X)(I + Y_1) = I + X + Y_1 + XY_1$, where $\tilde{B} = L^{-1} \delta_A U_1^{-1}$. Here $X$ is lower triangular and $Y_1$ is strict upper triangular. Hence $I + X$ is lower triangular and $I + Y_1$ is unit upper triangular and provide the unique $LU_1$ factorization of $I + \tilde{B}$. Note that $I + \tilde{B}$ is nonsingular. Again, the identity

$$\tilde{B} = X + Y_1 + XY_1 \tag{2.36}$$

implies $G := \tilde{B}(I + Y_1)^{-1} = Y_1(I + Y_1)^{-1} + X$, where $Y_1(I + Y_1)^{-1}$ is strict upper triangular. Hence $\mathrm{tril}(G) = X$, $\mathrm{triu}^*(G) = Y_1(I + Y_1)^{-1}$ and we can write $\tilde{B}(I + Y_1)^{-1} = \tilde{B} - \tilde{B} Y_1 (I + Y_1)^{-1}$ in the form

$$G = \tilde{B} - \tilde{B}\,\mathrm{triu}^*(G). \tag{2.37}$$

Hence the exact perturbation term $\delta_L$ is given by $\delta_L = LX = L\,\mathrm{tril}(G)$. Lemma 17 and $\mathrm{tril}(G) e_k = I_{(k-1)} G e_k$ imply

$$G = \left[\left(I + \tilde{B}I^{(0)}\right)^{-1} \tilde{B}e_1, \dots, \left(I + \tilde{B}I^{(n-1)}\right)^{-1} \tilde{B}e_n\right] \tag{2.38}$$

and

$$X = \left[I_{(0)}\left(I + \tilde{B}I^{(0)}\right)^{-1} \tilde{B}e_1, \dots, I_{(n-1)}\left(I + \tilde{B}I^{(n-1)}\right)^{-1} \tilde{B}e_n\right]. \tag{2.39}$$

Thus the $k$th column of $\delta_L$ is given by

$$\delta_L e_k = LXe_k = L P_{k-1}(\tilde{B}) \tilde{B} e_k \qquad (k = 1, \dots, n). \tag{2.40}$$

This relation shows the structural differences between $\delta_{L_1}$ and $\delta_L$. A close inspection of $\delta_L$ also reveals that it includes a Schur complement, while $\delta_{L_1}$ does not.

We can now derive the perturbation $\delta_U$ of the upper triangular factor in the LU factorization $A = L_1 U$. By transposing $A$ and $A + \delta_A = (L_1 + \delta_{L_1})(U + \delta_U)$ we obtain the perturbed $LU_1$ factorization

$$A^T + \delta_A^T = \left(U^T + \delta_U^T\right)\left(L_1^T + \delta_{L_1}^T\right), \tag{2.41}$$

where $\tilde{B} = U^{-T} \delta_A^T L_1^{-T} = B^T$ and $\delta_U^T e_k = U^T P_{k-1}(B^T) B^T e_k$ ($k = 1, \dots, n$). Hence

$$e_k^T \delta_U = e_k^T B \left[P_{k-1}(B^T)\right]^T U \qquad (k = 1, \dots, n). \tag{2.42}$$

Theorem 19 Assume that $A$ and $A + \delta_A$ are nonsingular and have LU factorizations $A = L_1 U$ and $A + \delta_A = (L_1 + \delta_{L_1})(U + \delta_U)$, respectively. Let $B = L_1^{-1} \delta_A U^{-1}$. The exact perturbation terms $\delta_{L_1}$ and $\delta_U$ are then given by $\delta_{L_1} = L_1 X_1$ and $\delta_U = Y U$, where

$$X_1 e_k = P_k(B)\, B e_k \qquad (k = 1, \dots, n) \tag{2.43}$$

and

$$e_k^T Y = e_k^T B \left[ P_{k-1}\left(B^T\right) \right]^T \qquad (k = 1, \dots, n). \tag{2.44}$$
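Theorem 19 can be checked numerically on a small example. The sketch below is an illustration under stated assumptions: `lu_nopivot` is a hypothetical helper implementing plain Doolittle elimination (no pivoting), the test matrix is made strictly diagonally dominant so that the factorization exists, and $I^{(k)} = \mathrm{diag}(I_k, 0)$, $I_{(k)} = I - I^{(k)}$ is assumed as the meaning of the two index matrices.

```python
import numpy as np

def lu_nopivot(A):
    """Doolittle LU without pivoting: A = L1 @ U with L1 unit lower triangular."""
    n = A.shape[0]
    L1, U = np.eye(n), A.astype(float).copy()
    for j in range(n - 1):
        L1[j + 1:, j] = U[j + 1:, j] / U[j, j]
        U[j + 1:, :] -= np.outer(L1[j + 1:, j], U[j, :])
    return L1, np.triu(U)

def proj_P(B, k):
    """P_k(B) = I_(k) (I + B I^(k))^{-1} under the assumed index-matrix convention."""
    n = B.shape[0]
    I_up = np.diag(np.r_[np.ones(k), np.zeros(n - k)])
    return (np.eye(n) - I_up) @ np.linalg.inv(np.eye(n) + B @ I_up)

rng = np.random.default_rng(1)
n = 5
A = rng.uniform(-1, 1, (n, n)) + 2 * n * np.eye(n)   # strictly diagonally dominant
dA = 0.05 * rng.uniform(-1, 1, (n, n))               # small perturbation delta_A

L1, U = lu_nopivot(A)
L1p, Up = lu_nopivot(A + dA)
B = np.linalg.solve(L1, dA) @ np.linalg.inv(U)       # B = L1^{-1} dA U^{-1}

# Columns of X1 from (2.43), rows of Y from (2.44)
X1 = np.column_stack([proj_P(B, k) @ B[:, k - 1] for k in range(1, n + 1)])
Y = np.vstack([B[k - 1, :] @ proj_P(B.T, k - 1).T for k in range(1, n + 1)])

err_L = np.linalg.norm((L1p - L1) - L1 @ X1)         # delta_L1 - L1 X1
err_U = np.linalg.norm((Up - U) - Y @ U)             # delta_U  - Y U
```

Both residuals should be at rounding level, since the formulas are exact rather than first-order.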


Remark 20 It can be easily seen that Y = triu (G), where G is the unique solution of

the equation

G = B − tril∗ (G) B.

(2.45)

Remark 21 The known perturbation bounds for the LU factorization are derived under the condition $\|B\| < 1$ or $\rho(|B|) < 1$ ([19], [25], [46], [47], [231], [232], [236]). Here we only assumed that $I + B$ is nonsingular and has an LU factorization.

Remark 22 The exact perturbation terms can be made formally independent of $A$ by setting $\delta_A = L_1 \Delta_A U$. In this case $B = \Delta_A$, and $X_1$ and $Y$ are independent of $A$. Consequently, the condition numbers introduced in [231], [143] and [46] are not justified in this case.

Remark 23 The exact perturbation terms δL1 and δU are related to certain block LU

factorizations, from which they can also be derived.

Remark 24 If A and A + δA are nonsingular M-matrices such that A ≤ A + δA , i.e.

δA ≥ 0, then Theorem 9 implies that δL1 , δU ≥ 0, B ≥ 0, X1 ≥ 0 and Y ≥ 0.

2.3.2 Exact perturbation terms for the LDU and Cholesky factorizations

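Theorem 25 Assume that $A$ and $A + \delta_A$ are nonsingular and have LDU factorizations $A = L_1 D U_1$ and $A + \delta_A = (L_1 + \delta_{L_1})(D + \delta_D)(U_1 + \delta_{U_1})$, respectively. Let $B = L_1^{-1} \delta_A U^{-1}$. The exact perturbation terms $\delta_{L_1}$, $\delta_D$ and $\delta_{U_1}$ are then given by $\delta_{L_1} = L_1 X_1$, $\delta_D = \Gamma D$ and $\delta_{U_1} = Y_1 U_1$, where

$$X_1 e_k = P_k(B)\, B e_k \qquad (k = 1, \dots, n), \tag{2.46}$$

$$e_k^T \Gamma = e_k^T B \left[ P_{k-1}\left(B^T\right) \right]^T e_k e_k^T \qquad (k = 1, \dots, n) \tag{2.47}$$

and

$$e_k^T Y_1 = e_k^T D^{-1} B \left[ P_k\left(B^T\right) \right]^T D \qquad (k = 1, \dots, n). \tag{2.48}$$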

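Proof. We have to derive only $\delta_{U_1}$ and $\delta_D$. If $A + \delta_A$ is decomposed in the $LU_1$ form

$$A + \delta_A = (L + \delta_L)(U_1 + \delta_{U_1}),$$

then $A^T + \delta_A^T = \left( U_1^T + \delta_{U_1}^T \right)\left( L^T + \delta_L^T \right)$ is an $L_1 U$ factorization. Let $\widetilde{B} = L^{-1} \delta_A U_1^{-1}$. Theorem 19 implies $\delta_{U_1}^T = U_1^T Y_1^T$, where $Y_1^T e_k = P_k\big(\widetilde{B}^T\big) \widetilde{B}^T e_k$. Hence $\delta_{U_1} = Y_1 U_1$, where

$$e_k^T Y_1 = e_k^T \widetilde{B} \left[ P_k\big(\widetilde{B}^T\big) \right]^T \qquad (k = 1, \dots, n).$$

Also $Y_1 = \mathrm{triu}^{*}\big(\widetilde{F}\big)$ holds, where $\widetilde{F}$ is the unique solution of the equation

$$\widetilde{F} = \widetilde{B} - \mathrm{tril}\big(\widetilde{F}\big) \widetilde{B}.$$

Substituting $\widetilde{B} = D^{-1} B D$ we obtain

$$e_k^T Y_1 = e_k^T D^{-1} B \left[ P_k\left(B^T\right) \right]^T D \qquad (k = 1, \dots, n). \tag{2.49}$$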


Relation

A + δA = (L1 + δL1 ) (U + δU ) = (L1 + δL1 ) (D + δD ) (U1 + δU1 )

implies

δU = (D + δD ) (U1 + δU1 ) − DU1 = δD U1 + (D + δD ) δU1 .

Here (D + δD ) δU1 is strict upper triangular and U1 is unit upper triangular. Hence

δD = diag (δD U1 ) = diag (δU ). The relation δU = triu (G) U , where U is upper triangular,

implies

diag (δU ) = diag (G) diag (U ) = diag (G) D.

Let $\mathrm{diag}(G) = \Gamma = \mathrm{diag}(\gamma_k)$. By definition

$$\gamma_k = e_k^T G e_k = e_k^T G I_{(k-1)} e_k = e_k^T \mathrm{triu}(G)\, e_k = e_k^T Y e_k,$$

where $Y$ is given by Theorem 19.

We note that in the case of the $LDL^T$ factorization the term $\delta_{U_1}$ can be dropped.

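Theorem 26 Let $A \in \mathbb{R}^{n \times n}$ and $A + \delta_A \in \mathbb{R}^{n \times n}$ be symmetric positive definite and have the Cholesky factorizations $A = R^T R$ and $A + \delta_A = (R + \delta_R)^T (R + \delta_R)$, respectively. Let $\widehat{B} = R^{-T} \delta_A R^{-1}$. The exact perturbation term $\delta_R$ is then given by $\delta_R = \Omega R$, where

$$e_k^T \Omega = \left( (1 + \gamma_k)^{1/2} - 1 \right) e_k^T + (1 + \gamma_k)^{1/2}\, e_k^T \widehat{B} \left[ P_k\big(\widehat{B}\big) \right]^T \qquad (k = 1, \dots, n) \tag{2.50}$$

and

$$\gamma_k = e_k^T \widehat{B} \left[ P_{k-1}\big(\widehat{B}\big) \right]^T e_k \qquad (k = 1, \dots, n). \tag{2.51}$$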

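Proof. The perturbation of the Cholesky factorization is derived from the identity

$$A + \delta_A = (R + \delta_R)^T (R + \delta_R) = (L_1 + \delta_{L_1})(D + \delta_D)(L_1 + \delta_{L_1})^T.$$

As $D + \delta_D$ has only positive entries on the main diagonal we can define $(D + \delta_D)^{1/2}$. Thus by the uniqueness of the Cholesky factorization

$$R + \delta_R = (D + \delta_D)^{1/2} L_1^T + (D + \delta_D)^{1/2} \delta_{L_1}^T.$$

Hence

$$\delta_R = \left( (D + \delta_D)^{1/2} - D^{1/2} \right) L_1^T + (D + \delta_D)^{1/2} \delta_{L_1}^T. \tag{2.52}$$

Theorem 25 gives $\delta_{L_1} = L_1 X_1$ and $\delta_D = \Gamma D$. Let $\widehat{B} = R^{-T} \delta_A R^{-1}$ and substitute $B = D^{1/2} \widehat{B} D^{-1/2}$. It follows that $X_1(B) = D^{1/2} X_1\big(\widehat{B}\big) D^{-1/2}$ and $\gamma_k = e_k^T \widehat{B} \big[ P_{k-1}\big(\widehat{B}\big) \big]^T e_k$. Hence $\delta_{L_1} = R^T \Lambda D^{-1/2}$ and $\delta_D = \Gamma D$, where $\Lambda = X_1\big(\widehat{B}\big)$. The positivity conditions $e_k^T D e_k > 0$ and $e_k^T (D + \delta_D) e_k > 0$ imply that $\gamma_k > -1$ for all $k$. Thus $(D + \delta_D)^{1/2} = (I + \Gamma)^{1/2} D^{1/2}$ and $\delta_R = \Omega R$, where $\Omega = (I + \Gamma)^{1/2} - I + (I + \Gamma)^{1/2} \Lambda^T$.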
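The exact Cholesky perturbation formula of Theorem 26 can be verified on a small random example. The following sketch is illustrative only: the test matrices are arbitrary, `proj_P` is a hypothetical helper, and the convention $I^{(k)} = \mathrm{diag}(I_k, 0)$, $I_{(k)} = I - I^{(k)}$ is assumed.

```python
import numpy as np

def proj_P(B, k):
    """P_k(B) = I_(k) (I + B I^(k))^{-1} under the assumed index-matrix convention."""
    n = B.shape[0]
    I_up = np.diag(np.r_[np.ones(k), np.zeros(n - k)])
    return (np.eye(n) - I_up) @ np.linalg.inv(np.eye(n) + B @ I_up)

rng = np.random.default_rng(2)
n = 5
M = rng.uniform(-1, 1, (n, n))
A = M @ M.T + n * np.eye(n)              # symmetric positive definite
E = 0.05 * rng.uniform(-1, 1, (n, n))
dA = (E + E.T) / 2                       # small symmetric perturbation

R = np.linalg.cholesky(A).T              # upper triangular factor, A = R^T R
Rp = np.linalg.cholesky(A + dA).T        # R + delta_R
Rinv = np.linalg.inv(R)
Bh = Rinv.T @ dA @ Rinv                  # B-hat = R^{-T} dA R^{-1}

Omega = np.zeros((n, n))
for k in range(1, n + 1):
    row = Bh[k - 1, :]                                # e_k^T B-hat
    gamma = row @ proj_P(Bh, k - 1).T[:, k - 1]       # gamma_k from (2.51)
    s = np.sqrt(1.0 + gamma)
    Omega[k - 1, :] = s * (row @ proj_P(Bh, k).T)     # strictly upper part of (2.50)
    Omega[k - 1, k - 1] += s - 1.0                    # diagonal part of (2.50)

err_R = np.linalg.norm((Rp - R) - Omega @ R)          # delta_R - Omega R
```

The residual `err_R` should be at rounding level, and `Omega` comes out upper triangular, as the theorem requires.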


2.3.3 Bounds for the projection Pk (B)

In the previous sections we have seen that projections Pk (B) play the key role in perturbation errors. Next we give bounds for these projections. A crude upper bound is given

by

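Lemma 27 If $\|B\|_2 < 1$, then

$$\|P_k(B)\|_2 \le \frac{1}{1 - \|B\|_2}. \tag{2.53}$$

Proof. Using $\left\| (I + A)^{-1} \right\| \le 1/(1 - \|A\|)$ $(\|A\| < 1)$ we can write

$$\left\| I_{(k)} \left( I + B I^{(k)} \right)^{-1} \right\|_2 \le \frac{\left\| I_{(k)} \right\|_2}{1 - \left\| B I^{(k)} \right\|_2} \le \frac{1}{1 - \|B\|_2 \left\| I^{(k)} \right\|_2} = \frac{1}{1 - \|B\|_2}.$$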

A sharper bound can be obtained by using the following result.

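Lemma 28 Let

$$F = \begin{bmatrix} 0 & 0 \\ Z & I \end{bmatrix} \in \mathbb{R}^{(p+q) \times (p+q)} \qquad \left( Z \in \mathbb{R}^{q \times p} \right). \tag{2.54}$$

If $\sigma_1$ denotes the maximal singular value of $Z$, then

$$\|F\|_2 = \sqrt{1 + \sigma_1^2} = \sqrt{1 + \|Z\|_2^2}. \tag{2.55}$$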

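Proof. Let $Z$ have the singular value decomposition $Z = P \Sigma Q^T$, where $P \in \mathbb{R}^{q \times q}$ and $Q \in \mathbb{R}^{p \times p}$ are orthogonal,

$$\Sigma = \mathrm{diag}(\sigma_1, \dots, \sigma_r) \in \mathbb{R}^{q \times p} \qquad (r = \min\{p, q\}),$$

and $\sigma_1 \ge \dots \ge \sigma_r \ge 0$. As

$$\left\| \begin{bmatrix} Q^T & 0 \\ 0 & P^T \end{bmatrix} \begin{bmatrix} 0 & 0 \\ Z & I \end{bmatrix} \begin{bmatrix} Q & 0 \\ 0 & P \end{bmatrix} \right\|_2 = \left\| \begin{bmatrix} 0 & 0 \\ \Sigma & I \end{bmatrix} \right\|_2,$$

we have to determine the spectral radius of the matrix

$$\begin{bmatrix} 0 & 0 \\ \Sigma & I \end{bmatrix}^T \begin{bmatrix} 0 & 0 \\ \Sigma & I \end{bmatrix} = \begin{bmatrix} \Sigma^T \Sigma & \Sigma^T \\ \Sigma & I \end{bmatrix}.$$

If $p \le q$, this matrix has the $3 \times 3$ block form

$$\begin{bmatrix} D^2 & D & 0 \\ D & I_p & 0 \\ 0 & 0 & I_{q-p} \end{bmatrix},$$

where $D = \mathrm{diag}(\sigma_1, \dots, \sigma_p) \in \mathbb{R}^{p \times p}$. Hence 1 is an eigenvalue of the matrix with multiplicity $q - p$. The rest of the eigenvalues are those of the matrix

$$\begin{bmatrix} D^2 & D \\ D & I_p \end{bmatrix}.$$

Consider

$$\begin{bmatrix} D^2 - \lambda I & D \\ D & I_p - \lambda I_p \end{bmatrix}.$$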


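As $\left( D^2 - \lambda I \right) D = D \left( D^2 - \lambda I \right)$ we can write that

$$\det \begin{bmatrix} D^2 - \lambda I & D \\ D & I_p - \lambda I_p \end{bmatrix} = \det\left( (1 - \lambda)\left( D^2 - \lambda I \right) - D^2 \right) = \prod_{i=1}^{p} \left[ (1 - \lambda)\left( \sigma_i^2 - \lambda \right) - \sigma_i^2 \right] = \prod_{i=1}^{p} \left[ \lambda \left( \lambda - 1 - \sigma_i^2 \right) \right] = 0.$$

This implies that $\lambda = 0$ is an eigenvalue with multiplicity $p$, and $\lambda_i = 1 + \sigma_i^2$ $(i = 1, \dots, p)$ are the remaining eigenvalues. Thus the spectral radius is $1 + \sigma_1^2$. If $p > q$, the matrix above has the following $3 \times 3$ block form

$$\begin{bmatrix} D^2 & 0 & D \\ 0 & 0 & 0 \\ D & 0 & I \end{bmatrix},$$

where $D = \mathrm{diag}(\sigma_1, \dots, \sigma_q) \in \mathbb{R}^{q \times q}$. This matrix is permutationally similar to the matrix

$$\begin{bmatrix} D^2 & D & 0 \\ D & I & 0 \\ 0 & 0 & 0 \end{bmatrix}.$$

Hence the eigenvalues are 0 with multiplicity $p$ and $\lambda_i = 1 + \sigma_i^2$ $(i = 1, \dots, q)$. Thus we proved that in any case

$$\left\| \begin{bmatrix} 0 & 0 \\ Z & I \end{bmatrix} \right\|_2 = \sqrt{1 + \sigma_1^2} = \sqrt{1 + \|Z\|_2^2}. \tag{2.56}$$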
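The norm identity of Lemma 28 is easy to confirm numerically for both cases $p \le q$ and $p > q$. The sketch below uses arbitrary random blocks $Z$; the function name `norm_gap` is illustrative.

```python
import numpy as np

rng = np.random.default_rng(3)

def norm_gap(p, q):
    """Return | ||F||_2 - sqrt(1 + ||Z||_2^2) | for a random Z in R^{q x p}."""
    Z = rng.standard_normal((q, p))
    F = np.block([[np.zeros((p, p)), np.zeros((p, q))],
                  [Z, np.eye(q)]])
    return abs(np.linalg.norm(F, 2) - np.sqrt(1.0 + np.linalg.norm(Z, 2) ** 2))

gap_p_le_q = norm_gap(3, 5)   # case p <= q
gap_p_gt_q = norm_gap(5, 3)   # case p > q
```

Both gaps should vanish up to rounding error.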

Lemma 29 For $k = 0, \dots, n$,

$$\|P_k(B)\|_2 \le \sqrt{1 + \frac{\|B\|_2^2}{(1 - \|B\|_2)^2}} \qquad (\|B\|_2 < 1). \tag{2.57}$$

Proof. We recall that Pk (B) has the form (2.34) and we can apply Lemma 28

° ° ° °

¡

¢−1

. The inequality °Bk1 °2 , °Bk2 °2 ≤ kBk2 and kBk2 < 1 imply

with Z = −Bk2 I + Bk1

° ° °

¢−1 °

kBk2

kBk2

°¡

°

≤

kZk2 ≤ °Bk2 °2 ° I + Bk1

.

° ≤

1 − kBk1 k

1 − kBk2

2

As

s

1+

x2

2

(1 − x)

≤

1

1−x

(0 ≤ x < 1)

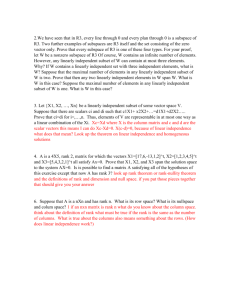

this upper estimate

of kPk (B)k2 is sharper than (2.53). Figure 1 shows the ratio function

q

2

x

[1/ (1 − x)] / 1 + (1−x)

2.
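The scalar inequality used in this comparison follows by squaring both sides. A short derivation, added here for convenience and not taken from the original argument:

```latex
\[
1+\frac{x^{2}}{(1-x)^{2}}\le\frac{1}{(1-x)^{2}}
\iff (1-x)^{2}+x^{2}\le 1
\iff 2x(x-1)\le 0 ,
\]
```

and the last condition holds for $0 \le x < 1$, with equality only at $x = 0$.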

We introduce the quantity

$$p(B) = \max_{0 \le k \le n} \|P_k(B)\|_2 \tag{2.58}$$

which we use in upper estimates to follow. This quantity can be replaced by any of the

following bounds.


Figure 1 Function $[1/(1 - x)] \big/ \sqrt{1 + x^2/(1 - x)^2}$

Corollary 30

$$p(B) \le \sqrt{1 + \frac{\|B\|_2^2}{(1 - \|B\|_2)^2}} \qquad (\|B\|_2 < 1). \tag{2.59}$$

Remark 31 Bound (2.53) gives the weaker estimate

$$p(B) \le \frac{1}{1 - \|B\|_2} \qquad (\|B\|_2 < 1). \tag{2.60}$$

We now give an "all" $B$ bound. The Bauer-Skeel condition number of a matrix $A$ is defined by $\kappa_{\mathrm{Skeel}}(A) = \left| A^{-1} \right| |A|$.

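Lemma 32 Assume that $I + B$ is nonsingular and has LU factorization $I + B = L_{I+B} U_{I+B}$, where $L_{I+B}$ is lower triangular and $U_{I+B}$ is upper triangular. Then

$$P_k(B) = \left( I_{(k)} L_{I+B} \right) \left( I_{(k)} L_{I+B}^{-1} \right), \tag{2.61}$$

$$\|P_k(B)\|_2 \le \left\| I_{(k)} L_{I+B} \right\|_2 \left\| I_{(k)} L_{I+B}^{-1} \right\|_2 \le \kappa_2\left( L_{I+B} \right) \tag{2.62}$$

and

$$|P_k(B)| \le \left| I_{(k)} L_{I+B} \right| \left| I_{(k)} L_{I+B}^{-1} \right| \le \left| L_{I+B} \right| \left| L_{I+B}^{-1} \right| = \kappa_{\mathrm{Skeel}}\left( L_{I+B}^{-1} \right). \tag{2.63}$$

Proof. Let

$$I + B = \begin{bmatrix} I + B_k^1 & B_k^4 \\ B_k^2 & I + B_k^3 \end{bmatrix} = L_{I+B} U_{I+B},$$

where

$$L_{I+B} = \begin{bmatrix} L_{11} & 0 \\ L_{21} & L_{22} \end{bmatrix}, \qquad U_{I+B} = \begin{bmatrix} U_{11} & U_{12} \\ 0 & U_{22} \end{bmatrix} \qquad \left( L_{11}, U_{11} \in \mathbb{R}^{k \times k} \right),$$

$L_{I+B}$ is lower triangular and $U_{I+B}$ is upper triangular. Then

$$P_k(B) = \begin{bmatrix} 0 & 0 \\ -B_k^2 \left( I + B_k^1 \right)^{-1} & I \end{bmatrix},$$

where $B_k^2 \left( I + B_k^1 \right)^{-1} = (L_{21} U_{11})(L_{11} U_{11})^{-1} = L_{21} L_{11}^{-1}$. We can write

$$\begin{bmatrix} 0 & 0 \\ -L_{21} L_{11}^{-1} & I \end{bmatrix} = \begin{bmatrix} 0 & 0 \\ L_{21} & L_{22} \end{bmatrix} \begin{bmatrix} 0 & 0 \\ -L_{22}^{-1} L_{21} L_{11}^{-1} & L_{22}^{-1} \end{bmatrix} = \left( I_{(k)} L_{I+B} \right) \left( I_{(k)} L_{I+B}^{-1} \right).$$

Hence

$$P_k(B) = \left( I_{(k)} L_{I+B} \right) \left( I_{(k)} L_{I+B}^{-1} \right). \tag{2.64}$$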
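The factored form of $P_k(B)$ and the resulting norm bound can be tested directly. The sketch below assumes $I^{(k)} = \mathrm{diag}(I_k, 0)$ and $I_{(k)} = I - I^{(k)}$, uses a hypothetical unpivoted Doolittle routine `lu_nopivot`, and picks $B$ small enough that $I + B$ is strictly diagonally dominant, so the factorization exists.

```python
import numpy as np

def lu_nopivot(A):
    """Doolittle LU without pivoting: A = L @ U with L unit lower triangular."""
    n = A.shape[0]
    L, U = np.eye(n), A.astype(float).copy()
    for j in range(n - 1):
        L[j + 1:, j] = U[j + 1:, j] / U[j, j]
        U[j + 1:, :] -= np.outer(L[j + 1:, j], U[j, :])
    return L, np.triu(U)

def proj_P(B, k):
    """P_k(B) = I_(k) (I + B I^(k))^{-1} under the assumed index-matrix convention."""
    n = B.shape[0]
    I_up = np.diag(np.r_[np.ones(k), np.zeros(n - k)])
    return (np.eye(n) - I_up) @ np.linalg.inv(np.eye(n) + B @ I_up)

rng = np.random.default_rng(4)
n = 5
B = 0.1 * rng.uniform(-1, 1, (n, n))      # I + B is strictly diagonally dominant
L, U = lu_nopivot(np.eye(n) + B)
Linv = np.linalg.inv(L)
kappa2_L = np.linalg.cond(L, 2)

max_err, p_B = 0.0, 0.0
for k in range(n + 1):
    I_low = np.diag(np.r_[np.zeros(k), np.ones(n - k)])     # I_(k)
    P = proj_P(B, k)
    max_err = max(max_err, np.linalg.norm(P - (I_low @ L) @ (I_low @ Linv)))
    p_B = max(p_B, np.linalg.norm(P, 2))                    # p(B) as in (2.58)
```

Here `max_err` measures the deviation from the factored form over all $k$, and `p_B` can be compared with `kappa2_L`.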

Corollary 33 Under the conditions of the lemma

$$p(B) \le \kappa_2\left( L_{I+B} \right), \qquad p\left( B^T \right) \le \kappa_2\left( U_{I+B} \right). \tag{2.65}$$

Remark 34 Here we can use optimal diagonal scaling to decrease $\kappa_2(L_{I+B})$ or $\kappa_2(U_{I+B})$ (see, e.g., [143]).

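Finally we recall a result of Demmel [60] (see also Higham [143]). Let

$$A = \begin{bmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{bmatrix} \in \mathbb{R}^{n \times n} \qquad \left( A_{11} \in \mathbb{R}^{k \times k} \right)$$

be symmetric and positive definite. Then

$$\left\| A_{21} A_{11}^{-1} \right\|_2 \le \left( \kappa_2(A)^{1/2} - \kappa_2(A)^{-1/2} \right) / 2. \tag{2.66}$$

Lemma 35 For any $\widehat{B}$ such that $I + \widehat{B}$ is symmetric and positive definite,

$$p\big(\widehat{B}\big) \le \frac{1}{2} + \frac{1}{2}\, \kappa_2\big( I + \widehat{B} \big)^{1/2}. \tag{2.67}$$

Proof. Let

$$A = I + \widehat{B} = \begin{bmatrix} I + \widehat{B}_k^1 & \widehat{B}_k^4 \\ \widehat{B}_k^2 & I + \widehat{B}_k^3 \end{bmatrix}. \tag{2.68}$$

Demmel's result implies

$$\left\| \widehat{B}_k^2 \big( I + \widehat{B}_k^1 \big)^{-1} \right\|_2 \le \frac{1}{2} \left( \left[ \kappa_2\big( I + \widehat{B} \big) \right]^{1/2} - \left[ \kappa_2\big( I + \widehat{B} \big) \right]^{-1/2} \right),$$

which yields

$$\left\| P_k\big(\widehat{B}\big) \right\|_2 \le \frac{1}{2} + \frac{1}{2}\, \kappa_2\big( I + \widehat{B} \big)^{1/2}. \tag{2.69}$$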


2.3.4 Norm bounds for the perturbations of LU and LDU factorizations

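Theorem 36 Assume that $A$ and $A + \delta_A$ are nonsingular and have LU factorizations $A = L_1 U$ and $A + \delta_A = (L_1 + \delta_{L_1})(U + \delta_U)$, respectively. Let $B = L_1^{-1} \delta_A U^{-1}$. Then $\|\delta_{L_1}\|_F \le \|L_1\|_2 \|X_1\|_F$ and $\|\delta_U\|_F \le \|U\|_2 \|Y\|_F$, where $\|X_1\|_F \le p(B) \|B\|_F$ and $\|Y\|_F \le p\left(B^T\right) \|B\|_F$. Also we have

$$\left\| \delta_{L_1} - L_1 \mathrm{tril}^{*}(B) \right\|_F \le \|L_1\|_2\, p(B)\, \|B\|_2 \left\| \mathrm{triu}(B) \right\|_F \tag{2.70}$$

and

$$\left\| \delta_U - \mathrm{triu}(B)\, U \right\|_F \le \|U\|_2\, p\left(B^T\right) \|B\|_2 \left\| \mathrm{tril}^{*}(B) \right\|_F. \tag{2.71}$$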

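Proof. By definition $\|X_1 e_k\|_F \le \|P_k(B)\|_2 \|B e_k\|_F \le p(B) \|B e_k\|_F$ and $\|X_1\|_F \le p(B) \|B\|_F$. Similarly,

$$\left\| e_k^T Y \right\|_F \le \left\| e_k^T B \right\|_F \left\| P_{k-1}\left(B^T\right) \right\|_2 \le p\left(B^T\right) \left\| e_k^T B \right\|_F$$

and $\|Y\|_F \le p\left(B^T\right) \|B\|_F$. Relations $P_k(B) = I_{(k)} - P_k(B) B I^{(k)}$, $I_{(k)} B e_k = \mathrm{tril}^{*}(B)\, e_k$ and $I^{(k)} B e_k = \mathrm{triu}(B)\, e_k$ imply $X_1 = \mathrm{tril}^{*}(B) - \widehat{X}_1$, where $\widehat{X}_1 e_k = P_k(B) B I^{(k)} B e_k$ and $\big\| \widehat{X}_1 \big\|_F \le p(B) \|B\|_2 \|\mathrm{triu}(B)\|_F$. The relations

$$\left[ P_{k-1}\left(B^T\right) \right]^T = I_{(k-1)} - I^{(k-1)} B \left[ P_{k-1}\left(B^T\right) \right]^T, \qquad e_k^T B I_{(k-1)} = e_k^T \mathrm{triu}(B)$$

and $e_k^T B I^{(k-1)} = e_k^T \mathrm{tril}^{*}(B)$ also yield $Y = \mathrm{triu}(B) - \widehat{Y}$, where

$$e_k^T \widehat{Y} = e_k^T B I^{(k-1)} B \left[ P_{k-1}\left(B^T\right) \right]^T$$

and $\big\| \widehat{Y} \big\|_F \le p\left(B^T\right) \|B\|_2 \|\mathrm{tril}^{*}(B)\|_F$. Relations $\delta_{L_1} = L_1 X_1$ and $\delta_U = Y U$ imply the last two bounds of the theorem.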

We can make the following observations.

1. If $\|B\|_2 < 1$, then both $p(B)$ and $p\left(B^T\right)$ are bounded by (2.59). Thus our result is better than that of Barrlund [19], which corresponds to the bound (2.60). For general $B$, we have the bounds (2.65).

2. If $B = \widetilde{U}$ is upper triangular ($\delta_A = L_1 \widetilde{U} U$), then $\delta_{L_1} = 0$. Hence we overestimate $\delta_{L_1}$. A similar argument applies to $\delta_U$. Thus we need more sensitive and asymptotically correct estimates.

3. It is clear that $\delta_{L_1} \sim L_1 \mathrm{tril}^{*}(B)$ and $\delta_U \sim \mathrm{triu}(B)\, U$ hold for $B \to 0$ in agreement with Stewart [231].
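The bounds on $X_1$ used in Theorem 36, and the second observation above, can be illustrated numerically. The sketch below works directly with a small random $B$ (so that every $I + B I^{(k)}$ is nonsingular); the helpers `proj_P` and `X1_of` are hypothetical names, and $I^{(k)} = \mathrm{diag}(I_k, 0)$, $I_{(k)} = I - I^{(k)}$ is assumed.

```python
import numpy as np

def proj_P(B, k):
    """P_k(B) = I_(k) (I + B I^(k))^{-1} under the assumed index-matrix convention."""
    n = B.shape[0]
    I_up = np.diag(np.r_[np.ones(k), np.zeros(n - k)])
    return (np.eye(n) - I_up) @ np.linalg.inv(np.eye(n) + B @ I_up)

def X1_of(B):
    """Columns X1 e_k = P_k(B) B e_k, as in (2.43)."""
    n = B.shape[0]
    return np.column_stack([proj_P(B, k) @ B[:, k - 1] for k in range(1, n + 1)])

rng = np.random.default_rng(5)
n = 6
B = 0.1 * rng.uniform(-1, 1, (n, n))
p_B = max(np.linalg.norm(proj_P(B, k), 2) for k in range(n + 1))   # p(B), (2.58)

X1 = X1_of(B)
lhs_first = np.linalg.norm(X1, 'fro')
rhs_first = p_B * np.linalg.norm(B, 'fro')
lhs_second = np.linalg.norm(X1 - np.tril(B, -1), 'fro')            # X1 - tril*(B)
rhs_second = p_B * np.linalg.norm(B, 2) * np.linalg.norm(np.triu(B), 'fro')

# Observation 2: an upper triangular B produces no perturbation in L1
norm_X1_upper = np.linalg.norm(X1_of(np.triu(B)))
```

For upper triangular $B$ the first-order bound $\|X_1\|_F \le p(B)\|B\|_F$ is far from tight, which is the overestimation mentioned in the second observation.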

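Theorem 37 Assume that $A$ and $A + \delta_A$ are nonsingular and have LDU factorizations $A = L_1 D U_1$ and $A + \delta_A = (L_1 + \delta_{L_1})(D + \delta_D)(U_1 + \delta_{U_1})$, respectively. Let $B = L_1^{-1} \delta_A U^{-1}$. Then

$$\|\delta_{L_1}\|_F \le \|L_1\|_2 \|X_1\|_F, \qquad \|\delta_D\|_F \le \|D\|_2 \|\Gamma\|_F$$

and

$$\|\delta_{U_1}\|_F \le \|U_1\|_2 \|Y_1\|_F,$$

where $\|X_1\|_F \le p(B) \|B\|_F$, $\|\Gamma\|_F \le p\left(B^T\right) \|B\|_F$ and $\|Y_1\|_F \le \kappa_2(D)\, p\left(B^T\right) \|B\|_F$. Also we have

$$\left\| \delta_{L_1} - L_1 \mathrm{tril}^{*}(B) \right\|_F \le \|L_1\|_2\, p(B)\, \|B\|_2 \left\| \mathrm{triu}(B) \right\|_F, \tag{2.72}$$

$$\left\| \delta_D - \mathrm{diag}(B)\, D \right\|_F \le \|D\|_2\, p\left(B^T\right) \|B\|_2 \left\| \mathrm{tril}^{*}(B) \right\|_F \tag{2.73}$$

and

$$\left\| \delta_{U_1} - \mathrm{triu}^{*}\left( D^{-1} B D \right) U_1 \right\|_F \le \|U_1\|_2 \|D\|_2\, p\left(B^T\right) \|B\|_2 \left\| \mathrm{tril}\left( D^{-1} B \right) \right\|_F. \tag{2.74}$$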

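Proof. The first three bounds are direct consequences of Theorem 25. The fourth bound is included in Theorem 36. So we prove only the last two bounds. Simple calculations and $e_k^T B I^{(k-1)} = e_k^T \mathrm{tril}^{*}(B)$ lead to $\Gamma = \mathrm{diag}(B) - \widehat{\Gamma}$, where

$$e_k^T \widehat{\Gamma} = e_k^T B I^{(k-1)} B \left[ P_{k-1}\left(B^T\right) \right]^T e_k e_k^T$$

and $\big\| \widehat{\Gamma} \big\|_F \le p\left(B^T\right) \|B\|_2 \|\mathrm{tril}^{*}(B)\|_F$. Using similar arguments, $e_k^T Z I_{(k)} = e_k^T \mathrm{triu}^{*}(Z)$ and $e_k^T Z I^{(k)} = e_k^T \mathrm{tril}(Z)$ we have $Y_1 = \mathrm{triu}^{*}\left( D^{-1} B D \right) - \widehat{Y}_1$, where

$$e_k^T \widehat{Y}_1 = e_k^T D^{-1} B I^{(k)} B \left[ P_k\left(B^T\right) \right]^T D$$

and

$$\big\| \widehat{Y}_1 \big\|_F \le \|D\|_2\, p\left(B^T\right) \|B\|_2 \left\| \mathrm{tril}\left( D^{-1} B \right) \right\|_F.$$

Relations $\delta_D = \Gamma D$ and $\delta_{U_1} = Y_1 U_1$ imply the bounds of the theorem.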

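Barrlund [19] derived the following $LDL^T$ perturbation bounds. If $A$ is symmetric, positive definite and $\left\| A^{-1} \right\|_2 \|\delta_A\|_2 < 1$, then

$$\|\delta_{L_1}\|_F \le \frac{1}{\sqrt{2}}\, \frac{\|A\|_2^{1/2} \left\| A^{-1} \right\|_2^{3/2} \|\delta_A\|_F}{1 - \left\| A^{-1} \right\|_2 \|\delta_A\|_2} = \Delta_{L_1}^{B} \tag{2.75}$$

and

$$\|\delta_D\|_F \le \left( \kappa_2(A) + 1 \right) \left[ \omega\left( \left\| A^{-1} \right\|_2 \|\delta_A\|_2 \right) - 1 \right] \|\delta_A\|_F = \Delta_{D}^{B}, \tag{2.76}$$

where

$$\omega(x) = \frac{1}{x} \ln \frac{1}{1 - x}, \qquad 0 < x < 1. \tag{2.77}$$

These perturbation bounds are functions of $\left\| A^{-1} \right\|_2 \|\delta_A\|_2$, while our bounds depend on $B$. The inequality

$$\frac{\|\delta_A\|_p}{\|A\|_2\, \kappa_2\left( D^{1/2} \right)} \le \|B\|_p \le \kappa_2\left( D^{1/2} \right) \left\| A^{-1} \right\|_2 \|\delta_A\|_p \qquad (p = 2, F) \tag{2.78}$$

indicates that a direct comparison of the estimates is not easy. In many cases our estimates are better. For example, if $\|A\|_2 \ge 2 \|D\|_2\, \kappa_2\left( D^{1/2} \right)$, $\delta_A \ne 0$ and $\|B\|_2 \le 1/2$, then our estimate for $\delta_D$ is better than Barrlund's estimate (2.76).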

2.3.5 Norm bounds for the Cholesky factorizations

We give two theorems.

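Theorem 38 Let $A \in \mathbb{R}^{n \times n}$ and $A + \delta_A \in \mathbb{R}^{n \times n}$ be symmetric positive definite and have the Cholesky factorizations $A = R^T R$ and $A + \delta_A = (R + \delta_R)^T (R + \delta_R)$, respectively. Let $\widehat{B} = R^{-T} \delta_A R^{-1}$. Then

$$\|\delta_R\|_F \le \theta\left( p\big(\widehat{B}\big) \big\| \widehat{B} \big\|_2 \right) p\big(\widehat{B}\big) \big\| \widehat{B} \big\|_F \|R\|_2, \tag{2.79}$$

where

$$\theta(x) = \frac{\sqrt{1 + x} - 1}{x} + \sqrt{1 + x} \qquad (x \ge 0). \tag{2.80}$$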


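Proof. Theorem 26 implies that $\delta_R = \Omega R$, where

$$\left\| e_k^T \Omega \right\|_F \le \left| (1 + \gamma_k)^{1/2} - 1 \right| + (1 + \gamma_k)^{1/2} \big\| \widehat{B} e_k \big\|_2 \left\| P_k\big(\widehat{B}\big) \right\|_2$$

and

$$|\gamma_k| \le \big\| \widehat{B} e_k \big\|_2 \left\| P_{k-1}\big(\widehat{B}\big) \right\|_2 \le \big\| \widehat{B} e_k \big\|_2\, p\big(\widehat{B}\big).$$

Let $\phi_\delta(x) = \left| \sqrt{1 + x} - 1 \right| + \delta \sqrt{1 + x}$, $x > -1$ and $|x| \le \delta$ $(\delta \ge 0)$. Then

$$\max_{\tau \le x \le \delta} \phi_\delta(x) = \sqrt{1 + \delta} - 1 + \delta \sqrt{1 + \delta} \qquad (\tau = \max\{-1, -\delta\}). \tag{2.81}$$

Now we can write

$$\left\| e_k^T \Omega \right\|_F \le \sqrt{1 + \delta_k} - 1 + \delta_k \sqrt{1 + \delta_k},$$

where $\delta_k = p\big(\widehat{B}\big) \big\| \widehat{B} e_k \big\|_2$. As $\theta(x)$ ($\theta(0) = 3/2$) is strictly monotone increasing in $x \ge 0$, we can write

$$\left\| e_k^T \Omega \right\|_F \le \left( \frac{\sqrt{1 + \delta^{*}} - 1}{\delta^{*}} + \sqrt{1 + \delta^{*}} \right) \delta_k,$$

where $\delta^{*} = p\big(\widehat{B}\big) \big\| \widehat{B} \big\|_2$. Hence

$$\|\Omega\|_F \le \left( \frac{\sqrt{1 + \delta^{*}} - 1}{\delta^{*}} + \sqrt{1 + \delta^{*}} \right) p\big(\widehat{B}\big) \big\| \widehat{B} \big\|_F,$$

which is the requested result.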

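Remark 39 Inequality $\frac{3}{2} \le \theta(x) \le \frac{3}{2} + \frac{1}{2} x$ $(x \ge 0)$ implies the bound

$$\|\delta_R\|_F \le \left( \frac{3}{2} + \frac{1}{2}\, p\big(\widehat{B}\big) \big\| \widehat{B} \big\|_2 \right) p\big(\widehat{B}\big) \big\| \widehat{B} \big\|_F. \tag{2.82}$$

Remark 40 The bound of the theorem can be weakened to

$$\|\Omega\|_F \le \sqrt{1 + p\big(\widehat{B}\big) \big\| \widehat{B} \big\|_F} - 1 + p\big(\widehat{B}\big) \big\| \widehat{B} \big\|_F \sqrt{1 + p\big(\widehat{B}\big) \big\| \widehat{B} \big\|_F}. \tag{2.83}$$

We give now a sharp lower estimate for $\|\Omega\|$.

Proposition 41 Under the assumptions of Theorem 26

$$\|\Omega\|_p \ge \sqrt{1 + \big\| \widehat{B} \big\|_p} - 1 \qquad (p = 2, F). \tag{2.84}$$

Proof. The matrix $\Omega$ satisfies the relation $\Omega + \Omega^T + \Omega^T \Omega = \widehat{B}$. Hence

$$\big\| \widehat{B} \big\|_p \le 2 \|\Omega\|_p + \|\Omega\|_p^2,$$

which implies the statement.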


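This lower bound is sharp. Define

$$\Omega = \begin{bmatrix} 0 & 0 \\ 0 & x \end{bmatrix} \qquad (x \ge 0).$$

Then

$$\Omega + \Omega^T + \Omega^T \Omega = \begin{bmatrix} 0 & 0 \\ 0 & 2x + x^2 \end{bmatrix} = \widehat{B},$$

$\|\Omega\|_F = x$ and $\big\| \widehat{B} \big\|_F = 2x + x^2$. As

$$\sqrt{1 + \big\| \widehat{B} \big\|_F} - 1 = \sqrt{1 + 2x + x^2} - 1 = x = \|\Omega\|_F,$$

the assertion is proved. The proof is also valid in the spectral norm.

We can establish that our upper estimate for $\Omega$ exceeds the lower bound essentially by the quantity $p\big(\widehat{B}\big) \big\| \widehat{B} \big\|_F \sqrt{1 + p\big(\widehat{B}\big) \big\| \widehat{B} \big\|_F}$.

Following Stewart [232] we define $U_p(Z) = \mathrm{triu}^{*}(Z) + p\, \mathrm{diag}(Z)$ for $0 \le p \le 1$.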

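Theorem 42 Let $A \in \mathbb{R}^{n \times n}$ and $A + \delta_A \in \mathbb{R}^{n \times n}$ be symmetric positive definite and have the Cholesky factorizations $A = R^T R$ and $A + \delta_A = (R + \delta_R)^T (R + \delta_R)$, respectively. Let $\widehat{B} = R^{-T} \delta_A R^{-1}$. Then

$$\left\| \delta_R - U_{1/2}\big(\widehat{B}\big) R \right\|_F \le \|R\|_2\, \phi\left( \big\| \widehat{B} \big\|_F,\, p\big(\widehat{B}\big) \right) p\big(\widehat{B}\big) \big\| \widehat{B} \big\|_F^2, \tag{2.85}$$

where

$$\phi(x, y) = 1 + \frac{1}{2\sqrt{2}} + y + \frac{1}{2}\, x y^2. \tag{2.86}$$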

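Proof. Relation

$$\sqrt{1 + x} = 1 + \frac{x}{2} + R_x, \qquad |R_x| \le \frac{x^2}{2} \qquad (x \ge -1)$$

implies that

$$(I + D)^{1/2} = I + \frac{1}{2} D + R_D, \qquad \|R_D\|_p \le \frac{1}{2} \|D\|_p^2 \qquad (p = 2, F),$$

where $D = \mathrm{diag}(d_i)$ is such that $d_i \ge -1$ $(i = 1, \dots, n)$. We can write that $\Gamma = \mathrm{diag}\big(\widehat{B}\big) - \widehat{\Gamma}$, where $e_k^T \widehat{\Gamma} = e_k^T \widehat{B} I^{(k-1)} \widehat{B} \big[ P_{k-1}\big(\widehat{B}\big) \big]^T e_k e_k^T$ and

$$\big\| \widehat{\Gamma} \big\|_F \le p\big(\widehat{B}\big) \big\| \widehat{B} \big\|_2 \left\| \mathrm{tril}^{*}\big(\widehat{B}\big) \right\|_F.$$

Similarly, $\Lambda^T = \mathrm{triu}^{*}\big(\widehat{B}\big) - \widehat{\Lambda}^T$, where $e_k^T \widehat{\Lambda}^T = e_k^T \widehat{B} I^{(k)} \widehat{B} \big[ P_k\big(\widehat{B}\big) \big]^T$ and $\big\| \widehat{\Lambda}^T \big\|_F \le p\big(\widehat{B}\big) \big\| \widehat{B} \big\|_2 \big\| \mathrm{tril}\big(\widehat{B}\big) \big\|_F$. Hence $\Omega$ can be written as

$$\Omega = \frac{1}{2} \left( \mathrm{diag}\big(\widehat{B}\big) - \widehat{\Gamma} \right) + R_\Gamma + \left( I + \frac{1}{2} \Gamma + R_\Gamma \right) \left( \mathrm{triu}^{*}\big(\widehat{B}\big) - \widehat{\Lambda}^T \right)$$

$$\phantom{\Omega} = U_{1/2}\big(\widehat{B}\big) - \frac{1}{2} \widehat{\Gamma} + R_\Gamma - \widehat{\Lambda}^T + \left( \frac{1}{2} \Gamma + R_\Gamma \right) \Lambda^T$$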


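and

$$\left\| \Omega - U_{1/2}\big(\widehat{B}\big) \right\|_F \le \frac{1}{2} \big\| \widehat{\Gamma} \big\|_F + \|R_\Gamma\|_F + \big\| \widehat{\Lambda}^T \big\|_F + \left( \frac{1}{2} \|\Gamma\|_F + \|R_\Gamma\|_F \right) \left\| \Lambda^T \right\|_F.$$

By noting that $\left\| \Lambda^T \right\|_F \le p\big(\widehat{B}\big) \big\| \widehat{B} \big\|_F$, $\|R_\Gamma\|_F \le \frac{1}{2} \|\Gamma\|_F^2$ and $\big\| \mathrm{tril}^{*}\big(\widehat{B}\big) \big\|_F \le \frac{1}{\sqrt{2}} \big\| \widehat{B} \big\|_F$ we can easily obtain

$$\left\| \Omega - U_{1/2}\big(\widehat{B}\big) \right\|_F \le \left( 1 + \frac{1}{2\sqrt{2}} \right) \big\| \widehat{B} \big\|_F^2\, p\big(\widehat{B}\big) + \big\| \widehat{B} \big\|_F^2\, p^2\big(\widehat{B}\big) + \frac{1}{2} \big\| \widehat{B} \big\|_F^3\, p^3\big(\widehat{B}\big).$$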

This bound is asymptotically correct. For large $\|B\|$ it is worse than our first bound. For $\left\| A^{-1} \right\|_2 \|\delta_A\|_2 < 1$ Sun [235] proved that

$$\|\delta_R\|_F \le \frac{1}{\sqrt{2}}\, \frac{\|R\|_2 \left\| A^{-1} \right\|_2 \|\delta_A\|_F}{1 - \left\| A^{-1} \right\|_2 \|\delta_A\|_2} = \Delta_R^{S}. \tag{2.87}$$

It is easy to prove that Sun's estimate is better, if $\big\| \widehat{B} \big\|_2 = \left\| A^{-1} \right\|_2 \|\delta_A\|_2$ and $\big\| \widehat{B} \big\|_2 \le 1/2$. Our estimate is better, if $\big\| \widehat{B} \big\|_2 = \|\delta_A\|_2 / \|A\|_2$, $\big\| \widehat{B} \big\|_2 \le 1/2$ and $\kappa_2(A) > 4\sqrt{2n}$.

Drmač, Omladič and Veselić ([74]) proved that for $\big\| \widehat{B} \big\|_F \le 1/2$,

$$\|\Omega\|_F \le \frac{1}{\sqrt{2}} \left( 1 - \sqrt{1 - 2 \big\| \widehat{B} \big\|_F} \right) = \frac{\sqrt{2}\, \big\| \widehat{B} \big\|_F}{1 + \sqrt{1 - 2 \big\| \widehat{B} \big\|_F}}. \tag{2.88}$$

For small perturbations this estimate is better than any of the previous estimates. However, it is valid only for small perturbations, unlike our estimates, which are valid for all allowed perturbations.

2.3.6 Componentwise perturbation bounds

We use the following simple observations. If $\rho(|B|) < 1$, then $I - |B|$ is an M-matrix and

$$\left| (I + B)^{-1} \right| \le (I - |B|)^{-1}. \tag{2.89}$$

The gap $(I - |B|)^{-1} - \left| (I + B)^{-1} \right|$ is estimated as follows:

$$0 \le (I - |B|)^{-1} - \left| (I + B)^{-1} \right| \le (I - |B|)^{-1} (|B| + B) (I - |B|)^{-1}. \tag{2.90}$$

Note that $|B| + B \ge 0$. If $B \le 0$, then $|B| + B = 0$ and $(I + B)^{-1} = (I - |B|)^{-1}$. As $\rho(B) \le \rho(|B|)$ and $\rho\left( |B| I^{(k)} \right) \le \rho\left( |B| I^{(k+1)} \right) \le \rho(|B|)$, similar statements hold for the matrices $B I^{(k)}$. We also exploit that for $\rho(|B|) < 1$, $I - |B| I^{(k)}$ is an M-matrix $(k = 0, 1, \dots, n)$ and

$$I \le \left( I - |B| I^{(k)} \right)^{-1} \le \left( I - |B| I^{(k+1)} \right)^{-1} \le (I - |B|)^{-1}. \tag{2.91}$$

Theorem 43 Assume that $A$ and $A + \delta_A$ are nonsingular and have LU factorizations $A = L_1 U$ and $A + \delta_A = (L_1 + \delta_{L_1})(U + \delta_U)$, respectively. Let $B = L_1^{-1} \delta_A U^{-1}$. Then

$$|\delta_{L_1}| \le |L_1|\, \mathrm{tril}^{*}(|F|), \qquad |\delta_U| \le \mathrm{triu}(|G|)\, |U|, \tag{2.92}$$

where $F$ and $G$ are given by equations (2.28) and (2.45), respectively. If $\rho(|B|) < 1$, then

$$|\delta_{L_1}| \le |L_1|\, \mathrm{tril}^{*}\left( F^{b,1} \right), \qquad |\delta_U| \le \mathrm{triu}\left( G^{b,1} \right) |U|, \tag{2.93}$$

where $F^{b,1}$ and $G^{b,1}$ are the unique solutions of the equations

$$F = |B| + |B|\, \mathrm{triu}(F), \qquad G = |B| + \mathrm{tril}^{*}(G)\, |B| \tag{2.94}$$

respectively.

Proof. Theorem 19 implies that $|\delta_{L_1}| \le |L_1| |X_1|$, $|\delta_U| \le |Y| |U|$, where $X_1 = \mathrm{tril}^{*}(F)$ and $Y = \mathrm{triu}(G)$. Matrices $F$ and $G$ have the forms $F e_k = \left( I + B I^{(k)} \right)^{-1} B e_k$ and $e_k^T G = e_k^T B \left( I + I^{(k-1)} B \right)^{-1}$ $(k = 1, \dots, n)$. Condition $\rho(|B|) < 1$ implies

$$|F| e_k \le \left| \left( I + B I^{(k)} \right)^{-1} \right| |B| e_k \le \left( I - |B| I^{(k)} \right)^{-1} |B| e_k = F^{b,1} e_k \tag{2.95}$$

and

$$e_k^T |G| \le e_k^T |B| \left| \left( I + I^{(k-1)} B \right)^{-1} \right| \le e_k^T |B| \left( I - I^{(k-1)} |B| \right)^{-1} = e_k^T G^{b,1}, \tag{2.96}$$

where the equalities follow from Lemma 17.

Remark 44 Estimates $F^{b,1}$ and $G^{b,1}$ are sharp. If $B \le 0$, then $|F| = F^{b,1}$ and $|G| = G^{b,1}$. Such a situation ($B \le 0$) occurs, if $A$ is an M-matrix and $\delta_A \le 0$.

Inequality (2.91) implies

$$F^{b,1} \le (I - |B|)^{-1} |B| = F^{b,2}, \qquad G^{b,1} \le |B| (I - |B|)^{-1} = G^{b,2}.$$

The resulting weaker estimates

$$|\delta_{L_1}| \le |L_1|\, \mathrm{tril}^{*}\left( F^{b,2} \right), \qquad |\delta_U| \le \left| \mathrm{triu}\left( G^{b,2} \right) \right| |U| \tag{2.97}$$

are due to Sun [236]. Note that $F^{b,2} = G^{b,2}$.
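The chain $|F| \le F^{b,1} \le F^{b,2}$ and the fixed-point equation (2.94) for $F^{b,1}$ can be illustrated on a random example. The sketch below is an assumption-laden check, not the author's computation: $B$ is scaled so that the row sums of $|B|$ are at most $1/2$ (hence $\rho(|B|) < 1$), and $I^{(k)} = \mathrm{diag}(I_k, 0)$ is assumed.

```python
import numpy as np

rng = np.random.default_rng(6)
n = 5
B = rng.uniform(-1, 1, (n, n)) / (2 * n)   # row sums of |B| <= 1/2, so rho(|B|) < 1
absB = np.abs(B)
I = np.eye(n)

F = np.zeros((n, n))     # exact F:   F e_k = (I + B I^(k))^{-1} B e_k
Fb1 = np.zeros((n, n))   # bound F^{b,1}: (I - |B| I^(k))^{-1} |B| e_k
for k in range(1, n + 1):
    I_up = np.diag(np.r_[np.ones(k), np.zeros(n - k)])   # I^(k)
    F[:, k - 1] = np.linalg.solve(I + B @ I_up, B[:, k - 1])
    Fb1[:, k - 1] = np.linalg.solve(I - absB @ I_up, absB[:, k - 1])
Fb2 = np.linalg.solve(I - absB, absB)                    # F^{b,2} = (I - |B|)^{-1} |B|

tol = 1e-12
chain_holds = bool(np.all(np.abs(F) <= Fb1 + tol) and np.all(Fb1 <= Fb2 + tol))

# F^{b,1} should solve F = |B| + |B| triu(F), the first equation in (2.94)
residual = np.linalg.norm(Fb1 - (absB + absB @ np.triu(Fb1)))
```

Replacing `B` by `-absB` in the loop makes `np.abs(F)` coincide with `Fb1`, which is the sharpness statement of Remark 44.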

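Theorem 45 Assume that $A$ and $A + \delta_A$ are nonsingular and have LU factorizations $A = L_1 U$ and $A + \delta_A = (L_1 + \delta_{L_1})(U + \delta_U)$, respectively. Let $B = L_1^{-1} \delta_A U^{-1}$. Then

$$|\delta_{L_1}| \le |L_1|\, \mathrm{tril}^{*}\left( \kappa_{\mathrm{Skeel}}\left( L_{I+B}^{-1} \right) |B| \right), \qquad |\delta_U| \le \mathrm{triu}\left( |B|\, \kappa_{\mathrm{Skeel}}\left( U_{I+B} \right) \right) |U|, \tag{2.98}$$

where $I + B = L_{I+B} U_{I+B}$.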

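Proof. From Lemma 32 we obtain $|P_k(B)| \le \kappa_{\mathrm{Skeel}}\left( L_{I+B}^{-1} \right)$,

$$|X_1| e_k \le \kappa_{\mathrm{Skeel}}\left( L_{I+B}^{-1} \right) |B| e_k,$$

and $|X_1| \le \mathrm{tril}^{*}\left( \kappa_{\mathrm{Skeel}}\left( L_{I+B}^{-1} \right) |B| \right)$. Also, Lemma 32 implies

$$P_k\left( B^T \right) = \left( I_{(k)} U_{I+B}^T \right) \left( I_{(k)} U_{I+B}^{-T} \right)$$

and

$$\left| \left[ P_k\left( B^T \right) \right]^T \right| \le \left| U_{I+B}^{-1} I_{(k)} \right| \left| U_{I+B} I_{(k)} \right| \le \kappa_{\mathrm{Skeel}}\left( U_{I+B} \right). \tag{2.99}$$

Hence $|Y| \le |B|\, \kappa_{\mathrm{Skeel}}\left( U_{I+B} \right)$ and $|Y| \le \mathrm{triu}\left( |B|\, \kappa_{\mathrm{Skeel}}\left( U_{I+B} \right) \right)$.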


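Theorem 46 Assume that $A$ and $A + \delta_A$ are nonsingular and have LDU factorizations $A = L_1 D U_1$ and $A + \delta_A = (L_1 + \delta_{L_1})(D + \delta_D)(U_1 + \delta_{U_1})$, respectively. Let $B = L_1^{-1} \delta_A U^{-1}$ and $\widetilde{B} = L^{-1} \delta_A U_1^{-1}$. Then

$$|\delta_{L_1}| \le |L_1|\, \mathrm{tril}^{*}(|F|), \qquad |\delta_D| \le \mathrm{diag}(|G|)\, |D|, \qquad |\delta_{U_1}| \le \mathrm{triu}^{*}\big( \big| \widetilde{F} \big| \big) |U_1|, \tag{2.100}$$

where $F$, $G$ and $\widetilde{F}$ are given by equations (2.28), (2.45) and (2.49), respectively. If $\rho(|B|) < 1$ and $\rho\big( \big| \widetilde{B} \big| \big) < 1$, then

$$|\delta_{L_1}| \le |L_1|\, \mathrm{tril}^{*}\left( F^{b,1} \right), \qquad |\delta_D| \le \mathrm{diag}\left( G^{b,1} \right) |D|, \qquad |\delta_{U_1}| \le \mathrm{triu}^{*}\big( \widetilde{F}^{b,1} \big) |U_1|, \tag{2.101}$$

where $F^{b,1}$, $G^{b,1}$ are the unique solutions of equations (2.94), and $\widetilde{F}^{b,1}$ is the unique solution of the equation

$$\widetilde{F} = \big| \widetilde{B} \big| + \mathrm{tril}\big( \widetilde{F} \big) \big| \widetilde{B} \big|. \tag{2.102}$$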

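Proof. Theorem 25 implies that $|\delta_{L_1}| \le |L_1| |X_1|$, $|\delta_D| \le |\Gamma| |D|$ and $|\delta_{U_1}| \le |Y_1| |U_1|$, where $X_1 = \mathrm{tril}^{*}(F)$, $\Gamma = \mathrm{diag}(G)$ and $Y_1 = \mathrm{triu}^{*}\big( \widetilde{F} \big)$. We have to prove only the last bound. Matrix $\widetilde{F}$ has the form $e_k^T \widetilde{F} = e_k^T \widetilde{B} \big( I + I^{(k)} \widetilde{B} \big)^{-1}$ $(k = 1, \dots, n)$. Condition $\rho\big( \big| \widetilde{B} \big| \big) < 1$ implies

$$e_k^T \big| \widetilde{F} \big| \le e_k^T \big| \widetilde{B} \big| \left| \big( I + I^{(k)} \widetilde{B} \big)^{-1} \right| \le e_k^T \big| \widetilde{B} \big| \big( I - I^{(k)} \big| \widetilde{B} \big| \big)^{-1} = e_k^T \widetilde{F}^{b,1}. \tag{2.103}$$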

Remark 47 Estimates $F^{b,1}$, $G^{b,1}$ and $\widetilde{F}^{b,1}$ are sharp. If $B \le 0$ and $\widetilde{B} \le 0$, then $|F| = F^{b,1}$, $|G| = G^{b,1}$ and $\widetilde{F}^{b,1} = \big| \widetilde{F} \big|$. Such a situation ($B \le 0$, $\widetilde{B} \le 0$) occurs, if $A$ is an M-matrix and $\delta_A \le 0$.

Parts of Theorems 43 and 46 were obtained in [112] in a different form.

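Theorem 48 Assume that $A$ and $A + \delta_A$ are nonsingular and have LDU factorizations $A = L_1 D U_1$ and $A + \delta_A = (L_1 + \delta_{L_1})(D + \delta_D)(U_1 + \delta_{U_1})$, respectively. Let $B = L_1^{-1} \delta_A U^{-1}$ and $\widetilde{B} = L^{-1} \delta_A U_1^{-1}$. Then

$$|\delta_{L_1}| \le |L_1|\, \mathrm{tril}^{*}\left( \kappa_{\mathrm{Skeel}}\left( L_{I+B}^{-1} \right) |B| \right), \tag{2.104}$$

$$|\delta_D| \le \mathrm{diag}\left( |B|\, \kappa_{\mathrm{Skeel}}\left( U_{I+B} \right) \right) |D|, \tag{2.105}$$

$$|\delta_{U_1}| \le \mathrm{triu}^{*}\big( \big| \widetilde{B} \big|\, \kappa_{\mathrm{Skeel}}\big( U_{I+\widetilde{B}} \big) \big) |U_1|, \tag{2.106}$$

where $I + B = L_{I+B} U_{I+B}$ and $I + \widetilde{B} = L_{I+\widetilde{B}} U_{I+\widetilde{B}}$.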

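Proof. The estimates for $\delta_{L_1}$ and $\delta_D$ follow from Theorem 45 and the relation $\Gamma = \mathrm{diag}(G)$. We recall that $\delta_{U_1} = Y_1 U_1$, where $e_k^T Y_1 = e_k^T \widetilde{B} \big[ P_k\big( \widetilde{B}^T \big) \big]^T$. Lemma 32 implies that $P_k\big( \widetilde{B}^T \big) = \big( I_{(k)} U_{I+\widetilde{B}}^T \big) \big( I_{(k)} U_{I+\widetilde{B}}^{-T} \big)$. Hence

$$\left[ P_k\big( \widetilde{B}^T \big) \right]^T = \big( U_{I+\widetilde{B}}^{-1} I_{(k)} \big) \big( U_{I+\widetilde{B}} I_{(k)} \big)$$

and $\left| e_k^T Y_1 \right| \le e_k^T \big| \widetilde{B} \big|\, \kappa_{\mathrm{Skeel}}\big( U_{I+\widetilde{B}} \big)$. This implies $|Y_1| \le \big| \widetilde{B} \big|\, \kappa_{\mathrm{Skeel}}\big( U_{I+\widetilde{B}} \big)$ and $|\delta_{U_1}| \le \mathrm{triu}^{*}\big( \big| \widetilde{B} \big|\, \kappa_{\mathrm{Skeel}}\big( U_{I+\widetilde{B}} \big) \big) |U_1|$.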

Assume that $A$ is symmetric and positive definite. Replace $F^{b,1}$ and $G^{b,1}$ in Theorem 46 by $F^{b,2}$ and $G^{b,2}$, respectively. We then have the weaker estimates

$$|\delta_{L_1}| \le |L_1|\, \mathrm{tril}^{*}\left( F^{b,2} \right) \tag{2.107}$$

and

$$|\delta_D| \le \mathrm{diag}\left( G^{b,2} \right) D. \tag{2.108}$$

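We recall that Sun [236] proved for symmetric positive definite matrices that, under the assumptions $\rho(|B|) < 1$ and $\mathrm{diag}\left( D^{-1} E_{ld} \right) < I$,

$$|\delta_{L_1}| \le |L_1|\, \mathrm{tril}^{*}\left( E_{ld} \left( I - \mathrm{diag}\left( D^{-1} E_{ld} \right) \right)^{-1} D^{-1} \right), \tag{2.109}$$

$$|\delta_D| \le \mathrm{diag}\left( E_{ld} \right) \tag{2.110}$$

with

$$E_{ld} = \left( I - \left| L_1^{-1} \delta_A L_1^{-T} D^{-1} \right| \right)^{-1} \left| L_1^{-1} \delta_A L_1^{-T} \right|. \tag{2.111}$$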

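We now compare estimates (2.107)-(2.108) and (2.109)-(2.110), respectively. We exploit the fact that for any diagonal matrix $D$, $|AD| = |A| |D|$ and $\mathrm{diag}(AD) = \mathrm{diag}(A)\, D$ hold. We can write

$$G^{b,2} = F^{b,2} = (I - |B|)^{-1} |B| = \left( I - \left| L_1^{-1} \delta_A L_1^{-T} D^{-1} \right| \right)^{-1} \left| L_1^{-1} \delta_A L_1^{-T} \right| D^{-1} = E_{ld} D^{-1}$$

and then estimate (2.108) yields

$$|\delta_D| \le \mathrm{diag}\left( G^{b,2} \right) D = \mathrm{diag}\left( E_{ld} \right). \tag{2.112}$$

As $\left( I - \mathrm{diag}\left( D^{-1} E_{ld} \right) \right)^{-1} \ge I$ and $E_{ld} \left( I - \mathrm{diag}\left( D^{-1} E_{ld} \right) \right)^{-1} D^{-1} \ge E_{ld} D^{-1}$, the bound (2.109) satisfies

$$|L_1|\, \mathrm{tril}^{*}\left( E_{ld} \left( I - \mathrm{diag}\left( D^{-1} E_{ld} \right) \right)^{-1} D^{-1} \right) \ge |L_1|\, \mathrm{tril}^{*}\left( E_{ld} D^{-1} \right) \tag{2.113}$$

$$\phantom{|L_1|\, \mathrm{tril}^{*}\left( E_{ld} \left( I - \mathrm{diag}\left( D^{-1} E_{ld} \right) \right)^{-1} D^{-1} \right)} = |L_1|\, \mathrm{tril}^{*}\left( F^{b,2} \right). \tag{2.114}$$

Thus it follows that Theorem 46 improves the $LDL^T$ perturbation result of Sun [236].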

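Theorem 49 Let $A \in \mathbb{R}^{n \times n}$ and $A + \delta_A \in \mathbb{R}^{n \times n}$ be symmetric and positive definite. Assume that $A = R^T R$ and $A + \delta_A = (R + \delta_R)^T (R + \delta_R)$ and let $\widehat{B} = R^{-T} \delta_A R^{-1}$. If $\rho\big( \big| \widehat{B} \big| \big) < 1$, then

$$|\delta_R| \le \Omega^{b,1} |R|, \tag{2.115}$$

where $e_k^T \Omega^{b,1} = e_k^T \big| \widehat{B} \big| \big( I - I^{(k)} \big| \widehat{B} \big| \big)^{-1} I_{(k-1)}$.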


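Proof. Note that $R$ and $R + \delta_R$ have only positive diagonal entries. Let $X = \delta_R R^{-1}$. Then

$$R^{-T} (A + \delta_A) R^{-1} = R^{-T} (R + \delta_R)^T (R + \delta_R) R^{-1} \tag{2.116}$$

can be written in the form $\widehat{B} = X + X^T + X^T X$. It follows that

$$W := \widehat{B} (I + X)^{-1} = X (I + X)^{-1} + X^T,$$

where $X (I + X)^{-1}$ is upper triangular and $X^T$ is lower triangular. Hence $\mathrm{triu}(W) = X (I + X)^{-1} + \mathrm{diag}\left( X^T \right)$ and $\mathrm{tril}(W) = \mathrm{diag}\left( X (I + X)^{-1} \right) + X^T$. The relation

$$(I + X)^{-1} = I - X (I + X)^{-1}$$

implies

$$\widehat{B} (I + X)^{-1} = \widehat{B} - \widehat{B} X (I + X)^{-1}.$$

It is easy to see that

$$\mathrm{diag}\left( X (I + X)^{-1} \right) = \mathrm{diag}\left( \frac{x_{ii}}{1 + x_{ii}} \right), \qquad \mathrm{diag}\left( X^T \right) = \mathrm{diag}(x_{ii})$$

and

$$\mathrm{sign}\left( \frac{x_{ii}}{1 + x_{ii}} \right) = \mathrm{sign}(x_{ii}) \qquad (i = 1, \dots, n).$$

Hence

$$\left| X (I + X)^{-1} \right| \le \mathrm{triu}(|W|), \qquad \left| X^T \right| \le \mathrm{tril}(|W|)$$

and

$$|W| \le \big| \widehat{B} \big| + \big| \widehat{B} \big|\, \mathrm{triu}(|W|).$$

It is clear that $|W| \le F^{b,1}$, where $F^{b,1}$ is the unique solution of the equation $F = \big| \widehat{B} \big| + \big| \widehat{B} \big|\, \mathrm{triu}(F)$. In componentwise form $F^{b,1} e_k = \big( I - \big| \widehat{B} \big| I^{(k)} \big)^{-1} \big| \widehat{B} \big| e_k$ $(k = 1, \dots, n)$. As $|X| \le [\mathrm{tril}(|W|)]^T$, we have

$$|\delta_R| = \left| \delta_R R^{-1} R \right| \le \left| \delta_R R^{-1} \right| |R| \le [\mathrm{tril}(|W|)]^T |R| \le \left[ \mathrm{tril}\left( F^{b,1} \right) \right]^T |R| = \Omega^{b,1} |R|.$$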

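This result is an improvement over Sun's estimate [236], which has the form

$$|\delta_R| \le \mathrm{triu}\left( \big| \widehat{B} \big| \big( I - \big| \widehat{B} \big| \big)^{-1} \right) |R|. \tag{2.117}$$

This claim follows from the inequality

$$e_k^T\, \mathrm{triu}\left( \big| \widehat{B} \big| \big( I - \big| \widehat{B} \big| \big)^{-1} \right) = e_k^T \big| \widehat{B} \big| \big( I - \big| \widehat{B} \big| \big)^{-1} I_{(k-1)} \ge e_k^T \big| \widehat{B} \big| \big( I - I^{(k)} \big| \widehat{B} \big| \big)^{-1} I_{(k-1)}.$$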


2.3.7 Iterations for upper bounds

The componentwise upper bounds can be obtained by fixed point iterations which are monotone in certain cases. The following concept and result is an extension of Ortega and Rheinboldt ([202]).

Definition 50 Let $G : \mathbb{R}^{n \times n} \to \mathbb{R}^{n \times n}$ be a given map and $P \in \mathbb{R}^{n \times n}$ be such that $P \ge 0$ and $\rho(P) < 1$. The map $G$ is said to be a $P$-contraction on $\mathbb{R}^{n \times n}$, if

$$|G(X) - G(Y)| \le P\, |X - Y| \qquad \left( X, Y \in \mathbb{R}^{n \times n} \right). \tag{2.118}$$

Theorem 51 If $G$ is a $P$-contraction on $\mathbb{R}^{n \times n}$, then for every $X_0 \in \mathbb{R}^{n \times n}$, the sequence

$$X_{k+1} = G(X_k) \qquad (k = 0, 1, \dots) \tag{2.119}$$

converges to the unique fixed point $X^{*}$ of $G$ and

$$|X_k - X^{*}| \le (I - P)^{-1} P\, |X_k - X_{k-1}| \qquad (k = 1, 2, \dots), \tag{2.120}$$

$$|X_k - X^{*}| \le (I - P)^{-1} P^k\, |X_1 - X_0| \qquad (k = 1, 2, \dots). \tag{2.121}$$

Assume that X ≤ Y implies G (X) ≤ G (Y ). If W0 ≤ W1 = G (W0 ), then Wi+1 =

G (Wi ) (i = 0, 1, . . . ) is monotone increasing. If V0 ≥ G (V0 ) = V1 , then Vi+1 = G (Vi )

(i = 0, 1, . . . ) is monotone decreasing and Wi ≤ Wi+1 ≤ X ∗ ≤ Vi+1 ≤ Vi (i = 0, 1, . . . ).

Theorem 51 implies the following results.

Theorem 52 Consider equation $W = C + B\, \mathrm{triu}(W, l)$, where $B, C, W \in \mathbb{R}^{n \times n}$ and $l \ge 0$. If $\rho(|B|) < 1$, then for every $W_0 \in \mathbb{R}^{n \times n}$ the sequence $W_{k+1} = C + B\, \mathrm{triu}(W_k, l)$ converges to $W$ and

$$|W_k - W| \le (I - |B|)^{-1} |B|^k\, |W_1 - W_0| \qquad (k = 1, 2, \dots). \tag{2.122}$$

Furthermore, if $B \ge 0$ and $C \ge 0$, then by setting $X_0 = 0$ and $Y_0 = (I - B)^{-1} C$ the iterates $X_{k+1} = C + B\, \mathrm{triu}(X_k, l)$ and $Y_{k+1} = C + B\, \mathrm{triu}(Y_k, l)$ $(k \ge 0)$ are monotone and satisfy

$$0 \le X_k \le X_{k+1} \le W \le Y_{k+1} \le Y_k \le (I - B)^{-1} C. \tag{2.123}$$

If $B \ge 0$ and $C \le 0$, then by setting $X_0 = (I - B)^{-1} C$ and $Y_0 = 0$ the iterates $\{X_k\}$ and $\{Y_k\}$ satisfy

$$(I - B)^{-1} C \le X_k \le X_{k+1} \le W \le Y_{k+1} \le Y_k \le 0 \qquad (k \ge 0). \tag{2.124}$$
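The monotone two-sided iteration of Theorem 52 for the case $B \ge 0$, $C \ge 0$ can be sketched as follows. This is an illustration under assumptions: $\mathrm{triu}(W, l)$ is taken to mean the part of $W$ on and above the $l$th superdiagonal (as `np.triu(W, l)` does), the matrices are random, and $B$ is scaled so that its row sums are at most $1/2$, which gives $\rho(B) < 1$.

```python
import numpy as np

rng = np.random.default_rng(7)
n, l = 5, 0
B = rng.uniform(0, 1, (n, n)) / (2 * n)   # B >= 0 with row sums <= 1/2
C = rng.uniform(0, 1, (n, n))             # C >= 0

def step(W):
    """One fixed-point step W -> C + B triu(W, l)."""
    return C + B @ np.triu(W, l)

X = np.zeros((n, n))                       # lower starting point X_0 = 0
Y = np.linalg.solve(np.eye(n) - B, C)      # upper starting point Y_0 = (I - B)^{-1} C
tol = 1e-12
monotone = True
for _ in range(60):
    X_new, Y_new = step(X), step(Y)
    # X_k <= X_{k+1} <= Y_{k+1} <= Y_k, up to rounding
    monotone &= bool(np.all(X <= X_new + tol)
                     and np.all(Y_new <= Y + tol)
                     and np.all(X_new <= Y_new + tol))
    X, Y = X_new, Y_new

gap = np.linalg.norm(Y - X)                # width of the enclosure of W
```

The two sequences enclose the fixed point $W$, so `gap` gives a computable error bound at every step; with the contraction factor used here it is negligible after 60 steps.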

Proof. For ρ (|B|) < 1 the mapping φ (X) = C+Btriu (X, l) is a |B|-contraction.

For B ≥ 0 the inequality X ≤ Y implies φ (X) ≤ φ (Y ). If, in addition, C ≥ 0, then