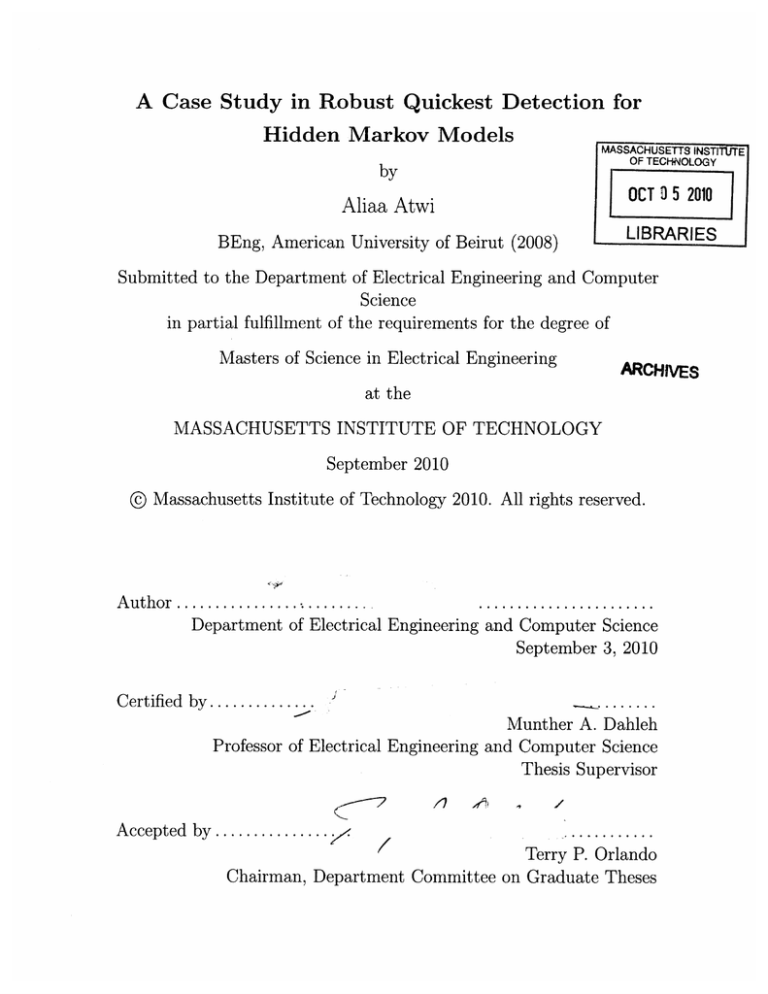

A Case Study in Robust Quickest Detection for

Hidden Markov Models

by

MASSACHUSETTS INSTfiJTE

OF TECHNOLOGY

OCT 3 5 2010

Aliaa Atwi

BEng, American University of Beirut (2008)

LIBRARIES

Submitted to the Department of Electrical Engineering and Computer

Science

in partial fulfillment of the requirements for the degree of

Masters of Science in Electrical Engineering

ARCHIVES

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

September 2010

@ Massachusetts Institute of Technology 2010. All rights reserved.

Au th or ....................

...

.......................

Department of Electrical Engineering and Computer Science

September 3, 2010

Certified by............

.

.......

Munther A. Dahleh

Professor of Electrical Engineering and Computer Science

Thesis Supervisor

/1

A~

Ad-

Accepted by ............

Terry P. Orlando

Chairman, Department Committee on Graduate Theses

2

A Case Study in Robust Quickest Detection for Hidden

Markov Models

by

Aliaa Atwi

Submitted to the Department of Electrical Engineering and Computer Science

on September 3, 2010, in partial fulfillment of the

requirements for the degree of

Masters of Science in Electrical Engineering

Abstract

Quickest Detection is the problem of detecting abrupt changes in the statistical behavior of an observed signal in real-time. The literature has focused much attention

on the problem for i.i.d. observations. In this thesis, we assess the feasibility of two

HMM quickest detection frameworks recently suggested for detecting rare events in a

real data set. The first method is a dynamic programming based Bayesian approach,

and the second is a non-Bayesian approach based on the cumulative sum algorithm.

We discuss implementation considerations for each method and show their performance through simulations for a real data set. In addition, we examine, through

simulations, the robustness of the non-Bayesian method when the disruption model

is not exactly known but belongs to a known class of models.

Thesis Supervisor: Munther A. Dahleh

Title: Professor of Electrical Engineering and Computer Science

4

Acknowledgments

First and foremost, I thank God for all the experiences He put in my way, including

the ones I was too short-sighted to understand and appreciate until much later.

I would like to thank my advisor Professor Munther Dahleh for giving me the

opportunity to join his group, and for being extremely patient and supportive thereafter. I appreciate the lessons I learned from you, both technical and otherwise. Your

insight allowed me to experience a very rewarding research journey, much needed for

a rookie like me.

I would also like to thank Dr. Ketan Savla, for going above and beyond the call

of duty on several occasions to help me with this work. Your feedback and criticism

are highly appreciated, and I look forward to more.

My collegues in LIDS have each contributed to making my time more enjoyable.

I would especially like to thank Mesrob Ohannessian for supporting me in difficult

times and for his willingness to go through philosophical discussions that no one else

would agree to have with me. I would also like to thank Parikshit Shah for expanding

my foodie horizons and for all the fun times we had together. Yola Katsargiri for

being a constant source of positive energy and enthusiasm for me and a lot of people

in LIDS. Michael Rinehart for the long enjoyable chats, tons of advice, and the toldyou-so's that followed. Giancarlo Baldan for being an awesome office mate, friend

and source of encouragement. Sleiman Itani for introducing me to LIDS and being a

valuable friend ever since. I would also like to thank Giacomo Como, Rose Faghih,

Mitra Osqui, Ermin Wei, AmirAli Ahmadi, Ali Parandegheibi, Soheil Feizi, Arman

Rezaee, Ulric Ferner, Noah Stein for the conversations, help and support they have

given me.

Finally, I would like to thank the dearest people to my heart, my family. Mum and

dad for their unconditional love and countless sacrifices. My grandparents for keeping

me in their dawn prayers year after year. And Sidhant, for being my bestfriend and

my rock. I dedicate my thesis to all of you.

6

Contents

13

1 Introduction

2

17

Quickest Detection for I.I.D. Observations

2.1

2.2

Problem Statement . . . . . . . . . . . . . . . . . . . . . . . . . . . .

17

2.1.1

Non-Bayesian Formulations

. . . . . . . . . . . . . . . . . . .

18

2.1.2

Bayesian Formulations . . . . . . . . . . . . . . . . . . . . . .

19

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

20

R obustness

3 DP-Based Bayesian Quickest Detection for Observations Drawn from

23

a Hidden Markov Model

3.1

Setup and Problem Statement . . . . . . . . . . . . . . . . . . . . . .

23

3.2

Bayesian Framework

. . . . . . . . . . . . . . . . . . . . . . . . . . .

24

3.3

Solution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

25

3.3.1

3.3.2

An Infinite Horizon Dynamic Program with Imperfect State

Inform ation . . . . . . . . . . . . . . . . . . . . . . . . . . . .

27

. . . . . . . . . . . . .

28

E-Optimal Alarm Time and Algorithm

4 Implementation of the Bayesian DP-based HMM Quickest Detection

Algorithm for Detecting Rare Events on a Real Data Set

31

4.1

Description of Data Set . . . . . . . . . . . . . . . . . . . . . . . . . .

31

4.2

Proposed Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

33

4.3

Modeling the Data Set using HMMs

. . . . . . . . . . . . . . . . . .

34

4.4

Implementation of the Quickest Detection Algorithm

. . . . . . . . .

37

4.5

Simulation Results

. . . . . . . . . . . . . . . . . . . . . . . . . . . .

5 NonBayesian CUSUM Method for HMM Quickest Detection

6

...............

38

43

43

5.1

Sequential Probability Ratio Test (SPRT)

5.2

Cumulative Sum (CUSUM) Procedure .....................

44

5.3

HMM CUSUM-Like Procedure for Quickest Detection ............

46

5.4

Simulation Results for Real Data Set ...................

47

5.5

Robustness Results . . . . . . . . . . . . . . . . . . . . . . . . . . . .

53

Conclusion

7 Appendix

61

63

List of Figures

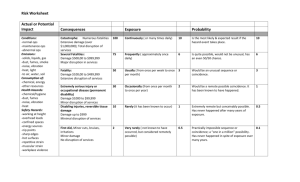

4-1

An Average Week of Network Traffic . . . . . . . . . . . . . . . . . .

4-2

Three Weeks of Data from Termini with a Surge in Cellphone Network

Traffic around Termini on Tuesday of the Second Week . . . . . . . .

32

33

4-3

log{Pr[O I Q(N,)]} for Increasing Number of States of Underlying Model 36

5-1

Detection Time Versus h for 1 week Termini Data Fit with a 6-state

HM M : h c (1, 1200) . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5-2

Detection Time Versus h for 1 week Termini Data Fit with a 6-state

HM M : h E (10,1000) . . . . . . . . . . . . . . . . . . . . . . . . . . .

5-3

48

49

Detection Time Versus h for 1 week Termini Data Fit with a 6-state

H M M : h c (0, 1.6) . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

50

5-4 HMM-CUSUM as a Repeated SPRT: No False Alarm Case . . . . . .

50

5-5

HMM-CUSUM as a Repeated SPRT: Case of False Alarm around h=150 51

5-6 HMM-CUSUM as a Repeated SPRT: Case of No Detection Caused by

Too High Threshold . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5-7

Average Alarm Time Versus h for Locally Optimal 6-State Model Describing Rome Data . . . . . . . . . . . . . . . . . . . . . . . . . . . .

5-8

52

Average Alarm Time Versus h for Different Models in the Disruption

Class. (* = model 1) (o = model 2) (- = model 3)

5-9

51

. . . . . . . . . .

53

Performance of HMM-CUSUM when the Assumed Disruption Model is

that Obtained from the Termini Data and the Actual Disruptions are

Drawn from each Model in C (- = Model from Termini) (* = Perturbed

Model 1) (o = Perturbed Model 2) (- = Perturbed Model 3) . . . . .

56

5-10 Performance of HMM-CUSUM when the Assumed Disruption Model

is "Perturbed Model 1" and the Actual Disruptions are Drawn from

each Model in C (- = Model from Termini) (* = Perturbed Model 1)

(o = Perturbed Model 2) (- = Perturbed Model 3)

. . . . . . . . . .

57

5-11 Performance of HMM-CUSUM when the Assumed Disruption Model

is "Perturbed Model 2" and the Actual Disruptions are Drawn from

each Model in C (- = Model from Termini) (* = Perturbed Model 1)

(o = Perturbed Model 2) (- = Perturbed Model 3)

. . . . . . . . . .

58

5-12 Performance of HMM-CUSUM when the Assumed Disruption Model

is "Perturbed Model 3" and the Actual Disruptions are Drawn from

each Model in C (- = Model from Termini) (* = Perturbed Model 1)

(o = Perturbed Model 2) (- = Perturbed Model 3)

. . . . . . . . . .

59

List of Tables

4.1

Model Parameters of Business As Usual and Disruption States for N,

4.2

Detection Time and Horizon for Different False Alarm Costs when

=

3 39

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

39

4.3

Model Parameters for Business As Usual and Disruption for N, = 4 .

39

4.4

Detection Time and Horizon for Different False Alarm Costs when

Ns = 3

Ns=4 .......

5.1

..

40

..................................

Actual Disruption Emission A's (Transition Matrix and Initial Probability Same as Those from Termini) . . . . . . . . . . . . . . . . . . .

5.2

54

Actual Disruption Emission A's (Transition Matrix and Initial Probability Same as Those from Termini) . . . . . . . . . . . . . . . . . . .

55

7.1

Model Parameters for Business As Usual for N, = 6

. . . . . . . . .

63

7.2

Model Parameters for Business As Usual and Disruption for N, = 6 .

64

12

Chapter 1

Introduction

Quickest Detection is the problem of detecting abrupt changes in the statistical behavior of an observed signal in real-time. Designing optimal quickest detection procedures

typically involves a tradeoff between two performance criteria; one being a measure

of the delay between the actual change point and the time of detection, and the other

being a measure of the frequency of false alarms [15].

The literature has focused much attention on the case of i.i.d. observations before and after the change occurs. However, real applications often involve complex

structural interdependencies between data points which may be modelled using hidden markov models (HMMs). HMMs have been successfully used in wide array of

real applications including speech recognition, economics, digital communications,

etc. A recent paper by Dayanik and Goulding provides a Bayesian dynamic programming based framework to study the quickest detection problem when observations

are drawn from a hidden Markov model. In addition, Chen and Willett suggested

a non-Bayesian framework for studying the problem in [5]. In this thesis, we assess

the feasibility of the suggested methods in detecting disruptions in a real data set,

consisting of a time series of normalized communication network traffic from Rome.

A recent trend in urban planning is to view cities as cyber-physical systems (CPS)

that acquire data from different sources and use it to makes inferences about the states

of cities and provide services to their inhabitants. Cell phone data is available at little

to no cost to city planners, and can be used to detect emergencies that require a rapid

response. A timely response is crucial in such an application to avert catastrophes,

and false alarms may lead to unneeded costly measures. Thus, the problem of using

cell phone data to detect abnormal states in a city can be suitably formulated as a

quickest detection problem. In addition, the nature of cell phone data as data that

reflects periodic human activity indicates that HMMs would provide a more accurate

description of the data than i.i.d. alternatives.

An important consideration in our application is robustness when the disruption

model is not exactly known. This is a realistic assumption to make about rare events

(abnormal states). A recent paper by Unnikrishnan et al [8] addresses this problem

for the case of i.i.d. observations. In this thesis, we examine the issue of robustness

for the non-bayesian framework suggested in [5] through simulations.

The rest of this thesis is oraginized as follows:

* In Chapter 2, we describe the classical quickest detection problem where the

observations before and after the change are i.i.d. samples from two independent

distributions. We outline both Bayesian and non-Bayesian formulations often

used to describe the optimal tradeoff between detection delay and false alarm.

We also describe the minimax robustness criterion in [5] for the i.i.d. case.

" In chapter 3, we present the Bayesian DP-based formulation suggested in [6]

for solving the problem when the observations are sampled from HMMs. We

describe the optimal solution, which is based on infinite horizon dynamic programming with imperfect state knowledge.

" In chapter 4, we discuss the implementation aspects of the Bayesian DP-based

quickest detection algorithm in [6] to detect disruptions in the real data set at

hand. More especifically, we describe the data set and the procedure we follow

for modeling it with HMMs. We then describe the approximations required

to address several computational challenges in the dynamic program describing

the optimal solution. Finally, we present the results of our simulations for the

Rome data.

* In chapter 5, we present the non-Bayesian formulation suggested in [5] which

extends the CUSUM algorithm often used for the i.i.d. case to the case of

observations from HMM. We explain the behavior of the suggested procedure

through simulations, and proceed to examine the performance of the procedure

when the disruption model is not entirely known, but is known to belong to a

class of models.

* Finally, in chapter 6, we summarize our findings and suggest directions for

future work.

16

Chapter 2

Quickest Detection for I.I.D.

Observations

The problem of detecting abrupt changes in the statistical behavior of an observed

signal has been studied since the 1930s in the context of quality control. In more

recent years, the problem was studied for a wide variety of applications including

climate modeling, econometrics, enviromental and public health, finance, image analysis, medical diagnosis, navigation, network security, neuroscience, remote sensing,

fraud detection and counter-terrorism, and even the analysis of historical texts. In

many of those applications, the detection is required to occur online (in real time) as

soon as possible after it happens. Some examples of situations that require immediate detection are: the monitoring of cardiac patients, detecting the onset of seismic

events that precede earthquakes, and detecting security breaches. [15]

This type of problem, known as the "quickest detection problem", will be the focus

of this chapter. In particular, we will address the case when the observed data is i.i.d.

before the change, and continues to be i.i.d. thereafter under a different model.

2.1

Problem Statement

Consider a sequence Z1, Z2 , ... of random observations, and suppose that there is

a change time T > 1 such that, Z1, Z 2 , ...

, ZT_1

are i.i.d. according to the known

marginal distribution Qo, and ZT, ZT+1,...

are i.i.d. according to a different known

marginal distribution Q1. For a given T, the sequence Z 1 , Z 2 ,. ..

dent of ZT, ZT+1,...

,

ZT_1 is indepen-

Our aim is to detect the change in the model describing the

observations as quickly as possible after it happens while minimizing the frequency of

false alarms. A sequential change detection procedure is characterized by a stopping

time T with respect to the observation sequence. Essentially, r is a random variable

defined on the space of observation sequences, where r= k is equivalent to deciding

that the change time T for a certain sequence has occurred at or before time k. We

denote by A the set all of allowable stopping times.

2.1.1

Non-Bayesian Formulations

In Non-Bayesian quickest detection, the change time T is taken to be a fixed but

unknown quantity. Several formulations have been proposed for the optimal tradeoff

between false alarm and detection delay.

The minimax formulation suggested by Lorden in [10] is based on minimizing

the worst-case detection delay (over all possible change points T and all possible

realizations of the pre-change sequence) subject to a false alarm constraint. The

worst-case delay is defined as,

WDD(r) = sup ess sup EQ[(T- T + 1)+IZ1, Z 2 ,...,Z]

T>1

where EQ refers to the expectation operator when the change happens at T and the

pre-change and post-change sequences are sampled from Qo and Q1 respectively. The

false alarm rate is defined as,

FAR(T) =

where E,

[T]

1

EQ [,T]

can be interpreted as the mean time to false alarm. The objective can

then be expressed as:

minWDD(r) s.t. FAR(r) < a-

(2.1)

The optimal solution to [10] was shown by Moustakides [12] to be Page's decision

rule [13]:

n

rc = inf{n 2 1: max

LQ(Zi) > 3}

1<k< nE

~ ~ i=k

(2.2)

where LQ is the log-likelihood ratio between Q1 and Qo and 3 is chosen such that,

E (Zi) > 1.

Another formulation was suggested by Pollak in [14]. Worst-case average delay

was used instead of WDD(T) to quantify delay.

2.1.2

Bayesian Formulations

In Bayesian quickest detection, the change time T is assumed to be a random variable

with a known prior distribution. Let CD be a constant describing the cost for each

observation we take past the change time T, and CF be the cost of false alarm. The

performance measures are the average detection delay:

ADD(r) = E[(T

-

T)+]

where expectation is over r and and all possible observation sequences. The probability of false alarm:

PFA(T) = P(r < T)

The objective is to seekr E A that solves the optimization problem:

inf ADD(T) s.t PFA(T)

TrEA

<

a

Equivalently, the optimization problem can be expressed as:

inf CF.PFA(T) + CD.ADD(T)

-rEA

where C

=

CFCD is chosen to guarantee PFA(r) < a.

Taking the prior on T to be geometric, the optimal policy is given by Shiryaev's

test[18]??:

7,Pt = inf{k > 0 |

7rk >

7T

where irk is the posterior probability that T < k given the observation sequence up

to time k, and 7r* is a threshold that depends on the ratio C =

I*

D.

When C = 1,

=0.

2.2

Robustness

The policies outlined above are optimal assuming that we have exact knowledge

of Qo and Q1. Several applications, however, involve imperfect knowledge of these

distributions. [8] provides robust versions of the quickest detection problems above

when the pre-change and post-change distributions are not known exactly but belong

to known uncertainty classes of distributions Po and P. For the Bayesian criterion,

the version of the (minimax) robust problem suggested in [8] is:

min

sup

PoEPo,P1EP1

E{(T- T + 1)+I

s.t. sup Pr(r < T) < y

P0

P0

For uncertaintly classes that satisfy specific conditions, least favorable distributions (LFDs) can be identified such that the solution to the robust problem is the

same as the solution for the non-robust problem designed for the LFDs. To describe

the conditions, we need to introduce the notion of "joint stochastic boundedness".

Notation: p

>-p'

means that if X ~ po and X' ~ pi, then Pr(X > m) > Pr(X' >

m), for all real m.

Definition (Joint Stochastic Boundedness): Let (o, vi) E Qo x Qi be a pair of

Let L* denote

distributions such that vi is absolutely continuous with respect to 17E5.

the log likelihood ratio between v and Fo.

distribution of L*(X) when X ~ vj,

L*(X) when X

-

j

For each vj E Pj, let p denote the

= 0,1. jio and p, denote the distribution of

4o and X ~ v, repectively. The pair (Qo, Q1) is said to be jointly

stochastically bounded by (UK, v) if for all (vo, v1 ) in (Qo, Q1), p7-

-

,Po and P1

-4

a.

Under certain assumptions on Qo and Q1, the pair (Uo, v) are LFDs for the robust

quickest detection problem. Loosely speaking, the LFD from one uncertainty class is

the distribution that is "nearest" to the other uncertainty class.

The conditions on Qo and Q1 for the Bayesian robust quickest detection problem

are:

" Qo contains only one distribution vo, and the pair (Qo, Q1) is jointly stochastically bounded by (vo, vi).

" The prior distribution of T is geometric.

" L*(.) is continuous over the support of vo.

22

Chapter 3

DP-Based Bayesian Quickest

Detection for Observations Drawn

from a Hidden Markov Model

3.1

Setup and Problem Statement

Consider a finite-state Markov chain M

=

{Mt; t > 1} with d states, and suppose

that the initial state distribution and the one-step transition matrix of M change

suddenly at some unobservable random time T. Conditioned on the change time, M

is time-homogenous before T with initial state distribution y and one-step transition

matrix Wo and is time-homogenous thereafter with initial distribution p and one-step

transition matrix W1 .

The change time T is assumed to have a zero-modified geometric distribution with

parameters 0 and 0, meaning that

T =

Let the process X

=

0,

t,

w. p. 0

w.p. (1 - 00)(1 - 6)'~10

{Xt; t > 1} denote a sequence of noisy observations of M.

The probability distribution of Xt is a function of the current state Mt and whether

or not the change has occured by time t. For instance, Xt can be assumed to have a

poisson distribution with parameter Aij, where i E 1,...,d refers to the value of Mt,

and j = 11t

T

is 0 before the change and 1 thereafter.

We would like to use the noisy observation sequence X to detect the change in

the underlying unobservable sequence M as soon as possible while minimizing false

alarms.

The schemes in chapter 2 were developed assuming independence between observations conditioned on the change time. The Markov assumption of the setup in this

chapter necessitates a different approach for solving the problem.

3.2

Bayesian Framework

The framework presented in this section was presented in [6]. It proceeds as follows:

Define the process Y by Y = (Mt, 1{t

T})

for t > 1. Y = (d, 0) has the interpre-

tation that Mt = d and the change has not occured yet (t < T). Similarly, Y = (d, 1)

means that the change has occured and Mt = d. The state space of the process is

Y = {(0, 0), (1, 0), ...

,7

(d, 0), (0, 1), (1,1 1), ...

,7

(d, 1)}

and is partitioned into

Yo = {(0, 0), (1, 0)..., (d, 0)} and Y1 = {(0, 1), (1, 1),

... ,

(d, 1)}

First, we observe that Y is a Markov process with initial distribution r = ((1 - 0o)p, Oop)

and one-step transition matrix P=

(

0 )Wjow1.

0

We can also see that Y

W1

forms a recurrent class, and the states in Yo are transient. Therefore, the change

time T of M is the time till absorption of Y in Y1 :

T = min{t > 1;Yt

Yo}

The quickest detection problem for HMMs thus reduces to the problem of using

noisy observation to detect absorption in an unobservable Markov chain as quickly

as possible with a false alarm constraint.

The desire to detect changes quickly is reflected in a cost a paid for every observation taken after T without detecting a change. False alarms are penalized by a cost b

for declaring a change before T. 1 The Bayes' risk associated with a certain decision

rule T is thus:

p(r) = a.E [(T - T)+] + b. Pr(T < T)

(3.1)

where expectation is taken over all possible sequences X and Y and all change times

T.

The objective is to solve the following optimization problem:

inf

3.3

[t(r)

(3.2)

Solution

For every t > 0, let Ht = (Ut(y), y E Y) be the row vector of posterior probabilities

t(y) = Pr{Yt= y X, X 2 ,...X}, y E Y

that the Markov chain Y is in state y E Y at time t given the history of the observation

process X.

The process {f1t, t > 0} is a Markov process on the probability simplex state space

P = {7r E [0, 1]1Y; Z 7r(y) = 1} with

yY

=

UItPdiag(f(Xt+1))

(33)

fltPf (Xt+i)

where f(Xt+1) is the row vector of emission probabilities of Xt+1 under y E Y, and

'The framework suggested in [6] allows different states of Y to be associated with different costs

for delay and false alarm; however, in this thesis, we assume the cost to be uniform across all states.

diag(f(Xt+1)) is the diagonal matrix formed using the elements of f(Xt+1).

Let g(7r) be the expected delay cost for the current sample over all possible underlying y E Y given the past. Similarly, define h(ir) as the expected cost of false

alarm over y E Y given our knowledge of the past if change is declared at the current

sample. The two quantities are given by:

g(r)

=

ar(y)

h(7r)

=

br(y)

yE Yo

The Bayes' risk can then be expressed as

P(T) = E

[

g(Ut) + h(H(T)) , for all T E A

t=0

where the expectation is taken over all possible observation sequences X and underlying markov chains Y.

[6] suggests that the optimal cost of the problem (3.2) is a function of TI, the initial

state probability distribution of Y. More especifically,

p* =

inf

p(T) = Jn)

where

g( 7It + h(Ur)

J(r) = inf E,

t=0

J(ir) is the value function of an optimal stopping problem over the Markov process

Hi, and E, is the expected value over all sequences X and Y given that HO = 7r.

[6] proceeds to prove that the value function satisfies the following Bellman equation:

J(7r) = min{h(7r), g(wr) + E [J(7r') r] }

where

7r'

(3.4)

is obtained from 7r through the update equation (3.3), and expectation is

taken over HU'. This will be explained in more detail in the next subsection.

3.3.1

An Infinite Horizon Dynamic Program with Imperfect

State Information

Equation (3.4) captures the tradeoff between immediate and future costs at the heart

of dynamic programming(DP). Dynamic programming is concerned with decisions

made in stages, where each decision poses an immediate cost, and influences the

context in which future decisions are made in way that can be predicted to some

extent in the present. The goal is to find decision making policies that minimize the

cost incurred over a number of stages. In DP formulations, a discrete-time dynamic

system with current state i is assumed to transition to state

dependent on the control u. A transition from i to

j

j

with probability pij (u)

under u poses a cost pc(i, u, j)

in the present and a cost J*(j) in the future, where J*(j) is refered to as the optimal

cost-to-go of state j over all remaining stages. The costs to go can be shown to satisfy

a form of Bellman's equation:

J*(i) = min E [pc(i, u, j) + J*(j) I i, u] , ,for all i,

(3.5)

U

where the expectation is taken over

j. [4]

The DP formulation just described assumes perfect knowledge of the system's

states. Our setup, however, grants us access only to noisy observations of the states

Y, making this a DP problem with imperfect state information. [3] shows that this

type of problems can be reduced to a perfect-state-knowledge DP where the state

space consists of the partial information available at every time. In our application,

the partial information at time t is the vector of posterior probabilities 7rt obtained

from the observation sequence X 1 , X 2 , ...Xt and the initial state distribution

70.

Equation (3.4) is clearly a DP Bellman equation of the same form as (3.5) where

the state space is the 2d-dimensional simplex P. The decision u is either to"declare

a change and stop" or "continue sampling". If we choose to continue sampling, we

incure a cost pc(r, continue, 7r')

=

g(ir) in the present representing the expected

current sample cost (over the possible underlying states of Y given the history so

far). In addition we incur E [J(ir') Iff] in the future where expectation is taken over

the next posterior H' (a function of the next random noisy observation). On the other

hand, if we choose to declare a change, we incur a cost pc(wr, stop,7r') = h(7r) in the

present representing the expected cost of false alarm, and no future costs.

The Bellman equation (3.4) has an infinite horizon making it computationally

infeasible.

An approximate solution can be obtained by solving the problem for

only N(e) stages, where E corresponds to a tolerable error margin. Let pN(e) be the

optimal cost for the finite horizon problem with N(E) stages. [6] indicates that for

every positive e, there exists N(E) such that pN(,)

N(E) = - -+

-

1* < e. More especifically,

I - (1- 6)Wo-1(y,y'))

This expression is consistent with the intuition that higher false alarm costs, lower

sampling costs, higher precision, and higher expected time of change value each call

for a longer horizon. pN(e) is the N-stage value function evaluated at 7ro = rq. The

N-stage finite horizon value function for a starting posterior probability 7rt can be

obtained through the following recursion:

Jk+i(rt) = min {h(wt), g(7rt) + Ext,, (J(Ut+1)

where

j

= 1, 2, -

I It

= 7tt)}

(3.6)

, N(E) and J 0 := h, and rt+1 can easily be calculated from 7t

for a given value of Xi+1 using (3.3). Simply stated, this equation uses the optimal

cost-to-go calculated for a horizon k to calculate the optimal cost-to-go for a horizon

k +1.

3.3.2

E-Optimal Alarm Time and Algorithm

We recap that the problem is to detect a change in the underlying Markov model

through observing noisy samples of it. Every sample past the change point where the

change goes undetected poses a cost a, and false alarms pose a cost b. The algorithm

for detecting the change suggested in [6] is as follows:

Starting with observation X1 and ro = rl, calculate the updated posterior 7ri from

r0 and X 1 using (3.3). If r1 belongs to the region

FN(e) =

{7r E P; JN(E)(7)

=

then declare that a change has occured and stop sampling. Otherwise, repeat for

t = 2 by sampling X 2 , and calculating r2 from 7ri and X 2 , and so on.

The region FN(e) is calculated offline only once for a set of model parameters

and costs.

[6] shows that the region is a non-empty closed convex subset of the

2d-dimensional simplex P that decreases with increasing N(E), and converges to F

(the infinite horizon optimal stopping region) as N -* oc. Calculating this region in

practice poses problems that will be addressed in the next chapter.

30

Chapter 4

Implementation of the Bayesian

DP-based HMM Quickest

Detection Algorithm for Detecting

Rare Events on a Real Data Set

In this chapter, we propose a framework for detecting distruptions in real correlated

data sequences based on the HMM quickest detection algorithm described in chapter

3. We proceed to test the framework on a real life data set representing cell phone

traffic in Rome.

4.1

Description of Data Set

A recent trend in urban planning is to view cities as cyber-physical systems that

acquire data from different sources and use it to make inferences about the states

of cities and provide services for their inhabitants. Wireless network traffic data is

available at little to no cost to city planners [7], and can be used to detect emergencies

that require a rapid response. A timely response is crucial in such an application to

avert catastrophes, and false alarms may lead to unnecessary costly measures. Thus

the problem of using network traffic to detect disruptions in a city can be suitably

formulated as a quickest detection problem. In addition, the nature of this kind of

data as one that reflects periodic human activity indicates that HMMs would provide

a more accurate description of the data than i.i.d. alternatives.

100 -

0

200

4WO

6W

BOW

low

1200

14WU

16W0

16W

2")

Figure 4-1: An Average Week of Network Traffic

In 2006, a collaboration between Telecom Italia (TI) and MIT's SENSEable City

Laboratory allowed unprecedented access to aggregate mobile phone data from Rome.

The data consists of normalized numbers of initiated calls in a series of 15 minute

intervals spanning 3 months around Termini, Rome's business subway and railway

station. The data exhibits differences between weekdays, fridays and weekends, with

the latter exhibiting lower overall values. All days, however, share a pattern of rapid

increase in communication activity between 6 and 10a.m. followed by a slight decrease

and another increase after working hours. Night time exhibits the lowest level of

traffic[7]. This activity is depicted in figure 4-1. .

A disruption of the periodic pattern is known to have occured in week 8 of the

interval covered, leading to a surge in communication traffic, as shown in figure 4-2.

In the following section we propose a method to detect such disruptions in correlated

data using the HMM quickest detection algorithm in [6]. We test the performance of

the algorithm on the Rome data assuming perfect knowledge of the business as usual

and disruption models. This unrealistic assumption will be subsequently adressed in

chapter 5.

Figure 4-2: Three Weeks of Data from Termini with a Surge in Cellphone Network

Traffic around Termini on Tuesday of the Second Week

4.2

Proposed Setup

Consider the two-state Markov chain S

=

{St; t > 0}, and let St denote the state of

Rome at time t where St E {0, 1} for all t. St = 0 indicates that at time t, the data

is in "business as usual", and St = 1 indicates a disruption. Assume that the initial

probability of being in state St = 1 is 0, and that the transition matrix is

U

=

Oa

1 - Oa

6b

1 -

6b

Assume without a loss of generality that the chain starts with state Si = 0. The

time T until the chain enters state 1 for the first time is a zero-modified geometric

random variable with parameters 0 and 9a. Detecting a disruption as fast as possible

is equivalent to applying a quickest detection algorithm on real observations where

the change time is T. The model parameters W , W and the emission probabilities

for the pre/post-change models are obtained from training data. Emission probability

distributions describing the normalized initiated call counts for different states of the

city will be assumed Poisson. Upon detecting a disruption, the algorithm is restarted

while switching the pre-change and post-change HMMs to detect the end of the

disruption and return of business as usual. The new change time is a zero-modified

geometric random variable with parameters 01 and 6b, where 01 is the probability that

the first observation after declaring a disruption is a business as usual observaton.

4.3

Modeling the Data Set using HMMs

The quickest detection algorithm in [6] assumes that the HMM model describing

the observation sequence is known before and after the change. Eventhough this

assumption may be unrealistic for the disruption state, the description for business

as usual state can be obtained fairly accurately through fitting an HMM in long

training sequences from the past. In this section, we focus on the implementation

aspects of fitting an HMM in the business as usual Rome data obtained from Termini.

For a given number of underlying states N, the parameters that describe a hidden

markov model with Poisson distributions are [17]:

" The transition probability matrix A of the underlying Markov chain, where

as = Pr [st+1 = j | st = i] and st is the underlying state at time t.

" The emission probability vector B such that B(i) is the parameter of the Poisson

random variable describing the observations under state i of the underlying

Markov chain.

* The vector I of initial probabilities of the underlying states.

The joint probability and defining property of an HMM sequence is:

t-1

Pr(si,s2, ,stzi,x 2,...,xt) =1 Ijasksk+1

.k=1

si

[ Poiss(x,

t

-1=1

where Poiss(xl, sl) refers to the Poisson pdf at x, with parameter B(si).

We would like to solve for the model Q = (A, B, I, N,) that best describes a finite

training sequence of business as usual data 0 for a fixed number of states N,. There

is no known way to analytically solve for the model Q with maximizes Pr(O IQ) [17].

However, starting with an intial "guess" model Q1 = (A1 , B 1 , I1, N,), we can choose

a model Q2

=

(A 2 , B 2 , 12, N,) that locally maximizes Pr(O I Q) using an iterative

procedure like Baum-Welch [9] (a variant of the EM Algorithm [11]). In this work,

we used functions from the "mhsmm" package in the statistical computing language

R [16] to implement the Baum-Welch algorithm for Poisson observations. Note that

this approach assumes the number of states to be fixed by the user and provides

locally optimal solutions.

The choice of initial model parameters in the Baum-Welch algorithm has a significant effect on the optimality of its final estimate. In particular, the choice of

emission probability parameters are essential for rapid and proper convergence of

the re-estimation formulas used [17]. In our implementation, we made the following

choices for the initial model:

" All underlying states are initially equally likely.

" All transitions are allowed and equally likely.

" The parameters of the different Poisson emission probabilities were obtained by

sorting the observation sequence, dividing it into N, bins of equal length, and

averaging the observations in each bin resulting in B.

To determine the number of states N, that best describes our data sequence 0,

we ran the Baum-Welch algorithm on 0 for increasing number of states N,. Pr(O I

Q(N,)) where Q(N,) is the locally optimal model for N, states, was taken as a measure

of accuracy of fit.

The probability of an observation sequence 0 of length len under a given HMM

Q=(A,B,I) can be calculated using the Forward Algorithm [2] [1]. Consider the forward

variable defined by:

at(i) = Pr[O102...Ot, St = i

I Q]

We can find at(i) recursively, as follows:

" Initialization: ai(i) = I(i).Poiss(01 , B(i)) for 1 < i < N,.

" Induction: at+1(j) =

1 < t < len.

(

at(i)ai) Poiss(Ot+

1 , B(j)) for 1 <

j <

N, and

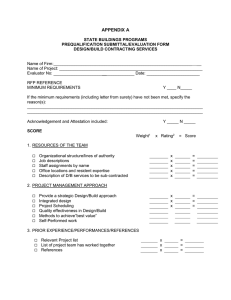

x 10,

-2 -

-2.5-

00

-35-

-4-/

-4.5

-5

2

4

6

8

10

16

14

12

18

Ns

Figure 4-3: log{Pr[O 1Q(N,)]} for Increasing Number of States of Underlying Model

* Termination: Pr(OQ) =

ENi aen(i)

In practice, the forward variable "underflows" for larger t, meaning that it heads

exponentially to 0 exceeding the precision range of essentially any machine [17]. A

standard procedure to tackle this issue is to scale at(i) by a factor independent of i:

Ct

1

NO

Ei at(i)

Then we can calculate the desired probability as:

len

log[Pr(O | Q)] =

log(ct)

t=1

Figure 4-3 is a plot of log{Pr(O 1 Q(N,))} for N, between 2 and 18. Note that

increasing the number of states initially results in increasing accuracy, but beyond

N, = 6 there is no significant gain in increasing N,.

In many real life scenarios, the training data available for HMM fitting has missing

samples creating the need for interpolation. The data set available to us had missing samples spanning days at a time, making interpolation an unfavorable option,

especially knowing the pattern of variation within each day. To preserve the periodic

pattern of the data described in [7], we chose to address the issue by replacing missing

samples with the average value corresponding to available samples in the same day

of the week (on a different week) and time of the day as the missing sample.

4.4

Implementation of the Quickest Detection Algorithm

For a given HMM description of the data, the quickest detection algorithm cosists of

two parts: one that needs to be executed only once and offline to calculate the region

pN(,) for the given model parameters, and one that uses ]pN(e) to detect changes in

different data sequences from the model in real time.

Despite the favorable properties of region FN(e) (namely convexity and closedness), its boundary cannot generally be expressed in closed form [6].

determination of

pN(e)

involves computing

jN(e)

(ir) for all

7r

The offline

E P. Since P is the

2d-dimensional probability simplex, this compuation is intractable. To get around

this problem, we resort to a simple form of cost-to-go function approximation, where

the optimal cost-to-go is computed only for a set of representative states.

The representative states are chosen through a discretization of the 2d-dimensional

probability simplex. The optimal cost to go is then calculated offline for all posterior

probability vectors in the resulting grid. However, for a posterior probability vector

7 in the grid, the calculation requires knowing the value of the optimal cost-togo function at all states (posterior probability vectors) accessible from r. Nearest

neighbour interpolation is used to estimate the cost-to-go values corresponding to

accessible states outside the grid.

Determining FN(e) poses another challenge relating to the calculation of the

factor at stage k+1: Ex,, (Jk(nt+i) |

It =

Q-

7rt). Even when the values of Jk(Hlt+1)

for different Ut+1's are known, the expectation is taken over Xt+1 that can take

countably infinite values. Conditioned on the underlying state Yt+1 , Xt+1 is a Poisson

random variable with a parameter A(y) that depends on the value y of Yt+1. For that

reason, we approximate the Q-factor for a certain Hit = 7t by the weighted average of

Jk(Ut+ 1 ) evaluated at a well-chosen set SA of values of Xt+1 . More specifically, the

approximate Q-factor is:

ZkeSA

app

Pr(Xt+1 = X

I 7rt)jk(7rt+1)

Pr(Xt+1 E A I7rt)

The set A is chosen to include points where most of the Poisson p.d.f. is centered for

parameters A(y) where y E Y.

4.5- Simulation Results

In this section, we show through simulation that the algorithm outlined in [6] can

indeed be utilized to detect the disruption shown in figure 4-2 despite several approximations taken to facilitate its running. However, the computational complexity

of the algorithm renders it extremely impractical, especially for rich data sets whose

description requires a high number of markov states.

In section 4.3, we showed that the number of underlying states required to model

the data set with "reasonable" accuracy is N, = 6. However, the discretization step

described in 4.4 results in exponential computational complexity in the number of

states N,. For simplicity of computations, we start by modeling the data with a three

state HMM, hoping that the model captures enough of the properties of the business

as usual and disruption states to detect the disruption. The number of underlying

states is then increased if needed in order to achieve better detection.

A locally optimal three state HMM for the Termini data is shown in table 4.1.

The initial model for the HMM fitting was obtained as per the guidelines in section

4.3 .

We run the change detection algorithm in the beginning of the disruption week.

Model Parameter

Inital Probability

Transition Matrix

Business As Usual Model

[1 0 0]

0

0.9851 0.0149

0.0167 0.9737 0.0096

0

0.0089

0.9911

Disruption Model

[1 0 0]

0.9522 0.0478 0.0000

0.0905 0.8000 0.1095

0.0000

0.1191

0.8809

[197 232 249]

[3 27 107]

Emission A's

Table 4.1: Model Parameters of Business As Usual and Disruption States for N, = 3

The disruption week consists of 2016 samples, where the disruption starts on Tuesday

around sample 406 (with a value of 181).

Horizon N(E)

31

301

3034

6143

False Alarm Cost

0.5

1

10

20

Detection Sample

404

405

405

405

Table 4.2: Detection Time and Horizon for Different False Alarm Costs when N, = 3

With a discretization step size of 0.25, sample cost of 1, error margin e=3 (equivalent to detecting on average three samples after a disruption has started, if no false

alarms are allowed), and a prior 0 = 1/300 (equivalently expressed as expected time

till disruption being 300 samples starting from business as usual), we obtain the

detection times in table(4.2) for different false alarm costs.

Model Parameter

Inital Probability

.

.

Transition Matrix

Business As Usual Model

[1 0 0 0]

0

0

0.9869 0.0131

0

0.0153 0.9683 0.0164

0

0.0279 0.9510 0.0210

0

Emission A's

0

0.0127

[ 2 19 58 117]

0.9873

Disruption Model

[10 0 0]

0.0727 0.9273 0.0000 0.0000

0.0000 0.9502 0.0498 0.0000

0.0000 0.0907 0.7992 0.1101

0

0.0000

0.1217

[181 198 232 250]

Table 4.3: Model Parameters for Business As Usual and Disruption for N, = 4

The number of states of the underlying model was then increased to N, = 4,

keeping all other parameters constant, resulting in the model in table 4.3, and the

0.8783

False Alarm Cost

0.5

1

10

20

Horizon N(E)

134

267

2700

5467

Detection Sample

404

406

406

406

Table 4.4: Detection Time and Horizon for Different False Alarm Costs when N, = 4

detection times in table 4.4.

For N, = 3, we observe that a false alarm occured for all values of CF/CD chosen,

resulting in a cost of 20. The value observed at 405 is 171, which is generally assumed

to be a business as usual value for weekday peak time traffic.

However, loosely

speaking, this value is considered to be at the boundary of allowable business as

usual observations, since the highest A in the business as usual state for N, = 3 is

107. Meanwhile the lowest A in the disruption state is 197. It is then understandable

that for N,

=

3 and for a cost of false alarm in the range we chose, this boundary case

is resolved by detecting a disruption. Note that running the algorithm for CFCD high

enough to result in correct detection was practically infeasible on a regular machine.

Coincidentally, for this particular case, the false-alarm-causing value (171) was close

enough to the actual disruption that the behavior was acceptable for real applications.

Increasing N, to 4 helped resolve the false alarm issue discussed above for a computationally practical cost CF/CD. We also notice that the detection accuracy was

almost independent of the false alarm cost when the cost of false alarm is greater

than the sample cost. This behavior is not typical of the quickest detection algorithm, which is generally expected to exhibit variation in performance with varying

cost criteria. We hypothesize that this behavior was observed due to a much higher

likelihood of the disruption observations under the disruption model than the business as usual model. "Much higher" is measured in terms of the difference between

sample and observation cost.

The fact that we were able to detect the change with N, = 4 indicates that the

difference between business as usual and disruption data is significant enough that

even a crude model was enough to describe it.

The computational complexity of the offline procedure with the approximations

suggested is in the oder of

1d

(N(e) + Np)

dstep

+ 1

where dstep is the probability grid step size, and N, the number of poisson samples

used to approximate the Q-Factor.

42

Chapter 5

NonBayesian CUSUM Method for

HMM Quickest Detection

A different approach for HMM-quickest detection was proposed by Chen and Willet

[5]. Their approach is non-Bayesian and mimicks the CUSUM test for i.i.d. observations. In this chapter, we present the algorithm suggested in [5], and compare it

with the previously discusses approach in [6], especially from the point of view of

feasibility for real data sets. We then shed light on the robustness of the algorithm

when the disruption model is not entirely known.

5.1

Sequential Probability Ratio Test (SPRT)

The sequential probability ratio test (SPRT) was devised by Wald [19] to solve the

following sequential hypothesis testing problem. Suppose that X 1 , X 2 ,...,X, is a

sequence of observations arriving continually (i.e. n is not a fixed number), and

suppose that H (K) is the hypothesis that the observations are i.i.d. according to

distribution PH (PK). The purpose is to be able to identify the correct hypothesis as

soon as possible, while minimizing decision errors.

For a given n, the likelihood ratio is

L (n) =

Pk(X, ... , Xn)

PH(X1, ... ,X)

=

PK(X1) n PK(Xi I Xi-1 , ... , X1)

1

-

PH(X1) =2 PH(X |

X'_ 1 ,..

.

,X 1 )

The SPRT defines the following stopping rule:

N* = min{n > 1 : L(n) > A or L(n)

The decision made is H if {L(n)

B}

A} and K otherwise. The thresholds A and B are

chosen to meet a set of performance criteria. The performance measures include, as

in the traditional hypothesis testing problem, the probability of error (Pe) and rate

of false alarm (Pf):

Pe = PK(L(N*)

B)

Pf = PH(L(N*) >A)

The design parameters A and B can be determined in terms of the target Pe and

Pf using Wald's approximation, giving:

A

B

1 - leP

Pf

(5.1)

Pf

(5.2)

1- Pe

In addition to minimizing P, and Pf, the sequential nature of the problem poses

two more performance criteria: the average run length (ARL) under H and K. The

SPRT is optimal in the sense that it minimizes ARL under both H and K for fixed

Pe and Pf.

5.2

Cumulative Sum (CUSUM) Procedure

In section 2.1.1, we mentioned that the optimal stopping time for the minimax formulation of the i.i.d. non-Bayesian quickest detection problem is given by Page's

decision rule (2.2).

For pre-change and post-change probability measures fH and fK, Page's test can

be easily reformulated in terms of the following recursion, known as the CUSUM test

[13]:

N* = min{n > 1 : S, 2 h}

where

S. = max{0, Sn-_

+ g(X.)}

and

g(X,) = ln

_____

(fH (Xn)

h is a parameter than can be chosen according to our desired tradeoff between false

alarm and detection delays.

Essentially, the CUSUM test is a series of SPRTs on the two hypotheses H (observations are due to fH) and K (observations are due to fH) where the lower limit A is

0 and the upper limit B is h. Every time the SPRT declares hypothesis A as its decision, we can assume that the change has not occured yet, and the SPRT is restarted.

This pattern continues until the first time that the SPRT detects hypothesis K (hence

crossing the threshold h for the first time).

The main requirement for CUSUM-like procedures to work is the "antipodality"

condition:

E[g(Xn) H] < 0

E[g(Xn)

K]

> 0

For g(X,) = ln(K xn)), those conditions follows from the non-negativity of condi-

tional KL distance.

The only performance measures for CUSUM are the ARL under H and K, since

detection is guaranteed to happen for all "closed" tests (Pr[N*< oc] = 1). CUSUM

exhibits minimax optimality in the sense that, for a given constraint on the delay

between false alarms, it minimizes the worst case delay to detection.

5.3

HMM CUSUM-Like Procedure for Quickest

Detection

Designing CUSUM procedures involves finding a relevant function g(X,) that satisfies

Page's recursion and the antipodality principle. [5] proposes using the scaled forward

variable described in section 4.3 for defining such a function for quickest detection on

HMM observations. For the k'th sample after the last SPRT,

g(n; k)(Xn) = In1n

f(Xn

I

.Xk)

(fH(Xn I Xn_1,7

Xk))

The CUSUM recursion is then

Sn = max{O, Sn_1 + g(n; k)}

To calculate fK(X,

I

Xn- 1 , ... ,Xk) and fH(Xn I Xn- 1, ... ,Xk), the scaled forward

variable &t(i) is used:

N

fH(X I X_1, ... ,X1) =

SaHt(i)

N

fK(Xt I Xt1, ... ,X1)

=

ZcKt(i)

The antipodality property for this procedure is proven in [5] and is again directly

related to the non-negativity of conditional KL-distance. It is also shown that the

average detection delay is linear in h, whereas the average delay between false alarms

is exponential in h, just like the behavior exhibited for the i.i.d.

CUSUM. This

"log-linear" behavior is the main reason why CUSUM-like algorithms work.

More especifically, the average detection delay (D) and time till false alarm (T)

are given by:

D~z

h

D(fK 1 fH)

eh

where B is the expected value of a single SPRT test statistic B knowing that a correct

detection for H has occured. B can be obtained through simulation.

5.4

Simulation Results for Real Data Set

In this section, we discuss the results of running the HMM-CUSUM algorithm on the

data from Termini. The algorithm does not require any priors to be given, except for

the HMM before and after disruption and a threshold h. The models parameter used

correspond to N, = 6 and are given in the Appendix. The threshold h determines the

desired tradeoff between the frequency of false alarms and average detection delay.

Higher h results in exponentially lower frequency of alarms, and a linear increase in

detection delay.

Figures 5-1, 5-2 and 5-3 show the alarm times obtained from running HMMCUSUM on Termini data for different values of h. In figure 5-3, we observe that

small h (h < 1.5) leads to false alarms. The time between false alarms, however,

increases exponentially as h increases (figure 5-1), and for 1.5 < h < 1100, HMMCUSUM consistently detected the disruption but with linearly increasing delay (figure

5-2). Finally, beyond h = 1100, the alarm time went to infinity (figure 5-1), meaning

that the disruption went undetected.

This behavior can be explained by plotting the trajectory of S, the cumulative

sum, for the Termini data under different values of h from the intervals outlined

above. Figure 5-4 shows the behavior of the cumulative sum in the "detection region".

Initially, the data showed an overwhelming likelihood of being "business as usual"

which lead to restarting the SPRT with the negative Sn reset to 0. As n approached

Monday's peak traffic time, the likelihood of disruption increased (since high traffic

180016001400S1200 -

E

1

0

8-0

cc

o-

600400200--

:E

HM

01

0

Eh

(1, 120

200

400

600

h

800

1000

1200

Figure 5-1: Detection Time Versus h for 1 week Termini Data Fit with a 6-state

HMM: h E (1, 1200)

is characteristic of the disruption) leading to a positive drift in the direction of h, but

the increase was not enough to cross the threshold and raise an alarm. Eventually,

Tuesday's surge in traffic raised the likelihood of disruption to the point of crossing

the threshold, leading to detection. Figure 5-5 shows the behavior of S,, when h is

in the false alarm region. In this case, the positive drift caused by Monday's peak

traffic was enough to cross the low threshold, and cause a false alarm. Finally, when

h is too high, the drift caused by 45 disruption samples is not enough for S" to cross

the threshold, and the consequent business as usual samples only serve to point the

drift away from the threshold towards 0, as shown in figure 5-6.

Figure 5-7 shows an approximate average alarm time for data sampled from the

Termini disruption model (Appendix) under different values of h. The average alarm

time for each h was obtained through Monte Carlo simulations. To guarantee detection, on average, the value of h required is greater than 7.5, much higher than the

corresponding value (> 1.5) required for the specific Termini sequence. One explanation for that is that the Termini sequence was used to define business as usual and

440-

435-

430E

4)

E

425-

< 420-

415-

410~

405

I

0

100

200

300

400

600

500

h e (10,1000)

700

800

900

1000

Figure 5-2: Detection Time Versus h for 1 week Termini Data Fit with a 6-state

HMM: h E (10, 1000)

disruption states, and is thus a "perfect fit" to the model and decision rule, clearly

depicting the variation between business as usual and disruption. This behavior is

not necessarily typical of all sequences from the model.

In the context of disruption detection for real data, the HMM-CUSUM algorithm

exhibits several advantages as compared to the DP-based bayesian framework suggested in [6]:

" It gives instant "real time" results without the need to run lengthy computations

offline.

" Its implementation was fairly simple and did not involve some of the issues

encountered with the DP-based algorithm (infinite state space, infinite horizon

and a cost-to-go function and Q-factor without a closed form).

" Increasing accuracy in HMM-CUSUM did not lead to any aditional computational complexity and was simply achieved by increasing the threshold h, unlike

...

..

.....

3002502001501005000

Figure 5-3: Detection Time Versus h for 1 week Termini Data Fit with a 6-state

HMM: h E (0, 1.6)

2.5-

2

1.5-

0.5-

0

0

50

250

300

200

Sample Number n

n s 150 corresponds to Monday peak

n ~406 corresponds to the disruptive peak on Tuesday

100

150

350

400

Figure 5-4: HMM-CUSUM as a Repeated SPRT: No False Alarm Case

" OAMi" -

-

---

-

0

50

100

--

200

250

300

Sample Number n

nr 150 is the Monday peak

n ~406 isthe disruptive peak on Tuesday

150

-

-

-

350

400

Figure 5-5: HMM-CUSUM as a Repeated SPRT: Case of False Alarm around h=150

1000 -

500 F

i

j

200

400

600

1000

1200

800

Sample Number n

1400

1600

1800

Figure 5-6: HMM-CUSUM as a Repeated SPRT: Case of No Detection Caused by

Too High Threshold

..............

..........

5 K50

............

..............

..............

...............

....

..

......

- I............

.....

............

.....

.....

......

.....

.....

...

....

........

....

.............

.........

Figure 5-7: Average Alarm Time Versus h for Locally Optimal 6-State Model Describing Rome Data

in the bayesian framework where increasing CF/CD resulted in a longer horizon

and more computations.

" The CUSUM procedure scales well with the increasing number of underlying

model states. The DP-based algorithm has exponential complexity in the number of states under the proposed discretization scheme.

" The HMM-CUSUM does not require a look-up table (memory) for its implementation, as does the DP-based algorithm (for storing the decision region).

The Bayesian approach in turn has the following advantages when compared to

CUSUM:

* The Bayesian approach has rigorous theoretical guarantees on its performance,

whereas HMM-CUSUM's performance guarantees often include approximations.

" The user is given more parameters to control the tradeoff between false alarm

and detection delay. In addition to CF and CD, the user can control the prior

belief on the time until disruption and the probability of starting in the disruption state.

:

-

...

........

.......................................

4;

N N MEN ! wNwiO

-_

...............

* The costs CF and CD are intuitively meaningful, unlike the threshold h which

can represent vastly different tradeoff for different problems.

Robustness Results

5.5

In this section we examine, through simulation, the robustness of the HMM-CUSUM

procedure when the disruption model is not entirely known.

440

420

400

380

360

E

340

320

300

280

260

240 L

0

5

10

15

20

h*1 00/30

h e [0.01,12]

25

30

35

40

Figure 5-8: Average Alarm Time Versus h for Different Models in the Disruption

Class. (* = model 1) (o = model 2) (- = model 3)

First, we study the effect of incorrectly assuming that the disruption model is

the one obtained from Termini data (Appendix) when the actual disruption model

is as described in table 5.1. The actual models have the same underlying transition

matrix and initial state distribution as the assumed model, with progressively smaller

Model

1

2

3

Emission A's

161 166 177 194 213 228

141 146 157 174 193 208

121 126 137 154 173 188

Table 5.1: Actual Disruption Emission A's (Transition Matrix and Initial Probability

Same as Those from Termini)

emission As. Figure 5-8 shows the average alarm time for data drawn from those

different disruption models under different values of h.

If the actual disruption model is in fact the one used to design the HMM-CUSUM

procedure (Appendix), an optimal threshold of interest is h = 7.5. On average, the

interval h > 7.5 guarantees negligible false alarm rates, and since detection delay

increases with the threshold, the lowest h in the interval would in addition guarantee

minimal detection delay. Hereafter, we focus our analysis on the threshold h = 7.5.

Figure 5-8 shows that the detection delay for h = 7.5 under the different disruption

models is on average higher (less optimal) than the one obtained when the actual

and assumed models were identical (figure 5-7).

This phenomenon has a simple

explanation. When the actual As of the disruption model are less than the assumed

ones, it will take more samples for S, to show the magnitude of positive drift that

would lead to crossing the threshold designed for higher As. This leads to delayed

detections on average.

Second, we attempt to find a robust HMM-CUSUM procedure that would guarantee a desired performance when the disruption is known to belong to a class C with

|C| models. The desired performance we focus on is again minimizing detection delay

for negligible false alarm rate. The components defining the robust HMM procedure

are the threshold h and the assumed disruption model.

We suggest the following procedure:

* For each model Ci in the class (C), plot the average alarm time when the

HMM-CUSUM is designed for disruptions from Ci and the actual disruptions

are drawn from C. with j = 1,..., 1C|

Threshold For Worst Average Alarm

Assumed

Time At Threshold

False Alarm

Model

437

9.6

Termini

427

9.6

Perturbed Model 1

427

10

Perturbed Model 2

43

6.4

Perturbed Model 3

Worst Delay

Model

Perturbed Model

Perturbed Model

Perturbed Model

Perturbed Model

3

3

3

1

Table 5.2: Actual Disruption Emission A's (Transition Matrix and Initial Probability

Same as Those from Termini)

" From each Ci plot obtained, find the lowest threshold hi that guarantees negligible false alarm rate (average alarm time> 406) for all Cj. In addition, find

the maximum average delay (Di) for all C, at the chosen hi.

" Choose the pairing of Ci and hi that minimizes the maximum average delay Di

found in the previous step.

This procedure outlines a practical method to find an approximate minimax robust HMM-CUSUM for a desired performance tradeoff, when the assumed model is

constrained to belong to C. For the class C consisting of the models in table 5.1 and

the original model obtained from Termini, we obtain the plots in figures 5-9, 5-10,

5-11, and 5-12. In figure 5-9, we observe that when the HMM-CUSUM is designed

for the Termini disruption, the desired false alarm performance is guaranteed for all

models in C when h = 9.6. For that threshold, the worst average detection delay

happens for data drawn from perturbed model 3 5.1 and has a value of 31 samples

(worst case average alarm time = 437). Table 5.2 summarizes the results observed for

all models in the class. Based on the simulation results, the design model of choice

that guarantees minimax robustness for the desired performance tradeoff is perturbed

Model 1 or 2 with a worst case detection delay of 21. The tie can be resolved by taking

the one that has a lower threshold, perturbed Model 1. Formalizing this procedure is

left for future work.

5

10

15

20

h*1 0/3

h e (0.01,12)

25

30

35

40

Figure 5-9: Performance of HMM-CUSUM when the Assumed Disruption Model is

that Obtained from the Termini Data and the Actual Disruptions are Drawn from

each Model in C (- = Model from Termini) (* = Perturbed Model 1) (o = Perturbed

Model 2) (- = Perturbed Model 3)

....................

....

................................

"9

450

E 350

300

2

4

6

8

10

12

h*2.5

hE (2,11)

14

16

18

20

22

Figure 5-10: Performance of HMM-CUSUM when the Assumed Disruption Model is

"Perturbed Model 1" and the Actual Disruptions are Drawn from each Model in C

(- = Model from Termini) (* = Perturbed Model 1) (o = Perturbed Model 2) (- =

Perturbed Model 3)

#

450

350

(D

300

2

4

6

8

10

12

h*2.5

h e (2,11)

14

16

18

20

22

Figure 5-11: Performance of HMM-CUSUM when the Assumed Disruption Model is

"Perturbed Model 2" and the Actual Disruptions are Drawn from each Model in C

(- = Model from Termini) (* = Perturbed Model 1) (o = Perturbed Model 2) (- =

Perturbed Model 3)

......

.......

.........

500

E

1=

350 --

&300L,

250-

200---

150

1

2

4

6

8

10

12

h*2.5

h e (2, 11)

14

16

18

20

22

Figure 5-12: Performance of HMM-CUSUM when the Assumed Disruption Model is

"Perturbed Model 3" and the Actual Disruptions are Drawn from each Model in C

(- = Model from Termini) (* = Perturbed Model 1) (o = Perturbed Model 2) (- =

Perturbed Model 3)

60

Chapter 6

Conclusion

In this thesis, we assessed the feasibility of two HMM quickest detection procedures

for detecting disruptions in a real data sequence.

For the Bayesian procedure described in [6], we suggested approximations for

finding the optimal region described by an infinite horizon dynamic program with

a continuous state space. The approximations included discretizing the continuous

state space and the countably infinite observation space, resulting in approximate

cost-to-go function and Q-factor values for a grid of probability vectors. The suggested approximations do not scale well with increasing state-space dimension, which

led us to experiment with running the algorithm on a three and four state HMM

representation of the Rome data (which requires at least six states for its accurate

representation). The algorithm successfully detected the disruption in the data for

relatively low costs only when the number of HMM states was four. Eventhough the

algorithm succeeded at detecting the disruption, our assessment was that the method

is impractical for rich real data sets under the approximation methods we chose.

We proceeded to assess the feasibility of the non-Bayesian CUSUM-like procedure

suggested in [5]. The procedure had the advantage of being very simple to implement,

and of providing real-time detection without the use of time consuming offline calculations or memory intensive look-up tables. It scales well with the number of states

of the underlying HMM models, which allowed us to to use a 6-state representation

of the Rome data and find the alarm time for even the most extreme tradeoffs be-

tween false alarm frequency and detection delay. While the performance guarantees

on HMM-CUSUM often involve approximations, the method is more suited for real

data due to the advantages just outlined.

Finally, we examined the robustness of the HMM-CUSUM when the disruption

model is not exactly known, but the belongs to a known class of HMMs. When the

actual model for the disruption data is 'nearer' to the business as usual model than

the assumed disruption model is, simulations show a noticeable performance drop

for a fixed threshold. We then suggested an experimental approximate method for

designing a CUSUM procedure that guarantees some sense of minimax robustness

when the disruption belongs to a known class of models.

Future work on this topic can focus on:

" Finding optimal solutions for the robust HMM quickest detection in the minimax sense, extending the i.i.d. results in [81

* Finding rigorous notions of distance for HMMs compatible with the quickest

detection problem, especially when the initial state distributions of the prechange and post-change sequences are not the steady state distributions of the

corresponding HMMs.

" Finding more efficient approximations for the optimal region described in [6]

exploiting its characteristics (convexity, closedness, etc) to increase the range

of computationally feasible dimensionality.

Chapter 7

Appendix

Parameter

Inital Probability

.

.

Transition Matrix

Emission A's

0.9857

0.0259

0.0000

0

0

0

Business As Usual Model

[1 0 0 0 0 0]

0

0

0.0143 0.0000

0.9383 0.0357 0.0000

0

0

0.0331 0.9415 0.0254

0.0000 0.0376 0.9381 0.0243

0.0158 0.9565

0

0

0

0.0000 0.0334

0

[ 1 10 24 50 94 133]

0

0

0

0.0000

0.0276

0.9666

Table 7.1: Model Parameters for Business As Usual for N, = 6

Parameter

Inital Probability

.

i

Transition Matrix

Emission A's

0.0000

0.0000

0.0000

0.0000

0.0000

1.0000

0.0000

0.0000

0.0000

0.0000

0.0000

0.0000

Disruption Model

[1 0 0 0 0 0]

0.0000 0.0000

0.0000 1.0000

1.0000 0.0000

0.2423 0.5102

0.0000 0.1201

0.0000

0.0000

0.0000

0.0000

0.0000

0.0000

0.8799

0.0000

0.0000

0.0000

0.2475

0.0000

0.0900

0.9100

[181 186 197 214 233 248]

Table 7.2: Model Parameters for Business As Usual and Disruption for N,

=

6

Bibliography

[1] L. E. Baum and J. A. Egon. An inequality with applications to statistical estimation for probabilistic functions of a markov ptocess and to a model for ecology.

Bull. Amer. Meteorol. Soc., 73:360-363, 1967.

[2] L. E. Baum and G. R. Sell. Growth functions for transformations on manifolds.

Pac. J. Math, 27(2), 1968.

[3] D.P. Bertsekas. Dynamic Programming and Optimal Control, volume 1, chapter 8. Athena Scientific, third edition, 2007.

[4] D.P. Bertsekas and J.N. Tsitsiklis.

Athena Scientific, 2007.

Neuro-Dynamic Programming, chapter 1.

[5] B. Chen and P. Willett. Detection of hidden markov model transient signals.

IEEE Transactions on Aerospace and Electronic Systems, 36(4):1253-1268, October 2000.

[6] S. Dayanik and C. Goulding. Sequential detection and identification of a change

in the distribution of a markov-modulated random sequence. IEEE Transactions

on Information Theory, 55(7), July 2009.

[7] A. Sevtsuk J. Reades, F. Calabrese and C. Ratti. Cellular census: Explorations

in urban data collection. IEEE Pervasive Computing, 6(3), 2007.

[8] V. V. Venugopal J. Unnikrishnan and S. Meyn. Minimax robust quickest change

detection. IEEE Transactions on Information Theory, 2, June 2010. Draft.

Submitted Nov 2009, revised May 2010.

[9] G. Soules N. Weiss L. Baum, T. Petrie. A maximization technique occuring

in the statistical analysis of probabilitic functions of markov chains. Annals of

Mathematical Statistics, 41:164-171, 1970.

[10] G. Lorden. Procedures for reacting to a change in distribution. Annals of Mathematical Statistics, 42(6):1897-1908, 1971.

[11] T. Moon. The expectation maximization algorithm. IEEE Signal Processing

Magazine, 13:47-60, 1996.

[12] G.V. Moustakides. Optimal stopping times for detecting changes in distributions.

Annals of Statistics, 14(4):1379-1387, December 1986.

[13] E.S. Page. Continuous inspection schemes. Biometrika, 41:100-115, 1954.

[14] M. Pollak. Optimal detection of a change in distribution. Annals of Statistics,

13(1):206-227, 1985.

[15] V.H. Poor and 0. Hadjiliadis. Quickest Detection. Cambridge Univeristy Press,

first edition, 2009.

[16] R Development Core Team. R: A Language and Environment for Statistical

Computing. R Foundation for Statistical Computing, Vienna, Austria, 2010.

ISBN 3-900051-07-0.

[17] L. R. Rabiner. A tutorial on hidden markov models and selected applications in

speech recognition. Proceedings of the IEEE, 77(2), February 1989.

[18] A.N. Shiryaev. On optimum methods in quickest detection problems. Theory of

Prob. and App., 8:22-46, 1963.