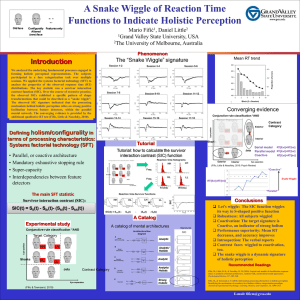

Information-Processing Architectures in Multidimensional Classification: A
