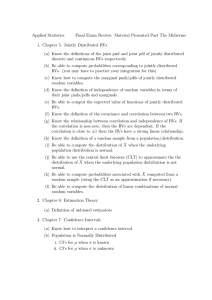

Math-UA.233.001: Theory of Probability Final cheatsheet


The final will be a closed-book exam, so there are some facts from the course that you’ll be expected to remember. It will not be assumed that you remember everything from all the classes. Try to make sure you know the following, in addition to the material from the midterm cheatsheet.

Definitions:

• A RV being ‘continuous’, meaning that it has a ‘probability density function’ (‘PDF’). How the PDF yields probabilities involving that RV.

• Expected value and variance for continuous RVs.

• Important distributions of continuous random variables: (i) $\mathrm{Unif}(a, b)$; (ii) $\mathrm{Exp}(\lambda)$; (iii) $N(\mu, \sigma^2)$. Also: the meaning of the parameters in terms of expectation or variance for (ii) and (iii).

• The ‘joint CDF’ of two or more RVs, and the ‘joint PMF’ of two or more discrete RVs.

• Two or more RVs being ‘jointly continuous’, meaning that they have a ‘joint PDF’. How the joint PDF yields probabilities involving those RVs.

• The jointly continuous RVs which describe a uniform random point in $A$, where $A$ is a region of the plane that has finite positive area.

• The ‘marginals’ of the joint PMF (resp. PDF) of a pair of discrete (resp. jointly continuous) RVs: how they’re computed and what they mean.

• Independence for RVs (not necessarily discrete or continuous).

• The ‘conditional PMF’ (resp. ‘conditional PDF’) of $X$ given $Y$, where $X$ and $Y$ are discrete (resp. jointly continuous). Conditional probabilities in mixed situations (one continuous RV and an event).

• The ‘conditional expectation’ $E[X \mid Y]$ in case $X$ and $Y$ are either both discrete or jointly continuous.

• Covariance of a pair of RVs.

• A sequence of ‘i.i.d.’ RVs $X_1, \ldots, X_n$, and their ‘sample mean’ and ‘standardized sum’.

Ideas, facts, propositions:

• Basic properties of PDFs: $f \ge 0$ and $\int_{-\infty}^{\infty} f = 1$. The second property can sometimes be used to find a normalizing constant.

• Formula for computing $E[g(X)]$ in terms of the PDF of $X$, where $X$ is a continuous RV and $g$ is a function from real values to real values, without computing the distribution of $g(X)$.

• If $X$ is a continuous RV then $f_X = F_X'$; this is often used for computing $f_X$ in terms of other things.

• How to find the PDF of a transformed continuous RV $Y = g(X)$. Two ways: (i) if $X$ is a continuous RV and $g$ is differentiable and strictly increasing, there’s a standard formula; (ii) in other cases, an alternative is to find the PDF of $g(X)$ by first computing its CDF ‘by hand’.

• Linearity of Expected Value for continuous RVs.

• $\mathrm{Var}(X) = E[X^2] - (E[X])^2$.

• If $Z$ is $N(\mu, \sigma^2)$, then $aZ + b$ is $N(a\mu + b, a^2\sigma^2)$ for constants $a \neq 0$ and $b$.

• The Memoryless Property of exponential RVs.

• Basic calculations involving variance and covariance of several RVs.

• $\mathrm{Cov}(X, Y) = E[XY] - E[X]E[Y]$.

• If $X$ and $Y$ are independent, then $\mathrm{Cov}(X, Y) = 0$.

• Computing variance for sums in terms of individual variances and covariances.

• The Jacobian Formula for the joint PDF of $(U, V) = (g_1(X, Y), g_2(X, Y))$ when (i) $X, Y$ are jointly continuous RVs such that $(X, Y)$ always lies in some region $A$ of the plane, and (ii) $(g_1, g_2)$ is a differentiable and invertible ‘change-of-variables’ which maps $A$ to another region $B$.

• If $X$ and $Y$ are jointly continuous and we fix a value $y$, then $f_{X|Y}(x|y)$, regarded as a function of $x$ alone, is still a PDF (intuitively, it is the ‘PDF of $X$ conditioned on the event $\{Y = y\}$’). It satisfies all the same basic properties as unconditioned PDFs.

• The intuitive interpretations of conditional PDF and conditional expected value for a jointly continuous pair of RVs: $f_{X|Y}(x|y)\,dx \approx P(x \le X \le x + dx \mid y \le Y \le y + dy)$ and $E[X \mid Y = y] \approx E[X \mid y \le Y \le y + dy]$, provided $y$ is a real value such that $f_Y(y) > 0$.

• The Multiplication Rule for joint and conditional PDFs: $f_{X,Y}(x, y) = f_{X|Y}(x|y)\, f_Y(y)$. (You should be able to recognize when a problem is giving you information about $f_Y$ and $f_{X|Y}$, rather than about $f_{X,Y}$ directly.)

• The version of Bayes’ Theorem for conditional probabilities relating an event and a continuous RV.

• The Law of Total Probability for conditioning on a continuous RV: $P(E) = \int_{-\infty}^{\infty} P(E \mid X = x)\, f_X(x)\, dx$. (You should be able to recognize when a question is giving you conditional probabilities of the form $P(E \mid X = x)$.)

• The conditional expectation $E[X \mid Y]$ may be regarded as a new RV which is a function of $Y$.

• Based on the previous point, the Law of Total Expectation: $E[X] = E\big[E[X \mid Y]\big]$.

• If $X$ and $Y$ are jointly continuous, then they are independent if and only if $f_{X,Y}(x, y)$ can be written as a product $g(x)h(y)$ valid for all real values $x$ and $y$.

• If $X$ and $Y$ are independent continuous RVs and $Z = X + Y$, then $Z$ is also a continuous RV and $f_Z(z) = \int_{-\infty}^{\infty} f_X(u)\, f_Y(z - u)\, du$. Make sure you can work the following examples yourself:

  – sum of two independent $\mathrm{Unif}(0, 1)$ RVs (in this case $Z$ has the ‘tent distribution’, but you’re not expected to remember that);

  – sum of two independent $\mathrm{Exp}(\lambda)$ RVs (in this case $Z$ has the ‘$\mathrm{Gamma}(2, \lambda)$ distribution’, but you’re not expected to remember that).

• How to use a table of values for $\Phi$, the CDF of a standard normal RV.

• Sums of independent normal RVs are still normal, where the means add and the variances add.

• Markov’s and Chebyshev’s Inequalities.

• The Weak Law of Large Numbers (precise mathematical version of the ‘Law of Averages’), and its special case for $\mathrm{binomial}(n, p)$ RVs (where $n$ is large and $p$ is fixed). (Need to know statement and use, not proof.)

• The Central Limit Theorem, and its special case for $\mathrm{binomial}(n, p)$ RVs (where $n$ is large and $p$ is fixed). (Need to know statement and use, not proof.)

(If you need another theorem from the course, it will be given to you in the question.)
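As a worked illustration of the first few facts in the list above (using $\int f = 1$ to find a normalizing constant, computing $E[g(X)]$ straight from the PDF, and $\mathrm{Var}(X) = E[X^2] - (E[X])^2$), here is a sketch with a density chosen purely for illustration; it is not taken from the course materials.

```latex
\begin{align*}
f(x) &= c\,x^2 \ \text{for } 0 \le x \le 1, \qquad f(x) = 0 \ \text{otherwise},\\
1 &= \int_{-\infty}^{\infty} f(x)\,dx = c\int_0^1 x^2\,dx = \frac{c}{3}
  \quad\Longrightarrow\quad c = 3,\\
E[X] &= \int_0^1 x \cdot 3x^2\,dx = \frac{3}{4}, \qquad
E[X^2] = \int_0^1 x^2 \cdot 3x^2\,dx = \frac{3}{5},\\
\mathrm{Var}(X) &= E[X^2] - (E[X])^2 = \frac{3}{5} - \frac{9}{16} = \frac{3}{80}.
\end{align*}
```

Note that $E[X^2]$ is obtained by integrating $x^2 f(x)$; the distribution of $X^2$ itself is never needed.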
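The two ways of finding the PDF of a transformed RV $Y = g(X)$ listed above can be compared on one small case. The choice $X \sim \mathrm{Unif}(0, 1)$ and $Y = X^2$ is an illustrative assumption; $g(x) = x^2$ is differentiable and strictly increasing on $(0, 1)$, where $X$ takes its values, so both methods apply.

```latex
\begin{align*}
&\text{Method (i): } g^{-1}(y) = \sqrt{y}, \qquad
f_Y(y) = f_X\big(g^{-1}(y)\big)\,\frac{d}{dy}g^{-1}(y) = 1 \cdot \frac{1}{2\sqrt{y}}
  \qquad (0 < y < 1),\\[4pt]
&\text{Method (ii): } F_Y(y) = P(X^2 \le y) = P(X \le \sqrt{y}) = \sqrt{y}
  \qquad (0 \le y \le 1),\\
&\phantom{\text{Method (ii): }} f_Y(y) = F_Y'(y) = \frac{1}{2\sqrt{y}} \qquad (0 < y < 1),
  \qquad f_Y(y) = 0 \text{ otherwise}.
\end{align*}
```

Method (ii) is the one that still works when $g$ is not monotone on the range of $X$, since the event $\{g(X) \le y\}$ can be rewritten by hand.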
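The Memoryless Property of exponential RVs follows in two lines from the tail probability of $X \sim \mathrm{Exp}(\lambda)$. The derivation below is a standard sketch for $s, t \ge 0$; the key step is that $\{X > s + t\}$ is contained in $\{X > s\}$, so their intersection is just $\{X > s + t\}$.

```latex
\begin{align*}
P(X > t) &= \int_t^{\infty} \lambda e^{-\lambda x}\,dx = e^{-\lambda t} \qquad (t \ge 0),\\
P(X > s + t \mid X > s) &= \frac{P(X > s + t \text{ and } X > s)}{P(X > s)}
  = \frac{P(X > s + t)}{P(X > s)}
  = \frac{e^{-\lambda (s + t)}}{e^{-\lambda s}}
  = e^{-\lambda t} = P(X > t).
\end{align*}
```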
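For the Jacobian Formula in the list above, one common way to write it is $f_{U,V}(u, v) = f_{X,Y}\big(x(u, v), y(u, v)\big)\,\big|\partial(x, y)/\partial(u, v)\big|$ for $(u, v) \in B$, where $(x(u, v), y(u, v))$ is the inverse of the change of variables; the course’s notation may differ. The example is an illustrative assumption: $X, Y$ independent $\mathrm{Exp}(1)$, so $f_{X,Y}(x, y) = e^{-x-y}$ on $A = \{x > 0, y > 0\}$, with $U = X + Y$ and $V = X/(X + Y)$.

```latex
\begin{align*}
&\text{Inverse map: } x = uv,\quad y = u(1 - v), \qquad
  B = \{(u, v) : u > 0,\ 0 < v < 1\},\\
&\frac{\partial(x, y)}{\partial(u, v)} =
  \det\begin{pmatrix} v & u \\ 1 - v & -u \end{pmatrix}
  = -uv - u(1 - v) = -u,\\
&f_{U,V}(u, v) = f_{X,Y}\big(uv,\, u(1 - v)\big)\left|\frac{\partial(x, y)}{\partial(u, v)}\right|
  = e^{-uv - u(1 - v)} \cdot u = u\,e^{-u}
  \qquad \text{for } (u, v) \in B.
\end{align*}
```

Writing this as $\big(u e^{-u}\,\mathbf{1}\{u > 0\}\big)\cdot\mathbf{1}\{0 < v < 1\}$ shows it factors as $g(u)h(v)$, so by the factorization criterion $U$ and $V$ are independent, with $V \sim \mathrm{Unif}(0, 1)$.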
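The Law of Total Probability and Bayes’ Theorem for a continuous RV can be sanity-checked by simulation. The model here is a hypothetical illustration: $X \sim \mathrm{Unif}(0, 1)$ is a coin’s chance of heads, and given $X = x$ the coin is flipped twice, with $E = \{\text{both heads}\}$, so $P(E \mid X = x) = x^2$. Then $P(E) = \int_0^1 x^2 \cdot 1\,dx = 1/3$, Bayes gives $f_{X|E}(x) = x^2 \cdot 1 / (1/3) = 3x^2$ on $[0, 1]$, and $E[X \mid E] = \int_0^1 x \cdot 3x^2\,dx = 3/4$. The function name `simulate_two_heads` is made up for this sketch.

```python
import random


def simulate_two_heads(trials: int, seed: int = 0):
    """Monte Carlo estimates of P(E) and E[X | E] for the hypothetical coin model.

    X ~ Unif(0, 1) is the chance of heads; given X = x the coin is flipped
    twice independently, and E is the event that both flips are heads,
    so P(E | X = x) = x**2.
    """
    rng = random.Random(seed)  # seeded generator for reproducibility
    hits = 0
    x_total_on_e = 0.0
    for _ in range(trials):
        x = rng.random()                              # draw X ~ Unif(0, 1)
        flips = [rng.random() < x for _ in range(2)]  # each flip is heads w.p. x
        if all(flips):                                # the event E occurred
            hits += 1
            x_total_on_e += x
    p_e_hat = hits / trials                   # estimates P(E) = 1/3
    mean_x_given_e_hat = x_total_on_e / hits  # estimates E[X | E] = 3/4
    return p_e_hat, mean_x_given_e_hat


p_e_hat, mean_x_given_e_hat = simulate_two_heads(100_000)
print(p_e_hat, mean_x_given_e_hat)
```

Averaging $X$ only over the trials where $E$ happened is exactly what conditioning on $E$ means, which is why the second estimate approximates the posterior mean.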
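The convolution formula $f_Z(z) = \int f_X(u)\, f_Y(z - u)\, du$ for the two practice examples in the list can be checked numerically against the known answers: the tent density ($z$ on $[0, 1]$, $2 - z$ on $[1, 2]$) and $\lambda^2 z e^{-\lambda z}$ for $z \ge 0$. This is a sketch under stated assumptions: the integral is approximated with a plain midpoint rule, the rate `LAM = 2.0` is an arbitrary choice, and all function names are hypothetical.

```python
import math


def convolution_density(f_x, f_y, z, lo, hi, n=20_000):
    """Midpoint-rule approximation of f_Z(z) = integral of f_X(u) f_Y(z - u) du.

    [lo, hi] must contain every u where f_X(u) * f_Y(z - u) is nonzero.
    """
    h = (hi - lo) / n
    total = 0.0
    for k in range(n):
        u = lo + (k + 0.5) * h
        total += f_x(u) * f_y(z - u)
    return total * h


def unif01_pdf(u):
    return 1.0 if 0.0 <= u <= 1.0 else 0.0


def tent_pdf(z):
    """Density of the sum of two independent Unif(0, 1) RVs."""
    if 0.0 <= z <= 1.0:
        return z
    if 1.0 < z <= 2.0:
        return 2.0 - z
    return 0.0


LAM = 2.0  # hypothetical rate, chosen only for illustration


def exp_pdf(u, lam=LAM):
    return lam * math.exp(-lam * u) if u >= 0.0 else 0.0


def gamma2_pdf(z, lam=LAM):
    """Density of the sum of two independent Exp(lam) RVs."""
    return lam * lam * z * math.exp(-lam * z) if z >= 0.0 else 0.0


# Uniform case: f_X vanishes outside [0, 1], so integrate u over [0, 1].
for z in (0.25, 1.0, 1.5):
    print(z, convolution_density(unif01_pdf, unif01_pdf, z, 0.0, 1.0), tent_pdf(z))

# Exponential case: the integrand vanishes unless 0 <= u <= z.
z = 0.7
print(z, convolution_density(exp_pdf, exp_pdf, z, 0.0, z), gamma2_pdf(z))
```

Working out the support of the integrand before integrating (here $[0, 1]$ and $[0, z]$) is the same step you need when doing these convolutions by hand.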
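In the exam you read $\Phi$ from a table; the sketch below substitutes Python’s `math.erf` via the identity $\Phi(z) = \tfrac12\big(1 + \mathrm{erf}(z/\sqrt{2})\big)$ so the standardization step can be checked. It also applies the fact that means and variances add for independent normals; the numbers $X \sim N(1, 4)$, $Y \sim N(2, 5)$ are hypothetical.

```python
import math


def std_normal_cdf(z: float) -> float:
    """Phi(z), the standard normal CDF, computed from math.erf instead of a table."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def normal_prob(a: float, b: float, mu: float, sigma: float) -> float:
    """P(a <= X <= b) for X ~ N(mu, sigma^2), by standardizing Z = (X - mu) / sigma."""
    return std_normal_cdf((b - mu) / sigma) - std_normal_cdf((a - mu) / sigma)


# Hypothetical example: X ~ N(1, 4) and Y ~ N(2, 5) independent, so X + Y ~ N(3, 9).
mean_sum = 1 + 2
var_sum = 4 + 5
# P(X + Y <= 6) = Phi((6 - 3) / 3) = Phi(1)
p_sum = std_normal_cdf((6 - mean_sum) / math.sqrt(var_sum))
print(round(p_sum, 4))  # 0.8413
```

Standardizing uses the fact that $(X - \mu)/\sigma$ is $N(0, 1)$ when $X$ is $N(\mu, \sigma^2)$, which is the $aZ + b$ rule with $a = 1/\sigma$ and $b = -\mu/\sigma$.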
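Chebyshev’s Inequality applied to a sample mean gives $P(|\bar X_n - \mu| \ge \varepsilon) \le \mathrm{Var}(X_1)/(n\varepsilon^2)$, which tends to $0$ as $n$ grows; this is the Weak Law of Large Numbers. The simulation below is an illustrative sketch assuming i.i.d. $\mathrm{Unif}(0, 1)$ RVs ($\mu = 1/2$, variance $1/12$), $\varepsilon = 0.05$, and a fixed seed; the function names are hypothetical.

```python
import random


def deviation_frequency(n: int, eps: float, trials: int, seed: int = 0) -> float:
    """Fraction of trials where the sample mean of n i.i.d. Unif(0, 1) RVs
    differs from the true mean 1/2 by at least eps."""
    rng = random.Random(seed)
    count = 0
    for _ in range(trials):
        sample_mean = sum(rng.random() for _ in range(n)) / n
        if abs(sample_mean - 0.5) >= eps:
            count += 1
    return count / trials


def chebyshev_bound(n: int, eps: float, var: float = 1 / 12) -> float:
    """Chebyshev for the sample mean: Var(Xbar_n) = var / n, so
    P(|Xbar_n - mu| >= eps) <= var / (n * eps**2)."""
    return var / (n * eps ** 2)


eps = 0.05
results = {
    n: (deviation_frequency(n, eps, trials=1000), chebyshev_bound(n, eps))
    for n in (10, 100, 1000)
}
for n, (freq, bound) in results.items():
    print(f"n={n:5d}  observed={freq:.3f}  Chebyshev bound={bound:.3f}")
```

For small $n$ the bound exceeds $1$ and says nothing; it only becomes informative as $n$ grows, and the observed frequencies sit well below it because Chebyshev uses nothing but the variance.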
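The binomial special case of the Central Limit Theorem says that for $S \sim \mathrm{binomial}(n, p)$ with $n$ large, $P(S \le k) \approx \Phi\big((k - np)/\sqrt{np(1 - p)}\big)$. The sketch below compares this with the exact binomial sum; the values $n = 100$, $p = 0.5$, $k = 55$ are arbitrary, no continuity correction is applied, and `math.erf` again stands in for a $\Phi$ table.

```python
import math


def std_normal_cdf(z: float) -> float:
    """Phi(z) via math.erf (stand-in for a table of Phi)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def binom_cdf(k: int, n: int, p: float) -> float:
    """Exact P(S <= k) for S ~ binomial(n, p)."""
    return sum(math.comb(n, j) * p ** j * (1 - p) ** (n - j) for j in range(k + 1))


def clt_approx(k: int, n: int, p: float) -> float:
    """CLT approximation P(S <= k) ~ Phi((k - n p) / sqrt(n p (1 - p)))."""
    return std_normal_cdf((k - n * p) / math.sqrt(n * p * (1 - p)))


n, p, k = 100, 0.5, 55
exact = binom_cdf(k, n, p)
approx = clt_approx(k, n, p)  # standardized value is (55 - 50) / 5 = 1, so Phi(1)
print(f"exact={exact:.4f}  CLT={approx:.4f}")
```

The two numbers are close but not equal; for fixed $p$ the gap shrinks as $n$ grows, which is the sense in which the approximation is valid.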