
Hindawi Publishing Corporation

Journal of Applied Mathematics

Volume 2013, Article ID 131424, 4 pages

http://dx.doi.org/10.1155/2013/131424

Research Article

The Probability of a Confidence Interval Based on Minimal Estimates of the Mean and the Standard Deviation

Louis M. Houston

The Louisiana Accelerator Center, The University of Louisiana at Lafayette, LA 70504-4210, USA

Correspondence should be addressed to Louis M. Houston; houston@louisiana.edu

Received 22 January 2013; Revised 9 May 2013; Accepted 11 May 2013

Academic Editor: Jin L. Kuang

Copyright © 2013 Louis M. Houston. This is an open access article distributed under the Creative Commons Attribution License,

which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Using two measurements, we produce an estimate of the mean and the sample standard deviation. We construct a confidence

interval with these parameters and compute the probability of the confidence interval by using the cumulative distribution function

and averaging over the parameters. The probability is in the form of an integral that we compare to a computer simulation.

1. Introduction

A confidence interval is an interval in which a measurement

falls with a given probability [1]. This paper addresses the

question of defining the probability of a confidence interval

that can be constructed when only a minimal number of

measurements are known.

The problem has a couple of significant applications. In

geophysics, time-lapse 3D seismic monitoring is used to

monitor oil and gas reservoirs before and after production.

Because of the high cost of 3D seismic monitoring, a minimal-effort time-lapse 3D seismic monitoring approach was

proposed by Houston and Kinsland [2]. This approach proposes a minimal measurement interval based on preliminary

measurements. It implies successively smaller seismic surveys

as monitoring continues and knowledge of the behavior of the

reservoir grows. The success of the minimal-effort method

is based on the probability that the reservoir is detected by

a seismic survey. The minimal-effort method is based on a

minimal number of measurements, which connects it to the

topic of this paper.

Another significant application of a confidence interval

based on a minimal number of measurements is the problem

of cancer treatment based on the irradiation of a tumor

[3]. The purpose of irradiation is to destroy the tumor, but

targeting the tumor can be problematic because tumors often

exhibit small random motions within the body. Because of

random tumor motion, the result of radiation treatment

often includes the destruction of healthy tissue. Clearly, it is

beneficial that cancer radiation treatment incorporates minimal intervals. The success of the cancer treatment is based

on the probability that the tumor is irradiated while using

a minimal irradiation interval. This success hinges on the

ability of the radiation treatment to incorporate preliminary

measurements that are often minimal. It is upon this basis

that the cancer treatment problem connects to the topic of

this paper.

In this paper, we construct a confidence interval based on

minimal estimates of the mean and the standard deviation.

Explicitly, we use two measurements to specify an interval

that contains a subsequent measurement with a given probability. The mathematical effort includes the derivation of a

specific probability for the confidence interval. The probability is computed using the cumulative distribution function,

and this probability is averaged over both the estimate of the

mean and the sample standard deviation to yield a specific

value. The results are compared to a computer simulation that

estimates the probability based on frequency.

2. Probability Based on the Cumulative Distribution Function

The cumulative distribution function [4] is given as

$$
F(x;\mu,\sigma^2) = \frac{1}{\sqrt{2\pi}\,\sigma} \int_{-\infty}^{x} e^{-(t-\mu)^2/2\sigma^2}\,dt,
\tag{1}
$$


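where $x$ is a measurement, $\mu$ is the mean, and $\sigma$ is the standard deviation. Let $v = (t - \mu)/\sqrt{2}\sigma$. So, $dv = dt/\sqrt{2}\sigma$, and (1) becomes

$$
\begin{aligned}
F(x;\mu,\sigma^2) &= \frac{1}{\sqrt{\pi}} \int_{-\infty}^{(x-\mu)/\sqrt{2}\sigma} e^{-v^2}\,dv \\
&= \frac{1}{\sqrt{\pi}} \int_{-\infty}^{0} e^{-v^2}\,dv + \frac{1}{\sqrt{\pi}} \int_{0}^{(x-\mu)/\sqrt{2}\sigma} e^{-v^2}\,dv \\
&= \frac{1}{2} + \frac{1}{2}\operatorname{erf}\left(\frac{x-\mu}{\sqrt{2}\sigma}\right),
\end{aligned}
\tag{2}
$$

where erf is the error function [5]. We know that the cumulative distribution function designates probability as

$$
F(x;\mu,\sigma^2) = P(X \le x).
\tag{3}
$$

Therefore, we can write

$$
P(\bar{x} - ns \le x \le \bar{x} + ns) = F(\bar{x} + ns;\mu,\sigma^2) - F(\bar{x} - ns;\mu,\sigma^2),
\tag{4}
$$

where $\bar{x}$ is the estimate of the mean given as

$$
\bar{x} = \frac{1}{N}\sum_{i=1}^{N} x_i
\tag{5}
$$

and $s$ is the sample standard deviation given as

$$
s = \sqrt{\frac{1}{N-1}\sum_{i=1}^{N} (x_i - \bar{x})^2}.
\tag{6}
$$

This allows us to write

$$
P(\bar{x} - ns \le x \le \bar{x} + ns) = \frac{1}{2}\operatorname{erf}\left(\frac{\bar{x} + ns - \mu}{\sigma\sqrt{2}}\right) + \frac{1}{2}\operatorname{erf}\left(\frac{ns + \mu - \bar{x}}{\sigma\sqrt{2}}\right).
\tag{7}
$$

3. The Probability Averaged over the Estimate of the Mean

Let $N = 2$. This implies that

$$
\begin{aligned}
\bar{x} &= \frac{x_1 + x_2}{2}, \\
s &= \sqrt{\left(x_1 - \frac{x_1 + x_2}{2}\right)^2 + \left(x_2 - \frac{x_1 + x_2}{2}\right)^2} \\
&= \sqrt{\left(\frac{2x_1 - x_1 - x_2}{2}\right)^2 + \left(\frac{2x_2 - x_1 - x_2}{2}\right)^2} \\
&= \sqrt{\frac{(x_1 - x_2)^2}{4} + \frac{(x_2 - x_1)^2}{4}} \\
&= \frac{|x_1 - x_2|}{\sqrt{2}}.
\end{aligned}
\tag{8}
$$

So we have

$$
\begin{aligned}
&P\left(\frac{x_1 + x_2}{2} - n\frac{|x_1 - x_2|}{\sqrt{2}} \le x \le \frac{x_1 + x_2}{2} + n\frac{|x_1 - x_2|}{\sqrt{2}}\right) \\
&\quad = \frac{1}{2}\operatorname{erf}\left(\frac{((x_1 + x_2)/2) + n(|x_1 - x_2|/\sqrt{2}) - \mu}{\sigma\sqrt{2}}\right) \\
&\qquad + \frac{1}{2}\operatorname{erf}\left(\frac{n(|x_1 - x_2|/\sqrt{2}) + \mu - ((x_1 + x_2)/2)}{\sigma\sqrt{2}}\right).
\end{aligned}
\tag{9}
$$

This equation can be simplified. Let $y = x_1 + x_2$ and $z = x_1 - x_2$. Then, (9) becomes

$$
\begin{aligned}
&P\left(\frac{y}{2} - n\frac{|z|}{\sqrt{2}} \le x \le \frac{y}{2} + n\frac{|z|}{\sqrt{2}}\right) \\
&\quad = \frac{1}{2}\operatorname{erf}\left(\frac{(y/2) + n(|z|/\sqrt{2}) - \mu}{\sigma\sqrt{2}}\right) + \frac{1}{2}\operatorname{erf}\left(\frac{n(|z|/\sqrt{2}) + \mu - (y/2)}{\sigma\sqrt{2}}\right) \\
&\quad = \frac{1}{2}\operatorname{erf}\left(\frac{y}{2\sigma\sqrt{2}} - \frac{\mu}{\sigma\sqrt{2}} + \frac{n|z|}{2\sigma}\right) + \frac{1}{2}\operatorname{erf}\left(\frac{n|z|}{2\sigma} + \frac{\mu}{\sigma\sqrt{2}} - \frac{y}{2\sigma\sqrt{2}}\right) \\
&\quad = \frac{1}{2}\operatorname{erf}\left(\frac{y - 2\mu}{2\sigma\sqrt{2}} + \frac{n|z|}{2\sigma}\right) + \frac{1}{2}\operatorname{erf}\left(\frac{n|z|}{2\sigma} - \frac{y - 2\mu}{2\sigma\sqrt{2}}\right).
\end{aligned}
\tag{10}
$$

For simplicity, we have $P \equiv P((y/2) - n(|z|/\sqrt{2}) \le x \le (y/2) + n(|z|/\sqrt{2}))$. The expectation with respect to $y$ can be written as

$$
\begin{aligned}
E_y(P) = \frac{1}{2\sigma\sqrt{\pi}} \int_{-\infty}^{\infty} &\left[\frac{1}{2}\operatorname{erf}\left(\frac{y - 2\mu}{2\sigma\sqrt{2}} + \frac{n|z|}{2\sigma}\right) + \frac{1}{2}\operatorname{erf}\left(\frac{n|z|}{2\sigma} - \frac{y - 2\mu}{2\sigma\sqrt{2}}\right)\right] \\
&\times e^{-(y-2\mu)^2/4\sigma^2}\,dy,
\end{aligned}
\tag{11}
$$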


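where we have used the fact that the standard deviation of $y$ is $\sqrt{2}\sigma$ because, for independent $X_1$ and $X_2$,

$$
\operatorname{var}(X_1 + X_2) = \operatorname{var}(X_1) + \operatorname{var}(X_2), \qquad \sigma_X = \sqrt{\operatorname{var}(X)};
\tag{12}
$$

see [6].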
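As a quick sanity check on the algebra leading to (10), the following Python sketch evaluates the fixed-sample probability in two ways: as the difference of normal cumulative distribution functions in (4), and through the error-function form in (10). The function names and the sample values of $x_1$, $x_2$, $n$, $\mu$, and $\sigma$ are illustrative assumptions and are not taken from the paper.

```python
import math

def normal_cdf(x, mu, sigma):
    """Normal CDF written as in (2): 1/2 + 1/2 erf((x - mu) / (sqrt(2) sigma))."""
    return 0.5 + 0.5 * math.erf((x - mu) / (math.sqrt(2.0) * sigma))

def prob_from_cdf(x1, x2, n, mu, sigma):
    """Equation (4) with N = 2: F(xbar + n s) - F(xbar - n s)."""
    xbar = 0.5 * (x1 + x2)
    s = abs(x1 - x2) / math.sqrt(2.0)  # sample standard deviation for N = 2, as in (8)
    return normal_cdf(xbar + n * s, mu, sigma) - normal_cdf(xbar - n * s, mu, sigma)

def prob_from_erf(x1, x2, n, mu, sigma):
    """Final form of (10), written in terms of y = x1 + x2 and z = x1 - x2."""
    y, z = x1 + x2, x1 - x2
    shift = (y - 2.0 * mu) / (2.0 * sigma * math.sqrt(2.0))
    half_width = n * abs(z) / (2.0 * sigma)
    return 0.5 * math.erf(shift + half_width) + 0.5 * math.erf(half_width - shift)

# Illustrative values only (assumed for this example).
p_cdf = prob_from_cdf(1.3, 0.4, 2.0, 1.0, 0.5)
p_erf = prob_from_erf(1.3, 0.4, 2.0, 1.0, 0.5)
print(p_cdf, p_erf)
```

The two values should agree to rounding error for any choice of inputs, since (10) is only a rearrangement of (4) with $N = 2$.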

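Let $u = (y - 2\mu)/2\sigma$, so that $du = dy/2\sigma$. Equation (11) becomes

$$
\begin{aligned}
E_y(P) &= \frac{1}{\sqrt{\pi}} \int_{-\infty}^{\infty} \left[\frac{1}{2}\operatorname{erf}\left(\frac{u}{\sqrt{2}} + \frac{n|z|}{2\sigma}\right) + \frac{1}{2}\operatorname{erf}\left(\frac{n|z|}{2\sigma} - \frac{u}{\sqrt{2}}\right)\right] e^{-u^2}\,du \\
&= \frac{1}{\sqrt{\pi}} \int_{-\infty}^{\infty} \operatorname{erf}\left(\frac{u}{\sqrt{2}} + \frac{n|z|}{2\sigma}\right) e^{-u^2}\,du.
\end{aligned}
\tag{13}
$$

At this point, consider the following integral:

$$
g = \frac{1}{\sqrt{\pi}} \int_{-\infty}^{\infty} \operatorname{erf}\left(\frac{a}{\sqrt{m}} + b\right) e^{-a^2}\,da.
\tag{14}
$$

We will find three conditions on $g$ that determine its structure. First, the following limit is clear from (14).

Condition 1. Consider

$$
\lim_{m \to \infty} g = \operatorname{erf}(b).
\tag{15}
$$

Now, let us determine the following integral:

$$
\begin{aligned}
s &= \int_{-\infty}^{\infty} \operatorname{erf}\left(\frac{a}{\sqrt{m}} + b\right) da, \\
s &= \sqrt{m} \int_{-\infty}^{\infty} \operatorname{erf}\left(\frac{a}{\sqrt{m}} + b\right) \frac{da}{\sqrt{m}}, \\
s &= \sqrt{m} \int_{-\infty}^{\infty} \operatorname{erf}(v)\,dv,
\end{aligned}
\tag{16}
$$

where $v = (a/\sqrt{m}) + b$ and $dv = da/\sqrt{m}$. Using the known integral of the error function, we find that

$$
\begin{aligned}
s &= \sqrt{m}\left[v\operatorname{erf}(v) + \frac{1}{\sqrt{\pi}} e^{-v^2}\right]_{-\infty}^{\infty}, \\
s &= \sqrt{m}\left[\left(\frac{a}{\sqrt{m}} + b\right)\operatorname{erf}\left(\frac{a}{\sqrt{m}} + b\right) + \frac{1}{\sqrt{\pi}} e^{-(a/\sqrt{m} + b)^2}\right]_{-\infty}^{\infty}.
\end{aligned}
\tag{17}
$$

Condition 2. Consider

$$
s = 2b\sqrt{m}.
\tag{18}
$$

Therefore, $g$ must vary as $b$ and $\sqrt{m}$.

Now, because the error function is odd, we can write

$$
\frac{1}{\sqrt{\pi}} \int_{-\infty}^{\infty} \operatorname{erf}\left(\frac{a}{\sqrt{m}}\right) e^{-a^2}\,da = 0.
\tag{19}
$$

Therefore, (19) can be subtracted from $g$ to obtain

$$
g = \frac{1}{\sqrt{\pi}} \int_{-\infty}^{\infty} \left[\operatorname{erf}\left(\frac{a}{\sqrt{m}} + b\right) - \operatorname{erf}\left(\frac{a}{\sqrt{m}}\right)\right] e^{-a^2}\,da.
\tag{20}
$$

Since

$$
\lim_{m \to 0} \operatorname{erf}\left(\frac{a}{\sqrt{m}}\right) = \lim_{m \to 0} \operatorname{erf}\left(\frac{a}{\sqrt{m}} + b\right) = \operatorname{sgn}(a),
\tag{21}
$$

we have the following.

Condition 3. Consider

$$
\lim_{m \to 0} g = 0.
\tag{22}
$$

Based on Conditions 1, 2, and 3, $g$ must have the following form:

$$
g = \operatorname{erf}\left(b\,\frac{\sqrt{m}}{\sqrt{m + c}}\right),
\tag{23}
$$

where $c > 0$. Equations (14) and (23) suggest that we can write

$$
\frac{1}{\sqrt{\pi}} \int_{-\infty}^{\infty} \operatorname{erf}(a + 1)\, e^{-a^2}\,da = \operatorname{erf}\left(\frac{1}{\sqrt{1 + c}}\right).
\tag{24}
$$

Based on (24), we can numerically determine $c$ as

$$
\frac{1}{\sqrt{\pi}} \int_{-\infty}^{\infty} \operatorname{erf}(a + 1)\, e^{-a^2}\,da \approx \frac{1}{\sqrt{\pi}} \sum_{i=-N}^{N} \operatorname{erf}(a_i + 1)\, e^{-a_i^2}\,\Delta a,
\tag{25}
$$

with $a_i = i\,\Delta a$. For $N = 200$ and $\Delta a = 0.1$, we find

$$
\frac{1}{\sqrt{\pi}} \sum_{i=-N}^{N} \operatorname{erf}(a_i + 1)\, e^{-a_i^2}\,\Delta a = 0.6827.
\tag{26}
$$

Consequently,

$$
\operatorname{erf}\left(\frac{1}{\sqrt{1 + c}}\right) = 0.6827
\tag{27}
$$

or

$$
\frac{1}{\sqrt{1 + c}} = 0.7071
\tag{28}
$$

and, thus, $c = 1$, so that

$$
g = \operatorname{erf}\left(b\,\frac{\sqrt{m}}{\sqrt{m + 1}}\right)
\tag{29}
$$

or

$$
\frac{1}{\sqrt{\pi}} \int_{-\infty}^{\infty} \operatorname{erf}\left(\frac{a}{\sqrt{m}} + b\right) e^{-a^2}\,da = \operatorname{erf}\left(b\,\frac{\sqrt{m}}{\sqrt{m + 1}}\right).
\tag{30}
$$

Equation (30) implies that

$$
\frac{1}{\sqrt{\pi}} \int_{-\infty}^{\infty} \operatorname{erf}\left(\frac{u}{\sqrt{2}} + \frac{n|z|}{2\sigma}\right) e^{-u^2}\,du = \operatorname{erf}\left(\frac{n|z|\sqrt{2}}{2\sigma\sqrt{3}}\right)
\tag{31}
$$

or

$$
E_y(P) = \operatorname{erf}\left(\frac{n|z|\sqrt{2}}{2\sigma\sqrt{3}}\right).
\tag{32}
$$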
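The numerical step in (25) to (28) and the resulting identity (30) can be reproduced with a few lines of Python. This is a minimal sketch under stated assumptions: the helper names, the bisection used to invert erf, and the $(m, b)$ test pairs are chosen here for illustration; only $N = 200$ and $\Delta a = 0.1$ come from the text, and the grid $a_i = i\,\Delta a$ is an assumed reading of the sum.

```python
import math

def riemann_sum(m, b, N=200, da=0.1):
    """(1/sqrt(pi)) * sum_{i=-N}^{N} erf(a_i/sqrt(m) + b) exp(-a_i^2) da, with a_i = i*da."""
    total = 0.0
    for i in range(-N, N + 1):
        a = i * da
        total += math.erf(a / math.sqrt(m) + b) * math.exp(-a * a) * da
    return total / math.sqrt(math.pi)

def erf_inverse(p, lo=0.0, hi=6.0, iterations=200):
    """Invert erf on [lo, hi] by bisection (erf is increasing on this interval)."""
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if math.erf(mid) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Equation (26): the sum for m = 1, b = 1.
value = riemann_sum(1.0, 1.0)
# Equations (27)-(28): solve erf(1/sqrt(1 + c)) = value for c.
t = erf_inverse(value)
c = 1.0 / (t * t) - 1.0
print(round(value, 4), round(t, 4), round(c, 4))

# Equation (30) with c = 1, checked at a few assumed (m, b) pairs.
for m, b in [(0.5, 0.3), (2.0, 1.0), (10.0, -0.7)]:
    lhs = riemann_sum(m, b)
    rhs = math.erf(b * math.sqrt(m) / math.sqrt(m + 1.0))
    print(m, b, lhs, rhs)
```

With these settings the sum rounds to 0.6827, which gives $1/\sqrt{1 + c} \approx 0.7071$ and $c \approx 1$, in line with (26) to (28). The value $c = 1$ can also be confirmed analytically: differentiating (14) with respect to $b$ and completing the square in the exponent gives $dg/db = (2/\sqrt{\pi})\sqrt{m/(m+1)}\,e^{-b^2 m/(m+1)}$, which integrates to (30) because $g = 0$ at $b = 0$ by (19).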


4. The Probability Averaged over

the Sample Standard Deviation

1

0.9

The expectation with respect to 𝑧 can be written as

𝐸𝑧 (𝐸𝑦 (𝑃)) =

0.8

∞

𝑛 |𝑧| √2 −𝑧2 /4𝜎2

1

𝑑𝑧, (33)

)𝑒

∫ erf (

2𝜎√𝜋 −∞

2𝜎√3

where we have used the fact that the standard deviation of

𝑧 is √2𝜎 based on previous information and the fact that

var(𝑎𝑋) = 𝑎2 var(𝑋) [6]. Equation (33) can be expressed on a

semi-infinite interval as

∞

1

𝑛𝑧√2 −𝑧2 /4𝜎2

𝐸𝑧 (𝐸𝑦 (𝑃)) =

𝑑𝑧.

)𝑒

∫ erf (

𝜎√𝜋 0

2𝜎√3

(34)

𝑃

0.6

0.5

0.4

0.3

0.2

0.5

1

1.5

2

2.5

3

3.5

4

𝑛

Let 𝑞 = 𝑧/2𝜎. So, 𝑑𝑞 = 𝑑𝑧/2𝜎, and (34) becomes

𝐸𝑧 (𝐸𝑦 (𝑃)) =

0.7

2

2

2 ∞

∫ erf (𝑛𝑞√ ) 𝑒−𝑞 𝑑𝑞,

√𝜋 0

3

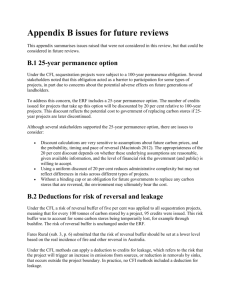

𝑃 integral

𝑃 estimate

(35)

∞

2

Figure 1: A plot of (2/√𝜋) ∫0 erf(𝑛𝑞√2/3)𝑒−𝑞 𝑑𝑞 versus the estimate 𝑀(𝛽)/𝑀 for 𝑀 = 2000.

or we can write

⟨𝑃 (𝑥𝑁=2 − 𝑛𝑠𝑁=2 ≤ 𝑥 ≤ 𝑥𝑁=2 + 𝑛𝑠𝑁=2 )⟩

=

(36)

2

2

2 ∞

∫ erf (𝑛𝑞√ ) 𝑒−𝑞 𝑑𝑞.

√𝜋 0

3
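Equation (36) can be checked numerically in two independent ways, sketched below in Python under stated assumptions. The first evaluates the integral with a simple midpoint rule (the cutoff `q_max = 10` and the step count are arbitrary choices). The second estimates the same probability by frequency: it draws two measurements and one subsequent measurement from the same normal distribution and counts how often the subsequent measurement falls inside $\bar{x} \pm ns$. The function names, the seed, and the values $\mu = 0$, $\sigma = 1$ are illustrative and not from the paper. As an additional cross-check that does not appear in the paper, the standard integral $\int_0^\infty \operatorname{erf}(\alpha q)\,e^{-q^2}\,dq = \arctan(\alpha)/\sqrt{\pi}$ gives the closed form $(2/\pi)\arctan(n\sqrt{2/3})$.

```python
import math
import random

def p_integral(n, q_max=10.0, steps=20000):
    """Midpoint-rule value of (2/sqrt(pi)) * int_0^inf erf(n q sqrt(2/3)) exp(-q^2) dq."""
    h = q_max / steps
    alpha = n * math.sqrt(2.0 / 3.0)
    total = 0.0
    for k in range(steps):
        q = (k + 0.5) * h
        total += math.erf(alpha * q) * math.exp(-q * q)
    return 2.0 / math.sqrt(math.pi) * total * h

def p_closed_form(n):
    """(2/pi) * arctan(n sqrt(2/3)); a cross-check, not a formula from the paper."""
    return 2.0 / math.pi * math.atan(n * math.sqrt(2.0 / 3.0))

def p_frequency(n, trials=2000, mu=0.0, sigma=1.0, seed=0):
    """Fraction of trials in which x lies in [xbar - n s, xbar + n s] for N = 2."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        x1 = rng.gauss(mu, sigma)
        x2 = rng.gauss(mu, sigma)
        x = rng.gauss(mu, sigma)           # the subsequent measurement
        xbar = 0.5 * (x1 + x2)
        s = abs(x1 - x2) / math.sqrt(2.0)  # sample standard deviation for N = 2
        if xbar - n * s <= x <= xbar + n * s:
            hits += 1
    return hits / trials

for n in (0.5, 1.0, 2.0, 3.0, 4.0):
    print(n, p_integral(n), p_closed_form(n), p_frequency(n))
```

The quadrature and the closed form should agree closely, while the frequency estimate fluctuates around them with a statistical error that shrinks as the number of trials grows.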

5. Computational Simulation

We can estimate $\langle P(\bar{x}_{N=2} - n s_{N=2} \le x \le \bar{x}_{N=2} + n s_{N=2}) \rangle$ computationally. Simulate the normal, independent random variables $X$ and $\{X_i\}$, for which

$$
x \in X, \qquad x_i \in X_i.
\tag{37}
$$

Let the condition $\beta$ be

$$
\beta : (\bar{x}_{N=2} - n s_{N=2} \le x \le \bar{x}_{N=2} + n s_{N=2}).
\tag{38}
$$

If $M(\beta)$ is the number of trials in which the condition $\beta$ is met and $M$ is the total number of trials, then an estimate of $\langle P(\bar{x}_{N=2} - n s_{N=2} \le x \le \bar{x}_{N=2} + n s_{N=2}) \rangle$ is given as

$$
\left\langle P(\bar{x}_{N=2} - n s_{N=2} \le x \le \bar{x}_{N=2} + n s_{N=2}) \right\rangle \approx \frac{M(\beta)}{M}.
\tag{39}
$$

Figure 1 shows a plot of $(2/\sqrt{\pi}) \int_{0}^{\infty} \operatorname{erf}(nq\sqrt{2/3})\, e^{-q^2}\,dq$ versus $M(\beta)/M$ for $M = 2000$.

6. Conclusions

We have computed the probability of a confidence interval based on minimal estimates of the mean and the standard deviation. Specifically, the estimates are based on two measurements. The effort addresses the problem of constructing a confidence interval based on minimal knowledge. The result is in the form of an integral of the error function that we have evaluated numerically and compared to a computer simulation that estimates the probability based on frequency. The comparison shows a high level of agreement that supports the validity of the derivation.

This work is part of a research effort interested in constructing confidence intervals based on various levels of knowledge. Example applications exist in geophysical exploration and cancer treatment.

Acknowledgment

Discussions with Gwendolyn Houston are appreciated.

References

[1] L. M. Houston, “The probability that a measurement falls within

a range of n standard deviations from an estimate of the mean,”

ISRN Applied Mathematics, vol. 2012, Article ID 710806, 8 pages,

2012.

[2] L. M. Houston and G. L. Kinsland, “Minimal-effort time-lapse

seismic monitoring: exploiting the relationship between acquisition and imaging in time-lapse data,” The Leading Edge, vol. 17,

no. 10, pp. 1440–1443, 1998.

[3] C. A. Perez, M. Bauer, S. Edelstein et al., “Impact of tumor

control on survival in carcinoma of the lung treated with irradiation,” International Journal of Radiation Oncology, Biology and

Physics, vol. 12, no. 4, pp. 539–547, 1986.

[4] U. Balasooriva, J. Li, and C. K. Low, “On interpreting and

extracting information from the cumulative distribution function curve: a new perspective with applications,” Australian

Senior Mathematics Journal, vol. 26, no. 1, pp. 19–28, 2012.

[5] L. M. Houston, G. A. Glass, and A. D. Dymnikov, “Sign-bit

amplitude recovery in Gaussian noise,” Journal of Seismic Exploration, vol. 19, no. 3, pp. 249–262, 2010.

[6] Y. A. Rozanov, Probability Theory: A Concise Course, Dover

Publications, New York, NY, USA, 1977.
