Achievement Growth: International and U.S. State Trends in Student Performance

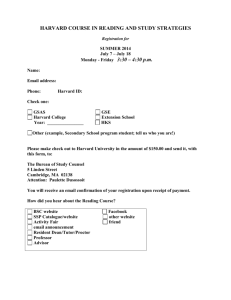
