Faster Fully Polynomial Approximation Schemes for Knapsack Problems

by

Donguk Rhee

B.Math, University of Waterloo, 2010

M.Math, University of Waterloo, 2012

SUBMITTED TO THE SLOAN SCHOOL OF MANAGEMENT

IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF

MASTER OF SCIENCE IN OPERATIONS RESEARCH

AT THE

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

June 2015

© 2015 Massachusetts Institute of Technology. All rights reserved.

Signature of Author

Sloan School of Management

May 15, 2015

Certified by

James B. Orlin

E. Pennell Brooks (1917) Professor in Management

Sloan School of Management

Thesis Supervisor

Accepted by

Patrick Jaillet

Dugald C. Jackson Professor

Department of Electrical Engineering and Computer Science

Co-director, Operations Research Center


Submitted to the Sloan School of Management on May 15, 2015 in Partial Fulfillment of the Requirements for the Degree of Master of Science in Operations Research

ABSTRACT

A fully polynomial time approximation scheme (FPTAS) is an algorithm that returns a $(1 - \epsilon)$-optimal solution to a maximization problem of size $n$ and runs in time polynomial in both $n$ and $1/\epsilon$. We develop faster FPTASs for several classes of knapsack problems.

In this thesis, we first survey the relevant literature on FPTASs for knapsack problems. We propose the use of floating point arithmetic rather than geometric rounding in order to simplify the analysis. Given $(1 - \epsilon)$-optimal solutions to a knapsack problem on disjoint subsets $S$ and $T$ of the decision variables, we show how to attain a $(1 - 1.5\epsilon)$-optimal solution to the knapsack problem on the set $S \cup T$ in $\tilde{O}(\epsilon^{-2})$ time. We use this procedure to speed up the run-time of FPTASs for

1. The Integer Knapsack Problem

2. The Unbounded Integer Knapsack Problem

3. The Multiple-Choice Knapsack Problem, and

4. The Nonlinear Integer Knapsack Problem

Using additional ideas, we develop a fast fully polynomial time randomized approximation scheme (FPRAS) for the 0-1 Knapsack Problem, which has a run-time of

$$O\left(n \min\left(\log n, \log \tfrac{1}{\epsilon}\right) + \epsilon^{-2.5} \log^3 \tfrac{1}{\epsilon}\right).$$

Thesis Supervisor: James B. Orlin

Title: E. Pennell Brooks (1917) Professor in Management

ACKNOWLEDGMENT

I would first like to thank James Orlin, my thesis adviser, for all the help he has given.

I would like to thank the Operations Research Center for providing amazing opportunities.

Finally, I would like to thank my parents, who have always fully supported my academic pursuits.

Table of Contents

1 Introduction
  1.1 Knapsack Problems
    1.1.1 Definition
    1.1.2 Variations
  1.2 Approximation Algorithms
    1.2.1 Fully Polynomial Approximation Scheme
    1.2.2 Randomized Approximation Scheme
  1.3 Organization of Thesis
2 Survey
  2.1 A Simple FPTAS for Knapsack
    2.1.1 Dynamic Programming for Knapsack
    2.1.2 Rounding Down the Profits
    2.1.3 Algorithm
  2.2 Ibarra and Kim
    2.2.1 Half Optimum
    2.2.2 Rounding Down the Profits
    2.2.3 Algorithm
  2.3 Lawler
    2.3.1 Modification of the Dynamic Program
    2.3.2 Progressive Rounding
    2.3.3 Elimination of Sorting
  2.4 Kellerer and Pferschy
    2.4.1 Progressive Rounding
    2.4.2 Improving the Dynamic Program
3 Framework for Knapsack FPTAS
  3.1 $\epsilon$-Inner Approximations
    3.1.1 Upper and Lower Inverse
    3.1.2 $\epsilon$-Inner Approximations
  3.2 Floating Point Arithmetic
  3.3 Exact Merging
    3.3.1 Merging Relation
    3.3.2 MergeAll Procedure
  3.4 Approximate Merging
    3.4.1 ApproxMerge Procedure
    3.4.2 ApproxMergeAll Procedure
  3.5 Outline of Knapsack Algorithms
    3.5.1 Outline of an Exact Algorithm
    3.5.2 Outline of a FPTAS
4 Knapsack Approximation Algorithms
  4.1 Nonlinear Knapsack Problem
    4.1.1 The Upper Inverse
    4.1.2 Profit Threshold
    4.1.3 Algorithm
    4.1.4 Correctness
    4.1.5 Running Time
  4.2 Multiple Choice Knapsack Problem
  4.3 Unbounded Knapsack Problem
    4.3.1 The Upper Inverse
    4.3.2 Profit Threshold
    4.3.3 Algorithm
    4.3.4 Correctness
    4.3.5 Running Time
  4.4 0-1 Knapsack Problem
    4.4.1 The Upper Inverse
    4.4.2 Profit Threshold
    4.4.3 Algorithm
    4.4.4 Correctness
    4.4.5 Running Time
  4.5 Integer Knapsack Problem
    4.5.1 The Upper Inverse
    4.5.2 Profit Threshold
    4.5.3 Algorithm
    4.5.4 Correctness
    4.5.5 Running Time
5 Randomized Algorithm
  5.1 Achieving $\tilde{O}(\epsilon^{-5/2})$
  5.2 Small Number of Items
  5.3 Large Number of Items
  5.4 Properties of $\psi_{T(p)}(b, \lambda)$
    5.4.1 Lagrangian Relaxation
    5.4.2 Simple Observations
    5.4.3 An Example of $\psi_{T(p)}(b, \lambda)$
    5.4.4 Evaluation of $\psi_{T(p)}(b, \lambda)$ Using Median Find
  5.5 Randomized Algorithm
6 Conclusion
Bibliography

Chapter 1

Introduction

1.1 Knapsack Problems

1.1.1 Definition

Knapsack problems are a well-known class of combinatorial optimization problems. We have $n$ items, each indexed by $i \in N := \{1, 2, \dots, n\}$. Item $i$ has weight $w_i$ and profit $p_i$. The 0-1 Knapsack Problem is to select a subset of items that maximizes the total profit under the constraint that the total weight does not exceed a given capacity $C$:

$$
\begin{aligned}
\max \quad & z(C) = \sum_{i \in N} p_i x_i \\
\text{s.t.} \quad & \sum_{i \in N} w_i x_i \le C \\
& x_i \in \{0, 1\} \quad \text{for all } i \in N.
\end{aligned}
$$

Knapsack problems are commonly found in practice. Suppose there are $n$ different investment opportunities, where investment $i$ requires $w_i$ dollars and returns a profit of $p_i$. Then the problem of maximizing the profit given a capital of $C$ is a knapsack problem. Besides capital budgeting, the knapsack problem can model other real-life problems such as cargo loading and cutting stock [8]. Its hardness was once proposed as the basis for secure knapsack cryptosystems [10]. A study in 1998 ranked the knapsack problem as having the fourth-largest need for implementations by industrial users [12].

It is well known that knapsack problems are NP-hard. However, their combinatorial structure and simplicity have attracted many theoreticians and practitioners to research these problems, dating back to 1897 [9]. This has resulted in many published exact and approximation algorithms. In this thesis, we establish a framework for approximation algorithms for variants of knapsack problems and use it to improve many of the known run-times.


1.1.2 Variations

We will consider several extensions and generalizations of the 0-1 Knapsack Problem. One way to generalize the problem is to allow multiple copies of an item to be selected. Suppose we have $l_i$ copies of item $i$. Then the resulting problem is formulated as follows:

Integer Knapsack Problem

$$
\begin{aligned}
\max \quad & \sum_{i \in N} p_i x_i \\
\text{s.t.} \quad & \sum_{i \in N} w_i x_i \le C \\
& 0 \le x_i \le l_i, \; x_i \in \mathbb{Z} \quad \text{for all } i \in N.
\end{aligned}
$$

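If there is no bound at all on the number of copies of each item, the resulting generalization is called the Unbounded Knapsack Problem.

Unbounded Knapsack Problem

$$
\begin{aligned}
\max \quad & \sum_{i \in N} p_i x_i \\
\text{s.t.} \quad & \sum_{i \in N} w_i x_i \le C \\
& x_i \in \mathbb{N}_0 \quad \text{for all } i \in N.
\end{aligned}
$$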

An orthogonal way to modify the problem is to restrict how the items can be selected. Instead of making a separate choice for each item, we group the items into sets and choose exactly one item from each set. Let $S_1, S_2, \dots, S_m$ be a partition of $N$.

Multiple Choice Knapsack Problem

$$
\begin{aligned}
\max \quad & \sum_{i \in N} p_i x_i \\
\text{s.t.} \quad & \sum_{i \in N} w_i x_i \le C \\
& \sum_{i \in S_j} x_i = 1 \quad \text{for all } j \in [1, m] \\
& x_i \in \{0, 1\} \quad \text{for all } i \in N.
\end{aligned}
$$


Finally, the most general problem we will consider is the Nonlinear Knapsack Problem. Instead of having a fixed profit assigned to each item, we have a profit curve for each item: we let $p_i(x_i)$ be the profit of selecting a weight of $x_i$ of item $i$.

Nonlinear Knapsack Problem

$$
\begin{aligned}
\max \quad & \sum_{i \in N} p_i(x_i) \\
\text{s.t.} \quad & \sum_{i \in N} x_i \le C \\
& x_i \in \mathbb{N}_0 \quad \text{for all } i \in N.
\end{aligned}
$$

Note that in the above formulation, the coefficient of $x_i$ in the constraint is 1, because $x_i$ is now the weight. The problem in which $x_i$ is the number of copies of item $i$ can be transformed into the Nonlinear Knapsack Problem given above. Thus, it is sufficient to consider this formulation.
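One way to carry out this transformation for the Integer Knapsack Problem is to let $p_i(x)$ be the profit of the largest number of copies of item $i$ (at most $l_i$) that fit in a weight budget of $x$. The following Python sketch is our illustration under that assumption, not a procedure from the thesis; the names `to_profit_curve`, `solve_nonlinear`, and `solve_integer_brute_force` are hypothetical. It checks on a tiny instance that both formulations have the same optimal value.

```python
from itertools import product
from typing import Callable, List, Tuple

# Hypothetical encoding of a bounded item: (profit per copy, weight per copy, copy bound l_i).
Item = Tuple[int, int, int]


def to_profit_curve(item: Item) -> Callable[[int], int]:
    """Return p_i(x): the profit item i earns from a weight budget of x,
    using as many whole copies as fit (at most l_i of them)."""
    profit, weight, bound = item
    return lambda x: profit * min(x // weight, bound)


def solve_nonlinear(curves: List[Callable[[int], int]], C: int) -> int:
    """Exact DP for max sum_i p_i(x_i) s.t. sum_i x_i <= C, x_i in N_0 (O(n C^2) time)."""
    best = [0] * (C + 1)  # best[c]: optimum over the curves processed so far with budget c
    for curve in curves:
        best = [max(best[c - x] + curve(x) for x in range(c + 1)) for c in range(C + 1)]
    return best[C]


def solve_integer_brute_force(items: List[Item], C: int) -> int:
    """Enumerate every copy vector 0 <= y_i <= l_i (only for tiny instances)."""
    best = 0
    for ys in product(*(range(bound + 1) for _, _, bound in items)):
        if sum(w * y for (_, w, _), y in zip(items, ys)) <= C:
            best = max(best, sum(p * y for (p, _, _), y in zip(items, ys)))
    return best


items = [(3, 2, 2), (5, 3, 1), (4, 4, 3)]
curves = [to_profit_curve(item) for item in items]
assert solve_nonlinear(curves, 9) == solve_integer_brute_force(items, 9) == 12
```

The curve only ever rounds the budget down to whole copies, so any nonlinear solution maps back to a feasible copy vector with the same profit, and vice versa.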

We will consider these variations of the knapsack problem and provide a fast approximation algorithm for each.

1.2 Approximation Algorithms

Since all these knapsack problems are NP-hard, none of them has a polynomial time algorithm unless $P = NP$. However, it is possible to find, in polynomial time, a solution with a small relative error. This is the idea behind approximation algorithms.

1.2.1 Fully Polynomial Approximation Scheme

Suppose we have a maximization problem $\max_x z(x)$. Suppose that the size of the instance of the problem is $n$ and that $x^*$ is an optimal solution.

Definition 1.2.1. A polynomial time approximation scheme (PTAS) is an algorithm that takes an instance of the maximization problem and a parameter $\epsilon > 0$ with the following properties:

• It is guaranteed to produce a solution $x'$ such that $z(x') \ge (1 - \epsilon) z(x^*)$.

• It runs in polynomial time in $n$ for every fixed value of $\epsilon$.

A fully polynomial time approximation scheme (FPTAS) is a PTAS with polynomial run-time in both $n$ and $1/\epsilon$. Most of the algorithms we present in this thesis will be FPTASs.


1.2.2 Randomized Approximation Scheme

The last chapter of this thesis considers a randomized approximation algorithm for the 0-1 Knapsack Problem. Our randomized algorithm is faster than the best deterministic FPTAS by a factor of $\tilde{O}(\epsilon^{-1/2})$.

Definition 1.2.2. A fully polynomial time randomized approximation scheme (FPRAS) is an algorithm that takes an instance of the maximization problem and a parameter $\epsilon > 0$ with the following properties:

• With probability at least 50%, it produces a solution $x'$ such that $z(x') \ge (1 - \epsilon) z(x^*)$.

• It runs in polynomial time in $n$ and $1/\epsilon$.

By running an FPRAS $k$ times independently and keeping the best of the $k$ solutions, we can obtain a $(1 - \epsilon)$-optimal solution with probability at least $1 - 2^{-k}$.
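The amplification argument can be sketched in Python as below. This is a minimal illustration, assuming a hypothetical `run_once` callable that performs one independent run of the randomized scheme and returns a (value, solution) pair; it is not code from the thesis.

```python
from typing import Callable, List, Tuple

# A solution is (objective value, chosen item indices); the encoding is illustrative.
Solution = Tuple[float, List[int]]


def amplify(run_once: Callable[[], Solution], k: int) -> Solution:
    """Call a randomized scheme k times and keep the best solution found.

    If each call independently returns a (1 - eps)-optimal solution with probability
    at least 1/2, then all k calls fail with probability at most 2**-k, so the kept
    solution is (1 - eps)-optimal with probability at least 1 - 2**-k.
    """
    if k < 1:
        raise ValueError("k must be positive")
    return max((run_once() for _ in range(k)), key=lambda sol: sol[0])


def failure_probability_bound(k: int) -> float:
    """Upper bound on the probability that all k independent runs fail."""
    return 0.5 ** k
```

Keeping the maximum is enough because a single successful run already meets the $(1 - \epsilon)$ guarantee, and the best of several runs can only be better.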

1.3 Organization of Thesis

The rest of the thesis is organized as follows. The second chapter briefly surveys approximation algorithms for knapsack problems. We start from a very simple approximation algorithm and then look at the first FPTAS for the knapsack problem, by Ibarra and Kim. We also study the improvement by Lawler and the fastest known FPTAS, by Kellerer and Pferschy. In Chapter 3, we build a framework in which we develop our faster approximation algorithms. Background on the concepts of lower and upper inverses, inner approximations, and floating point arithmetic is presented. We then develop procedures that merge solutions of knapsack problems on disjoint subsets of items; these procedures are used in the dynamic programming steps of our algorithms. In Chapter 4, we present FPTASs for various knapsack problems. We approximately solve the Nonlinear Knapsack Problem in $O(n\epsilon^{-2}\log(n/\epsilon) + (n/\epsilon)\log(n/\epsilon))$ time, the Multiple-Choice Knapsack Problem in $O(n \log n + nm\epsilon^{-2}\log(m/\epsilon))$ time, the Unbounded Knapsack Problem in $O(n + \epsilon^{-2}\log^4(1/\epsilon))$ time, the 0-1 Knapsack Problem in $O(n \log n + \epsilon^{-3}\log(1/\epsilon))$ time, and the Integer Knapsack Problem in $O(n \log n + \epsilon^{-3}\log(1/\epsilon))$ time. Finally, Chapter 5 presents a faster randomized approximation algorithm for the 0-1 Knapsack Problem, achieving a run-time of $O(n \log n + \epsilon^{-5/2}\log^3(1/\epsilon))$.


Chapter 2

Survey

2.1 A Simple FPTAS for Knapsack

We begin with a simple FPTAS for the 0-1 Knapsack Problem with a run-time of $O(n^3/\epsilon)$. For more details on the FPTAS shown in this section, see [4] [11].

2.1.1 Dynamic Programming for Knapsack

For the rest of the thesis, we assume that an instance of the 0-1 Knapsack Problem contains no items with weight greater than $C$. Such items cannot be part of any feasible solution and can be removed in $O(n)$ time, so this assumption is safe.

Let $P$ be an upper bound on the (nonnegative integer) profits $p_i$ in an instance of the 0-1 Knapsack Problem. Then the possible total profits lie in the range $[0, nP]$, where the notation $[a, b]$ refers to the set of integers $\{a, a + 1, \dots, b\}$. Suppose that, for every possible total profit, we have solved the problem of achieving that profit with minimum weight. Then we can easily add one more item to the solution by making, for each profit goal, the binary decision of whether to choose the new item.

To be more precise, let $G(j, p)$ be the minimum total weight under the constraints that only the first $j$ items can be used and that the total profit is at least $p$. That is,

$$
G(j, p) := \min \Big\{ \sum_{i \le j} w_i x_i \; : \; \sum_{i \le j} p_i x_i \ge p, \; x_i \in \{0, 1\} \Big\}.
$$

Then $G(j, p)$ satisfies the following recursion:

$$
G(0, p) = \begin{cases} 0 & \text{if } p = 0, \\ \infty & \text{if } p > 0, \end{cases}
\qquad
G(j, p) = \min\{ G(j-1, p),\; G(j-1, p - p_j) + w_j \},
$$

where $G(j, p) = G(j, 0)$ for $p < 0$.


Using this recursion, $G(j, p)$ can be computed for all $j \in N$ and $p \in [0, nP]$ in $O(n^2 P)$ time. To get the optimal objective value of the 0-1 Knapsack Problem, scan the values of $G(n, p)$ to find the largest $p$ such that $G(n, p) \le C$.

An optimal solution can be retrieved if we keep track, at each recursion step, of whether item $j$ was used to attain $G(j, p)$. Then we can backtrack from $G(n, p)$ to determine which items were chosen.
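As an illustration, the recursion and the backtracking step can be sketched in Python as follows. This is a minimal sketch, assuming nonnegative integer profits; the function name `exact_knapsack_by_profit` is hypothetical, and using $\sum_i p_i$ (which is at most $nP$) as the profit range is our choice rather than part of the text.

```python
import math
from typing import List, Tuple


def exact_knapsack_by_profit(profits: List[int], weights: List[int], C: int) -> Tuple[int, List[int]]:
    """Exact 0-1 knapsack via G(j, p): the minimum weight of a subset of the first j items
    with total profit at least p. Assumes nonnegative integer profits; O(n * U) time."""
    n = len(profits)
    U = sum(profits)  # no subset can exceed this total profit (and U <= nP)
    G = [[math.inf] * (U + 1) for _ in range(n + 1)]
    G[0][0] = 0
    used = [[False] * (U + 1) for _ in range(n + 1)]  # used[j][p]: item j attains G(j, p)
    for j in range(1, n + 1):
        pj, wj = profits[j - 1], weights[j - 1]
        for p in range(U + 1):
            skip = G[j - 1][p]
            take = G[j - 1][max(p - pj, 0)] + wj  # G(j-1, p) = G(j-1, 0) for p < 0
            if take < skip:
                G[j][p], used[j][p] = take, True
            else:
                G[j][p] = skip
    best = max(p for p in range(U + 1) if G[n][p] <= C)
    chosen, p = [], best
    for j in range(n, 0, -1):  # backtrack from G(n, best)
        if used[j][p]:
            chosen.append(j - 1)
            p = max(p - profits[j - 1], 0)
    return best, sorted(chosen)


print(exact_knapsack_by_profit([100, 120, 140], [7, 6, 8], 15))  # (260, [1, 2])
```

The table has $(n+1)(U+1)$ entries, each filled in constant time, which matches the $O(n^2 P)$ bound when $U \le nP$.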

2.1.2 Rounding Down the Profits

We now have a polynomial algorithm for the 0-1 Knapsack Problem when the profits are polynomially bounded in $n$. To get an FPTAS, consider scaling down the profits as $p'_i = \lfloor p_i / \delta \rfloor$. The result is a problem that resembles the original problem but has a much smaller range of item profits.

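Our goal is for the floor operation to introduce a relative error of at most $\epsilon$ in the total profit. Suppose $x$ is a solution with objective value at least $P$; an optimal solution has this property when $P = \max_i p_i$, since every item fits in the knapsack by itself. For one item, we have $p_i - \delta p'_i = p_i - \delta \lfloor p_i/\delta \rfloor < \delta$. Thus the relative error is

$$
\frac{\sum_{x_i = 1} (p_i - \delta p'_i)}{\sum_{x_i = 1} p_i} < \frac{\sum_{i} \delta x_i}{\sum_{x_i = 1} p_i} \le \frac{\delta n}{P}.
$$

We want $\delta n / P \le \epsilon$, so we pick $\delta = \epsilon P / n$. It is then easy to prove that an optimal solution to the rounded-down problem is a $(1 - \epsilon)$-approximate solution to the original problem.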

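The dynamic programming algorithm can then be applied to the scaled-down problem. Each rounded profit is at most $P/\delta = n/\epsilon$, so the run-time becomes $O(n^2 \cdot n/\epsilon) = O(n^3/\epsilon)$. Note that the run-time is polynomial in both $n$ and $1/\epsilon$. Thus we now have an FPTAS for the 0-1 Knapsack Problem.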

2.1.3 Algorithm

0-1 Knapsack Approximation Algorithm (Slow)

Rounding
    Let $P = \max_{i \in N} p_i$, $\delta = \epsilon P / n$.
    For $i$ from 1 to $n$: $p'_i = \lfloor p_i / \delta \rfloor$.
    Let $P' = \lfloor P / \delta \rfloor$.

Dynamic programming
    Let $G(0, 0) = 0$.
    For $p'$ from 1 to $nP'$: $G(0, p') = \infty$.
    For $i$ from 1 to $n$:
        For $p'$ from 0 to $nP'$:
            $G(i, p') = \min\{G(i-1, p'),\ G(i-1, p' - p'_i) + w_i\}$

Output
    For $p'$ from 0 to $nP'$:
        If $G(n, p') > C$: Return $\lceil (p' - 1)\delta \rceil$.
    Return $\lceil nP'\delta \rceil$.
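A runnable version of this slow FPTAS might look like the Python sketch below. It is our illustration, not the thesis's code: the name `fptas_simple` and the brute-force checker are hypothetical, exact rational arithmetic (`fractions.Fraction`) is used so the floor operations match the analysis, and instead of returning the rounded value $\lceil (p'-1)\delta \rceil$ it backtracks and returns the true profit of the chosen subset, which is at least that value.

```python
import math
from fractions import Fraction
from itertools import combinations
from typing import List, Tuple


def fptas_simple(profits: List[int], weights: List[int], C: int, eps: Fraction) -> Tuple[int, List[int]]:
    """Sketch of the slow FPTAS: delta = eps*P/n, p'_i = floor(p_i/delta), then the
    profit-indexed DP on the rounded profits. Returns the true profit of the chosen set."""
    items = [i for i in range(len(profits)) if weights[i] <= C]  # drop items that never fit
    if not items or max(profits[i] for i in items) == 0:
        return 0, []
    n = len(items)
    delta = Fraction(eps) * max(profits[i] for i in items) / n
    rounded = [math.floor(profits[i] / delta) for i in items]  # each at most n/eps
    U = sum(rounded)
    G = [0] + [math.inf] * U  # G[p]: min weight reaching rounded profit >= p
    used = []                 # used[j][p]: True if item j was taken to attain G(j, p)
    for j, q in enumerate(rounded):
        w = weights[items[j]]
        new_G, took = G[:], [False] * (U + 1)
        for p in range(U + 1):
            candidate = G[max(p - q, 0)] + w
            if candidate < new_G[p]:
                new_G[p], took[p] = candidate, True
        G = new_G
        used.append(took)
    best = max(p for p in range(U + 1) if G[p] <= C)
    chosen, p = [], best
    for j in range(n - 1, -1, -1):  # backtrack from G(n, best)
        if used[j][p]:
            chosen.append(items[j])
            p = max(p - rounded[j], 0)
    chosen.sort()
    return sum(profits[i] for i in chosen), chosen


def brute_force(profits: List[int], weights: List[int], C: int) -> int:
    """Exact optimum by enumeration, for checking tiny instances."""
    return max(
        sum(profits[i] for i in S)
        for r in range(len(profits) + 1)
        for S in combinations(range(len(profits)), r)
        if sum(weights[i] for i in S) <= C
    )


print(fptas_simple([100, 120, 140], [7, 6, 8], 15, Fraction(1, 4)))  # (260, [1, 2])
```

Returning the true profit of the backtracked subset keeps the $(1 - \epsilon)$ guarantee: that subset maximizes the rounded profit, and rounding loses less than $n\delta = \epsilon P \le \epsilon z^*$.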


2.2 Ibarra and Kim

Ibarra and Kim's result in 1975 is the first non-trivial FPTAS for the 0-1 Knapsack Problem [2]. Their algorithm has a run-time of $O(n \log n + \epsilon^{-4}\log(1/\epsilon))$. All subsequent work on FPTASs for knapsack problems builds on theirs, so it is important to examine it. Our presentation of this algorithm is modified to match the presentation of our main result, and it is simplified by omitting the details concerning space complexity.

Their algorithm introduces two new ideas on top of the dynamic programming FPTAS. The first is that the items with small profits can be set aside and handled quickly, with small error, by a greedy algorithm. The second idea is to scale more aggressively by obtaining a better rough approximation of the optimal objective value. Recall that the scaling factor $\delta$ was constrained by the lower bound on the optimal objective value ($P$ in the previous algorithm). With a better lower bound, we can take $\delta$ to be larger, reducing the number of profits to be considered. Similarly, a good upper bound on the optimal objective value also reduces the number of profits to be considered ($nP$ in the previous algorithm).

2.2.1 Half Optimum

In the 0-1 Knapsack Problem, a 1/2-approximate solution can be found in $O(n \log n)$ time by greedily picking items in order of profit density $p_i / w_i$ and comparing the result with the first item that does not fit. We first note a simple lemma about the greedy algorithm for the 0-1 Knapsack Problem. This lemma will come in handy in a few other places as well.

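Lemma 2.2.1. Consider an instance of the 0-1 Knapsack Problem with $p_i / w_i \ge p_{i+1} / w_{i+1}$ for all $i$. Suppose that $k$ was found to satisfy $\sum_{i=1}^{k} w_i \le C \le \sum_{i=1}^{k+1} w_i$. Then the solution

$$
x_i = \begin{cases} 1 & i \le k, \\ 0 & i > k \end{cases}
$$

is within $p_{k+1}$ of the optimal. That is, $z^* \le p_{k+1} + \sum_{i=1}^{k} p_i$, where $z^*$ is the optimal objective value.

Proof. If the capacity constraint were relaxed by increasing $C$ to $\sum_{i=1}^{k+1} w_i$, then $x$ with $x_{k+1} = 1$ would be an optimal solution: items $1, \dots, k+1$ have the highest densities and exactly fill the relaxed capacity, so this solution is optimal even for the linear programming relaxation. Since relaxing the constraint cannot decrease the optimal value, $z^*$ cannot be greater than $p_{k+1} + \sum_{i=1}^{k} p_i$.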

If no such $k$ exists, all items fit in the knapsack and the problem is trivial. Otherwise, let $2z_L := \sum_{i=1}^{k+1} p_i$. Picking the first $k$ items is a feasible solution, so $\sum_{i=1}^{k} p_i \le z^*$. Picking just the $(k+1)$th item is also feasible, since $w_{k+1} \le C$, so $p_{k+1} \le z^*$. Therefore, $z_L = \frac{1}{2}\sum_{i=1}^{k} p_i + \frac{1}{2} p_{k+1} \le z^*$. On the other hand, $z^* \le 2z_L$ by Lemma 2.2.1. Combining the two inequalities, we have $z_L \le z^* \le 2z_L$, so $z_L$ is a half-approximation of the optimal objective value $z^*$.
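The computation of $z_L$ can be sketched in Python as follows. This is a minimal sketch, assuming positive weights with $w_i \le C$ for every item; the function name `half_optimum` is hypothetical. Densities are compared as exact fractions to avoid floating point ties.

```python
from fractions import Fraction
from typing import List


def half_optimum(profits: List[int], weights: List[int], C: int) -> Fraction:
    """z_L with z_L <= z* <= 2 z_L: sort by density p_i/w_i, find the first item k+1 that
    does not fit, and return (p_1 + ... + p_k + p_{k+1}) / 2.
    Assumes 0 < w_i <= C for every item."""
    order = sorted(range(len(profits)), key=lambda i: Fraction(profits[i], weights[i]), reverse=True)
    load = prefix = 0
    for i in order:
        if load + weights[i] > C:  # item i is the critical item k+1
            return Fraction(prefix + profits[i], 2)
        load += weights[i]
        prefix += profits[i]
    return Fraction(prefix, 2)  # every item fits, so z* = prefix


print(half_optimum([100, 120, 140], [7, 6, 8], 15))  # 180
```

On the instance of Example 2.3.1 below, the greedy prefix is items 2 and 3 (profit 260) and the critical item is item 1 (profit 100), so $z_L = 180 \le z^* = 260 \le 360$.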


2.2.2 Rounding Down the Profits

We say that item $i$ is cheap if $p_i < \epsilon z_L$ and expensive otherwise. We would like to scale down the expensive profits as $p'_i = \lfloor p_i / \delta \rfloor$ so that the rounding introduces at most an $\epsilon$ multiplicative error. For an expensive item we have

$$
p_i - \delta p'_i = p_i - \delta \lfloor p_i/\delta \rfloor < \delta = p_i \cdot \frac{\delta}{p_i} \le p_i \cdot \frac{\delta}{\epsilon z_L},
$$

so the multiplicative error from the rounding is at most $\delta / (\epsilon z_L)$. Let us pick $\delta = \epsilon^2 z_L$. Now our solution will have a multiplicative error of at most $\epsilon$ within the set of expensive items. It will have an additive error of at most $\epsilon z_L$ within the set of cheap items, because of Lemma 2.2.1. Since $z^* \le 2z_L$, the overall error is bounded by $\epsilon z^* + \epsilon z_L \le 3\epsilon z_L \le 3\epsilon z^*$.

2.2.3 Algorithm

0-1 Knapsack Approximation Algorithm (Ibarra and Kim)

Initial sorting and rounding
    Sort the items such that $p_i / w_i \ge p_{i+1} / w_{i+1}$ for all $i \in [1, n-1]$.
    Let $z_L = \max\{\sum_{i \le k+1} p_i : \sum_{i \le k} w_i \le C\} / 2$.
    Let $S_{\text{cheap}} := \{i \in N : p_i < \epsilon z_L\}$ and $S_{\text{expensive}} := N \setminus S_{\text{cheap}}$.
    Let $\delta = \epsilon^2 z_L$.
    For $i$ in $S_{\text{expensive}}$: $p'_i = \lfloor p_i / \delta \rfloor$.
    Let $P' = \lfloor 2z_L / \delta \rfloor$.

Dynamic programming on expensive items
    Without loss of generality, let $S_{\text{expensive}} = [1, m]$ (the cheap items $m+1, \dots, n$ stay in density order).
    Let $G(0, 0) = 0$.
    For $p'$ from 1 to $P'$: $G(0, p') = \infty$.
    For $i$ from 1 to $m$:
        For $p'$ from 0 to $P'$:
            $G(i, p') = \min\{G(i-1, p'),\ G(i-1, p' - p'_i) + w_i\}$.

Greedy on cheap items
    Let $b_{\text{best}} = 0$.
    For $p'$ from 0 to $P'$:
        If $G(m, p') \le C$:
            Find $k$ such that $G(m, p') + \sum_{m < i \le k} w_i \le C < G(m, p') + \sum_{m < i \le k+1} w_i$.
            If $b_{\text{best}} < \delta p' + \sum_{m < i \le k} p_i$, then $b_{\text{best}} = \delta p' + \sum_{m < i \le k} p_i$.
    Return $b_{\text{best}}$.
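A value-only Python sketch of this algorithm is given below. It is our illustration, not Ibarra and Kim's code: the name `ibarra_kim_value` is hypothetical, it assumes $0 < w_i \le C$ for every item, it uses exact fractions, and it runs the greedy on cheap items by a direct scan for each $p'$ rather than by any faster search.

```python
import math
from fractions import Fraction
from typing import List


def ibarra_kim_value(profits: List[int], weights: List[int], C: int, eps: Fraction) -> Fraction:
    """Value-only sketch of the algorithm above. Assumes 0 < w_i <= C for every item.
    The returned value never exceeds z* and is at least (1 - 3 eps) z*."""
    order = sorted(range(len(profits)), key=lambda i: Fraction(profits[i], weights[i]), reverse=True)
    # Initial step: z_L = (p_1 + ... + p_k + p_{k+1}) / 2 in the greedy order.
    load = prefix = 0
    z_L = None
    for i in order:
        if load + weights[i] > C:
            z_L = Fraction(prefix + profits[i], 2)
            break
        load += weights[i]
        prefix += profits[i]
    if z_L is None:  # every item fits, so taking all of them is optimal
        return Fraction(prefix)
    cheap = [i for i in order if profits[i] < eps * z_L]  # still in density order
    expensive = [i for i in order if profits[i] >= eps * z_L]
    delta = eps * eps * z_L
    P_r = math.floor(2 * z_L / delta)  # P' in the text
    # G[p]: minimum weight of expensive items whose rounded profit is at least p.
    G = [0] + [math.inf] * P_r
    for i in expensive:
        q = math.floor(profits[i] / delta)
        G = [min(G[p], G[max(p - q, 0)] + weights[i]) for p in range(P_r + 1)]
    best = Fraction(0)
    for p in range(P_r + 1):
        if G[p] > C:
            continue
        room, gained = C - G[p], 0
        for i in cheap:  # greedy prefix of the cheap items
            if weights[i] > room:
                break
            room -= weights[i]
            gained += profits[i]
        best = max(best, delta * p + gained)
    return best


print(ibarra_kim_value([100, 120, 140], [7, 6, 8], 15, Fraction(1, 10)))  # 1287/5
```

On the instance of Example 2.3.1 all items are expensive, the best rounded profit is $66 + 77 = 143$ with $\delta = 9/5$, and the reported lower bound $257.4$ is within the $3\epsilon$ guarantee of the optimum 260.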


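The bottlenecks in running time are the sorting (which takes $O(n \log n)$ time) and the dynamic programming (which takes $O(mP') = O(n\epsilon^{-2})$ time). Therefore, the run-time is $O(n \log n + n\epsilon^{-2})$. However, note that the total rounded profit of any feasible solution is at most $\lfloor z^*/\delta \rfloor \le P'$, so an optimal solution cannot contain more than $P'/p'_i$ items with rounded profit $p'_i$. Thus, among the items with the same rounded profit, it is sufficient to consider only the $\lfloor P'/p'_i \rfloor$ items of least weight. Summing over the possible rounded profit values $q$, the total number of items to consider is at most

$$
\sum_{q = 1/\epsilon}^{1/\epsilon^2} \left\lfloor \frac{P'}{q} \right\rfloor \approx P' \int_{1/\epsilon}^{1/\epsilon^2} \frac{1}{x}\,dx = O\left(\epsilon^{-2}\log(1/\epsilon)\right).
$$

Therefore, the run-time can be improved to $O(n \log n + \epsilon^{-4}\log(1/\epsilon))$.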
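The pruning step can be sketched in Python as follows. This is a minimal sketch, assuming the rounded profits of the expensive items are already computed and are all at least 1; the function name `prune_expensive` is hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def prune_expensive(rounded: List[int], weights: List[int], P_r: int) -> List[int]:
    """Return the indices of the expensive items worth keeping: for each rounded profit q,
    only the floor(P_r / q) lightest items, since a solution whose total rounded profit
    is at most P_r cannot use more items of rounded profit q than that.
    Assumes every rounded profit q is at least 1."""
    by_profit: Dict[int, List[int]] = defaultdict(list)
    for i, q in enumerate(rounded):
        by_profit[q].append(i)
    kept: List[int] = []
    for q, indices in by_profit.items():
        indices.sort(key=lambda i: weights[i])
        kept.extend(indices[: P_r // q])
    return sorted(kept)


print(prune_expensive([5, 5, 5, 7], [3, 1, 2, 1], 10))  # [1, 2, 3]
```

With $P' = 10$, at most two items of rounded profit 5 can be useful, so the heaviest one (index 0) is dropped, while the single item of rounded profit 7 is kept.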

2.3 Lawler

Lawler's work in 1979 [5] introduces two tweaks to the algorithm proposed by Ibarra and Kim. The first idea is to round large profits more aggressively, gradually increasing the rounding factor with the size of the individual profit. The second idea eliminates the sorting by density, improving the first term of the run-time from $O(n \log n)$ to $O(n \min(\log n, \log(1/\epsilon)))$. The overall run-time ends up being $O(n \min(\log n, \log(1/\epsilon)) + \epsilon^{-4})$.

2.3.1 Modification of the Dynamic Program

Because of the progressive rounding used in Lawler's algorithm, it is harder to work with the previous dynamic program, in which functions are defined on $[0, b]$ for some $b$. We will skip profit values as the profits increase, further reducing the number of possible profits and thus speeding up the algorithm.

Instead of keeping track of a function that maps each profit to the minimum weight subject to the profit requirement, we now keep track of a list of pairs that are the extreme points on the boundary of the feasible set. Initially, the list only has $(0, 0)$. As we iterate over the items, the list contains pairs of the form $(\sum_{i \in S} p_i, \sum_{i \in S} w_i)$ for some set $S \subseteq N$. On iteration $i$, make a new list by creating a pair $(b + p_i, c + w_i)$ for each pair $(b, c)$, and then merge the new list into the list. If we kept this up, we would end up with $2^n$ pairs that list all possible solutions. This is obviously bad, so we trim the list during the merge at every iteration by removing dominated pairs. To be precise, if both $(b, c)$ and $(b', c')$ are in the list where $c' \ge c$ and $b' \le b$, then the pair $(b', c')$ is removed. Additionally, we can remove any pair $(b, c)$ with $c > C$. At the end of all iterations, the optimal value is the largest $b$ among the pairs $(b, c)$ with $c \le C$.


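Example 2.3.1. Suppose $n = 3$, $C = 15$, $p_1 = 100$, $p_2 = 120$, $p_3 = 140$, and $w_1 = 7$, $w_2 = 6$, $w_3 = 8$. Then the list is

Iteration 0: (0, 0)
Iteration 1: (0, 0), (100, 7)
Iteration 2: (0, 0), (120, 6), (220, 13)
Iteration 3: (0, 0), (120, 6), (140, 8), (220, 13), (260, 14), (360, 21)

In iteration 2, the pair (100, 7) is removed because it is dominated by (120, 6). The pair (360, 21) exceeds the capacity $C = 15$ (and could have been removed), so the optimal value is 260.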
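The list-based dynamic program can be sketched in Python as below. This is our illustration, not Lawler's code; the names `merge_and_trim` and `lawler_list_dp` are hypothetical. Unlike the listing in Example 2.3.1, the sketch discards pairs with $c > C$ during the merge, so (360, 21) never appears. The merge uses `heapq.merge`, which runs in linear time because both input lists are already sorted by weight.

```python
import heapq
from typing import List, Tuple

Pair = Tuple[int, int]  # (total profit b, total weight c)


def merge_and_trim(old: List[Pair], new: List[Pair], C: int) -> List[Pair]:
    """Merge two lists sorted by weight, dropping pairs with c > C and dominated pairs.
    A pair is dominated if some pair with no more weight has at least as much profit."""
    result: List[Pair] = []
    for b, c in heapq.merge(old, new, key=lambda pair: (pair[1], -pair[0])):
        if c > C:
            break  # all remaining pairs are heavier still
        if result and b <= result[-1][0]:
            continue  # dominated by the last kept (lighter or equal) pair
        result.append((b, c))
    return result


def lawler_list_dp(profits: List[int], weights: List[int], C: int) -> Tuple[int, List[Pair]]:
    """Return the optimal value and the final list of non-dominated feasible pairs."""
    pairs: List[Pair] = [(0, 0)]
    for p, w in zip(profits, weights):
        shifted = [(b + p, c + w) for b, c in pairs]  # add the item to every kept pair
        pairs = merge_and_trim(pairs, shifted, C)
    return max(b for b, _ in pairs), pairs


print(lawler_list_dp([100, 120, 140], [7, 6, 8], 15))
# (260, [(0, 0), (120, 6), (140, 8), (220, 13), (260, 14)])
```

Because the kept pairs have strictly increasing weight and profit, the last pair of the final list carries the optimal value.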

This algorithm may be written differently, but it is in fact the same as the previous dynamic programming algorithm. The only difference is that only the extreme points are stored, as opposed to every point of the function. The run-time is the same: $O(n^2 P)$.

The optimal solution can be encoded in this list by keeping a pointer to the “parent” pair as before. We omit the details of retrieving the optimal solution and of the space complexity here; they can be found in Lawler's paper.

2.3.2 Progressive Rounding

Recall the integral in the run-time analysis of Ibarra and Kim's algorithm. Let us take a closer look at this count of relevant expensive items.

The rounded profits lie in the interval $[1/\epsilon, 1/\epsilon^2)$. In the interval $[1/\epsilon, 2/\epsilon)$, having more than $P'/(1/\epsilon) = 1/\epsilon$ items of each profit is not useful, because their total profit would exceed the bound $P'$ on the optimal value. Thus, we only care about $(1/\epsilon)(1/\epsilon) = 1/\epsilon^2$ items in this interval, and the heavier items can be discarded without consideration. Similarly, the interval $[2/\epsilon, 4/\epsilon)$ has $(2/\epsilon)(1/(2\epsilon)) = 1/\epsilon^2$ relevant items, $[4/\epsilon, 8/\epsilon)$ has $(4/\epsilon)(1/(4\epsilon)) = 1/\epsilon^2$, and so on. Since there are $O(\log(1/\epsilon))$ such intervals, the total number of relevant expensive items is $O(\epsilon^{-2}\log(1/\epsilon))$. This is what the integral approximated.

Lawler observed that more expensive items can be rounded more aggressively so that the number of possible profits is smaller. This reduces the number of relevant items and thus improves the run-time. Instead of rounding $p'_i = \lfloor p_i/\delta \rfloor$ uniformly, let $p'_i = \lfloor p_i/(2^k\delta) \rfloor 2^k$ for $p_i/\delta \in [2^k/\epsilon, 2^{k+1}/\epsilon)$, rounding progressively with a bigger factor as the profits increase. The rounding error is less than $2^k\delta \le \epsilon p_i$, so the relative error of the rounding is still less than $\epsilon$, and the previous proof that bounds the approximation error remains intact.

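With the improved rounding, the interval $[1/\epsilon, 2/\epsilon)$ still contains every integer profit, so it has $1/\epsilon^2$ relevant items. But the next interval $[2/\epsilon, 4/\epsilon)$ only has $1/\epsilon$ possible profits, bringing the number of relevant items down to $1/(2\epsilon^2)$. In general, the interval $[2^k/\epsilon, 2^{k+1}/\epsilon)$ has $1/(2^k\epsilon^2)$ relevant items, so the total number of expensive items becomes $O(\epsilon^{-2})$, saving a $\log(1/\epsilon)$ factor.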
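The progressive rounding rule can be sketched in Python as follows. This is a minimal sketch, assuming the scaled profit $s = p_i/\delta$ is given as an exact fraction with $s \ge 1/\epsilon$; the function name `progressive_round` is hypothetical.

```python
import math
from fractions import Fraction


def progressive_round(scaled: Fraction, eps: Fraction) -> int:
    """Round a scaled profit s = p_i / delta with s >= 1/eps as in Lawler's scheme:
    if s lies in [2^k/eps, 2^(k+1)/eps), round down to a multiple of 2^k.
    The error s - result is below 2^k <= eps * s."""
    s = Fraction(scaled)
    if s * eps < 1:
        raise ValueError("only expensive profits (s >= 1/eps) are rounded this way")
    k = 0
    while s * eps >= 2 ** (k + 1):
        k += 1
    return math.floor(s / 2 ** k) * 2 ** k


eps = Fraction(1, 8)
distinct = {progressive_round(Fraction(s), eps) for s in range(8, 64)}
print(len(distinct))  # 24
```

For $\epsilon = 1/8$, the 56 integer profits in $[8, 64)$ collapse to 8 values in each of $[8, 16)$, $[16, 32)$, and $[32, 64)$, that is, $1/\epsilon$ values per doubling interval.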


2.3.3 Elimination of Sorting

In Ibarra and Kim's FPTAS, the sorting of the items by profit-weight density served two roles:

1. Computation of the half-optimal value, which allows us to compute the profit threshold and the scale factor.

2. Approximation of the knapsack sub-problems involving cheap items by the greedy algorithm.

We will now show that these two tasks can be performed without sorting.

To get the half-optimal value, we start by computing the density $p_i/w_i$ of every item in $O(n)$ time. Next, we find the median $d_1$ of the densities in $O(n)$ time and calculate

$$
\sum_{i \,:\, p_i/w_i > d_1} w_i,
$$

the total weight of all items with density above the median. If this sum is greater than the capacity $C$, then the critical item has density above $d_1$, and we find the median $d_2$ of the densities in the set $\{i : p_i/w_i > d_1\}$ in $O(n/2)$ time. Otherwise, all items with density above $d_1$ belong to the greedy solution, so we reduce the remaining capacity by their total weight and find the median $d_2$ of the densities in the set $\{i : p_i/w_i \le d_1\}$ in $O(n/2)$ time. We continue to evaluate medians in this binary-search manner until we find the item that causes the greedy selection to exceed the capacity. Since the medians are evaluated over sets of size $n, n/2, n/4, \dots$, the whole procedure takes $O(n)$ time. Therefore, the half-optimal value can be computed in $O(n)$ time.
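The search for the critical item can be sketched in Python as follows. This sketch makes one substitution that should be stated plainly: it uses a uniformly random pivot density instead of the exact median, so the linear running time holds in expectation rather than in the worst case (a deterministic linear-time median selection would restore the worst-case bound). The names `density` and `critical_split` are hypothetical, and positive weights are assumed.

```python
import random
from fractions import Fraction
from typing import List, Optional, Tuple

Item = Tuple[int, int]  # (profit p_i, weight w_i) with w_i > 0


def density(item: Item) -> Fraction:
    return Fraction(item[0], item[1])


def critical_split(items: List[Item], C: int, rng: Optional[random.Random] = None) -> Tuple[int, int]:
    """Return (profit of the greedy prefix, profit of the critical item) without sorting.

    A uniformly random pivot stands in for the exact median, so the linear running time
    holds in expectation. The critical profit is 0 when every item fits.
    Then z_L = (prefix + critical) / 2.
    """
    rng = rng or random.Random(0)
    remaining, room, taken = list(items), C, 0
    while remaining:
        d = density(rng.choice(remaining))
        high = [it for it in remaining if density(it) > d]
        equal = [it for it in remaining if density(it) == d]
        low = [it for it in remaining if density(it) < d]
        high_weight = sum(w for _, w in high)
        if high_weight > room:  # the critical item is denser than d
            remaining = high
            continue
        room -= high_weight  # all items denser than d are in the greedy prefix
        taken += sum(p for p, _ in high)
        for p, w in equal:  # ties may be taken in any order
            if w > room:
                return taken, p
            room -= w
            taken += p
        remaining = low
    return taken, 0


print(critical_split([(100, 7), (120, 6), (140, 8)], 15))  # (260, 100)
```

Each round either discards the items below the pivot or fixes everything above it into the greedy prefix, mirroring the binary search over medians described above.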

We could use the same median-finding trick to approximate the sub-problems involving cheap items. However, unlike finding the half-optimal value, which is a one-time task, the sub-problems have to be solved for each pair that is left after the final iteration of the modified dynamic program. Luckily, these sub-problems are all defined on the same set of cheap items and differ only in the target capacity, so they can share the medians found in other sub-problems.

The number of pairs $m$ is $O(\epsilon^{-2})$, as shown in the previous section. In the worst case, each pair takes a completely different path through the binary search, forcing us to compute every possible median up to depth $\log m$. The medians at any fixed depth are computed on disjoint sets of total size at most $n$, so each level costs $O(n)$ time; below depth $\log m$, each pair searches its own set of size $O(n/m)$, which costs $O(n)$ in total. Therefore, the overall time for solving the sub-problems for all pairs is bounded by $O(n \log m) = O(n \log(1/\epsilon))$.

2.4 Kellerer and Pferschy

Lastly, we look at the latest FPTAS, by Kellerer and Pferschy [3], which runs in $O(n \min(\log n, \log(1/\epsilon)) + \epsilon^{-3}\log(1/\epsilon))$ time. Their paper is largely concerned with retrieving the solution after evaluating the approximate optimal objective value, and with doing so in small space. However, we will focus on how the approximate optimal objective value is computed.


2.4.1 Progressive Rounding

Kellerer and Pferschy also use a form of the progressive rounding found in Lawler's algorithm, so that the total number of expensive items to consider is at most $O(1/\epsilon^2)$. However, their setup is a little more involved, as their rounding method has more bearing on the run-time of their modified dynamic program.

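As before, the rounded profits are in the interval $[1/\epsilon, 1/\epsilon^2)$. Let us assume that $1/\epsilon$ is an integer (otherwise, replace $\epsilon$ by $1/\lceil 1/\epsilon \rceil$). Break this interval into $1/\epsilon - 1$ equally sized intervals:

$$
I_j := [j/\epsilon, (j+1)/\epsilon), \qquad j = 1, 2, \dots, 1/\epsilon - 1.
$$

Then each $I_j$ is broken into $\lceil 1/(j\epsilon) \rceil$ roughly equally sized intervals:

$$
I_{jk} := \left[\tfrac{j}{\epsilon}\big(1 + (k-1)\epsilon\big),\ \tfrac{j}{\epsilon}\big(1 + k\epsilon\big)\right) \cap I_j, \qquad k = 1, 2, \dots, \lceil 1/(j\epsilon) \rceil.
$$

Every expensive profit lies in exactly one $I_{jk}$. We perform additional rounding, rounding down all the profits in the interval $I_{jk}$ to the smallest integer profit in the interval. This rounding incurs a relative error of at most $\epsilon$ for each profit, because $I_{jk}$ has width at most $j$ while every profit in it is at least $j/\epsilon$.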

Example 2.4.1. Suppose that $\varepsilon = 1/5$ and $z_L = 1000$. Then the range of expensive profits, $[\frac{1}{5} \cdot 1000, 1000)$, is partitioned into the intervals

$$I_1 = [200, 400),\quad I_2 = [400, 600),\quad I_3 = [600, 800),\quad I_4 = [800, 1000),$$

and

$$\begin{aligned}
&I_{11} = [200, 200(1 + \tfrac15)),\ I_{12} = [200(1 + \tfrac15), 200(1 + \tfrac25)),\ I_{13} = [200(1 + \tfrac25), 200(1 + \tfrac35)),\\
&I_{14} = [200(1 + \tfrac35), 200(1 + \tfrac45)),\ I_{15} = [200(1 + \tfrac45), 400),\\
&I_{21} = [400, 400(1 + \tfrac15)),\ I_{22} = [400(1 + \tfrac15), 400(1 + \tfrac25)),\ I_{23} = [400(1 + \tfrac25), 600),\\
&I_{31} = [600, 600(1 + \tfrac15)),\ I_{32} = [600(1 + \tfrac15), 800),\\
&I_{41} = [800, 800(1 + \tfrac15)),\ I_{42} = [800(1 + \tfrac15), 1000).
\end{aligned}$$

These partition $I_1, I_2, I_3, I_4$ into 5, 3, 2 and 2 intervals, respectively.
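The partition in Example 2.4.1 can be reproduced with a short Python sketch. The sketch makes three assumptions. It works directly on the unscaled range $[\varepsilon z_L, z_L)$ used in the example. It assumes $1/\varepsilon$ is an integer. It uses exact `Fraction` arithmetic. The names `progressive_intervals` and `round_down` are illustrative and do not come from Kellerer and Pferschy.

```python
import math
from fractions import Fraction

def progressive_intervals(eps, z_l):
    """Split [eps*z_l, z_l) into I_j, and each I_j into sub-intervals I_jk.

    Assumes 1/eps is an integer. Returns {j: [(lo, hi), ...]}.
    """
    inv = int(1 / eps)                       # 1/eps, assumed integral
    width = eps * z_l                        # every I_j has this width
    parts = {}
    for j in range(1, inv):                  # j = 1, ..., 1/eps - 1
        lo, hi = j * width, (j + 1) * width  # I_j = [lo, hi)
        step = lo * eps                      # I_jk has width eps * (left end of I_j)
        count = math.ceil(Fraction(inv, j))  # ceil(1 / (eps * j)) sub-intervals
        parts[j] = [(lo + (k - 1) * step, min(lo + k * step, hi))
                    for k in range(1, count + 1)]
    return parts

def round_down(p, parts):
    """Round an expensive profit p down to the smallest integer in its I_jk."""
    for subs in parts.values():
        for lo, hi in subs:
            if lo <= p < hi:
                return math.ceil(lo)
    raise ValueError("p is not an expensive profit")

parts = progressive_intervals(Fraction(1, 5), 1000)
print([len(parts[j]) for j in sorted(parts)])   # [5, 3, 2, 2]
```

For instance, `round_down(250, parts)` returns 240, a relative error of 4%, which is below $\varepsilon = 20\%$.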

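Consider $I_1$. For each profit value in $I_1$, we only need to consider the $2/\varepsilon$ least-weight items. The profits in $I_1$ round down to $\lceil 1/\varepsilon \rceil$ distinct values, so there are roughly $2/\varepsilon^2$ relevant items with profit in $I_1$. Similarly, the number is $\frac{2}{2^2} \cdot \frac{1}{\varepsilon^2}$ for $I_2$, $\frac{2}{3^2} \cdot \frac{1}{\varepsilon^2}$ for $I_3$, and so on. Therefore, the total number of relevant expensive items is roughly

$$\sum_{j=1}^{1/\varepsilon - 1} \frac{2}{j^2} \cdot \frac{1}{\varepsilon^2} \le \frac{\pi^2}{3} \cdot \frac{1}{\varepsilon^2} = O(1/\varepsilon^2).$$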
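The helpers below make the counts in this section concrete. They are a hypothetical sketch, not taken from the original paper, and take $1/\varepsilon$ as an integer `inv_eps`. The first evaluates the number of distinct rounded profits, $\sum_j \lceil 1/(\varepsilon j) \rceil$, which is the quantity the next subsection uses. The second evaluates the rough item count $\sum_j \frac{2}{j^2} \cdot \frac{1}{\varepsilon^2}$.

```python
import math

def distinct_profits(inv_eps):
    """Number of intervals I_jk, i.e. distinct rounded expensive profits."""
    return sum(-(-inv_eps // j) for j in range(1, inv_eps))   # ceil(inv_eps / j)

def relevant_items(inv_eps):
    """Rough number of relevant expensive items: sum_j (2 / j^2) * (1/eps)^2."""
    return sum(2 * inv_eps ** 2 / j ** 2 for j in range(1, inv_eps))

for inv_eps in (5, 100, 10_000):
    print(inv_eps,
          distinct_profits(inv_eps) / (inv_eps * math.log(inv_eps)),  # stays bounded
          relevant_items(inv_eps) / inv_eps ** 2)                     # below pi^2 / 3
```

Both printed ratios stay bounded as $1/\varepsilon$ grows. This matches the $O(\varepsilon^{-1}\log(1/\varepsilon))$ count of distinct profits and the $O(1/\varepsilon^2)$ count of relevant items.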


2.4.2 Improving the Dynamic Program

Recall how the dynamic program works. We iterate over the items, and in the iteration for each item we update the solution for every possible profit. This results in a run-time of $O(n \cdot U)$. Here $n$ is the number of items to iterate over, which was $O(1/\varepsilon^2)$ in the previous algorithm. $U$ is the size of the range of profits to consider, which was also $O(1/\varepsilon^2)$ in the previous algorithm.

Their improvement in run-time comes from processing all items with the same profit at once. For a set of items $S$, let $G(p)$ be the minimum total weight subject to the constraint that the total profit is at least $p$. We now describe how to update $G(p)$ to the set of items $S \mathbin{\dot\cup} T$, where all items in $T$ have the same profit.

Without loss of generality, let $T = \{1, 2, \dots, m\}$, $p_1 = p_2 = \dots = p_m = q$, and $w_1 \le w_2 \le \dots \le w_m$.

For p from 0 to U: alreadyUpdated(p) = false
For p from 0 to U − q:
    If alreadyUpdated(p) = false and G(p + q) > G(p) + w_1:
        G_old(p + q) = G(p + q)
        G(p + q) = G(p) + w_1
        alreadyUpdated(p + q) = true
        numNewItems = 1
        l = 1
        While p + (l + 1)q ≤ U:
            W_nextItem = G(p + lq) + w_{numNewItems+1}    (taken as ∞ if numNewItems = m)
            W_startOver = G_old(p + lq) + w_1
            W_old = G(p + (l + 1)q)
            l = l + 1
            G_old(p + lq) = G(p + lq)
            Compare W_nextItem, W_startOver, W_old
            If W_nextItem is the smallest:
                G(p + lq) = W_nextItem
                alreadyUpdated(p + lq) = true
                numNewItems = numNewItems + 1
            Else if W_startOver is the smallest:
                G(p + lq) = W_startOver
                alreadyUpdated(p + lq) = true
                numNewItems = 1        (the new solution uses only item 1 of T)
            Else (W_old is the smallest):
                Break


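The variable alreadyUpdated ensures that no entry $G(p)$ is updated twice. Each pass through the While loop either updates a new entry or terminates the loop. Thus this update runs in $O(U)$ time, with no dependence on the number of items $m$. The run-time of the dynamic program therefore improves from $O((\text{number of items}) \cdot U)$ to $O((\text{number of distinct profits}) \cdot U)$. The rounding of the previous section produces $O(\varepsilon^{-1}\log(1/\varepsilon))$ distinct expensive profits. So we can achieve a run-time of $O(n \min(\log n, \log(1/\varepsilon)) + \varepsilon^{-3}\log(1/\varepsilon))$.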
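The Python sketch below shows what the update above computes, not how Kellerer and Pferschy compute it. All items of $T$ have profit $q$, so an optimal solution that uses $k$ of them uses the $k$ lightest. The new table is therefore

$$G'(p) = \min_{0 \le k \le m} \{ G(\max(p - kq, 0)) + w_1 + \dots + w_k \}.$$

Evaluating this formula directly takes $O(U \cdot m)$ time; the chained update is intended to produce the same table in $O(U)$ time. The function name `merge_equal_profit` is hypothetical.

```python
import math

def merge_equal_profit(G, q, weights):
    """Update G (min weight for profit >= p, p = 0..U) by m items of profit q.

    Direct O(U * m) evaluation of
        G'(p) = min_k G(max(p - k*q, 0)) + (w_1 + ... + w_k),
    where w_1 <= ... <= w_m are the sorted weights.
    """
    prefix = [0]
    for w in sorted(weights):
        prefix.append(prefix[-1] + w)        # prefix[k] = total weight of k lightest
    return [min(G[max(p - k * q, 0)] + prefix[k] for k in range(len(prefix)))
            for p in range(len(G))]

# S = {one item with profit 2, weight 3}; T = two items of profit 2, weights 4 and 1.
G_S = [0, 3, 3, math.inf, math.inf, math.inf, math.inf]
print(merge_equal_profit(G_S, 2, [4, 1]))   # [0, 1, 1, 4, 4, 8, 8]
```

A table like this is a convenient reference for testing any faster implementation of the chained update.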

Another noteworthy work is by Magazine and Oguz [7] [6], who achieve a run-time of $O(nC \log n)$, where $C$ is the capacity of the knapsack.


Chapter 3

Framework for Knapsack FPTAS

We now establish a framework within which FPTASs for knapsack problems can be developed. We focus on the 0-1 Knapsack Problem to illustrate our approach.

3.1 $\varepsilon$-Inner Approximations

Recall that the 0-1 Knapsack Problem has the following form:

$$\begin{aligned}
f(c) = \max\ & \sum_{i \in N} p_i x_i \\
\text{s.t.}\ & \sum_{i \in N} w_i x_i \le c \\
& x_i \in \{0, 1\} \quad \text{for all } i \in N.
\end{aligned}$$

To get an approximate solution quickly, we want to manipulate the $p_i$'s, as we saw in the previous chapter. The $w_i$'s, however, cannot be changed, since that would affect feasibility.

It would be much easier if we could instead round the constraint and keep the objective fixed. As we saw in the previous chapter, dynamic programming approaches work by keeping track of solutions corresponding to all possible profits. Thus, we aim to work with the following problem instead:

$$\begin{aligned}
g(b) = \min\ & \sum_{i \in N} w_i x_i \\
\text{s.t.}\ & \sum_{i \in N} p_i x_i \ge b \\
& x_i \in \{0, 1\} \quad \text{for all } i \in N.
\end{aligned}$$


In this reformulation, the objective value (a total weight) is kept exact, while the profit constraint is subject to rounding. We now make a simple setup that lets us do precisely that.
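For intuition, both problems can be evaluated by brute force on a tiny instance. The Python sketch below takes exponential time and is for illustration only; the instance and the function names are made up. It computes $f(c)$ and $g(b)$ and checks that $g(b) = \min\{c : f(c) \ge b\}$, which is the relationship formalized next.

```python
from itertools import product

def knapsack_f(p, w, c):
    """Max total profit of a 0-1 selection whose total weight is at most c."""
    return max(sum(pi * x for pi, x in zip(p, xs))
               for xs in product((0, 1), repeat=len(p))
               if sum(wi * x for wi, x in zip(w, xs)) <= c)

def knapsack_g(p, w, b):
    """Min total weight of a 0-1 selection whose total profit is at least b."""
    return min((sum(wi * x for wi, x in zip(w, xs))
                for xs in product((0, 1), repeat=len(p))
                if sum(pi * x for pi, x in zip(p, xs)) >= b), default=float("inf"))

p, w = [3, 2, 4], [4, 1, 3]                    # a made-up 3-item instance
print([knapsack_f(p, w, c) for c in range(9)])   # [0, 2, 2, 4, 6, 6, 6, 7, 9]
print([knapsack_g(p, w, b) for b in range(10)])  # [0, 1, 1, 3, 3, 4, 4, 7, 8, 8]
# g(b) is the smallest capacity c whose optimal profit f(c) reaches b:
print(all(knapsack_g(p, w, b) == min(c for c in range(9) if knapsack_f(p, w, c) >= b)
          for b in range(10)))                   # True
```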

3.1.1 Upper and Lower Inverse

If the functions $f(c)$ and $g(b)$ above were strictly monotone, they would be inverses of each other. Both are monotone, but typically not strictly so. We therefore define a notion of "inverse" for monotone functions.

Let $f$ be a non-decreasing function with domain $D$ and range $R$. We define the upper inverse of $f$ to be the function $g$ with domain $R$ and range $D$ given by

$$g(b) = \min\{c \in D : f(c) \ge b\} \quad \text{for every } b \in R.$$

We define the lower inverse of $f$ to be the function $g$ with domain $R$ and range $D$ given by

$$g(b) = \max\{c \in D : f(c) \le b\} \quad \text{for every } b \in R.$$

Example 3.1.1. Below are plots of an example function $f(c)$ and its upper and lower inverses.

[Figure: three panels, "f(c)", "The upper inverse of f(c)" and "The lower inverse of f(c)", each drawn on a grid with axes labelled c and b running from 0 to 9.]

If $f$ is strictly increasing, then its upper and lower inverses are both simply the inverse of $f$.

The following is an elementary property of the lower and upper inverses.

Lemma 3.1.2. Suppose that $f(c)$ is a monotonically non-decreasing function, and let $g$ be the upper inverse of $f$. Then $f$ is the lower inverse of $g$.

Proof. Suppose that $f(c) = b_0$ for $c \in [l, u]$ and $f(l-1) < b_0 < f(u+1)$. Then $g(b_0) = l$. If $b > b_0$, then $g(b) > u$; if $b < b_0$, then $g(b) < l$. Let $h$ be the lower inverse of $g$.

It follows that $h(l) = \max\{b : g(b) \le l\} = b_0$. Moreover, $h(u) = \max\{b : g(b) \le u\} = b_0$. Because $h$ is monotone, $h(c) = f(c)$ for all $c \in [l, u]$, completing the proof.

The converse also holds by the same proof: if $g$ is the lower inverse of $f$, then $f$ is the upper inverse of $g$.
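Here is a brute-force Python sketch of both definitions on a finite domain, followed by a check of Lemma 3.1.2 on an arbitrary non-decreasing step function. The function names are ours, and `None` signals that the set in the definition is empty.

```python
def upper_inverse(f, domain, b):
    """min{c in domain : f(c) >= b}, or None if no such c exists."""
    cands = [c for c in domain if f(c) >= b]
    return min(cands) if cands else None

def lower_inverse(f, domain, b):
    """max{c in domain : f(c) <= b}, or None if no such c exists."""
    cands = [c for c in domain if f(c) <= b]
    return max(cands) if cands else None

# A non-decreasing step function on D = {0, ..., 9} (values chosen for illustration).
f_vals = [0, 0, 2, 2, 2, 5, 5, 7, 9, 9]
D = range(len(f_vals))
R = sorted(set(f_vals))                        # range of f
g = {b: upper_inverse(f_vals.__getitem__, D, b) for b in R}
h = [lower_inverse(g.__getitem__, R, c) for c in D]
print(g)            # {0: 0, 2: 2, 5: 5, 7: 7, 9: 8}
print(h == f_vals)  # True: f is the lower inverse of its upper inverse
```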

3.1.2 $\varepsilon$-Inner Approximations

We now have the upper inverse $g(b)$ of $f(c)$. To compute $f(c)$, we can compute $g(b)$ instead and then take its lower inverse. However, our goal is only to approximate $f(c)$. So we must determine what kind of approximation of $g$ yields an approximation of $f$ after taking the lower inverse. This is what $\varepsilon$-inner approximations provide.

Let $f$ be any non-decreasing integer-valued function. We say that $\tilde f$ is an $\varepsilon$-approximation of $f$ if $(1-\varepsilon) f(c) \le \tilde f(c) \le f(c)$ for all $c$.

Let $g$ be any non-decreasing integer-valued function. We say that $\tilde g$ is an $\varepsilon$-inner-approximation of $g$ if $\tilde g(\lceil (1-\varepsilon) b \rceil) \le g(b) \le \tilde g(b)$ for all $b$.

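Lemma 3.1.3. Suppose that $f$ is a monotonically non-decreasing function, and that $g$ is the upper inverse of $f$. Suppose that $\tilde g$ is an $\varepsilon$-inner-approximation of $g$, and that $\tilde f$ is the lower inverse of $\tilde g$. Then $\tilde f$ is an $\varepsilon$-approximation of $f$.

Proof. Suppose that $f(c) = b_0$ for $c \in [l, u]$ and $f(l-1) < b_0 < f(u+1)$. Then $g(b_0) = l$. If $b > b_0$, then $g(b) > u$; if $b < b_0$, then $g(b) < l$. By assumption,

$$\tilde g(\lceil (1-\varepsilon) b_0 \rceil) \le g(b_0) = l.$$

Then for $c \in [l, u]$, $\tilde f(c) = \max\{b : \tilde g(b) \le c\} \ge \lceil (1-\varepsilon) b_0 \rceil \ge (1-\varepsilon) f(c)$. Conversely, since $\tilde g \ge g$, every $b$ with $\tilde g(b) \le c$ also satisfies $g(b) \le c$. Hence $\tilde f(c) \le \max\{b : g(b) \le c\} = f(c)$ by Lemma 3.1.2. This completes the proof.

Because of Lemma 3.1.3, we can work with an $\varepsilon$-inner-approximation of the upper inverse in order to find an $\varepsilon$-approximation of the original function.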

3.2 Floating Point Arithmetic

As we saw in the survey of previous results, one major technique in FPTASs for knapsack problems is to round the profits down to a set of values that increase geometrically. When this is done to a set of numbers in the interval $[0, B]$, the number of possible values goes down from $O(B)$ to $O(\log B)$. The downside of the technique is that it is hard to work with, and the analysis becomes very messy. Kellerer and Pferschy chose their scaling so that the values are locally linear and globally geometric. However, their method still introduces complicated terms for the resulting rounded profits.

We propose that it is simpler to use floating point numbers. Instead of rounding down profits to

$$\left\{\left\lfloor \left(1 + \tfrac{1}{8}\right)^m \right\rfloor : m \ge 0\right\} = \{1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 13, 15, 16, 19, 21, 24, 27, \dots\},$$

we will round down the profits to

$$\{u \cdot 2^v : u < 8\} = \{1\} \cup \{2, 3\} \cup \{4, 5, 6, 7\} \cup \{8, 10, 12, 14\} \cup \{16, 20, 24, 28\} \cup \cdots.$$

We say that an integer $a$ is a floating point integer with at most $K$ bits if $a = u \times 2^v$ for some integers $u$ and $v$ with $0 \le u < 2^K$. The integer $u$ is called the mantissa, and $v$ is called the exponent. We also write $a \in \mathrm{Float}(K)$.

Integers in $\mathrm{Float}(K)$ may have multiple representations. For example, $48 \in \mathrm{Float}(4)$ has three distinct representations: $48 = 3 \times 2^4 = 6 \times 2^3 = 12 \times 2^2$. We say that $a = u \times 2^v$ is written in canonical form if $2^{K-1} \le u \le 2^K - 1$, i.e., if it has the maximum possible mantissa. Every positive integer in $\mathrm{Float}(K)$ has a unique canonical form.

Suppose that $a$ is a nonnegative real number. Then $\lfloor a \rfloor_K$ is defined as $\max\{a' : a' \le a \text{ and } a' \in \mathrm{Float}(K)\}$; that is, $\lfloor a \rfloor_K$ is the largest $K$-bit floating point integer that is at most $a$. Similarly, $\lceil a \rceil_K = \min\{a' : a' \ge a \text{ and } a' \in \mathrm{Float}(K)\}$.

We say that $g \in \mathrm{Step}(K)$ if $g(b) = g(\lceil b \rceil_K)$ for all $b$ in the domain of $g$. We call such a $g$ a $K$-step function.
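The operators $\lfloor \cdot \rfloor_K$ and $\lceil \cdot \rceil_K$ amount to keeping the $K$ leading bits of a number, rounding down or up. Below is a minimal Python sketch for nonnegative arguments, together with the canonical form. The helper names are ours, not from the text.

```python
import math

def floor_k(a, K):
    """Largest element of Float(K) = {u * 2**v : 0 <= u < 2**K} that is <= a."""
    a = math.floor(a)                      # elements of Float(K) are integers
    if a < 2 ** K:
        return a                           # every integer below 2^K is in Float(K)
    v = a.bit_length() - K                 # drop all but the K leading bits
    return (a >> v) << v

def ceil_k(a, K):
    """Smallest element of Float(K) that is >= a."""
    a = math.ceil(a)
    if a < 2 ** K:
        return a
    v = a.bit_length() - K
    return -((-a) >> v) << v               # ceil(a / 2**v) * 2**v

def canonical(a, K):
    """Canonical form (u, v) of a positive a in Float(K): 2**(K-1) <= u < 2**K."""
    if a <= 0 or floor_k(a, K) != a:
        raise ValueError("a must be a positive element of Float(K)")
    v = a.bit_length() - K                 # negative when a < 2**(K-1)
    u = a >> v if v >= 0 else a << -v
    return u, v

print(floor_k(50, 3), ceil_k(50, 3), canonical(48, 4))   # 48 56 (12, 2)
print([a for a in range(1, 29) if floor_k(a, 3) == a])
```

The second line lists the elements of $\mathrm{Float}(3)$ up to 28, which is the set $\{1\} \cup \{2, 3\} \cup \{4, \dots, 7\} \cup \{8, 10, 12, 14\} \cup \{16, 20, 24, 28\}$ given earlier.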

Suppose that $g$ is a non-decreasing function. Let $\lceil g \rceil_K$ be the function $g'$ defined by $g'(b) = g(\lceil b \rceil_K)$ for all $b$. Then $g'$ is the minimum function such that $g' \in \mathrm{Step}(K)$ and $g' \ge g$. Clearly, $g'$ is a step function whose steps take place only at values $b \in \mathrm{Float}(K)$.

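Lemma 3.2.1. Suppose that $g$ is a non-decreasing function, $K \in \mathbb{Z}$, and $\varepsilon \ge 2^{-K+1}$. Then the function $\lceil g \rceil_K$ is an $\varepsilon$-inner-approximation of $g$.

Proof. Clearly, $g(b) \le g(\lceil b \rceil_K) = \lceil g \rceil_K(b)$. Since $\mathrm{Float}(K)$ consists of integers, $\lceil g \rceil_K(\lceil (1-\varepsilon) b \rceil) = g(\lceil (1-\varepsilon) b \rceil_K)$. So it remains to show that $g(\lceil (1-\varepsilon) b \rceil_K) \le g(b)$. Suppose that $\lceil (1-\varepsilon) b \rceil_K = u \times 2^v$ for some $2^{K-1} \le u \le 2^K - 1$. Since $(u-1) \times 2^v$ is a smaller element of $\mathrm{Float}(K)$,

$$(1-\varepsilon) b > (u-1) \times 2^v = \left(1 - \frac{1}{u}\right) \cdot u \times 2^v \ge \left(1 - \frac{1}{2^{K-1}}\right) \lceil (1-\varepsilon) b \rceil_K.$$

Because $1 - 2^{-K+1} \ge 1 - \varepsilon$, dividing by $1 - \varepsilon$ gives $b > \lceil (1-\varepsilon) b \rceil_K$. Since $g$ is non-decreasing, we are done.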
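Lemma 3.2.1 is easy to test numerically. The Python sketch below builds $\lceil g \rceil_K$ for an arbitrary non-decreasing test function $g$, with illustrative helper names and integer arguments. It then checks both inequalities of the $\varepsilon$-inner-approximation definition with $\varepsilon = 2^{-K+1}$. Exact `Fraction` arithmetic keeps $\lceil (1-\varepsilon) b \rceil$ free of rounding error.

```python
import math
from fractions import Fraction

def ceil_k(a, K):
    """Smallest element of Float(K) that is >= a (a nonnegative)."""
    a = math.ceil(a)                       # Float(K) contains only integers
    if a < 2 ** K:
        return a                           # every integer below 2^K is in Float(K)
    v = a.bit_length() - K                 # exponent of the canonical form
    return -((-a) >> v) << v               # ceil(a / 2^v) * 2^v

def is_inner_approx(g_tilde, g, eps, bs):
    """Check g~(ceil((1 - eps) b)) <= g(b) <= g~(b) for every b in bs."""
    return all(g_tilde(math.ceil((1 - eps) * b)) <= g(b) <= g_tilde(b) for b in bs)

def g(b):
    return b * b + 3 * b                   # any non-decreasing function on b >= 0

for K in range(2, 8):
    eps = Fraction(1, 2 ** (K - 1))        # eps = 2^(-K+1), the smallest allowed
    print(K, is_inner_approx(lambda b, K=K: g(ceil_k(b, K)), g, eps, range(5000)))
```

With $K = 3$ and $\varepsilon = 1/8 < 2^{-2}$, the same check fails. For example, at $b = 37$ we get $\lceil 259/8 \rceil_3 = 40 > 37$. So the hypothesis $\varepsilon \ge 2^{-K+1}$ cannot simply be dropped.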

3.3 Exact Merging

We now return to our knapsack problem. Let $f_S(c)$ be the optimal value function of the 0-1 Knapsack Problem restricted to the variables whose indices are in the subset $S$. That is, for $c \in [0, C]$,

$$\begin{aligned}
f_S(c) = \max\ & \sum_{i \in S} p_i x_i \\
\text{s.t.}\ & \sum_{i \in S} w_i x_i \le c \\
& x_i \in \{0, 1\} \quad \text{for all } i \in S.
\end{aligned}$$

We let $g_S(\cdot)$ be the upper inverse of $f_S(\cdot)$. That is, for some upper bound $B$ on the objective value ($B$ for budget), and for $b \in [0, B]$,

$$\begin{aligned}
g_S(b) = \min\ & \sum_{i \in S} w_i x_i \\
\text{s.t.}\ & \sum_{i \in S} p_i x_i \ge b \\
& x_i \in \{0, 1\} \quad \text{for all } i \in S.
\end{aligned}$$

Recall that $f_S(\cdot)$ can be approximated by taking the lower inverse of an $\varepsilon$-inner approximation of its upper inverse $g_S(\cdot)$. Before we get to computing an $\varepsilon$-inner approximation of $g_S(\cdot)$, we look at how to compute $g_S(\cdot)$ exactly.

3.3.1 Merging Relation

The function $g_{S\cup T}(\cdot)$ can be obtained from $g_S(\cdot)$ and $g_T(\cdot)$ when $S$ and $T$ are disjoint sets. The following lemma shows how.

Lemma 3.3.1. Suppose that $S$ and $T$ are disjoint subsets of $N$. Then $g_{S\cup T}(\cdot)$ is obtained from $g_S(\cdot)$ and $g_T(\cdot)$ as follows. For all $b \in [0, B]$,

$$g_{S \cup T}(b) = \min\{g_S(b') + g_T(b - b') : b' \in [0, b]\}. \tag{3.3.1}$$

We say that $g_S(\cdot)$ satisfies the merging relation if (3.3.1) holds, and we write $g_{S\cup T} = \mathrm{Merge}(g_S, g_T)$.

The merging relation holds in other variants of the knapsack problem as well. An upper inverse $g_S(b)$ tends to satisfy the merging relation when $f_S(c)$ is a knapsack problem on the set of items $S$ with knapsack capacity $c$. The reason is that $g_S(b)$ is then the problem of minimizing the total weight while guaranteeing a profit of at least $b$. The minimization problem $g_{S\cup T}(b)$ can therefore be solved in two steps. First, find the best way to split the profit requirement $b$ between $S$ and $T$. Then add up the minimum objective values $g_S(b')$ and $g_T(b - b')$.
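Lemma 3.3.1 can be checked on a small instance. The Python sketch below stores each $g_S$ as a list over $b = 0, \dots, B$, with `math.inf` marking infeasible profit requirements, and implements Merge directly from (3.3.1). The function names are ours.

```python
import math

def merge(g_s, g_t):
    """Merge(g_S, g_T)(b) = min over 0 <= b' <= b of g_S(b') + g_T(b - b')."""
    return [min(g_s[bp] + g_t[b - bp] for bp in range(b + 1))
            for b in range(len(g_s))]

def single_item(p, w, B):
    """g_{i} for a 0-1 item with profit p and weight w, on b = 0, ..., B."""
    return [0 if b == 0 else (w if b <= p else math.inf) for b in range(B + 1)]

# Items (profit, weight) = (3, 4), (2, 1), (4, 3); budget B = 9.
g = merge(merge(single_item(3, 4, 9), single_item(2, 1, 9)), single_item(4, 3, 9))
print(g)   # [0, 1, 1, 3, 3, 4, 4, 7, 8, 8]
```

The result agrees with enumerating all eight subsets. For example, the cheapest way to reach a profit of at least 5 uses the second and third items, at total weight 4.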


3.3.2 MergeAll Procedure

If $g_S(\cdot)$ satisfies the merging relation, then we can merge $g_S(\cdot)$ for any number of disjoint sets. Suppose $S_1, S_2, \dots, S_m$ are disjoint and the functions $g_{S_i}(\cdot)$ are known. For simplicity of presentation we assume that $m$ is a power of 2, and we let $S_i^1 := S_i$ for notational convenience. Our algorithm consists of $\log m$ iterations. At the $k$th iteration, we compute $g_{S_i^{k+1}}(\cdot)$ for

$$S_i^{k+1} := S_{2i-1}^k \cup S_{2i}^k$$

by merging $g_{S_{2i-1}^k}$ and $g_{S_{2i}^k}$. The end result is $g_S(\cdot)$, where $S = \bigcup_i S_i$.

[Figure: merge tree for $m = 8$. The first iteration merges $g_{S_1^1}, \dots, g_{S_8^1}$ in pairs into $g_{S_1^2}, \dots, g_{S_4^2}$. The second iteration produces $g_{S_1^3}$ and $g_{S_2^3}$, and the third produces $g_{S_1^4}$.]

Procedure MergeAll($\{g_1, \dots, g_m\}$)

INPUT:
  A set of $m$ non-decreasing functions $\{g_1, \dots, g_m\}$.

OUTPUT:
  A non-decreasing function $g$ that is the merging of $g_1, \dots, g_m$.

PROCEDURE:
  $g_i^1 = g_i$ for all $i \in [1, m]$
  For $r$ from 1 to $\log m$
    For $j$ from 1 to $m/2^r$
      $g_j^{r+1} = \mathrm{Merge}(g_{2j-1}^r, g_{2j}^r)$
  Return $g_1^{(\log m)+1}$

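3.4 Approximate Merging

MergeAll gives us a means of computing $g_S(\cdot)$ exactly. However, it is too slow: each call to Merge evaluates (3.3.1) for every $b \in [0, B]$, which takes $O(B^2)$ time. We now see how to speed up the procedure by computing an $\varepsilon$-inner approximation of $g_S(\cdot)$ instead.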

3.4.1 ApproxMerge Procedure

With Lemma 3.3.1 in mind, we define an operation called ApproxMerge.

Procedure ApproxMerge($\tilde g_1, \tilde g_2, K$)

INPUT:
  Non-decreasing functions $\tilde g_1$ and $\tilde g_2$, and an integer $K$. Let $[L, U]$ denote the domain of $\tilde g_1$ and $\tilde g_2$.

OUTPUT:
  A non-decreasing function $\tilde g \in \mathrm{Step}(K)$ with domain $[L, U]$ that approximates the merging of $\tilde g_1$ and $\tilde g_2$.

PROCEDURE:
  For all $b \in \mathrm{Float}(K) \cap [L, U]$, do
    Let $b = u \times 2^v$ with $u \in [2^{K-1}, 2^K - 1]$ (i.e., the canonical form of $b$)
    $\tilde g(b) = \min\{\tilde g_1(u_1 \times 2^v) + \tilde g_2(u_2 \times 2^v) : u_1 + u_2 = u,\ u_1, u_2 \in \mathbb{N}_0\}$,
  where $\tilde g_1(0)$ and $\tilde g_2(0)$ are defined as 0.

The major time saving comes from the fact that the output is a step function, so it need not be computed over all of $[L, U]$. This reduces the number of $b$'s to consider from $O(U - L)$ to $O(2^K \log(U/L))$. A second saving is that computing $\tilde g(b)$ for each $b$ takes $O(2^K)$ time rather than $O(2^K \log(U/L))$, because the split of $b$ uses only the exponent $v$ of $b$. So we save a factor of $\log(U/L)$ over a method in which $b'$ may vary over all elements of $\mathrm{Float}(K)$. The overall run-time of ApproxMerge is $O(4^K \log(U/L))$.
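Below is a direct Python sketch of ApproxMerge. It represents $\tilde g_1$ and $\tilde g_2$ as callables and returns the values of $\tilde g$ at the points of $\mathrm{Float}(K) \cap [L, U]$ as a dictionary. The function names and the `single_item` helper are illustrative. To keep exponents nonnegative, the sketch assumes $L \ge 2^{K-1}$; that is an assumption of the sketch only. The nested loops make the $O(4^K \log(U/L))$ bound visible: per exponent, there are at most $2^{K-1}$ mantissas and at most $2^K$ splits each.

```python
import math

def approx_merge(g1, g2, K, L, U):
    """Sketch of ApproxMerge: returns {b: g~(b)} for all b in Float(K) within [L, U].

    Assumes L >= 2**(K-1) (so every canonical exponent v is >= 0) and that
    g1, g2 are non-decreasing callables on nonnegative integers.
    """
    lo_u, hi_u = 2 ** (K - 1), 2 ** K - 1        # canonical mantissa range
    out = {}
    v = max(L.bit_length() - K, 0)               # smaller exponents lie below L
    while lo_u << v <= U:
        for u in range(lo_u, hi_u + 1):
            b = u << v                           # canonical form b = u * 2^v
            if not L <= b <= U:
                continue
            best = math.inf
            for u1 in range(u + 1):              # split the mantissa: u1 + u2 = u
                left = g1(u1 << v) if u1 else 0              # g1(0) := 0
                right = g2((u - u1) << v) if u1 < u else 0   # g2(0) := 0
                best = min(best, left + right)
            out[b] = best
        v += 1
    return out

def single_item(p, w):
    """g for one 0-1 item: weight w covers any profit requirement b <= p."""
    return lambda b: 0 if b <= 0 else (w if b <= p else math.inf)

g = approx_merge(single_item(40, 7), single_item(24, 3), K=3, L=8, U=64)
print(g[56], g[64])    # 10 inf
```

The exact value $g_{S\cup T}(64) = 10$ is recovered only at the slightly smaller point 56. This is the kind of loss an inner approximation permits.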

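Theorem 3.4.1. Let $S$ and $T$ be disjoint sets, and let $g$ be a function satisfying the merging relation. Suppose that $\tilde g_S$ and $\tilde g_T$ are $\varepsilon$-inner approximations of $g_S$ and $g_T$ for some $\varepsilon > 0$. Let $\tilde g_{S\cup T}(\cdot)$ be the function obtained by ApproxMerge($\tilde g_S, \tilde g_T, K$). Then $\tilde g_{S\cup T}(\cdot)$ is a $(\varepsilon + 2^{-K+4})$-inner approximation of $g_{S\cup T}(\cdot)$.

Proof. We have the merging relation $g_{S\cup T}(b) = \min\{g_S(b') + g_T(b - b') : b' \in [0, b]\}$. Since $\tilde g_S(\cdot) \ge g_S(\cdot)$ and $\tilde g_T(\cdot) \ge g_T(\cdot)$, it follows that $\tilde g_{S\cup T}(\cdot) \ge g_{S\cup T}(\cdot)$. It remains to show that $g_{S\cup T}(b) \ge \tilde g_{S\cup T}(a)$ for some $a \ge (1 - \varepsilon - 2^{-K+4}) b$.

Suppose now that $b \in \mathrm{Float}(K)$, and that $b'$ is chosen so that $g_{S\cup T}(b) = g_S(b') + g_T(b - b')$. Without loss of generality, assume that $b' \ge b/2$. Clearly,

$$\begin{aligned}
g_{S\cup T}(b) &= g_S(b') + g_T(b - b') \\
&\ge \tilde g_S(\lceil (1-\varepsilon) b' \rceil_K) + \tilde g_T(\lceil (1-\varepsilon)(b - b') \rceil_K).
\end{aligned}$$

Let $u_S \times 2^{v_S}$ and $u_T \times 2^{v_T}$ be the canonical forms of $\lceil (1-\varepsilon) b' \rceil_K$ and $\lceil (1-\varepsilon)(b - b') \rceil_K$, respectively. Since $b' \ge b - b'$, we have $v_S \ge v_T$. Then

$$u_S \times 2^{v_S} = \lceil (1-\varepsilon) b' \rceil_K \le \lceil b \rceil_K \le (1 + 2^{-K+1}) b \le 2b,$$

and $u_S \ge 2^{K-1}$, thus $2^{v_S} \le b \cdot 2^{-K+2}$.

Recall that we want $a$ such that $g_{S\cup T}(b) \ge \tilde g_{S\cup T}(a)$ and $a \ge (1 - \varepsilon - 2^{-K+4}) b$. The first inequality is satisfied if $a = (\alpha_S + \alpha_T) \times 2^v$ for some $v$ with three properties: $\alpha_S + \alpha_T \in [2^{K-1}, 2^K - 1]$, $\alpha_S \times 2^v \le u_S \times 2^{v_S}$, and $\alpha_T \times 2^v \le u_T \times 2^{v_T}$.

If $u_S + \lfloor u_T \cdot 2^{v_T - v_S} \rfloor \le 2^K - 1$, then we simply let $\alpha_S = u_S$ and $\alpha_T = \lfloor u_T \cdot 2^{v_T - v_S} \rfloor$:

$$\begin{aligned}
g_{S\cup T}(b) &\ge \tilde g_S(u_S \times 2^{v_S}) + \tilde g_T(u_T \times 2^{v_T}) \\
&\ge \tilde g_S(u_S \times 2^{v_S}) + \tilde g_T(\lfloor u_T \cdot 2^{v_T - v_S} \rfloor \times 2^{v_S}) \\
&\ge \tilde g_{S\cup T}\big((u_S + \lfloor u_T \cdot 2^{v_T - v_S} \rfloor) \times 2^{v_S}\big),
\end{aligned}$$

and

$$\begin{aligned}
(u_S + \lfloor u_T \cdot 2^{v_T - v_S} \rfloor) \times 2^{v_S} &\ge (u_S + u_T \cdot 2^{v_T - v_S}) \times 2^{v_S} - 2^{v_S} \\
&= u_S \times 2^{v_S} + u_T \times 2^{v_T} - 2^{v_S} \\
&= \lceil (1-\varepsilon) b' \rceil_K + \lceil (1-\varepsilon)(b - b') \rceil_K - 2^{v_S} \\
&\ge (1-\varepsilon) b' + (1-\varepsilon)(b - b') - 2^{v_S} \\
&= (1-\varepsilon) b - 2^{v_S} \\
&\ge (1 - \varepsilon - 2^{-K+2}) b.
\end{aligned}$$

If $u_S + \lfloor u_T \cdot 2^{v_T - v_S} \rfloor > 2^K - 1$, then

$$\begin{aligned}
g_{S\cup T}(b) &\ge \tilde g_S(u_S \times 2^{v_S}) + \tilde g_T(u_T \times 2^{v_T}) \\
&\ge \tilde g_S(\lfloor u_S/2 \rfloor \times 2^{v_S+1}) + \tilde g_T(\lfloor u_T \cdot 2^{v_T - v_S - 1} \rfloor \times 2^{v_S+1}) \\
&\ge \tilde g_{S\cup T}\big((\lfloor u_S/2 \rfloor + \lfloor u_T \cdot 2^{v_T - v_S - 1} \rfloor) \times 2^{v_S+1}\big),
\end{aligned}$$

and

$$\begin{aligned}
(\lfloor u_S/2 \rfloor + \lfloor u_T \cdot 2^{v_T - v_S - 1} \rfloor) \times 2^{v_S+1} &\ge (u_S/2 + u_T \cdot 2^{v_T - v_S - 1}) \times 2^{v_S+1} - 2 \cdot 2^{v_S+1} \\
&= u_S \times 2^{v_S} + u_T \times 2^{v_T} - 2^{v_S+2} \\
&= \lceil (1-\varepsilon) b' \rceil_K + \lceil (1-\varepsilon)(b - b') \rceil_K - 2^{v_S+2} \\
&\ge (1-\varepsilon) b' + (1-\varepsilon)(b - b') - 2^{v_S+2} \\
&= (1-\varepsilon) b - 2^{v_S+2} \\
&\ge (1 - \varepsilon - 2^{-K+4}) b.
\end{aligned}$$

In both cases, we conclude that $g_{S\cup T}(b) \ge \tilde g_{S\cup T}(\lceil (1 - \varepsilon - 2^{-K+4}) b \rceil_K)$.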

3.4.2 ApproxMergeAll Procedure

Finally, we describe how to merge $m$ $\varepsilon$-inner approximations of the functions $g_{S_i}$ to obtain an $O(\varepsilon)$-inner approximation of $g_{\cup_i S_i}$. This is done just like MergeAll.

Procedure ApproxMergeAll($\{\tilde g_1, \dots, \tilde g_m\}, K$)

INPUT:
  A set of $m$ non-decreasing functions $\{\tilde g_1, \dots, \tilde g_m\}$ and an integer $K$.

OUTPUT:
  A monotonically non-decreasing function $\tilde g$ that approximates the merging of $\tilde g_1, \dots, \tilde g_m$.

PROCEDURE:
  $\tilde g_i^1 = \tilde g_i$ for all $i \in [1, m]$
  For $r$ from 1 to $\log m$
    For $j$ from 1 to $m/2^r$
      $\tilde g_j^{r+1} = \mathrm{ApproxMerge}(\tilde g_{2j-1}^r, \tilde g_{2j}^r, K + 6 + \lceil r/3 \rceil)$
  Return $\tilde g_1^{(\log m)+1}$

The noteworthy part of this procedure is that the mantissa accuracy at the $r$th iteration, $K + 6 + \lceil r/3 \rceil$, increases slowly. By Theorem 3.4.1, iteration $r$ then adds an error of $2^{-K-2-\lceil r/3 \rceil}$.

Consider the alternatives. If we fixed the mantissa to a constant (say $K$), the errors from the iterations would sum to $O(2^{-K} \log m)$, which is no good because $m$ could be as large as $n$. We could instead add one mantissa bit at every iteration (say $K + r$). The errors would then be bounded by a geometric sequence and sum to a constant. However, the running time of ApproxMerge is $O(4^K)$ in terms of $K$, so the running time of the entire procedure would be too high.

It turns out that adding one mantissa bit every three iterations both 1) keeps the total error bounded by a constant and 2) keeps the running time low. The next two theorems prove these two facts.
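The trade-off among the three mantissa schedules can be checked numerically with the Python sketch below. Here $k_r$ denotes the mantissa used at iteration $r$. The sketch charges each of the $m/2^r$ merges at iteration $r$ a work of $4^{k_r}$, following the run-time of ApproxMerge with the common $\log(U/L)$ factor dropped. It charges each merge an added error of $2^{-k_r+4}$, following Theorem 3.4.1. The function name and the sample values $K = 10$, $m = 2^{20}$ are illustrative.

```python
from fractions import Fraction

def schedule_cost(m, mantissa):
    """Total work and total added error of ApproxMergeAll for a mantissa schedule.

    m is a power of 2; mantissa(r) is the number of bits used at iteration r.
    Each of the m / 2^r merges at iteration r costs 4^mantissa(r) (up to the
    common log(U/L) factor) and adds 2^(4 - mantissa(r)) to the error.
    """
    rounds = m.bit_length() - 1                           # log2(m)
    work = sum((m >> r) * 4 ** mantissa(r) for r in range(1, rounds + 1))
    error = sum(Fraction(2) ** (4 - mantissa(r)) for r in range(1, rounds + 1))
    return work, error

K, m = 10, 2 ** 20
schedules = {
    "constant K": lambda r: K,
    "K + r": lambda r: K + r,
    "K + ceil(r/3)": lambda r: K + -(-r // 3),
}
for name, sched in schedules.items():
    work, error = schedule_cost(m, sched)
    print(f"{name:14s} work / (m 4^K) = {work / (m * 4 ** K):12.1f}   "
          f"error * 2^K = {float(error * 2 ** K):6.1f}")
```

The constant schedule's error grows with $\log m$, and the $K + r$ schedule's work grows roughly like $m^2 4^K$. The slowly increasing schedule keeps both within constant factors of $m 4^K$ and $2^{-K}$.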

Theorem 3.4.2. Let $S_1, \dots, S_m$ be disjoint sets, and let $\{g_{S_i} : i \in [1, m]\}$ be a set of functions that satisfies the merging relation. Suppose that each $\tilde g_{S_i}$ is an $\varepsilon$-inner approximation of $g_{S_i}$ for some $\varepsilon > 0$. Let $\tilde g_{\cup_i S_i}(\cdot)$ be the function obtained by ApproxMergeAll($\{\tilde g_{S_i} : i \in [1, m]\}, K$). Then $\tilde g_{\cup_i S_i}(\cdot)$ is an $(\varepsilon + 2^{-K})$-inner approximation of $g_{\cup_i S_i}(\cdot)$.

Proof. By Theorem 3.4.1, the error introduced at each of the iterations $r = 3t-2, 3t-1, 3t$ is $2^{-K-2-t}$. So after three rounds of iteration, the current functions are $(\varepsilon + 3 \cdot 2^{-K-3})$-inner approximations. After three more rounds they are $(\varepsilon + 3 \cdot 2^{-K-3} + 3 \cdot 2^{-K-4})$-inner approximations, and so on. After $\log m$ rounds of iteration, we obtain $\tilde g_{\cup_i S_i}(b)$, an $\varepsilon'$-inner approximation of $g_{\cup_i S_i}(b)$ with

$$\varepsilon' \le \varepsilon + 3 \cdot 2^{-K-3} + 3 \cdot 2^{-K-4} + \cdots \le \varepsilon + 3 \cdot 2^{-K-2} < \varepsilon + 2^{-K}.$$

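Theorem 3.4.3. Let $S_1, \dots, S_m$ be disjoint sets and let $\{g_{S_i} : i \in [1, m]\}$ be a set of functions with domain $[L, U]$. Then ApproxMergeAll($\{g_{S_i} : i \in [1, m]\}, K$) runs in $O(m \cdot 4^K \log(U/L))$ time.

Proof. Recall that ApproxMerge($g_1, g_2, K'$) runs in $O(4^{K'} \log(U/L))$ time. The mantissa used at the $r$th iteration is $K + O(1) + \lceil r/3 \rceil$, and the $r$th iteration performs $m/2^r$ merges. Hence the $r$th iteration runs in $O(m/2^r \cdot 4^{K + \lceil r/3 \rceil} \log(U/L))$ time. Grouping the iterations $r = 3t-2, 3t-1, 3t$, the total run-time of ApproxMergeAll is

$$\begin{aligned}
O\Big(&\big(\tfrac{m}{2} + \tfrac{m}{4} + \tfrac{m}{8}\big) 4^{K+1} \log(U/L) + \big(\tfrac{m}{16} + \tfrac{m}{32} + \tfrac{m}{64}\big) 4^{K+2} \log(U/L) + \cdots\Big) \\
&= O\Big(m 4^K \log(U/L) \big(\tfrac{7}{8} \cdot 4 + \tfrac{7}{64} \cdot 4^2 + \tfrac{7}{512} \cdot 4^3 + \cdots\big)\Big) \\
&= O\big(m 4^K \log(U/L)\big),
\end{aligned}$$

since the series in parentheses equals $7(\tfrac12 + \tfrac14 + \tfrac18 + \cdots) = 7$.

If accessing a value of $\tilde g_i(b)$ takes $O(\alpha)$ time, then the above time increases to $O(m 4^K \alpha \log(U/L))$.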

3.5 Outline of Knapsack Algorithms

Before presenting specific algorithms for various knapsack problems, we first present the general approach we take for our FPTASs. While the details differ, the overall idea of every FPTAS presented in this thesis is very similar.

3.5.1 Outline of an Exact Algorithm

The rough outline of the algorithm given below yields an exact optimal solution to knapsack problems. We present it as preparation for the approximate algorithm.

Let $f_S(c)$ be the optimal objective value function of a knapsack problem of our choice. Here $S$ is the set of items we can pick from, and $c$ is a parameter denoting the capacity of the knapsack. Our goal is to find $f_N(C)$.

Instead of computing $f_N(c)$ directly, we compute its upper inverse $g_N(b)$. The function $g_N(\cdot)$ can be obtained by merging $g_{\{i\}}(\cdot)$ for all $i \in N$. Since $g_{\{i\}}(\cdot)$ is trivial to compute for any knapsack problem, we are done: $f_N(C)$ is the lower inverse of $g_N$ evaluated at $C$, i.e., $\max\{b : g_N(b) \le C\}$.

This sketch only returns the optimal objective value. The corresponding optimal solution can be retrieved if the budget split at every single merge is tracked. That is, for every Merge $g_{S\cup T}(b) = \min\{g_S(b') + g_T(b - b') : b' \in [0, b]\}$, we store the minimizing $b'$. When the algorithm terminates, one can back-track from $g_N(\cdot)$ to the $g_{\{i\}}(\cdot)$'s to find which items were used to attain the optimal objective value. We leave the problem of optimizing the space complexity open.
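The exact algorithm, including the back-tracking step, fits in a short Python sketch for the 0-1 case. The sketch works as follows:

- It stores each $g_S$ as a list indexed by $b \in [0, B]$, with $B = \sum_i p_i$.
- It merges pairwise as in MergeAll. When the count is odd, it carries the unpaired function up unchanged, which relaxes the power-of-2 assumption.
- It records the minimizing $b'$ at each merge, then walks the splits back down.

The function name `solve_exact` and the tuple layout of the tree nodes are illustrative choices. The running time is pseudo-polynomial, which is what the approximate version avoids.

```python
import math

def solve_exact(items, C):
    """Exact 0-1 knapsack by merging upper inverses, with back-tracking.

    items: list of (profit, weight) pairs with positive integer profits.
    Returns (optimal value, sorted list of chosen item indices).
    """
    B = sum(p for p, _ in items)                  # budget: upper bound on profit
    # A node is (g, split, children); a leaf stores its item index as children.
    nodes = [([0 if b == 0 else (w if b <= p else math.inf) for b in range(B + 1)],
              None, i) for i, (p, w) in enumerate(items)]
    while len(nodes) > 1:                         # one MergeAll iteration per pass
        merged = []
        for k in range(0, len(nodes) - 1, 2):
            gs, gt = nodes[k][0], nodes[k + 1][0]
            g, split = [], []
            for b in range(B + 1):
                bp = min(range(b + 1), key=lambda x: gs[x] + gt[b - x])
                g.append(gs[bp] + gt[b - bp])
                split.append(bp)                  # remember the best budget split
            merged.append((g, split, (nodes[k], nodes[k + 1])))
        if len(nodes) % 2:
            merged.append(nodes[-1])              # unpaired node moves up unchanged
        nodes = merged
    root = nodes[0]
    best = max(b for b in range(B + 1) if root[0][b] <= C)   # lower inverse at C
    chosen, stack = [], [(root, best)]
    while stack:                                  # back-track through the splits
        (_, split, children), b = stack.pop()
        if split is None:                         # leaf: item is used iff b > 0
            if b > 0:
                chosen.append(children)
        else:
            stack.append((children[0], split[b]))
            stack.append((children[1], b - split[b]))
    return best, sorted(chosen)

print(solve_exact([(3, 4), (2, 1), (4, 3)], C=5))   # (6, [1, 2])
```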


3.5.2 Outline of an FPTAS

The above exact algorithm can be turned into an approximate algorithm. Instead of merging the $g_{\{i\}}(\cdot)$'s exactly using MergeAll, we use ApproxMergeAll. The resulting function $\tilde g_N(\cdot)$ is then an $O(\varepsilon)$-inner approximation of $g_N(\cdot)$. By Lemma 3.1.3, taking the lower inverse of $\tilde g_N(\cdot)$ yields an $O(\varepsilon)$-approximation of $f_N(\cdot)$.

If this idea is implemented naively, the run-time of ApproxMergeAll involves an $O(\log B)$ term, which an FPTAS cannot afford. We therefore need to reduce the number of exponents used in ApproxMerge from $O(\log B)$ to something bounded by a polynomial in $n$ and $1/\varepsilon$. In other words, we do not want to use $\mathrm{Float}(K) \cap [0, B]$ as the domain of $g_S(b)$. Instead, we restrict the domain to $\mathrm{Float}(K) \cap [L, U]$ for some $L$ and $U$ such that $\log(U/L)$ is bounded by a polynomial in $n$ and $1/\varepsilon$.

We achieve this by dealing separately with items of small profit before we perform ApproxMergeAll. Pick a profit threshold $L$. We say an item is cheap if $p_i < L$, and expensive otherwise. Let $S_{\text{cheap}}$ be the set of cheap items and $S_{\text{expensive}}$ the set of expensive items. Then $g_{S_{\text{cheap}}}$ is approximated using a greedy algorithm, and $g_{S_{\text{expensive}}}$ is inner-approximated using ApproxMergeAll as discussed. Finally, we merge the two approximations to get an $O(\varepsilon)$-inner approximation of $g_N$. The domain $[L, U]$ can be determined from a rough bound on the optimal objective value.

Below is the general outline of our FPTAS for knapsack problems, summarizing all of the above:

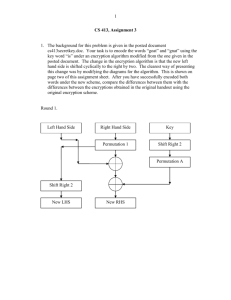

Step 1: Initial sorting

Get a rough bound on the optimal objective value $z^*$. A decent bound can often be found by sorting the items by density and then using a greedy algorithm. Let $z_L \le z^* \le q(n) z_L$ for a polynomial $q(n)$.

Step 2: Dealing with cheap items

An item is cheap if $p_i < \varepsilon z_L / r(n)$ for some polynomial $r(n)$. Let $S_{\text{cheap}}$ be the set of cheap items and $S_{\text{expensive}}$ the set of expensive items. Compute $\tilde g_{S_{\text{cheap}}}(\cdot)$, an approximation of $g_{S_{\text{cheap}}}(\cdot)$, by a greedy algorithm. This approximation only has to be good enough that its additive error is at most $O(\varepsilon z_L)$.

Step 3: Solving for expensive items

This step is the crux of our algorithm. For each expensive profit $p$, compute $g_{\{i : p_i = p\}}(\cdot)$. Use ApproxMergeAll to get $\tilde g_{S_{\text{expensive}}}(\cdot)$, an $O(\varepsilon)$-inner approximation of $g_{S_{\text{expensive}}}(\cdot)$. ApproxMergeAll is fast enough here because the domain of $\tilde g_S(b)$ is $[\varepsilon z_L / r(n), q(n) z_L]$ instead of $[0, B]$.

Step 4: Final Merge

Finish by merging $\tilde g_{S_{\text{cheap}}}(\cdot)$ and $\tilde g_{S_{\text{expensive}}}(\cdot)$. The optimal objective value $z^*$ is approximated by $\max\{b + b' : \tilde g_{S_{\text{cheap}}}(b) + \tilde g_{S_{\text{expensive}}}(b') \le C\}$.


Note that for the Nonlinear Knapsack Problem and the Multiple-Choice Knapsack Problem, we skip steps 2 and 4 by simply ignoring cheap profits.

This sketch only returns the approximate optimal objective value. Just as in the exact algorithm, the corresponding approximate optimal solution can be retrieved if the budget split at every single merge is tracked.

Lastly, the initial functions $g_{\{i : p_i = p\}}(b)$ need not be computed for every $b \in \mathrm{Float}(K) \cap [L, U]$. This is unlike the subsequent functions $\tilde g_S$, for which every value $\tilde g_S(b)$ with $b \in \mathrm{Float}(K) \cap [L, U]$ is stored in memory. Any data structure can represent $g_{\{i : p_i = p\}}(b)$ without affecting the correctness of the algorithm. However, its construction time and query time affect the overall run-time. For instance, $g_{\{i : p_i = p\}}(b)$ may turn out to be a linear function in some knapsack problem. Then we do not need to evaluate the function at every $b \in \mathrm{Float}(K) \cap [L, U]$: storing a slope and a point suffices, and any value of the function can be queried in constant time. This may save time in some cases.


Chapter 4

Knapsack Approximation Algorithms

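4.1 Nonlinear Knapsack Problem

4.1.1 The Upper Inverse

The Nonlinear Knapsack Problem is as follows:

$$\begin{aligned}
\max\ & \sum_{i \in N} p_i(x_i) \\
\text{s.t.}\ & \sum_{i \in N} x_i \le C \\
& x_i \in \mathbb{N}_0 \quad \text{for all } i \in N.
\end{aligned}$$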

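We let

$$\begin{aligned}
f_S(c) = \max\ & \sum_{i \in S} p_i(x_i) \\
\text{s.t.}\ & \sum_{i \in S} x_i \le c \\
& x_i \in \mathbb{N}_0 \quad \text{for all } i \in S,
\end{aligned}$$

and let $g_S(\cdot)$ be the upper inverse of $f_S(\cdot)$:

$$\begin{aligned}
g_S(b) = \min\ & \sum_{i \in S} x_i \\
\text{s.t.}\ & \sum_{i \in S} p_i(x_i) \ge b \\
& x_i \in \mathbb{N}_0 \quad \text{for all } i \in S.
\end{aligned}$$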

This $g_S(\cdot)$ satisfies the merging relation, so Theorems 3.4.1 and 3.4.2 apply to it.

4.1.2 Profit Threshold

To get started, we let $z_L$ be a lower bound on the optimal objective value, defined as $z_L = \max\{p_i(C) : i \in N\}$. The following lemma is a consequence of this definition.

Lemma 4.1.1. Let $z^*$ denote the optimal objective value of the nonlinear knapsack problem. Then

$$z_L \le z^* \le \sum_{i=1}^{n} p_i(C) \le n z_L.$$

To limit the domain of the functions $g_S$, we replace $p_i(\cdot)$ by $p_i'(\cdot)$, where for each $i \in N$ and each $c \in [0, C]$,

$$p_i'(c) = \begin{cases} 0 & \text{if } p_i(c) < \varepsilon z_L / n, \\ p_i(c) & \text{otherwise.} \end{cases}$$

Let $z'$ be the optimal objective value of the knapsack problem with each $p_i$ replaced by $p_i'$. Then for all $i$ and $c$,

$$p_i(c) - \varepsilon z_L / n \le p_i'(c) \le p_i(c).$$

Summing over the $n$ items and using $z_L \le z^*$ gives $(1-\varepsilon) z^* \le z' \le z^*$.

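4.1.3 Algorithm

Nonlinear Knapsack Approximation Algorithm
  $z_L = \max\{p_i(C) : i \in N\}$
  For each $i \in N$ and $c \in [0, C]$
    if $p_i(c) < \varepsilon z_L / n$ then $p_i'(c) = 0$, else $p_i'(c) = p_i(c)$
  Choose the integer $K$ with $2^{K-2} < 1/\varepsilon \le 2^{K-1}$, so that $2^{-K+1} \le \varepsilon$
  For each $i \in N$ and for each $b \in \mathrm{Float}(K) \cap [\varepsilon z_L / n, n z_L]$
    $\tilde g_{\{i\}}(b) = \min\{c : p_i'(c) \ge b\}$
  $\tilde g_N = \mathrm{ApproxMergeAll}(\{\tilde g_{\{i\}} : i \in N\}, K)$
  Return $\max\{b : \tilde g_N(b) \le C\}$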

4.1.4 Correctness

As mentioned above, replacing $p$ by $p'$ introduces an absolute error of at most $\varepsilon z_L / n$ per item. It therefore introduces a relative error of at most $\varepsilon$ in the final solution. It remains to show that the initialization and the ApproxMerge procedures introduce an additional relative error of at most $O(\varepsilon)$.

Clearly, the $K$-step function $\tilde g_{\{i\}}(b)$ is an $\varepsilon$-inner approximation of $g_{\{i\}}(b)$. By Theorem 3.4.2, $\tilde g_N(b)$ is an $(\varepsilon + 2^{-K})$-inner approximation of $g_N(b)$, and $\varepsilon + 2^{-K} < 2\varepsilon$.

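4.1.5 Running Time

After replacing $p_i(\cdot)$ by $p_i'(\cdot)$, the smallest possible nonzero objective value is at least $\varepsilon z_L / n$, while the optimal objective value is at most $n z_L$. Thus, we can restrict attention in $\mathrm{Float}(K)$ to

$$O\left(\log \frac{n z_L}{\varepsilon z_L / n}\right) = O(\log(n^2/\varepsilon)) = O(\log(n/\varepsilon))$$

different exponents.

The initial computation of $\tilde g_{\{i\}}(b)$ for all $i \in N$ and $b \in \mathrm{Float}(K) \cap [\varepsilon z_L / n, n z_L]$ takes $O(n \cdot 2^K \log(n/\varepsilon)) = O(n \varepsilon^{-1} \log(n/\varepsilon))$ time. By Theorem 3.4.3, ApproxMergeAll takes $O(n \cdot 4^K \log(n/\varepsilon)) = O(n \varepsilon^{-2} \log(n/\varepsilon))$ time. Thus, the Nonlinear Knapsack Approximation Algorithm runs in

$$O(n \varepsilon^{-2} \log(n/\varepsilon) + n \varepsilon^{-1} \log(n/\varepsilon))$$

time.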

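4.2 Multiple Choice Knapsack Problem

The Multiple-Choice Knapsack Problem is as follows:

$$\begin{aligned}
\max\ & \sum_{i \in S} p_i x_i \\
\text{s.t.}\ & \sum_{i \in S} w_i x_i \le C \\
& \sum_{i \in S_j} x_i = 1 \quad \text{for all } j \in [1, m] \\
& x_i \in \{0, 1\} \quad \text{for all } i \in S,
\end{aligned}$$

where $S$ is the disjoint union of $S_1, S_2, \dots, S_m$.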

The Multiple-Choice Knapsack Problem can be turned into an instance of the Nonlinear Knapsack Problem as follows. For each $j \in [1, m]$, define

$$p_j'(x_j') = \max\left\{\sum_{i \in S_j} p_i x_i : \sum_{i \in S_j} w_i x_i \le x_j',\ \sum_{i \in S_j} x_i = 1,\ x_i \in \{0, 1\}\right\}.$$

Then the problem is reformulated as

$$\begin{aligned}
\max\ & \sum_{j} p_j'(x_j') \\
\text{s.t.}\ & \sum_{j} x_j' \le C \\
& x_j' \in \mathbb{N}_0 \quad \text{for all } j \in [1, m].
\end{aligned}$$

It now remains to apply the result of the previous section. Note that $\tilde g_{\{j\}}(b)$ can be initialized quickly because of the specific structure of $p_j'(x_j')$. As a function of $b$, $g_{\{j\}}(b)$ is a step function whose breakpoints are at the profits $p_i$, $i \in S_j$. Therefore, it takes $O(|S_j| \log |S_j|)$ time to prepare a data structure for $\tilde g_{\{j\}}$, and evaluating the function takes $O(\log |S_j|)$ time.
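One possible data structure is sketched below in Python; the design is our own, and the class name `ClassProfit` is hypothetical. It sorts the items of $S_j$ by weight and keeps prefix maxima of the profits. After $O(|S_j| \log |S_j|)$ preprocessing, it answers both $p_j'(x)$ and $g_{\{j\}}(b) = \min\{x : p_j'(x) \ge b\}$ by binary search in $O(\log |S_j|)$ time. Exactly one item must be chosen from each class, so $p_j'(x) = -\infty$ when no item fits, and $g_{\{j\}}(0)$ is the weight of the lightest item rather than 0.

```python
import bisect
from itertools import accumulate

class ClassProfit:
    """Query structure for one class S_j of a Multiple-Choice Knapsack instance.

    profit(x)     = p'_j(x) = max{p_i : i in S_j, w_i <= x}
    min_weight(b) = g_{j}(b) = min{x : p'_j(x) >= b}
    """

    def __init__(self, items):                          # items: list of (profit, weight)
        items = sorted(items, key=lambda it: it[1])     # O(|S_j| log |S_j|)
        self.weights = [w for _, w in items]
        self.best = list(accumulate((p for p, _ in items), max))  # prefix maxima

    def profit(self, x):
        k = bisect.bisect_right(self.weights, x)    # number of items with w_i <= x
        return self.best[k - 1] if k else float("-inf")

    def min_weight(self, b):
        k = bisect.bisect_left(self.best, b)        # first prefix reaching profit b
        return self.weights[k] if k < len(self.weights) else float("inf")

cls = ClassProfit([(5, 4), (3, 2), (4, 6)])
print(cls.profit(3), cls.profit(5), cls.min_weight(4), cls.min_weight(6))   # 3 5 4 inf
```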

Thus, the run-time of the algorithm is

$$O\left(m \varepsilon^{-2} \log(m/\varepsilon) \max_j |S_j| + \sum_{j=1}^{m} |S_j| \log |S_j|\right) = O(m \varepsilon^{-2} n \log(m/\varepsilon) + n \log n).$$

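4.3 Unbounded Knapsack Problem

4.3.1 The Upper Inverse

We let

$$\begin{aligned}
f_{S,r}(c) = \max\ & \sum_{i \in S} p_i x_i \\
\text{s.t.}\ & \sum_{i \in S} w_i x_i \le c \\
& \sum_{i \in S} x_i \le r \\
& x_i \in \mathbb{N}_0 \quad \text{for all } i \in S
\end{aligned}
\qquad\qquad
\begin{aligned}
g_{S,r}(b) = \min\ & \sum_{i \in S} w_i x_i \\
\text{s.t.}\ & \sum_{i \in S} p_i x_i \ge b \\
& \sum_{i \in S} x_i \le r \\
& x_i \in \mathbb{N}_0 \quad \text{for all } i \in S
\end{aligned}$$

where $g_{S,r}(b)$ is the upper inverse of $f_{S,r}(c)$.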

This $g_{S,r}(\cdot)$ satisfies the merging relation in terms of $S$. It also satisfies a form of the merging relation in terms of $r$:

$$g_{S,2r}(b) = \min\{g_{S,r}(b') + g_{S,r}(b - b') : b' \in [0, b]\},$$

since a solution using at most $2r$ items can be split into two solutions using at most $r$ items each. Thus, Theorem 3.4.1 applies when merging two copies of $g_{S,r}(\cdot)$ into $g_{S,2r}(\cdot)$.

4.3.2 Profit Threshold

We start by finding a half-approximation of the optimal objective value. Suppose that item $j$ has the largest profit density $p_j / w_j$. We set $z_L$ to the objective value obtained by picking as many copies of item $j$ as possible: $z_L = p_j \lfloor C / w_j \rfloor$. Note that $p_j C / w_j$ is the optimal objective value when the integrality constraint is removed, and is thus an upper bound on the optimal objective value $z^*$. If $w_j \le C$ (items heavier than $C$ can be discarded), then $\lfloor C / w_j \rfloor \ge \frac12 \cdot C / w_j$. Therefore $z_L \le z^* \le 2 z_L$, and $z_L$ is a half-approximation of the optimal objective value.

To bound the domain of the functions $g_{S,r}$, we separate the items into two sets: the set of cheap items, $S_{\text{cheap}} := \{i \in N : p_i < \varepsilon z_L\}$, and the set of expensive items, $S_{\text{expensive}} := N \setminus S_{\text{cheap}}$. We compute an approximation of $g_{S_{\text{cheap}}}$ and an $O(\varepsilon)$-inner approximation of $g_{S_{\text{expensive}}}$. These can then be merged to get an $O(\varepsilon)$-inner approximation of $g_N$.

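4.3.3 Algorithm

Unbounded Knapsack Approximation Algorithm

Step 1: Initial sorting
  Find the item $j$ with maximum density: $j = \arg\max_i \{p_i / w_i\}$
  $z_L = p_j \lfloor C / w_j \rfloor$
  Let $S_{\text{cheap}} := \{i \in N : p_i < \varepsilon z_L\}$ and $S_{\text{expensive}} := N \setminus S_{\text{cheap}}$

Step 2: Cheap items
  Without loss of generality, let $S_{\text{cheap}} = [1, m]$.
  Find the cheap item $k$ with maximum density: $k = \arg\max_i \{p_i / w_i : i \in S_{\text{cheap}}\}$
  $\tilde g_{S_{\text{cheap}}}(b) = w_k \lceil b / p_k \rceil$

Step 3: Expensive items
  $r = 0$
  For each $b \in \mathrm{Float}(\lceil \log(1/\varepsilon) \rceil) \cap [\varepsilon z_L, 2 z_L]$
    $\tilde g_{S_{\text{expensive}},1}(b) = \min\{w_i : i \in S_{\text{expensive}},\ p_i \ge b\}$
  While $2^r < 2/\varepsilon$
    $r = r + 1$
    $\tilde g_{S_{\text{expensive}},2^r} = \mathrm{ApproxMerge}(\tilde g_{S_{\text{expensive}},2^{r-1}}, \tilde g_{S_{\text{expensive}},2^{r-1}}, K_r)$,
      where $K_r = \left\lceil -\log\frac{\varepsilon}{\log(1/\varepsilon)} - r \log\left(1 - \frac{1}{\log(1/\varepsilon)}\right) \right\rceil$
  $\tilde g_{S_{\text{expensive}}} = \tilde g_{S_{\text{expensive}},2^r}$

Step 4: Final merge
  Output $\max\{b_c + b_e : \tilde g_{S_{\text{cheap}}}(b_c) + \tilde g_{S_{\text{expensive}}}(b_e) \le C\}$.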

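4.3.4 Correctness

In step 2, the values of $\tilde g_{S_{\text{cheap}}}$ at the multiples of $p_k$ are exactly equal to those of $g_{S_{\text{cheap}}}$. The reason is that at $b = t p_k$, taking $t$ copies of item $k$ meets the fractional lower bound $b w_k / p_k$. Therefore, the step function $\tilde g_{S_{\text{cheap}}}$ can be off by at most $p_k < \varepsilon z_L$ in its argument. More precisely,

$$g_{S_{\text{cheap}}}(b) \le \tilde g_{S_{\text{cheap}}}(b) \le g_{S_{\text{cheap}}}(b + \varepsilon z_L).$$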

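In step 3, $\tilde g_{S_{\text{expensive}},1}(\cdot)$ is $\lceil g_{S_{\text{expensive}},1} \rceil_{\lceil \log(1/\varepsilon) \rceil}$, so it is an $\varepsilon$-inner approximation by Lemma 3.2.1. We claim that the resulting $\tilde g_{S_{\text{expensive}}}(\cdot)$ is a $2\varepsilon$-inner approximation of $g_{S_{\text{expensive}}}(\cdot)$. This follows since

$$\begin{aligned}
\varepsilon + \sum_{r=1}^{\lfloor \log(2/\varepsilon) \rfloor} 2^{-K_r}
&\le \varepsilon + 2^{\log\frac{\varepsilon}{\log(1/\varepsilon)}} \sum_{r=1}^{\lfloor \log(2/\varepsilon) \rfloor} \left(2^{\log\left(1 - \frac{1}{\log(1/\varepsilon)}\right)}\right)^r \\
&= \varepsilon + \frac{\varepsilon}{\log(1/\varepsilon)} \sum_{r=1}^{\lfloor \log(2/\varepsilon) \rfloor} \left(1 - \frac{1}{\log(1/\varepsilon)}\right)^r \\
&\le \varepsilon + \frac{\varepsilon}{\log(1/\varepsilon)} \cdot \frac{1}{1 - \left(1 - \frac{1}{\log(1/\varepsilon)}\right)} \\
&= 2\varepsilon.
\end{aligned}$$

Note that $p_i \ge \varepsilon z_L$ for expensive items and $b$ ranges from $\varepsilon z_L$ to $2 z_L$, so a relevant solution uses at most $2/\varepsilon$ expensive items. Therefore, we can stop merging once $2^r$, the bound on $\sum_{i \in S} x_i$, reaches $2/\varepsilon$.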

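Let $\tilde z$ be the output of the algorithm, and write $S_c = S_{\text{cheap}}$ and $S_e = S_{\text{expensive}}$. Then

$$\begin{aligned}
z^* &= \max\{b : g_N(b) \le C\} \\
&= \max\{u + v : g_{S_c}(u) + g_{S_e}(v) \le C\} \\
&\le \max\{u + v : \tilde g_{S_c}(u - \varepsilon z_L) + \tilde g_{S_e}(\lceil (1 - 2\varepsilon) v \rceil) \le C\} \\
&\le \max\left\{u' + \varepsilon z_L + \tfrac{1}{1 - 2\varepsilon} v' : \tilde g_{S_c}(u') + \tilde g_{S_e}(v') \le C\right\} \\
&\le \varepsilon z^* + \max\left\{u' + \tfrac{1}{1 - 2\varepsilon} v' : \tilde g_{S_c}(u') + \tilde g_{S_e}(v') \le C\right\} \\
&\le \varepsilon z^* + \tfrac{1}{1 - 2\varepsilon} \cdot \max\{u' + v' : \tilde g_{S_c}(u') + \tilde g_{S_e}(v') \le C\} \\
&= \varepsilon z^* + \tfrac{1}{1 - 2\varepsilon} \cdot \tilde z,
\end{aligned}$$

and hence $(1 - 3\varepsilon) z^* \le (1 - 2\varepsilon)(1 - \varepsilon) z^* \le \tilde z$. Therefore, the algorithm yields a $(1 - 3\varepsilon)$-approximate solution.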

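4.3.5 Running Time

Steps 1 and 2 take $O(n)$ time, since they scan through the items to find the maximum densities. Recall that ApproxMerge runs in $O(4^K \log(U/L))$ time. Here $\log(U/L) = \log(2 z_L / (\varepsilon z_L)) = O(\log(1/\varepsilon))$, and

$$4^{K_r} \le 4 \left(\frac{\log(1/\varepsilon)}{\varepsilon}\right)^2 \left(1 - \frac{1}{\log(1/\varepsilon)}\right)^{-2r}.$$

Hence the ApproxMerge calls in step 3 take

$$\begin{aligned}
O\left(\sum_{r=1}^{\lfloor \log(2/\varepsilon) \rfloor} 4^{K_r} \log\frac{1}{\varepsilon}\right)
&= O\left(\frac{1}{\varepsilon^2} \log^3\frac{1}{\varepsilon} \sum_{r=1}^{\lfloor \log(2/\varepsilon) \rfloor} \left(1 - \frac{1}{\log(1/\varepsilon)}\right)^{-2r}\right) \\
&= O\left(\frac{1}{\varepsilon^2} \log^3\frac{1}{\varepsilon} \cdot \log\frac{1}{\varepsilon}\right)
= O\left(\frac{1}{\varepsilon^2} \log^4\frac{1}{\varepsilon}\right)
\end{aligned}$$

time. The geometric sum is $O(\log(1/\varepsilon))$ for two reasons. Its ratio exceeds 1 by at least $2/\log(1/\varepsilon)$. Its largest term, $(1 - 1/\log(1/\varepsilon))^{-2\log(2/\varepsilon)}$, is $O(1)$. Therefore, the entire algorithm runs in $O(n + \varepsilon^{-2} \log^4(1/\varepsilon))$ time.

4.4

4.4.1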