Document 10859713

advertisement

Hindawi Publishing Corporation

International Journal of Combinatorics

Volume 2012, Article ID 908356, 18 pages

doi:10.1155/2012/908356

Research Article

A Convex Relaxation Bound for

Subgraph Isomorphism

Christian Schellewald

Independent Research, 7052 Trondheim, Tyholtveien 68, Norway

Correspondence should be addressed to Christian Schellewald, christian.schellewald@gmail.com

Received 31 August 2011; Accepted 27 December 2011

Academic Editor: Liying Kang

Copyright q 2012 Christian Schellewald. This is an open access article distributed under the

Creative Commons Attribution License, which permits unrestricted use, distribution, and

reproduction in any medium, provided the original work is properly cited.

In this work a convex relaxation of a subgraph isomorphism problem is proposed, which leads to a

new lower bound that can provide a proof that a subgraph isomorphism between two graphs can

not be found. The bound is based on a semidefinite programming relaxation of a combinatorial

optimisation formulation for subgraph isomorphism and is explained in detail. We consider

subgraph isomorphism problem instances of simple graphs which means that only the structural

information of the two graphs is exploited and other information that might be available e.g.,

node positions is ignored. The bound is based on the fact that a subgraph isomorphism always

leads to zero as lowest possible optimal objective value in the combinatorial problem formulation.

Therefore, for problem instances with a lower bound that is larger than zero this represents a

proof that a subgraph isomorphism can not exist. But note that conversely, a negative lower bound

does not imply that a subgraph isomorphism must be present and only indicates that a subgraph

isomorphism can not be excluded. In addition, the relation of our approach and the reformulation

of the largest common subgraph problem into a maximum clique problem is discussed.

1. Introduction

The subgraph isomorphism problem is a well-known combinatorial optimization problem

and often involves the problem of finding the appropriate matching too. It is also of particular

interest in computer vision where it can be exploited to recognise objects. For example, if an

object in an image is represented by a graph, the object could be identified as subgraph within

a possibly larger scene graph. Several approaches have been proposed to tackle the subgraph

isomorphism problem and we refer to a few 1–4 and their references therein.

Error-correcting graph matching 5—also known as error-tolerant graph matching—is

a general approach to calculate an assignment between the nodes of two graphs. It is based on

the minimisation of graph edit costs which result from some predefined edit operations when

one graph is turned exactly into the other. Commonly introduced graph edit operations are

deletion, insertion, and substitution of nodes and edges. Each graph-edit operation has a cost

2

International Journal of Combinatorics

assigned to it which is application dependent. The minimal graph edit cost defines the socalled edit distance between two graphs. The idea to define such a distance for graph matching

goes back to Sanfeliu and Fu 6 in 1983. Before that, the edit distance was mainly used for

string matching. Several approaches for error correcting graph matching have been proposed

that are based on different methods like tree search 3, genetic algorithms 7, and others see

e.g., 5 to name a few. In this paper we propose and apply a semidefinite programming

SDP relaxation to a quadratic optimization formulation for subgraph isomorphism. In

combinatorial optimization, semidefinite programming represents a valuable approach to

approximate NP-hard problems and to obtain bounds for these problems. The current

increase of interest in semidefinite programming is largely driven by the successful extension

of interior point algorithms for linear programming to semidefinite programming see e.g.,

8. A well-known example for semidefinite programming is the MAX-CUT approximation

by Goemans and Williamson 9. With the increase of the computational power of computers, SDP turned out to be useful for an increasing range of real world problems. For

example, it was applied to several problems in the field of computer vision including

segmentation/partitioning, grouping, restoration 10 matching 11, graph seriation 12,

and camera calibration 13.

2. Contribution and Aim of the Paper

The main contribution of this paper lies in the convex relaxation of a subgraph isomorphism

problem and the identification of a lower bound for this optimization problem. The computation of that bound is based on the SDP approximation of a combinatorial optimization

formulation for subgraph isomorphism. The combinatorial optimization formulation and

its convex relaxation is explained in detail. The approach is designed to find a subgraph

isomorphism which maps the entire node-set of the possibly smaller graph to a subset of

nodes in the second graph. We also discuss an interesting relation to an approach that is based

on a reformulation of the largest common subgraph problem into a largest clique problem

14, 15.

3. Organisation of the Paper

After providing the notation we use, we introduce a combinatorial quadratic optimization

formulation for the subgraph isomorphism problem that can be interpreted as an errorcorrecting graph matching approach.

The integer optimization problem we end up with is generally an indefinite quadratic

integer optimization problem which is known to be NP-hard 16 as Pardalos and Vavasis

showed that indefinite quadratic programs are NP-hard problems see 17. Then we explain

in detail a convex SDP relaxation of the combinatorial problem that leads to a lower bound for

the subgraph isomorphism problem. The bound can be computed with standard methods for

semidefinite programs see, e.g., 18–20. Finally, our experiments show that the bound can

be tight enough to prove that no subgraph isomorphism between two graphs can be found.

4. Preliminaries

In this paper, we consider simple graphs G V, E with nodes V {1, . . . , n} and edges

E ⊂ V × V . We denote the first possibly smaller graph with GK and the second graph with

International Journal of Combinatorics

3

GL . The corresponding sets VK and VL contain K |VK | and L |VL | nodes, respectively. We

assume that L ≥ K, which is in fact no constraint as we can always choose GL to represent the

larger graph. We make extensive use of the direct product C A ⊗ B, which is also known as

Kronecker product 21. It is the product of every matrix element Aij of A ∈ IRn×m with the

whole matrix B ∈ IRp×q resulting in the larger matrix C ∈ IRnp×mq .

A subgraph isomorphism is a mapping m : VK → V ⊂ VL of all nodes in the graph GK

to a subset V of VL with K nodes of the graph GL such that the structure is preserved. That

means that any two nodes i and j from GK that are adjacent must be mapped to nodes mi

and mj in GL that are adjacent too. If the nodes i and j in GK are not adjacent they must

be mapped to nonadjacent nodes in GL . The same has to be true for the inverse mapping

m−1 : V → VK which maps the nodes V of the subgraph to the nodes VK of GK .

5. Combinatorial Objective Function

In this section we propose and show the correctness of a combinatorial problem formulation

for finding a subgraph isomorphism. The general idea is to find a bipartite matching between

the set of nodes from the smaller graph to a subset of nodes of the larger graph. The matching

is evaluated by an objective function that can be interpreted as a comparison of the structure

between all possible node pairs in the first graph and the structure of the node pairs to which

the nodes are matched in the second graph. A matching that leads to no structural differences

has no costs and represents a subgraph isomorphism. Mathematically, the evaluation can be

where x ∈ IRKL resents a mapping and

formulated as a quadratic objective function x Qx,

KL×KL

∈ IR

contains the problem data of the subgraph isomorphism problem. The full task

Q

of finding a subgraph isomorphism can be stated as the following combinatorial quadratic

optimization problem, which details are explained below:

min

x

x Qx,

s.t. AK x eK ,

5.1

AL x ≤ e L ,

x ∈ {0, 1}

KL

.

The constraints make use of the matrices AK IK ⊗ eL ∈ IRK×KL and AL eK

⊗

L×KL

and ensure that the vector x is a binary 0,1-indicator vector which represents a

IL ∈ IR

bipartite matching between the two node sets of the graphs such that each node in graph GK

has a single partner in the graph GL . Here en ∈ IRn represents a vector with all elements 1. For

our purposes, the elements of the indicator vector x ∈ {0, 1}KL are arranged in the following

order:

x x11 , . . . , xL1 , x12 , . . . , xL2 , . . . , x1K , . . . , xLK .

5.2

Using double indices a nonzero vector element xji 1 indicates that the node i of the

first set of nodes VK is matched to the node j in the second set VL and otherwise xji 0. We

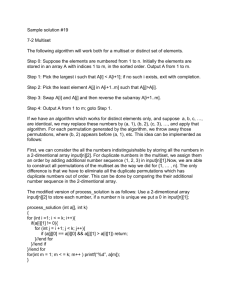

illustrate such an indicator vector in Figure 1 where a bipartite matching between two small

4

International Journal of Combinatorics

1

5

1

3

2

1

2

3

0

4

T

(0 1 0 0 0 1 0 0 0 0 0 0 0 1 0 )

Figure 1: An illustration of the 0,1-indicator vector with K 3 and L 5. The right side shows the

indicator vector representation of the particular bipartite matching which is shown on the left hand side

of this figure. For each of the K 3 mappings the vector contains exactly a single “1” within each of the K

consecutive partitions with L 5 elements.

sets of nodes and the corresponding indicator vector is shown. Note that for K 3 and L 5

the corresponding constraint matrices become

⎛

1

AK ⎝0

0

⎛

1

⎜0

⎜

⎜

AL ⎜0

⎜

⎝0

0

⎞

1 1 1 1 0 0 0 0 0 0 0 0 0 0

0 0 0 0 1 1 1 1 1 0 0 0 0 0⎠,

0 0 0 0 0 0 0 0 0 1 1 1 1 1

⎞

0 0 0 0 1 0 0 0 0 1 0 0 0 0

1 0 0 0 0 1 0 0 0 0 1 0 0 0⎟

⎟

⎟

0 1 0 0 0 0 1 0 0 0 0 1 0 0⎟.

⎟

0 0 1 0 0 0 0 1 0 0 0 0 1 0⎠

0 0 0 1 0 0 0 0 1 0 0 0 0 1

5.3

which contains the structural information of the graphs

The relational structure matrix Q

and appears within the objective function of the optimization problem 5.1 can be written in

a form that exploits the Kronecker product.

Definition 5.1. Relational Structure Matrix:

NK ⊗ N L N K ⊗ NL .

Q

5.4

Here NK and NL are the 0,1-adjacency matrices of the two graphs GK and GL . The matrices

N K and N L represent the complementary adjacency matrices which are computed as the

following.

Definition 5.2. Complementary Adjacency Matrices

N L ELL − NL − IL ,

N K EKK − NK − IK .

5.5

Here Enn ∈ IRn×n are matrices with all elements equal to one and In ∈ IRn×n denotes the unit

matrix. The complementary adjacency matrices have elements Nij 1 if the corresponding

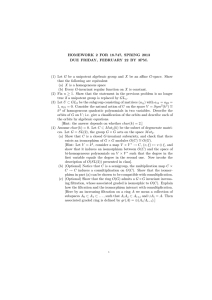

nodes i and j are not directly connected in the graph. To illustrate that, the adjacency matrix

NK for a small graph along with its complementary adjacency matrix are shown in Figure 2.

There, the matrices are represented as a binary image 0 black, 1 white.

We show this as similar representations will be used to illustrate some particular

matrices that appear later in this paper.

International Journal of Combinatorics

3

2

3

1

4

5

2

1

NK =

4

5

NK =

5

Figure 2: An example graph and its adjacency matrix NK along with its complementary counterpart N K .

The matrices are represented as an appropriate binary image 0 black, 1 white.

5.1. Subgraph Isomorphism

In the following, we show that a 0,1-solution vector x∗ of the optimization problem 5.1

that has an optimal objective value of zero represents a subgraph isomorphism. The matrix

is defined by 5.4. We first show that zero is the smallest possible value of the integer

Q

optimization problem. Then we show that every deviation from a subgraph isomorphism

results in an objective value larger than 0, meaning that an objective value of zero represents

a subgraph isomorphism.

Proposition 5.3. The minimal value of the combinatorial optimization problem 5.1 is zero.

and x are all nonnegative. In fact all their elements are either zero

Proof. The elements of Q

or one. Therefore, the lowest possible value of the quadratic cost term which can be rewritten

as the following sum:

x NK ⊗ N L N K ⊗ NL x

x Qx

K,L K,L N K NL rs xra xsb

NK ab N L

rs

ab

a,r b,s 5.6

ra,sb

Q

is zero.

Proposition 5.4. A solution with the minimal value of zero of the quadratic optimization problem

5.1 represents a subgraph isomorphism.

ra,sb xra xsb within the sum of 5.6

In order to prove this we look closer at the term Q

and show that it leads to a cost larger than 0 only if the considered matching violates the

condition for a subgraph isomorphism.

Proof. Due to the constraints, x represents a bipartite matching and only if the product xra xsb

ra,sb is equal to one can the term within the sum 5.6 be different from zero, and Q

ra,sb also as

NK ab N L rs N K ab NL rs has to be considered in detail. We refer to Q

structure comparison term. There are the following two cases that lead to xra xsb 1 in the

sum 5.6.

Case A. The node a and node b refer to the same node in GK meaning that a b. As the

diagonals of NK and N K are zero, one finds that NK aa 0 and N K aa 0. Then the term

NK aa N L rs N K aa NL rs xra xsa is always equal to zero and does not contribute to the

sum.

6

International Journal of Combinatorics

Table 1: List of all outcomes of the structure comparison term when two different nodes a and b of graph

GK are mapped to two different nodes r and s in the second graph GL . The first column describes the

relation between the concerned nodes and the last column shows the associated cost NK ab N L rs N K ab NL rs . Only in cases I and IV is the structure preserved and can lead to an isomorphism. No cost

is added in this cases. The other cases II and III do not preserve the structure and result in a total cost

larger than 0. For details see the text.

Node configurations

I: a,b adjacent; r,s adjacent

II: a,b adjacent; r,s not adjacent

III: a,b not adjacent; r,s adjacent

IV: a,b not adjacent; r,s not adjacent

NK ab

1

1

0

0

N L rs

0

1

0

1

N K ab

0

0

1

1

NL rs

1

0

1

0

Cost

0

1

1

0

Case B. The nodes a and b in GK refer to different nodes in GK a /

b. Due to the bipartite

matching constraint, a value xra xsb 1 represents the situation xra 1 and xsb 1, where

the nodes a and b in GK are mapped to two different nodes, r and s, in the second graph

GL respectively. Then four possible cases for the structure comparison term NK ab N L rs N K ab NL rs exist which result in a cost of either zero or one. These subcases are considered

separately below.

The subcases we have to consider in B include all four possible structural configurations between the two pairs of nodes a, b and r, s and are listed in Table 1. The

two mappings which do not preserve the structure, therefore violating the isomorphism

conditions, result in a cost of one. However, these four cases I–IV are described in more

detail below and we will see that a cost is added for every mapping that results in a difference

between the structure of graph GK and the considered subgraph of the second graph GL .

I If the two nodes a and b in the first graph GK are neighbours, NK ab 1, then no

cost is added in 5.6 if the nodes r and s in the second graph are neighbours too:

N L rs 0.

II Otherwise, if a and b are neighbours in GK , NK ab 1, and the corresponding

nodes r and s are no direct neighbours in the second graph, N L rs 1, then a cost

of 1 is added.

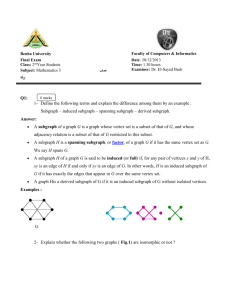

The mappings which correspond to configuration case I and II are visualised in

Figure 3.

III Analogously, the structure comparison term penalises assignments where pairs of

nodes a and b in the graph GK become neighbours r and s in the second graph

GL which were not adjacent before.

IV Finally, if a and b are not adjacent in the first graph GK and the nodes r and s in GL

are also not adjacent, no cost is added.

Figure 4 illustrates the situations III and IV in detail.

The itemisation of these four possible cases shows that only mappings that lead to a

change in the structure are penalised with a cost. Structure preserving mappings which are

compatible with a subgraph isomorphism are without costs and if all mappings are structure

preserving it represents a subgraph isomorphism.

International Journal of Combinatorics

Graph GL

Graph GK

a

xra = 1

b

7

Graph GK

a

Graph GL

xra = 1

r

r

b

xsb = 1

xs ′ b = 1

s

s′

a I: Good assignment no costs

b II: Bad assignment costly

Figure 3: a Case I adjacent nodes a and b in the graph GK are assigned to adjacent nodes r and s in the

graph GL . b Case II adjacent nodes a and b are no longer adjacent in the graph GL after the assignment.

The left mapping leads to no additional costs while the right undesired mapping adds 1 to the total cost.

Graph GK

a

Graph GL

xra = 1

x s′ b = 1

r

Graph GK

a

Graph GL

xra = 1

r

s′

b

a III: Bad assignment costly

b

xsb = 1

s

b IV: Good assignment no costs

Figure 4: a Case III a pair of nodes a and b becomes neighbours r and s after the assignment. This

undesired assignment adds 1 to the costs. b Case IV nodes a and b which are not adjacent in the object

graph GK are assigned to nodes which are also not adjacent in the scene graph GL . This assignment is

associated with no additional cost in 5.6.

Note that due to the symmetry of the adjacency matrices the quadratic cost term x Qx

is symmetric and every difference in the compared structures of the two graphs is considered

twice, resulting actually in a cost of 2 for every difference in the structure.

evaluate the full

Finally, the sum 5.6 and therefore the objective function x Qx

matching encoded in x. And only for matchings which lead to no difference in the mapped

substructure—and vice versa—all the terms within the sum 5.6 are zero. In this case, the

bipartite matching, represented by the vector x, is a subgraph isomorphism.

We wish to emphasise that the minimisation of 5.1 represents the search for a

bipartite matching which has the smallest possible structural deviation between GK and the

considered subgraph of GL . The optimization problem 5.1 can therefore be seen as a graph

edit distance with a cost of 2 for each addition or removal of an edge that is needed to turn the

first graph GK into the considered subgraph of the second graph GL .

6. Example Problem

In the next section we discuss in detail how we obtain the semidefinite relaxation of 5.1. In

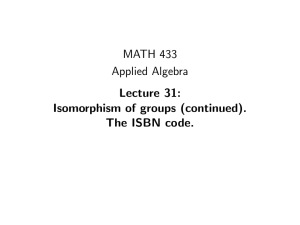

order to ease the reproduction of our approach, we illustrate some occurring details based

on the particular subgraph isomorphism problem that is shown in Figure 5. We will also be

8

International Journal of Combinatorics

3

5

6

2

4

3

7

4

2

8

1

1

9

5

6

15

10

7

11

14

12

13

Figure 5: A randomly created subgraph problem instance with K 7 and L 15. The two graphs

are shown along with their binary adjacency matrices. Is there a subgraph isomorphism? For the

shown problem instance we can compute a lower bound larger than 0, which proves that no subgraph

isomorphism is present.

able to conclude from a lower bound larger than 0 that for this problem instance no subgraph

isomorphism can be found.

7. Convex Problem Relaxation

In the following we explain the convex relaxation of the combinatorial isomorphism approach

5.1. It will be relaxed to a convex semidefinite program SDP which has the following

standard form:

LB min,

s.t.

TrQX,

TrAi X ci ,

for i 1, . . . , m, X 0.

7.1

The constraint X 0 means that the matrix X has to be positive semidefinite.

This convex optimization problem can be solved with standard methods like interior point

algorithms see, e.g., 22. Note that the solution of the convex relaxation 7.1 provides

a lower bound LB to the combinatorial optimization problem 5.1. A lower bound with a

value LB > 0 shows that no subgraph isomorphism can be present as the objective value of

5.1, for instance with subgraph isomorphism is zero. Below, we describe in detail how we

derive such a semidefinite program from 5.1.

7.1. SDP Objective Function

In order to obtain an appropriate SDP relaxation for the combinatorial subgraph matching

problem, we start with the reformulation of the objective function of 5.1

Tr x Qx

Tr Qxx

X

.

Tr Q

fx x Qx

7.2

Here we exploited the invariance of the trace operator under cyclic exchange. Besides

xx is positive semidefinite and has rank 1. The objective

being symmetric, the matrix X

and leaving the constraint X

0

function is relaxed by dropping the rank 1 condition of X

untouched. This makes the set of feasible matrices convex see, e.g., 8 and lifts the original

International Journal of Combinatorics

9

NK ⊗ N L N K ⊗ NL for the example subgraph

Figure 6: Here the relational structure matrix Q

isomorphism problem that is shown in Figure 5 is depicted.

problem 5.1 defined in a vector space with dimension KL into the space of symmetric,

positive semidefinite matrices with the dimension KL × KL. We note that the diagonal

reflect the approximation of the vector x which we are searching

elements of the relaxed X

2

for as xi xi is true for 0,1-binary values. We further remark that the computed lower bound

is constraint to be positive semidefinite.

LB in 7.1 can be negative although X

is a binary matrix and the particular matrix which

The relational structure matrix Q

represents the example subgraph isomorphism problem introduced in Section 6 is depicted

in Figure 6. One easily detect the patterns resulting from the Kronecker product formulation

in 5.4. On the fine scale, the pattern of the adjacency matrix of the graph GL can be seen.

The adjacency matrix of the graph GK is present on a larger scale compare the adjacency

matrices in Figure 5.

7.2. SDP Constraints

In the convex relaxation 7.1, we have to incorporate constraints in the form TrAi X ci ,

where Ai and X are matrices and ci ∈ IR is a scalar value. The aim is to approximate the

original constraints as good as possible in this way. Below we describe four different types

a–d of constraint matrices Ai along with their corresponding values for ci that we used

to tighten the relaxation and to approximate the bipartite matching constraints within the

standard SDP formulation 7.1.

a In order to get a tight relaxation, we exploit the fact that xi xi2 holds true for 0,1variables by introducing an additional first row and column in the problem matrix resulting

in matrices Q ∈ IRKL1×KL1 and X ∈ IRKL1×KL1 with the dimension increased by one

and X.

In Q the additional elements were set to zero in order not to change

compared to Q

the value of the objective function. The elements of the new row and the new column in X

are forced to be equal to the corresponding diagonal elements by using the following KL

10

International Journal of Combinatorics

constraint matrices

defined by:

int

int

Aj ∈ IRKL1×KL1 , j 2, . . . , KL 1 which are elements that can be

j

Akl 2δkj δlj − δkj δl1 − δlj δk1

for k, l 1, . . . , KL 1.

7.3

Here we make use of the Kronecker delta δij which is one if i j and zero otherwise.

Note that the Kronecker delta representation allows for an easy creation of the matrices in

any computer programming language. Here each constraint matrix int Aj has only 3 nonzero

elements. The corresponding constraint variables int cj are all zero.

b We restrict the first element in the matrix X to X11 1, using a single additional

constraint matrix one A ∈ IRKL1×KL1 whose elements can be expressed as

one

Akl δk1 δl1

for k, l 1, . . . , KL 1.

7.4

The matrix one A has only one A11 1 as nonzero element and the corresponding

constraint variable one c is 1.

c The equality constraints Lj1 xij 1, i 1, . . . , K, which are part of the bipartite

matching constraints AK x eK represent the constraint that each node of the smaller graph

is mapped to exactly one node of the scene graph. To model these, we define K constraint

matrices sum Aj ∈ IRKL1×KL1 , j 1, . . . , K, which ensure taking the chosen order of the

elements into account that the sum of the appropriate partition of the diagonal elements in

X is 1. Note that we operate on the diagonal elements of X as they reflect the vector x. The

matrix elements for the jth constraint matrix sum Aj can be expressed as follows:

sum

j

Akl jL1

ij−1L1

δik δil

for k, l 1, . . . , KL 1.

7.5

This defines K matrices where the appropriate partition with L consecutive elements

on the diagonal are set to one. All other elements are zero. For these constraints the

corresponding values of sum cj are all 1.

xx ∈ IRKL×KL , where x represents a bipartite matching,

d All integer solutions X

IK ⊗ELL −IL EKK −IK ⊗IL

have zero values at those matrix elements where the matrix Z

has nonzero elements. In order to approximate the bipartite matching constraints we want to

force the corresponding elements in the enlarged matrix X ∈ IRKL1×KL1 to be zero. The

matrices Enn ∈ IRn×n have all elements 1 and In ∈ IRn×n represent the unit matrices. We can

force the elements to be zero with the help of the constraint matrices which we denote Aars ,

They have the

Asab ∈ IRKL1×KL1 and that are determined by the indices a, r,s and s, a, b.

following matrix elements:

Aars

kl δk,aLr1 δl,aLs1 δk,aLs1 δl,aLr1 ,

ab

δk,sKb1

δl,sKa1 δk,sKa1 δl,sKb1,

Askl

with k, l 1, . . . , KL 1.

7.6

International Journal of Combinatorics

11

EKK − IK ⊗ IL IK ⊗ ELL − IL indicates all matrix elements here in

Figure 7: The shown matrix Z

for an example problem of the size K 7,

black that are forced to be zero within the solution matrix X

L 15. A single constraint forces two symmetric elements in the enlarged matrix X to be zero.

The indices a, r, s and s, a, b attain all valid combinations of the following triples where

s > r and b > a:

a, r, s : a 1, . . . , K; r 1, . . . , L; s r 1, . . . , L,

s, a, b : s 1, . . . , L; a 1, . . . , K; b a 1, . . . , K.

7.7

Even if the number of indices is high the structure of a single matrix is fairly simple

as every matrix has only two nonzero elements. For all these constraints the corresponding

constants c have to be zero. With this we get LL−LK/2KK−KL/2 additional constraints

that ensure zero values within the matrix X, where the corresponding elements in Z enlarged

have nonzero values. Therefore TrZX 0 is valid. Note

by one dimension compared to Z

that each constraint forces two elements in the solution matrix to be zero. The black pixels

in the matrix shown in Figure 7 indicate all the elements that are forced to be zero for the

example problem with K 7 and L 15.

Altogether this sums up to LL − LK/2 KK − KL/2 KL K 1 constraints of

the form TrAi X ci within the convex semidefinite problem formulation 7.1. By solving

the convex relaxation 7.1 we get a lower bound to the combinatorial optimization problem

5.1.

7.3. Computational Effort

The most computational effort within the SDP approach is needed for the computation of

the solution of the SDP relaxation 7.1. We used external SDP solvers for this task. An

independent benchmarking for several SDP solvers can be found in 23. A comparison of

three SDP solvers DSDP 19, PENSDP 20 and CSDP 18 on the basis of our own data is

12

International Journal of Combinatorics

Table 2: Mean computation time for three SDP solvers needed to solve the SDP relaxations of our experiments. The CSDP-solver fits best our needs.

Problem size K/L

7/15

CSDP 6.0.1

13.9 ± 1.2s

DSDP 5.8

17.7 ± 1.9s

PENSDP 2.2

19 ± 2s

shown in Table 2. There we compared the mean computation time needed to solve the SDP

relaxations for random problems of the size K 7 and L 15. The comparison was made on

a 2.8 GHz PC with 2 GB storage. We found that the CSDP-solver fits best our needs.

The CSDP-solver is a variant of the predictor corrector algorithm suggested by

Helmberg et al. 24 and is described in detail in 18. Below we sketch the storage

requirement and the computational time for this solver.

7.4. Storage Requirement

In 25 the author points out that the CSDP-solver requires approximately

8 m2 11 n21 n22 · · · n2s

7.8

bytes of storage. Here m is the number of constrains and n21 , n22 , . . . , n2s are the sizes of block

diagonal matrices used to describe the n×n problem and solution matrices of the semidefinite

program. In our case we have n1 n2 · · · ns 1 with s n. In terms of the graph sizes K

and L we have

n KL 1,

m1K

K 2 L KL2

.

2

2

7.9

Using that, we compute that a subgraph matching problem instance with K 7 and

L 15 needs about 10 MB while a problem instance with K 9 and L 14 already needs

17 MB of storage.

According to 18 the most difficult part in an iteration of the CSDP solver is

the computation of the Cholesky factorisation of a m × m matrix which requires a time

proportional to Om3 . This result is based on the here fulfilled assumption that individual

constraint matrices have O1 nonzero elements and that m is somewhat larger than n.

8. Relation to the Maximum Clique Formulation

In this section we discuss the connections that can be drawn between our subgraph

isomorphism approach and the maximum clique formulation for finding a maximum

common subgraph isomorphism. Details about the maximum clique search in arbitrary

graphs can be found for example in 14, 15, 26 and references therein. For our discussion

we introduce and use the following abbreviations. We denote the adjacency matrix of the

association graph, which is introduced to transform the maximum common subgraph problem

International Journal of Combinatorics

13

into a maximum clique problem, with A NK ⊗ NL N K ⊗ N L . This is equivalent to the

elementwise expression

Ara,sb 1 − NK ab − NL rs 2 , for a / b, r / s,

0,

otherwise,

8.1

that is, for example, provided in 15. This can be seen by rewriting the Kronecker product

representation of A directly into the elementwise expression Ara,sb NK ab NL rs 1 −

NK ab −δab 1−NL rs −δrs and exploiting that for 0,1-binary elements Nij Nij 2 is true.

We use Q NK ⊗N L N K ⊗NL for the structure matrix and Z EKK −IK ⊗IL IK ⊗ELL −

IL for the indicator matrix, where the integer solution—holding the bipartite matching

constraints—is expected to have zero values compare also Figure 7. Furthermore, the unit

matrix is denoted by I IK ⊗ IL and E EKK ⊗ ELL is the matrix with all elements one.

Note that all the involved matrices have a dimension of KL × KL. Using these abbreviations

one finds the following connection between the matrices involved in the maximum clique

formulation A and I and our SDP-approach Q and Z:

E Q Z A I.

8.2

Equation 8.2 shows explicitly the complementary nature of the matrices in the

SDP approach and the maximum clique formulation. We can rewrite the combinatorial

minimisation problem 5.1 as

min,

x

x Qx x Zx,

s.t., AK x eK ,

AL x ≤ e L ,

x ∈ {0, 1}KL ,

8.3

where x Zx 0 is guaranteed by the bipartite matching constraint. Recall, that the 0,1indicator-vector x ∈ IRKL with length KL has K elements set to one e x K, a single

one within each consecutive segment of length L. Exploiting this, we obtain x Ex K 2 and

x Ix K. Then using 8.2 we find

x Ex − x Ix K 2 − K x Q Zx x Ax,

8.4

and due to the fixed value on the left-hand-side of 8.4 minimizing x Q Zx is equivalent

to maximising x Ax with the same constraints for x. Therefore, maximising x Ax holding

the bipartite matching constraint is the search for a set of nodes with size K that deviates

the least from a clique. In the maximum clique problem, that arises from the largest common

subgraph problem, one is searching for a 0,1-vector x that indicates the nodes belonging to the

maximum clique. Thereby making no further assumptions about the constraints. However,

from the construction of the association graph from the largest common subgraph problem,

one finds that the largest clique w that can be found in the association graph in the presents

of a full subgraph isomorphism has w K members and that the obtained indicator vector

will hold the bipartite matching constraint. That becomes clear as two nodes in the association

graph that violate the bipartite matching constraints are not connected the adjacency matrix

14

International Journal of Combinatorics

A has zero elements where Z has elements one. and therefore cannot be part of the same

clique. That means also, that the reformulation of the largest common subgraph problem into

a maximum clique problem for graphs GK and GL with K ≤ L has a trivial upper bound of K

for the largest clique size w. Using the SDP relaxation 7.1 and that TrZX 0 we obtain a

lower bound LB for x Q Zx which we can use in 8.4 and get the following expression:

1−

1

K

−

x Ax

LB

≥

.

2

K

K2

8.5

Knowing from the Motzkin Straus theorem 27 or see, e.g., 26 that maxx Ax/K 2 1 − 1/K holds exactly if there is a maximum clique with size K indicated by a binary

vector x, we see that a positive lower bound LB > 0 shows that a clique with K nodes in

the association graph cannot be found meaning also that no full subgraph isomorphism can

be found in the original problem. Computing the following upper bounds for the clique-size

of the unconstraint maximum clique search that were, for example, used in 26:

w≤

3

9 8m − n

UB1 ,

2

8.6

w ≤ ρA 1 UB2 ,

8.7

w ≤ N−1 1 UB3 ,

8.8

w ≤n−

rank A

UB4 ,

2

8.9

one finds that these are largely above the bound w ≤ K which is inherent in the reformulation

of the largest common subgraph problem into a maximum clique problem. In the experiment

section we will see that the bounds for the clique size are not even close to the trivial bound

arising from the largest common subgraph problem reformulation. Of course they are still

useful for the general maximum clique problem of arbitrary graphs. We used the same

notation as the authors in 14 and 26. Therefore, ρA is the spectral radius of the adjacency

matrix A of the association graph, n KL is the number of nodes and m the number of edges

in the association graph and δ 2m/n2 is the density of ones in A. Furthermore, N−1 denotes

the number of eigenvalues of A that are smaller or equal to minus one −1 and A E − A − I

is the inverse/complementary adjacency matrix. Computing these bounds for our example

subgraph matching problem see Section 6, one obtains largest upper bounds for the clique

size in the range from 35 to 61 see also the bounds listed in Table 3 which is far above

the trivial bound of 7. Note that the computed upper bounds approximately agree with the

results for this density δ 0.346 in 26. However, the lower bound LB 0.855 > 0 proves

that the maximum clique size is lower than K 7 which accounts for a tight upper bound

w ≤ 6. As the clique-based upper bounds 7.1–8.9 are largely above the trivial bound

w ≤ 7 this suggests that additional knowledge in the clique formulation namely the bipartite

matching constraints for this particular problem-formulation can and should be exploited to

improve the upper bounds. We summarise our results/experiments in the next section.

International Journal of Combinatorics

15

Table 3: Bounds for the example problem. The largest clique that can be obtained in the related maximum

clique problem is w 6 and the lower bound LB 0.855 > 0 translates to the fact that the size of the

maximum clique must be lower than K 7, providing a tight upper bound ubnd w ≤ 6.

K

7

n KL

105

δ

0.346

w

6

LB 7.1

0.855

UB1 8.6

61.602

UB2 8.7

38.344

UB3 8.8

35

UB4 8.9

52.50

Table 4: Summarisation of the results. We performed 1000 NExp. random subgraph experiments from

which 661 NnoIso. instances are known to have no subgraph isomorphism. The column denoted with

Nfailed shows how often the bound was not tight enough to detect that no subgraph isomorphism can

be found. In Nbnd>0 cases the bound is tight enough. Without preprocessing the problem matrix size is

n × n KL 1 × KL 1 106 × 106 while with the described pruning the size varies from problem

instance to problem instance. However the mean problem matrix is reduced to about 69 × 69 n × n.

Preproc.

Non

Pruning

K/L

7/15

7/15

NExp.

1000

1000

NnoIso.

661

661

n×n

106 × 106

69 × 69

Nbnd>0

123 ≈19%

327 ≈49%

Nbnd≤0

877

673

Nfailed

538

334

9. Results to the Non-Isomorphism Bound

For the illustrative example see Figure 5 we computed a lower bound LB 0.855 > 0 using

the SDP relaxation 7.1, which proves that a subgraph isomorphism does not exist in this

problem instance. The bound and optimal values along with the distribution of the objective

values for that problem are shown in Figure 8. Note that the objective values of 5.1 that can

can only have values

be attained are restricted to discrete values as the quadratic term αx Qx

which are multiples of 2α. With α 1.0 the optimal objective value is 2.0. Note that we did not

apply any preprocessing like the elimination of mappings that could not lead to a subgraph

isomorphism for the illustrative example.

For a further investigation of the bound 7.1, we created 1000 connected random

subgraph matching problem instances for which we have chosen the size of the two graphs

GK and GL to be K 7 and L 15. The edge probability of the graph GK was set to 0.5

and the probability for an edge in the second graph was set to 0.2. The ground truth for these

experiments were computed using the VFlib 28.

The experiments reveal that for various problem instances, the relaxation is tight

enough to conclude that no subgraph isomorphism can exist. We obtained 123 problem

instances with a lower bound LB > 0 which proves that no subgraph isomorphism can occur

in these problem instances. The other 877 problem instances have a lower bound LB ≤ 0. From

the ground truth data we know that for 538 of these problem instances the combinatorial

optimum is larger than 0 indicating that for these cases the relaxation is not tight enough to

detect that a subgraph isomorphism cannot be found. These results are summarised in Table 4

in the row labeled with “non-” preprocessing. The upper bounds for the equivalent maximum

clique formulation of the same 1000 problems are all largely above the trivial bound of K 7

that is inherent in the problem formulation see Table 5 and in no case this allows to prove

that no subgraph isomorphism can occur.

In the next section we investigate the improvements to the bound computation

when mappings are removed from the problem formulation that cannot lead to a subgraph

isomorphism.

16

International Journal of Combinatorics

Probability density

Probability distribution of the objective function values

Graph: K = 7, L = 15, α = 1

0.2

0.15

0.1

∗

= 0.855

fbound

∗

= 40

fmax

∗

fopt

=2

0.05

0

0

8

16

24

32

40

Objective value

Figure 8: The distribution of the objective values for the subgraph isomorphism problem which is shown

can only

in Figure 5. The objective values are restricted to discrete values, as the quadratic term αx Qx

attain values which are multiples of 2α. With α 1.0 the optimal objective value is 2.0 and the obtained

lower bound is 0.855 > 0, which is a nonisomorphism proof for this problem instance.

Table 5: None of the upper bounds for the maximum clique size comes close to the trivial bound K 7

that is inherent in the reformulation of the largest common subgraph problem into the maximum clique

problem. The clique bounds are computed for the equivalent maximum clique formulation of the same

1000 problems used in Table 4.

Bound

UB1

UB2

UB3

UB4

K/L

7/15

7/15

7/15

7/15

Mean bound

66.00 ± 3.32

44.06 ± 3.88

40.09 ± 2.80

52.55 ± 0.19

Min.

53.31

30.69

32

52.50

Max

74.55

54.34

54

54.50

9.1. Towards Larger Problem Instances

We implemented a simple pruning technique to reduce the dimension of the SDP problem

size. The basic idea of this is to eliminate all mappings i → j for which the degree the number

of incident edges of a node i in the first graph is larger than the degree of node j in the

second graph. Such a mapping cannot lead to a subgraph isomorphism. The computational

advantage is that every removed mapping reduces the dimension of the problem matrix

by one n × n → n − 1 × n − 1 and also allows to remove the corresponding constraints

from the semidefinite problem. A feasibility test then checks whether the remaining mapping

opportunities can still lead to a bipartite matching such that all nodes of the smaller graph

can be mapped to different nodes in the larger graph. Note that such an infeasible situation

might also results in an infeasible semidefinite problem formulation. For this pruning

approach one has to keep in mind that the new bound of the remaining problem does not

necessarily represent a lower bound of the original problem. This is because in the case of

a nonisomorphism a removed mapping could be part of the matching which belongs to the

global optimum of the original problem. In fact the combinatorial optimum for the remaining

problem can only increase or stay the same and the computed bound is a lower bound to

the new problem only. However, for problem cases with an isomorphism the optimum does

not change it is still zero and therefore a bound LB > 0 of the new problem still proves that

an isomorphism cannot exist.

Applying the above-described technique to the problem instances in the previous

section, the size of the SDP problem matrices reduces from 106 × 106 KL 1 × KL 1 to

International Journal of Combinatorics

17

69 × 69 in the mean. The number of cases where the relaxation is not tight enough improved

from 538 to 334 out of 661 cases with a combinatorial optimum larger than 0 see also the

second row in Table 4.

We expect that the bound could be slightly tightened by including also the L

inequalities in the SDP relaxation that are currently not considered. However, first findings

indicate that the influence might be negligible which could be caused due to the fact that they

are already modeled by the incorporated constraints. Another computational improvement

within the SDP algorithms could result from an exploitation of the highly regular structure

within the SDP matrix.

10. Discussion

In this paper we proposed a convex relaxation bound to the subgraph isomorphism problem

and showed that the bound is not only of theoretical interest but also applies to several

instances of subgraph matching problems. It would be interesting to investigate which

criteria a subgraph matching problem has to fulfill to result in a tight relaxation. Such insights

could be useful in the process of creating or obtaining object graphs from images for object

recognition tasks. At the current stage, reasonable tight bounds result from semidefinite

problems with a problem matrix size of up to 200 × 200 elements. An improvement of the

proposed method could be expected when also the inequalities are included in the SDP

relaxation. However, for increasing problem instances the relaxation will get less tight and a

lower bound not larger than zero becomes more likely. But note that even less tight solutions

in practice still lead to good integer solutions see, e.g., 11.

Acknowledgments

This research was partly supported by Marie Curie Intra-European Fellowships within the

6th European Community Framework Programme and an Alain Bensoussan Fellowship from

the European Research Consortium for Informatics and Mathematics ERCIM.

References

1 J. R. Ullmann, “An algorithm for subgraph isomorphism,” Journal of the Association for Computing

Machinery, vol. 23, no. 1, pp. 31–42, 1976.

2 H. G. Barrow and R. M. Burstall, “Subgraph isomorphism, matching relational structures and

maximal cliques,” Information Processing Letters, vol. 4, no. 4, pp. 83–84, 1976.

3 B. T. Messmer and H. Bunke, “A new algorithm for error-tolerant subgraph isomorphism detection,”

IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 20, no. 5, pp. 493–504, 1998.

4 D. Eppstein, “Subgraph isomorphism in planar graphs and related problems,” Journal of Graph

Algorithms and Applications, vol. 3, no. 3, pp. 1–27, 1999.

5 H. Bunke, “Error correcting graph matching: on the influence of the underlying cost function,” IEEE

Transactions on Pattern Analysis and Machine Intelligence, vol. 21, no. 9, pp. 917–922, 1999.

6 A. Sanfeliu and K. S. Fu, “A distance measure between attributed relational graphs for pattern

recognition,” IEEE Transactions on Systems, Man and Cybernetics, vol. 13, no. 3, pp. 353–362, 1983.

7 Y.-K. Wang, K.-C. Fan, and J.-T. Horng, “Genetic-based search for error-correcting graph isomorphism,” IEEE Transactions on Systems, Man, and Cybernetics, Part B, vol. 27, no. 4, pp. 588–597, 1997.

8 H. Wolkowicz, R. Saigal, and L. Vandenberghe, Eds., Handbook of Semidefinite Programming, Kluwer

Academic Publishers, Boston, Mass, USA, 2000.

18

International Journal of Combinatorics

9 M. X. Goemans and D. P. Williamson, “Improved approximation algorithms for maximum cut and

satisfiability problems using semidefinite programming,” Journal of the Association for Computing

Machinery, vol. 42, no. 6, pp. 1115–1145, 1995.

10 J. Keuchel, C. Schnörr, C. Schellewald, and D. Cremers, “Binary partitioning, perceptual grouping,

and restoration with semidefinite programming,” IEEE Transactions on Pattern Analysis and Machine

Intelligence, vol. 25, no. 11, pp. 1364–1379, 2003.

11 C. Schellewald and C. Schnörr, “Probabilistic subgraph matching based on convex relaxation,” in

Proceedings of the 5th International Workshop on Energy Minimization Methods in Computer Vision and

Pattern Recognition (EMMCVPR ’05), vol. 3757 of Lecture Notes in Computer Science, pp. 171–186, 2005.

12 H. Yu and E. R. Hancock, “Graph seriation using semi-definite programming,” in Proceedings of the

5th IAPR International Workshop on Graph-Based Representations in Pattern Recognition (GbRPR ’05), vol.

3434 of Lecture Notes in Computer Science, pp. 63–71, 2005.

13 M. Agrawal and L. S. Davis, “Camera calibration using spheres: a semi-definite programming

approach,” in Proceedings of the 9th IEEE International Conference on Computer Vision (ICCV ’03), vol.

2, pp. 782–789, 2003.

14 I. M. Bomze, M. Budinich, P. M. Pardalos, and M. Pelillo, “The maximum clique problem,” in Handbook

of Combinatorial Optimization, D.-Z. Du and P. M. Pardalos, Eds., pp. 1–74, Kluwer Academic, Boston,

Mass, USA, 1999.

15 M. Pelillo, “Replicator equations, maximal cliques, and graph isomorphism,” Neural Computation, vol.

11, no. 8, pp. 1933–1955, 1999.

16 M. R. Garey and D. S. Johnson, Computers and Intractability, A Guide to the Theory of NP-Completeness,

W. H. Freeman and Company, San Francisco, Calif, USA, 1991.

17 P. M. Pardalos and S. A. Vavasis, “Quadratic programming with one negative eigenvalue is NP-hard,”

Journal of Global Optimization, vol. 1, no. 1, pp. 15–22, 1991.

18 B. Borchers, “CSDP, a C library for semidefinite programming,” Optimization Methods and Software,

vol. 11, no. 1, pp. 613–623, 1999.

19 S. J. Benson and Y. Ye, “DSDP3: dual scaling algorithm for general positive semidefinite

programming,” Tech. Rep. ANL/MCS-P851-1000, Argonne National Labs, 2001.

20 M. Kočvara and M. Stingl, “Pennon: a code for convex nonlinear and semidefinite programming,”

Optimization Methods & Software, vol. 18, no. 3, pp. 317–333, 2003.

21 A. Graham, Kronecker Products and Matrix Calculus with Applications, Ellis Horwood and John Wiley &

Sons, 1981.

22 Y. Ye, Interior Point Algorithms: Theory and Analysis, John Wiley & Sons Inc., New York, NY, USA, 1997.

23 H. D. Mittelmann, “An independent benchmarking of SDP and SOCP solvers,” Mathematical

Programming Series B, vol. 95, no. 2, pp. 407–430, 2003.

24 C. Helmberg, F. Rendl, R. J. Vanderbei, and H. Wolkowicz, “An interior-point method for semidefinite

programming,” SIAM Journal on Optimization, vol. 6, no. 2, pp. 342–361, 1996.

25 Brian Borchers. CSDP 4.8 User’s Guide, 2004.

26 M. Budinich, “Exact bounds on the order of the maximum clique of a graph,” Discrete Applied

Mathematic, vol. 127, no. 3, pp. 535–543, 2003.

27 T. S. Motzkin and E. G. Straus, “Maxima for graphs and a new proof of a theorem of Turán,” Canadian

Journal of Mathematics. Journal Canadien de Mathématiques, vol. 17, pp. 533–540, 1965.

28 L. P. Cordella, P. Foggia, C. Sansone, and M. Vento, “Performance evaluation of the vf graph matching

algorithm,” in Proceedings of the 10th International Conference on Image Analysis and Processing (ICIAP

’99), p. 1172, IEEE Computer Society, Washington, DC, USA, 1999.

Advances in

Operations Research

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Advances in

Decision Sciences

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Mathematical Problems

in Engineering

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Journal of

Algebra

Hindawi Publishing Corporation

http://www.hindawi.com

Probability and Statistics

Volume 2014

The Scientific

World Journal

Hindawi Publishing Corporation

http://www.hindawi.com

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

International Journal of

Differential Equations

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Volume 2014

Submit your manuscripts at

http://www.hindawi.com

International Journal of

Advances in

Combinatorics

Hindawi Publishing Corporation

http://www.hindawi.com

Mathematical Physics

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Journal of

Complex Analysis

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

International

Journal of

Mathematics and

Mathematical

Sciences

Journal of

Hindawi Publishing Corporation

http://www.hindawi.com

Stochastic Analysis

Abstract and

Applied Analysis

Hindawi Publishing Corporation

http://www.hindawi.com

Hindawi Publishing Corporation

http://www.hindawi.com

International Journal of

Mathematics

Volume 2014

Volume 2014

Discrete Dynamics in

Nature and Society

Volume 2014

Volume 2014

Journal of

Journal of

Discrete Mathematics

Journal of

Volume 2014

Hindawi Publishing Corporation

http://www.hindawi.com

Applied Mathematics

Journal of

Function Spaces

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Optimization

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014