Document 10847200

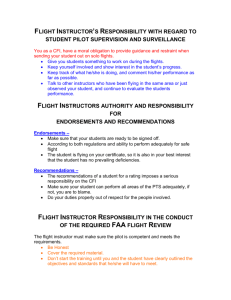
