A Moving Average Sieve Bootstrap for Unit Root Tests Patrick Richard


A Moving Average Sieve Bootstrap for Unit Root Tests

by

Patrick Richard

Department of Economics

McGill University

Montréal, Québec, Canada

Département d’économie

Université de Sherbrooke

Sherbrooke, Québec, Canada

ABSTRACT

This paper considers the use of bootstrap methods for the test of the unit root

hypothesis for a time series with a first difference that can be written as a general

linear process admitting an infinite moving average (MA(∞)) representation. The

standard test procedure for such cases is the augmented Dickey Fuller (ADF) test

introduced by Said and Dickey (1984). However, it is well known that this test’s

true rejection probability under the unit root null hypothesis is often quite different from what asymptotic theory predicts. The bootstrap is a natural solution to

such error in rejection probability (ERP) problems and ADF tests are consequently

often based on block bootstrap or autoregressive (AR) sieve bootstrap distributions. In this paper, we propose the use of moving average (MA) sieve bootstrap

distributions. To justify this, we derive an invariance principle for sieve bootstrap

samples based on MA approximations. Also, we demonstrate that the ADF test

based on this MA sieve bootstrap is consistent. Similar results have been proved

for the AR sieve bootstrap by Park (2002) and Chang and Park (2003). The finite

sample performances of the MA sieve bootstrap ADF test are investigated through

simulations. Our main conclusions are that it is often, though not always, more accurate than the AR sieve bootstrap or the block bootstrap, that it requires smaller

parametric orders to achieve comparable or better accuracy than the AR sieve and

that it is more robust than the block bootstrap to the choice of approximation

order or block length and slightly more robust to the data generating process.

I am very grateful to my thesis director, professor Russell Davidson, for suggesting this research and

for his constant guidance and support. I also thank professors John Galbraith and Victoria Zinde-Walsh

for their very insightful comments.

April 2006.

1 Introduction

We consider the problem of testing the hypothesis of the presence of a unit root

in a time series process yt when its first difference ∆yt is stationary. The simplest

unit root test procedure is the one devised by Dickey and Fuller (1979, 1981) and

consists in testing the hypothesis H0 : α=1 against the alternative H1 : |α| <1 in the

simple regression, often referred to as the Dickey-Fuller (DF) regression:

$$y_t = \alpha y_{t-1} + e_t \tag{1}$$

where et , which is equal to ∆yt under the null, is assumed to be white noise. Often, the DF regression is augmented with an intercept and a deterministic trend.

Under the null hypothesis, this regression is unbalanced, in the sense that it implies regressing a non-stationary variable on a stationary one. Accordingly, the

asymptotic distribution of the t-statistic of H0 against H1 , henceforth called the

DF statistic, is non-standard and is a function of Brownian motions. This distribution was first derived by Phillips (1987) and its critical values can be found in

Fuller (1996) among others. The major flaw of the DF testing procedure is that it

assumes that the error process et is a white noise. In reality, this is seldom true.

Unfortunately, this happens to be a crucial assumption for the derivation of the

asymptotic distribution of the DF test, so that whenever et is not white noise, the

DF test is not appropriate.
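As an illustration of regression (1), the DF statistic is the ordinary t-ratio for α = 1. The sketch below is a minimal version without intercept or trend; the function name `df_tstat` and the simulated random walk are illustrative assumptions, not part of the paper.

```python
import numpy as np

def df_tstat(y):
    """t-statistic for H0: alpha = 1 in y_t = alpha * y_{t-1} + e_t (no constant, no trend)."""
    y = np.asarray(y, dtype=float)
    y_lag, y_cur = y[:-1], y[1:]
    alpha_hat = (y_lag @ y_cur) / (y_lag @ y_lag)   # OLS slope
    resid = y_cur - alpha_hat * y_lag
    s2 = (resid @ resid) / (len(y_cur) - 1)         # residual variance with one regressor
    se = np.sqrt(s2 / (y_lag @ y_lag))              # standard error of alpha_hat
    return (alpha_hat - 1.0) / se

rng = np.random.default_rng(0)
y = np.cumsum(rng.standard_normal(200))             # a pure random walk, so H0 holds
tau = df_tstat(y)
```

The value `tau` must be compared with DF critical values, not with Student t or normal ones, because of the non-standard limiting distribution discussed above.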

Numerous alternative testing procedures have been proposed over the years,

among which two have come to be widely used. The first, which is both the

simplest and the most popular, consists in augmenting the DF regression with

lagged values of ∆yt . Under some fairly weak conditions, this augmented Dickey

Fuller (ADF) test has been shown to be asymptotically consistent, see Said and

Dickey (1984) and Chang and Park (2002). The second, called the Phillips and

Perron (PP) test (Phillips 1987, Phillips and Perron 1988), seeks to modify the DF

test statistic non-parametrically so that it has the DF distribution asymptotically

under the null.

The success of these two tests at providing reliable inferences in finite samples

depends on their ability to correctly model the correlation structure of ∆yt . In some

cases, such as when ∆yt has an infinite correlation structure, this is impossible.

It is nevertheless still possible to show that both tests are asymptotically valid,

provided that the lag length (ADF) or the lag truncation (PP) increases at an
appropriate rate as a function of the sample size and that some regularity conditions

are respected. Unfortunately, even though they are asymptotically valid, these

tests are known to suffer from important error in rejection probability (ERP) in

small samples when the series ∆yt is highly correlated. 1

The bootstrap is a natural solution to ERP problems. It does however entail

the estimation of the model under the null. This is a necessary condition for the

bootstrap samples to display the same kind of correlation as the original sample.

This estimation must, of course, be consistent, but also as precise as possible in

small samples in order to yield accurate inferences. Indeed, the key feature of

bootstrap methods for dependent processes is to successfully replicate the original

sample’s correlation. One way to do this is to use a method called the block bootstrap (Künsch, 1989). This is carried out by first estimating the model under the

null and then resampling blocks of residuals. Another way is to approximate the

dependence structure of the residuals under the null by an increasing, finite order

model. This is called the sieve bootstrap. So far, the only sieve bootstrap procedure that has been considered by theoretical econometricians is the autoregressive
sieve bootstrap (see, among others, Bühlmann, 1997). This is mainly because it

is easy to implement and it has proven to perform better than asymptotic tests

in small samples. With modern computer technology and the advancement of estimation theory, other sieve bootstrap methods can now be considered. Among

these are MA and ARMA sieves.

In the present paper, we derive some asymptotic properties of MA sieve bootstrap unit root tests. We also propose to estimate the MA sieve bootstrap models

by the analytical indirect inference method of Galbraith and Zinde-Walsh (1994)

(henceforth, GZW (1994)). This method is computationally much faster than maximum likelihood, which is an asset for the bootstrap test if one needs to compute

several test statistics or perform simulation experiments. Moreover, as argued by

the authors, this method is more robust to under-specification and is more accurate because it minimizes the Hilbert distance between the estimated process and

the true one. We discuss these points below. Our results can however be extended

to maximum likelihood estimates without any problem since both estimators are

asymptotically consistent. In related work, we combine the results of the present
paper with those derived by Park (2002) and Chang and Park (2003) (henceforth CP
(2003)) for AR sieve bootstrap unit root tests and propose a sieve bootstrap test
based on ARMA sieves. In the next section, we derive an invariance principle for
the MA sieve bootstrap. In section 3 we establish the asymptotic validity of ADF
tests based on these sieves. Finally, we present some simulation evidence in favor
of the MA sieve bootstrap ADF test in section 4, apply the technique to a highly
simplified test for PPP among a small set of European countries in section 5 and
conclude and point to further research directions in section 6.

1 Several analytical solutions have been proposed to circumvent this problem. Among these,
one of the most popular is the non-parametric correction of the PP test proposed by Perron
and Ng (1996). A more recent one is the correction to the ADF test proposed by Johansen
(2005). We do not discuss them here.

2 The invariance principle

It is a well known fact that standard central limit theory cannot be applied to

unit root tests. The standard tools for the asymptotic analysis of unit root tests

are theorems called invariance principles or functional central limit theorems. Establishing results of this sort for sieve bootstrap procedures is a relatively new
strand in the literature and only two attempts have been made to date. First, Bickel

and Bühlmann (1999) derive a bootstrap functional central limit theorem under

a bracketing condition for the AR sieve bootstrap. Second, Park (2002) derives

an invariance principle for the AR sieve bootstrap. The approach of Park (2002)

is more interesting for our present purpose because his invariance principle establishes the convergence of the bootstrap partial sum process and most of the

theory on unit root tests is based on such processes. In this section, we derive a

similar invariance principle for processes generated by a MA sieve bootstrap. This

in turn allows us to prove, in section 3, that the MA sieve bootstrap ADF test

follows the DF distribution asymptotically under the I(1) null hypothesis.

2.1 General bootstrap invariance principle

Let {εt} be a sequence of iid random variables with finite second moment. Consider

a sample of size n and define the partial sum process:

$$W_n(t) = \frac{1}{\sigma\sqrt{n}} \sum_{k=1}^{[nt]} \varepsilon_k$$

where $[y]$ denotes the largest integer smaller than or equal to $y$ and $t$ is an index such
that $(j-1)/n \le t < j/n$, $j = 1, 2, \ldots, n$. Thus, $W_n(t)$ is a step function that converges to a
random walk as $n \to \infty$. Also, as $n \to \infty$, $W_n(t)$ becomes infinitely dense on the [0, 1]
interval. By the classical Donsker’s theorem, we know that

$$W_n \xrightarrow{d} W,$$

where W is the standard Brownian motion. The Skorohod representation theorem
tells us that there exists a probability space (Ω, F, P), where Ω is the space containing all the possible outcomes, F is a σ-field and P a probability measure, that
supports W and a process $W_n'$ such that $W_n'$ has the same distribution as $W_n$ and

$$W_n' \xrightarrow{a.s.} W. \tag{2}$$

Indeed, as demonstrated by Sakhanenko (1980), $W_n'$ can be chosen so that

$$\Pr\{\|W_n' - W\| \ge \delta\} \le n^{1-r/2} K_r E|\varepsilon_t|^r \tag{3}$$

for any $\delta > 0$ and $r > 2$ such that $E|\varepsilon_t|^r < \infty$ and where $K_r$ is a constant that depends
on r only. The result (3) is often referred to as the strong approximation. Because
the invariance principle we seek to establish is a distributional result, we do not
need to distinguish $W_n$ from $W_n'$. Consequently, because of equations (2) and
(3), we say that $W_n \xrightarrow{a.s.} W$, which is stronger than the convergence in distribution
implied by Donsker’s theorem.
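The partial sum process and its Brownian limit can be checked numerically. The sketch below evaluates W_n on the grid j/n and looks at the endpoint W_n(1), which should be approximately N(0,1). The sample size, number of replications and the function name `partial_sum_process` are illustrative assumptions.

```python
import numpy as np

def partial_sum_process(eps, sigma):
    """Return W_n(j/n) for j = 0, ..., n, where W_n(t) = (sigma * sqrt(n))^{-1} * sum_{k <= [nt]} eps_k."""
    eps = np.asarray(eps, dtype=float)
    n = len(eps)
    return np.concatenate(([0.0], np.cumsum(eps))) / (sigma * np.sqrt(n))

rng = np.random.default_rng(1)
n, reps = 500, 2000
# W_n(1) across many independent samples; its law should be close to that of W(1), i.e. N(0, 1)
endpoints = np.array(
    [partial_sum_process(rng.standard_normal(n), 1.0)[-1] for _ in range(reps)]
)
```

The same function applied to resampled, recentered residuals divided by their standard deviation gives the bootstrap analogue of this process.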

Now, suppose that we can obtain an estimate of $\{\varepsilon_t\}_{t=1}^n$, which we will denote as
$\{\hat\varepsilon_t\}_{t=1}^n$, from which we can draw bootstrap samples of size n, denoted as $\{\varepsilon_t^*\}_{t=1}^n$. If
we suppose that $n \to \infty$, then we can build a bootstrap probability space $(\Omega^*, F^*, P^*)$
which is conditional on the realization of the set of residuals $\{\hat\varepsilon_t\}_{t=1}^\infty$ from which
the bootstrap errors are drawn. What this means is that each bootstrap drawing
$\{\varepsilon_t^*\}_{t=1}^n$ can be seen as a realization of a random variable defined on $(\Omega^*, F^*, P^*)$.
In all that follows, the expectation with respect to this space (that is, with
respect to the probability measure $P^*$) will be denoted by $E^*$. For example, if
the bootstrap samples are drawn from $\{(\hat\varepsilon_t - \bar\varepsilon_n)\}_{t=1}^n$, then $E^*\varepsilon_t^* = 0$ and
$E^*\varepsilon_t^{*2} = \hat\sigma_n^2 = (1/n)\sum_{t=1}^n \hat\varepsilon_t^2$. Also, $\xrightarrow{d^*}$, $\xrightarrow{p^*}$ and $\xrightarrow{a.s.^*}$ will be used to denote convergence in
distribution, in probability and almost sure convergence of the functionals of the
bootstrap samples defined on $(\Omega^*, F^*, P^*)$. Further, following Park (2002), for any
sequence of bootstrapped statistics $\{X_n^*\}$ we say that $X_n^* \xrightarrow{d^*} X$ a.s. if the conditional
distribution of $\{X_n^*\}$ weakly converges to that of X a.s. on all sets of $\{\hat\varepsilon_t\}_{t=1}^\infty$. In other
words, if the bootstrap convergence in distribution ($\xrightarrow{d^*}$) of functionals of bootstrap
samples on $(\Omega^*, F^*, P^*)$ happens almost surely for all realizations of $\{\hat\varepsilon_t\}_{t=1}^\infty$, then we
write $\xrightarrow{d^*}$ a.s.

Let $\{\varepsilon_t^*\}_{t=1}^n$ be a realization from a bootstrap probability space. Define

$$W_n^*(t) = \frac{1}{\hat\sigma_n\sqrt{n}} \sum_{k=1}^{[nt]} \varepsilon_k^*.$$

Once again, by Skorohod’s theorem, there exists a probability space on which a
Brownian motion $W^*$ is supported and on which there also exists a process $W_n^{*\prime}$
which has the same distribution as $W_n^*$ and such that

$$\Pr{}^*\{\|W_n^{*\prime} - W^*\| \ge \delta\} \le n^{1-r/2} K_r E^*|\varepsilon_t^*|^r \tag{4}$$

for $\delta$, $r$ and $K_r$ defined as before. Because $W_n^{*\prime}$ and $W_n^*$ are distributionally equivalent, we will not distinguish them in all that follows. Equation (4) allows us to
state the following theorem, which is also theorem 2.2 in Park (2002).

Theorem (Park 2002, theorem 2.2, p. 473) If $E^*|\varepsilon_t^*|^r < \infty$ a.s. and

$$n^{1-r/2} E^*|\varepsilon_t^*|^r \xrightarrow{a.s.} 0 \tag{5}$$

for some $r > 2$, then $W_n^* \xrightarrow{d^*} W$ a.s. as $n \to \infty$.

This result comes from the fact that if condition (5) holds, then equation (4)
implies $W_n^* \xrightarrow{d^*} W^*$ a.s. Since the distribution of $W^*$ is independent of the set of
residuals $\{\hat\varepsilon_t\}_{t=1}^\infty$, we can equivalently say $W_n^* \xrightarrow{d^*} W$ a.s. Hence, whenever condition
(5) is met, the invariance principle follows.

2.2 Invariance principle for MA sieve bootstrap

We now establish the invariance principle for ε̂t obtained from a MA sieve bootstrap. We consider a general linear process:

$$u_t = \pi(L)\varepsilon_t$$

where

$$\pi(z) = \sum_{k=0}^{\infty} \pi_k z^k \tag{6}$$

and the εt are iid random variables. Moreover, let π(z) and εt satisfy the following

assumptions:

Assumption 1.

(a) The $\varepsilon_t$ are iid random variables such that $E(\varepsilon_t) = 0$ and $E(|\varepsilon_t|^r) < \infty$ for some
$r > 4$.
(b) $\pi(z) \neq 0$ for all $|z| \le 1$ and $\sum_{k=0}^{\infty} |k|^s |\pi_k| < \infty$ for some $s \ge 1$.

These are usual assumptions in stationary time series analysis. Notice that (a)
along with the coefficient summability condition ensures that the process is weakly
stationary. On the other hand, the assumption that $\pi(z) \neq 0$ for all $|z| \le 1$ is

necessary for the process to have an AR(∞) form. See CP (2003) for a discussion

of these assumptions.

The MA sieve bootstrap consists in approximating equation (6) by a finite
order MA(q) model:

$$u_t = \pi_1\varepsilon_{q,t-1} + \pi_2\varepsilon_{q,t-2} + \ldots + \pi_q\varepsilon_{q,t-q} + \varepsilon_{q,t} \tag{7}$$

where q is a function of the sample size. Our theoretical framework is built on the

assumption that the parameters of the MA sieve bootstrap DGP are estimated by

the analytical indirect inference method of GZW (1994) rather than by maximum

likelihood because we believe that it is more appropriate for the task. There are

several reasons for this. The first is computation speed. Consider that in practice,
one often uses information criteria such as the AIC and BIC to choose the order of
the MA sieve model. These criteria make use of the value of the loglikelihood at
the estimated parameters, which implies that, if we want q to be within a certain
range, say q1 ≤ q ≤ q2, then we must estimate q2 − q1 + 1 models. With maximum
likelihood, this requires us to maximize the loglikelihood q2 − q1 + 1 times. With GZW
(1994)’s method, we need only estimate one model, namely an AR(f), from which
we can deduce all at once the parameters of all the q2 − q1 + 1 MA(q) models. We then
only need to evaluate the loglikelihood function at these parameter values and
choose the best model accordingly. This is obviously much faster than maximum
likelihood.

Second, the simulations of GZW (1994) indicate that their estimator is more

robust to changes in q. For example, suppose that the true model is MA(∞) and


that we consider approximating it by either a MA(q) or a MA(q+1) model. If

we use the GZW (1994) method, for fixed f, going from a MA(q) to a MA(q+1)

specification does not alter the values of the first q coefficients. On the other

hand, these q estimates are likely to change very much if the two models are

estimated by maximum likelihood, because this latter method strongly depends

on the specification. Therefore, bootstrap samples generated from parameters

estimated using the GZW estimator are likely to be more robust to the choice of

q than samples generated using maximum likelihood estimates.
Another reason to prefer GZW (1994)’s estimator is that, according to their
simulations, it tends to yield fewer non-invertible roots. Finally, it allows us to

determine, through simulations, which sieve bootstrap method yields more precise

inference for a given quantity of information (that is, for a given lag length).
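The nesting property described above (for fixed f, moving from an MA(q) to an MA(q+1) leaves the first q coefficients unchanged) can be illustrated with a simple inversion of a long autoregression. The sketch below fits an AR(f) by OLS and reads the MA coefficients off the recursion ψ_j = Σ_k a_k ψ_{j−k}. It is only in the spirit of the GZW (1994) approach: whether it coincides exactly with their estimator is an assumption, and the function names are hypothetical.

```python
import numpy as np

def fit_ar_ols(u, f):
    """OLS estimates of a_1..a_f in u_t = a_1*u_{t-1} + ... + a_f*u_{t-f} + eps_t."""
    u = np.asarray(u, dtype=float)
    X = np.column_stack([u[f - k: len(u) - k] for k in range(1, f + 1)])
    coefs, *_ = np.linalg.lstsq(X, u[f:], rcond=None)
    return coefs

def ar_to_ma(ar_coefs, q):
    """First q MA coefficients implied by the AR coefficients (psi_0 = 1 is omitted)."""
    ar = np.asarray(ar_coefs, dtype=float)
    psi = np.zeros(q + 1)
    psi[0] = 1.0
    for j in range(1, q + 1):
        for k in range(1, min(j, len(ar)) + 1):
            psi[j] += ar[k - 1] * psi[j - k]
    return psi[1:]

rng = np.random.default_rng(3)
e = rng.standard_normal(5001)
u = e[1:] + 0.5 * e[:-1]                 # MA(1) with coefficient 0.5 (illustrative DGP)
a_hat = fit_ar_ols(u, f=12)              # one long autoregression
theta_q2 = ar_to_ma(a_hat, q=2)          # MA(2) approximation
theta_q3 = ar_to_ma(a_hat, q=3)          # MA(3) approximation shares the first two coefficients
```

Only one regression is run, and every candidate order q up to f is obtained from the same vector `a_hat`, which is the source of the speed advantage discussed in the text.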

Approximating an infinite linear process by a finite model is an old topic in

econometrics. Most of the time, finite f-order autoregressions are used, with f

increasing as a function of the sample size. The classical reference on the subject

is Berk (1974) who proposes to increase f so that $f^3/n \to 0$ as $n \to \infty$ (that is,
$f = o(n^{1/3})$). This assumption is quite restrictive because it does not allow q to
increase at the logarithmic rate, which is what happens if we use AIC or BIC.
Here, we make the following assumption about q and f:

Assumption 2
$q \to \infty$ and $f \to \infty$ as $n \to \infty$, with $q = o((n/\log n)^{1/2})$, $f = o((n/\log n)^{1/2})$ and $f > q$.

The reason for this choice is closely related to lemma 3.1 in Park (2002) and the

reader is referred to the discussion following it. Here, we limit ourselves to pointing

out that this rate is consistent with both AIC and BIC, which are commonly used

in practice.

The bootstrap samples are generated from the DGP:

$$u_t^* = \hat\pi_{q,1}\varepsilon_{t-1}^* + \ldots + \hat\pi_{q,q}\varepsilon_{t-q}^* + \varepsilon_t^* \tag{8}$$

where the $\hat\pi_{q,i}$, $i = 1, 2, \ldots, q$, are estimates of the true parameters $\pi_i$, $i = 1, 2, \ldots$, and the
$\varepsilon_t^*$ are drawn from the EDF of $(\hat\varepsilon_t - \bar\varepsilon_n)$, that is, from the EDF of the centered
residuals of the MA(q) sieve. We will now establish an invariance principle for the
partial sum process of $u_t^*$ by considering its Beveridge-Nelson decomposition and
showing that it converges almost surely to the same limit as the corresponding
partial sum process built with the original $u_t$. First, consider the decomposition
of $u_t$:

$$u_t = \pi(1)\varepsilon_t + (\tilde u_{t-1} - \tilde u_t)$$

where

$$\tilde u_t = \sum_{k=0}^{\infty} \tilde\pi_k \varepsilon_{t-k}$$

and

$$\tilde\pi_k = \sum_{i=k+1}^{\infty} \pi_i.$$

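Now, consider the partial sum process

$$V_n(t) = \frac{1}{\sqrt{n}} \sum_{j=1}^{[nt]} u_j$$

hence,

$$V_n(t) = \frac{1}{\sqrt{n}} \sum_{j=1}^{[nt]} \pi(1)\varepsilon_j + \frac{1}{\sqrt{n}} \sum_{j=1}^{[nt]} \sum_{k=0}^{\infty} \left[\left(\sum_{i=k+1}^{\infty} \pi_i\right)(\varepsilon_{j-k-1} - \varepsilon_{j-k})\right]$$

$$V_n(t) = (\sigma\pi(1))W_n(t) + \frac{1}{\sqrt{n}}(\tilde u_0 - \tilde u_{[nt]}).$$

Under assumption 1, Phillips and Solo (1992) show that

$$\max_{1 \le k \le n} |n^{-1/2}\tilde u_k| \xrightarrow{p} 0.$$

Therefore, applying the continuous mapping theorem, we have

$$V_n(t) \xrightarrow{d} V = (\sigma\pi(1))W.$$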
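The Beveridge-Nelson decomposition used above is an exact algebraic identity, which can be verified numerically for a finite MA(q). In the sketch below the coefficient values are purely illustrative, and time runs from 0 to n−1 rather than from 1 to n, so the remainder term reads ũ_{−1} − ũ_{n−1}.

```python
import numpy as np

rng = np.random.default_rng(2)
pi = np.array([1.0, 0.6, -0.3, 0.2])   # pi_0, ..., pi_q (illustrative values)
q, n = len(pi) - 1, 50
eps = rng.standard_normal(n + q)       # eps[q + t] stores eps_t; the first q entries are pre-sample

# pi_tilde_k = sum_{i > k} pi_i for k = 0, ..., q-1 (it is zero for k >= q)
pi_tilde = np.array([pi[k + 1:].sum() for k in range(q)])

def filt(coefs, t):
    """sum_k coefs[k] * eps_{t-k}"""
    return sum(c * eps[q + t - k] for k, c in enumerate(coefs))

u = np.array([filt(pi, t) for t in range(n)])                  # u_0, ..., u_{n-1}
u_tilde = np.array([filt(pi_tilde, t) for t in range(-1, n)])  # u~_{-1}, ..., u~_{n-1}

# Term-by-term decomposition: u_t = pi(1) * eps_t + (u~_{t-1} - u~_t)
bn = pi.sum() * eps[q:] + (u_tilde[:-1] - u_tilde[1:])

# Summing over t, everything except the two end points of u~ telescopes away
partial_sum_gap = u.sum() - pi.sum() * eps[q:].sum()
```

Because `partial_sum_gap` involves only two values of ũ, dividing it by √n makes it negligible, which is exactly the role of the Phillips and Solo (1992) result.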

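On the other hand, from equation (8), we see that $u_t^*$ can be decomposed as

$$u_t^* = \hat\pi(1)\varepsilon_t^* + (\tilde u_{t-1}^* - \tilde u_t^*)$$

where

$$\hat\pi(1) = 1 + \sum_{k=1}^{q} \hat\pi_{q,k}, \qquad \tilde u_t^* = \sum_{k=1}^{q} \tilde{\hat\pi}_k \varepsilon_{t-k+1}^*, \qquad \tilde{\hat\pi}_k = \sum_{i=k}^{q} \hat\pi_{q,i}.$$

It therefore follows that we can write:

$$V_n^*(t) = \frac{1}{\sqrt{n}} \sum_{k=1}^{[nt]} u_k^* = (\hat\sigma_n\hat\pi_n(1))W_n^* + \frac{1}{\sqrt{n}}(\tilde u_0^* - \tilde u_{[nt]}^*)$$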

In order to establish the invariance principle, we must show that $V_n^* \xrightarrow{d^*} V = (\sigma\pi(1))W$ a.s.
To do this, we need 3 lemmas. The first one shows that $\hat\sigma_n$ and $\hat\pi_n(1)$
converge almost surely to $\sigma$ and $\pi(1)$. The second demonstrates that $W_n^*(t) \xrightarrow{d^*} W$
a.s. Finally, the last one shows that

$$P^*\{\max_{1 \le t \le n} |n^{-1/2}\tilde u_t^*| > \delta\} \xrightarrow{a.s.} 0 \tag{9}$$

for all $\delta > 0$, which is equivalent to saying that

$$\max_{1 \le k \le n} |n^{-1/2}\tilde u_k^*| \xrightarrow{p^*} 0 \quad \text{a.s.}$$

and is therefore the bootstrap equivalent of the result of Phillips and Solo (1992).
These 3 lemmas are closely related to the results of Park (2002) and their counterparts in that paper are identified.

Lemma 1 (Park 2002, lemma 3.1, p. 476)
Let assumptions 1 and 2 hold. Then,

$$\max_{1 \le k \le q} |\hat\pi_{q,k} - \pi_k| = O\left[c(\log n/n)^{1/2}\right] + o(cq^{-s}) \quad \text{a.s.} \tag{10}$$

for large n and where c is a constant equal to the qth element in the progression
[1, 2, 4, 8, 16, ...]. Also,

$$\hat\sigma_n^2 = \sigma^2 + O\left[c(\log n/n)^{1/2}\right] + o(cq^{-s}) \quad \text{a.s.} \tag{11}$$

$$\hat\pi_n(1) = \pi(1) + O\left[qc(\log n/n)^{1/2}\right] + o(cq^{-s}) \quad \text{a.s.} \tag{12}$$

Proof: see the appendix.

Lemma 2 (Park 2002, lemma 3.2, p. 477). Let assumptions 1 and 2 hold.
Then, $E^*|\varepsilon_t^*|^r < \infty$ a.s. and $n^{1-r/2}E^*|\varepsilon_t^*|^r \xrightarrow{a.s.} 0$.
Proof: see the appendix.

Lemma 2 proves that $W_n^*(t) \xrightarrow{d^*} W$ a.s. because it shows that condition (5) holds
almost surely.

Lemma 3 (Park 2002, theorem 3.3, p. 478). Let assumptions 1 and 2 hold.

Then, equation ( 9) holds.

Proof: see the appendix.

With these 3 lemmas, the MA sieve bootstrap invariance principle is established.

It is formalized in the next theorem.

Theorem 1. Let assumptions 1 and 2 hold. Then by lemmas 1, 2 and 3,

$$V_n^* \xrightarrow{d^*} V = (\sigma\pi(1))W \quad \text{a.s.}$$

3 Consistency of the sieve bootstrap ADF tests

We will now use the results from the preceding section to show that the ADF bootstrap test based on the MA sieve is asymptotically valid. A bootstrap test is said

to be asymptotically valid or consistent if it can be shown that its large sample

distribution under the null converges to the test’s asymptotic distribution. Consequently, we will seek to prove that MA sieve bootstrap ADF test statistics follow
the DF distribution asymptotically under the null. Let us begin by considering a
time series $y_t$ with the following DGP:

$$y_t = \alpha y_{t-1} + u_t \tag{13}$$

where ut is the general linear process described in equation ( 6). We want to test

the unit root hypothesis against the stationarity alternative (that is, H0 : α = 1

against H1 : |α| < 1). This test is frequently conducted as a t-test in the so called

ADF regression, first proposed by Said and Dickey (1984):

$$y_t = \alpha y_{t-1} + \sum_{k=1}^{p} \alpha_k \Delta y_{t-k} + e_{p,t} \tag{14}$$

where p is chosen as a function of the sample size. A large literature has been

devoted to selecting p, see for example, Ng and Perron (1995, 2001). CP (2003)
have shown that the test based on this regression asymptotically follows the DF
distribution when H0 is true under very weak conditions, including assumptions 1
and 2. Let $y_t^*$ denote the bootstrap process generated by the following DGP:

$$y_t^* = \sum_{k=1}^{t} u_k^*$$

where the $u_k^*$ are generated as in (8). The bootstrap ADF regression equivalent to
regression (14) is

$$y_t^* = \alpha y_{t-1}^* + \sum_{k=1}^{p} \alpha_k \Delta y_{t-k}^* + e_t. \tag{15}$$

Let us suppose for a moment that $u_t^*$ has been generated by an AR(p) sieve
bootstrap DGP. Then, it is easy to see that the errors of regression (15) would be
identical to the bootstrap errors driving the bootstrap DGP. This is a convenient
fact which CP (2003) use to prove the consistency of the AR(p) sieve bootstrap
ADF test based on this regression. If however the $y_t^*$ are generated by the MA(q)

sieve described above, then the errors of regression ( 15) are not identical to the

bootstrap errors under the null because the AR(p) approximation captures only a

part of the correlation structure present in the MA(q) process. It is nevertheless

possible to show that they will be equivalent asymptotically. This is done in lemma

A1, which can be found in the appendix.

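Let $x_{p,t}^* = (\Delta y_{t-1}^*, \Delta y_{t-2}^*, \ldots, \Delta y_{t-p}^*)$ and define:

$$A_n^* = \sum_{t=1}^{n} y_{t-1}^*\varepsilon_t^* - \left(\sum_{t=1}^{n} y_{t-1}^* x_{p,t}^{*\top}\right)\left(\sum_{t=1}^{n} x_{p,t}^* x_{p,t}^{*\top}\right)^{-1}\left(\sum_{t=1}^{n} x_{p,t}^*\varepsilon_t^*\right)$$

$$B_n^* = \sum_{t=1}^{n} y_{t-1}^{*2} - \left(\sum_{t=1}^{n} y_{t-1}^* x_{p,t}^{*\top}\right)\left(\sum_{t=1}^{n} x_{p,t}^* x_{p,t}^{*\top}\right)^{-1}\left(\sum_{t=1}^{n} x_{p,t}^* y_{t-1}^*\right)$$

Then, it is easy to see that the t-statistic computed from regression (15) can be
written as (e.g. Davidson and MacKinnon, 2003):

$$T_n^* = \frac{\hat\alpha_n^* - 1}{s(\hat\alpha_n^*)} + o(1) \quad \text{a.s.}$$

for large n and where $\hat\alpha_n^* - 1 = A_n^* B_n^{*-1}$ and $s(\hat\alpha_n^*)^2 = \hat\sigma_n^2 B_n^{*-1}$.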

The equality is asymptotic and almost surely holds because the residuals of the

ADF regression are asymptotically equal to the bootstrap errors, as shown in
lemma A1. This also justifies the use of the estimated variance $\hat\sigma_n^2$. Note that in

small samples, it may be preferable to use the estimated variance of the residuals

from the ADF regression, which is indeed what we do in the simulations. We must

now address the issue of how fast p is to increase. For the ADF regression, Said

and Dickey (1984) require that $p = o(n^k)$ for some $0 < k \le 1/3$. As we argued earlier,

these rates do not allow the logarithmic rate. Hence, we state new assumptions

about the rate at which q (the sieve order) and p (the ADF regression order)

increase:

Assumption 2’

$q = c_q n^k$, $p = c_p n^k$ where $c_q$ and $c_p$ are constants and $1/rs < k < 1/2$.

Assumption 2 can be fitted into this assumption for appropriate values of k.

Also, notice that assumption 2’ imposes a lower bound on the growth rate of both

p and q. This is necessary to obtain almost sure convergence. See CP (2003) for a

weaker assumption that allows for convergence in probability. Several preliminary

and quite technical results are necessary to prove that the bootstrap test based

on the statistic $T_n^*$ is consistent. To avoid rendering the present exposition more

laborious than it needs to be, we relegate them to the appendix (lemmas A2 to

A5). For now, let it be sufficient to say that they extend to the MA sieve bootstrap

samples some results established by CP (2003) for the AR sieve bootstrap. In turn,

some of CP (2003)’s lemmas are adaptations of identical results in Berk (1974) and

An, Chen and Hannan (1982).

In order to prove that the MA sieve bootstrap ADF test is consistent, we now

prove two results on the elements of $A_n^*$ and $B_n^*$. These results are stated in terms
of bootstrap stochastic orders, denoted by $O_p^*$ and $o_p^*$, which are defined as follows.
Consider a sequence of non-constant numbers $\{c_n\}$. Then, we say that $X_n^* = o_p^*(c_n)$
a.s. or in p if $P^*\{|X_n^*/c_n| > \epsilon\} \to 0$ a.s. or in p for any $\epsilon > 0$. Similarly, we say that
$X_n^* = O_p^*(c_n)$ if for every $\epsilon > 0$, there exists a constant $M > 0$ such that for all large
n, $P^*\{|X_n^*/c_n| > M\} < \epsilon$ a.s. or in p. It follows that if $E^*|X_n^*| \to 0$ a.s., then $X_n^* = o_p^*(1)$
a.s. and that if $E^*|X_n^*| = O(1)$ a.s., then $X_n^* = O_p^*(1)$ a.s. See CP (2003), p. 7 for a
slightly more elaborate discussion.

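Lemma 4. Under assumptions 1 and 2’, we have

$$\frac{1}{n}\sum_{t=1}^{n} y_{t-1}^*\varepsilon_t^* = \hat\pi_n(1)\frac{1}{n}\sum_{t=1}^{n} w_{t-1}^*\varepsilon_t^* + o_p^*(1) \quad \text{a.s.} \tag{16}$$

$$\frac{1}{n^2}\sum_{t=1}^{n} y_{t-1}^{*2} = \hat\pi_n(1)^2\frac{1}{n^2}\sum_{t=1}^{n} w_{t-1}^{*2} + o_p^*(1) \quad \text{a.s.} \tag{17}$$

Proof: see appendix.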

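Lemma 5. Under assumptions 1 and 2’ we have

$$\left\|\left(\frac{1}{n}\sum_{t=1}^{n} x_{p,t}^* x_{p,t}^{*\top}\right)^{-1}\right\| = O_p^*(1) \quad \text{a.s.} \tag{18}$$

$$\left\|\sum_{t=1}^{n} x_{p,t}^* y_{t-1}^*\right\| = O_p^*(np^{1/2}) \quad \text{a.s.} \tag{19}$$

$$\left\|\sum_{t=1}^{n} x_{p,t}^*\varepsilon_t^*\right\| = O_p^*(n^{1/2}p^{1/2}) \quad \text{a.s.} \tag{20}$$

Proof: see appendix.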

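Lemma 5 allows us to place an upper bound on the absolute value of the second
term of $A_n^*$. This is:

$$\left|\left(\sum_{t=1}^{n} y_{t-1}^* x_{p,t}^{*\top}\right)\left(\sum_{t=1}^{n} x_{p,t}^* x_{p,t}^{*\top}\right)^{-1}\left(\sum_{t=1}^{n} x_{p,t}^*\varepsilon_t^*\right)\right| \le \left\|\left(\sum_{t=1}^{n} x_{p,t}^* x_{p,t}^{*\top}\right)^{-1}\right\| \left\|\sum_{t=1}^{n} y_{t-1}^* x_{p,t}^*\right\| \left\|\sum_{t=1}^{n} x_{p,t}^*\varepsilon_t^*\right\|$$

But by lemma 5, the right hand side is $O_p^*(n^{-1})O_p^*(np^{1/2})O_p^*(n^{1/2}p^{1/2})$ which gives
$O_p^*(n^{1/2}p)$. Now, using the results of lemma 4, we have that:

$$n^{-1}A_n^* = \hat\pi_n(1)\frac{1}{n}\sum_{t=1}^{n} w_{t-1}^*\varepsilon_t^* + o_p^*(1) \quad \text{a.s.}$$

because the second term of $A_n^*$ multiplied by $n^{-1}$ is $O_p^*(n^{-1/2}p)$. We can further
say that

$$n^{-2}B_n^* = \hat\pi_n(1)^2\frac{1}{n^2}\sum_{t=1}^{n} w_{t-1}^{*2} + o_p^*(1) \quad \text{a.s.}$$

because $n^{-2}$ times the second part of $B_n^*$ is $O_p^*(n^{-1})$. Therefore, the $T_n^*$ statistic
can be seen to be: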

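$$T_n^* = \frac{\frac{1}{n}\sum_{t=1}^{n} w_{t-1}^*\varepsilon_t^*}{\sigma\left(\frac{1}{n^2}\sum_{t=1}^{n} w_{t-1}^{*2}\right)^{1/2}} + o_p^*(1) \quad \text{a.s.}$$

Recalling that $w_t^* = \sum_{k=1}^{t} \varepsilon_k^*$, it is then easy to use the result of section 2 along with
the continuous mapping theorem to deduce that:

$$\frac{1}{n}\sum_{t=1}^{n} w_{t-1}^*\varepsilon_t^* \xrightarrow{d^*} \int_0^1 W_t\,dW_t \quad \text{a.s.}$$

$$\frac{1}{n^2}\sum_{t=1}^{n} w_{t-1}^{*2} \xrightarrow{d^*} \int_0^1 W_t^2\,dt \quad \text{a.s.}$$

under assumptions 1 and 2’. We can therefore state the following theorem.

Theorem 2. Under assumptions 1 and 2’, we have

$$T_n^* \xrightarrow{d^*} \frac{\int_0^1 W_t\,dW_t}{\left(\int_0^1 W_t^2\,dt\right)^{1/2}} \quad \text{a.s.}$$

which establishes the asymptotic validity of the MA sieve bootstrap ADF test.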

4 Simulations

We now present a set of simulations designed to illustrate the extent to which

the proposed MA-sieve bootstrap scheme improves upon the usual AR-based sieve

bootstrap. For this purpose, I(1) data series were generated from the model described by equation ( 13) with errors generated by a general linear model of the

class described in equation ( 6) with iid N(0,1) innovations. Most of the existing

literature on the rejection probability of asymptotic or sieve bootstrap unit root

tests is mainly concerned with the case where the unit root process is driven by

stationary and invertible error processes with a moving average root near the
unit circle. This typically results in large ERP and low power of the asymptotic
ADF test. Classical references on this are Schwert (1989) and Agiakoglou and
Newbold (1992). Recently, CP (2003) have shown, through Monte Carlo experiments, that the AR sieve bootstrap allows one to substantially reduce this ERP,
but not to eliminate it altogether. Their simulations however show that the AR
sieve bootstrap loses some of its accuracy as the dependence of the error process

increases.

Most of the time, simulation studies use an invertible MA(1) process to generate

ut . The reason is that it is easy to generate and easy to control and graph the

degree of correlation of ut by simply changing the MA parameter. This simple

device is obviously not appropriate in the present context because the MA(1) would

correctly model the first difference process under the null and would therefore not

be a sieve anymore. We therefore used ARMA(p,q) DGPs so that neither an AR
nor an MA sieve may represent a correct specification of the first difference process.

We have also accounted for the possibility of long memory in the first difference

by using ARFIMA(1,d,1) DGPs as well.

4.1 Implementation

The bootstrap tests are computed as follows.

1. For the AR sieve bootstrap, fit an AR(p) model to the first difference of $y_t$ by OLS
regression and obtain the residuals:

$$\hat\varepsilon_t = \Delta y_t - \sum_{k=1}^{p} \hat\alpha_{p,k}\Delta y_{t-k}$$

For the MA sieve bootstrap, we fit an MA(q) model to the first
difference process and obtain the residuals:

$$\hat\varepsilon_t = \hat\Psi^\top \Delta y_t$$

where $\hat\Psi$ is the triangular GLS transformation matrix for MA(q)
processes.

2. Draw bootstrap errors ($\varepsilon_t^*$) from the EDF of the recentered and
rescaled residuals $\left(\frac{n}{n-p}\right)^{1/2}\left(\hat\varepsilon_t - \frac{1}{n}\sum_{t=1}^{n}\hat\varepsilon_t\right)$.

3. Generate bootstrap samples of the first difference. For the AR sieve bootstrap:

$$\Delta y_t^* = \sum_{k=1}^{p} \hat\alpha_{p,k}\Delta y_{t-k}^* + \varepsilon_t^*.$$

For the MA sieve bootstrap:

$$\Delta y_t^* = \sum_{k=1}^{q} \hat\pi_{q,k}\varepsilon_{t-k}^* + \varepsilon_t^*.$$

Then, we generate bootstrap samples of $y_t^*$: $y_t^* = \sum_{j=1}^{t} \Delta y_j^*$.

4. Compute the bootstrap ADF test statistic $T_{n,i}^*$ based on the bootstrap
ADF regression:

$$y_t^* = \alpha y_{t-1}^* + \sum_{k=1}^{p} \alpha_k\Delta y_{t-k}^* + e_t$$

5. Repeat steps 2 to 4 B times to obtain a set of B ADF statistics
$T_{n,i}^*$, $i = 1, 2, \ldots, B$. The p-value of the test is defined as:

$$P^* = \frac{1}{B}\sum_{i=1}^{B} I(T_{n,i}^* < T_n)$$

where $T_n$ is the original ADF test statistic and I() is the indicator function which is equal to 1 every time the bootstrap
statistic is smaller than $T_n$ and 0 otherwise. The null hypothesis is rejected at the 5 percent level whenever $P^*$ is smaller than
0.05.
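The five steps above can be sketched in code for the MA sieve case. This is a simplified illustration under stated assumptions, not the implementation used for the reported simulations: the MA coefficients come from inverting an OLS-fitted AR(f) (a stand-in for the GZW (1994) estimator), residuals are computed by a recursion with zero pre-sample errors instead of the exact GLS transformation, the rescaling factor uses q, and all function names are hypothetical.

```python
import numpy as np

def adf_tstat(y, p):
    """t-statistic for alpha = 1 in y_t = alpha*y_{t-1} + sum_k alpha_k*dy_{t-k} + e_t."""
    y = np.asarray(y, dtype=float)
    dy = np.diff(y)                                   # dy[t-1] = y[t] - y[t-1]
    X = np.array([[y[t - 1]] + [dy[t - 1 - k] for k in range(1, p + 1)]
                  for t in range(p + 1, len(y))])
    z = y[p + 1:]
    beta, *_ = np.linalg.lstsq(X, z, rcond=None)
    resid = z - X @ beta
    s2 = resid @ resid / (len(z) - X.shape[1])
    cov = s2 * np.linalg.inv(X.T @ X)
    return (beta[0] - 1.0) / np.sqrt(cov[0, 0])

def ma_from_long_ar(u, q, f):
    """MA(q) coefficients obtained by inverting an OLS-fitted AR(f) (assumed estimator)."""
    X = np.column_stack([u[f - k: len(u) - k] for k in range(1, f + 1)])
    a, *_ = np.linalg.lstsq(X, u[f:], rcond=None)
    psi = np.zeros(q + 1)
    psi[0] = 1.0
    for j in range(1, q + 1):
        psi[j] = sum(a[k - 1] * psi[j - k] for k in range(1, min(j, f) + 1))
    return psi[1:]

def ma_residuals(u, theta):
    """eps_t = u_t - sum_k theta_k * eps_{t-k}, with pre-sample errors set to zero."""
    eps = np.zeros(len(u))
    for t in range(len(u)):
        eps[t] = u[t] - sum(theta[k - 1] * eps[t - k]
                            for k in range(1, min(t, len(theta)) + 1))
    return eps

def ma_sieve_bootstrap_pvalue(y, q, p, f, B=199, burn=100, rng=None):
    rng = np.random.default_rng() if rng is None else rng
    y = np.asarray(y, dtype=float)
    u = np.diff(y)                                     # first difference under the null
    n = len(u)
    theta = ma_from_long_ar(u, q, f)                   # step 1: fit the MA(q) sieve
    eps_hat = ma_residuals(u, theta)
    eps_c = np.sqrt(n / (n - q)) * (eps_hat - eps_hat.mean())   # step 2: recentre, rescale
    t_obs = adf_tstat(y, p)
    kernel = np.concatenate(([1.0], theta))
    count = 0
    for _ in range(B):                                 # step 5: repeat steps 2 to 4
        e = rng.choice(eps_c, size=n + burn + q, replace=True)
        e[:q] = 0.0                                    # first q errors set to 0
        du = np.convolve(e, kernel)[: len(e)][burn + q:]   # step 3: MA(q) filter, drop burn-in
        y_star = np.cumsum(du)
        count += adf_tstat(y_star, p) < t_obs          # step 4: bootstrap ADF statistic
    return count / B

rng = np.random.default_rng(0)
y = np.cumsum(rng.standard_normal(120))                # random walk, so the null holds
p_star = ma_sieve_bootstrap_pvalue(y, q=2, p=2, f=6, B=99, rng=np.random.default_rng(1))
```

The AR sieve variant differs only in steps 1 and 3, where the residuals and the bootstrap first differences come from the fitted autoregression.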

In all the simulations reported here, the AR sieve and the ADF regressions are

computed using OLS. Further, all MA parameters were estimated by the analytical

indirect method of GZW (1994). The bootstrap samples are generated recursively,

which requires some starting values for $\Delta y_t^*$. We have set the first p values of $\Delta y_t^*$
equal to the first p values of $\Delta y_t$ and generated samples of n+100+p observations.

Then, we have thrown away the first 100+p values and used the last n to compute

the bootstrap tests. For the MA sieve bootstrap parameters, we have set the first

q errors to 0, generated samples of size n+100+q and thrown away the first 100+q

realizations.

The GLS transformation matrix Ψ is defined as being the matrix that satisfies

the equation $\Psi^\top\Psi = \Sigma^{-1}$ where $\Sigma^{-1}$ is the inverse of the process’s covariance matrix.
There are several ways to estimate Ψ. A popular one is to compute the inverse
of the covariance matrix evaluated at the parameter estimates and to obtain $\hat\Psi$
using a numerical algorithm (the Cholesky decomposition for example). This exact
method, however, requires the inversion of the n×n covariance matrix, which is
computationally costly when n is large. A corresponding approximation method
consists in decomposing the covariance matrix of the inverse process (for example,

of a MA(∞) in the case of an AR(1) model). This has the advantage of not

requiring the inversion of a large matrix. For all the simulations reported here,

the transformation matrix was estimated using the exact method proposed by

Galbraith and Zinde-Walsh (1992), who provide exact algebraic expressions for

Ψ rather than for Σ. This is computationally advantageous because it does not

require the inversion, the decomposition nor the storage of a n×n matrix since all

calculations can be performed through row by row looping.
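For comparison, the "popular" numerical route mentioned above (not the algebraic method of Galbraith and Zinde-Walsh) can be sketched for an MA(1) process. The helper names `ma1_covariance` and `gls_transform` are hypothetical; the sketch builds the full n×n matrix and is therefore exactly the costly approach that the algebraic expressions avoid.

```python
import numpy as np

def ma1_covariance(theta, sigma2, n):
    """Covariance matrix of n consecutive observations of an MA(1) process."""
    cov = np.zeros((n, n))
    idx = np.arange(n)
    cov[idx, idx] = sigma2 * (1.0 + theta ** 2)  # variance on the diagonal
    cov[idx[:-1], idx[1:]] = sigma2 * theta      # first-order autocovariance
    cov[idx[1:], idx[:-1]] = sigma2 * theta
    return cov

def gls_transform(cov):
    """Return Psi such that Psi' Psi = inv(cov), using a Cholesky factor."""
    chol = np.linalg.cholesky(cov)  # cov = chol @ chol.T, chol lower triangular
    return np.linalg.inv(chol)      # Psi = chol^{-1} is also lower triangular
```

Since cov = L L', taking Ψ = L⁻¹ gives Ψ'Ψ = (L L')⁻¹ = Σ⁻¹, which is the defining equation above.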

4.2 Error in rejection probability

We now turn to the analysis of the finite sample performances of the MA sieve

bootstrap ADF unit root test in terms of ERP relative to other commonly used

bootstrap methods. In order to do this, we use size discrepancy curves which

plot the ERP of the test with respect to nominal size. This is obviously much

more informative than simply considering the ERP at a given nominal size. See

Davidson and MacKinnon (1998) for a discussion. All the curves presented below

were generated from 2500 samples and 999 bootstrap replications per sample. We

have used 4 different DGP for the first difference process, all of which have N(0,1)

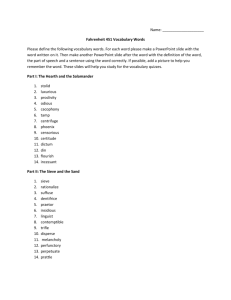

errors. Their characteristics are summarized in the table below.

Table 1. Models.

Model       AR      MA      d
ARMA 1      -0.85   -0.85   0
ARMA 2      -0.85   -0.4    0
ARMA 3      -0.4    -0.85   0
ARFIMA 1    -0.85   -0.85   0.45
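The size discrepancy curves used in this section can be computed from simulated p-values as sketched below. The function name `size_discrepancy` is an assumption; the input is taken to be one bootstrap p-value per simulated sample generated under the null.

```python
import numpy as np

def size_discrepancy(pvalues, levels):
    """ERP at each nominal level: rejection frequency minus nominal size."""
    pvalues = np.asarray(pvalues, dtype=float)
    levels = np.asarray(levels, dtype=float)
    # Rejection frequency: share of simulated p-values below each level.
    rejection = np.array([(pvalues < a).mean() for a in levels])
    return rejection - levels
```

Plotting the returned values against `levels` gives the curve; a test with no ERP would lie on the horizontal axis.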

Figures 1 and 2 compare the size discrepancy of certain AR and MA sieve bootstrap tests for sample sizes of 25 and 100 respectively, generated by model 1. Overall, the MA sieve bootstrap tests seem to be somewhat more accurate than the AR sieve bootstrap tests for similar parametric orders. For example, the MA(3) test has virtually no ERP when N=25, while the AR(3) strongly over-rejects. Similarly, the MA(7) only slightly over-rejects when N=100 while the AR(7) over-rejects quite severely. A second feature is that the AR sieve bootstrap test is often able to perform as well as the MA sieve bootstrap, but only at the cost of a much higher parametric order. A good example of this is the fact that the AR(15) has a size discrepancy curve similar to that of the MA(7). This last point may be of extreme importance in small samples, as is evident from figure 1.

In figure 3, we compare the MA sieve bootstrap test to the overlapping block bootstrap in a sample of 25 observations generated from model 1. It appears that the MA sieve bootstrap procedure has 2 advantages over the block bootstrap. First of all, it is clearly more robust to the choice of model order or block size. For example, choosing a block length of 3 instead of 4 when testing at 5 percent results in the RP being around 0.202 instead of 0.045. In the same situation, choosing an MA order of 2 instead of 3 results in the RP being around 0.100 instead of 0.052. Since the choice of order or block size is made either arbitrarily or using data based methods whose reliability is not certain, this point is of capital importance for applied work. The second advantage of the MA sieve bootstrap is that it seems to be more robust to the nominal level of the test than the block bootstrap. For example, the figure shows that even though the block bootstrap with block size 4 has almost no ERP at 5 percent, it over-rejects by almost 3.5 percent at a nominal level of 10 percent, while the MA sieve bootstrap still has only negligible ERP.

Figure 4 shows the effects of the relative strength of the AR and MA parts on the AR and MA sieve bootstrap ADF tests. We denote by strong AR the curves obtained using model 2 and by strong MA the ones obtained from model 3. The simulations reported there indicate that the MA sieve may be more robust than the AR sieve, in terms of absolute ERP, to the relative strength of the different parts of the DGP. Indeed, even though it exhibits almost no ERP when the AR part is stronger (a little over 1 percent at 5 percent nominal level), the AR sieve has a considerable over-rejection problem when the MA part is stronger (6 percent ERP at 5 percent nominal level). On the other hand, the MA sieve has almost the same absolute ERP in both cases (around 2 percent at 5 percent nominal size).

Figure 5 compares AR and MA sieve bootstrap ADF tests' discrepancy curves for yet another DGP, namely the stationary ARFIMA model described in table 1. Just like in figure 1, we see that the MA sieve bootstrap can achieve better accuracy than the AR sieve bootstrap. In figure 6, we compare size discrepancy plots for the MA sieve bootstrap ADF test when the sieve model is estimated by the analytical indirect inference method of GZW or by MLE. It appears that the estimation method has very little effect on the small sample performance of the test.

4.3 Power

We now briefly turn to power considerations. There is no good reason why the

MA sieve bootstrap test would have better power properties than the AR sieve

bootstrap tests. It is however possible that the accuracy gains under the null

observed in the previous subsection come at the cost of a loss of power. Fortunately,

it does not appear to be so. Figure 7 compares size-power curves of the AR and

MA sieve bootstrap ADF tests for nominal size from 0 to 0.5 and N=50. They are

based on model 1 and two alternatives are considered: one quite close to the null

where the parameter α in equation ( 1) is 0.9 and the other where it is equal to

0.7. According to the simulations, both sieve bootstrap procedures have similar

power characteristics. Similar results were obtained for N=100.

5 Test of PPP

In order to get a sense of how the MA sieve bootstrap performs on real data, we have carried out a very simple test of purchasing power parity (PPP) on a small set of European countries, namely, France, Germany, Italy and the Netherlands. The purpose of this section being to illustrate the finite sample performance of the proposed bootstrap technique, we have chosen a sample containing only 50 monthly observations, from the first month of 1977 to the second month of 1981. Our method is as simple as possible: under the PPP hypothesis, there should be a long term relationship between the price level ratio and the exchange rate of any two countries. Thus, Engle-Granger cointegration tests should provide an easy way to detect PPP. This implies two steps, both giving us the opportunity to try out our MA sieve bootstrap: first, we must run unit root tests on each time series and second, we must test for cointegration by means of unit root tests performed on the residuals of a regression of one series on the other. The results of the former are shown in table 2 while the results of the latter are shown in table 3. The bootstrap tests are based on 9999 replications and are conducted using models with a constant and no deterministic trend (the addition of such a trend does not alter the results significantly).
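The two-step procedure described above can be sketched with ordinary least squares only. This is an illustration under assumptions rather than the code behind tables 2 and 3: the names `adf_tstat` and `engle_granger_stat` are hypothetical, the ADF regression is written in its equivalent first-difference form with a constant, and the lag order is supplied by the user instead of being selected by AIC.

```python
import numpy as np

def adf_tstat(y, lags):
    """t-statistic on rho in: dy_t = c + rho*y_{t-1} + sum_k a_k*dy_{t-k} + e_t."""
    y = np.asarray(y, dtype=float)
    dy = np.diff(y)
    rows = dy.size - lags
    cols = [np.ones(rows), y[lags:-1]]                      # constant, y_{t-1}
    cols += [dy[lags - k:-k] for k in range(1, lags + 1)]   # lagged differences
    X = np.column_stack(cols)
    target = dy[lags:]
    beta, *_ = np.linalg.lstsq(X, target, rcond=None)
    resid = target - X @ beta
    s2 = resid @ resid / (rows - X.shape[1])
    cov = s2 * np.linalg.inv(X.T @ X)
    return beta[1] / np.sqrt(cov[1, 1])

def engle_granger_stat(x, z, lags):
    """ADF statistic on the residuals of an OLS regression of x on (1, z)."""
    x = np.asarray(x, dtype=float)
    z = np.asarray(z, dtype=float)
    X = np.column_stack([np.ones(x.size), z])
    beta, *_ = np.linalg.lstsq(X, x, rcond=None)
    return adf_tstat(x - X @ beta, lags)
```

In a bootstrap version of the test, either statistic would be compared with statistics recomputed on sieve bootstrap samples rather than with asymptotic critical values.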

Table 2. Unit root tests.

Series     Lags   ADF       AR ord   p-val     MA ord   p-val    p-val diff
E (F-G)    5      -2.2405   5        0.1094    1        0.1188   -0.0094
E (F-I)    2      -1.1563   2        0.2640    1        0.2637    0.0003
E (F-N)    5      -2.4146   5        0.0986*   1        0.1459   -0.0473
E (I-N)    6      -1.5684   6        0.1026    1        0.1092   -0.0066
P (F-G)    4       0.0993   4        0.5912    1        0.6427   -0.0515
P (F-I)    6       2.2813   6        0.9898    1        0.9745    0.0153
P (F-N)    4       2.3903   4        0.9892    1        0.9743    0.0149
P (I-N)    6       3.1417   6        0.9969    4        0.9945    0.0024

Table 2 reads as follows: the first column gives the name of the time series being tested, the second and fourth columns show how many lags were used in the ADF regression and the AR sieve model respectively, while the sixth gives the order of the MA sieve used. The third column reports the value of the ADF test statistic, the fifth and seventh show the p-values of the AR and MA sieve bootstrap tests respectively, and the last one gives the difference between these two p-values. One, two and three stars denote rejection of the unit root hypothesis at 10%, 5% and 1%. The autoregressive orders of the ADF test regressions and of the AR sieve and the order of the MA sieve were all chosen using the AIC selection criterion.

There is little to say about this table, except that the two bootstrap procedures give similar diagnostics in all cases but one, namely the exchange rate between France and the Netherlands, where the AR sieve rejects very narrowly at the 10% nominal size. This appears to be a rather insignificant result and we will not discuss it any further. A more interesting feature of the numbers shown is that both methods produce very similar p-values, but that the MA sieve bootstrap test uses fewer parameters. This confirms what we observed in the simulations presented in the preceding section.

Table 3. Cointegration tests.

Series   Lags   ADF          AR ord   p-val       MA ord   p-val    p-val diff
F-G      3      -4.1137***   3        0.0019***   1        0.3089   -0.3070
F-I      0      -1.3324      0        0.6008      1        0.7268   -0.1260
I-N      1      -1.6471      1        0.4530      1        0.5107   -0.0577

Table 3 reads the same way as table 2. The test of PPP between France and

Germany is particularly interesting. We see that both the asymptotic and AR sieve

bootstrap tests strongly reject the unit root hypothesis, and therefore find evidence

in support of PPP, while the MA sieve test does not even come close to rejecting

the null. This may be an example of a situation where the MA sieve bootstrap

test is capable of correcting the AR sieve’s tendency to over-reject. Notice that

imposing an MA order of either 2 or 3 yielded p-values of 0.1730 and 0.1750, so

we may consider this result as quite robust.

6 Conclusions

Using results on the invariance principle of MA sieve bootstrap partial sum processes

derived in section 2, we have shown that the MA sieve bootstrap ADF test is consistent under fairly weak regularity conditions. Most of our analysis is based on

the analytical indirect inference method of Galbraith and Zinde-Walsh (1994) but

certainly also applies to maximum likelihood estimation, a supposition supported

by our simulations.

Our simulations have also shown that the MA sieve bootstrap may have some advantages in the context of unit root testing over the AR sieve and block bootstrap. In particular, we have found that, for the DGPs and sample sizes considered, the MA sieve bootstrap ADF tests are likely to require much more parsimonious models than the AR sieve bootstrap tests to achieve the same, or even better, accuracy in terms of ERP. Also, the MA sieve tests appear to be more robust to changes in the DGP in terms of absolute ERP. Finally, the MA sieve bootstrap seems to be more robust to order choice than the block bootstrap and also more robust with respect to the choice of nominal level. We find no evidence that these gains are made at the cost of a power loss.

Further ongoing research includes the generalization of these results to ARMA sieve bootstrap methods, which naturally follows from combining our results with those of Park (2002) and Chang and Park (2003). We are also considering the use of bias correction methods to obtain sieve models that are closer to the DGP. This should allow us to further reduce ERP problems.

Appendix

PROOF OF LEMMA 1.

First, consider the following expression from Park (2002, equation 19):

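\[ u_t = \alpha_{f,1}u_{t-1} + \alpha_{f,2}u_{t-2} + \dots + \alpha_{f,f}u_{t-f} + e_{f,t} \tag{21} \]

where the coefficients $\alpha_{f,k}$ are pseudo-true values defined so that the equality holds and the $e_{f,t}$ are uncorrelated with the $u_{t-k}$, k = 1, 2, ..., f. Using once again the results of GZW (1994), we define

\[ \begin{aligned}
\pi_{q,1} &= \alpha_{f,1} \\
\pi_{q,2} &= \alpha_{f,2} + \alpha_{f,1}\pi_{q,1} \\
&\;\;\vdots \\
\pi_{q,q} &= \alpha_{f,q} + \alpha_{f,q-1}\pi_{q,1} + \dots + \alpha_{f,1}\pi_{q,q-1}
\end{aligned} \]

to be the moving average parameters deduced from the parameters of the AR process (21). It is shown in Hannan and Kavalieris (1986) that

\[ \max_{1\le k\le f}\left|\hat\alpha_{f,k} - \alpha_{f,k}\right| = O\left((\log n/n)^{1/2}\right) \quad \text{a.s.} \]

where $\hat\alpha_{f,k}$ are OLS or Yule-Walker estimates (an equivalent result is shown to hold in probability in Baxter (1962)). Further, they show that

\[ \sum_{k=1}^{f}\left|\alpha_{f,k} - \alpha_k\right| \le c\sum_{k=f+1}^{\infty}\left|\alpha_k\right| = o(f^{-s}) \]

where c is a constant. This yields part 1 of lemma 3.1 of Park (2002):

\[ \max_{1\le k\le f}\left|\hat\alpha_{f,k} - \alpha_k\right| = O\left((\log n/n)^{1/2}\right) + o(f^{-s}) \quad \text{a.s.} \]

Now, it follows from the equations of GZW (1994) that:

\[ \left|\hat\pi_{q,k} - \pi_k\right| = \left|\sum_{j=0}^{k-1}\left(\hat\alpha_{f,k-j}\hat\pi_{q,j} - \alpha_{k-j}\pi_j\right)\right| \tag{22} \]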

Of course, it is possible to express all of the $\hat\pi_{q,k}$ and $\pi_k$ as functions of the $\hat\alpha_{f,k}$ and $\alpha_k$ respectively. For example, we have $\pi_1 = \alpha_1$, $\pi_2 = \alpha_1^2 + \alpha_2$, $\pi_3 = \alpha_1^3 + \alpha_1\alpha_2 + \alpha_1\alpha_2 + \alpha_3$ and so forth. Note that, as is made clear from this progression, the expression of $\pi_k$ has twice as many terms as the expression of $\pi_{k-1}$. It is therefore possible to rewrite (22) for any k as a function of $\hat\alpha_{f,j}$ and $\alpha_j$, j = 1, ..., k. There is no satisfying general expression for this so let us consider, as an illustration, the case of k=3:

\[ \left|\hat\pi_{q,3} - \pi_3\right| = \left|\hat\alpha_{f,1}^3 + \hat\alpha_{f,1}\hat\alpha_{f,2} + \hat\alpha_{f,1}\hat\alpha_{f,2} + \hat\alpha_{f,3} - \alpha_1^3 - \alpha_1\alpha_2 - \alpha_1\alpha_2 - \alpha_3\right| \]

Using the triangle inequality:

\[ \left|\hat\pi_{q,3} - \pi_3\right| \le \left|\hat\alpha_{f,1}^3 - \alpha_1^3\right| + \left|\hat\alpha_{f,1}\hat\alpha_{f,2} - \alpha_1\alpha_2\right| + \left|\hat\alpha_{f,1}\hat\alpha_{f,2} - \alpha_1\alpha_2\right| + \left|\hat\alpha_{f,3} - \alpha_3\right| \]

Thus, using the results of lemma 3.1 of Park (2002), $|\hat\pi_{q,3} - \pi_3|$ can be at most $O\left[4(\log n/n)^{1/2}\right] + o(4f^{-s})$ because the number of summands is proportional to a power of n. Similarly, $|\hat\pi_{q,4} - \pi_4|$ can be at most $O\left[8(\log n/n)^{1/2}\right] + o(8f^{-s})$ and so forth. Generalizing this and considering that we have assumed that the order of the MA model, q, increases at the same rate as the order of the AR approximation (f), so that we can replace f by q in the preceding expressions, yields the stated result. The other two results follow in a similar manner.
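The recursion linking AR and MA parameters at the start of this proof can be checked numerically. The sketch below is a plain transcription of that recursion, with AR coefficients beyond the supplied order treated as zero; the function name `ar_to_ma` is an assumption and this is not the estimation code of GZW (1994).

```python
def ar_to_ma(alpha, q):
    """pi_k = alpha_k + sum_{j=1}^{k-1} alpha_{k-j} * pi_j for k = 1, ..., q."""
    alpha = list(alpha)

    def a(k):
        # AR coefficient alpha_k, taken to be zero beyond the supplied order.
        return alpha[k - 1] if k <= len(alpha) else 0.0

    pi = []
    for k in range(1, q + 1):
        pi.append(a(k) + sum(a(k - j) * pi[j - 1] for j in range(1, k)))
    return pi
```

For instance, with AR coefficients (0.5, 0.2, 0.1) the third MA coefficient equals 0.5³ + 2(0.5)(0.2) + 0.1 = 0.425, in agreement with the expression for π3 given above.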

PROOF OF LEMMA 2.

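First, note that

\[ n^{1-r/2}E^*\left|\varepsilon_t^*\right|^r = n^{1-r/2}\left(\frac{1}{n}\sum_{t=1}^{n}\left|\hat\varepsilon_{q,t} - \frac{1}{n}\sum_{t=1}^{n}\hat\varepsilon_{q,t}\right|^r\right) \]

because the bootstrap errors are drawn from the series of recentered residuals from the MA(q) model. Therefore, what must be shown is that

\[ n^{1-r/2}\left(\frac{1}{n}\sum_{t=1}^{n}\left|\hat\varepsilon_{q,t} - \frac{1}{n}\sum_{t=1}^{n}\hat\varepsilon_{q,t}\right|^r\right) \xrightarrow{a.s.} 0 \]

as n→∞. If we add and subtract $\varepsilon_t$ and $\varepsilon_{q,t}$ (which was defined in equation 7) inside the absolute value operator, we obtain:

\[ n^{1-r/2}\left(\frac{1}{n}\sum_{t=1}^{n}\left|\hat\varepsilon_{q,t} - \frac{1}{n}\sum_{t=1}^{n}\hat\varepsilon_{q,t}\right|^r\right) \le c\left(A_n + B_n + C_n + D_n\right) \]

where c is a constant and

\[ A_n = \frac{1}{n}\sum_{t=1}^{n}\left|\varepsilon_t\right|^r, \qquad B_n = \frac{1}{n}\sum_{t=1}^{n}\left|\varepsilon_{q,t} - \varepsilon_t\right|^r, \]

\[ C_n = \frac{1}{n}\sum_{t=1}^{n}\left|\hat\varepsilon_{q,t} - \varepsilon_{q,t}\right|^r, \qquad D_n = \left|\frac{1}{n}\sum_{t=1}^{n}\hat\varepsilon_{q,t}\right|^r. \]

To get the desired result, one must show that $n^{1-r/2}$ times $A_n$, $B_n$, $C_n$ and $D_n$ each go to 0 almost surely.

1. $n^{1-r/2}A_n \xrightarrow{a.s.} 0$. This holds by the strong law of large numbers, which states that $A_n \xrightarrow{a.s.} E|\varepsilon_t|^r$, which has been assumed to be finite. Since r>4, 1−r/2<−1, from which the result follows.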

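2. $n^{1-r/2}B_n \xrightarrow{a.s.} 0$. This is shown through proving that

\[ E\left|\varepsilon_{q,t} - \varepsilon_t\right|^r = o(q^{-rs}) \tag{23} \]

holds uniformly in t, where s is as specified in assumption 1 part b. We begin by recalling that from equation (7) we have

\[ \varepsilon_{q,t} = u_t - \sum_{k=1}^{q}\pi_k\varepsilon_{q,t-k}. \]

Writing this using an infinite AR form:

\[ \varepsilon_{q,t} = u_t - \sum_{k=1}^{\infty}\ddot\alpha_k u_{t-k} \]

where the parameters $\ddot\alpha_k$ are functions of the first q true parameters $\pi_k$ in the usual manner (see proof of lemma 1). We also have:

\[ \varepsilon_t = u_t - \sum_{k=1}^{\infty}\pi_k\varepsilon_{t-k}, \]

which we also write in AR(∞) form:

\[ \varepsilon_t = u_t - \sum_{k=1}^{\infty}\alpha_k u_{t-k}. \]

Evidently, $\alpha_k = \ddot\alpha_k$ for all k = 1, ..., q. Subtracting the second of these two expressions from the first we obtain:

\[ \varepsilon_{q,t} - \varepsilon_t = \sum_{k=q+1}^{\infty}\left(\alpha_k - \ddot\alpha_k\right)u_{t-k} \tag{24} \]

Using Minkowski's inequality, the triangle inequality and the stationarity of $u_t$,

\[ E\left|\varepsilon_{q,t} - \varepsilon_t\right|^r \le E\left|u_t\right|^r\sum_{k=q+1}^{\infty}\left|\left(\alpha_k - \ddot\alpha_k\right)\right|^r \tag{25} \]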

The second element of the right hand side can be rewritten as

q

r

k−q

r

∞ X

k

∞ X

X

X

X

π` αk−` −

π` αk−` − πk =

π` αk−` − πk

−

k=q+1 `=1

`=1

k=q+1

`=1

that is, we have a sequence of the sort: $-(\pi_{q+1} + \pi_{q+2} + \pi_1\alpha_{q+1} + \pi_{q+3} + \pi_1\alpha_{q+2} + \pi_2\alpha_{q+1} + \dots)$. Then, it follows from assumptions 1 b and 2 that

\[ E\left|\varepsilon_{q,t} - \varepsilon_t\right|^r = o(q^{-rs}) \tag{26} \]

because $E|u_t|^r < \infty$ (see Park 2002, equation 25, p. 483). The equality (26) together with the inequality (25) imply that equation (23) holds. In turn, equation (23) implies that $n^{1-r/2}B_n \xrightarrow{a.s.} 0$ is true, provided that q increases at a proper rate, such as the one specified in assumption 2.

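3. $n^{1-r/2}C_n \xrightarrow{a.s.} 0$.

We start from the AR(∞) expression for the residuals to be resampled:

\[ \hat\varepsilon_{q,t} = u_t - \sum_{k=1}^{\infty}\hat\alpha_{q,k}u_{t-k} \tag{27} \]

where $\hat\alpha_{q,k}$ denotes the parameters corresponding to the estimated MA(q) parameters $\hat\pi_{q,k}$. Then, adding and subtracting $\sum_{k=1}^{\infty}\alpha_{q,k}u_{t-k}$, where the parameters $\pi_{q,k}$ were defined in the proof of lemma 1, and using once more the AR(∞) form of (7), equation (27) becomes:

\[ \hat\varepsilon_{q,t} = \varepsilon_{q,t} - \sum_{k=1}^{\infty}\left(\hat\alpha_{q,k} - \alpha_{q,k}\right)u_{t-k} - \sum_{k=1}^{\infty}\left(\alpha_{q,k} - \ddot\alpha_k\right)u_{t-k} \tag{28} \]

It then follows that

\[ \left|\hat\varepsilon_{q,t} - \varepsilon_{q,t}\right|^r \le c\left(\left|\sum_{k=1}^{\infty}\left(\hat\alpha_{q,k} - \alpha_{q,k}\right)u_{t-k}\right|^r + \left|\sum_{k=1}^{\infty}\left(\alpha_{q,k} - \ddot\alpha_k\right)u_{t-k}\right|^r\right) \]

for $c = 2^{r-1}$. Let us define

\[ C_{1n} = \frac{1}{n}\sum_{t=1}^{n}\left|\sum_{k=1}^{\infty}\left(\hat\alpha_{q,k} - \alpha_{q,k}\right)u_{t-k}\right|^r, \qquad C_{2n} = \frac{1}{n}\sum_{t=1}^{n}\left|\sum_{k=1}^{\infty}\left(\alpha_{q,k} - \ddot\alpha_k\right)u_{t-k}\right|^r \]

then showing that $n^{1-r/2}C_{1n} \xrightarrow{a.s.} 0$ and $n^{1-r/2}C_{2n} \xrightarrow{a.s.} 0$ will give us our result. First, let us note that $C_{1n}$ is majorized by:

\[ C_{1n} \le \left(\max_{1\le k\le\infty}\left|\hat\alpha_{q,k} - \alpha_{q,k}\right|^r\right)\frac{1}{n}\sum_{t=1}^{n}\sum_{k=1}^{\infty}\left|u_{t-k}\right|^r \tag{29} \]

By lemma 1 and equation (20) of Park (2002), we have

\[ \max_{1\le k\le\infty}\left|\hat\alpha_{q,k} - \alpha_{q,k}\right| = O\left((c\log n/n)^{1/2}\right) \quad \text{a.s.,} \]

that is, the estimated parameters are almost surely consistent estimates of the pseudo-true parameters. Therefore, using yet again a triangle inequality on (29) and truncating it, without loss of generality because it applies to the whole sequence from 1 to ∞, for some order p which increases according to the rate specified in assumption 2 (which is necessary if we want to apply the result to a finite sample size),

\[ C_{1n} \le \left(\max_{1\le k\le p}\left|\hat\alpha_{q,k} - \alpha_{q,k}\right|^r\right)\frac{p}{n}\left(\sum_{t=0}^{n-1}\left|u_t\right|^r + \sum_{t=-1}^{1-p}\left|u_t\right|^r\right) \tag{30} \]

which can easily be seen to go to 0 because of the results of lemma 1 and equation 25 in Park (2002). See also Park (2002), p. 484.

On the other hand, if we apply Minkowski's inequality to the absolute value part of $C_{2n}$, we obtain

\[ E\left|\sum_{k=1}^{\infty}\left(\alpha_{q,k} - \ddot\alpha_k\right)u_{t-k}\right|^r \le E\left|u_t\right|^r\left(\sum_{k=1}^{\infty}\left|\alpha_{q,k} - \ddot\alpha_k\right|\right)^r \tag{31} \]

of which the right hand side goes to 0 by the boundedness of $E|u_t|^r$, the definition of the $\ddot\alpha_k$, lemma 1 and equation (21) of Park (2002), where it is shown that $\sum_{k=1}^{p}|\alpha_{p,k} - \alpha_k| = o(p^{-s})$ for some p→∞, which implies a similar result between the $\pi_{q,k}$ and the $\pi_k$, which in turn implies a similar result between the $\alpha_{q,k}$ and the $\ddot\alpha_k$. This proves the result.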

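4. $n^{1-r/2}D_n \xrightarrow{a.s.} 0$.

In order to prove this result, we show that

\[ \frac{1}{n}\sum_{t=1}^{n}\hat\varepsilon_{q,t} = \frac{1}{n}\sum_{t=1}^{n}\varepsilon_{q,t} + o(1)\ \text{a.s.} = \frac{1}{n}\sum_{t=1}^{n}\varepsilon_t + o(1)\ \text{a.s.} \]

Recalling equations (24) and (28), this will hold if

\[ \frac{1}{n}\sum_{t=1}^{n}\sum_{k=q+1}^{\infty}\left(\alpha_k - \ddot\alpha_k\right)u_{t-k} \xrightarrow{a.s.} 0 \tag{32} \]

\[ \frac{1}{n}\sum_{t=1}^{n}\sum_{k=1}^{\infty}\left(\alpha_{q,k} - \ddot\alpha_k\right)u_{t-k} \xrightarrow{a.s.} 0 \tag{33} \]

\[ \frac{1}{n}\sum_{t=1}^{n}\sum_{k=1}^{\infty}\left(\hat\alpha_{q,k} - \alpha_{q,k}\right)u_{t-k} \xrightarrow{a.s.} 0 \tag{34} \]

where the first equation serves to prove the second asymptotic equality and the other two serve to prove the first asymptotic equality. Proving those 3 results requires some work. Just like Park (2002), p. 485, let us define

\[ S_n(i,j) = \sum_{t=1}^{n}\varepsilon_{t-i-j} \qquad \text{and} \qquad T_n(i) = \sum_{t=1}^{n}u_{t-i} \]

so that

\[ T_n(i) = \sum_{j=0}^{\infty}\pi_j S_n(i,j) \]

and remark that by Doob's inequality,

\[ \left|\max_{1\le m\le n}S_m(i,j)\right|^r \le z\left|S_n(i,j)\right|^r \]

where $z = 1/(1 - \frac{1}{r})$. Taking expectations and applying Burkholder's inequality,

\[ E\left(\max_{1\le m\le n}\left|S_m(i,j)\right|^r\right) \le c_1 z\,E\left(\sum_{t=1}^{n}\varepsilon_t^2\right)^{r/2} \]

where $c_1$ is a constant depending only on r. By the law of large numbers, the right hand side is equal to $c_1 z(n\sigma^2)^{r/2} = c_1 z\,n^{r/2}\sigma^r$. Thus, we have

\[ E\left(\max_{1\le m\le n}\left|S_m(i,j)\right|^r\right) \le c\,n^{r/2} \]

uniformly over i and j, where $c = c_1 z\sigma^r$. Define

\[ L_n = \sum_{k=q+1}^{\infty}\left(\alpha_k - \ddot\alpha_k\right)\sum_{t=1}^{n}u_{t-k}. \]

It must therefore follow that

\[ \left[E\left(\max_{1\le m\le n}\left|L_m\right|^r\right)\right]^{1/r} \le \sum_{k=q+1}^{\infty}\left|\left(\alpha_k - \ddot\alpha_k\right)\right|\left[E\left(\max_{1\le m\le n}\left|T_m(k)\right|^r\right)\right]^{1/r} \le \sum_{k=q+1}^{\infty}\left|\left(\alpha_k - \ddot\alpha_k\right)\right|\left(c\,n^{r/2}\right)^{1/r} \]

but

\[ \sum_{k=q+1}^{\infty}\left|\left(\alpha_k - \ddot\alpha_k\right)\right| = o(q^{-s}) \]

by assumption 1 b and the construction of the $\ddot\alpha_k$ (recall part 2 of the present proof). Thus,

\[ E\left[\max_{1\le m\le n}\left|L_m\right|^r\right] \le c\,q^{-rs}n^{r/2}. \]

Then it follows from the result in Moricz (1976, theorem 6) that for any δ > 0,

\[ L_n = o\left(q^{-s}n^{1/2}(\log n)^{1/r}(\log\log n)^{(1+\delta)/r}\right) = o(n) \quad \text{a.s.} \]

This last equation proves (32).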

Now, if we let

\[ M_n = \sum_{k=1}^{\infty}\left(\alpha_{q,k} - \ddot\alpha_k\right)\sum_{t=1}^{n}u_{t-k}, \]

then we find by the same device that

\[ \left[E\left(\max_{1\le m\le n}\left|M_m\right|^r\right)\right]^{1/r} \le \sum_{k=1}^{\infty}\left|\left(\alpha_{q,k} - \ddot\alpha_k\right)\right|\left[E\left(\max_{1\le m\le n}\left|T_m(k)\right|^r\right)\right]^{1/r} \]

the right hand side of which is smaller than or equal to $c\,q^{-s}n^{1/2}$; see the discussion under equation (31). Consequently, using Moricz's result once again, we have $M_n = o(n)$ a.s. and equation (33) is demonstrated. Finally, define

\[ N_n = \sum_{k=1}^{\infty}\left(\hat\alpha_{q,k} - \alpha_{q,k}\right)\sum_{t=1}^{n}u_{t-k}; \]

further, let

\[ Q_n = \sum_{k=1}^{\infty}\sum_{t=1}^{n}u_{t-k}. \]

Then, $N_n$ is at most $Q_n\max_{1\le k\le\infty}|\hat\alpha_{q,k} - \alpha_{q,k}|$. Further, using again Doob's and Burkholder's inequalities along with assumption 1 b and the result underneath (29), we have

\[ E\left[\max_{1\le m\le n}\left|Q_m\right|^r\right] \le c\,q^r n^{r/2}. \]

Therefore, we can again deduce that for any δ > 0,

\[ Q_n = o\left(q\,n^{1/2}(\log n)^{1/r}(\log\log n)^{(1+\delta)/r}\right) \quad \text{a.s.} \]

and

\[ N_n = O\left((\log n/n)^{1/2}\right)Q_n = o\left(q(\log n)^{(r+2)/2r}(\log\log n)^{(1+\delta)/r}\right) = o(n). \]

Hence, equation (34) is proved.

The proof of the lemma is complete. Consequently, the conditions required for theorem 2.2 of Park (2002) are satisfied and we may conclude that $W_n^* \xrightarrow{d^*} W$ a.s.

•

PROOF OF LEMMA 3.

We begin by noting that

\[ \begin{aligned}
P^*\left[\max_{1\le t\le n}\left|n^{-1/2}\tilde u_t^*\right| > \delta\right] &\le \sum_{t=1}^{n}P^*\left[\left|n^{-1/2}\tilde u_t^*\right| > \delta\right] \\
&= nP^*\left[\left|n^{-1/2}\tilde u_t^*\right| > \delta\right] \\
&\le (1/\delta^r)\,n^{1-r/2}E^*\left|\tilde u_t^*\right|^r
\end{aligned} \]

where the first inequality is trivial, the equality follows from the invertibility of $\tilde u_t^*$ conditional on the realization of $\{\hat\varepsilon_{q,t}\}$ (which implies that the AR(∞) form of the MA(q) sieve is stationary) and the last inequality is an application of the Tchebyshev inequality. Recall that

\[ \tilde u_t^* = \sum_{k=1}^{q}\left(\sum_{i=k}^{q}\hat\pi_{q,i}\right)\varepsilon_{t-k+1}^*. \]

Then, by Minkowski's inequality and assumption 1, we have:

\[ E^*\left|\tilde u_t^*\right|^r \le \left(\sum_{k=1}^{q}k\left|\hat\pi_{q,k}\right|\right)^r E^*\left|\varepsilon_t^*\right|^r \]

But by lemma 1, the estimates $\hat\pi_{q,k}$ are consistent for $\pi_k$. Hence, by assumption 1, the first part must be bounded as n→∞. Also, we have shown in lemma 2 that $n^{1-r/2}E^*|\varepsilon_t^*|^r \xrightarrow{a.s.} 0$. The result thus follows.

•

PROOF OF THEOREM 1.

The result follows directly from combining lemmas 1 to 3. Note that, had we proved lemmas 1 to 3 in probability, the result of theorem 1 would also be in probability.

•

Lemma A1. Let assumptions 1 and 2 hold. Then, the errors from regression (15) are asymptotically equal to the bootstrap error terms, that is, $e_t = \varepsilon_t^* + o(1)$ a.s.

PROOF OF LEMMA A1.

Let us first rewrite the ADF regression (15) under the null as follows:

\[ \Delta y_t^* = \sum_{k=1}^{p}\alpha_{p,k}\left(\sum_{j=k+1}^{k+q}\hat\pi_{q,j}\varepsilon_{t-j}^* + \varepsilon_{t-k}^*\right) + e_t \]

where we have substituted the bootstrap DGP for $\Delta y_{t-k}^*$. This can be rewritten as

\[ \Delta y_t^* = \sum_{i=1}^{p}\sum_{j=0}^{q}\alpha_{p,i}\hat\pi_{q,j}\varepsilon_{t-i-j}^* + e_t \tag{35} \]

where $\hat\pi_{q,0}$ is constrained to be equal to 1 as usual. Then, from equations (35) and (8),

\[ e_t = \sum_{j=1}^{q}\hat\pi_{q,j}\varepsilon_{t-j}^* + \varepsilon_t^* - \sum_{i=1}^{p}\sum_{j=0}^{q}\alpha_{p,i}\hat\pi_{q,j}\varepsilon_{t-i-j}^*. \]

Let us rewrite this result as follows:

\[ e_t = A_t + B_t + \varepsilon_t^* \]

where

\[ A_t = (\hat\pi_{q,1} - \alpha_{p,1})\varepsilon_{t-1}^* + (\hat\pi_{q,2} - \alpha_{p,1}\hat\pi_{q,1} - \alpha_{p,2})\varepsilon_{t-2}^* + \dots + (\hat\pi_{q,q} - \alpha_{p,1}\hat\pi_{q,q-1} - \dots - \alpha_{p,q})\varepsilon_{t-q}^* \]

and

\[ B_t = \sum_{j=q+1}^{p+q}\varepsilon_{t-j}^*\left(\sum_{i=0}^{q}\hat\pi_{q,i}\alpha_{p,j-i}\right) \]

where $\hat\pi_{q,0} = 1$ and $\alpha_{p,i} = 0$ whenever i > p. First, we note that the coefficients appearing in $A_t$ are the formulas linking the parameters of an AR(p) regression to those of a MA(q) process (see Galbraith and Zinde-Walsh, 1994). Hence, no matter how the $\hat\pi_{q,j}$ are estimated, these coefficients are all equal to zero asymptotically under assumption 2. Since we assume that q → ∞, we may conclude that $A_t \to 0$. Further, by lemma 1 and lemma 3.1 of Park (2002), this convergence is almost sure.

On the other hand, taking $B_t$ and applying Minkowski's inequality to it:

\[ E^*\left|B_t\right|^r \le E^*\left|\varepsilon_t^*\right|^r\left(\sum_{j=q+1}^{p+q}\sum_{i=0}^{q}\left|\hat\pi_{q,i}\alpha_{p,j-i}\right|\right)^r. \]

But for each j, $\sum_{i=0}^{q}\pi_{q,i}\alpha_{p,j-i}$ is equal to $-\alpha_{p,j}$, the jth parameter of the approximating autoregression (see Galbraith and Zinde-Walsh, 1994). Hence, we can write:

\[ E^*\left|B_t\right|^r \le E^*\left|\varepsilon_t^*\right|^r\left(\sum_{j=q+1}^{p+q}\left|\alpha_{j-i}\right|\right)^r \quad \text{a.s.} \]

by lemma 1. But, as p and q go to infinity with p>q, $\sum_{j=q+1}^{p+q}(|\alpha_{j-i}|)^r = o(q^{-rs})$ a.s. under assumption 1.

•

Lemma A2 (CP lemma A1) Under assumptions 1 and 2', we have $\sigma_*^2 \xrightarrow{a.s.} \sigma^2$ and $\Gamma_0^* \xrightarrow{a.s.} \Gamma_0$ as n→∞, where $E^*|\varepsilon_t^*|^2 = \sigma_*^2$ and $E^*|u_t^*|^2 = \Gamma_0^*$.

PROOF OF LEMMA A2.

Consider the bootstrap DGP (8) once more:

\[ u_t^* = \sum_{k=1}^{q}\hat\pi_{q,k}\varepsilon_{t-k}^* + \varepsilon_t^* \]

Under assumption 1 and lemma 1, this process admits an AR(∞) representation. Let this be:

\[ u_t^* + \sum_{k=1}^{\infty}\hat\psi_{q,k}u_{t-k}^* = \varepsilon_t^* \tag{36} \]

where we write the $\hat\psi_{q,k}$ parameters with a hat and a subscript q to emphasize that they come from the estimation of a finite order MA(q) model. We can rewrite equation (36) as follows:

\[ u_t^* = -\sum_{k=1}^{\infty}\hat\psi_{q,k}u_{t-k}^* + \varepsilon_t^*. \tag{37} \]

Multiplying by $u_t^*$ and taking expectations under the bootstrap DGP, we obtain

\[ \Gamma_0^* = -\sum_{k=1}^{\infty}\hat\psi_{q,k}\Gamma_k^* + \sigma_*^2. \]

Dividing both sides by $\Gamma_0^*$ and rearranging,

\[ \Gamma_0^* = \frac{\sigma_*^2}{1 + \sum_{k=1}^{\infty}\hat\psi_{q,k}\rho_k^*} \tag{38} \]

where $\rho_k^*$ are the autocorrelations of the bootstrap process. Note that these are functions of the parameters $\hat\psi_{q,k}$ and that it can easily be shown that they satisfy the homogeneous system of linear difference equations described as:

\[ \rho_h^* + \sum_{k=1}^{\infty}\hat\psi_{q,k}\rho_{h-k}^* = 0 \]

for all h > 0. Thus, the autocorrelations $\rho_h^*$ are implicitly defined as functions of the $\hat\psi_{q,k}$. On the other hand, let us now consider the model:

\[ u_t = \sum_{k=1}^{q}\hat\pi_{q,k}\hat\varepsilon_{q,t-k} + \hat\varepsilon_{q,t} \]

which is simply the result of the computation of the parameter estimates $\hat\pi_{q,k}$. This, of course, also has an AR(∞) representation:

\[ u_t = -\sum_{k=1}^{\infty}\hat\psi_{q,k}u_{t-k} + \hat\varepsilon_{q,t} \]

where the parameters $\hat\psi_{q,k}$ are exactly the same as in equation (37). Applying the same steps to this new expression, we obtain:

\[ \Gamma_{0,n} = \frac{\hat\sigma_n^2}{1 + \sum_{k=1}^{\infty}\hat\psi_{q,k}\rho_{k,n}} \tag{39} \]

where $\Gamma_{0,n}$ is the sample autocovariance of $u_t$ when we have n observations, $\hat\sigma_n^2 = (1/n)\sum_{t=1}^{n}\hat\varepsilon_{q,t}^2$ and $\rho_{k,n}$ is the kth autocorrelation of $u_t$, which are functions of the parameters $\hat\pi_{q,k}$ that generated $u_t$. Since the autocorrelation parameters are the same in equations (38) and (39), we can write:

\[ \Gamma_0^* = (\sigma_*^2/\hat\sigma_n^2)\,\Gamma_{0,n}. \]

The strong law of large numbers implies that $\Gamma_{0,n} \xrightarrow{a.s.} \Gamma_0$. Therefore, we only need to show that $\sigma_*^2/\hat\sigma_n^2 \xrightarrow{a.s.} 1$ to obtain the second result (that is, to show that $\Gamma_0^* \xrightarrow{a.s.} \Gamma_0$). By the consistency results in lemma 1, we have that $\hat\sigma_n^2 \xrightarrow{a.s.} \sigma^2$. Also, recall that the $\varepsilon_t^*$ are drawn from the EDF of $(\hat\varepsilon_{q,t} - (1/n)\sum_{t=1}^{n}\hat\varepsilon_{q,t})$. Therefore, $\sigma_*^2$ is defined as:

\[ \sigma_*^2 = \frac{1}{n}\sum_{t=1}^{n}\left(\hat\varepsilon_{q,t} - \frac{1}{n}\sum_{t=1}^{n}\hat\varepsilon_{q,t}\right)^2. \]

It follows that:

\[ \sigma_*^2 = \hat\sigma_n^2 - \left((1/n)\sum_{t=1}^{n}\hat\varepsilon_{q,t}\right)^2. \tag{40} \]

But we have shown in lemma 2 that $((1/n)\sum_{t=1}^{n}\hat\varepsilon_{q,t})^2 = o(1)$ a.s. (to see this, take the result for $n^{1-r/2}D_n$ with r=2). Therefore, we have that $\sigma_*^2 - \hat\sigma_n^2 \xrightarrow{a.s.} 0$, and thus, $\sigma_*^2/\hat\sigma_n^2 \xrightarrow{a.s.} 1$. It therefore follows that $\Gamma_0^* \xrightarrow{a.s.} \Gamma_0$. On the other hand, $\sigma_*^2 - \hat\sigma_n^2 \xrightarrow{a.s.} 0$ together with $\hat\sigma_n^2 \xrightarrow{a.s.} \sigma^2$ implies $\sigma_*^2 \xrightarrow{a.s.} \sigma^2$.

•

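Lemma A3 (CP lemma A2, Berk, theorem 1, p. 493) Let f and $f^*$ be the spectral densities of $u_t$ and $u_t^*$ respectively. Then, under assumptions 1 and 2',

\[ \sup_\lambda\left|f^*(\lambda) - f(\lambda)\right| = o(1) \quad \text{a.s.} \]

for large n. Also, letting $\Gamma_k$ and $\Gamma_k^*$ be the autocovariance functions of $u_t$ and $u_t^*$ respectively, we have

\[ \sum_{k=-\infty}^{\infty}\Gamma_k^* = \sum_{k=-\infty}^{\infty}\Gamma_k + o(1) \quad \text{a.s.} \]

for large n. Notice that the results of CP (2003) and ours are almost sure whereas Berk's is only in probability.

PROOF OF LEMMA A3.

The spectral density of the bootstrap data is

\[ f^*(\lambda) = \frac{\sigma_*^2}{2\pi}\left|1 + \sum_{k=1}^{q}\hat\pi_{q,k}e^{ik\lambda}\right|^2. \]

Further, let us define

\[ \hat f(\lambda) = \frac{\hat\sigma_n^2}{2\pi}\left|1 + \sum_{k=1}^{q}\hat\pi_{q,k}e^{ik\lambda}\right|^2. \]

Recall that

\[ \sigma_*^2 = \frac{1}{n}\sum_{t=1}^{n}\left[\hat\varepsilon_{q,t} - \frac{1}{n}\sum_{t=1}^{n}\hat\varepsilon_{q,t}\right]^2. \]

From lemma 2 (proof of the 4th part) and lemma A2 (equation 40), we have

\[ \sigma_*^2 = \frac{1}{n}\sum_{t=1}^{n}\hat\varepsilon_{q,t}^2 + o_p(1). \]

Thus,

\[ f^*(\lambda) = \hat f(\lambda) + o_p(1). \]

Therefore, the desired result follows if we show that

\[ \sup_\lambda\left|\hat f(\lambda) - f(\lambda)\right| = o(1) \quad \text{a.s.} \]

Now, denote by $f_n(\lambda)$ the spectral density function evaluated at the pseudo-true parameters introduced in the proof of lemma 1:

\[ f_n(\lambda) = \frac{\sigma_n^2}{2\pi}\left|1 + \sum_{k=1}^{q}\pi_{q,k}e^{ik\lambda}\right|^2 \]

where $\sigma_n^2$ is the minimum value of

\[ \int_{-\pi}^{\pi}f(\lambda)\left|1 + \sum_{k=1}^{q}\pi_{q,k}e^{ik\lambda}\right|^{-2}d\lambda \]

and $\sigma_n^2 \to \sigma^2$ as shown in Baxter (1962). Obviously,

\[ \sup_\lambda\left|\hat f(\lambda) - f_n(\lambda)\right| = o(1) \quad \text{a.s.} \]

by lemma 1 and equation (20) of Park (2002). Also,

\[ \sup_\lambda\left|f_n(\lambda) - f(\lambda)\right| = o(1) \quad \text{a.s.} \]

by the same argument we used at the end of part 3 of the proof of lemma 2. The first part of the present lemma therefore follows. If we consider that

\[ \sum_{k=-\infty}^{\infty}\Gamma_k = 2\pi f(0) \qquad \text{and} \qquad \sum_{k=-\infty}^{\infty}\Gamma_k^* = 2\pi f^*(0) \]

the second part follows quite directly.

•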
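The identity used for the second part of lemma A3, namely that the autocovariances sum to 2π times the spectral density at frequency zero, can be verified numerically for a finite order MA process. The following is a small sketch with hypothetical helper names (`ma_spectral_density`, `ma_autocovariances`); it illustrates the identity only and plays no role in the proof.

```python
import numpy as np

def ma_spectral_density(pi, sigma2, lam):
    """f(lam) = sigma2 / (2 pi) * |1 + sum_k pi_k exp(i k lam)|^2 for an MA(q)."""
    pi = np.asarray(pi, dtype=float)
    k = np.arange(1, pi.size + 1)
    transfer = 1.0 + np.sum(pi * np.exp(1j * k * lam))
    return sigma2 / (2.0 * np.pi) * np.abs(transfer) ** 2

def ma_autocovariances(pi, sigma2):
    """Autocovariances Gamma_0, ..., Gamma_q of an MA(q) process."""
    w = np.concatenate(([1.0], np.asarray(pi, dtype=float)))
    q = w.size - 1
    return np.array([sigma2 * np.sum(w[:w.size - h] * w[h:]) for h in range(q + 1)])
```

Since the autocovariances of an MA(q) vanish beyond lag q and are symmetric, their sum over all lags is Γ0 plus twice the sum of Γ1 to Γq, which should match `2 * np.pi * ma_spectral_density(pi, sigma2, 0.0)`.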

Lemma A4 Under assumptions 1 and 2, we have

\[ E^*\left|\varepsilon_t^*\right|^4 = O(1) \quad \text{a.s.} \]

PROOF OF LEMMA A4.

From the proof of lemma 2, we have $E^*|\varepsilon_t^*|^4 \le c(A_n + B_n + C_n + D_n)$ where c is a constant. The relevant results are:

1. $A_n = O(1)$ a.s.

2. $E(B_n) = o(q^{-rs})$ (equation 23)

3. $C_n \le 2^{r-1}(C_{1n} + C_{2n})$, where $C_{1n} = o(1)$ a.s. (equation 30) and $E(C_{2n}) = o(q^{-rs})$ (equation 31)

4. $D_n = o(1)$ a.s.

Under assumption 2', we have that $B_n = o(1)$ a.s. and $C_{2n} = o(1)$ a.s. because $o(q^{-rs}) = o((cn^k)^{-rs}) = o(n^{-krs}) = o(n^{-1-\delta})$ for δ > 0. The result therefore follows.

•

Lemma A5 (CP lemma A4, Berk proof of lemma 3) Define

\[ M_n^*(i,j) = E^*\left[\sum_{t=1}^{n}\left(u_{t-i}^*u_{t-j}^* - \Gamma_{i-j}^*\right)\right]^2. \]

Then, under assumptions 1 and 2, we have $M_n^*(i,j) = O(n)$ a.s.

PROOF OF LEMMA A5.

For general linear models, Hannan (1960, p. 39) and Berk (1974, p. 491) have shown that

\[ M_n^*(i,j) \le n\left[2\sum_{k=-\infty}^{\infty}\Gamma_k^* + \left|K_4^*\right|\left(\sum_{k=0}^{\infty}\hat\pi_{q,k}^2\right)^2\right] \]

for all i and j, where $K_4^*$ is the fourth cumulant of $\varepsilon_t^*$. Since our MA sieve bootstrap certainly fits into the class of linear models (with $\hat\pi_{q,k} = 0$ for all k>q and $\hat\pi_{q,0} = 1$), this result applies here. But $K_4^*$ can be written as a polynomial of degree 4 in the first 4 moments of $\varepsilon_t^*$. Therefore, $|K_4^*|$ must be O(1) a.s. by lemma A4. The result now follows from lemma A3 and the fact that $\sum_{k=-\infty}^{\infty}\Gamma_k = O(n)$.

•

Before going on, it is proper to note that the proofs of lemmas 4 and 5 are almost identical to the proofs of lemmas 3.2 and 3.3 of CP (2003). We present them here for the sake of completeness.

PROOF OF LEMMA 4.

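First, we prove equation (16). Using the Beveridge-Nelson decomposition of $u_t^*$ and the fact that $y_t^* = \sum_{k=1}^{t}u_k^*$, we can write:

\[ \frac{1}{n}\sum_{t=1}^{n}y_{t-1}^*\varepsilon_t^* = \hat\pi_n(1)\frac{1}{n}\sum_{t=1}^{n}w_{t-1}^*\varepsilon_t^* + \tilde u_0^*\frac{1}{n}\sum_{t=1}^{n}\varepsilon_t^* - \frac{1}{n}\sum_{t=1}^{n}\tilde u_{t-1}^*\varepsilon_t^*. \]

Therefore, to prove the result, it suffices to show that

\[ E^*\left[\tilde u_0^*\frac{1}{n}\sum_{t=1}^{n}\varepsilon_t^* - \frac{1}{n}\sum_{t=1}^{n}\tilde u_{t-1}^*\varepsilon_t^*\right] = o(1) \quad \text{a.s.} \tag{41} \]

Since the $\varepsilon_t^*$ are iid by construction, we have:

\[ E^*\left(\sum_{t=1}^{n}\varepsilon_t^*\right)^2 = n\sigma_*^2 = O(n) \quad \text{a.s.} \tag{42} \]

and

\[ E^*\left(\sum_{t=1}^{n}\tilde u_{t-1}^*\varepsilon_t^*\right)^2 = n\sigma_*^2\tilde\Gamma_0^* = O(n) \quad \text{a.s.} \tag{43} \]

where $\tilde\Gamma_0^* = E^*(\tilde u_t^*)^2$. But the terms in equation (41) are $\frac{1}{n}$ times the square root of (42) and (43). Hence, equation (41) follows. Now, to prove equation (17), consider again from the Beveridge-Nelson decomposition of $u_t^*$:

\[ \begin{aligned}
y_t^{*2} &= \hat\pi_n(1)^2w_t^{*2} + (\tilde u_0^* - \tilde u_t^*)^2 + 2\hat\pi_n(1)w_t^*(\tilde u_0^* - \tilde u_t^*) \\
&= \hat\pi_n(1)^2w_t^{*2} + (\tilde u_0^*)^2 + (\tilde u_t^*)^2 - 2\tilde u_t^*\tilde u_0^* + 2\hat\pi_n(1)w_t^*(\tilde u_0^* - \tilde u_t^*)
\end{aligned} \]

thus,

\[ \frac{1}{n^2}\sum_{t=1}^{n}y_t^{*2} = \hat\pi_n(1)^2\frac{1}{n^2}\sum_{t=1}^{n}w_t^{*2} + \frac{1}{n}(\tilde u_0^*)^2 + \frac{1}{n^2}\sum_{t=1}^{n}(\tilde u_t^*)^2 - \frac{2}{n^2}\tilde u_0^*\sum_{t=1}^{n}\tilde u_t^* + 2\hat\pi_n(1)\tilde u_0^*\frac{1}{n^2}\sum_{t=1}^{n}w_t^* - 2\hat\pi_n(1)\frac{1}{n^2}\sum_{t=1}^{n}w_t^*\tilde u_t^*. \]

By lemma 3, every term but the first of this expression is o(1) a.s. The result follows.

•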

PROOF OF LEMMA 5.

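Using the definition of bootstrap stochastic orders, we prove the results by showing that:

$$E^*\left\| \left( \frac{1}{n}\sum_{t=1}^{n} x_{p,t}^* x_{p,t}^{*\top} \right)^{-1} \right\| = O_p(1) \tag{44}$$

$$E^*\left\| \sum_{t=1}^{n} x_{p,t}^* y_{t-1}^* \right\| = O_p(n p^{1/2}) \quad \text{a.s.} \tag{45}$$

$$E^*\left\| \sum_{t=1}^{n} x_{p,t}^* \varepsilon_t^* \right\| = O_p(n^{1/2} p^{1/2}) \quad \text{a.s.} \tag{46}$$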

The proofs below rely on the fact that, under the null, the ADF regression is a

finite order autoregressive approximation to the bootstrap DGP, which admits an

AR(∞) form.

Proof of (44): First, let us define the long run covariance of the vector $x_{p,t}^*$ as $\Omega_{pp}^* = (\Gamma_{i-j}^*)_{i,j=1}^{p}$. Then, recalling the result of lemma A5, we have that

$$E^*\left\| \frac{1}{n}\sum_{t=1}^{n} x_{p,t}^* x_{p,t}^{*\top} - \Omega_{pp}^* \right\|^2 = O_p(n^{-1} p^2) \quad \text{a.s.} \tag{47}$$

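This is because the norm in equation (47) is squared with a factor of $1/n$ and the dimension of $x_{p,t}^*$ is $p$. Also,

$$\left\| \Omega_{pp}^{*-1} \right\| \le \left[ 2\pi \left( \inf_{\lambda} f^*(\lambda) \right) \right]^{-1} = O(1) \quad \text{a.s.} \tag{48}$$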

because, under lemma A4, we can apply the result from Berk (1974, equation 2.14). In that paper, Berk considers the problem of approximating a general linear process, of which our bootstrap DGP can be seen as a special case, with a finite order AR($p$) model, which is what our ADF regression does under the null hypothesis. To see how his results apply, consider assumption 1 (b) on the parameters of the original data's DGP. Using this and the results of lemma 1, we may say that $\sum_{k=0}^{\infty} |\hat{\pi}_{q,k}| < \infty$. Therefore, as argued by Berk (1974, p. 493), the polynomial $1 + \sum_{k=1}^{q} \hat{\pi}_{q,k} e^{ik\lambda}$ is continuous and nonzero over $\lambda$, so that $f^*(\lambda)$ is also continuous and there are constant values $F_1$ and $F_2$ such that $0 < F_1 < f^*(\lambda) < F_2$. This further implies that (Grenander and Szegö 1958, p. 64) $2\pi F_1 \le \lambda_1 < \dots < \lambda_p \le 2\pi F_2$, where $\lambda_i$, $i = 1, \dots, p$, are the eigenvalues of the theoretical covariance matrix of the bootstrap DGP. To get the result, it suffices to consider the definition of the matrix norm. For a given matrix $C$, we have that $\|C\| = \sup \|Cx\|$ for $\|x\| \le 1$, where $x$ is a vector and $\|x\|^2 = x^{\top} x$. Thus, $\|C\|^2 \le \sum_{i,j} c_{i,j}^2$, where $c_{i,j}$ is the element in position $(i,j)$ in $C$. Therefore, the matrix norm $\|C\|$ is dominated by the largest modulus of the eigenvalues of $C$. This in turn implies that the norm of the inverse of $C$ is dominated by the inverse of the smallest modulus of the eigenvalues of $C$. Hence, equation (48) follows.
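The eigenvalue bound behind equation (48) can be illustrated in a case where everything is available in closed form. The sketch below rests on assumptions that are not taken from the paper: an MA(1) process with unit innovation variance, for which $\Gamma_0 = 1 + \theta^2$, $\Gamma_1 = \theta$ and $2\pi f(\lambda) = |1 + \theta e^{i\lambda}|^2$. The $p \times p$ covariance matrix is then symmetric tridiagonal Toeplitz, whose eigenvalues are known to be $\Gamma_0 + 2\Gamma_1 \cos(k\pi/(p+1))$ for $k = 1, \dots, p$. The function name `ma1_toeplitz_bounds` is hypothetical.

```python
import cmath
import math


def ma1_toeplitz_bounds(theta=0.6, p=8, grid=2000):
    """Compare covariance eigenvalues with 2*pi*f bounds for an MA(1) (illustrative only)."""
    gamma0 = 1.0 + theta**2  # variance of u_t with unit innovation variance
    gamma1 = theta           # first autocovariance; higher ones are zero

    # Closed-form eigenvalues of the p x p symmetric tridiagonal Toeplitz matrix.
    eigs = [gamma0 + 2.0 * gamma1 * math.cos(k * math.pi / (p + 1))
            for k in range(1, p + 1)]

    # Evaluate 2*pi*f(lambda) = |1 + theta * exp(i*lambda)|^2 on a grid over [-pi, pi].
    lambdas = [-math.pi + 2.0 * math.pi * i / grid for i in range(grid + 1)]
    two_pi_f = [abs(1.0 + theta * cmath.exp(1j * lam)) ** 2 for lam in lambdas]
    lower, upper = min(two_pi_f), max(two_pi_f)

    # For a symmetric positive definite matrix, the operator norm of the
    # inverse equals the reciprocal of the smallest eigenvalue.
    inv_norm = 1.0 / min(eigs)
    return eigs, lower, upper, inv_norm


if __name__ == "__main__":
    eigs, lower, upper, inv_norm = ma1_toeplitz_bounds()
    print(min(eigs), max(eigs), lower, upper, inv_norm, 1.0 / lower)
```

In this special case every eigenvalue lies between the minimum and the maximum of $2\pi f(\lambda)$, so the norm of the inverse covariance matrix is bounded by $[2\pi \inf_\lambda f(\lambda)]^{-1}$ regardless of $p$, which mirrors the argument in the text.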

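Then, we write the following inequality:

$$E^*\left\| \left( \frac{1}{n}\sum_{t=1}^{n} x_{p,t}^* x_{p,t}^{*\top} \right)^{-1} \right\| - \left\| \Omega_{pp}^{*-1} \right\| \le E^*\left\| \left( \frac{1}{n}\sum_{t=1}^{n} x_{p,t}^* x_{p,t}^{*\top} \right)^{-1} - \Omega_{pp}^{*-1} \right\|$$

where we used the fact that $E^* \Omega_{pp}^* = \Omega_{pp}^*$. By equation (47), the right-hand side goes to 0 as $n$ increases. Equation (44) then follows from equation (48).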

Proof of (45): Our proof is almost exactly the same as the proof of lemma 3.2 in Chang and Park (2002), except that we consider bootstrap quantities. As they do, we let $y_t^* = 0$ for all $t \le 0$ and, for $1 \le j \le p$, we write

$$\sum_{t=1}^{n} y_{t-1}^* u_{t-j}^* = \sum_{t=1}^{n} y_{t-1}^* u_t^* + R_n^* \tag{49}$$

where

$$R_n^* = \sum_{t=1}^{n} y_{t-1}^* u_{t-j}^* - \sum_{t=1}^{n} y_{t-1}^* u_t^*$$

where $u_t^*$ is the bootstrap first difference process. First of all, we note that $\sum_{t=1}^{n} x_{p,t}^* y_{t-1}^*$ is a $1 \times p$ vector whose $j$th element is $\sum_{t=1}^{n} y_{t-1}^* u_{t-j}^*$. Therefore, as we will see, by the definition of the Euclidean vector norm, equation (45) will be proved if we show that $R_n^* = O_p^*(n)$ uniformly in $j$ for $j$ from 1 to $p$. We begin by noting that

$$\sum_{t=1}^{n} y_{t-1}^* u_t^* = \sum_{t=1}^{n} y_{t-j-1}^* u_{t-j}^* - \sum_{t=n-j+1}^{n} y_{t-1}^* u_t^*$$